Comparing Virtual and Real-Life Rapid Prototyping Methods for User Testing Smart City Interfaces: A Case Study

Abstract

Featured Application

1. Introduction

1.1. Low-Fidelity Prototypes for In Situ Testing

1.2. Virtual Prototyping and Testing in the Lab

1.3. High-Fidelity Prototypes for Validation Testing

1.4. Evaluating VR Prototyping

2. Method

2.1. Use Case

2.2. Testing Protocol

2.3. Physical Prototyping and Testing in the Field (TIF)

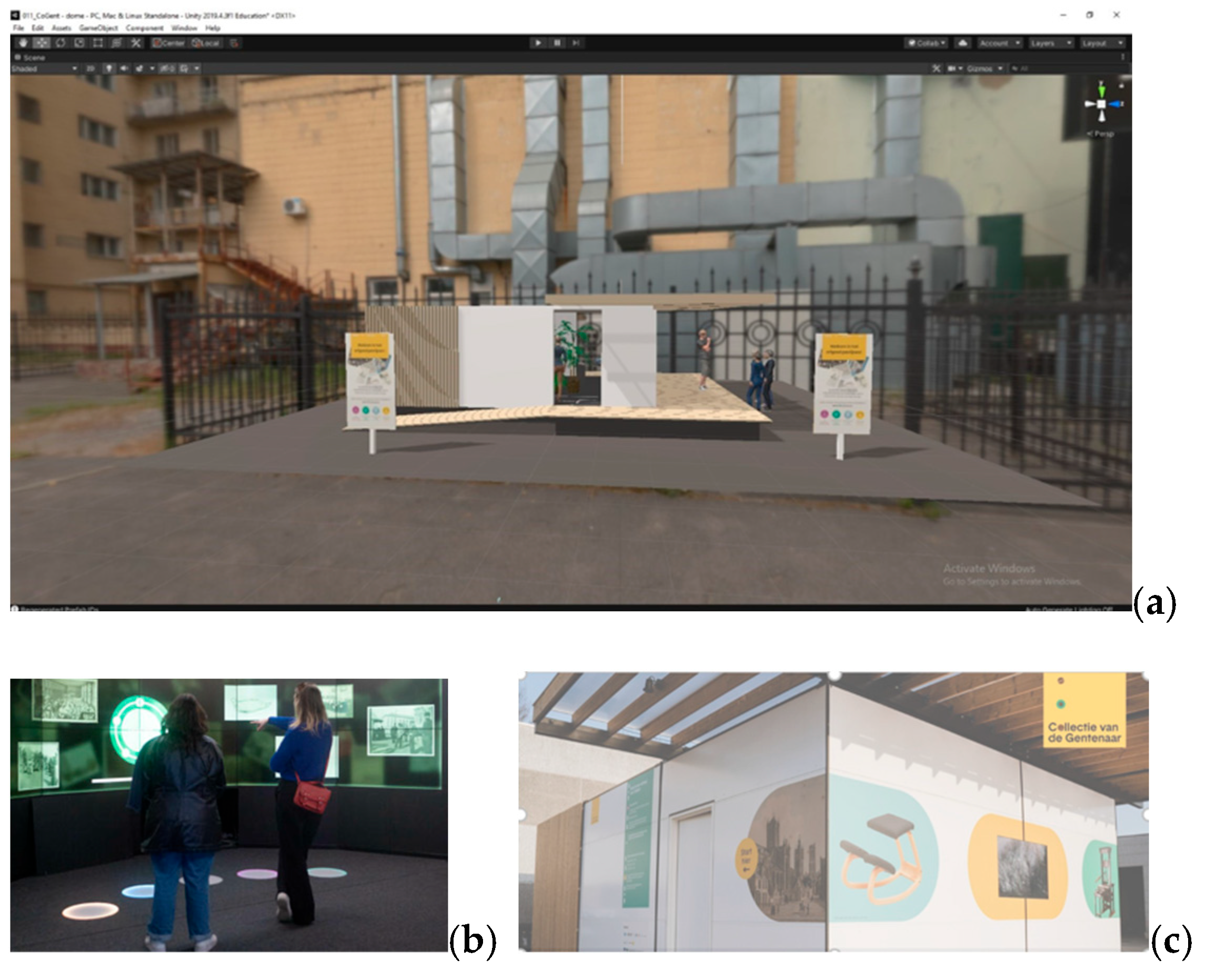

2.4. Virtual Prototyping and Testing in the Lab (VRT)

2.5. Final Product High-Fidelity Testing (FP)

2.6. Evaluation Methods

1. Observations
2. Quantitative analysis of questionnaire data
3. Expert interviews

3. Results

3.1. Physical Prototyping and Testing in the Field (TIF)

“I could imagine that I was present in this Pop-up Museum, but the experience still felt like separate tests. It is a little too far removed from the visualization (render).” (Participant in the field lab test)

“I really liked the fact that field testing puts you in the right environment, you also get closer contact to your stakeholders.” (Participant in the field lab test)

3.2. Virtual Prototyping and Testing in the Lab (VRT)

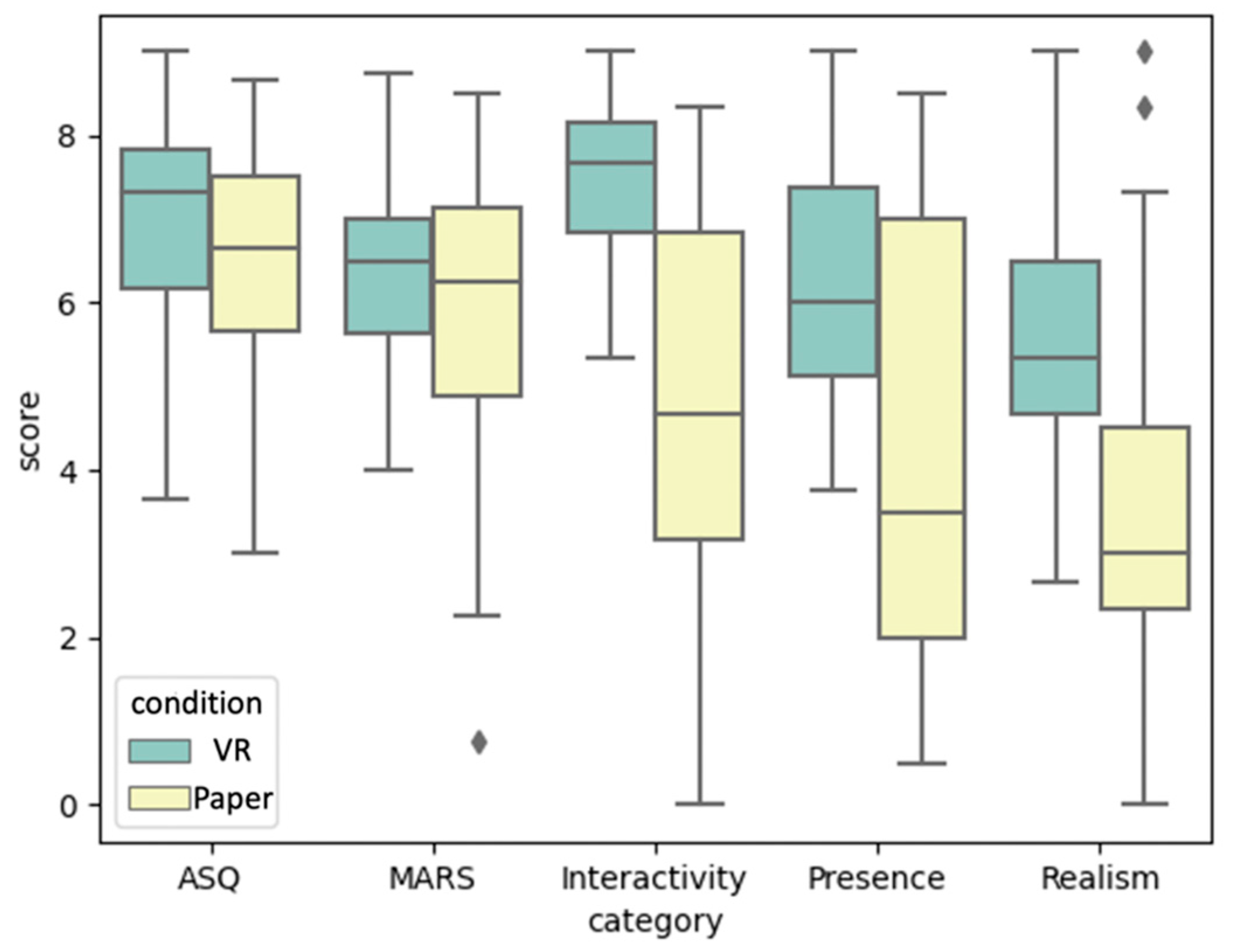

1. Quantitative comparative test
2. Qualitative reporting

“Some of my colleagues really couldn’t handle VR properly. They sometimes said: I’m too old for this, I can’t do this. Some things were just difficult, such as picking up an object. Sometimes VR does not do what you would expect it to do.” (Expert on content creation)

“When I finally saw the finished result, it felt already familiar in a way.” (Expert on social cohesion)

“VR places the product in a context and allows the user to see the product on a 1-to-1 scale. The same idea applies for smaller products that are 3D printed or viewed using Figma. It (VR) has a big added value for internal use even with people (designers) who have a lot of spatial cognition.” (Expert on product design)

“…with renders I cannot perform the actions. I really like that the experience was included with the visualization.” (Expert on content creation)

“I was impressed, I think this technology will keep improving as well. Most dimensions of the VR model were good, the proportions and structure of the VR model came very close to the final result.” (Expert on project management)

“I think it (VR prototype) produces procedural knowledge…you can explore the actions you can do in the final product…” (Expert on social cohesion)

“VR helped with the ability to experience interfaces and use of the to-be-developed product, it made the product immediately clear.” (Expert on project management)

“I think it was a lot of fun to do since it was my first VR experience.” (Expert on content creation)

“We want to be quite certain of the high-level concept before we start drawing in 3D. The original concept was made on paper, so VR can only be used after this phase in the development process.” (Expert on product design)

“The (Oculus) Quest is easy because it is very portable, so there is not a lot of overhead. Multiplayer is cool, but too much of a hassle to implement.” (Expert on project management)

“It is partly our job to take up an expert role and choose from this perspective and even have a preference for certain options.” (Expert on product design)

3.3. Final Product High-Fidelity Testing (FP)

“I am not gonna say it is the same, it looks the same.” (Expert on content creation)

“In VR everything seemed closer together and the prototype looked smaller. Also, pressing buttons made it unnatural for some people.” (Expert on project management)

“It was very noisy and warm inside. I felt more uncomfortable in real life. The VR test itself was realistic, but there might have been too little focus on the social context.” (Participant final test)

4. Discussion

4.1. VR as a Bridge Between Prototyping and User-Centred Design Validation

4.2. The Role of VRT in the Design Process

4.3. Enhancing VR Prototyping for Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Variable | Shapiro–Wilk W | Shapiro–Wilk p | Kolmogorov–Smirnov D | Kolmogorov–Smirnov p | Parametric/Non-parametric |
|---|---|---|---|---|---|
| Involvement_paper | 0.87 | 0.00 | 0.11 | 0.51 | Non-parametric |
| Involvement_VR | 0.95 | 0.20 | 0.15 | 0.10 | Parametric |
| Presence_paper | 0.91 | 0.02 | 0.16 | 0.07 | Non-parametric |
| Presence_VR | 0.96 | 0.42 | 0.12 | 0.42 | Parametric |
| Realism_paper | 0.94 | 0.15 | 0.15 | 0.14 | Parametric |
| Realism_VR | 0.98 | 0.79 | 0.10 | 0.73 | Parametric |
| Interactivity_paper | 0.97 | 0.54 | 0.09 | 0.83 | Parametric |
| Interactivity_VR | 0.97 | 0.55 | 0.12 | 0.45 | Parametric |
| MARS_paper | 0.92 | 0.04 | 0.14 | 0.18 | Non-parametric |
| MARS_VR | 0.98 | 0.92 | 0.09 | 0.79 | Parametric |
| ASQ_paper | 0.94 | 0.12 | 0.14 | 0.23 | Parametric |
| ASQ_VR | 0.93 | 0.08 | 0.20 | 0.01 | Non-parametric |
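
The classification in the last column of the Appendix A table appears to follow a simple rule: a variable is treated as non-parametric when either normality test rejects normality. The sketch below reproduces that column from the tabulated p-values. The significance level of 0.05 and the "either test rejects" rule are assumptions inferred from the table rows, not something the table states, and the names `P_VALUES`, `ALPHA`, and `classify` are illustrative only.

```python
# Normality-test p-values copied from the Appendix A table:
# variable -> (Shapiro-Wilk p, Kolmogorov-Smirnov p)
P_VALUES = {
    "Involvement_paper": (0.00, 0.51),
    "Involvement_VR": (0.20, 0.10),
    "Presence_paper": (0.02, 0.07),
    "Presence_VR": (0.42, 0.42),
    "Realism_paper": (0.15, 0.14),
    "Realism_VR": (0.79, 0.73),
    "Interactivity_paper": (0.54, 0.83),
    "Interactivity_VR": (0.55, 0.45),
    "MARS_paper": (0.04, 0.18),
    "MARS_VR": (0.92, 0.79),
    "ASQ_paper": (0.12, 0.23),
    "ASQ_VR": (0.08, 0.01),
}

# ASSUMPTION: alpha = 0.05 is not stated in the table; it is the
# conventional threshold and is consistent with every row.
ALPHA = 0.05


def classify(shapiro_p: float, kolmogorov_p: float, alpha: float = ALPHA) -> str:
    """Label a variable 'Non-parametric' if either test rejects normality."""
    if shapiro_p < alpha or kolmogorov_p < alpha:
        return "Non-parametric"
    return "Parametric"


# Apply the rule to every variable in the table.
labels = {name: classify(*p) for name, p in P_VALUES.items()}

for name, label in labels.items():
    print(f"{name:<22}{label}")
```

Under these assumptions the rule flags Involvement_paper, Presence_paper, MARS_paper, and ASQ_VR as non-parametric, which matches the table. ASQ_VR is the one case where the two tests disagree in the other direction: Shapiro–Wilk does not reject (p = 0.08) but Kolmogorov–Smirnov does (p = 0.01).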

| Product Development Stage | | |
|---|---|---|
| Strategize | Design | Launch and Assess |
| Research goal: Find new directions and opportunities | Research goal: Improve usability of design | Research goal: Measure product performance against itself or its competition |
| Generative research methods | Formative research methods | Summative research methods |
| Example Methods | | |
| Field studies, diary studies, interviews, surveys, participatory design, concept testing | Card sorting, tree testing, usability testing, remote testing (moderated and unmoderated) | Usability benchmarking, unmoderated UX testing, A/B testing, clickstream analytics, surveys |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Joundi, J.; Baccarne, B.; Robaeyst, B.; Bombeke, K.; De Marez, L.; Saldien, J. Comparing Virtual and Real-Life Rapid Prototyping Methods for User Testing Smart City Interfaces: A Case Study. Appl. Sci. 2024, 14, 9918. https://doi.org/10.3390/app14219918
