Interacting with Smart Virtual Assistants for Individuals with Dysarthria: A Comparative Study on Usability and User Preferences

Abstract

1. Introduction

- (i) Direct speech commands through Alexa (version 2.2). Alexa is a widely known hands-free smart voice assistant developed by Amazon [25]. Alexa was chosen because it is reported to be the most widely used device globally for natural language processing and voice-activated assistance [26]. It relies primarily on speech recognition and natural language processing to understand and respond to voice commands, taking voice commands as input and producing voice replies or performed actions as output. With this interaction method, users send commands directly to the SVA by uttering a full sentence, for example, “Alexa, what is the weather today?”

- (ii) Nonverbal voice cues through the Daria system [15,27]. We refer to this system as “Daria”, an easy-to-pronounce name whose letters all appear in “DysARthrIA” in the same order. The choice of nonverbal cues was informed by emerging research indicating the potential of nonverbal vocalizations to enhance interaction for individuals who have speech impairments [15,27]. Daria is a custom-developed system that allows interaction with SVAs through nonverbal voice cues, offering a more straightforward, shorter, and less fatiguing alternative to traditional speech commands. For example, users can simply make the sound /α/ (“aaa”) to turn on the lights, which is considerably simpler than uttering a full sentence such as “Alexa, turn on the lights”. Daria is programmed with five distinct nonverbal voice cues, each mapped to a specific action: /α/ for lights, /i/ for news, /ŋ/ to initiate a call, humming for music, and /u/ for weather updates. This design is intended to provide ease of control and enhanced accessibility, particularly for users who have severe dysarthria, allowing them to perform a variety of tasks with minimal effort. Prior studies on the design of Daria [15,27] underscore its primary goal of empowering individuals who have dysarthria. The system was designed in collaboration with individuals diagnosed with dysarthria so that it is attuned to their specific communication challenges and preferences.

- (iii)

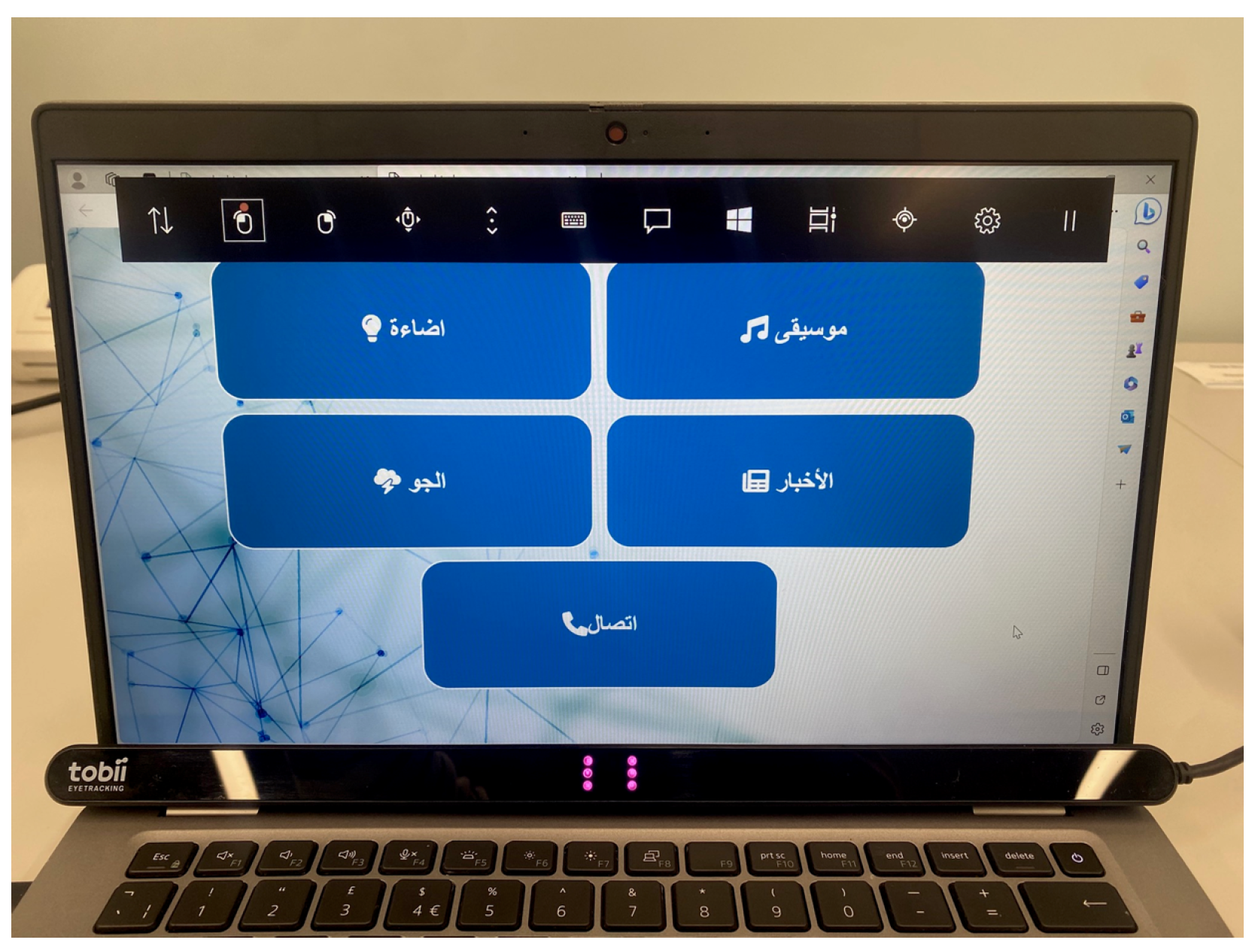

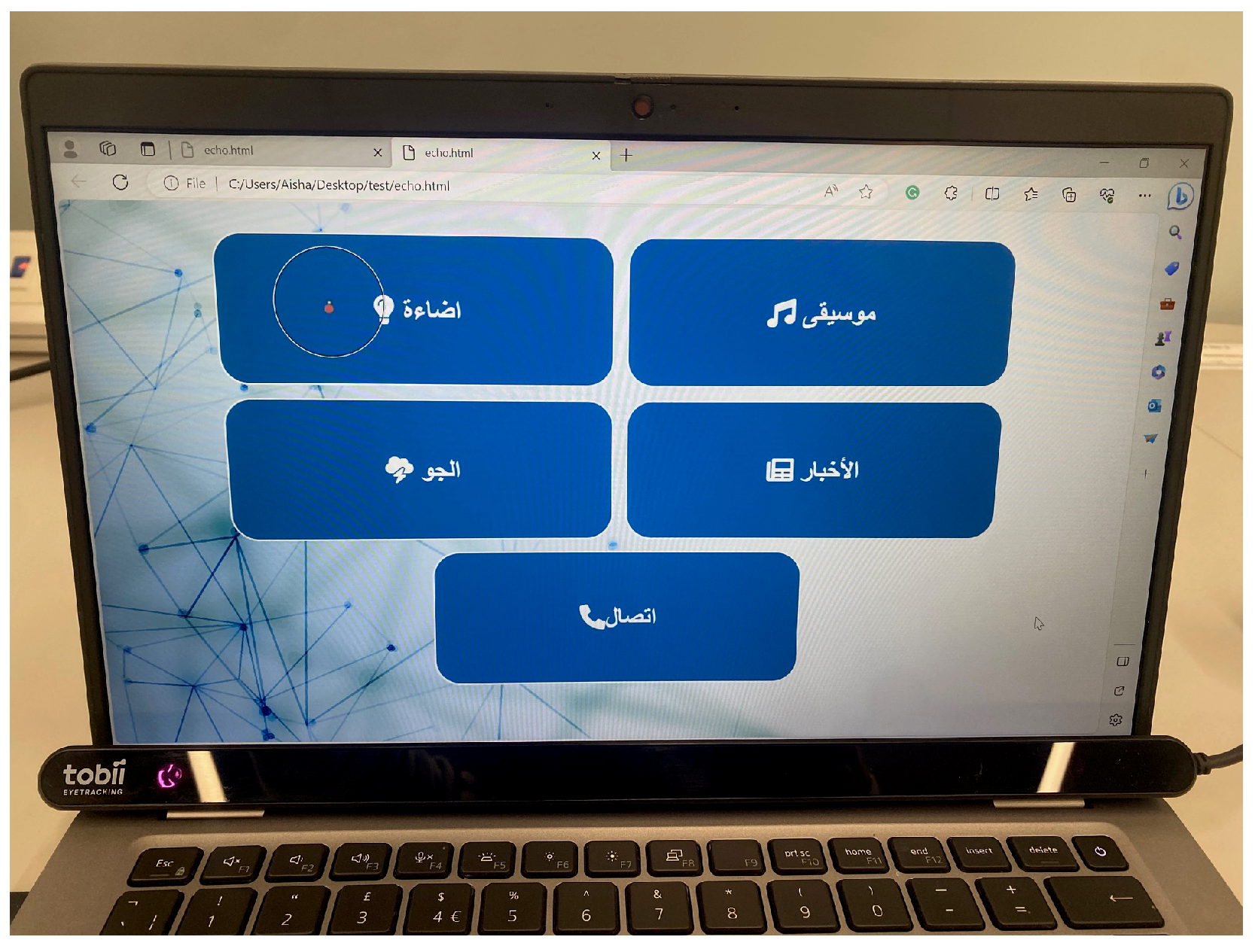

- Eye gaze control. This method employs eye gaze control by which users control a tablet connected to the SVA using only their eyes.
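
The cue-to-action mapping described for Daria can be pictured as a simple lookup table. The sketch below is illustrative only: the names `CUE_ACTIONS` and `action_for_cue` and the string labels used as keys are assumptions made for this example, and nothing here reflects how Daria detects cues or is actually implemented.

```python
from typing import Optional

# Cue-to-action pairs as listed in the description of Daria.
# The string keys are illustrative labels for an already-recognised cue;
# they are not identifiers taken from the actual system.
CUE_ACTIONS = {
    "/α/": "lights",
    "/i/": "news",
    "/ŋ/": "call",
    "humming": "music",
    "/u/": "weather",
}


def action_for_cue(cue: str) -> Optional[str]:
    """Return the action mapped to a recognised cue, or None if it is unknown."""
    return CUE_ACTIONS.get(cue)


if __name__ == "__main__":
    for cue in ("/α/", "humming", "/e/"):
        print(cue, "->", action_for_cue(cue))
```

Returning `None` for an unmapped sound keeps the hypothetical dispatcher from triggering an action on an unrecognised cue.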

2. Background

3. Methods

- The usability attribute measured the user’s ease and efficiency in interacting with the system. It was measured using the System Usability Scale (SUS), a widely used usability questionnaire [39] that has been applied across various domains, including SVAs [40,41,42,43,44]. The survey comprises 10 statements, each rated on a 5-point Likert scale ranging from strongly disagree to strongly agree.

- The effectiveness attribute measured the user’s ability to complete a task (task success rate) [28]. A task was considered successful if the SVA replied to or performed the requested command. Success was recorded during the study and confirmed against video recordings. Whereas the SUS provides subjective user feedback, the effectiveness attribute offers objective data on how well a system achieves its intended tasks [45], and many studies have used it in combination with the SUS [45,46,47,48].

- The preference for each system was evaluated through direct feedback from a post-study interview, which focused specifically on system preference as an indicator of satisfaction [49]. This approach complemented the other measures and provided a deeper understanding of the user feedback.

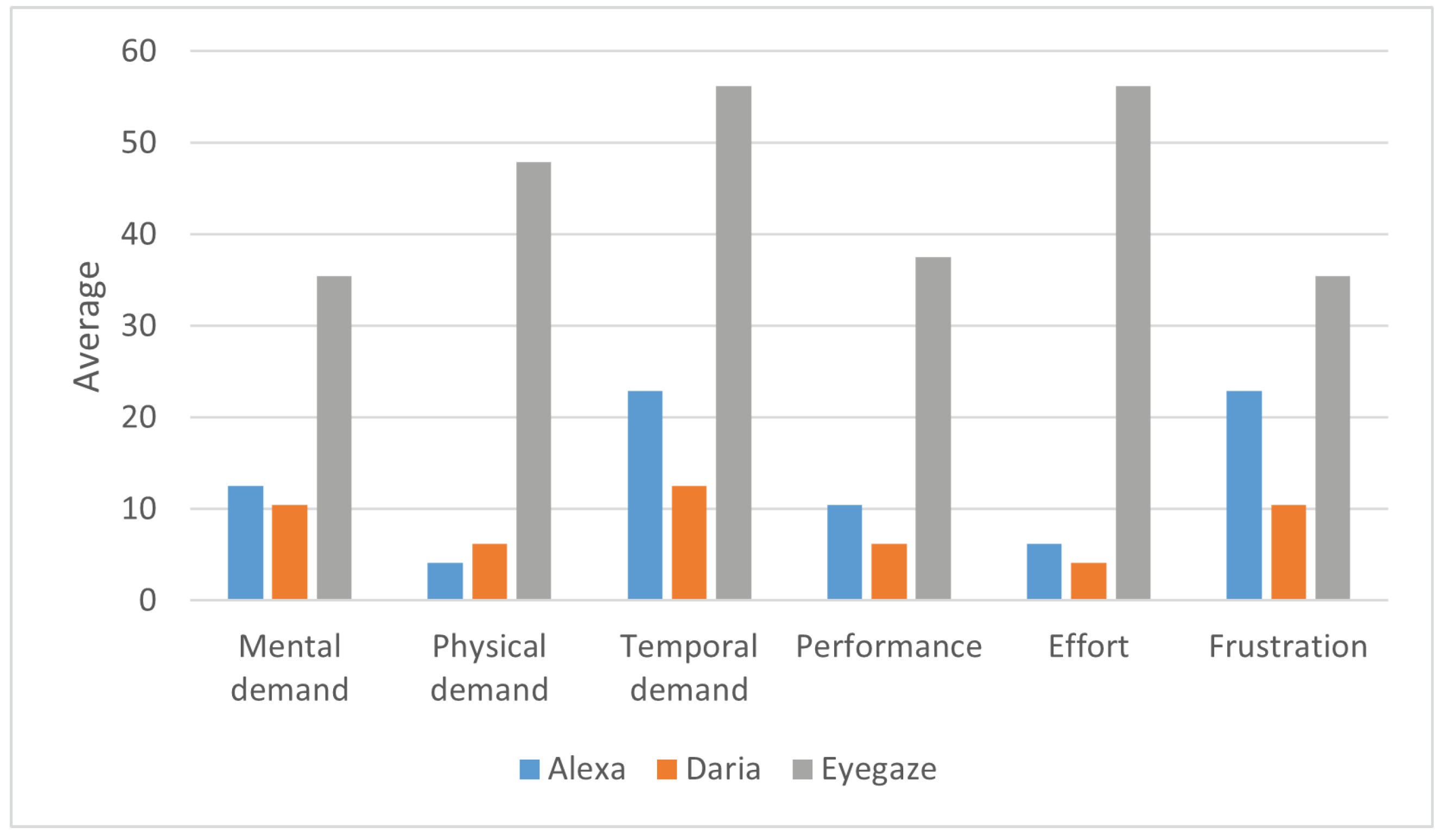

- The workload attribute captured the effort required to perform a task. It was measured using the NASA Task Load Index (NASA-TLX) questionnaire [50], which comprises six subscales: mental demand, physical demand, temporal demand, performance, effort, and frustration level. This measure was particularly important because individuals who have dysarthria often experience rapid fatigue. By employing the NASA-TLX, we aimed to gain a deeper understanding of the workload implications for this specific user group [41,44,51].
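
Two of the measures above reduce to simple arithmetic. The sketch below uses the standard SUS scoring rule (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5) and computes the task success rate as the percentage of successful tasks. The function names and the sample responses are hypothetical; they are not taken from the study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten 1-5 Likert responses, item 1 first."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS needs ten responses, each between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            total += response - 1   # items 1, 3, 5, 7, 9 (positively worded)
        else:
            total += 5 - response   # items 2, 4, 6, 8, 10 (negatively worded)
    return total * 2.5              # rescale the 0-40 sum to 0-100


def task_success_rate(outcomes: Sequence[bool]) -> float:
    """Percentage of attempted tasks that were completed successfully."""
    if not outcomes:
        raise ValueError("no task outcomes recorded")
    return 100.0 * sum(outcomes) / len(outcomes)


if __name__ == "__main__":
    # Hypothetical participant: agrees with positive items, disagrees with negative ones.
    print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))      # 75.0
    print(task_success_rate([True, True, True, False]))   # 75.0
```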

3.1. Participants

3.2. Setup and Equipment

4. Results

4.1. SUS

4.2. Workload

4.3. Task Success Rate

4.4. Preference

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kepuska, V.; Bohouta, G. Next-generation of virtual personal assistants (microsoft cortana, apple siri, amazon alexa and google home). In Proceedings of the 2018 IEEE 8th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; pp. 99–103.

- Hoy, M.B. Alexa, Siri, Cortana, and more: An introduction to voice assistants. Med. Ref. Serv. Q. 2018, 37, 81–88.

- Bentley, F.; Luvogt, C.; Silverman, M.; Wirasinghe, R.; White, B.; Lottridge, D. Understanding the long-term use of smart speaker assistants. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 91.

- Moore, C. OK, Google: What Can Home Do? The Speaker’s Most Useful Skills. Available online: https://www.digitaltrends.com/home/google-home-most-useful-skills/ (accessed on 15 December 2023).

- Ammari, T.; Kaye, J.; Tsai, J.Y.; Bentley, F. Music, search, and IoT: How people (really) use voice assistants. ACM Trans. Comput.-Hum. Interact. 2019, 26, 17.

- Sciarretta, E.; Alimenti, L. Smart speakers for inclusion: How can intelligent virtual assistants really assist everybody? In Human-Computer Interaction. Theory, Methods and Tools: Thematic Area, HCI 2021, Held as Part of the 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021, Proceedings, Part I 23; Springer: Cham, Switzerland, 2021; pp. 77–93.

- Masina, F.; Orso, V.; Pluchino, P.; Dainese, G.; Volpato, S.; Nelini, C.; Mapelli, D.; Spagnolli, A.; Gamberini, L. Investigating the accessibility of voice assistants with impaired users: Mixed methods study. J. Med. Internet Res. 2020, 22, e18431.

- Corbett, E.; Weber, A. What can I say? addressing user experience challenges of a mobile voice user interface for accessibility. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 72–82.

- Morris, J.T.; Thompson, N.A.; Center, S. User personas: Smart speakers, home automation and people with disabilities. J. Technol. Pers. Disabil. 2020, 8, 237–256.

- Takashima, Y.; Takiguchi, T.; Ariki, Y. End-to-end dysarthric speech recognition using multiple databases. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6395–6399.

- Pradhan, A.; Mehta, K.; Findlater, L. “Accessibility Came by Accident” Use of Voice-Controlled Intelligent Personal Assistants by People with Disabilities. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–13.

- Masina, F.; Pluchino, P.; Orso, V.; Ruggiero, R.; Dainese, G.; Mameli, I.; Volpato, S.; Mapelli, D.; Gamberini, L. VOICE Actuated Control Systems (VACS) for accessible and assistive smart homes. A preliminary investigation on accessibility and user experience with disabled users. In Proceedings of the Ambient Assisted Living: Italian Forum 2019; Springer: Cham, Switzerland, 2021; pp. 153–160.

- De Russis, L.; Corno, F. On the impact of dysarthric speech on contemporary ASR cloud platforms. J. Reliab. Intell. Environ. 2019, 5, 163–172.

- Teixeira, A.; Braga, D.; Coelho, L.; Fonseca, J.; Alvarelhão, J.; Martín, I.; Queirós, A.; Rocha, N.; Calado, A.; Dias, M. Speech as the basic interface for assistive technology. In Proceedings of the DSAI 2009, 2nd International Conference on Software Development for Enhancing Accessibility and Fighting Info-Exclusion, Lisboa, Portugal, 3–5 June 2009.

- Jaddoh, A.; Loizides, F.; Lee, J.; Rana, O. An interaction framework for designing systems for virtual home assistants and people with dysarthria. Univers. Access Inf. Soc. 2023, 1–13.

- Fried-Oken, M.; Fox, L.; Rau, M.T.; Tullman, J.; Baker, G.; Hindal, M.; Wile, N.; Lou, J.S. Purposes of AAC device use for persons with ALS as reported by caregivers. Augment. Altern. Commun. 2006, 22, 209–221.

- Beukelman, D.R.; Mirenda, P. Augmentative and Alternative Communication; Paul H. Brookes: Baltimore, MD, USA, 1998.

- Bryen, D.N.; Chung, Y. What adults who use AAC say about their use of mainstream mobile technologies. Assist. Technol. Outcomes Benefits 2018, 12, 73–106.

- Ballati, F.; Corno, F.; De Russis, L. Assessing virtual assistant capabilities with italian dysarthric speech. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility, Galway, Ireland, 22–24 October 2018; pp. 93–101.

- Curtis, H.; Neate, T.; Vazquez Gonzalez, C. State of the Art in AAC: A Systematic Review and Taxonomy. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility, Athens, Greece, 23–26 October 2022; pp. 1–22.

- Communication Matters. Types of AAC. Available online: https://www.communicationmatters.org.uk/what-is-aac/types-of-aac/ (accessed on 11 December 2023).

- Bissoli, A.; Lavino-Junior, D.; Sime, M.; Encarnação, L.; Bastos-Filho, T. A human–machine interface based on eye tracking for controlling and monitoring a smart home using the internet of things. Sensors 2019, 19, 859.

- Pasqualotto, E.; Matuz, T.; Federici, S.; Ruf, C.A.; Bartl, M.; Olivetti Belardinelli, M.; Birbaumer, N.; Halder, S. Usability and workload of access technology for people with severe motor impairment: A comparison of brain-computer interfacing and eye tracking. Neurorehabilit. Neural Repair 2015, 29, 950–957.

- Ansel, B.M.; Kent, R.D. Acoustic-phonetic contrasts and intelligibility in the dysarthria associated with mixed cerebral palsy. J. Speech Lang. Hear. Res. 1992, 35, 296–308.

- Alexa. Available online: https://www.amazon.com/b?node=21576558011 (accessed on 15 December 2023).

- Statista.com. Number of Households with Smart Home Products and Services in Use Worldwide from 2017 to 2025. Available online: https://www.statista.com/statistics/1252975/smart-home-households-worldwide/ (accessed on 12 December 2023).

- Jaddoh, A.; Loizides, F.; Rana, O. Non-verbal interaction with virtual home assistants for people with dysarthria. J. Technol. Pers. Disabil. 2021, 9, 71–84.

- ISO 9241-11:2018; Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs), Part 11: Guidance on Usability. ISO: Geneva, Switzerland, 2018.

- Ballati, F.; Corno, F.; De Russis, L. “Hey Siri, Do You Understand Me?”: Virtual Assistants and Dysarthria. In Proceedings of the International Workshop on the Reliability of Intelligent Environments (Workshops), Rome, Italy, 25–28 June 2018; pp. 557–566.

- Moore, M.; Venkateswara, H.; Panchanathan, S. Whistle-blowing asrs: Evaluating the need for more inclusive automatic speech recognition systems. In Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, Hyderabad, India, 2–6 September 2018; Volume 2018, pp. 466–470.

- Derboven, J.; Huyghe, J.; De Grooff, D. Designing voice interaction for people with physical and speech impairments. In Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational, Helsinki, Finland, 26–30 October 2014; pp. 217–226.

- Moore, M. Speech Recognition for Individuals with Voice Disorders. In Multimedia for Accessible Human Computer Interfaces; Springer: Cham, Switzerland, 2021; pp. 115–144.

- Corno, F.; Farinetti, L.; Signorile, I. A cost-effective solution for eye-gaze assistive technology. In Proceedings of the IEEE International Conference on Multimedia and Expo, Lausanne, Switzerland, 26–29 August 2002; Volume 2, pp. 433–436.

- Hemmingsson, H.; Borgestig, M. Usability of eye-gaze controlled computers in Sweden: A total population survey. Int. J. Environ. Res. Public Health 2020, 17, 1639.

- Caligari, M.; Godi, M.; Guglielmetti, S.; Franchignoni, F.; Nardone, A. Eye tracking communication devices in amyotrophic lateral sclerosis: Impact on disability and quality of life. Amyotroph. Lateral Scler. Front. Degener. 2013, 14, 546–552.

- Karlsson, P.; Allsop, A.; Dee-Price, B.J.; Wallen, M. Eye-gaze control technology for children, adolescents and adults with cerebral palsy with significant physical disability: Findings from a systematic review. Dev. Neurorehabilit. 2018, 21, 497–505.

- Donegan, M.; Morris, J.D.; Corno, F.; Signorile, I.; Chió, A.; Pasian, V.; Vignola, A.; Buchholz, M.; Holmqvist, E. Understanding users and their needs. Univers. Access Inf. Soc. 2009, 8, 259–275.

- Najafi, L.; Friday, M.; Robertson, Z. Two case studies describing assessment and provision of eye gaze technology for people with severe physical disabilities. J. Assist. Technol. 2008, 2, 6–12.

- Gil-Gómez, J.A.; Manzano-Hernández, P.; Albiol-Pérez, S.; Aula-Valero, C.; Gil-Gómez, H.; Lozano-Quilis, J.A. USEQ: A short questionnaire for satisfaction evaluation of virtual rehabilitation systems. Sensors 2017, 17, 1589.

- Kocaballi, A.B.; Laranjo, L.; Coiera, E. Measuring user experience in conversational interfaces: A comparison of six questionnaires. In Proceedings of the 32nd International BCS Human Computer Interaction Conference, Belfast, UK, 4–6 July 2018.

- Vtyurina, A.; Fourney, A. Exploring the role of conversational cues in guided task support with virtual assistants. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–7.

- Pyae, A.; Joelsson, T.N. Investigating the usability and user experiences of voice user interface: A case of Google home smart speaker. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, Barcelona, Spain, 3–6 September 2018; pp. 127–131.

- Bogers, T.; Al-Basri, A.A.A.; Ostermann Rytlig, C.; Bak Møller, M.E.; Juhl Rasmussen, M.; Bates Michelsen, N.K.; Gerling Jørgensen, S. A study of usage and usability of intelligent personal assistants in Denmark. In Information in Contemporary Society, Proceedings of the 14th International Conference, iConference 2019, Washington, DC, USA, 31 March–3 April 2019; Proceedings 14; Springer: Cham, Switzerland, 2019; pp. 79–90.

- Anbarasan; Lee, J.S. Speech and gestures for smart-home control and interaction for older adults. In Proceedings of the 3rd International Workshop on Multimedia for Personal Health and Health Care, Seoul, Republic of Korea, 22 October 2018; pp. 49–57.

- Kortum, P.; Peres, S.C. The relationship between system effectiveness and subjective usability scores using the System Usability Scale. Int. J. Hum.-Comput. Interact. 2014, 30, 575–584.

- Demir, F.; Kim, D.; Jung, E. Hey Google, Help Doing My Homework: Surveying Voice Interactive Systems. J. Usability Stud. 2022, 18, 41–61.

- Iannizzotto, G.; Bello, L.L.; Nucita, A.; Grasso, G.M. A vision and speech enabled, customizable, virtual assistant for smart environments. In Proceedings of the 2018 11th International Conference on Human System Interaction (HSI), Gdansk, Poland, 4–6 July 2018; pp. 50–56.

- Barricelli, B.R.; Fogli, D.; Iemmolo, L.; Locoro, A. A multi-modal approach to creating routines for smart speakers. In Proceedings of the 2022 International Conference on Advanced Visual Interfaces, Frascati, Italy, 6–10 June 2022; pp. 1–5.

- Frøkjær, E.; Hertzum, M.; Hornbæk, K. Measuring usability: Are effectiveness, efficiency, and satisfaction really correlated? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 345–352.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

- Kim, S.; Ko, I.Y. A Conversational Approach for Modifying Service Mashups in IoT Environments. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022; pp. 1–16.

- Tobii. Tobii Eye Tracker. Available online: https://www.tobii.com/ (accessed on 11 December 2023).

- Feit, A.M.; Williams, S.; Toledo, A.; Paradiso, A.; Kulkarni, H.; Kane, S.; Morris, M.R. Toward everyday gaze input: Accuracy and precision of eye tracking and implications for design. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1118–1130.

- Wobbrock, J.O.; Kane, S.K.; Gajos, K.Z.; Harada, S.; Froehlich, J. Ability-based design: Concept, principles and examples. ACM Trans. Access. Comput. 2011, 3, 9.

- Patel, R.; Dromey, C.; Kunov, H. Control of Prosodic Parameters by an Individual with Severe Dysarthria; Technical Report; University of Toronto: Toronto, ON, Canada, 1998.

- Ferrier, L.; Shane, H.; Ballard, H.; Carpenter, T.; Benoit, A. Dysarthric speakers’ intelligibility and speech characteristics in relation to computer speech recognition. Augment. Altern. Commun. 1995, 11, 165–175.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Unified theory of acceptance and use of technology: A synthesis and the road ahead. J. Assoc. Inf. Syst. 2016, 17, 328–376.

- Munteanu, C.; Jones, M.; Oviatt, S.; Brewster, S.; Penn, G.; Whittaker, S.; Rajput, N.; Nanavati, A. We need to talk: HCI and the delicate topic of spoken language interaction. In CHI’13 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2013; pp. 2459–2464.

- Wang, Y. Gaps between continuous measurement methods: A longitudinal study of perceived usability. Interact. Comput. 2021, 33, 223–237.

- Kabacińska, K.; Vu, K.; Tam, M.; Edwards, O.; Miller, W.C.; Robillard, J.M. “Functioning better is doing better”: Older adults’ priorities for the evaluation of assistive technology. Assist. Technol. 2023, 35, 367–373.

- Wang, K.; Wang, S.; Ji, Q. Deep eye fixation map learning for calibration-free eye gaze tracking. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; pp. 47–55.

- Arthanat, S.; Bauer, S.M.; Lenker, J.A.; Nochajski, S.M.; Wu, Y.W.B. Conceptualization and measurement of assistive technology usability. Disabil. Rehabil. Assist. Technol. 2007, 2, 235–248.

| Participant | Gender | Severity | Age Range | Diagnosis |
|---|---|---|---|---|
| P1 | Male | Mild | 25–44 | Traumatic brain injury |
| P2 | Male | Mild | 45–65 | Stroke |
| P3 | Female | Mild | 25–44 | Cerebral palsy |
| P4 | Male | Moderate | 45–65 | Spinal cord injury |
| P5 | Male | Moderate | 25–44 | Traumatic brain injury |
| P6 | Male | Severe | 25–44 | Stroke |
| P7 | Male | Severe | 25–44 | Traumatic brain injury |
| P8 | Male | Severe | 18–24 | Traumatic brain injury |

| Category | System | Mean Rank | Chi-Square | p-Value | p-Value Assessment |
|---|---|---|---|---|---|
| Mental demand | Daria | 2.25 | 3.50 | 0.174 | Not Significant |
| | Eye gaze | 1.63 | | | |
| | Alexa | 2.13 | | | |
| Physical demand | Daria | 2.31 | 11.47 | 0.003 | Significant |
| | Eye gaze | 1.25 | | | |
| | Alexa | 2.44 | | | |
| Temporal demand | Daria | 2.44 | 5.85 | 0.054 | Not Significant |
| | Eye gaze | 1.38 | | | |
| | Alexa | 2.19 | | | |
| Performance | Daria | 2.31 | 6.42 | 0.040 | Significant |
| | Eye gaze | 1.44 | | | |
| | Alexa | 2.25 | | | |
| Effort | Daria | 2.50 | 8.96 | 0.011 | Significant |
| | Eye gaze | 1.25 | | | |
| | Alexa | 2.25 | | | |
| Frustration | Daria | 2.38 | 2.82 | 0.244 | Not Significant |
| | Eye gaze | 1.69 | | | |
| | Alexa | 1.94 | | | |
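
The mean ranks and chi-square values above are the kind of output a Friedman test produces when each participant rates three systems. This section does not name the test, so treating it as a Friedman test is an assumption. Under that assumption, the sketch below shows how mean ranks and a tie-corrected chi-square statistic can be computed, and checks that, with three systems (two degrees of freedom), the p-value is exp(−χ²/2). The function names are hypothetical, and the code uses no participant-level data from the study.

```python
import math
from typing import List, Sequence, Tuple


def rank_row(values: Sequence[float]) -> List[float]:
    """Rank one participant's scores (1 = lowest); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average = (start + end) / 2 + 1  # mean of rank positions start+1 .. end+1
        for position in range(start, end + 1):
            ranks[order[position]] = average
        start = end + 1
    return ranks


def friedman(scores: Sequence[Sequence[float]]) -> Tuple[List[float], float]:
    """Return (mean rank per system, tie-corrected chi-square).

    `scores` holds one row per participant and one column per system.
    """
    n, k = len(scores), len(scores[0])
    ranked = [rank_row(row) for row in scores]
    rank_sums = [sum(row[j] for row in ranked) for j in range(k)]
    sum_squared_ranks = sum(r * r for row in ranked for r in row)
    correction = n * k * (k + 1) ** 2 / 4
    denominator = sum_squared_ranks - correction
    if denominator == 0:
        raise ValueError("every participant gave identical scores to all systems")
    numerator = (k - 1) * (sum(s * s for s in rank_sums) - n * correction)
    return [s / n for s in rank_sums], numerator / denominator


def p_value_df2(chi_square: float) -> float:
    """Chi-square survival function for 2 degrees of freedom (three systems)."""
    return math.exp(-chi_square / 2)


if __name__ == "__main__":
    # Chi-square values copied from the table; the printed p-values match its p-Value column.
    for name, chi in [("Mental demand", 3.50), ("Physical demand", 11.47),
                      ("Temporal demand", 5.85), ("Performance", 6.42),
                      ("Effort", 8.96), ("Frustration", 2.82)]:
        print(f"{name}: p = {p_value_df2(chi):.3f}")
```

The closed-form p-value holds only for two degrees of freedom; a comparison of more than three systems would need a general chi-square survival function.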

| Category | System | Mean | p-Value | p-Value Assessment |
|---|---|---|---|---|
| Mental demand | Daria | 93.75 | 0.223 | Not Significant |
| | Eye gaze | 43.75 | | |
| | Daria | 93.75 | 0.317 | Not Significant |
| | Alexa | 77.08 | | |
| | Eye gaze | 43.75 | 0.223 | Not Significant |
| | Alexa | 77.08 | | |
| Physical demand | Daria | 93.75 | 0.025 | Significant |
| | Eye gaze | 52.08 | | |
| | Daria | 93.75 | 0.317 | Not Significant |
| | Alexa | 95.83 | | |
| | Eye gaze | 52.08 | 0.024 | Significant |
| | Alexa | 95.83 | | |
| Temporal demand | Daria | 89.58 | 0.020 | Significant |
| | Eye gaze | 64.58 | | |
| | Daria | 89.58 | 0.285 | Not Significant |
| | Alexa | 87.50 | | |
| | Eye gaze | 64.58 | 0.205 | Not Significant |
| | Alexa | 87.50 | | |
| Performance | Daria | 93.75 | 0.041 | Significant |
| | Eye gaze | 62.50 | | |
| | Daria | 93.75 | 0.655 | Not Significant |
| | Alexa | 89.58 | | |
| | Eye gaze | 62.50 | 0.242 | Not Significant |
| | Alexa | 89.58 | | |
| Effort | Daria | 95.83 | 0.018 | Significant |
| | Eye gaze | 43.75 | | |
| | Daria | 95.83 | 0.564 | Not Significant |
| | Alexa | 93.74 | | |
| | Eye gaze | 43.75 | 0.042 | Significant |
| | Alexa | 93.74 | | |
| Frustration | Daria | 89.58 | 0.068 | Not Significant |
| | Eye gaze | 64.58 | | |
| | Daria | 89.58 | 0.461 | Not Significant |
| | Alexa | 77.08 | | |
| | Eye gaze | 64.58 | 0.498 | Not Significant |
| | Alexa | 77.08 | | |
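
The p-values above come from pairwise comparisons between systems; this section does not say which test produced them. A common choice for paired ordinal data from a small sample is the Wilcoxon signed-rank test, and the sketch below assumes that test, using the normal approximation with tie correction and no continuity correction. The function name and the sample scores are hypothetical and are not the study's data.

```python
import math
from collections import Counter
from typing import Sequence


def wilcoxon_signed_rank_p(first: Sequence[float], second: Sequence[float]) -> float:
    """Two-sided asymptotic p-value for paired samples (assumed test, see lead-in)."""
    diffs = [a - b for a, b in zip(first, second) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 1.0
    # Rank the absolute differences; tied magnitudes share the average rank.
    tie_sizes = Counter(abs(d) for d in diffs)
    rank_of = {}
    smaller = 0  # how many differences have a strictly smaller magnitude
    for magnitude in sorted(tie_sizes):
        count = tie_sizes[magnitude]
        rank_of[magnitude] = smaller + (count + 1) / 2
        smaller += count
    w_plus = sum(rank_of[abs(d)] for d in diffs if d > 0)
    mean = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24
    variance -= sum(t ** 3 - t for t in tie_sizes.values()) / 48  # tie correction
    z = (w_plus - mean) / math.sqrt(variance)
    return math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability


if __name__ == "__main__":
    # Hypothetical scores: three participants score higher on system A, one on system B.
    system_a = [100, 100, 100, 75]
    system_b = [75, 75, 75, 100]
    print(round(wilcoxon_signed_rank_p(system_a, system_b), 3))  # 0.317
```

With so few distinct differences the statistic takes only a handful of values, which is one reason small paired samples often yield identical p-values across comparisons.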

| Preference | Participant | Severity |
|---|---|---|
| Alexa | P1 | Mild |
| | P5 | Moderate |
| Daria | P2 | Mild |
| | P3 | Mild |
| | P6 | Severe |
| | P7 | Severe |
| | P8 | Severe |
| Eye gaze | P4 | Moderate |
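
The preferences above can be tallied per system and per severity level. The short sketch below copies the rows from the table; the variable names are illustrative.

```python
from collections import Counter

# (participant, severity, preferred system), copied from the preference table.
PREFERENCES = [
    ("P1", "Mild", "Alexa"),
    ("P5", "Moderate", "Alexa"),
    ("P2", "Mild", "Daria"),
    ("P3", "Mild", "Daria"),
    ("P6", "Severe", "Daria"),
    ("P7", "Severe", "Daria"),
    ("P8", "Severe", "Daria"),
    ("P4", "Moderate", "Eye gaze"),
]

# Count how many participants preferred each system.
by_system = Counter(system for _, _, system in PREFERENCES)
# Count preferences for each (severity, system) combination.
by_severity = Counter((severity, system) for _, severity, system in PREFERENCES)

print(by_system.most_common())            # [('Daria', 5), ('Alexa', 2), ('Eye gaze', 1)]
print(by_severity[("Severe", "Daria")])   # 3
```

Five of the eight participants preferred Daria, including all three participants with severe dysarthria.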

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jaddoh, A.; Loizides, F.; Rana, O.; Syed, Y.A. Interacting with Smart Virtual Assistants for Individuals with Dysarthria: A Comparative Study on Usability and User Preferences. Appl. Sci. 2024, 14, 1409. https://doi.org/10.3390/app14041409
