Online Street View-Based Approach for Sky View Factor Estimation: A Case Study of Nanjing, China

Abstract

1. Introduction

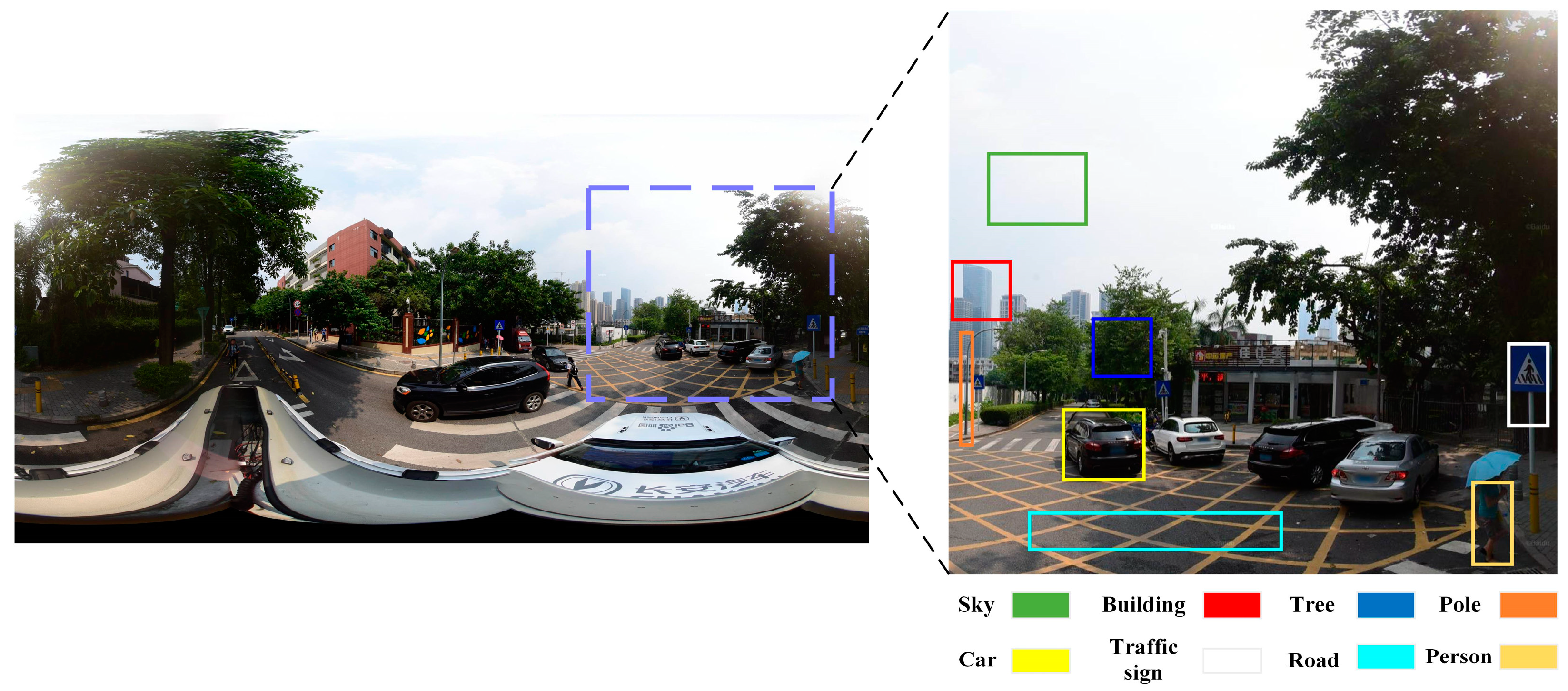

2. Materials and Methods

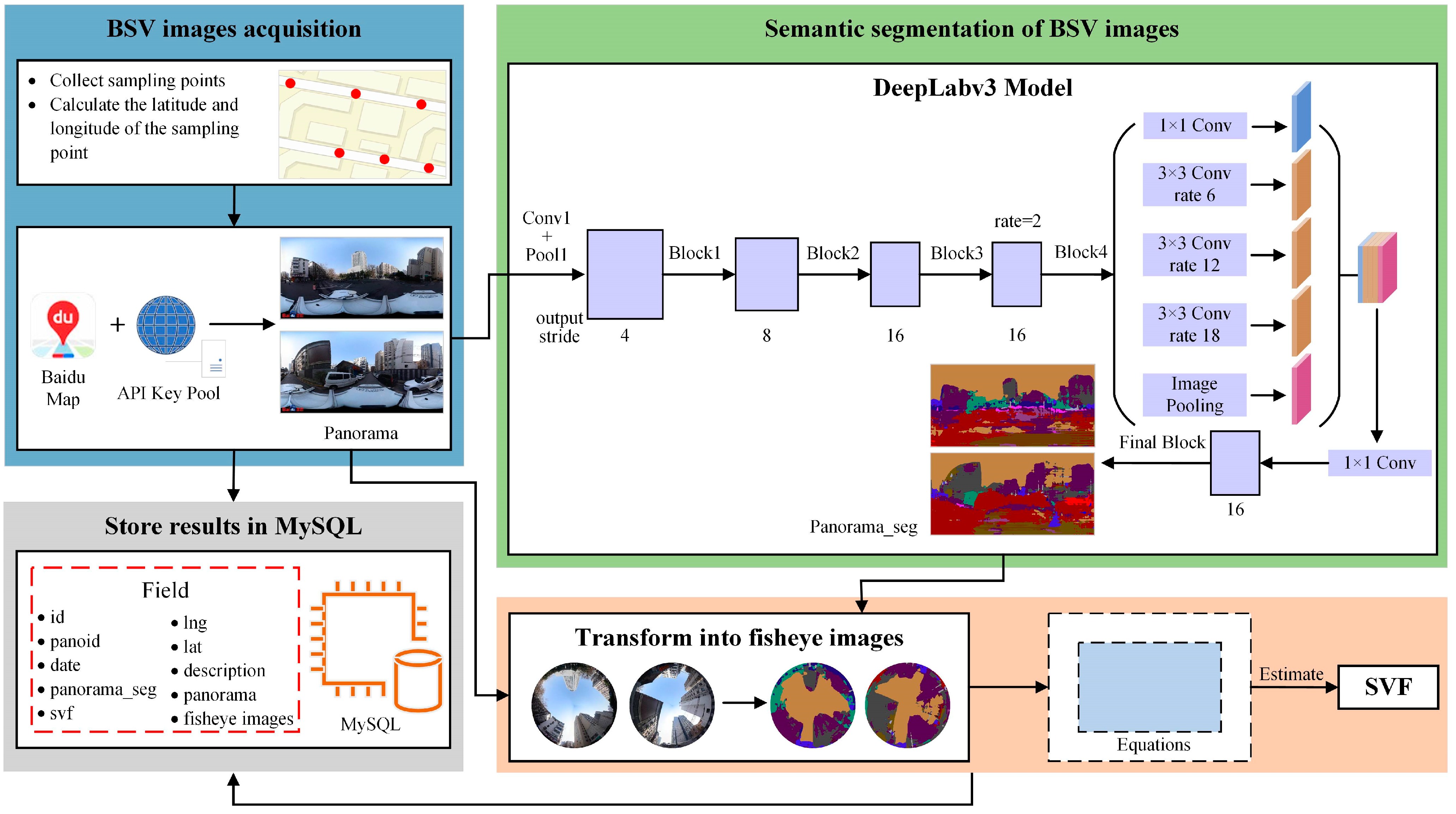

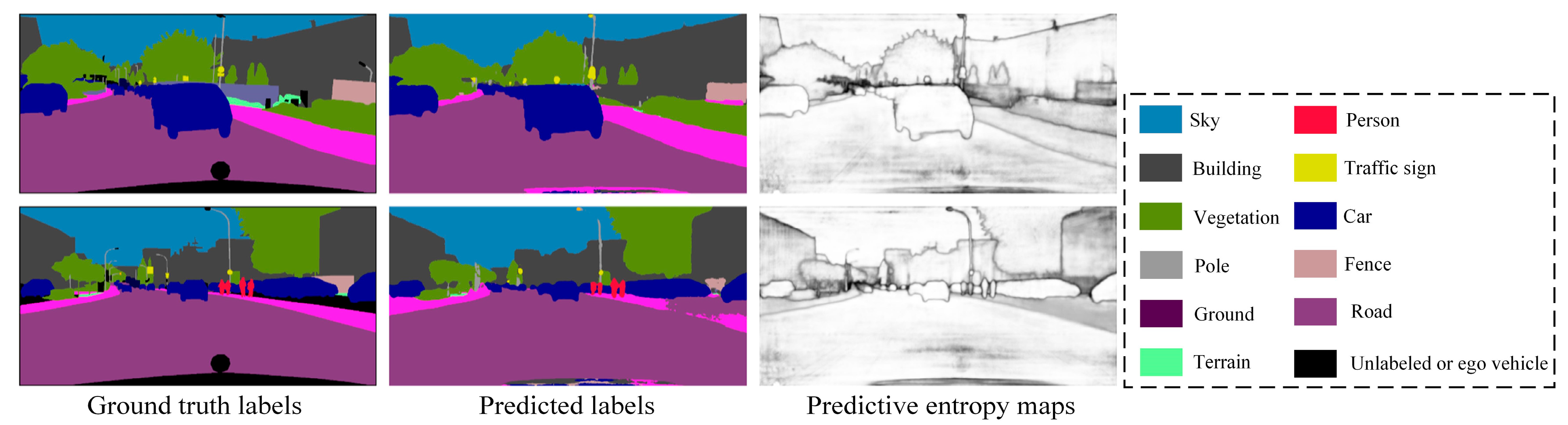

2.1. Estimation of the SVF with the BMapSVF-Based Method

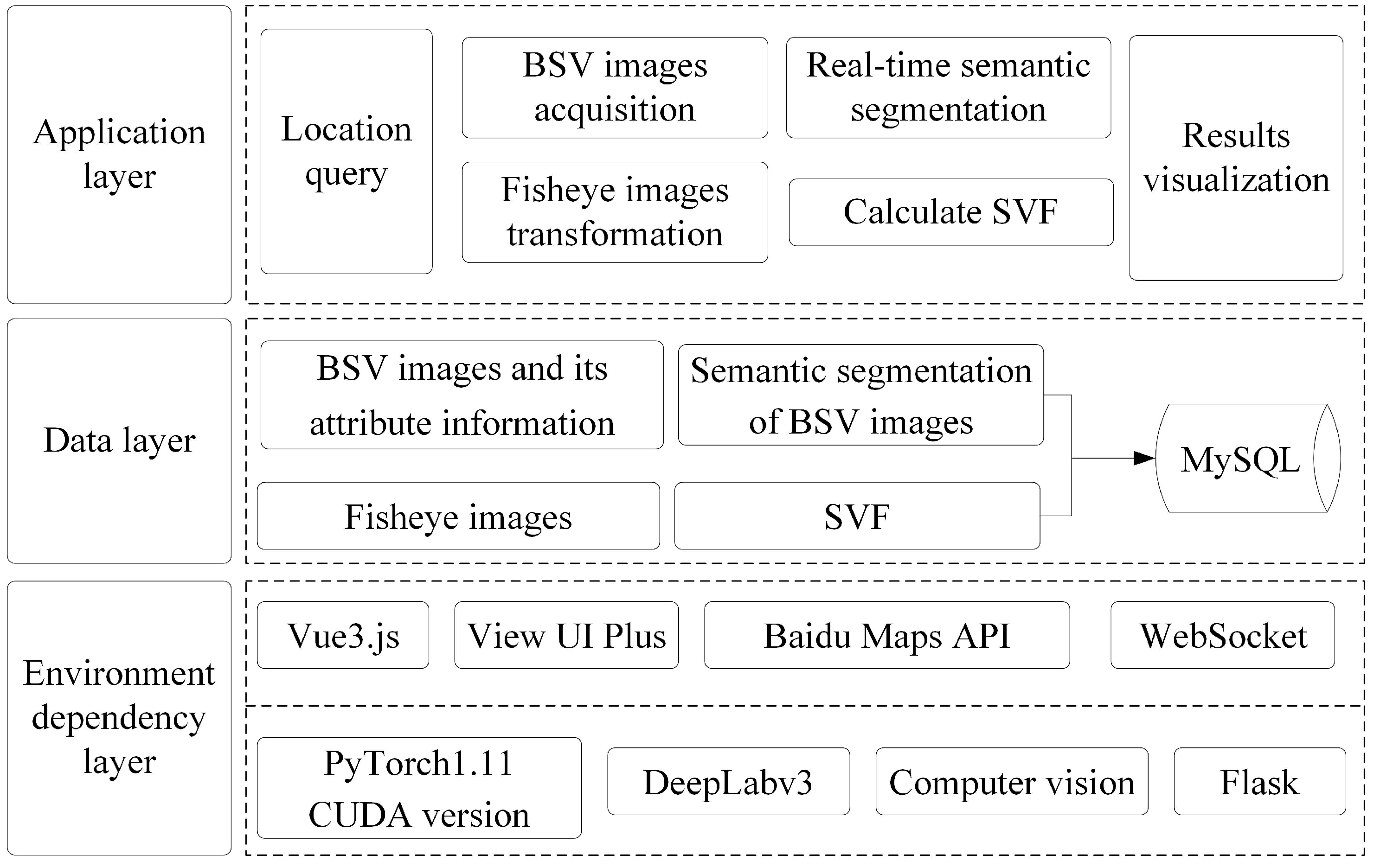

2.2. System Design and Implementation

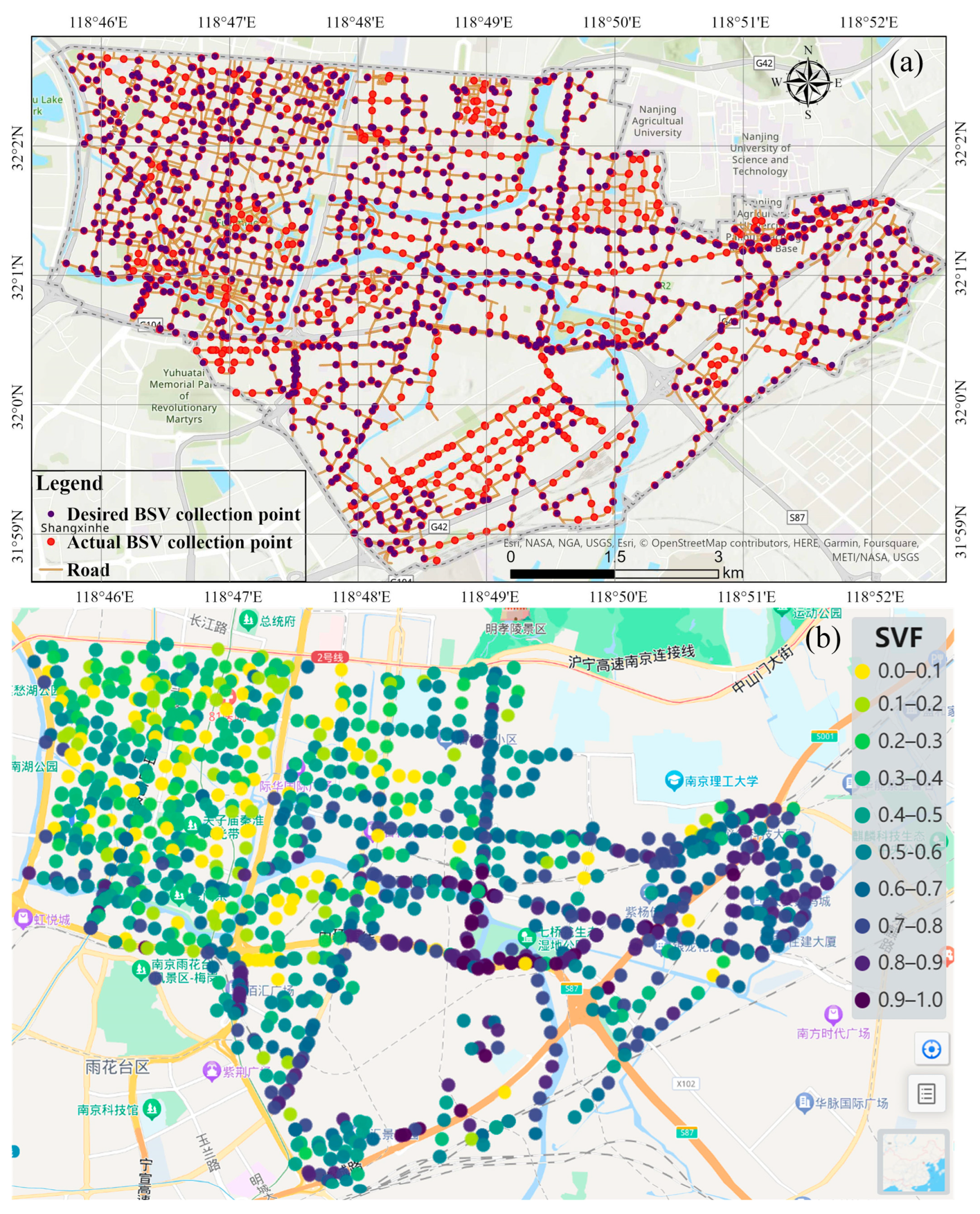

2.3. Study Area and Experimental Data

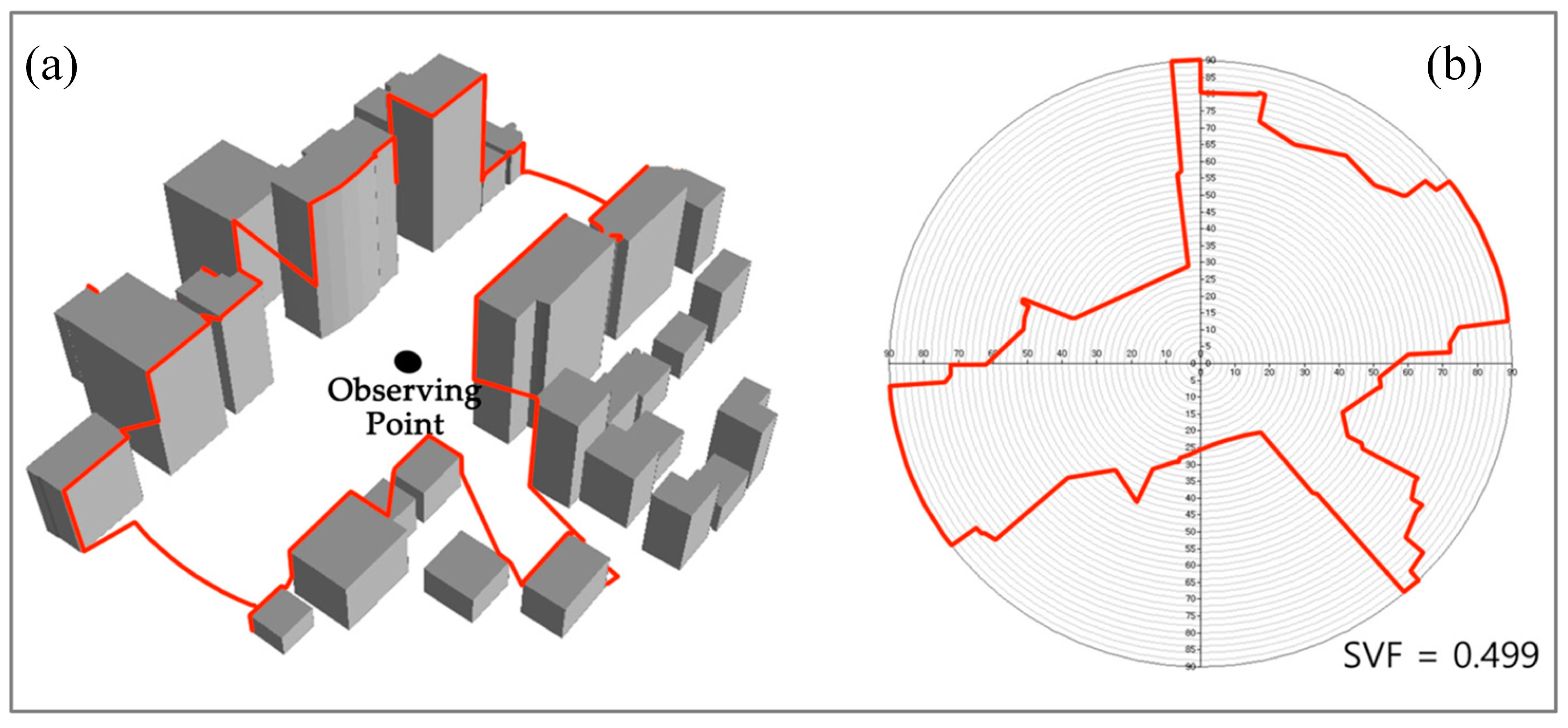

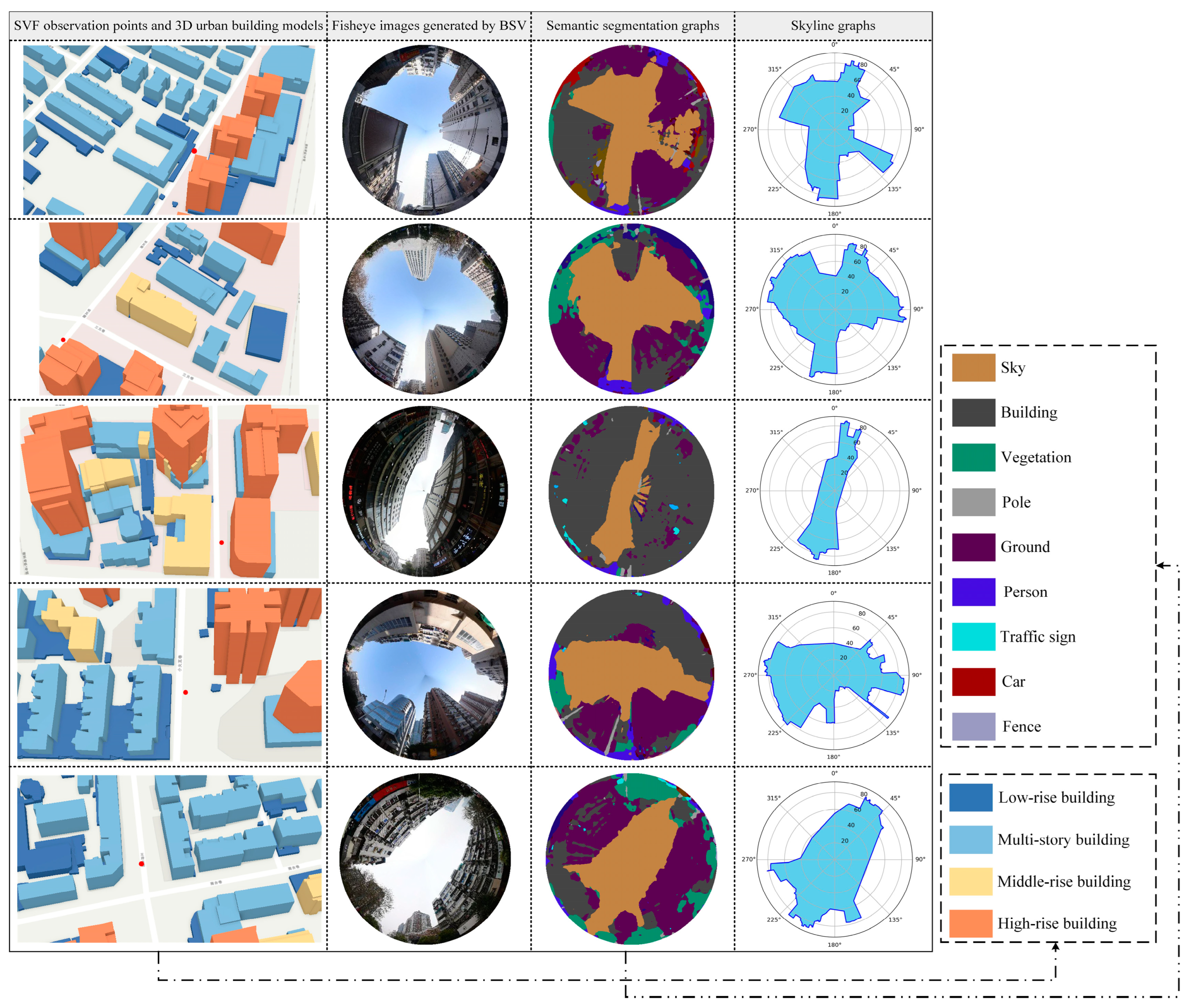

2.4. Estimating SVF with a Simulation Method for 3D Urban Building Models

3. Results

3.1. System Setting

- Switch cities: Clicking the city list button in the top left corner switches cities; the map then loads the selected city's location.

- Address search box: Typing an address or place name into the search box triggers an automatic search. Clicking a result pinpoints the location on the map.

- Panorama control: Clicking the panorama control button and placing it on the map reveals the area covered by panoramas as blue routes.

- Location control: Clicking the positioning control button retrieves the current position. The browser location interface is tried first; if it fails, the user's IP address is used instead, and a city-level result is returned.

- Map toggle: Switches the map type among the common street view, the satellite view, and the hybrid view of satellite imagery and road networks.

- Distance measurement: Measures distances between objects on the 2D map.

- Coordinate position: WGS84 or BD09 coordinates are entered into the dialog box. WGS84 input is converted to BD09 before positioning; BD09 input is used for positioning directly.

- Sample collection: A single point is sampled by left-clicking on the map, while multiple points are loaded from a CSV file. SVF values are automatically calculated and stored in MySQL.

- SVF distribution: Draws SVF values on the map as colored markers.

- Map legend: Generates the symbols and colors that represent SVF values.

- Export: Exports the results for the selected zone to a designated folder and to Microsoft Excel.
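
The coordinate position step above can be illustrated with a minimal sketch. The text does not say how the system converts WGS84 to BD09, so the code below is an assumption: it uses the widely circulated, unofficial two-step approximation (WGS84 to GCJ-02, then GCJ-02 to BD09), which is only meaningful inside mainland China and is accurate to roughly a few meters. The function names (`wgs84_to_gcj02`, `gcj02_to_bd09`, `to_bd09`) are hypothetical and are not taken from BMapSVF.

```python
import math

# Constants of the community (unofficial) GCJ-02 approximation; assumed, not from the paper.
_A = 6378245.0                   # semi-major axis of the Krasovsky 1940 ellipsoid (m)
_EE = 0.00669342162296594323     # squared eccentricity of that ellipsoid
_X_PI = math.pi * 3000.0 / 180.0


def _d_lat(x, y):
    ret = -100.0 + 2.0 * x + 3.0 * y + 0.2 * y * y + 0.1 * x * y + 0.2 * math.sqrt(abs(x))
    ret += (20.0 * math.sin(6.0 * x * math.pi) + 20.0 * math.sin(2.0 * x * math.pi)) * 2.0 / 3.0
    ret += (20.0 * math.sin(y * math.pi) + 40.0 * math.sin(y / 3.0 * math.pi)) * 2.0 / 3.0
    ret += (160.0 * math.sin(y / 12.0 * math.pi) + 320.0 * math.sin(y * math.pi / 30.0)) * 2.0 / 3.0
    return ret


def _d_lng(x, y):
    ret = 300.0 + x + 2.0 * y + 0.1 * x * x + 0.1 * x * y + 0.1 * math.sqrt(abs(x))
    ret += (20.0 * math.sin(6.0 * x * math.pi) + 20.0 * math.sin(2.0 * x * math.pi)) * 2.0 / 3.0
    ret += (20.0 * math.sin(x * math.pi) + 40.0 * math.sin(x / 3.0 * math.pi)) * 2.0 / 3.0
    ret += (150.0 * math.sin(x / 12.0 * math.pi) + 300.0 * math.sin(x / 30.0 * math.pi)) * 2.0 / 3.0
    return ret


def wgs84_to_gcj02(lng, lat):
    """Approximate WGS84 -> GCJ-02 shift (valid only inside mainland China)."""
    d_lat = _d_lat(lng - 105.0, lat - 35.0)
    d_lng = _d_lng(lng - 105.0, lat - 35.0)
    rad_lat = math.radians(lat)
    magic = 1.0 - _EE * math.sin(rad_lat) ** 2
    sqrt_magic = math.sqrt(magic)
    d_lat = (d_lat * 180.0) / ((_A * (1.0 - _EE)) / (magic * sqrt_magic) * math.pi)
    d_lng = (d_lng * 180.0) / (_A / sqrt_magic * math.cos(rad_lat) * math.pi)
    return lng + d_lng, lat + d_lat


def gcj02_to_bd09(lng, lat):
    """Approximate GCJ-02 -> BD09 shift."""
    z = math.hypot(lng, lat) + 0.00002 * math.sin(lat * _X_PI)
    theta = math.atan2(lat, lng) + 0.000003 * math.cos(lng * _X_PI)
    return z * math.cos(theta) + 0.0065, z * math.sin(theta) + 0.006


def to_bd09(lng, lat, crs):
    """Mirror the dialog-box rule: convert WGS84 input, pass BD09 input through."""
    if crs == "BD09":
        return lng, lat
    if crs == "WGS84":
        return gcj02_to_bd09(*wgs84_to_gcj02(lng, lat))
    raise ValueError(f"unsupported coordinate system: {crs}")
```

For a point in central Nanjing, `to_bd09(118.78, 32.04, "WGS84")` shifts the longitude by roughly 0.01° and the latitude by a few thousandths of a degree. A production system would more likely rely on the map provider's own conversion service than on this approximation.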

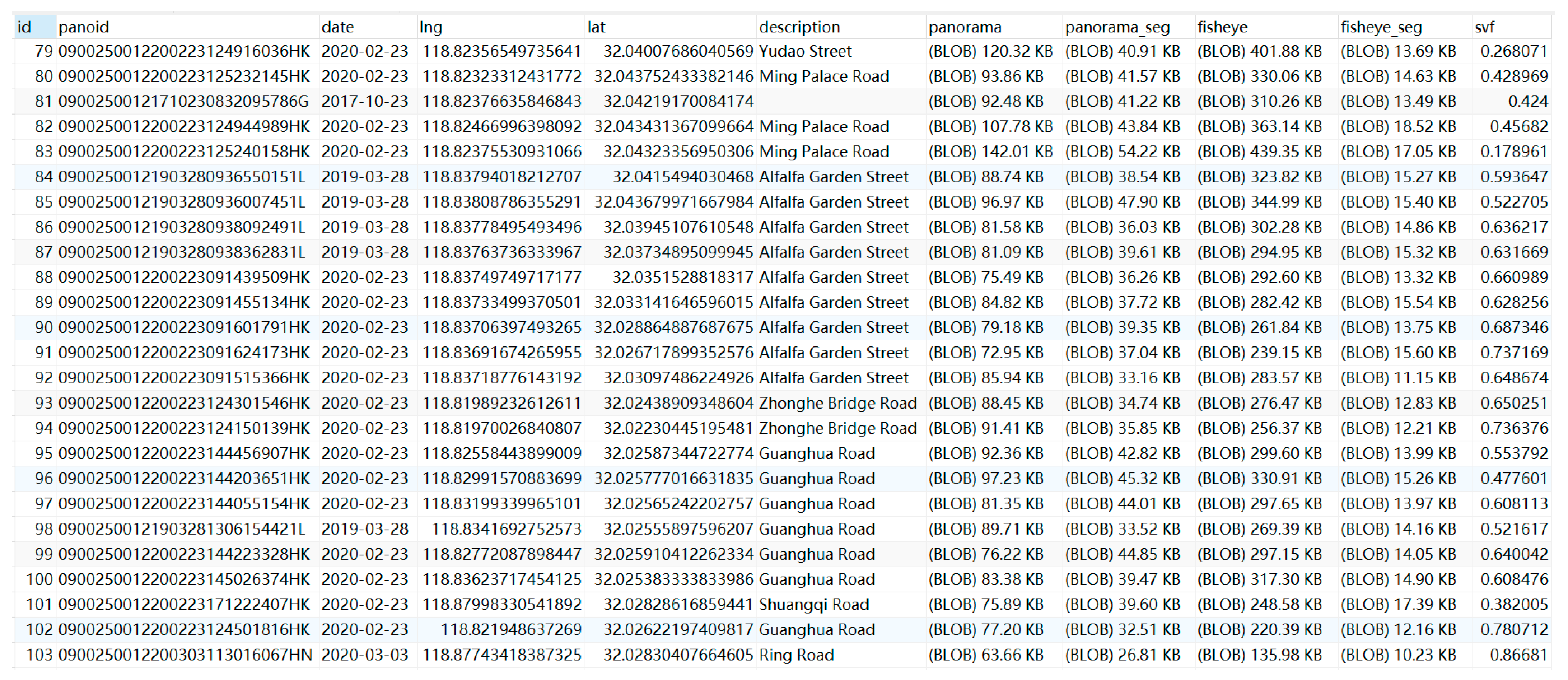

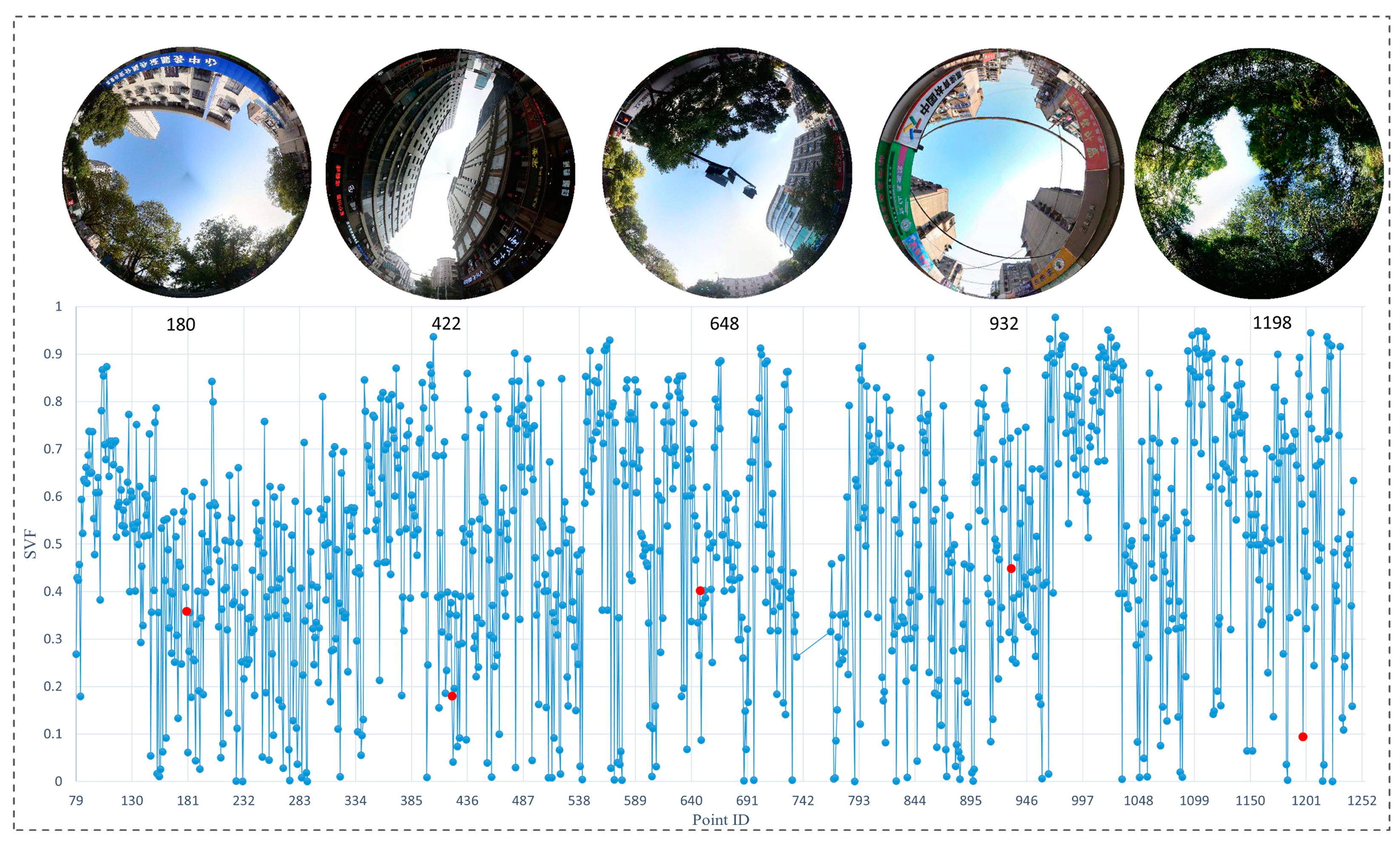

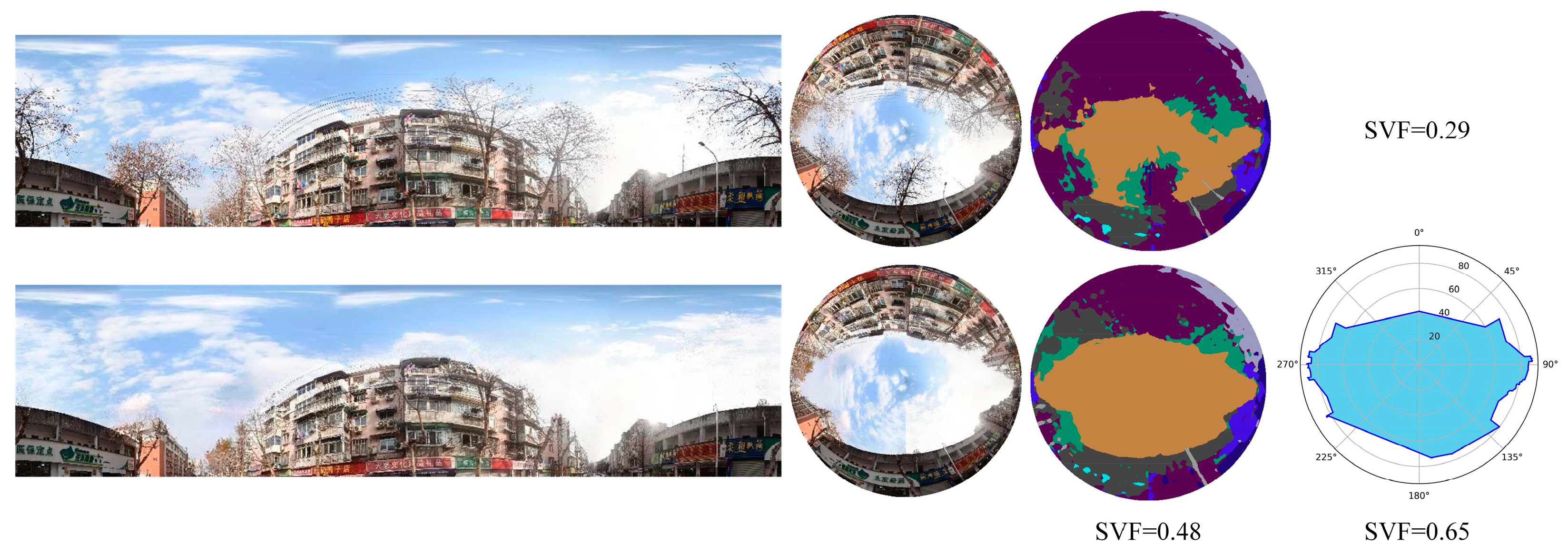

3.2. Case Analysis

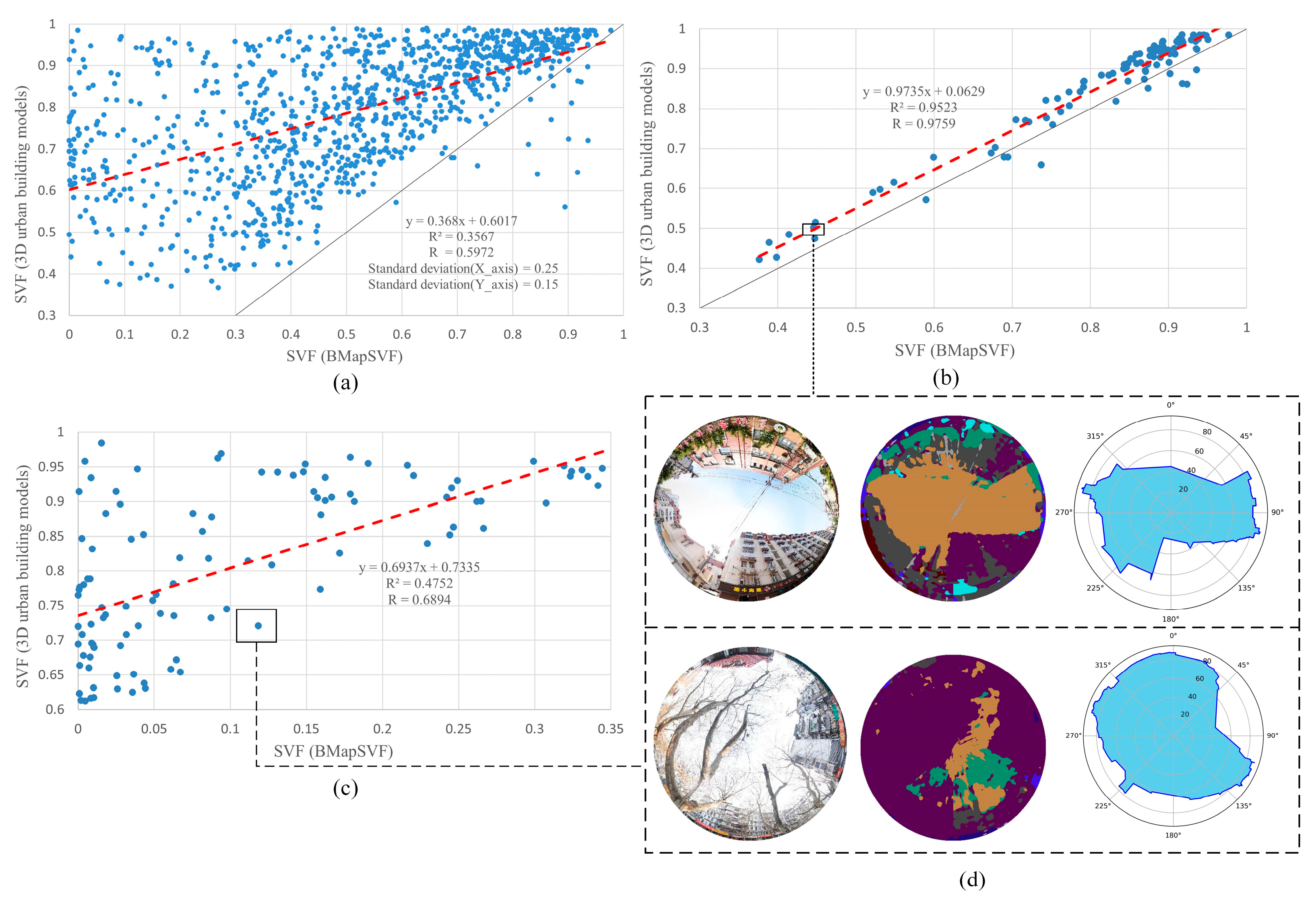

3.3. Comparison with SVF Estimation from 3D Urban Building Models

3.4. The Uncertainty of the SVF

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Classification | Height (m) | Count | Ratio |
|---|---|---|---|
| Low-rise building | 3–9 | 27,130 | 56.31% |
| Multi-story building | 9–21 | 13,926 | 28.90% |
| Middle-rise building | 21–30 | 3997 | 8.30% |
| High-rise building | 30–100 | 3055 | 6.34% |
| Ultra-high-rise building | >100 | 74 | 0.15% |
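
The height classes in the table above can be expressed as a small lookup, sketched below. The table does not say which class a height on a shared boundary (9, 21, or 30 m) belongs to; the sketch assumes the higher class, and it treats heights below 3 m as unclassified. The function name `classify_building` is hypothetical. The second part recomputes the Ratio column from the Count column as a consistency check.

```python
def classify_building(height_m):
    """Return the height class for a building, or None below 3 m.

    Assumption: shared boundaries (9, 21, 30 m) fall into the higher class;
    exactly 100 m stays in the high-rise class because the top class is ">100".
    """
    if height_m < 3:
        return None
    if height_m < 9:
        return "Low-rise building"
    if height_m < 21:
        return "Multi-story building"
    if height_m < 30:
        return "Middle-rise building"
    if height_m <= 100:
        return "High-rise building"
    return "Ultra-high-rise building"


# Counts copied from the table; ratios are derived, not copied.
counts = {
    "Low-rise building": 27130,
    "Multi-story building": 13926,
    "Middle-rise building": 3997,
    "High-rise building": 3055,
    "Ultra-high-rise building": 74,
}
total = sum(counts.values())
ratios = {name: round(100.0 * n / total, 2) for name, n in counts.items()}
```

The derived percentages agree with the Ratio column to two decimal places, which suggests that the ratios are shares of the building count and not of floor area.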

| Parameter | Description |
|---|---|
| ak | API key |
| width | panorama width |
| height | panorama height |
| location | panorama location coordinates (longitude, latitude) |
| fov | horizontal field of view, within [10°, 360°] |
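
As an illustration of how the parameters in the table above combine into a request, the following sketch assembles a query URL without sending it. The endpoint `https://api.map.baidu.com/panorama/v2` is an assumption based on Baidu's static panorama service and should be checked against the current Baidu documentation; the function name `build_panorama_url` and the default image size are hypothetical.

```python
from urllib.parse import urlencode

# Assumed endpoint of the Baidu static panorama service; not stated in the text.
BSV_ENDPOINT = "https://api.map.baidu.com/panorama/v2"


def build_panorama_url(ak, lng, lat, width=1024, height=512, fov=360):
    """Build a panorama request URL from the five parameters in the table."""
    if not 10 <= fov <= 360:
        raise ValueError("fov must lie within [10, 360] degrees")
    params = {
        "ak": ak,
        "width": width,
        "height": height,
        "location": f"{lng},{lat}",  # longitude first, then latitude
        "fov": fov,
    }
    # Keep the comma in "location" unescaped so the pair stays readable.
    return f"{BSV_ENDPOINT}?{urlencode(params, safe=',')}"
```

Calling `build_panorama_url("YOUR_AK", 118.78, 32.04)` yields a URL whose query string carries `location=118.78,32.04` and `fov=360`; an out-of-range `fov` is rejected before any request is made.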

| Field | Field Type | Not Null | Description |
|---|---|---|---|
| id | int | yes | key |
| panoid | varchar | yes | panorama ID |
| date | date | yes | acquisition date of the BSV panorama |
| lng | double | yes | BD09 longitude |
| lat | double | yes | BD09 latitude |
| description | varchar | no | road name of the panorama |
| panorama | longblob | yes | BSV panorama in BLOB format |
| panorama_seg | longblob | yes | semantic segmentation image of the panorama |
| fisheye | longblob | yes | fisheye image of the panorama |
| fisheye_seg | longblob | yes | fisheye image of panorama_seg |
| svf | float | yes | SVF value calculated by BMapSVF |
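
The record layout in the table above can be tried out with a short sketch. The system stores samples in MySQL; here SQLite (from the Python standard library) is swapped in so that the example runs without a database server, which means the column types are SQLite equivalents and not the MySQL types listed in the table. The table name `svf_sample`, the helper names, and the reading of `id` as an auto-incrementing primary key are assumptions.

```python
import sqlite3
from datetime import date

# SQLite stand-in for the MySQL table; names and key semantics are assumed.
DDL = """
CREATE TABLE svf_sample (
    id           INTEGER PRIMARY KEY,   -- 'key' in the table, assumed primary key
    panoid       TEXT    NOT NULL,
    date         TEXT    NOT NULL,      -- ISO date string, e.g. 2023-08-30
    lng          REAL    NOT NULL,      -- BD09 longitude
    lat          REAL    NOT NULL,      -- BD09 latitude
    description  TEXT,                  -- the only nullable field
    panorama     BLOB    NOT NULL,
    panorama_seg BLOB    NOT NULL,
    fisheye      BLOB    NOT NULL,
    fisheye_seg  BLOB    NOT NULL,
    svf          REAL    NOT NULL
)
"""


def open_store():
    """Create an in-memory database holding the sample table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(DDL)
    return conn


def save_sample(conn, panoid, acquired, lng, lat, images, svf, description=None):
    """Insert one sample; `images` is (panorama, panorama_seg, fisheye, fisheye_seg)."""
    cur = conn.execute(
        "INSERT INTO svf_sample (panoid, date, lng, lat, description, "
        "panorama, panorama_seg, fisheye, fisheye_seg, svf) "
        "VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)",
        (panoid, acquired.isoformat(), lng, lat, description, *images, svf),
    )
    conn.commit()
    return cur.lastrowid


conn = open_store()
placeholder = (b"pano", b"pano-seg", b"fish", b"fish-seg")  # dummy image bytes
row_id = save_sample(conn, "demo-panoid", date(2023, 8, 30), 118.79, 32.04, placeholder, 0.42)
stored_svf = conn.execute("SELECT svf FROM svf_sample WHERE id = ?", (row_id,)).fetchone()[0]
```

Keeping the four images beside the SVF value, as the table does, lets each stored result be traced back to the panorama and segmentation it was derived from, at the cost of large rows.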

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, H.; Lu, H.; Liu, S. Online Street View-Based Approach for Sky View Factor Estimation: A Case Study of Nanjing, China. Appl. Sci. 2024, 14, 2133. https://doi.org/10.3390/app14052133
