Quality Analysis of Unmanned Aerial Vehicle Images Using a Resolution Target

Abstract

1. Introduction

2. Theoretical Background

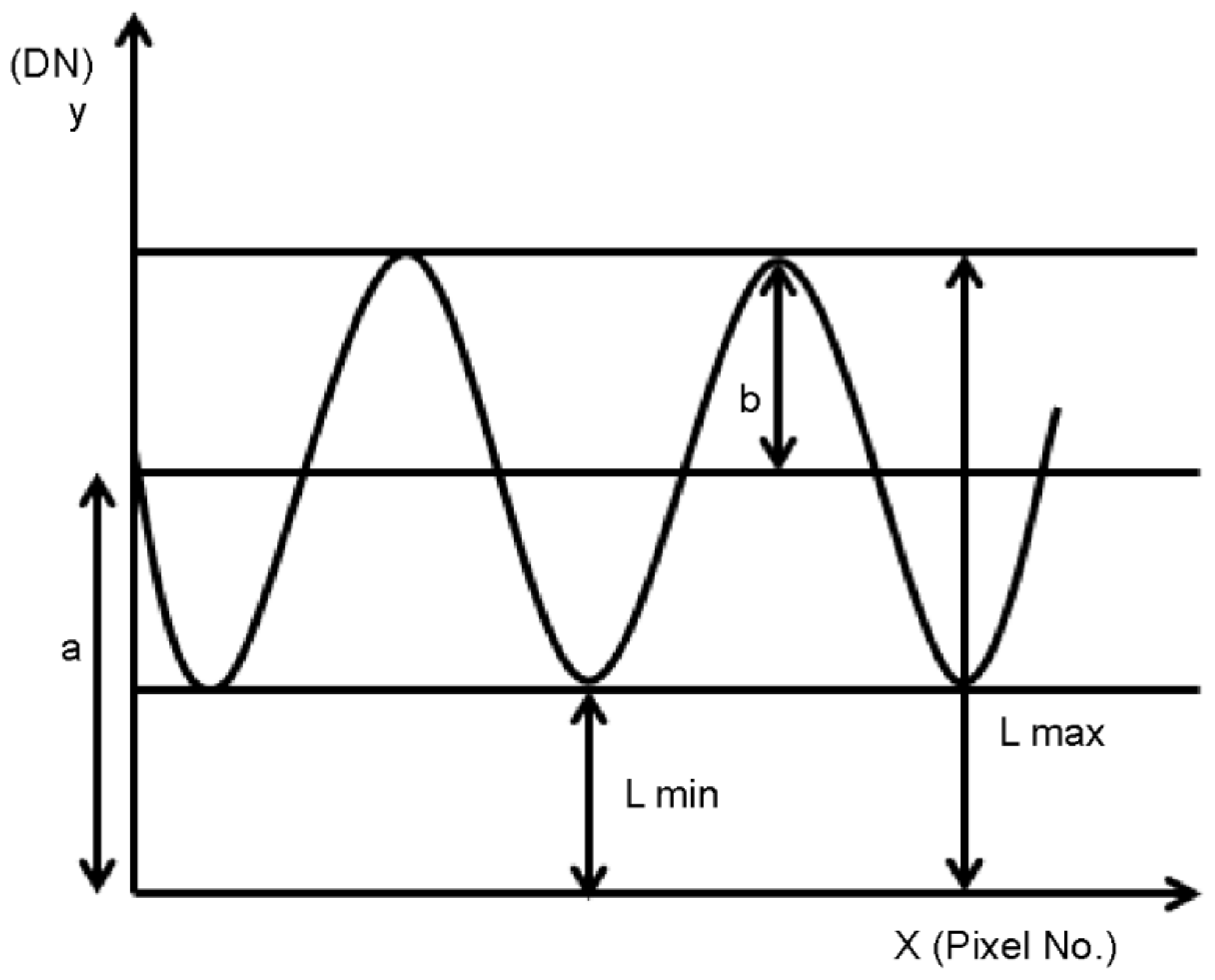

2.1. GSD Analysis Using the Bar Target

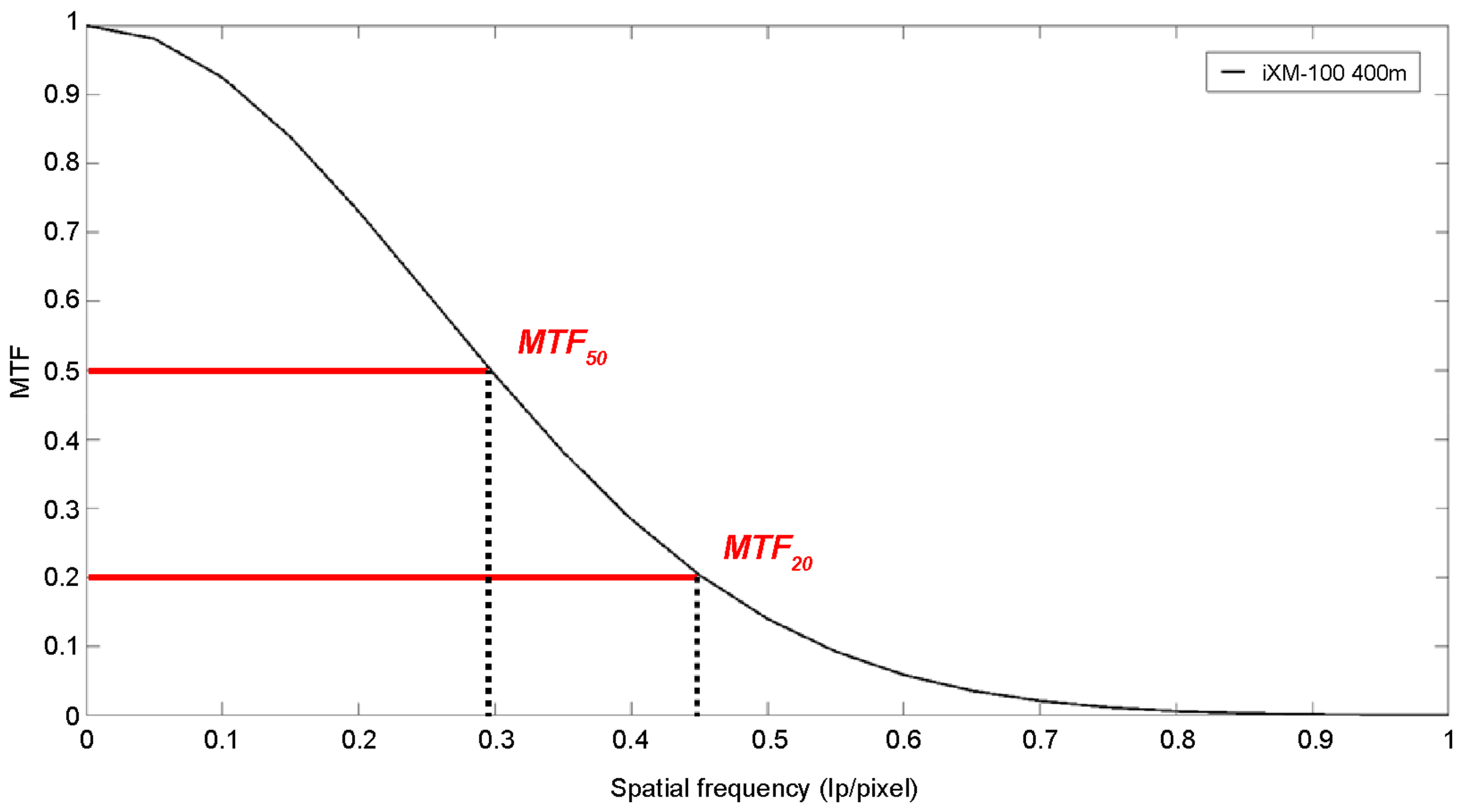

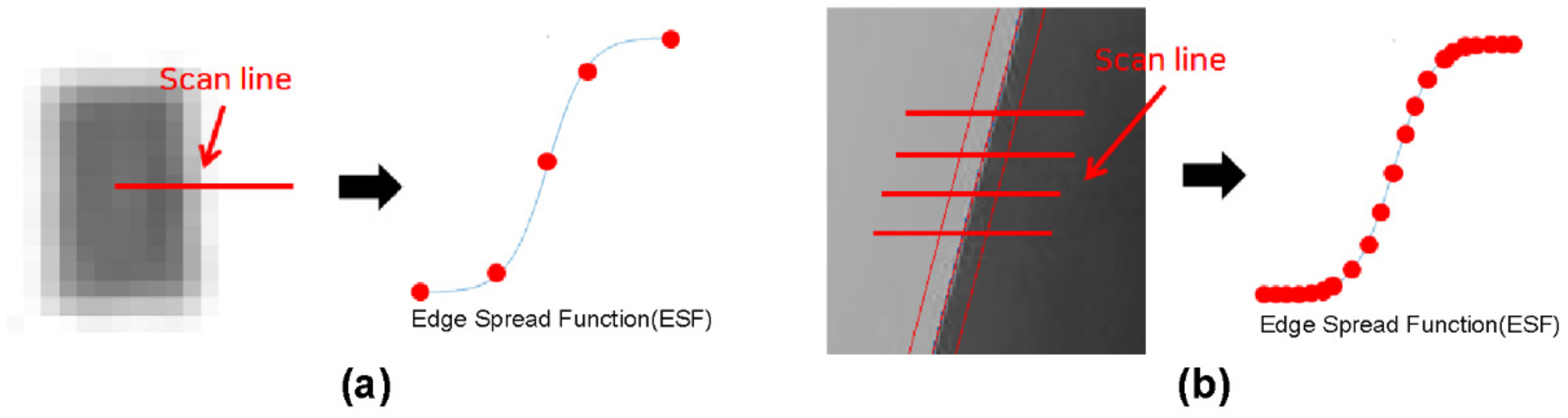

2.2. MTF Analysis

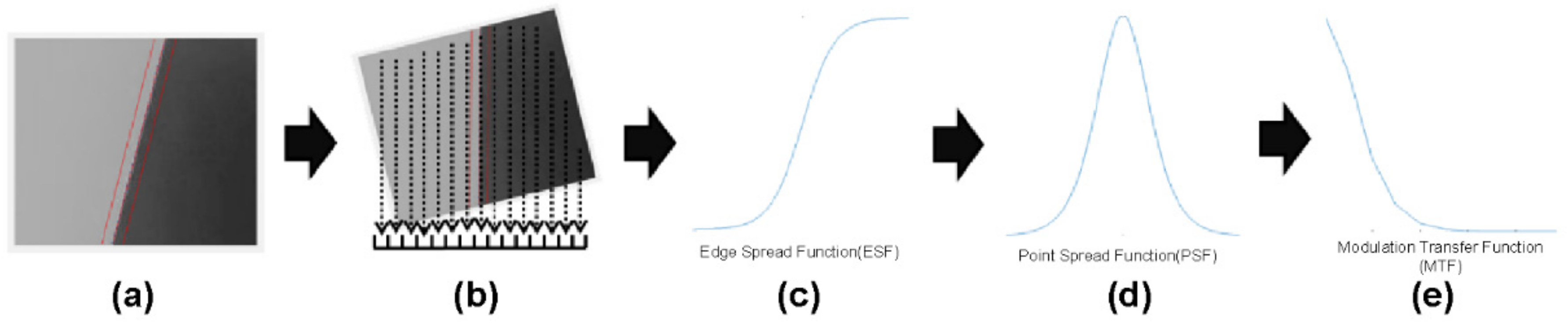
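For reference, the MTF is conventionally defined from the modulation (Michelson contrast) of a periodic pattern at spatial frequency f. For edge-based methods, it is obtained as the normalized Fourier transform of the line spread function (LSF), which is the derivative of the edge spread function (ESF). The textbook forms are given below. The notation in the figure above may differ, so treat these as the standard definitions rather than the authors' exact equations.

```latex
M = \frac{I_{\max} - I_{\min}}{I_{\max} + I_{\min}}, \qquad
\mathrm{MTF}(f) = \frac{M_{\mathrm{image}}(f)}{M_{\mathrm{object}}(f)}

\mathrm{LSF}(x) = \frac{d\,\mathrm{ESF}(x)}{dx}, \qquad
\mathrm{MTF}(f) = \frac{\left|\int \mathrm{LSF}(x)\, e^{-i 2\pi f x}\, dx\right|}{\int \mathrm{LSF}(x)\, dx}
```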

2.3. MTF Analysis Using the Slanted Edge Target

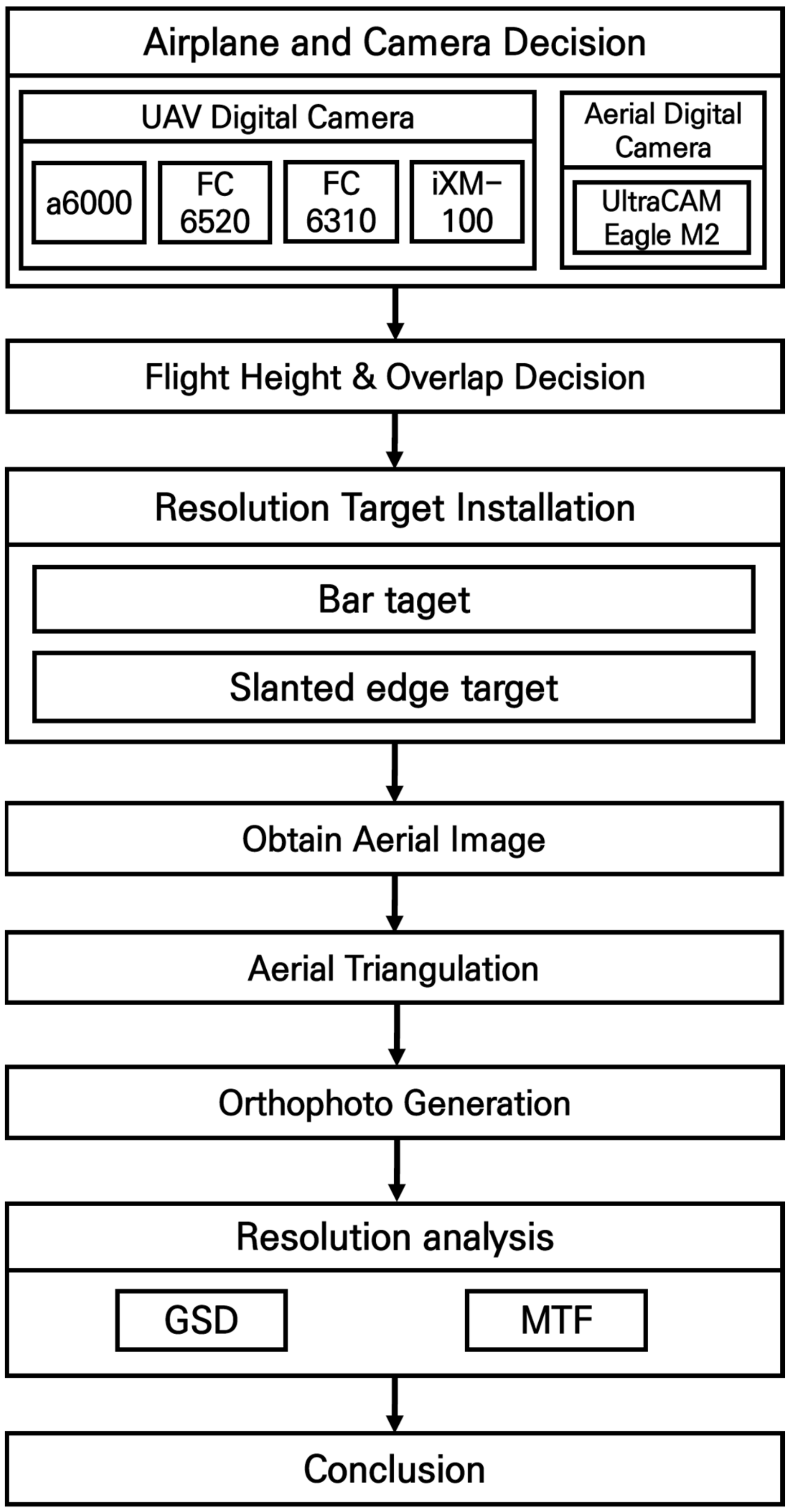

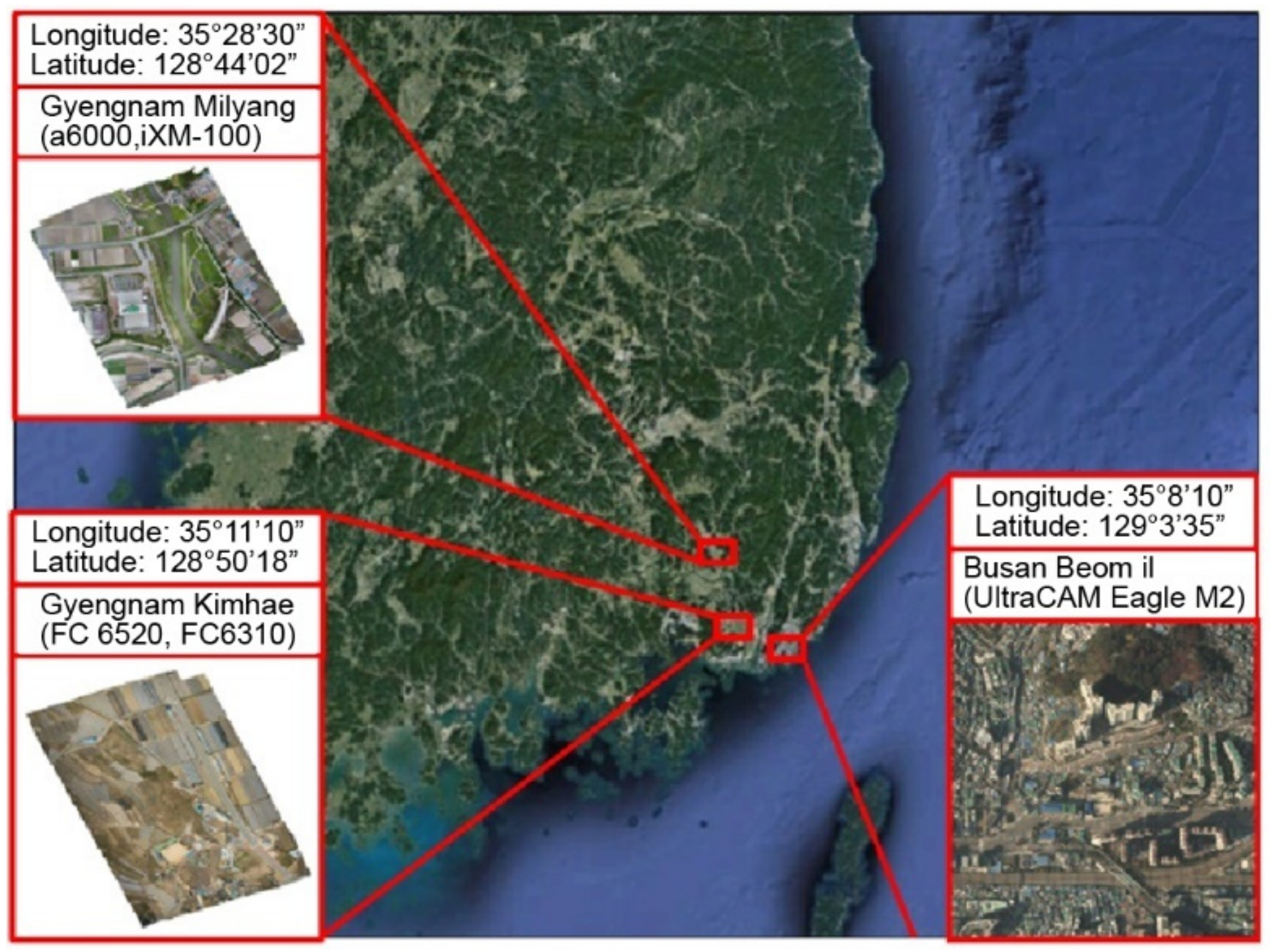
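The slanted-edge idea can be illustrated with a minimal NumPy sketch in the spirit of ISO 12233. It locates the edge in each row from the centroid of the horizontal derivative, fits a line through those centroids, and bins every pixel by its horizontal distance to the line at 1/4-pixel spacing to build an oversampled ESF. It then differentiates the ESF to get the LSF and takes the normalized magnitude of its Fourier transform. The synthetic Gaussian-blurred edge (5° tilt, σ = 0.8 px) and the helper names `synthetic_edge`, `estimate_mtf`, and `mtf50` are illustrative assumptions. This is not the authors' processing chain, and it omits refinements such as OECF linearization and edge-angle frequency correction.

```python
import math

import numpy as np


def synthetic_edge(size=64, angle_deg=5.0, sigma=0.8):
    """Hypothetical test image: a near-vertical dark-to-bright edge tilted
    `angle_deg` from vertical and blurred by a Gaussian PSF (sigma in pixels)."""
    theta = np.deg2rad(angle_deg)
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size].astype(float)
    x_edge = c + np.tan(theta) * (y - c)      # edge x-position in each row
    d_perp = (x - x_edge) * np.cos(theta)     # signed distance normal to the edge
    erf = np.vectorize(math.erf)
    return 0.5 * (1.0 + erf(d_perp / (sigma * math.sqrt(2.0))))


def estimate_mtf(img, oversample=4):
    """Simplified slanted-edge MTF; frequencies are in cycles/pixel along x."""
    rows, cols = img.shape
    # 1. Edge position in each row = centroid of the horizontal derivative
    deriv = np.abs(np.diff(img, axis=1))
    x_mid = np.arange(cols - 1) + 0.5
    centroids = (deriv * x_mid).sum(axis=1) / deriv.sum(axis=1)
    # 2. Least-squares line x = slope * y + intercept through the centroids
    row_idx = np.arange(rows)
    slope, intercept = np.polyfit(row_idx, centroids, 1)
    # 3. Horizontal distance of every pixel to the fitted edge, in 1/oversample-pixel bins
    xx, yy = np.meshgrid(np.arange(cols), row_idx)
    bins = np.round((xx - (slope * yy + intercept)) * oversample).astype(int).ravel()
    bins -= bins.min()
    counts = np.bincount(bins)
    sums = np.bincount(bins, weights=img.ravel())
    # 4. Oversampled ESF; any empty bins are filled by linear interpolation
    idx = np.arange(counts.size)
    filled = counts > 0
    esf = np.interp(idx, idx[filled], sums[filled] / counts[filled])
    # 5. LSF = derivative of the ESF, tapered with a Hamming window
    lsf = np.diff(esf) * np.hamming(esf.size - 1)
    # 6. MTF = magnitude of the LSF's Fourier transform, normalized to 1 at f = 0
    mtf = np.abs(np.fft.rfft(lsf))
    mtf /= mtf[0]
    freqs = np.fft.rfftfreq(lsf.size, d=1.0 / oversample)
    return freqs, mtf


def mtf50(freqs, mtf):
    """Frequency at which the MTF first drops below 0.5 (linear interpolation)."""
    i = int(np.nonzero(mtf < 0.5)[0][0])
    f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
    return f0 + (0.5 - m0) * (f1 - f0) / (m1 - m0)


img = synthetic_edge()
freqs, mtf = estimate_mtf(img)
print(f"MTF50 ~ {mtf50(freqs, mtf):.3f} cycles/pixel")
```

For a Gaussian blur, the estimate can be checked against the analytic value sqrt(ln 2 / 2) / (π σₕ), where σₕ = σ / cos θ is the blur measured along the image rows. For the synthetic edge above, this is about 0.23 cycles/pixel.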

3. Materials and Methods

3.1. Specifications of Resolution Targets

3.1.1. Bar Target

3.1.2. Slanted Edge Target

3.2. Resolution Target Installation

3.3. Image Acquisition and Processing

4. Results

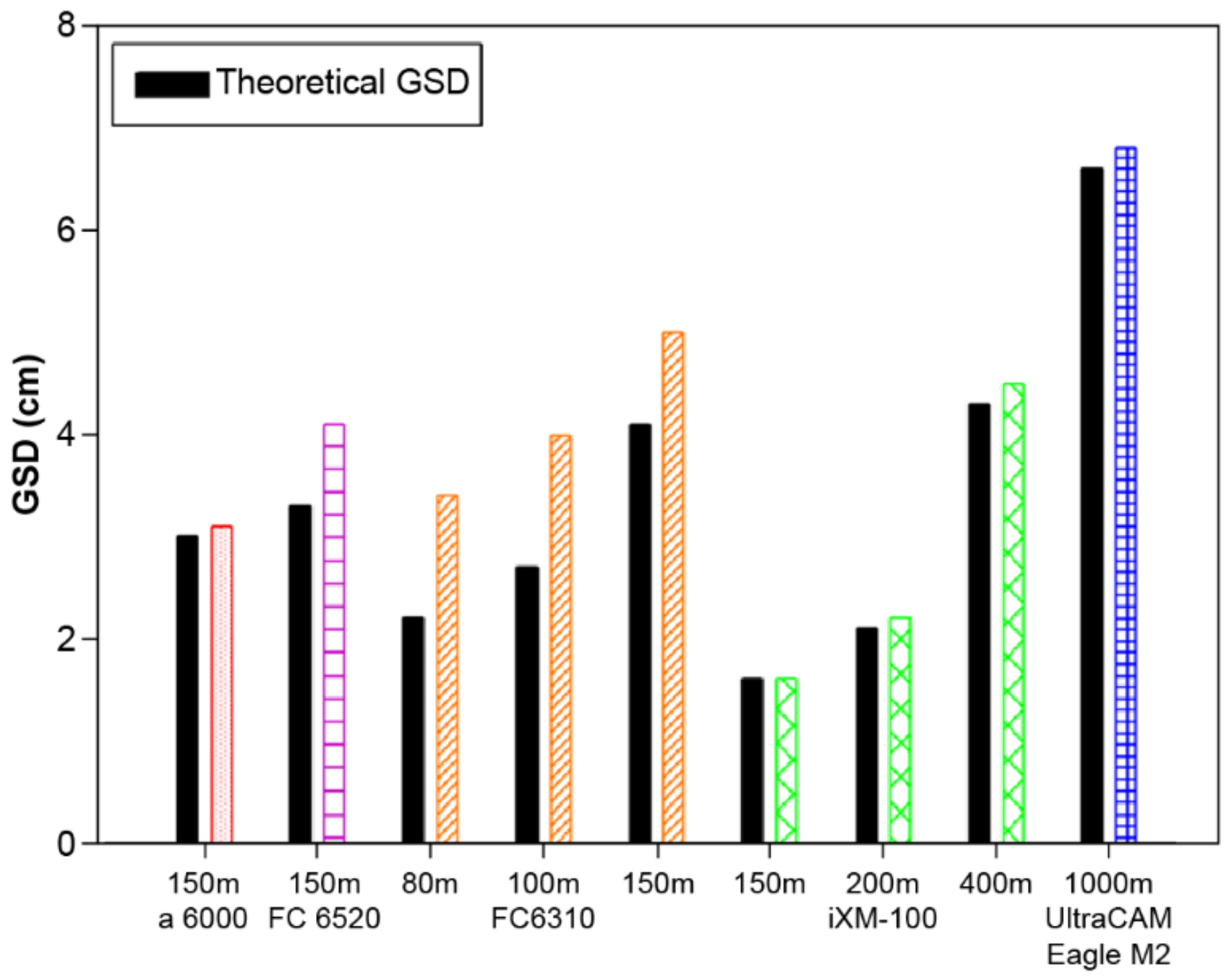

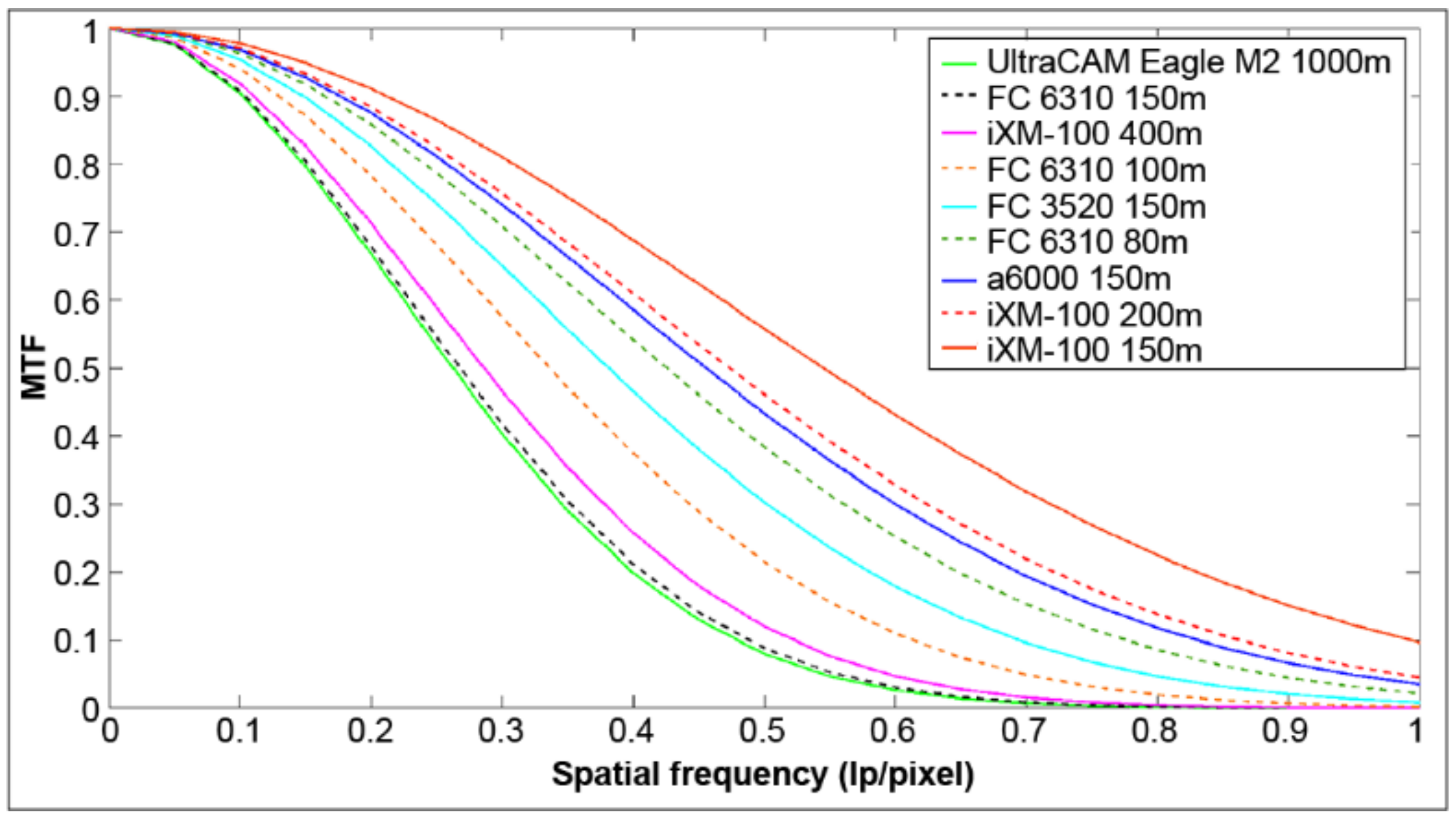

- To analyze the effect of camera performance on the quality of the UAV images and derived products, we kept the flight height nearly identical at about 150 m, with a forward overlap of P = 60% and a side overlap of Q = 70–75%. Results are labeled by camera model so that camera performance can be compared directly.

- To analyze the effect of flight height on the quality of the UAV images and derived products, we captured images with the FC 6310 at 80, 100, and 150 m and with the iXM-100 at 150, 200, and 400 m.

- The GSD and MTF of images from the UltraCAM Eagle M2 sensor on a manned aircraft were analyzed and compared with those of the UAV images from the four sensor types.
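The overlap settings above determine exposure and flight-line spacing once the image footprint is known. Below is a minimal sketch that assumes the usual relation GSD = pixel size × flight height / focal length. It also assumes that P and Q are forward and side overlap, and that the short image side (3648 px for the FC 6310) lies along the flight line. `gsd_m` and `photo_spacing` are hypothetical helpers, not part of the authors' workflow.

```python
def gsd_m(pixel_um, focal_mm, height_m):
    """Ground sample distance in metres: pixel size x flight height / focal length."""
    return (pixel_um * 1e-6) * height_m / (focal_mm * 1e-3)


def photo_spacing(pixel_um, focal_mm, height_m, px_along, px_across, p, q):
    """Return (spacing between exposures, spacing between flight lines) in metres.

    p and q are forward and side overlap as fractions (e.g. 0.60 and 0.70).
    """
    gsd = gsd_m(pixel_um, focal_mm, height_m)
    footprint_along = px_along * gsd      # ground length of the image side along track
    footprint_across = px_across * gsd    # ground length of the image side across track
    return footprint_along * (1.0 - p), footprint_across * (1.0 - q)


# FC 6310 at 150 m with P = 60 % and Q = 70 %; assumes the 3648-px side is along track
base, line_gap = photo_spacing(2.41, 8.8, 150.0, 3648, 5472, 0.60, 0.70)
print(f"exposure spacing {base:.1f} m, flight-line spacing {line_gap:.1f} m")
```

Under these assumptions, the FC 6310 at 150 m needs roughly 60 m between exposures and 67 m between flight lines. Swapping the along- and across-track sides changes both numbers, so the camera orientation should be confirmed before using them for flight planning.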

4.1. GSD Analysis

4.2. MTF Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Masita, K.; Hasan, A.; Shongwe, T. Defects Detection on 110 MW AC Wind Farm’s Turbine Generator Blades Using Drone-Based Laser and RGB Images with Res-CNN3 Detector. Appl. Sci. 2023, 13, 13046.

- Liu, Y.; Zhou, T.; Xu, J.; Hong, Y.; Pu, Q.; Wen, X. Rotating Target Detection Method of Concrete Bridge Crack Based on YOLO v5. Appl. Sci. 2023, 13, 11118.

- Lu, L.; Dai, F. Accurate road user localization in aerial images captured by unmanned aerial vehicles. Autom. Constr. 2024, 158, 105257.

- Ke, R.; Li, Z.; Tang, J.; Pan, Z.; Wang, Y. Real-Time Traffic Flow Parameter Estimation from UAV Video Based on Ensemble Classifier and Optical Flow. In IEEE Transactions on Intelligent Transportation Systems; IEEE: Piscataway, NJ, USA, 2018; pp. 54–64.

- Zhao, Y.; Zhou, L.; Wang, X.; Wang, F.; Shi, G. Highway Crack Detection and Classification Using UAV Remote Sensing Images Based on CrackNet and CrackClassification. Appl. Sci. 2023, 13, 7269.

- Ercolini, L.; Grossi, N.; Silvestri, N. A Simple Method to Estimate Weed Control Threshold by Using RGB Images from Drones. Appl. Sci. 2022, 12, 11935.

- Logan, R.D.; Torrey, M.A.; Feijó-Lima, R.; Colman, B.P.; Valett, H.M.; Shaw, J.A. UAV-Based Hyperspectral Imaging for River Algae Pigment Estimation. Remote Sens. 2023, 15, 3148.

- Rajeena, F.P.P.; Ismail, W.N.; Ali, M.A.S. A Metaheuristic Harris Hawks Optimization Algorithm for Weed Detection Using Drone Images. Appl. Sci. 2023, 13, 7083.

- Diruit, W.; Le Bris, A.; Bajjouk, T.; Richier, S.; Helias, M.; Burel, T.; Lennon, M.; Guyot, A.; Ar Gall, E. Seaweed Habitats on the Shore: Characterization through Hyperspectral UAV Imagery and Field Sampling. Remote Sens. 2022, 14, 3124.

- Fabris, M.; Balin, M.; Monego, M. High-Resolution Real-Time Coastline Detection Using GNSS RTK, Optical, and Thermal SfM Photogrammetric Data in the Po River Delta, Italy. Remote Sens. 2023, 15, 5354.

- Domingo, D.; Gómez, C.; Mauro, F.; Houdas, H.; Sangüesa-Barreda, G.; Rodríguez-Puerta, F. Canopy Structural Changes in Black Pine Trees Affected by Pine Processionary Moth Using Drone-Derived Data. Drones 2024, 8, 75.

- Sung, S.M. A Study on Spatial Resolution Analysis Methods of UAV Images. Ph.D. Dissertation, Dong-A University, Busan, Korea, 20 August 2019.

- Baer, L.R. Circular-edge spatial frequency response test. In Proceedings Society of Photo-Optical Instrumentation Engineers, Image Quality and System Performance, San Jose, CA, USA, 18 December 2003; Yoichi Miyake, D., Rene, R., Eds.; SPIE: Bellingham, WA, USA, 2003.

- Wang, T.; Li, S.; Li, X. An automatic MTF measurement method for remote sensing cameras. In Proceedings of the 2nd IEEE International Conference on Computer Science and Information Technology, Beijing, China, 8–11 August 2009; pp. 245–248.

- Sieberth, T.; Wackrow, R.; Chandler, J.H. Automatic detection of blurred images in UAV image sets. ISPRS J. Photogramm. Remote Sens. 2016, 122, 1–16.

- Orych, A. Review of methods for determining the spatial resolution of UAV sensors. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2015, XL-1/W4, 391–395.

- Lee, T.Y. Spatial Resolution Analysis of Aerial Digital Camera. Ph.D. Dissertation, Dong-A University, Busan, Korea, 2012; 50p. (In Korean with English abstract).

- Neumann, A. Verfahren zur Auflösungsmessung Digitaler Kameras. Master’s Thesis, University of Applied Sciences, Cologne, Germany, 2003; 70p.

- Pedrotti, F.L.; Pedrotti, L.M. Introduction to Optics, 3rd ed.; Cambridge University Press: Cambridge, UK, 2017.

- Crespi, M.; De Vendictis, L. A Procedure for High Resolution Satellite Imagery Quality Assessment. Sensors 2009, 9, 3289–3313.

- Pinkus, A.; Task, H. Measuring Observers’ Visual Acuity Through Night Vision Goggles; Defense Technical Information Center: Fort Belvoir, VA, USA, 1998.

- ISO 12233:2000(E); Photography–Electronic Still-Picture Cameras—Resolution Measurements. ISO: Geneva, Switzerland, 2000.

- Geo-Matching. Available online: https://geo-matching.com/uas-for-mapping-and-3d-modelling/firefly6-pro (accessed on 20 January 2024).

- Inspire 2. Available online: https://www.dji.com/inspire-2 (accessed on 20 January 2024).

- Support for Phantom 4 Pro. Available online: https://www.dji.com/phantom-4-pro (accessed on 20 January 2024).

- MATRICE 600PRO. Available online: https://www.dji.com/matrice600-pro (accessed on 20 January 2024).

- EagleM2. Available online: https://www.vexcel-imaging.com/EagleM2 (accessed on 20 January 2024).

| Platform | FireFLY 6 PRO | Inspire 2 | Phantom 4 Pro | Matrice 600 | Manned Aircraft |
|---|---|---|---|---|---|
| Camera model | a6000 | FC 6520 | FC 6310 | iXM-100 | UltraCAM Eagle M2 |
| Focal length | 20 mm | 15 mm | 8.8 mm | 35 mm | 100 mm |
| Pixel size | 4 × 4 µm | 3.28 × 3.28 µm | 2.41 × 2.41 µm | 3.76 × 3.76 µm | 6 × 6 µm |
| Image size (pixels) | 6000 × 4000 (24 MP) | 5280 × 3956 (21 MP) | 5472 × 3648 (20 MP) | 11,664 × 8,750 (100 MP) | 17,310 × 11,310 (193 MP) |

| Camera Model | Flight Height | Overlap | Area | Number of Images | Wind Velocity | Flight Date |
|---|---|---|---|---|---|---|
| a6000 | 150 m | P = 60%, Q = 75% | 720 m² | 451 | 0.9 m/s | 19 April 2011 |
| FC 6520 | 150 m | P = 60%, Q = 70% | 422 m² | 371 | 1.9 m/s | 18 May 2022 |
| FC 6310 | 80 m | P = 60%, Q = 70% | 894 m² | 632 | 1.9 m/s | 18 May 2022 |
| FC 6310 | 100 m | P = 60%, Q = 70% | 894 m² | 556 | 1.9 m/s | 18 May 2022 |
| FC 6310 | 150 m | P = 60%, Q = 70% | 894 m² | 422 | 1.9 m/s | 18 May 2022 |
| iXM-100 | 150 m | P = 60%, Q = 70% | 462 m² | 231 | 1.3 m/s | 19 March 2028 |
| iXM-100 | 200 m | P = 60%, Q = 70% | 462 m² | 115 | 1.3 m/s | 19 March 2028 |
| iXM-100 | 400 m | P = 60%, Q = 70% | 462 m² | 52 | 1.3 m/s | 19 March 2028 |

| Camera Model | Flight Height | Theoretical GSD | Measured GSD (bar target) |
|---|---|---|---|
| a6000 | 150 m | 3.0 cm | 3.1 cm |
| FC 6520 | 150 m | 3.3 cm | 4.1 cm |
| FC 6310 | 80 m | 2.2 cm | 3.4 cm |
| FC 6310 | 100 m | 2.7 cm | 4.0 cm |
| FC 6310 | 150 m | 4.1 cm | 5.0 cm |
| iXM-100 | 150 m | 1.6 cm | 1.6 cm |
| iXM-100 | 200 m | 2.1 cm | 2.2 cm |
| iXM-100 | 400 m | 4.3 cm | 4.5 cm |
| UltraCAM Eagle M2 | 1000 m | 6.6 cm | 6.8 cm |
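The theoretical GSD column can be recomputed with the standard relation GSD = pixel size × flight height / focal length, using the pixel sizes and focal lengths from the camera specification table. The short sketch below does this and reports the ratio of measured to theoretical GSD. The names `CAMERAS`, `GSD_TABLE`, `theoretical_gsd_cm`, and `compare` are illustrative and do not come from the paper.

```python
# Pixel size (µm) and focal length (mm) from the camera specification table
CAMERAS = {
    "a6000": (4.0, 20.0),
    "FC 6520": (3.28, 15.0),
    "FC 6310": (2.41, 8.8),
    "iXM-100": (3.76, 35.0),
    "UltraCAM Eagle M2": (6.0, 100.0),
}

# (camera, flight height in m, tabulated theoretical GSD in cm, measured GSD in cm)
GSD_TABLE = [
    ("a6000", 150, 3.0, 3.1),
    ("FC 6520", 150, 3.3, 4.1),
    ("FC 6310", 80, 2.2, 3.4),
    ("FC 6310", 100, 2.7, 4.0),
    ("FC 6310", 150, 4.1, 5.0),
    ("iXM-100", 150, 1.6, 1.6),
    ("iXM-100", 200, 2.1, 2.2),
    ("iXM-100", 400, 4.3, 4.5),
    ("UltraCAM Eagle M2", 1000, 6.6, 6.8),
]


def theoretical_gsd_cm(pixel_um, focal_mm, height_m):
    """GSD = pixel size x flight height / focal length, returned in cm."""
    # (pixel_um * 1e-6 m) * height_m / (focal_mm * 1e-3 m) gives metres; x100 gives cm
    return pixel_um * height_m / focal_mm * 0.1


def compare(table):
    """Return (camera, height, computed GSD, measured GSD, measured/computed) per row."""
    out = []
    for camera, height, _tabulated, measured in table:
        pixel_um, focal_mm = CAMERAS[camera]
        gsd = theoretical_gsd_cm(pixel_um, focal_mm, height)
        out.append((camera, height, gsd, measured, measured / gsd))
    return out


for camera, height, gsd, measured, ratio in compare(GSD_TABLE):
    print(f"{camera:>18} {height:>5} m  computed {gsd:4.2f} cm  "
          f"measured {measured:.1f} cm  ratio {ratio:.2f}")
```

With these inputs, the computed values round to the tabulated theoretical GSDs for the four UAV cameras. The measured/theoretical ratio is roughly 1.2 to 1.6 for the FC 6310 and about 1.0 to 1.05 for the iXM-100. For the UltraCAM Eagle M2 row, the same formula gives 6.0 cm at 1000 m rather than the listed 6.6 cm, so that entry presumably rests on parameters other than the rounded values shown in the specification table.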

| Camera Model | Flight Height | Slanted Edge Target | | | |
|---|---|---|---|---|---|
| a6000 | 150 m | | 0.401 | 0.456 | 0.694 |
| FC 6520 | 150 m | | 0.511 | 0.381 | 0.582 |
| FC 6310 | 80 m | | 0.443 | 0.426 | 0.648 |
| FC 6310 | 100 m | | 0.522 | 0.336 | 0.513 |
| FC 6310 | 150 m | | 0.694 | 0.268 | 0.408 |
| iXM-100 | 150 m | | 0.331 | 0.545 | 0.831 |
| iXM-100 | 200 m | | 0.395 | 0.474 | 0.722 |
| iXM-100 | 400 m | | 0.635 | 0.286 | 0.437 |
| UltraCAM Eagle M2 | 1000 m | | 0.715 | 0.263 | 0.399 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.-H.; Sung, S.-M. Quality Analysis of Unmanned Aerial Vehicle Images Using a Resolution Target. Appl. Sci. 2024, 14, 2154. https://doi.org/10.3390/app14052154
