Abstract

This paper provides an in-depth review of deep learning techniques that address the challenges of odometry and global ego-localization using frequency-modulated continuous-wave (FMCW) radar sensors. In particular, we focus on odometry prediction, which determines the ego-motion of a system from external sensor data, and on loop closure detection, which determines the ego-position, typically within an existing map. We first emphasize the significance of these tasks in the context of radar sensors and outline the motivations behind them. The subsequent sections examine the practical implementation of deep learning approaches designed to address these challenges. We primarily focus on spinning and automotive radar configurations within the domain of autonomous driving. Additionally, we introduce publicly available datasets that have been instrumental in addressing these challenges and analyze the importance and limitations of current methods for radar-based odometry and localization. Finally, this paper highlights the distinctions between the addressed tasks and other radar perception applications, and discusses how they differ from the challenges posed by alternative sensor modalities. The findings contribute to the ongoing discourse on advancing radar sensor capabilities through deep learning, particularly for enhancing odometry and ego-localization in autonomous driving applications.

1. Introduction

Simultaneous Localization and Mapping (SLAM) is a foundational technology in robotics and autonomous systems. Its primary function is to enable an autonomous system not only to construct a comprehensive map of its surroundings but also to accurately determine its own position within this map. Central to any SLAM algorithm are two pivotal concepts: odometry, which estimates the system’s ego-motion, and ego-localization, the ability to establish its position within the map using external sensor data.

Passive and active perception sensors, such as cameras, lidars, sonars, and radars, are commonly used in SLAM approaches [1]. These sensors derive their accuracy from direct observations of the environment, and maps can be constructed from their data to gain a spatial understanding of the surroundings. In an ideal world, measurements from active sensors, such as lidars, sonars, and radars, would be perfectly precise, and the odometry and ego-localization of a system could be determined solely from this information. However, due to various factors, the detections are not always consistent or accurate [2]. Passive positional sensors, such as a Global Navigation Satellite System (GNSS) receiver or an Inertial Navigation System (INS), can provide additional information about the position and dynamics of the system, but are unable to capture the spatial environment on their own. Additionally, GNSS sensors perform poorly in common application areas, such as indoor scenarios, tunnels, and between skyscrapers, due to poor signal reception [3]. In such scenarios, perception-based ego-localization is preferred.

Positional sensors such as GNSS and INS are often included in autonomous vehicles alongside a perception sensor, as discussed later.

Perception-based SLAM approaches can be categorized by the type of sensor they rely on, with the primary distinction being between active range-based and passive visual-based methods. Visual SLAM (VSLAM) methods employ onboard cameras to capture their surroundings. Despite considerable research on VSLAM techniques, they struggle in adverse weather conditions such as heavy rain or fog and in challenging lighting such as intense sunlight or darkness at night. They also have difficulty with accurate depth perception [4,5].

Range-based techniques rely on sensors such as lidar, sonar, and radar. Sonar is mainly used in underwater scenarios [6,7], whereas lidars and radars are predominantly employed in both indoor and outdoor applications, particularly for autonomous vehicles. While lidars offer denser detections, radars bring their own distinctive advantages: lidars tend to be more expensive than radars and are generally less resilient in adverse weather conditions. Although hybrid approaches that leverage both radars and lidars for SLAM exist, the focus in literature and industry remains on using a single range-based sensor [5,8].

In practice, a range-based sensor is often integrated alongside visual sensors to provide depth information and to assist in visually degraded scenarios. Another motivation for incorporating multiple sensors into a complete SLAM system is to mitigate the occlusion problem that arises when using a single perception sensor: when an object is obstructed by another object or moves out of one sensor’s field of view, another sensor might still detect it. Radars are especially relevant for this task, since they can detect objects that appear occluded in lidar or visual data [9]. However, radar data are highly susceptible to “ghost detections” [9], where the radar reports objects that are not present in the scene. These detections are mostly caused by multi-path propagation effects [10]. Similar to handling occlusions in computer vision, a deep neural network can learn a mask to filter out irrelevant objects and regions. An additional sensor, such as a lidar or camera, can also provide extra spatial information to the system.

The framework for classifying SLAM approaches was introduced in 1995 through the seminal works by Durrant-Whyte et al. [11,12]. In perception-based SLAM methods, a central concept was the manual design of features to facilitate the alignment of keypoints within image or point cloud representations. However, as in various other fields such as natural language processing [13] and computer vision [14], deep learning methods have since been developed to address the challenges faced by SLAM methods.

In computer vision, deep learning has been applied extensively to a variety of SLAM subproblems, including keypoint detection, ego-motion estimation, structure-from-motion, and ego-localization [15,16,17,18]. Analogous methods have been developed for lidar data that operate on a point cloud representation rather than a grid representation [19,20,21]. The deep learning architectures employed range from standard spatial and temporal layers, such as convolutional neural networks (CNNs) [22] and recurrent neural networks (RNNs) like long short-term memory (LSTM) layers [23] and gated recurrent units (GRUs) [24], to more sophisticated graph neural networks (GNNs) [25] and transformer architectures [26]. Other aspects, such as formulating an appropriate loss, multi-task learning, and learning-based optimization techniques, have been thoroughly investigated for cameras and lidar. The exact architecture and training routine depend on the available data and on the sensors present in the system [27,28,29].

Given the increasing prominence of radar as a robust, cost-effective, and dependable perception sensor [5,9,30], there is a distinct need to undertake a comprehensive review of deep learning approaches specifically tailored toward radar-based SLAM methodologies.

Our main contributions are as follows:

- We provide an introduction to the formulation of the SLAM problem and radar processing.

- We delve into a detailed examination of the latest deep learning techniques employed in radar-based odometry and localization.

- We explore the current methods to integrate radar data into deep learning modules to enhance visual/lidar odometry and ego-localization accuracy.

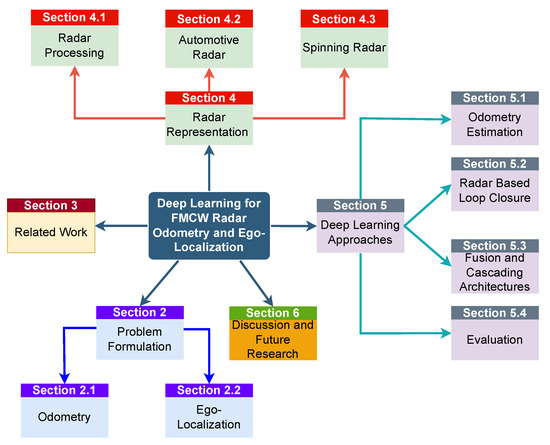

Figure 1 provides an overview of this survey. Section 2 introduces the overarching problem of sensor-based odometry and ego-localization. Section 3 reviews existing survey papers, setting the context for our work. Section 4 covers fundamental radar processing, exploring diverse setups and data representations for radar data. Section 5 consolidates the latest trends by examining the application of deep learning methods in radar-based odometry and ego-localization pipelines. Notably, we extend our discussion beyond radar-only data to include fusion and cascaded network structures, reflecting the need for a complete and reliable SLAM approach. We also compare the evaluation methods of the models. Finally, Section 6 offers a comprehensive summary and discusses potential future trends in the field.

Figure 1.

Overview of the proposed survey paper.

2. Problem Formulation

In this section, we present fundamental concepts and the sequential stages involved in odometry estimation and ego-localization within radar-based SLAM. Additionally, we highlight key methods employed in classical radar SLAM.

SLAM constitutes an estimation problem in which the objective is to estimate the set of all ego poses $x_{1:t}$ at times 1 through $t$ and the positions of $n$ landmarks recorded in a global map $m$. This estimate is computed from observed data comprising perceptions $z_{1:t}$, odometry readings $u_{1:t}$, and an initial starting point $x_0$. Following Grisetti et al. [31], the probabilistic formulation is

$$p(x_{1:t}, m \mid z_{1:t}, u_{1:t}, x_0) \tag{1}$$

For convenience, the starting position $x_0$ from Equation (1) is omitted from now on. The ego-poses and odometry measurements are commonly represented as 2D or 3D special Euclidean group transformation matrices $T \in SE(2)$ or $T \in SE(3)$. Such a transformation matrix consists of a translation vector $\mathbf{t}$ and a rotation matrix $R$, which can be written in homogeneous coordinates as

$$T = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix} \tag{2}$$

The fundamental objective of any SLAM method is to determine the maximum a posteriori (MAP) estimate of the trajectory $x_{1:t}$ and the landmark map $m$:

$$x_{1:t}^{*},\, m^{*} = \underset{x_{1:t},\, m}{\arg\max}\; p(x_{1:t}, m \mid z_{1:t}, u_{1:t}) \tag{3}$$

A common approach to solving Equation (3) assumes a static global map and leverages the Markov property, which allows the SLAM problem to be modeled as a dynamic Bayesian network (DBN) [31]. Within this framework, the initial pose $x_0$, the observations $z_{1:t}$, and the odometry estimates $u_{1:t}$ are observable variables, while the latent variables are the sequential poses $x_{1:t}$ and the map $m$. The DBN consists of two main components. The first is a state transition model $p(x_t \mid x_{t-1}, u_t)$, which states that a new pose depends on the previous pose and the current odometry measurement. The second is an observation model $p(z_t \mid x_t, m)$, which states that an observation depends on the current pose and the map.
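The state transition model can be illustrated by chaining homogeneous SE(2) matrices of the form given in Equation (2): each new pose is the previous pose multiplied by the relative odometry transform. The following Python/NumPy sketch assumes noise-free planar odometry increments given as (dx, dy, dθ) in the vehicle frame; the helper names `se2` and `integrate_odometry` are hypothetical and not taken from any cited work.

```python
import numpy as np


def se2(dx: float, dy: float, dtheta: float) -> np.ndarray:
    """Homogeneous 3x3 SE(2) matrix [[R, t], [0, 1]] from a translation and a heading change."""
    c, s = np.cos(dtheta), np.sin(dtheta)
    return np.array([[c, -s, dx],
                     [s, c, dy],
                     [0.0, 0.0, 1.0]])


def integrate_odometry(increments):
    """Compose relative odometry transforms onto x_0 = identity: x_t = x_{t-1} @ u_t."""
    poses = [np.eye(3)]
    for dx, dy, dtheta in increments:
        poses.append(poses[-1] @ se2(dx, dy, dtheta))
    return poses


# Drive 1 m forward, then turn left by 90 degrees; repeated four times this traces a square.
square = [(1.0, 0.0, np.pi / 2)] * 4
final_pose = integrate_odometry(square)[-1]
print(np.round(final_pose, 6))  # approximately the 3x3 identity: back at the start
```

Because every increment is composed onto the previous pose, an error in a single increment propagates to all later poses; this is the drift that observation edges are meant to correct.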

A DBN can also be depicted as a graph, such as the one presented in [32], in which nodes correspond to poses $x_i$. Within this graph, edges serve as spatial constraints connecting poses. These constraints can be established from odometry measurements between poses or from the alignment of two observations taken at different poses. In practical implementations, the observation edges play a crucial role as regulators that correct the accumulation of errors, or drift, that would arise from using odometry edges alone.

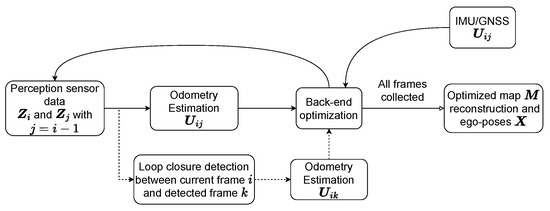

Graph optimization is a prevalent technique for determining the globally optimal solution given multiple scan-matching outcomes. Depending on the characteristics of a SLAM algorithm, the edges accumulated through odometry and observations might not align seamlessly. This discrepancy can arise from a variety of hardware and software factors. Despite these potential inconsistencies, the ultimate objective remains a globally coherent trajectory that accounts for all available observations and information. A typical SLAM procedure is shown in Figure 2.

Figure 2.

Typical setup of a SLAM approach. Sensor data are used to estimate the transformation matrix between frames. If a loop closure is detected, a transformation matrix between the detected frame and current frame is added to the back-end optimization scheme. Finally, an optimized map and ego-poses are generated after the back-end optimization process. IMU/GNSS data can be inserted as additional edges in the back-end optimization.
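To illustrate how a loop-closure edge corrects accumulated drift in the back-end, the sketch below solves a deliberately simplified 1D pose graph as weighted linear least squares with NumPy. Practical back-ends optimize over SE(2) or SE(3) with nonlinear solvers; the 1D setting, measurement values, and weights here are illustrative assumptions, and `optimize_pose_graph_1d` is a hypothetical helper.

```python
import numpy as np


def optimize_pose_graph_1d(n_poses, edges, prior_weight=1e3):
    """Weighted linear least squares over 1D poses.

    edges: list of (i, j, measured x_j - x_i, weight); the weight scales each residual.
    Pose 0 is anchored at 0 with a strong prior to remove the global offset ambiguity.
    """
    anchor = np.zeros(n_poses)
    anchor[0] = prior_weight
    rows, rhs = [anchor], [0.0]
    for i, j, meas, w in edges:
        row = np.zeros(n_poses)
        row[i], row[j] = -w, w
        rows.append(row)
        rhs.append(w * meas)
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x


odometry = [1.1, 1.1, 1.1, -0.9, -0.9, -0.9]  # biased steps; the true steps are +1 and -1
edges = [(i, i + 1, u, 1.0) for i, u in enumerate(odometry)]
dead_reckoning = np.concatenate([[0.0], np.cumsum(odometry)])
edges.append((0, 6, 0.0, 1.0))  # loop closure: pose 6 revisits the location of pose 0
optimized = optimize_pose_graph_1d(7, edges)
print(dead_reckoning[-1], optimized[-1])  # about 0.6 versus about 0.086
```

With equal weights, the inconsistency of 0.6 around the loop is spread evenly over the seven edges, so the final pose moves from 0.6 to 0.6/7 rather than snapping exactly to zero; a higher loop-closure weight would pull it closer.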

2.1. Odometry

Various sensors can provide an odometry edge in the graph representation. An inertial measurement unit (IMU) does so using internal sensors such as gyroscopes, accelerometers, and magnetometers. Similarly, GNSS sensors such as GPS, differential GPS (DGPS), or an INS with integrated GPS can provide odometry measurements from the pose difference computed at two locations. However, IMU and GNSS sensors lack environmental context and are thus vulnerable to external factors such as signal interference or incorrect readings, for example, when wheel movement is registered although the vehicle is stuck.

In contrast, scan-based odometry methods deduce the vehicle’s ego-motion by analyzing environmental data from perception sensors such as radar, lidar, and/or cameras. Unlike IMU-predicted odometry, sensor-based odometry aligns consecutive scans, yielding an odometry estimate that is grounded in the external environment. However, relying on external sensors introduces its own challenges, which can yield inaccurate estimates depending on the specific sensor. In practice, IMU/GNSS data and scan-matching results are often fused with a Kalman filter [9]. It should also be noted that any IMU needs periodic re-calibration due to factors including mechanical deterioration and changes in environment or temperature [33]. As such, odometry estimates from other sensors are crucial in a SLAM method, enabling accurate prediction of the ego-motion.

In the realm of radar-based scan matching, a prevalent technique involves range-based feature matching [9]. Radar data are typically represented in the form of a point cloud or a 2D bird’s-eye view (BEV) image. The goal is to align consecutive scans to identify the most probable transformation. While deep learning methods can be used to determine the ego-transformation between successive radar scans, traditional techniques continue to find more widespread application. These conventional methods entail the use of manually designed features. Examples include Oriented FAST and rotated BRIEF (ORB)-features [34] and scale-invariant feature transform (SIFT)-features [35] for visual data, as well as Conservative Filtering for Efficient and Accurate Radar Odometry (CFEAR) [36] features tailored for radar data. These features are expressed as point detections. The process of scan matching involves identifying these features in single scans and matching them across consecutive scans. This is accomplished through an iterative closest point (ICP)-based approach [37,38], which is often integrated with a random sample consensus (RANSAC) [39] technique to discard outliers.
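A minimal 2D point-to-point ICP illustrates the scan-matching loop: each point is associated with its nearest neighbor in the reference scan, and the rigid transform is then solved in closed form with the SVD-based Kabsch method. The sketch assumes already-extracted 2D keypoints, uses brute-force nearest neighbors, and omits feature descriptors and the RANSAC outlier rejection mentioned above; the function names are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ≈ dst_i (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # guard against reflections
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c


def icp_2d(source, reference, iterations=30):
    """Align `source` (N, 2) to `reference` (M, 2); returns the accumulated R and t."""
    R_total, t_total = np.eye(2), np.zeros(2)
    current = source.copy()
    for _ in range(iterations):
        # Brute-force nearest-neighbor data association.
        d2 = ((current[:, None, :] - reference[None, :, :]) ** 2).sum(axis=2)
        matched = reference[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t  # compose with previous estimate
    return R_total, t_total


# Synthetic check: a grid-shaped scan displaced by a small, known motion.
g = np.arange(-3.5, 4.0, 1.0)
reference = np.array([(x, y) for x in g for y in g])
theta = 0.02
R_true = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
t_true = np.array([0.05, -0.03])
source = (reference - t_true) @ R_true  # chosen so that R_true @ source_i + t_true == reference_i
R_est, t_est = icp_2d(source, reference)
```

The closed-form step is exact once the associations are correct; in practice, the quality of the nearest-neighbor associations (and hence the initial guess and outlier handling) determines whether ICP converges.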

For laser scan observations, alternative methods such as the normal distributions transform (NDT) [40] are commonly employed to calculate the ego-transformation matrix between successive scans. For accurate alignment of sensor measurements, only relevant stationary features should be included. Introducing non-stationary features into the ICP process can distort the estimate of the vehicle’s ego-motion, yielding inaccurate outcomes [41]. Consequently, detections originating from irrelevant features must be filtered out. While this issue has been extensively addressed for cameras and lidar sensors, radar sensors largely avoid it thanks to the captured Doppler feature. Although the Doppler component only provides the radial velocity of a detection, it can be used to deduce the true velocity of a detection. This allows non-stationary features to be removed before applying an ICP-based approach, ensuring more accurate results [41,42].
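One way to exploit the Doppler component for this filtering is to estimate the ego-velocity from all detections and flag detections that disagree with it. For a stationary target at azimuth θ seen from a sensor moving with planar velocity (v_x, v_y), the measured radial velocity is v_r = −(v_x cos θ + v_y sin θ) under the sign convention assumed here (negative when approaching). The sketch fits this model with a small RANSAC loop, which is our choice of robust estimator for illustration rather than the specific method of [41,42]; the threshold, iteration count, and function name are assumptions.

```python
import numpy as np


def estimate_ego_velocity(azimuth, v_radial, threshold=0.2, iterations=100, seed=0):
    """RANSAC fit of the sensor velocity (vx, vy) to Doppler returns.

    Assumes a stationary target at azimuth theta has v_r = -(vx cos theta + vy sin theta).
    Returns the refined velocity and a boolean mask of detections consistent with it.
    """
    rng = np.random.default_rng(seed)
    A = -np.column_stack([np.cos(azimuth), np.sin(azimuth)])
    best_inliers = np.zeros(len(azimuth), dtype=bool)
    for _ in range(iterations):
        idx = rng.choice(len(azimuth), size=2, replace=False)  # minimal sample
        try:
            v = np.linalg.solve(A[idx], v_radial[idx])
        except np.linalg.LinAlgError:
            continue  # degenerate sample (identical azimuths)
        inliers = np.abs(A @ v - v_radial) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refine with ordinary least squares on all inliers (assumed stationary).
    v_ego, *_ = np.linalg.lstsq(A[best_inliers], v_radial[best_inliers], rcond=None)
    return v_ego, best_inliers


azimuth = np.linspace(-1.2, 1.2, 20)  # radians, sensor frame
v_true = np.array([10.0, 0.5])        # hypothetical ego-velocity in m/s
v_radial = -(v_true[0] * np.cos(azimuth) + v_true[1] * np.sin(azimuth))
v_radial[[5, 12]] += 3.0              # two detections on moving objects
v_ego, stationary = estimate_ego_velocity(azimuth, v_radial)
print(np.round(v_ego, 3), np.flatnonzero(~stationary))  # ego-velocity near (10, 0.5); 5 and 12 flagged
```

Only the detections in the `stationary` mask would then be passed on to the ICP-based scan matching.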

2.2. Ego-Localization

Odometry estimation plays a crucial role in accurately mapping consecutive scans of the environment. However, regardless of the precision of the odometry measurement and the sensors used, there is an inevitable build-up of global drift over time. This drift can lead to distortions in the generated map and, thus, inaccuracies in global pose estimations [9]. To mitigate such drift issues, ego-localization is employed in the form of loop closure detections to identify previously visited locations. When an already visited location is detected, a scan matching procedure is carried out between the present and past scan or map, resulting in an adjustment of the current pose based on the matching outcome. In graph optimization approaches, loop closure transformations are included as additional edges in the graph structure.

Various techniques are employed to detect potential loop closures. Some methods use positional sensors such as GPS, while others match a new scan against a set of stored scans to decide whether an edge is added to the graph optimization. One common approach is the Bag-of-Words (BoW) method [43], which enables efficient comparison of perception scans, a critical aspect of loop closure detection in SLAM and analogous systems. The BoW technique treats each scan as a collection of visual words, compactly represented as a single vector. These visual words encode features such as edges, corners, and texture patches, extracted with algorithms like SIFT, SURF, ORB, or a trained network.

First, a dictionary is built by aggregating features from diverse scans and organizing them through cluster analysis, such as k-means clustering. Each cluster center then serves as a distinct ‘visual word’. When a new scan is recorded, its features are extracted and matched against the visual words, or cluster centers, in the dictionary. The scan is thus represented as a vector encoding the frequency of each visual word within it. During ego-localization, the BoW representation is used to identify similarities between the current scan and known scenes, enabling the estimation of the position relative to previously visited locations. If the similarity between the current vector and a stored vector exceeds a predefined threshold, scan matching is performed between the two scans to determine the transformation matrix, and an edge is added to the graph. However, these edges must be chosen carefully, as incorrect edges can severely deteriorate the generated map [11,12].
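The dictionary construction and threshold test described above can be sketched in a few lines of NumPy. The 2D synthetic “descriptors” stand in for ORB, SIFT, or learned features; the farthest-point initialization of k-means is a simplification (k-means++ is a common alternative); and cosine similarity with a threshold of 0.8 is an illustrative choice of similarity measure. All function names are hypothetical.

```python
import numpy as np


def kmeans(features, k, iterations=20, seed=0):
    """k-means with farthest-point initialization; returns k cluster centers ("visual words")."""
    rng = np.random.default_rng(seed)
    centers = [features[rng.integers(len(features))]]
    for _ in range(k - 1):  # next center: the point farthest from all chosen centers
        d2 = ((features[:, None] - np.array(centers)[None]) ** 2).sum(-1).min(axis=1)
        centers.append(features[d2.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(iterations):
        labels = ((features[:, None] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        for c in range(k):
            if np.any(labels == c):  # keep the old center if a cluster becomes empty
                centers[c] = features[labels == c].mean(axis=0)
    return centers


def bow_vector(features, vocabulary):
    """Histogram of nearest visual words, L2-normalized so a dot product is the cosine similarity."""
    words = ((features[:, None] - vocabulary[None]) ** 2).sum(-1).argmin(axis=1)
    hist = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return hist / np.linalg.norm(hist)


def is_loop_candidate(query, stored, threshold=0.8):
    """Loop-closure candidate if the cosine similarity exceeds a predefined threshold."""
    return float(query @ stored) > threshold


rng = np.random.default_rng(1)
blob_centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])


def sample(counts):
    """Synthetic descriptors: counts[i] noisy points around blob i."""
    return np.vstack([c + 0.3 * rng.standard_normal((n, 2)) for c, n in zip(blob_centers, counts)])


vocabulary = kmeans(sample([30, 30, 30]), k=3)
scan_a = bow_vector(sample([6, 3, 1]), vocabulary)  # current scan
scan_b = bow_vector(sample([5, 3, 1]), vocabulary)  # stored scan of the same place
scan_c = bow_vector(sample([1, 1, 8]), vocabulary)  # stored scan of a different place
print(is_loop_candidate(scan_a, scan_b), is_loop_candidate(scan_a, scan_c))  # True False
```

A positive result only proposes a candidate; the subsequent scan matching and graph optimization still have to confirm that the edge is consistent.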

3. Related Work

A plethora of survey papers offer in-depth insights into a diverse array of scan matching and odometry estimation methods across different sensor and method types, as illustrated in Table 1. These survey papers were found by combining the terms “Survey” or “Review” with a task- or sensor-specific term such as “SLAM”, “VSLAM”, “lidar”, “Ego-localization”, “Localization”, “Deep learning”, “Radar”, or “Odometry” using Google Scholar and Web of Science. Additional papers were found through a backward search on the identified surveys.

While there are numerous survey papers dedicated to odometry and localization in SLAM, none specifically delve into the application of deep learning to the radar modality. A recent contribution by Louback da Silva Lubanco et al. [44] lays the groundwork for a foundational understanding of classic radar-based odometry.

Geng et al. [45] discuss advancements in deep learning applied to radar perception, although their focus does not specifically center on odometry or place recognition. Conversely, the review conducted by Yao et al. [4] revolves around radar-vision fusion specifically for object detection and segmentation.

The survey papers authored by Zhou et al. [9] and Harlow et al. [5] provide thorough insights into radar pre-processing schemes and deliver a detailed overview of state-of-the-art methods for radar perception tasks within the domain of autonomous driving. Notably, these reviews predominantly concentrate on radar perception concerning object detection and segmentation. They touch upon only a limited number of deep learning-based methods for odometry estimation and localization.

Table 1.

Overview of relevant survey papers. Primary Sensor: R, L, and C stand for Radar, lidar, and Camera sensors. ✓ means the survey included the SLAM component, (✓) means it was partially included and ✕ that it was not included.


| Paper | Year | Primary Sensor | Deep Learning Focus | Odometry | Localization |
|---|---|---|---|---|---|
| [44] | 2022 | R | ✕ | ✓ | ✕ |
| [46] | 2018 | R,L,C,GPS | ✕ | ✕ | ✓ |
| [47] | 2021 | L,C | ✓ | ✕ | ✓ |
| [48] | 2021 | L,C | ✓ | ✕ | ✓ |
| [49] | 2022 | R,L,C | ✓ | ✕ | ✓ |
| [29] | 2023 | L,C | ✓ | ✓ | ✓ |
| [45] | 2021 | R | ✓ | ✕ | ✕ |
| [4] | 2023 | R,C | ✓ | ✕ | ✕ |
| [50] | 2022 | L,C | ✕ | ✓ | ✓ |
| [51] | 2022 | L,C | ✓ | ✓ | ✓ |
| [52] | 2021 | L | ✓ | ✓ | ✓ |
| [53] | 2021 | L | ✕ | ✓ | ✓ |
| [54] | 2022 | C | ✓ | ✓ | ✓ |
| [55] | 2015 | C | ✕ | ✕ | ✓ |
| [56] | 2020 | C | ✓ | ✓ | ✕ |
| [57] | 2019 | C | ✓ | ✓ | ✓ |
| [58] | 2023 | L,C | (✓) | ✓ | ✓ |
| [8] | 2020 | L,C | ✓ | ✓ | ✓ |
| [27] | 2023 | C | ✓ | ✓ | ✓ |
| [59] | 2023 | C | ✓ | ✓ | ✓ |
| [28] | 2023 | C | ✓ | ✓ | ✓ |
| [9] | 2022 | R | (✓) | ✓ | ✓ |
| [5] | 2023 | R | (✓) | ✓ | ✓ |
| Ours | 2023 | R | ✓ | ✓ | ✓ |

The work by Saleem et al. [29] closely aligns with our survey paper, as these authors delve into the fundamentals of SLAM issues and present numerous deep learning techniques applied to lidar and visual sensors. It is noteworthy, however, that their coverage of radar is confined to potential fusion scenarios with lidar or visual sensors.

Besides these survey papers focusing on radar, many others focus on either visual or lidar-based SLAM methodology. Kuutti et al. [46] concentrate on non-learning based localization methods for various perception sensors. Extending this work, Arshad et al. [47] investigate how deep learning techniques facilitate loop closure identification in lidar and VSLAM. Shifting focus, Roy et al. [48] specialize in deep learning techniques tailored for indoor localization and embedded systems. Additionally, Yin et al. [49] provide an extensive coverage of place recognition and loop closure detection across sensor modalities and application domains.

In the realm of sensor fusion, Xu et al. [50] highlight the integration of lidar-based sensors with other modalities, primarily leveraging visual features. On a similar note, Chghaf et al. [51] concentrate on lidar and VSLAM, briefly addressing the fusion of radar with other modalities.

Huang et al. [52] and Khan et al. [53] predominantly center their reviews on lidar-SLAM. In the context of VSLAM, several comprehensive review papers, including [54,55,56,57,60], provide detailed overviews, but lack any mention of radar sensors.

The exploration of deep learning across diverse sensor modalities is addressed in the works by Placed et al. [58] and Chen et al. [8], with a specific focus on lidar and VSLAM. They particularly emphasize belief-space planning and deep reinforcement learning. In a related context, Zhang et al. [27] and Favorskaya et al. [59] delve into the role of deep learning in VSLAM approaches, investigating its applications in fundamental SLAM tasks such as pose optimization and mapping.

Mokssit et al. [28] concentrate on specific stages of VSLAM methods, encompassing depth estimation, optical flow, odometry, loop closure, and end-to-end learning. Additionally, the review by Zeng et al. [61] explores the application of the transformer architecture in point cloud representations within the context of VSLAM.

4. Radar Representation

In this section, we focus on radar processing and the diverse ways in which radar data can be represented. In discussing standard radar signal processing, we concentrate on the two most commonly applied radar configurations for autonomous vehicles: automotive and spinning radar.

4.1. Radar Processing

Radar sensors have found utility across various applications since the 1930s and have gained particular popularity for perception tasks. This preference stems from their robustness and relatively affordable cost [5,9].

An FMCW radar sensor emits and receives frequency-modulated continuous waves, usually in frequency bands centered at 24 GHz or 77 GHz, whose frequency increases linearly over time. A frequency mixer combines the transmitted and received signals, so that the ADC does not need to sample at the carrier frequency. A low-pass filter then removes the sum-frequency components, yielding an intermediate-frequency (IF) signal. This IF signal is captured by an analog-to-digital converter (ADC), yielding a discrete-time complex ADC signal. An object’s range is determined by analyzing the beat frequency, which arises from the time delay between transmission and reception [9].
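The range relation can be made concrete with a worked example. For a linear chirp with slope S = B/T (bandwidth B swept over chirp duration T), a target at range R delays the echo by 2R/c, so the beat frequency is f_b = 2RS/c and hence R = c·f_b/(2S); the range resolution is c/(2B). The chirp parameters below are hypothetical values chosen for round numbers, and c is rounded to 3×10^8 m/s.

```python
SPEED_OF_LIGHT = 3.0e8  # m/s (rounded)


def chirp_slope(bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Frequency slope S of a linear FMCW chirp in Hz/s."""
    return bandwidth_hz / chirp_duration_s


def range_from_beat(beat_hz: float, slope_hz_per_s: float) -> float:
    """R = c * f_b / (2 * S): the round-trip delay 2R/c times the slope S gives f_b."""
    return SPEED_OF_LIGHT * beat_hz / (2.0 * slope_hz_per_s)


def range_resolution(bandwidth_hz: float) -> float:
    """Minimum separable range difference c / (2B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


S = chirp_slope(1.0e9, 50e-6)   # hypothetical chirp: 1 GHz sweep in 50 microseconds
print(range_from_beat(4.0e6, S))  # about 30 m for a 4 MHz beat frequency
print(range_resolution(1.0e9))    # about 0.15 m
```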

Simultaneously, the Doppler velocity is determined from the phase shift of the IF signal across consecutively transmitted FMCW waves, also referred to as chirps. The sampling dimension within a single chirp is referred to as fast time, and the chirp-index dimension across multiple chirps within a frame is referred to as slow time. Assuming that range variations in slow time caused by target motion are negligible due to a short frame time, and that a large frequency modulation makes the Doppler frequency in fast time negligible, the range and Doppler calculations can be decoupled. The result is a complex-valued data matrix obtained through two discrete Fourier transforms over the fast-time and slow-time dimensions. Known as the range–Doppler (RD) map, this matrix depicts power readings for specific range and Doppler values and offers valuable insight into the presence and motion of objects within the radar’s field of view [5].
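As a rough illustration of the two Fourier transforms, the sketch below builds a synthetic IF data matrix for a single point target and computes an RD map with NumPy. Under the stated assumptions, fast-time bin k corresponds to a beat frequency k·f_s/N (f_s: ADC sampling rate, N: samples per chirp), and Doppler bin d corresponds to a chirp-to-chirp phase step Δφ = 2πd/M (M: chirps per frame), from which the radial velocity follows as v = λΔφ/(4πT_c) for a chirp repetition interval T_c. The target is deliberately placed exactly on FFT bins, the Hann windows are an illustrative choice, noise is omitted, and `range_doppler_map` is a hypothetical name.

```python
import numpy as np


def range_doppler_map(if_cube):
    """RD map in dB from complex IF samples of shape (num_chirps, samples_per_chirp).

    Axis 0 is slow time (chirp index), axis 1 is fast time (samples within a chirp).
    The fast-time FFT resolves range; the slow-time FFT resolves the chirp-to-chirp
    phase rotation, i.e. Doppler. fftshift moves zero Doppler to the center row.
    """
    window = np.hanning(if_cube.shape[0])[:, None] * np.hanning(if_cube.shape[1])[None, :]
    spectrum = np.fft.fft(if_cube * window, axis=1)                   # range FFT
    spectrum = np.fft.fftshift(np.fft.fft(spectrum, axis=0), axes=0)  # Doppler FFT
    return 20 * np.log10(np.abs(spectrum) + 1e-12)


M, N = 64, 128                   # chirps per frame, samples per chirp
m = np.arange(M)[:, None]
n = np.arange(N)[None, :]
range_bin, doppler_bin = 20, 5   # hypothetical target placed exactly on FFT bins
if_cube = np.exp(1j * 2 * np.pi * (range_bin * n / N + doppler_bin * m / M))
rd = range_doppler_map(if_cube)
peak = np.unravel_index(np.argmax(rd), rd.shape)  # (Doppler row, range column)
print(int(peak[0]) - M // 2, int(peak[1]))        # signed Doppler bin and range bin
```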

Every FMCW radar employs the outlined scheme to calculate range and Doppler components. However, techniques diverge when it comes to determining the object’s direction of arrival (DOA). This distinction hinges on the radar’s positioning and configuration, leading to two main categories of methods: automotive radars (AR) and spinning radars (SR). Figure 3 illustrates how each radar sensor configuration can be placed on a vehicle.

Figure 3.

AR stands for automotive radar sensors and SR for a spinning radar sensor. A single spinning radar sensor is installed on top of the vehicle, whereas in an automotive radar configuration multiple radar sensors are installed at the front and at the corners or sides of the vehicle.

Spinning radar configurations have the advantage of consisting of only a single sensor placed on top of the vehicle, so a single extrinsic calibration matrix suffices to project detections into the vehicle’s frame of reference. In an automotive radar configuration, radar sensors, typically 4–8 small chips, are placed at different positions around the vehicle. Here, the information recorded by each sensor must be transformed into a common coordinate system, often the vehicle coordinate system, so that detections of the same object by different sensors can be matched. As such, point cloud representations are favored when working with AR configurations [5,9].
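A minimal sketch of this transformation for a planar setup: each detection is converted from polar sensor coordinates to Cartesian coordinates and then rotated and translated by the sensor’s mounting pose. The mounting poses and the function name below are hypothetical, and temporal alignment between sensors, as well as the Doppler and RCS fields, is ignored.

```python
import numpy as np


def sensor_to_vehicle(ranges, azimuths, mount_x, mount_y, mount_yaw):
    """Map polar detections of one radar into the common vehicle frame via its 2D extrinsics."""
    pts = np.column_stack([ranges * np.cos(azimuths), ranges * np.sin(azimuths)])  # sensor frame
    c, s = np.cos(mount_yaw), np.sin(mount_yaw)
    R = np.array([[c, -s], [s, c]])
    return pts @ R.T + np.array([mount_x, mount_y])  # rotate, then translate to mount position


# Hypothetical corner-radar mounting poses (x, y, yaw) in the vehicle frame.
mounts = {"front_left": (3.5, 0.8, np.deg2rad(45)), "front_right": (3.5, -0.8, np.deg2rad(-45))}
detections = {"front_left": (np.array([2.0]), np.array([0.0])),
              "front_right": (np.array([2.0]), np.array([0.0]))}
cloud = np.vstack([sensor_to_vehicle(r, a, *mounts[name]) for name, (r, a) in detections.items()])
print(np.round(cloud, 3))  # one merged point cloud in vehicle coordinates
```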

Table 2 provides an overview of presently utilized open-source radar datasets that are explicitly employed for ego-motion or ego-localization purposes using radar sensors. The datasets, discovered through the GitHub repository [62], were supplemented by additional datasets identified in Section 5.

Table 2.

Radar datasets applied for deep learning SLAM methods. Type: SR and AR stand for spinning radar and automotive radar. Scenarios: U,S,H,P,I,T,W stand for urban, suburban, highway, parking lot, indoors, tunnel, and waterways. Other sensors: C,L,O,S stand for camera, LiDAR, internal odometry sensor, and synthetic aperture radar (SAR). ✓ datasets include a Doppler measurement, and ✕ do not.

While mathematical expressions and derivations exist for quantities like the IF signal, range, Doppler velocity, and DOA, they are not covered here, because radar-based odometry and ego-localization methods are typically applied after Constant False Alarm Rate (CFAR) processing, and these derivations have been covered extensively in other works [5,9]. Additionally, raw data representations are rarely included in current public datasets, which makes models dependent on the pre-processing of each respective dataset.

It should also be noted that pre-processing of radar data is an active research field. A further issue with radar sensors is mutual interference, especially in AR configurations. As the number of radar sensors increases, the radars can interfere with one another, potentially creating ghost detections and reducing the signal-to-noise ratio [76]. This interference can originate from radars on the same vehicle or from radar sensors on other vehicles. Methods exist that reduce interference between sensors [77], but they are rarely discussed in the context of public datasets.

It is important to highlight the differences between these radar configurations in autonomous vehicles, as many of the methods discussed in Section 5 are applied to only one of the two setups. These differences are discussed next.

4.2. Automotive Radar

Automotive radars use multiple antennas, either in a single-transmitter, multiple-receiver (SIMO) configuration or in a multiple-transmitter, multiple-receiver (MIMO) configuration, to determine the DOA. This determination relies on evaluating the phase change between the receiving antennas together with their physical spatial separation. The DOA is obtained by performing an angle Fast Fourier Transform (FFT) over the receiver dimension, yielding a 3D tensor named the range–azimuth–Doppler (RAD) map. This tensor extends the previously discussed range–Doppler (RD) map with an angular dimension, providing a comprehensive representation of the range, direction, and Doppler component of each reflection.
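For a uniform linear array with element spacing d, a plane wave arriving from azimuth θ produces a phase step of 2π(d/λ)sin θ between adjacent receive antennas, which the angle FFT converts into a spectral peak. The sketch below assumes a single target, one complex sample per (virtual) antenna taken from the same range–Doppler cell, half-wavelength spacing, and zero-padding to 1024 bins to refine the peak location; `doa_angle_fft` is a hypothetical name.

```python
import numpy as np


def doa_angle_fft(rx_snapshot, fft_size=1024, spacing_wavelengths=0.5):
    """Estimate the azimuth (radians) from one complex sample per antenna of a uniform linear array."""
    spectrum = np.fft.fftshift(np.fft.fft(rx_snapshot, n=fft_size))  # zero-padded angle FFT
    # After fftshift, index i corresponds to the signed frequency bin i - fft_size // 2.
    omega = 2 * np.pi * (np.argmax(np.abs(spectrum)) - fft_size // 2) / fft_size
    # omega is the phase step per antenna: 2 * pi * (d / lambda) * sin(theta).
    return np.arcsin(omega / (2 * np.pi * spacing_wavelengths))


theta_true = np.deg2rad(20.0)
antennas = np.arange(8)  # hypothetical 8-element virtual array, half-wavelength spacing
snapshot = np.exp(1j * 2 * np.pi * 0.5 * antennas * np.sin(theta_true))
theta_est = doa_angle_fft(snapshot)
print(np.rad2deg(theta_est))  # close to 20 degrees
```

Zero-padding only interpolates the spectrum; the ability to separate two nearby targets is still limited by the number of (virtual) antennas.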

Traditionally, only the azimuth angle has been resolved. However, due to advancements in engineering, modern radar sensors are capable of resolving both azimuth and elevation angles [78,79]. This enhanced capability gives rise to a 4D radar map, encompassing range, azimuth, elevation, and Doppler features.

By employing a CFAR detector on the RAD tensor, radar detections can be transformed into point clouds. However, these point clouds often contend with issues arising from road clutter, interference, and multi-path effects due to the noisy IF signal. To refine detections, additional spatial–temporal filtering methods like density-based spatial clustering of applications with noise (DBSCAN) [80] or Kalman filters can be employed.
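A common way to apply CFAR is cell averaging (CA-CFAR). The sketch below operates on a 1D power profile, for example one Doppler row of an RD map; applying it to the full RAD tensor would use a multi-dimensional window. The window sizes, false-alarm rate, and function name are illustrative assumptions, and the threshold factor α = N(P_fa^(−1/N) − 1) is the textbook value for N training cells under exponentially distributed noise power.

```python
import numpy as np


def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-4):
    """Cell-averaging CFAR on a 1D power profile (linear scale, not dB).

    For each cell under test, the noise level is the mean of `num_train` training cells on
    each side, skipping `num_guard` guard cells next to the cell under test.
    """
    n_total = 2 * num_train
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)  # scale factor for the target pfa
    detections = np.zeros(len(power), dtype=bool)
    half = num_train + num_guard
    for i in range(half, len(power) - half):  # edge cells without full windows are skipped
        lead = power[i - half:i - num_guard]
        lag = power[i + num_guard + 1:i + half + 1]
        noise = (lead.sum() + lag.sum()) / n_total
        detections[i] = power[i] > alpha * noise
    return detections


rng = np.random.default_rng(0)
power = rng.exponential(1.0, 200)  # noise-only range profile (linear power)
power[50] += 100.0                 # two hypothetical targets
power[120] += 80.0
hits = np.flatnonzero(ca_cfar_1d(power))
print(hits)  # should contain 50 and 120
```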

Besides the Doppler velocity, another important feature of a radar detection is the Radar Cross Section (RCS), which depends on the properties of the reflecting target. It quantifies how strongly an object reflects the radar signal back towards the receiving antenna and depends on the material, size, and orientation of the object. The RCS can therefore help to differentiate between objects: cars and thick walls, for example, have a high RCS, whereas pedestrians and small objects generally have a low RCS [9].

Currently, most radar-based SLAM methods lack elevation resolution, so point clouds are commonly represented as 2D BEV images. To improve object visibility and detection, several SIMO/MIMO sensors are often mounted on a vehicle, typically at its corners, to achieve a 360-degree field of view. Combining the detections of these sensors, however, requires further processing to compensate for their spatial and temporal offsets before a unified representation is obtained. Each detection of the resulting point cloud usually consists of spatial coordinates, a Doppler velocity, and an RCS value.
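The spatial part of this merging step can be sketched as follows, assuming each corner radar reports detections as (range, azimuth) pairs and its mounting pose on the vehicle (position and yaw) is known. The functions, grid size, and the choice of keeping the maximum RCS per cell are hypothetical, and temporal alignment between sensor timestamps is omitted.

```python
import numpy as np

def to_vehicle_frame(ranges, azimuths, mount_xy, mount_yaw):
    """Convert polar detections of one corner radar into 2D vehicle coordinates."""
    # Sensor-frame Cartesian coordinates.
    pts = np.stack([ranges * np.cos(azimuths), ranges * np.sin(azimuths)], axis=1)
    c, s = np.cos(mount_yaw), np.sin(mount_yaw)
    rot = np.array([[c, -s], [s, c]])
    # Rotate by the mounting yaw, then translate by the mounting position.
    return pts @ rot.T + np.asarray(mount_xy)

def rasterize_bev(points, rcs, cell=0.5, extent=50.0):
    """Accumulate vehicle-frame detections into a square BEV grid.

    Each cell keeps the maximum RCS of the detections falling into it;
    empty cells are marked with -inf (RCS in dBsm can be negative).
    """
    n = int(2 * extent / cell)
    bev = np.full((n, n), -np.inf)
    idx = np.floor((points + extent) / cell).astype(int)
    inside = np.all((idx >= 0) & (idx < n), axis=1)
    for (ix, iy), value in zip(idx[inside], rcs[inside]):
        bev[iy, ix] = max(bev[iy, ix], value)
    return bev
```

Detections from all sensors would be converted with their own extrinsics, concatenated, and rasterized once into the shared grid.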

4.3. Spinning Radar

In contrast to automotive radars, spinning radars use a single transmitting and receiving antenna. Similar to LiDAR sensors, they are mounted on top of a vehicle and rotate around their vertical axis. Like any FMCW radar, they use the frequency shift between the transmitted and received waves to calculate the range of an object. The power of the returned signal provides information about the object's reflectivity, size, shape, and orientation relative to the receiver. The DOA, however, is given directly by the current rotation angle of the antenna, so each revolution produces a polar power spectrum [81,82].

This 2D polar spectrum is a denser radar representation than the heavily processed point cloud produced by automotive radars. In exchange for this dense representation, however, Doppler and RCS components are often not included in open spinning radar datasets. Furthermore, a spinning radar cannot resolve any height information, as it lacks the additional antennas required to measure elevation. Spinning radars are therefore generally used in 2D scenarios [81,82].
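Many of the methods discussed below operate on a Cartesian image of the polar spectrum. A minimal nearest-neighbour resampling sketch is shown here; it assumes evenly spaced azimuth bins over a full revolution with bin 0 pointing along the +x axis, and the function name and parameters are hypothetical. Practical pipelines often use bilinear interpolation instead.

```python
import numpy as np

def polar_to_cartesian(polar, range_res, cart_res, cart_size):
    """Resample a spinning-radar scan (azimuth bins x range bins) onto a Cartesian BEV grid.

    Nearest-neighbour lookup; the sensor sits at the grid centre, x along columns,
    y along rows. Cells beyond the maximum range are left at zero.
    """
    n_az, n_rng = polar.shape
    # Metric coordinates of the Cartesian cell centres.
    coords = (np.arange(cart_size) - cart_size / 2 + 0.5) * cart_res
    x, y = np.meshgrid(coords, coords)
    rng = np.hypot(x, y)
    az = np.mod(np.arctan2(y, x), 2 * np.pi)
    r_idx = np.round(rng / range_res).astype(int)
    a_idx = np.round(az / (2 * np.pi) * n_az).astype(int) % n_az
    valid = r_idx < n_rng
    cart = np.zeros((cart_size, cart_size), dtype=polar.dtype)
    cart[valid] = polar[a_idx[valid], r_idx[valid]]
    return cart
```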

Radar datasets differ not only in the type of radar, i.e., spinning or automotive, but also in the exact sensor configuration. For example, two systems that both use an automotive radar configuration can differ drastically in working range and resolution, generally depending on the price of the sensor. The same holds for spinning radars, whose rotation rate and range and azimuth resolution determine the quality of the resulting range–angle image.

5. Deep Learning Approaches

In this section, we discuss and compare deep learning architectures that have been applied to radar-based odometry and localization. We then examine how radar data are integrated with other sensor inputs in deep learning approaches.

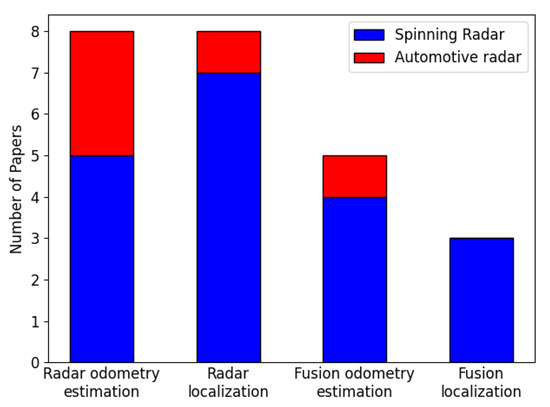

The approaches were identified through a search on Google Scholar and Web of Science. Initially, methods applying deep learning to radar-based odometry estimation or place recognition were identified on Google Scholar by keyword search, and further relevant methods were found through a backward search of the references of the initially identified papers. To complement this search, the same queries were run on Web of Science. The queries used different permutations and combinations of keywords and phrases such as “Deep learning”, “Learning-based”, “(Un)-supervised”, “Radar”, “Ego-localization”, “Localization”, “Scan matching”, “Scene flow”, “Odometry”, “Place recognition”, “Point-based”, “Sensor Fusion”, and “SLAM”, connected with the conjunctions “and” and “for”. Given the relatively recent introduction of deep learning in radar-based SLAM, only methods published from 2016 onwards were considered. This cutoff was motivated by the influential work of Wang et al. [83], published in 2017, which applied deep learning to visual odometry. Figure 4 shows the number of investigated papers on odometry estimation and localization using deep learning and radar sensors, differentiated by radar setup and by whether other modalities are used.

Figure 4.

Number of papers utilizing deep learning for radar-based odometry estimation and localization, as well as fusion approaches with a radar sensor.

As radars are very cost-effective, they can be integrated as perception sensors into a wide range of systems, which vary greatly in their available computational hardware and use cases. For example, an autonomous car has room to carry a large Graphics Processing Unit (GPU), whereas a cleaning robot has very limited memory. On the other hand, the car operates in a more challenging environment, at potentially much larger scales and higher ego-velocities than the cleaning robot, which causes greater motion distortion. The introduced methods are therefore not always directly comparable and need to be interpreted in the context of their respective datasets and use cases.

Table 3 gives an overview of the investigated methods, listing for each its radar representation, datasets, task, and use of deep learning.

Table 3.

List of deep learning methods applied for radar-based odometry and localization. SR stands for a spinning radar and AR for an automotive radar setup.

5.1. Odometry Estimation

For deep learning methods that estimate odometry from point cloud or image representations, a central challenge is to identify relevant features and to track them reliably across successive frames. If such features are identified and tracked consistently, a differentiable alignment step such as the Kabsch algorithm [108] can be used to compute the transformation matrix between the frames. The core challenge of odometry estimation from sensor data therefore lies in detecting these features and establishing accurate point associations.
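For reference, the closed-form Kabsch solution can be written in a few lines of NumPy. The optional per-point weights are included because masking and weighting modules in the methods below produce such weights; the function name is illustrative. In a deep learning framework, the same operations are differentiable through the SVD.

```python
import numpy as np

def kabsch(src, dst, weights=None):
    """Least-squares rigid transform (R, t) such that R @ src_i + t ~= dst_i.

    src, dst: (N, d) arrays of corresponding points; weights: optional (N,) non-negative.
    """
    w = np.ones(len(src)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()
    mu_s, mu_d = w @ src, w @ dst  # weighted centroids
    # Weighted cross-covariance of the centred point sets.
    H = (src - mu_s).T @ (w[:, None] * (dst - mu_d))
    U, _, Vt = np.linalg.svd(H)
    # Reflection guard: force det(R) = +1.
    D = np.eye(src.shape[1])
    D[-1, -1] = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t
```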

The ability of models to extract keypoints from point clouds and images has been studied extensively, particularly in the context of VSLAM and lidar-based SLAM [8,28,29]. Compared to these sensors, radar poses a distinct challenge because its data are sparse and often unreliable.

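A common method for determining the transformation parameters is scan matching, typically assuming a planar rigid transformation $\mathbf{T} \in SE(2)$. The optimal ego-motion parameters are estimated by learning a mask that maximizes the overlap of the noise-free representations, and the network is trained with a loss of the form [8]:

$$\mathcal{L} = \lVert \mathbf{t} - \hat{\mathbf{t}} \rVert_2^2 + \alpha \lVert \hat{\mathbf{R}}^{\top} \mathbf{R} - \mathbf{I} \rVert_2^2$$

where $\hat{\mathbf{t}}$ and $\hat{\mathbf{R}}$ are the predicted translation and rotation of Equation (4), $\mathbf{t}$ and $\mathbf{R}$ are the corresponding ground-truth quantities, $\alpha$ is a fixed or learnable parameter that balances the translation and rotation errors, and $\mathbf{I}$ is the identity matrix.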

Barnes et al. [109] used a masking network with a U-Net encoder–decoder architecture to filter out irrelevant regions in depth images for visual odometry. Inspired by this, Aldera et al. [84] employed a similar U-Net architecture to mask specific areas of a Cartesian radar image. The learned mask excludes non-relevant elements such as noise, spurious detections, and moving objects. Note, however, that this process disregards the Doppler velocity of detections; the U-Net alone is responsible for distinguishing relevant landmarks.

Barnes et al. [85] also used a convolutional neural network (CNN) to predict a mask for spinning radar scans, which learns to filter out all non-essential detections. To determine the relative pose between two scans, a mask is predicted for a reference scan S1 and for a subsequent scan S2, and each mask is multiplied with its respective scan. The masked scan S2 is then shifted by a set of candidate pose offsets, and a brute-force correlative matching step selects the most suitable transformation. The discrete cross-correlation between the masked S1 and each offset version of S2 is computed efficiently in the Fourier domain using the FFT.
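The translational part of such a correlative search can be sketched with the correlation theorem. This is a simplified illustration, not the authors' implementation: it assumes the masks have already been applied, searches integer pixel shifts only (candidate rotations would be handled by repeating the search for rotated versions), and treats the shift as circular, so scans are assumed to be zero-padded enough to avoid wrap-around. The function name is hypothetical.

```python
import numpy as np

def correlative_translation(ref, moving):
    """Integer (row, col) shift s such that ref ~= np.roll(moving, s), via FFT cross-correlation.

    Correlation theorem: corr = IFFT(FFT(ref) * conj(FFT(moving))) evaluates the
    cross-correlation for all circular shifts at once.
    """
    corr = np.fft.ifft2(np.fft.fft2(ref) * np.conj(np.fft.fft2(moving))).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Map peak indices to signed shifts in [-N/2, N/2).
    return tuple(int(p) if p < n // 2 else int(p) - n for p, n in zip(peak, corr.shape))
```

Evaluating all translations with one FFT pair costs O(N log N) per candidate rotation, instead of O(N) per candidate translation in a direct search.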

Building on [85], Weston et al. [86] decouple the search for the rotation and translation components, which reduces the computational complexity of correlative scan matching. First, the optimal rotation angle is found by cross-correlating the masked scans in polar coordinates. This angle is then fixed in the subsequent correlative search for the translation. Separating the two searches significantly reduces the computational load, at the cost of only a minimal decline in performance compared to [85].
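The rotation step works because, in a polar scan, a rotation of the sensor is a circular shift along the azimuth axis. The sketch below correlates two polar scans along azimuth only, summing the correlation over range bins; the function name is hypothetical, and the sign convention of the returned angle depends on whether azimuth indices increase clockwise or counter-clockwise for a given sensor.

```python
import numpy as np

def estimate_rotation_polar(ref_polar, moving_polar):
    """Rotation between two polar scans (azimuth x range) as a circular azimuth shift, in radians."""
    n_az = ref_polar.shape[0]
    # FFT along azimuth only; a sensor rotation is a circular shift along this axis.
    spec = np.fft.fft(ref_polar, axis=0) * np.conj(np.fft.fft(moving_polar, axis=0))
    corr = np.fft.ifft(spec, axis=0).real.sum(axis=1)  # correlation per azimuth lag
    shift = int(np.argmax(corr))
    if shift >= n_az // 2:
        shift -= n_az  # signed shift
    return shift * 2 * np.pi / n_az
```

After de-rotating one scan by the estimated angle, only the translation remains to be searched, for example with a 2D correlation in Cartesian coordinates.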

An alternative way to predict ego-motion is to extract keypoints directly from the data. Barnes et al. [87] implemented a U-Net encoder–decoder architecture with two decoder branches that predict keypoint locations, keypoint weights, and descriptors. Keypoints with the most similar descriptors are matched across the two frames, and the transformation is computed from the matched keypoints using Singular Value Decomposition (SVD). Notably, both the keypoint matching and the pose estimation are differentiable, so the whole pipeline can be trained end to end with a loss on the estimated pose.
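A simplified sketch of differentiable keypoint matching followed by weighted SVD is given below, assuming 2D keypoints. Each source keypoint is matched to a softmax-weighted average of the target keypoints, based on descriptor cosine similarity. This illustrates the general idea rather than Barnes et al.'s exact formulation; the function name and the `temperature` parameter are hypothetical.

```python
import numpy as np

def soft_match_pose(kp_src, desc_src, kp_dst, desc_dst, weights, temperature=0.01):
    """2D pose from keypoints via soft descriptor matching and weighted SVD.

    Every operation is differentiable, so an autodiff version of this function
    could be trained end to end with a pose loss.
    """
    # Cosine similarity between L2-normalised descriptors.
    a = desc_src / np.linalg.norm(desc_src, axis=1, keepdims=True)
    b = desc_dst / np.linalg.norm(desc_dst, axis=1, keepdims=True)
    logits = (a @ b.T) / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)  # soft matching matrix (rows sum to 1)
    pseudo_dst = P @ kp_dst  # soft correspondence for each source keypoint
    # Weighted Kabsch/SVD step with the predicted keypoint weights.
    w = weights / weights.sum()
    mu_s, mu_d = w @ kp_src, w @ pseudo_dst
    H = (kp_src - mu_s).T @ (w[:, None] * (pseudo_dst - mu_d))
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    return R, mu_d - R @ mu_s
```

A lower temperature makes the soft matching approach hard nearest-neighbour matching, while a higher one spreads gradients over more candidate matches.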

Similar to [87], Burnett et al. [88] used a U-Net architecture to extract keypoints, weights, and descriptors from spinning radar scans, in their case to predict odometry across successive scans in an unsupervised manner. The authors embedded the network in a Generalized Expectation–Maximization (GEM) scheme that maximizes the evidence lower bound (ELBO), or equivalently minimizes the corresponding upper bound on the negative log-likelihood of the data:

$$-\ln p(\mathbf{z} \mid \boldsymbol{\theta}) \le \int q(\mathbf{x}) \ln \frac{q(\mathbf{x})}{p(\mathbf{x}, \mathbf{z} \mid \boldsymbol{\theta})} \, d\mathbf{x} \tag{5}$$

When deriving update rules for the parameters, only the upper bound from Equation (5) is optimized. Assuming a multivariate Gaussian posterior approximation $q(\mathbf{x}) = \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ and dropping constant terms, this results in the loss

$$V(q \mid \boldsymbol{\theta}) = \mathbb{E}_q\left[\phi(\mathbf{x}, \mathbf{z} \mid \boldsymbol{\theta})\right] + \frac{1}{2} \ln\left(\lvert \boldsymbol{\Sigma}^{-1} \rvert\right), \qquad \phi(\mathbf{x}, \mathbf{z} \mid \boldsymbol{\theta}) = -\ln p(\mathbf{x}, \mathbf{z} \mid \boldsymbol{\theta}) \tag{6}$$

where $\mathbf{x}$ is the latent trajectory, $\mathbf{z}$ represents the data measurements, and $\boldsymbol{\theta}$ represents the network parameters. During training, the joint factor is split into motion prior factors and measurement factors. For the motion prior factors, Burnett et al. apply a white-noise-on-acceleration prior presented by Anderson et al. [110], while the measurement factors are computed from the keypoints, weights, and descriptors learned by the U-Net. In the E-step of the GEM, the network parameters $\boldsymbol{\theta}$ are held fixed while Equation (6) is optimized for the posterior distribution $q(\mathbf{x})$; in the M-step, the posterior distribution is held fixed while Equation (6) is optimized for $\boldsymbol{\theta}$. A notable departure from [87] is the use of a moving window that incorporates more than two consecutive radar scans.
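To make the motion prior concrete, the following sketch evaluates the cost of a trajectory under a white-noise-on-acceleration (constant-velocity) prior in a plain vector space. This is a simplification: Burnett et al. formulate the prior on poses (Lie groups) following Anderson et al., whereas here the state is assumed to be a position and velocity in R^d with a scalar power spectral density `qc`; the function name is hypothetical.

```python
import numpy as np

def wnoa_prior_cost(positions, velocities, dt, qc):
    """Negative log-prior (up to a constant) of a trajectory under a WNOA prior.

    positions, velocities: (K, d) arrays sampled every dt seconds.
    """
    d = positions.shape[1]
    I = np.eye(d)
    # Constant-velocity state transition for the state [p, v].
    Phi = np.block([[I, dt * I], [np.zeros((d, d)), I]])
    # Process noise covariance induced by white-noise acceleration over dt.
    Q = qc * np.block([[dt**3 / 3 * I, dt**2 / 2 * I], [dt**2 / 2 * I, dt * I]])
    Q_inv = np.linalg.inv(Q)
    x = np.hstack([positions, velocities])  # (K, 2d)
    cost = 0.0
    for k in range(1, len(x)):
        e = x[k] - Phi @ x[k - 1]  # deviation from the constant-velocity prediction
        cost += 0.5 * e @ Q_inv @ e
    return cost
```

A constant-velocity trajectory incurs (numerically) zero prior cost, while accelerations are penalized; in the GEM scheme, such prior factors are combined with the learned measurement factors.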

Another way to estimate odometry with external sensors is scene flow prediction, i.e., predicting the three-dimensional motion field of a dynamic scene. Scene flow captures both the spatial and temporal aspects of static and dynamic objects in a perception scan of the environment. It is usually estimated with a cost volume layer [111], which aggregates information across disparities, or across disparities and optical flow, to refine the predictions. This layer computes matching costs between points at different disparities, enabling a network to capture fine details and to improve the accuracy of its scene flow predictions.
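A point-based cost volume can be reduced to its core as follows. For every point of the first frame, matching costs to its k nearest neighbours in the second frame are computed from their features. The negative squared feature distance used here is an assumed, simple cost; learned networks typically feed such costs, together with relative positions, into further layers to produce flow embeddings. The function name is hypothetical.

```python
import numpy as np

def point_cost_volume(xyz1, feat1, xyz2, feat2, k=4):
    """Matching costs between each point of frame 1 and its k nearest neighbours in frame 2.

    Returns neighbour indices (N1, k) and costs (N1, k); higher cost = better match.
    """
    # Pairwise squared Euclidean distances between the two point clouds.
    d2 = ((xyz1[:, None, :] - xyz2[None, :, :]) ** 2).sum(axis=-1)
    nn_idx = np.argsort(d2, axis=1)[:, :k]
    # Cost: negative squared distance between the features of each point and its neighbours.
    diff = feat1[:, None, :] - feat2[nn_idx]  # (N1, k, C)
    costs = -(diff ** 2).sum(axis=-1)
    return nn_idx, costs
```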

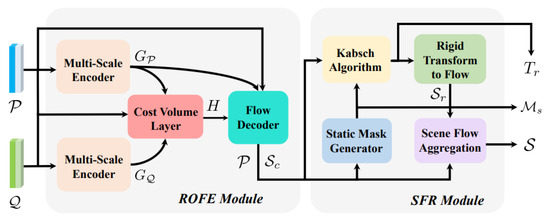

Ding et al. [89] introduced RaFlow, depicted in Figure 5, a deep learning network for estimating scene flow from 4D automotive radar detections. Two consecutive point clouds are first processed in a Radar-Oriented Flow Estimation module. A Static Flow Refinement module, which employs the Kabsch algorithm [108], then estimates the vehicle's ego-motion, and this ego-motion is in turn used to determine the scene flow of dynamic detections. The ego-motion thus enters the training as one loss term, while the overall objective is to determine the complete scene flow.

Figure 5.

Overview of RaFlow [89]. Two point clouds are input and first encoded by a multi-scale encoder. A cost volume layer combines the point clouds and their features to approximate the scene flow of the entire scene in a flow decoder module. An additional static mask generator produces a mask that filters out dynamic objects, which could otherwise corrupt the ego-motion estimate. Finally, the Kabsch algorithm is applied to estimate the ego-motion, and this transformation is used to refine the flow of the rest of the scene, yielding the final scene flow. Reproduced with permission from [89].

Lu et al. [90] presented 4DRO-Net, an approach for predicting odometry from 4D radar data. It employs a coarse-to-fine hierarchical optimization strategy based on sliding-window estimation. PointNet-style feature encoders [112] extract separate features from the 4D radar data, which are fused into point features and a global feature for each scan. The global features are used to generate an initial pose estimate, while the point features feed a pose regression module with a velocity-aware attentive cost volume layer between consecutive scaled point features. The resulting pose correction is applied to the initial transformation, yielding the final odometry between the two point clouds as well as ego-transformations at each investigated scale.

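Finally, Almalioglu et al. [91] implemented Milli-RIO, a radar-based odometry method based on point cloud alignment. The normal distributions transform (NDT) [40] is used within an unscented Kalman filter (UKF) for point cloud alignment with a sophisticated point matching procedure, and a recurrent neural network (RNN) in the form of an LSTM layer serves as the motion model. The state of the system is given as

$$\mathbf{x}_t = \left[\mathbf{p}_t^{\top}, \mathbf{q}_t^{\top}, \mathbf{v}_t^{\top}, (\mathbf{b}^{\omega}_t)^{\top}\right]^{\top} \tag{7}$$

where $\mathbf{x}_t$ is the state, $\mathbf{p}_t$ the position, $\mathbf{q}_t$ the rotation, $\mathbf{v}_t$ the velocity, and $\mathbf{b}^{\omega}_t$ the bias of the angular velocity at time $t$. Assuming a transition function $f$ for the sensor motion model and a constant bias of the angular velocity, the system state between consecutive scans is given by

$$\left[\mathbf{p}_{t+1}^{\top}, \mathbf{q}_{t+1}^{\top}, \mathbf{v}_{t+1}^{\top}\right]^{\top} = f\left(\mathbf{p}_t, \mathbf{q}_t, \mathbf{v}_t\right), \qquad \mathbf{b}^{\omega}_{t+1} = \mathbf{b}^{\omega}_t \tag{8}$$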

A bidirectional LSTM with 256 hidden units is used to approximate the transition function in Equation (8); the bidirectional design is chosen to capture both forward and backward dependencies of the motion model. The network is trained with ground-truth trajectories, and the predicted state is fed into the UKF.
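Inside a UKF, the motion model is applied to a set of sigma points rather than to the mean alone. The sketch below shows this unscented transform (prediction only, no NDT measurement update) with the common scaled parameters `alpha`, `beta`, `kappa`; the callable `f` stands in for the learned LSTM motion model and is an assumption of this illustration, as is the function name.

```python
import numpy as np

def unscented_transform(mean, cov, f, alpha=1e-3, beta=2.0, kappa=0.0):
    """Propagate a Gaussian (mean, cov) through a nonlinear function f with sigma points."""
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)  # matrix square root, S @ S.T = (n + lam) * cov
    sigma = np.vstack([mean, mean + S.T, mean - S.T])  # (2n + 1, n) sigma points
    wm = np.full(2 * n + 1, 0.5 / (n + lam))  # mean weights
    wc = wm.copy()                            # covariance weights
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + (1 - alpha**2 + beta)
    y = np.array([f(s) for s in sigma])  # propagate each sigma point
    y_mean = wm @ y
    dy = y - y_mean
    y_cov = (wc[:, None] * dy).T @ dy
    return y_mean, y_cov
```

For a linear `f`, the transform reproduces the exact Gaussian propagation, which makes a convenient sanity check; the benefit appears for nonlinear motion models such as a learned network.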

As these examples show, deep learning methods for radar-based odometry focus on either spinning or automotive radar data, depending on the available representation, and their processing steps and implementations differ accordingly.

For automotive radar, the data are generally represented as a sparse point cloud of detections, with RCS and Doppler values as additional per-detection features. The trend for automotive radars is to predict the flow of all detections in a scene and to derive the ego-motion from the stationary ones. A variety of layers, including PointNet-style feature encoders as well as linear, warping, and cost volume layers, are used to approximate this transformation.

For spinning radar data, the focus is on identifying relevant features and keypoints in image-like radar scans. These models can operate in polar or Cartesian space, taking advantage of the denser representation of spinning radar. CNNs and transformer architectures are used for spatial feature extraction, while LSTM and GRU layers establish a temporal connection between the spatial features.

For both radar setups, the focus is still on finding a 2D transformation matrix $\mathbf{T} \in SE(2)$. Most papers also use a masking module that filters out irrelevant matches or features. Only recent models use 4D automotive radars to estimate a 3D ego-transformation matrix $\mathbf{T} \in SE(3)$.

5.2. Radar Based Loop Closure

Deep learning methods have gained significant prominence in the domain of loop closure detection. They are commonly integrated into existing localization frameworks, often in conjunction with components such as a Vector of Locally Aggregated Descriptors (VLAD) layer.

The VLAD layer, as described by Arandjelovic et al. [113], summarizes the local features of an input into a compact representation. First, local features are extracted from the input data. These features are then grouped into clusters using a clustering algorithm such as k-means, which yields a dictionary of so-called “visual words”. Each local feature is assigned to the visual word it is closest to under a chosen distance metric, such as the cosine similarity or the Euclidean distance. The residuals between the features and their assigned visual words are then accumulated per visual word and normalized. The resulting compact descriptor encodes the distribution of these residuals across the visual words; its dimension is the product of the number of visual words in the dictionary ($C$) and the dimension of the local features ($D$), i.e., $C \times D$.
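The aggregation step can be sketched in NumPy as follows, assuming the cluster centres (visual words) have already been obtained, e.g., by k-means on training features, and using the Euclidean distance for the assignment. The intra-normalization per visual word followed by global L2 normalization is one common variant; the function name is illustrative.

```python
import numpy as np

def vlad(local_feats, centers):
    """Aggregate N local features (N, D) with C visual words (C, D) into a C*D descriptor."""
    # Hard-assign each feature to its nearest visual word (Euclidean distance).
    d2 = ((local_feats[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
    assign = np.argmin(d2, axis=1)
    C, D = centers.shape
    v = np.zeros((C, D))
    for c in range(C):
        members = local_feats[assign == c]
        if len(members):
            v[c] = (members - centers[c]).sum(axis=0)  # accumulated residuals
    # Intra-normalisation per visual word, then global L2 normalisation.
    norms = np.linalg.norm(v, axis=1, keepdims=True)
    v = np.divide(v, norms, out=np.zeros_like(v), where=norms > 0)
    v = v.ravel()
    return v / max(np.linalg.norm(v), 1e-12)
```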

Building on this concept, the NetVLAD layer [114] makes the aggregation trainable by replacing the hard assignment with a differentiable soft assignment, whose parameters and cluster centres are learned end to end together with the feature extractor. NetVLAD is often trained with a margin-based loss, such as a triplet loss [115] or a contrastive loss [116], which encourages meaningful distances in the learned descriptor space.
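The soft assignment can be sketched as a forward pass in NumPy. Here `W`, `b`, and `centers` would be learned by backpropagation in a real network; the sketch only evaluates the layer, and the function name is hypothetical.

```python
import numpy as np

def netvlad(local_feats, centers, W, b):
    """NetVLAD-style aggregation with soft assignment.

    local_feats: (N, D); centers: (C, D); W: (C, D) and b: (C,) parametrise the
    soft-assignment logits.
    """
    logits = local_feats @ W.T + b  # (N, C)
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    a = np.exp(logits)
    a /= a.sum(axis=1, keepdims=True)  # soft assignment of each feature to each cluster
    resid = local_feats[:, None, :] - centers[None, :, :]  # (N, C, D) residuals
    v = (a[:, :, None] * resid).sum(axis=0)  # (C, D) weighted residual sums
    v /= np.maximum(np.linalg.norm(v, axis=1, keepdims=True), 1e-12)  # intra-normalisation
    v = v.ravel()
    return v / max(np.linalg.norm(v), 1e-12)
```

Choosing `W = 2 * alpha * centers` and `b = -alpha * ||centers||^2` makes the logits equal to `-alpha * ||x - c_k||^2` up to a per-feature constant, so for large `alpha` the soft assignment approaches the hard VLAD assignment; this is a natural way to initialise the layer.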

$$\mathcal{L}_{\text{triplet}} = \max\left(\lVert A - P \rVert_2^2 - \lVert A - N \rVert_2^2 + m,\; 0\right) \tag{9}$$

Equation (9) represents a standard triplet loss, where $A$ refers to the anchor or query descriptor, $P$ to a positive descriptor from a location physically close to the query, and $N$ to a negative descriptor from a location physically far away from the query; $m$ represents the margin. Positives are typically chosen as locations within 5 m of the query, and negatives as locations more than 15 m away from the query.
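The loss and the distance-based selection of positives and negatives can be sketched as follows. The 5 m and 15 m thresholds follow the text, while the margin value, the optional cosine distance, and the function names are illustrative assumptions; practical implementations usually add hard-negative mining over batches.

```python
import numpy as np

def _sq_euclidean(u, v):
    return float(np.sum((u - v) ** 2))

def _cosine_distance(u, v):
    return float(1.0 - u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def triplet_loss(anchor, positive, negative, margin=0.5, metric="euclidean"):
    """max(d(A, P) - d(A, N) + margin, 0) for a single triplet of descriptors."""
    dist = _cosine_distance if metric == "cosine" else _sq_euclidean
    return max(dist(anchor, positive) - dist(anchor, negative) + margin, 0.0)

def mine_pairs(positions, query_idx, pos_radius=5.0, neg_radius=15.0):
    """Indices of positives (closer than pos_radius m) and negatives (farther than neg_radius m)."""
    d = np.linalg.norm(positions - positions[query_idx], axis=1)
    pos = np.where((d < pos_radius) & (np.arange(len(d)) != query_idx))[0]
    neg = np.where(d > neg_radius)[0]
    return pos, neg
```

Places between the two radii are neither positives nor negatives, which avoids penalising descriptors for ambiguous, partially overlapping scans.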

Cai et al. [92] combined a deep spatial–temporal encoder with a dynamic point removal scheme for pre-processing and an RCS-based re-ranking score for post-processing; the architecture is shown in Figure 6. The filtered representation is passed through a NetVLAD layer and optimized with a triplet loss to generate a distinctive scene descriptor. Finally, RCS re-ranking matches scenes with similar RCS values, filtering out non-relevant stationary features, and rejects a place as a potential loop closure if the RCS histograms of a scan and the scan at a stored keypose are too dissimilar. The authors tested this method on the nuScenes dataset.
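The re-ranking idea can be sketched with RCS histograms compared via the Kullback–Leibler divergence, which the figure caption below names as the criterion. The bin edges, the small probability floor that keeps the divergence finite, the direction KL(query || candidate), and the function names are assumptions of this sketch; the rejection threshold is omitted.

```python
import numpy as np

def rcs_histogram(rcs, bins):
    """Normalised RCS histogram with a small floor so that the KL divergence stays finite."""
    h, _ = np.histogram(rcs, bins=bins)
    h = h.astype(float) + 1e-6
    return h / h.sum()

def kl_divergence(p, q):
    return float(np.sum(p * np.log(p / q)))

def rerank_by_rcs(query_rcs, candidate_rcs, bins):
    """Reorder retrieved candidates by the KL divergence of their RCS histograms to the query."""
    hq = rcs_histogram(query_rcs, bins)
    scores = np.array([kl_divergence(hq, rcs_histogram(c, bins)) for c in candidate_rcs])
    return np.argsort(scores), scores
```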

Figure 6.

Similar place retrieval with the AutoPlace model [92]. The input is a 2D point cloud together with the radial velocity and RCS of each point. First, the point cloud is filtered to eliminate moving targets. The filtered point cloud is then processed by a spatial–temporal encoder comprising CNNs as spatial encoders and LSTMs as temporal encoders. The final representation is fed through a NetVLAD layer, which aggregates the essential features and spatial relationships into a global descriptor. The similar places retrieved with the NetVLAD descriptors are then re-ranked based on the Kullback–Leibler divergence between their RCS histograms. This process improves the model's ability to differentiate scenes based on radar features.

Suaftescu et al. [93] employed a VGG-16 backbone with a NetVLAD layer to extract a rotation-invariant global descriptor from the RA map of a spinning radar. The approach exploits the polar representation of the scan, using circular padding, anti-aliasing blurring, and azimuth-wise max-pooling to make the descriptor invariant to rotation. The network is optimized with a triplet loss, which increases the separation between descriptors of different places and aims to extract robust features for radar-based place recognition. Building on this work, Gadd et al. [94] created a contrast-enhanced distance matrix between the embeddings of places along reference and live trajectories; place recognition is then performed through a nearest-neighbour search on these distance matrices.
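Why circular padding combined with azimuth-wise max-pooling yields rotation invariance can be shown with a toy example, which is not the VGG-16 network of [93] and omits anti-aliasing blurring. A sensor rotation circularly shifts the azimuth rows of a polar scan; a convolution that wraps around the azimuth axis shifts its response accordingly, and the maximum over azimuth removes the shift. The kernels and the function name are hypothetical.

```python
import numpy as np

def rotation_invariant_descriptor(polar, kernels):
    """Toy rotation-invariant descriptor for a polar scan of shape (n_azimuth, n_range).

    Each kernel of shape (k_az, n_range) is applied as a convolution that wraps
    around the azimuth axis (circular padding), followed by max-pooling over azimuth.
    """
    n_az = polar.shape[0]
    feats = []
    for kern in kernels:
        k = kern.shape[0]
        pad = k // 2
        # Circular padding: copy the last/first rows to the other end of the azimuth axis.
        padded = np.concatenate([polar[-pad:], polar, polar[:pad]], axis=0) if pad else polar
        response = np.array([np.sum(padded[i:i + k] * kern) for i in range(n_az)])
        feats.append(response.max())  # azimuth-wise max pooling
    return np.array(feats)
```

Without the circular padding, rows near the azimuth wrap-around would see zero padding, and the response, and hence the descriptor, would change with the heading of the vehicle.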

In subsequent work, Gadd et al. [95,117] focused on unsupervised radar place recognition, using an embedding learning approach that separates the features of distinct instances while keeping the features of augmented versions of the same instance invariant. With a VGG-19 backbone as the front end, they extract local features that should be similar regardless of the applied augmentation. As in [94], difference matrices are created for localization based on a nearest-neighbour search.
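The matrix-based localization step can be illustrated with a plain Euclidean distance matrix between live and reference embeddings and a row-wise nearest-neighbour search. The contrast enhancement used by Gadd et al. is omitted, and the function name is hypothetical.

```python
import numpy as np

def localise_by_nearest_neighbour(live_emb, ref_emb):
    """Index of the closest reference embedding for each live embedding.

    Builds the (n_live, n_ref) Euclidean distance matrix via the expansion
    ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b and takes the row-wise minimum.
    """
    sq = (live_emb**2).sum(1)[:, None] + (ref_emb**2).sum(1)[None, :] - 2 * live_emb @ ref_emb.T
    dist = np.sqrt(np.maximum(sq, 0.0))  # clip tiny negative values from rounding
    return dist.argmin(axis=1), dist
```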

Yuan et al. [97] used a generative model, namely a variational autoencoder (VAE) [118], to build a place recognition method that incorporates uncertainty when comparing positive sample pairs. The VAE disentangles the feature representation into the variance of prediction-irrelevant uncertainty sources and a semantically invariant descriptor. For negative pairs, the distance between the semantically invariant descriptors is maximized. For positive pairs, a sample is drawn from a Gaussian distribution with the mean and variance found by the VAE and added to the descriptor to take the uncertainty into account, which is especially relevant for highly noisy radar scans. The uncertainty also enables consistent map maintenance, as samples generated with high uncertainty compared to the existing map data can be rejected.
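Sampling with a predicted mean and variance is usually implemented with the standard VAE reparameterization trick, sketched below. Whether Yuan et al. use exactly this formulation is not stated in the text; the function names and the averaged-variance uncertainty score are hypothetical.

```python
import numpy as np

def sample_descriptor(mu, log_var, rng):
    """Reparameterised sample z = mu + sigma * eps with eps ~ N(0, I).

    mu: descriptor mean; log_var: predicted log-variance (large for uncertain scans).
    In an autodiff framework, gradients flow through mu and log_var.
    """
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def mean_uncertainty(log_var):
    """Scalar uncertainty score, e.g. for rejecting uncertain scans during map maintenance."""
    return float(np.mean(np.exp(log_var)))
```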

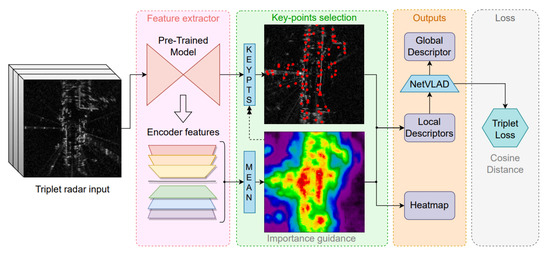

Usuelli et al. [96] extended the work of Burnett et al. [88] by sampling local descriptors at relevant keypoints to perform place recognition, as shown in Figure 7. These descriptors are then processed by a NetVLAD layer adapted for point-wise global localization, originally developed for a lidar approach [119]. They also used the cosine distance in the triplet loss to increase the discriminative power of the extracted features.

Figure 7.

Training pipeline of RadarLCD for loop closure detection [96]. The model adopts the keypoint extraction and encoding structure from [87], with a modification in which the mean of the descriptor map is computed to generate an importance guidance map. This importance guidance map is then sampled at the keypoint locations and processed through a NetVLAD layer to generate a global descriptor. The pipeline thus combines keypoint information with an importance-guided global representation for effective radar-based loop closure detection. Reproduced with permission from [96].

Unlike prior works, Wang et al. [98] implemented RadarLoc, which takes a single Cartesian image of a spinning radar scan as input and directly regresses a global pose, trained with ground-truth (GT) poses. The convolutional network comprises a self-attention masking module, a DenseNet [120] feature extractor, and two separate multi-layer perceptron (MLP) heads that determine the translational and rotational components independently. Within the DenseNet module, a generalized max-pooling layer [121] is used as the pooling mechanism to extract global descriptors.

Adolfsson et al. [122] presented a loop closure verification scheme for spinning radar data that employs two logistic regression classifiers. Although this method is not based on deep learning, it is relevant to state-of-the-art research. Hand-crafted CFEAR [36] features are extracted from a Cartesian Bird's Eye View (BEV) radar scan as global descriptors, which are used to identify loop closure candidates. An alignment measure between the two scans is then applied, extracting various features from the joint point clouds, including joint and separate entropy, radar peak detections, and a measure of overlap. These features are the input to a first logistic classifier that estimates the similarity of the two point clouds based on their overlap. Finally, this similarity is combined with the odometry-based similarity of the point clouds and the global descriptor distance in a second logistic regression classifier that verifies the loop closure candidate. This approach increases the robustness of loop closure verification for spinning radar data.
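A logistic regression classifier of this kind can be fitted with plain gradient descent on the binary cross-entropy. In the usage check, the two features (an overlap-like score and a descriptor-distance-like score) and the data are synthetic and purely illustrative; they are not the CFEAR-based features of [122], and the function names are hypothetical.

```python
import numpy as np

def fit_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Gradient-descent logistic regression: p(loop | x) = sigmoid(w . x + b)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        grad = p - y  # gradient of the binary cross-entropy w.r.t. the logits
        w -= lr * X.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b

def predict_proba(X, w, b):
    """Probability that each candidate (row of X) is a true loop closure."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))
```

A candidate would be accepted as a verified loop closure if its predicted probability exceeds a chosen threshold, which trades recall against the risk of false loop closures.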

Radar-based ego-localization methods fall into two categories: methods that train a model to generate distinctive place descriptors, and methods that predict a global pose from a single scan. Descriptor-based models are often trained end to end with a NetVLAD layer. To some extent, the same modules used in the odometry methods, including CNNs and LSTMs for spatial and temporal feature extraction, have been integrated into the place recognition methods. Similar and dissimilar places are sampled from the training data so that the descriptors of similar places are close under the distance metric, while the descriptors of physically distant places are far apart. As a result, performance depends strongly on the chosen distance criteria for similar and dissimilar places, as well as on the investigated dataset.

The other main approach to localization predicts the ego-pose directly from a single local scan. These methods have been researched far less than bag-of-words (BOW) approaches: since they do not rely on any map, they are highly susceptible to overfitting. However, such methods are more common when an additional sensor is added to the system.

5.3. Fusion and Cascading Architectures

5.3.1. Vision Fusion

In many respects, vision has the greatest fusion potential with radar data, as radar provides cameras with much-needed depth perception while remaining relatively cheap compared to lidar. Conversely, vision provides radar with much-needed spatial context under “normal” lighting conditions [5,9].

Yu et al. [123] implemented a multi-modal deep localization scheme with two main branches: an image branch that encodes a camera image with CNN blocks, and a point cloud branch with a PointNet [112] backbone. The two branches are fused by establishing spatial correspondences between points and pixels, extracting point-wise image features, and fusing the features of each image encoder block with the corresponding point cloud block; the result is encoded into the point cloud feature. Finally, a NetVLAD layer is applied to the last fusion layer to train the network.

Almalioglu et al. [100] fuse camera data with range sensor data, namely radar or lidar, in GRAMME, a self-supervised, geometry-aware multimodal ego-motion estimation network for all-weather scenarios. The network consists of several modules that estimate the ego-motion of a vehicle under various weather and lighting conditions. A DepthNet U-Net produces depth images from camera inputs, and a VisionNet predicts the ego-motion from consecutive camera frames. A MaskNet produces input masks from the range inputs, while a RangeNet predicts the ego-motion from the range sensor input. The outputs of RangeNet and VisionNet are fused in a FusionNet module to predict the ego-motion. Finally, the results of DepthNet, MaskNet, and FusionNet are combined in a spatial transformer to train the model in a fully self-supervised fashion.

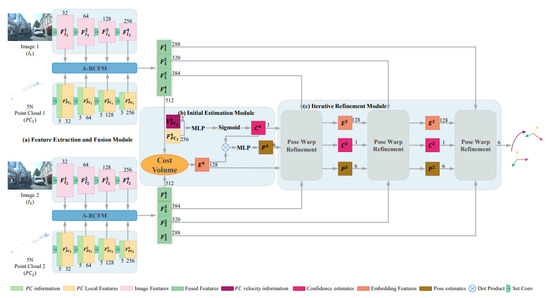

Zhuo et al. [124] implement 4DRVO, a camera–radar fusion model for visual–radar odometry with 4D radar data, shown in Figure 8. Features are fused at multiple scales: camera features are computed with a pyramid network, and radar features are computed in a pyramid style with Radar-PointNet++, an extension of PointNet++ [125] that incorporates RCS and Doppler information in addition to the position of each detection. At each scale, the radar features are projected onto the feature map of the RGB image using the extrinsic camera–radar calibration matrix. The projected radar features and the image feature map are then fused by a deformable-attention-based spatial cross-attention mechanism, in which the radar features identify relevant objects in the RGB feature map. A cost volume layer establishes associations between consecutive point clouds, and a velocity-guided point confidence estimation assigns a confidence to each radar detection, essentially learning to mask out non-stationary detections. MLPs generate an odometry estimate at each scale. Lastly, a Pose Warp Refinement Module fuses the embeddings, confidences, and pose estimates of the current and the next scale to further refine the pose estimate. According to the authors, 4DRVO predicts the odometry of the vehicle more accurately than radar-only or camera-only approaches and even outperforms a lidar-only approach, highlighting the value of 4D radar data.
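The projection of radar detections onto an image feature map can be sketched with a pinhole camera model. This assumes a 4×4 radar-to-camera extrinsic matrix and a 3×3 intrinsic matrix, and uses nearest-pixel feature lookup where learned models typically use bilinear sampling; all names and the example calibration are hypothetical.

```python
import numpy as np

def project_to_image(points_radar, T_cam_radar, K):
    """Project 3D radar points into pixel coordinates with a pinhole model.

    T_cam_radar: 4x4 radar-to-camera extrinsics; K: 3x3 camera intrinsics.
    Returns (u, v) pixel coordinates and a mask of points in front of the camera.
    """
    homo = np.hstack([points_radar, np.ones((len(points_radar), 1))])
    cam = (T_cam_radar @ homo.T).T[:, :3]  # points in the camera frame
    in_front = cam[:, 2] > 0
    uvw = cam @ K.T
    depth = np.where(in_front, uvw[:, 2], 1.0)  # avoid dividing by z <= 0
    uv = uvw[:, :2] / depth[:, None]
    return uv, in_front

def sample_features(feature_map, uv, valid):
    """Nearest-pixel lookup of image features (H, W, C) at the projected radar points."""
    H, W, C = feature_map.shape
    cols = np.round(uv[:, 0]).astype(int)
    rows = np.round(uv[:, 1]).astype(int)
    ok = valid & (cols >= 0) & (cols < W) & (rows >= 0) & (rows < H)
    out = np.zeros((len(uv), C))
    out[ok] = feature_map[rows[ok], cols[ok]]
    return out, ok
```

The sampled image features can then be concatenated with, or attended to by, the per-detection radar features; at coarser pyramid levels the intrinsics would be scaled to the feature-map resolution.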

Figure 8.

4DRVO-Net [124] includes three main components. The first is a multi-scale extraction block for radar and visual data with an adaptive 4D radar–camera fusion module (A-RCFM) fusion block at each scale. Image features and 4D point features are extracted from the vision and 4D radar data respectively. The second is an initial pose estimation module that takes the two 4D point clouds at the coarsest scale and their features to produce a confidence score, embedding features and pose estimate. The final component is a pose refinement module that takes the confidences, embedding features, and predicted poses of a prior scale to progressively improve the estimates at each scale. Reproduced with permission from [124].

It is also possible to couple radars with other vision-based data. Tang et al. [99,126] used satellite imagery to perform scan matching between an artificial and a real radar scan. The authors implemented a generative adversarial network (GAN) [127] to convert a satellite image into an artificial radar image. Specifically, they used a conditional GAN (cGAN) to condition the artificial radar image on a prior satellite image. The training consists of two parts. The first part trains the cGAN to generate an artificial radar representation. The second part of the network estimates the translational and rotational components of the transformation between the artificial and the real scan. Since neither the spinning radar scan nor the overhead image contains altitude information, the height component of the transformation cannot be inferred. The network decouples the search for rotation and translation: the rotation angle is determined by the largest correlation in the Fourier domain between the original radar data and rotated versions of the artificial scan, and linear and convolutional layers then determine the translational offset. This approach requires an approximately correct ego-pose in order to retrieve the corresponding satellite image.
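To illustrate why the rotation search maps well onto the Fourier domain, the sketch below assumes both scans are given in polar form (azimuth × range). There, a rotation about the vertical axis becomes a circular shift along the azimuth axis, so the correlation for every candidate angle follows from a single FFT-based cross-correlation. This is a simplification of our own: Tang et al. correlate the real scan with explicitly rotated versions of the artificial scan, and both the cGAN generation and the translation regression are omitted. The function name is hypothetical.

```python
import numpy as np

def estimate_rotation_fft(scan_polar, synthetic_polar):
    """Estimate the yaw rotation between two polar scans of shape (azimuth, range).

    A rotation of the sensor is a circular shift along the azimuth axis, so the
    correlation for all candidate shifts is obtained at once in the Fourier domain.
    Returns (shift_bins, angle_deg) maximizing the correlation.
    """
    n_azimuth = scan_polar.shape[0]
    # Circular cross-correlation along azimuth: corr[k] = sum_i a[i] * b[(i + k) mod N]
    spec = np.conj(np.fft.fft(scan_polar, axis=0)) * np.fft.fft(synthetic_polar, axis=0)
    corr = np.fft.ifft(spec, axis=0).real.sum(axis=1)   # accumulate over all range bins
    shift = int(np.argmax(corr))
    return shift, shift * 360.0 / n_azimuth
```

For example, if the synthetic scan equals the real scan rolled by 37 of 400 azimuth bins, the function returns a shift of 37 bins, i.e. 33.3 degrees.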

5.3.2. Lidar Fusion

In recent years, many authors have used deep generative models to synthesize data of one modality from another. Radar-to-lidar translation is a frequent use case, since it allows expensive lidar sensors to be replaced by cheaper radars combined with a generative model. Such models perform not only translation between sensors, such as lidar to radar [128] or radar to lidar [101], but also translation between lidar representations under different weather conditions [129].

Based on generative modelling, Yin et al. [101] introduce a GAN to convert a spinning radar scan into a synthetic lidar map. Instead of sharing polar features, this network generates a synthetic lidar BEV image from a radar scan. The synthetic lidar output aims to remove the clutter in the radar scan while generating features similar to a lidar scan. The authors then use the synthetic lidar scan together with a prior map for odometry and ego-localization in a particle filter localization scheme. The odometry is estimated using point-to-plane ICP between consecutive synthetic lidar scans, and localization uses a metric based on the number of points matched to the map.
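For reference, the point-to-plane ICP objective used for the odometry step can be sketched in 2D, where planes reduce to lines, as a Gauss–Newton iteration. The sketch assumes known correspondences and precomputed target normals; a full ICP would re-associate each point with its nearest map neighbor in every iteration. It illustrates the generic technique and is not the implementation of Yin et al.; function names are hypothetical.

```python
import numpy as np

def rot2d(theta):
    """2x2 rotation matrix for angle theta [rad]."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def point_to_plane_icp_2d(src, dst, normals, iters=10):
    """Align src (N, 2) to dst (N, 2) by minimizing sum_i (n_i . (R p_i + t - q_i))^2.

    Correspondences src[i] <-> dst[i] are assumed known; normals (N, 2) are unit
    normals of the target surface at dst. Returns (R, t) with R @ p + t ~ target.
    """
    R, t = np.eye(2), np.zeros(2)
    for _ in range(iters):
        p = src @ R.T + t                             # source points under current estimate
        r = np.sum(normals * (p - dst), axis=1)       # signed point-to-line distances
        # Jacobian w.r.t. a small increment (dtheta, dtx, dty) applied on the left
        J = np.column_stack([
            -normals[:, 0] * p[:, 1] + normals[:, 1] * p[:, 0],
            normals[:, 0],
            normals[:, 1],
        ])
        delta, *_ = np.linalg.lstsq(J, -r, rcond=None)  # Gauss-Newton step
        dR = rot2d(delta[0])
        R, t = dR @ R, dR @ t + delta[1:]             # compose increment with current pose
    return R, t
```

Because the residual is measured only along the normal, points on a single flat wall can slide along it; the normals must span both directions for the full pose to be observable.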

Yin et al. [102] perform odometry on a prior lidar map with a known position using a differentiable Kalman-filter approach, shown in Figure 9. A Cartesian 2D radar scan and a lidar submap are fed through several U-Net architectures to extract a separate feature embedding for each sensor. The lidar embedding is rotated by a range of candidate angles and translated by a range of candidate offsets along the x and y axes. The difference tensor between the radar embedding and each transformed lidar embedding is split into patches, and each patch is fed through another network to obtain a patch-based difference. These differences are averaged, and a softmin is applied to generate marginal probability distributions over the candidates of each solution component, i.e., the rotation and the two translations. The model is trained with three loss functions. The first is a cross-entropy loss between the marginal probability distributions and a one-hot encoding of the ground-truth parameters. The second is the mean squared error between the ground-truth parameters and the parameters estimated from the marginal distributions and the corresponding candidate offsets. Finally, the third loss is based on a differentiable Kalman filter and balances the likelihood against a lower uncertainty in the posterior.
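One plausible reading of the probability step (a softmin over the grid of candidate offsets, marginalization along each dimension, and an expectation over the candidate values) is sketched below together with the cross-entropy and MSE losses. The array layout, the temperature parameter, and the function names are our assumptions; the patch network and the Kalman-filter loss are not shown.

```python
import numpy as np

def offsets_from_costs(costs, thetas, xs, ys, temperature=1.0):
    """Turn averaged differences over candidate offsets into marginals and estimates.

    costs: array (len(thetas), len(xs), len(ys)), one averaged difference per candidate.
    Returns ((p_theta, p_x, p_y), (theta, x, y)) with the expected offsets.
    """
    logits = -costs / temperature
    logits -= logits.max()                        # numerical stability
    joint = np.exp(logits)
    joint /= joint.sum()                          # softmin over all candidates
    p_theta = joint.sum(axis=(1, 2))              # marginalize out x and y
    p_x = joint.sum(axis=(0, 2))
    p_y = joint.sum(axis=(0, 1))
    estimate = (p_theta @ thetas, p_x @ xs, p_y @ ys)   # expectation over candidate offsets
    return (p_theta, p_x, p_y), estimate

def supervision_losses(marginals, estimate, gt_index, gt_value):
    """Cross-entropy w.r.t. one-hot ground-truth bins and MSE on the expected offsets."""
    ce = -sum(np.log(p[i] + 1e-12) for p, i in zip(marginals, gt_index))
    mse = float(np.mean((np.array(estimate) - np.array(gt_value)) ** 2))
    return ce, mse
```

Taking the expectation instead of the arg-min keeps the estimate differentiable with respect to the costs, which is what allows the regression loss to be trained end-to-end.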

Figure 9.

Overview of the RaLL architecture [102]. Three networks collectively generate a difference tensor across a predefined search space. Subsequently, a further network, referred to as the patch network, computes a single value for each combination of patch and candidate offset; these values can be collected in a single tensor. Probability distributions are then formed from this tensor along each dimension, and the pose offsets are generated from these distributions. The obtained pose offsets can be used within a Kalman-filter approach, complemented by IMU data. Reproduced with permission from [102].

In a similar fashion to the approaches presented in [101,102], Lisus et al. [103] use deep learning to localize a radar scan on a lidar map. Specifically, a U-Net is employed to extract a mask from the radar scan, similar to the methodology of [85]. Concurrently, a point cloud is extracted from the radar scan using the BFAR detector [130], and the mask values sampled at the extracted points serve as per-point weights. A central part of this method is a differentiable ICP backend, which matches the extracted points with a reference lidar map.
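The point-extraction and weighting steps can be illustrated with a BFAR-style detector applied along one azimuth of a polar scan. As we understand BFAR [130], its threshold is an affine function a·Z + b of a cell-averaging noise estimate Z; the parameter values, window sizes, and helper names below are hypothetical. The second helper simply reads the learned mask at the detected cells to obtain per-point ICP weights.

```python
import numpy as np

def bfar_detect(azimuth_power, a=1.0, b=0.1, n_train=8, n_guard=2):
    """Detect range bins along one azimuth whose power exceeds a * Z + b.

    Z is the mean power of the training cells on both sides of the cell under
    test, excluding n_guard guard cells next to it.
    """
    n = azimuth_power.shape[0]
    detections = []
    for i in range(n):
        lo = azimuth_power[max(0, i - n_guard - n_train): max(0, i - n_guard)]
        hi = azimuth_power[i + n_guard + 1: i + n_guard + 1 + n_train]
        train = np.concatenate([lo, hi])
        if train.size == 0:
            continue
        threshold = a * train.mean() + b          # affine threshold on the noise estimate
        if azimuth_power[i] > threshold:
            detections.append(i)
    return np.array(detections, dtype=int)

def point_weights(mask, azimuth_idx, range_idx):
    """Sample a learned mask (azimuth x range) at detected cells to weight them in ICP."""
    return mask[azimuth_idx, range_idx]
```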

Sun et al. [131] implement a GAN for moving object detection in lidar data. Although radar data are not used directly by the deep learning module, the Doppler velocity of the radar scan serves as a verification tool to ensure that dynamic objects have been correctly identified. Additionally, lidar data are used to filter ghost objects from the radar data.
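A common way to use Doppler for this kind of verification is a static-world consistency check: a stationary point observed at azimuth θ by a sensor moving with velocity v has radial velocity −v·u, with u = (cos θ, sin θ). The sketch below assumes a 2D sensor frame, no mounting offset, positive Doppler for receding targets, and a known ego-velocity; the tolerance and function name are hypothetical, and this is a generic illustration rather than the procedure of Sun et al.

```python
import numpy as np

def dynamic_mask(azimuth, doppler, ego_velocity, tol=0.5):
    """Flag detections whose Doppler velocity contradicts a static world.

    azimuth:      (N,) detection angles in the sensor frame [rad].
    doppler:      (N,) measured radial velocities [m/s], positive = moving away.
    ego_velocity: (vx, vy) of the sensor expressed in its own frame [m/s].
    """
    u = np.column_stack([np.cos(azimuth), np.sin(azimuth)])   # unit rays to detections
    expected = -(u @ np.asarray(ego_velocity, dtype=float))   # radial velocity if static
    return np.abs(doppler - expected) > tol
```

A detection flagged as dynamic by the lidar-based network would be confirmed when this mask is also true for the radar points on the same object.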

Yin et al. [104] perform joint place recognition of radar and lidar scans by employing a shared encoder–decoder architecture for both the polar representation of a radar scan and a lidar submap. The output features are transformed into a BEV representation, and a 2D FFT is applied to generate a final vector descriptor. The network is trained with a joint training scheme in which the anchor, positive, and negative samples of each training triplet can originate from different sensors.
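The role of the FFT can be seen in a small sketch, assuming the features lie on a polar grid (azimuth × range): a yaw rotation then shifts the grid circularly along the azimuth axis, and the magnitude of the 2D Fourier transform is unchanged by circular shifts. The function name and the normalization are our choices; the learned encoder–decoder is omitted.

```python
import numpy as np

def fft_descriptor(bev_polar):
    """Rotation-invariant descriptor from a polar feature map (azimuth x range).

    A yaw rotation circularly shifts the map along azimuth; the FFT magnitude is
    invariant to such shifts, since the shift is carried only by the discarded phase.
    Returns the flattened, L2-normalized magnitude spectrum.
    """
    mag = np.abs(np.fft.fft2(bev_polar))
    desc = mag.ravel()
    return desc / (np.linalg.norm(desc) + 1e-12)
```

Two scans of the same place taken with different headings therefore map to the same descriptor, which can then be compared with a simple Euclidean distance.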

Similar to the work by Yin et al. [104], the work by Nayak et al. [105] also leverages lidar submaps for radar-based localization on a lidar map. However, in contrast to [104], individual radar and lidar backbones are implemented using a Recurrent All-Pairs Field Transforms (RAFT) feature extractor [132] to generate a BEV feature representation and a unique descriptor for each sensor. The method uses a place recognition head for loop closure detection and a metric localization head to find the 3D pose on the lidar map. During training, the place recognition head is trained with a triplet loss between the current radar descriptor and a positive and a negative lidar submap, which enforces similar descriptors for the same place recorded with different sensors. Interestingly, adding a final NetVLAD layer did not improve localization results compared with the final CNN layers. The encoded positive lidar submap is further used in the metric localization head to predict the complete flow in the radar scene. Akin to [89], this method uses the correlation volume between the two BEV encodings as input to a GRU that predicts the scene flow. Using this flow and the localization head, the ego-pose of the system in the lidar map can be inferred from a single radar scan.
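The cross-modal triplet objective can be written compactly as a hinge loss on normalized descriptors, with a radar descriptor as the anchor and lidar-submap descriptors as the positive and negative samples. The margin value and the function name are hypothetical, and the exact distance function and margin used by Nayak et al. may differ.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.5):
    """Hinge triplet loss on L2-normalized descriptors.

    anchor:   radar descriptor of the query scan.
    positive: lidar-submap descriptor of the same place.
    negative: lidar-submap descriptor of a different place.
    The loss is zero once the negative is at least `margin` further away than the positive.
    """
    def normalize(v):
        v = np.asarray(v, dtype=float)
        return v / np.linalg.norm(v)

    a, p, n = normalize(anchor), normalize(positive), normalize(negative)
    d_pos = np.linalg.norm(a - p)
    d_neg = np.linalg.norm(a - n)
    return max(0.0, d_pos - d_neg + margin)
```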

Ding et al. [106] implement a cross-modal model using 4D automotive radar sensors, a lidar sensor, camera images, and IMU-based odometry for a supervised scene flow approach. The primary objective is to predict the complete scene flow of a scan, encompassing both the rigid ego-motion and the motion of other dynamic objects. Relevant stationary points of consecutive scans and corresponding weights are estimated from 4D radar point clouds processed through a backbone network consisting of set conv layers [133], an initial flow estimation head, and a motion segmentation head. Similar to [89], a singular value decomposition (SVD) is applied to the resulting coordinates to derive a rigid transformation matrix, which is supervised with the transformation obtained from the IMU sensor.
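The SVD step that turns weighted correspondences into a rigid transform is the classic weighted Kabsch (orthogonal Procrustes) solution, sketched below. It assumes corresponding point sets and non-negative weights, for instance static-point probabilities from a motion segmentation head; the function name is illustrative.

```python
import numpy as np

def weighted_rigid_transform(src, dst, w):
    """Weighted least-squares rigid transform with dst ~= R @ src + t (Kabsch via SVD).

    src, dst: corresponding points (N, d); w: non-negative per-point weights (N,).
    """
    w = np.asarray(w, dtype=float) / np.sum(w)
    mu_s = w @ src                                      # weighted centroids
    mu_d = w @ dst
    H = (src - mu_s).T @ ((dst - mu_d) * w[:, None])    # weighted cross-covariance (d x d)
    U, _, Vt = np.linalg.svd(H)
    D = np.eye(src.shape[1])
    D[-1, -1] = np.sign(np.linalg.det(Vt.T @ U.T))      # enforce a proper rotation (no reflection)
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t
```

Points with weight zero, such as detections on moving objects, have no influence on the estimated ego-motion.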

5.3.3. Cascading Architectures

Next, we discuss two exemplary methods that employ cascading techniques, in which the results of other models are reused, such as radar points annotated by object detection or labeling in a sensor fusion approach. These examples motivate the use of deep learning networks, e.g., as pre-processing networks that filter out irrelevant detections before a non-learning-based matching approach.

The work by Isele et al. [107] integrates semantic segmentation results from other sensors to categorize radar detections into different classes. The segmented radar detections are then filtered to retain only those belonging to relevant classes, and the annotated radar point clouds are aligned with NDT scan matching. Notably, deep learning is not applied directly to the radar data; instead, it enriches the radar features with semantic information derived from other sensors. This cascading methodology increases the discriminative power of radar data and thereby improves matching accuracy in scenarios where information from collaborating sensors is available.
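A minimal sketch of this cascade is given below: detections are kept only if their externally predicted class is in an allowed set, and an alignment is scored with a 2D normal distributions transform (NDT). The class names, cell size, and regularization are hypothetical, and the pose optimization that NDT scan matching performs on top of this score is omitted.

```python
import numpy as np

def filter_by_class(points, labels, keep):
    """Keep only radar detections whose semantic label (from another sensor) is in `keep`."""
    mask = np.isin(labels, list(keep))
    return points[mask]

def _cell_keys(points, cell):
    # Integer grid index of each point, used as a dictionary key
    return [tuple(k) for k in np.floor(points / cell).astype(int)]

def build_ndt(points, cell=2.0, min_pts=3, reg=1e-3):
    """NDT map: one Gaussian (mean, inverse covariance) per sufficiently occupied grid cell."""
    groups = {}
    for key, p in zip(_cell_keys(points, cell), points):
        groups.setdefault(key, []).append(p)
    ndt = {}
    for key, pts in groups.items():
        if len(pts) >= min_pts:
            pts = np.array(pts)
            cov = np.cov(pts.T) + reg * np.eye(points.shape[1])  # regularize flat cells (walls)
            ndt[key] = (pts.mean(axis=0), np.linalg.inv(cov))
    return ndt

def ndt_score(ndt, points, cell=2.0):
    """Sum of Gaussian likelihood terms for points in occupied cells (higher = better aligned)."""
    score = 0.0
    for key, p in zip(_cell_keys(points, cell), points):
        if key in ndt:
            mu, inv_cov = ndt[key]
            d = p - mu
            score += float(np.exp(-0.5 * d @ inv_cov @ d))
    return score
```

In use, the reference scan would be filtered with `filter_by_class`, turned into an NDT map, and the current scan's filtered points scored under candidate poses.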

Cheng et al. [134] use a GAN architecture to create synthetic point clouds from a range–Doppler (RD) map. The CNN-based generator creates a mask for the RD matrix, and the CNN-based discriminator is trained to distinguish between generated masks and ground-truth masks created from lidar and synchronous radar data. The resulting synthetic point cloud can be used for object detection or tracking, and in a SLAM approach for odometry estimation or ego-localization.
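Turning a predicted RD mask into detections is essentially an index-to-unit conversion, sketched below under the assumptions of centered Doppler bins and known range and velocity resolutions (both hypothetical parameters). An RD map alone carries no angle, so placing the detections in Cartesian space would additionally require angle estimation, which is omitted here.

```python
import numpy as np

def mask_to_points(mask, range_res, doppler_res, threshold=0.5):
    """Convert a range-Doppler mask (range bins x Doppler bins) into detections.

    Each cell with mask value above `threshold` becomes one detection
    (range [m], radial velocity [m/s]); the middle Doppler bin is assumed to be zero velocity.
    """
    r_idx, d_idx = np.nonzero(mask > threshold)
    n_doppler = mask.shape[1]
    ranges = r_idx * range_res
    velocities = (d_idx - n_doppler // 2) * doppler_res
    return np.column_stack([ranges, velocities])
```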

In fusion architectures, radar data are commonly used to generate denser synthetic lidar representations through techniques such as GANs or VAEs for odometry and ego-localization. Such approaches enable the use of odometry and localization methods tailored to denser lidar representations. However, generative networks operate on pre-processed radar representations, such as point clouds or scan representations. This pre-processing may discard relevant information from the raw representation, which is then replaced by synthetic data, potentially leading to inaccurate results.

5.4. Evaluation

In this subsection, we compare the introduced methods for the odometry and ego-localization tasks, grouped by radar setup, i.e., automotive radar and spinning radar. We compare these methods not only in terms of accuracy but also highlight the importance of the hardware used by analyzing the inference speed and the device on which each model was tested. We also present relevant classical approaches to assess how the deep learning models perform relative to established methods. All values are taken directly from the cited papers.

Finding a common ground for a fair comparison among the mentioned methods poses considerable challenges. To ensure a fair evaluation, methods should ideally be tested on the same dataset and under identical conditions. However, achieving this is complicated due to the inherent differences in datasets and conditions across various tasks, particularly when comparing methods designed for spinning radar sensors with those tailored for automotive radar datasets and vice versa.

Moreover, assessing inference speed is crucial for both odometry and localization tasks, especially in real-time applications like autonomous driving. Ideally, the computation time of the model should not be the primary bottleneck; instead, the limiting factor should be the data acquisition rate of the perception sensor, such as the rate at which radar data are generated. This implies that fair comparisons require testing odometry or localization models on identical hardware.

However, practical challenges hinder the attainment of this ideal scenario. Data generation speeds can vary not only between different types of sensors (e.g., automotive vs. spinning) but even among sensors of the same type. For automotive sensors, additional pre-processing steps may introduce significant differences in data generation speeds between differently configured sensors or models. Standardizing to a single sensor is also impractical, as different applications demand varying sensor specifications, leading to differences in resolution, accuracy, and price.

Furthermore, the computational hardware of a system can constrain model choices. Implementing a large model may not be feasible if the system lacks the capability to support modern GPUs due to space or price limitations. Choosing an appropriate model that aligns with the environment of the system adds another layer of complexity, making the comparison of similar methods across different environments challenging. Despite these challenges, efforts to establish standardized evaluation metrics and practices will contribute to more meaningful comparisons in the field.

5.4.1. Odometry

In point-matching or pose estimation evaluations, determining the translational and rotational components of the pose difference between predicted and true poses is a fundamental aspect. The Absolute Trajectory Error (ATE) serves as a common metric, representing the average error of the translational and rotational components. Typically measured in meters (m) for translation and degrees (deg) for rotation, the ATE is calculated as follows for a pair of poses:

$$\mathrm{ATE}_{\mathrm{trans}} = \lVert \hat{\mathbf{t}} - \mathbf{t} \rVert_2, \qquad \mathrm{ATE}_{\mathrm{rot}} = \arccos\left(\frac{\operatorname{tr}\left(\mathbf{R}^{\top}\hat{\mathbf{R}}\right) - 1}{2}\right) \qquad (10)$$

In Equation (10), $\hat{\mathbf{t}}$ and $\mathbf{t}$ are the predicted and true translational components, and $\hat{\mathbf{R}}$ and $\mathbf{R}$ are the predicted and true rotational components, respectively. The ATE condenses these individual components into one translational and one rotational error measure.
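The per-pose errors can be computed as below, assuming the common definitions of the translational error as the Euclidean norm of the translation difference and the rotational error as the angle of the relative rotation, arccos((tr(R^T R_hat) - 1) / 2). The function names are ours.

```python
import numpy as np

def pose_errors(R_hat, t_hat, R, t):
    """Translational error [m] and rotational error [deg] between a predicted and a true pose."""
    e_t = np.linalg.norm(t_hat - t)
    cos_angle = (np.trace(R.T @ R_hat) - 1.0) / 2.0
    e_r = np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0)))  # clip guards against round-off
    return e_t, e_r

def mean_pose_errors(pred, gt):
    """Average per-pose errors over a trajectory; pred and gt are lists of (R, t) tuples."""
    errs = np.array([pose_errors(Rh, th, R, t) for (Rh, th), (R, t) in zip(pred, gt)])
    return errs.mean(axis=0)
```

Averaging over all pose pairs of a trajectory yields the translational and rotational values that are typically reported.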

However, in odometry estimation, the global drift over a certain spatial extent is often more critical than small pose differences between consecutive frames, so the ATE may not be the most appropriate metric. If a network learns to predict the transformation between two scans, it is more relevant to know how far its estimate has drifted after some spatial or temporal extent than how it performs between consecutive scans. This is especially relevant for slow-moving systems with high data generation frequencies. An example would be the Oxford radar dataset, which operates at 4 Hz. In such cases, small pose differences may not accurately capture the global drift over a significant range.

To address this concern, the KITTI evaluation benchmark [135] introduces a metric that averages the relative position and rotation errors over sub-sequences of various lengths (e.g., 100 m, 200 m, …, 800 m). The resulting translational and rotational errors are reported as a percentage offset for the translational component and in degrees per 1000 m for the rotational component. This provides a more comprehensive evaluation metric that considers the performance of the system over extended distances and is therefore better suited to odometry tasks.
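A simplified 2D version of this sub-sequence evaluation is sketched below. It follows the structure of the KITTI development kit as we understand it (a start frame every 10 frames, the end frame chosen as the first frame beyond the segment length, and the error taken as the residual between estimated and true relative poses, normalized by the segment length), but it is not the official implementation. It returns the rotational error in degrees per meter, which can be scaled to the reporting distance, e.g. by 1000 for degrees per 1000 m.

```python
import numpy as np

def se2(x, y, theta):
    """Homogeneous 3x3 SE(2) pose."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])

def kitti_style_errors(gt, est, lengths=(100, 200, 300, 400, 500, 600, 700, 800), step=10):
    """Average relative errors over sub-sequences of fixed path length (2D sketch).

    gt, est: lists of 3x3 SE(2) poses (world <- frame), aligned frame by frame.
    Returns (translational error [%], rotational error [deg/m]), or None if the
    trajectory is shorter than every requested length.
    """
    pos = np.array([T[:2, 2] for T in gt])
    dist = np.concatenate([[0.0], np.cumsum(np.linalg.norm(np.diff(pos, axis=0), axis=1))])
    t_errs, r_errs = [], []
    for first in range(0, len(gt), step):
        for length in lengths:
            # first frame whose travelled distance exceeds dist[first] + length
            last = int(np.searchsorted(dist, dist[first] + length, side="right"))
            if last >= len(gt):
                continue
            rel_gt = np.linalg.inv(gt[first]) @ gt[last]
            rel_est = np.linalg.inv(est[first]) @ est[last]
            err = np.linalg.inv(rel_est) @ rel_gt          # residual relative transform
            t_errs.append(np.linalg.norm(err[:2, 2]) / length)
            r_errs.append(abs(np.arctan2(err[1, 0], err[0, 0])) / length)
    if not t_errs:
        return None
    return 100.0 * np.mean(t_errs), np.degrees(np.mean(r_errs))
```

For example, an estimate that consistently overestimates the travelled distance by 1% on a straight trajectory yields a translational error of roughly 1%, regardless of how small the per-frame differences are.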

- Spinning Radar