Mental Workload Assessment Using Machine Learning Techniques Based on EEG and Eye Tracking Data

Abstract

1. Introduction

- Analyzing the relationship between EEG and eye-related variables with task difficulty level and subjective workload evaluation, and comparing them with similar studies in the literature.

- Predicting task difficulty level, which is evaluated as the mental workload, using EEG and eye tracking data.

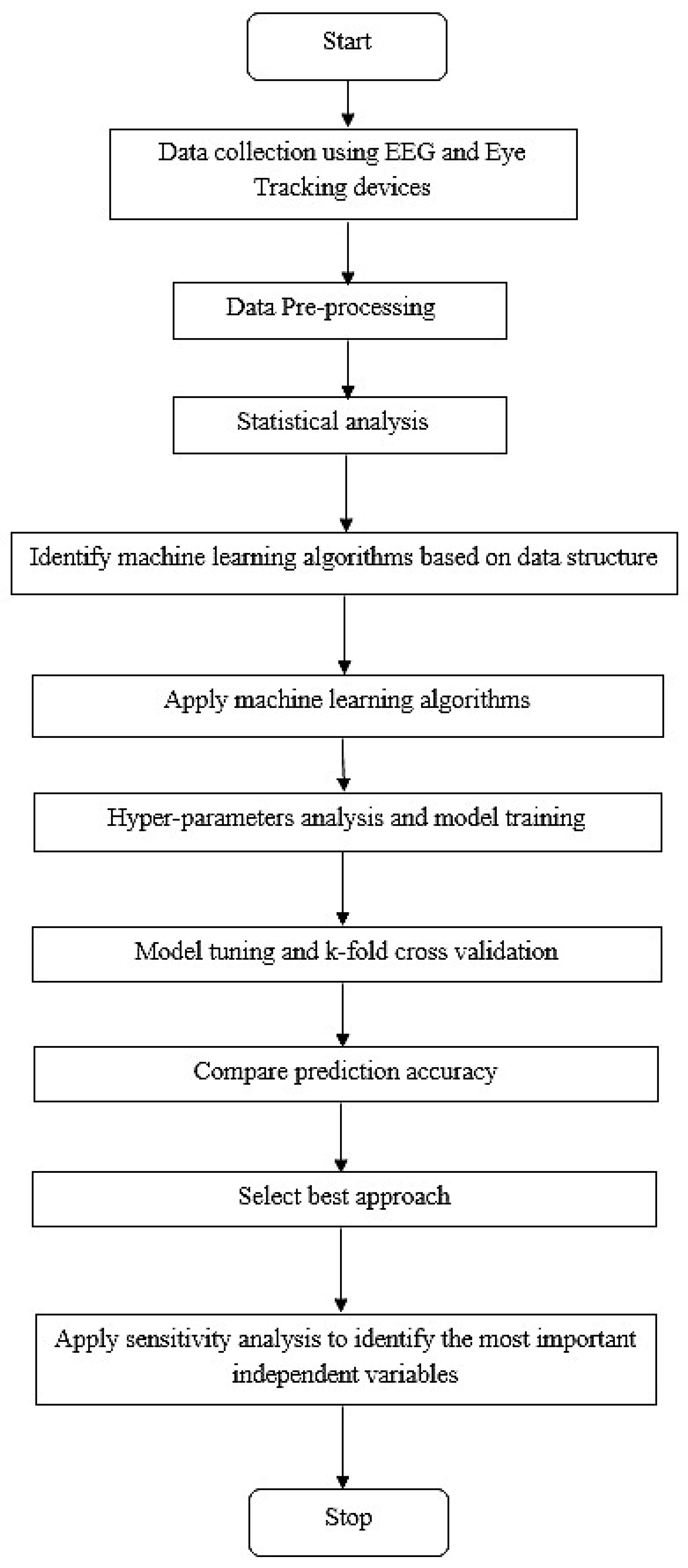

- Incorporating machine learning algorithms and utilizing the EEGLAB tool to enhance the analysis and interpretation of the results, thereby contributing to the existing literature. This research was carried out in the order shown in Figure 1.

2. Related Work

3. Materials and Methods

3.1. N-Back Task

3.2. Participants and Experimental Procedure

3.3. Data Acquisition and Pre-Processing

3.3.1. EEG Data

3.3.2. Eye Tracking Data

3.4. Datasets for Analysis

3.5. Brief Overview of Machine Learning Algorithms

3.5.1. K-Nearest Neighbors (KNN)

3.5.2. Random Forests

3.5.3. Artificial Neural Networks (ANNs)

3.5.4. Support Vector Machine (SVM)

3.5.5. Gradient Boosting Machines (GBM)

3.5.6. Extreme Gradient Boosting (XGBoost)

3.5.7. Light Gradient Boosting Machine (LightGBM)

4. Results and Discussion

4.1. Statistical Results

4.2. EEGLAB Study Results

4.3. Classification Results

4.4. Comparison with Previous Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Parasuraman, R.; Hancock, P.A. Adaptive control of mental workload. In Stress, Workload, and Fatigue; Hancock, P.A., Desmond, P.A., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2001; pp. 305–333.

- Sweller, J. Cognitive load during problem solving: Effects on learning. Cogn. Sci. 1988, 12, 257–285.

- Bommer, S.C.; Fendley, M. A theoretical framework for evaluating mental workload resources in human systems design for manufacturing operations. Int. J. Ind. Ergon. 2018, 63, 7–17.

- Rubio, S.; Diaz, E.; Martin, J.; Puente, J.M. Evaluation of Subjective Mental Workload: A Comparison of SWAT, NASA-TLX, and Workload Profile Methods. Appl. Psychol. 2004, 53, 61–86.

- Ahlstrom, U.; Friedman-Berg, F.J. Using eye movement activity as a correlate of cognitive workload. Int. J. Ind. Ergon. 2006, 36, 623–636.

- Benedetto, S.; Pedrotti, M.; Minin, L.; Baccino, T.; Re, A.; Montanari, R. Driver workload and eye blink duration. Transp. Res. Part F Traffic Psychol. Behav. 2011, 14, 199–208.

- Gao, Q.; Wang, Y.; Song, F.; Li, Z.; Dong, X. Mental workload measurement for emergency operating procedures in digital nuclear power plants. Ergonomics 2013, 56, 1070–1085.

- Reiner, M.; Gelfeld, T.M. Estimating mental workload through event-related fluctuations of pupil area during a task in a virtual world. Int. J. Psychophysiol. 2014, 93, 38–44.

- Wanyan, X.; Zhuang, D.; Lin, Y.; Xiao, X.; Song, J.-W. Influence of mental workload on detecting information varieties revealed by mismatch negativity during flight simulation. Int. J. Ind. Ergon. 2018, 64, 1–7.

- Radhakrishnan, V.; Louw, T.; Gonçalves, R.C.; Torrao, G.; Lenné, M.G.; Merat, N. Using pupillometry and gaze-based metrics for understanding drivers’ mental workload during automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 254–267.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv. Psychol. 1988, 52, 139–183.

- Grimes, D.; Tan, D.S.; Hudson, S.E.; Shenoy, P.; Rao, R.P. Feasibility and pragmatics of classifying working memory load with an electroencephalograph. In Proceedings of the CHI '08: CHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 835–844.

- Liu, Y.; Ayaz, H.; Shewokis, P.A. Multisubject “Learning” for Mental Workload Classification Using Concurrent EEG, fNIRS, and Physiological Measures. Front. Hum. Neurosci. 2017, 11, 389.

- Jusas, V.; Samuvel, S.G. Classification of Motor Imagery Using a Combination of User-Specific Band and Subject-Specific Band for Brain-Computer Interface. Appl. Sci. 2019, 9, 4990.

- Yin, Z.; Zhang, J. Cross-session classification of mental workload levels using EEG and an adaptive deep learning model. Biomed. Signal Process. Control. 2017, 33, 30–47.

- Pei, Z.; Wang, H.; Bezerianos, A.; Li, J. EEG-Based Multiclass Workload Identification Using Feature Fusion and Selection. IEEE Trans. Instrum. Meas. 2020, 70, 1–8.

- Wang, Z.; Hope, R.M.; Wang, Z.; Ji, Q.; Gray, W.D. Cross-subject workload classification with a hierarchical Bayes model. NeuroImage 2012, 59, 64–69.

- Kaczorowska, M.; Plechawska-Wójcik, M.; Tokovarov, M. Interpretable Machine Learning Models for Three-Way Classification of Cognitive Workload Levels for Eye-Tracking Features. Brain Sci. 2021, 11, 210.

- Sassaroli, A.; Zheng, F.; Hirshfield, L.M.; Girouard, A.; Solovey, E.T.; Jacob, R.J.K.; Fantini, S. Discrimination of Mental Workload Levels in Human Subjects with Functional Near-Infrared Spectroscopy. J. Innov. Opt. Health Sci. 2008, 1, 227–237.

- Wu, Y.; Liu, Z.; Jia, M.; Tran, C.C.; Yan, S. Using Artificial Neural Networks for Predicting Mental Workload in Nuclear Power Plants Based on Eye Tracking. Nucl. Technol. 2019, 206, 94–106.

- Subasi, A. Automatic recognition of alertness level from EEG by using neural network and wavelet coefficients. Expert Syst. Appl. 2005, 28, 701–711.

- Li, Q.; Ng, K.K.; Yu, S.C.; Yiu, C.Y.; Lyu, M. Recognising situation awareness associated with different workloads using EEG and eye-tracking features in air traffic control tasks. Knowl. Based Syst. 2023, 260, 110179.

- Borys, M.; Plechawska-Wójcik, M.; Wawrzyk, M.; Wesołowska, K. Classifying cognitive workload using eye activity and EEG features in arithmetic tasks. In Proceedings of the Information and Software Technologies: 23rd International Conference, ICIST 2017, Druskininkai, Lithuania, 12–14 October 2017; Springer International Publishing: Cham, Switzerland, 2018; pp. 90–105.

- Kaczorowska, M.; Wawrzyk, M.; Plechawska-Wójcik, M. Binary Classification of Cognitive Workload Levels with Oculography Features. In Proceedings of the Computer Information Systems and Industrial Management: 19th International Conference, CISIM 2020, Bialystok, Poland, 16–18 October 2020; Proceedings 19. Springer International Publishing: Cham, Switzerland, 2020; pp. 243–254.

- Qu, H.; Shan, Y.; Liu, Y.; Pang, L.; Fan, Z.; Zhang, J.; Wanyan, X. Mental workload classification method based on EEG independent component features. Appl. Sci. 2020, 10, 3036.

- Lim, W.; Sourina, O.; Wang, L. STEW: Simultaneous task EEG workload dataset. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2106–2114.

- Karacan, S.; Saraoğlu, H.M.; Kabay, S.C.; Akdağ, G.; Keskinkılıç, C.; Tosun, M. EEG-based mental workload estimation of multiple sclerosis patients. Signal Image Video Process. 2023, 17, 3293–3301.

- Zhou, Y.; Xu, Z.; Niu, Y.; Wang, P.; Wen, X.; Wu, X.; Zhang, D. Cross-Task Cognitive Workload Recognition Based on EEG and Domain Adaptation. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 50–60.

- Plechawska-Wójcik, M.; Tokovarov, M.; Kaczorowska, M.; Zapała, D. A Three-Class Classification of Cognitive Workload Based on EEG Spectral Data. Appl. Sci. 2019, 9, 5340.

- Le, A.S.; Aoki, H.; Murase, F.; Ishida, K. A Novel Method for Classifying Driver Mental Workload Under Naturalistic Conditions with Information from Near-Infrared Spectroscopy. Front. Hum. Neurosci. 2018, 12, 431.

- Herff, C.; Heger, D.; Fortmann, O.; Hennrich, J.; Putze, F.; Schultz, T. Mental workload during n-back task—Quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 2014, 7, 935.

- Ke, Y.; Qi, H.; Zhang, L.; Chen, S.; Jiao, X.; Zhou, P.; Zhao, X.; Wan, B.; Ming, D. Towards an effective cross-task mental workload recognition model using electroencephalography based on feature selection and support vector machine regression. Int. J. Psychophysiol. 2015, 98, 157–166.

- Tjolleng, A.; Jung, K.; Hong, W.; Lee, W.; Lee, B.; You, H.; Son, H.; Park, S. Classification of a Driver’s cognitive workload levels using artificial neural network on ECG signals. Appl. Ergon. 2017, 59, 326–332.

- Harputlu Aksu, Ş.; Çakıt, E. A machine learning approach to classify mental workload based on eye tracking data. J. Fac. Eng. Archit. Gazi Univ. 2023, 38, 1027–1040.

- Aksu, Ş.H.; Çakıt, E.; Dağdeviren, M. Investigating the Relationship Between EEG Features and N-Back Task Difficulty Levels with NASA-TLX Scores Among Undergraduate Students. In Proceedings of the Intelligent Human Systems Integration (IHSI 2023): Integrating People and Intelligent Systems, 69, New York, NY, USA, 22–24 February 2023; pp. 115–123.

- Aksu, Ş.H.; Çakıt, E. Classifying mental workload using EEG data: A machine learning approach. In Proceedings of the International Conference on Applied Human Factors and Ergonomics (AHFE), New York, NY, USA, 24–28 July 2022; pp. 65–72.

- Oztürk, A. Transfer and Maintenance Effects of N-Back Working Memory Training in Interpreting Students: A Behavioural and Optical Brain Imaging Study. Ph.D. Thesis, Middle East Technical University, Informatics Institute, Ankara, Turkey, 2018.

- Monod, H.; Kapitaniak, B. Ergonomie; Masson Publishing: Paris, France, 1999.

- Galy, E.; Cariou, M.; Mélan, C. What is the relationship between mental workload factors and cognitive load types? Int. J. Psychophysiol. 2012, 83, 269–275.

- Guan, K.; Chai, X.; Zhang, Z.; Li, Q.; Niu, H. Evaluation of mental workload in working memory tasks with different information types based on EEG. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 5682–5685.

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Borys, M.; Tokovarov, M.; Wawrzyk, M.; Wesołowska, K.; Plechawska-Wójcik, M.; Dmytruk, R.; Kaczorowska, M. An analysis of eye-tracking and electroencephalography data for cognitive load measurement during arithmetic tasks. In Proceedings of the 2017 10th International Symposium on Advanced Topics in Electrical Engineering (ATEE), Bucharest, Romania, 23–25 March 2017; pp. 287–292.

- Sareen, E.; Singh, L.; Varkey, B.; Achary, K.; Gupta, A. EEG dataset of individuals with intellectual and developmental disorder and healthy controls under rest and music stimuli. Data Brief 2020, 30, 105488.

- Rashid, A.; Qureshi, I.M. Eliminating Electroencephalogram Artefacts Using Independent Component Analysis. Int. J. Appl. Math. Electron. Comput. 2015, 3, 48–52.

- Yan, S.; Wei, Y.; Tran, C.C. Evaluation and prediction mental workload in user interface of maritime operations using eye response. Int. J. Ind. Ergon. 2019, 71, 117–127.

- Naveed, S.; Sikander, B.; Khiyal, M. Eye Tracking System with Blink Detection. J. Comput. 2012, 4, 50–60.

- Johns, M. The amplitude velocity ratio of blinks: A new method for monitoring drowsiness. Sleep 2003, 26, A51–A52.

- Raikwal, J.S.; Saxena, K. Performance Evaluation of SVM and K-Nearest Neighbor Algorithm over Medical Data set. Int. J. Comput. Appl. 2012, 50, 35–39.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. Variable selection using random forests. Pattern Recognit. Lett. 2010, 31, 2225–2236.

- Cun, W.; Mo, R.; Chu, J.; Yu, S.; Zhang, H.; Fan, H.; Chen, Y.; Wang, M.; Wang, H.; Chen, C. Sitting posture detection and recognition of aircraft passengers using machine learning. Artif. Intell. Eng. Des. Anal. Manuf. 2021, 35, 284–294.

- Rucco, M.; Giannini, F.; Lupinetti, K.; Monti, M. A methodology for part classification with supervised machine learning. Artif. Intell. Eng. Des. Anal. Manuf. 2018, 33, 100–113.

- Çakıt, E.; Dağdeviren, M. Comparative analysis of machine learning algorithms for predicting standard time in a manufacturing environment. AI EDAM 2023, 37, e2.

- Çakıt, E.; Durgun, B.; Cetik, O. A Neural Network Approach for Assessing the Relationship between Grip Strength and Hand Anthropometry. Neural Netw. World 2015, 25, 603–622.

- Çakıt, E.; Karwowski, W. Soft computing applications in the field of human factors and ergonomics: A review of the past decade of research. Appl. Ergon. 2024, 114, 104132.

- Noori, B. Classification of Customer Reviews Using Machine Learning Algorithms. Appl. Artif. Intell. 2021, 35, 567–588.

- Çakıt, E.; Karwowski, W.; Bozkurt, H.; Ahram, T.; Thompson, W.; Mikusinski, P.; Lee, G. Investigating the relationship between adverse events and infrastructure development in an active war theater using soft computing techniques. Appl. Soft Comput. 2014, 25, 204–214.

- Haykin, S. Neural Networks: A Comprehensive Foundation, 2nd ed.; Prentice Hall Inc.: Upper Saddle River, NJ, USA, 1999.

- Sheela, K.G.; Deepa, S.N. Performance analysis of modeling framework for prediction in wind farms employing artificial neural networks. Soft Comput. 2013, 18, 607–615.

- Zurada, J. Introduction to Artificial Neural Systems; West Publishing Company: St. Paul, MN, USA, 1992; Volume 8.

- Fausett, L. Fundamentals of Neural Networks; Pearson: London, UK, 1994.

- Cervantes, J.; Garcia-Lamont, F.; Rodríguez-Mazahua, L.; Lopez, A. A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 2020, 408, 189–215.

- Brereton, R.G.; Lloyd, G.R. Support Vector Machines for classification and regression. Analyst 2009, 135, 230–267.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Kuhn, M.; Johnson, K. Applied Predictive Modeling; Springer: New York, NY, USA, 2013; Volume 26, p. 13.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Çakıt, E.; Dağdeviren, M. Predicting the percentage of student placement: A comparative study of machine learning algorithms. Educ. Inf. Technol. 2022, 27, 997–1022.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 3146–3154.

| Model | Accuracy (%) | Kappa | Matthews Correlation Coefficient (MCC) | AUC |
|---|---|---|---|---|
| KNN | 54.85 | 0.40 | 0.40 | 0.80 |
| SVM | 62.15 | 0.49 | 0.50 | 0.85 |
| ANN | 59.00 | 0.45 | 0.45 | 0.82 |
| RF | 61.44 | 0.49 | 0.49 | 0.85 |
| GBM | 61.93 | 0.49 | 0.49 | 0.85 |
| XGBoost | 70.22 | 0.60 | 0.60 | 0.91 |
| LightGBM | 71.96 | 0.63 | 0.63 | 0.92 |
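The Kappa and MCC columns in the table above are both chance-corrected summaries of a multiclass confusion matrix, which may explain why they nearly coincide for every model. The following is a minimal sketch of how the three scalar metrics (accuracy, Cohen's kappa, and the multiclass MCC) can be derived from such a matrix. The helper names and the 3-class matrix `example_cm` are hypothetical and chosen only for illustration; they are not data or code from the study.

```python
from math import sqrt


def _totals(cm):
    """Return (n, trace, row_totals, col_totals) for a square confusion matrix.

    Rows are assumed to be true classes and columns predicted classes.
    """
    k = len(cm)
    rows = [sum(cm[i]) for i in range(k)]
    cols = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    trace = sum(cm[i][i] for i in range(k))
    return sum(rows), trace, rows, cols


def accuracy(cm):
    """Fraction of samples on the diagonal."""
    n, trace, _, _ = _totals(cm)
    return trace / n


def cohen_kappa(cm):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n, trace, rows, cols = _totals(cm)
    p_o = trace / n                                          # observed agreement
    p_e = sum(r * c for r, c in zip(rows, cols)) / (n * n)   # chance agreement
    return (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0


def multiclass_mcc(cm):
    """Multiclass Matthews correlation coefficient (Gorodkin's R_K form)."""
    n, trace, rows, cols = _totals(cm)
    num = trace * n - sum(r * c for r, c in zip(rows, cols))
    den = sqrt(n * n - sum(c * c for c in cols)) * sqrt(n * n - sum(r * r for r in rows))
    return num / den if den else 0.0


# Hypothetical 3-class confusion matrix (illustrative values only).
example_cm = [
    [40, 8, 2],
    [10, 30, 10],
    [5, 10, 35],
]

if __name__ == "__main__":
    print("accuracy:", round(accuracy(example_cm), 3))
    print("kappa:   ", round(cohen_kappa(example_cm), 3))
    print("MCC:     ", round(multiclass_mcc(example_cm), 3))
```

For this toy matrix the true classes are balanced, and kappa and MCC both come out near 0.55 while accuracy is 0.70, the same pattern of a lower chance-corrected score that the table shows. AUC is not covered here because it requires per-class scores rather than hard predictions.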

| Authors, Year | Number of Participants | Number of Classes | Measurement Tool | Method | Accuracy |
|---|---|---|---|---|---|
| Subasi [21] | 30 | 3 | EEG | ANN | 87–98% |
| Grimes et al. [12] | 8 | 2–4 | EEG | NB | 88–99% |
| Sassaroli et al. [19] | 3 | 3 | fNIRS | KNN (k = 3) | 44–72% |
| Wang et al. [17] | 8 | 3 | EEG | ANN, NB | 30–84% |
| Borys et al. [23] | 13 | 2–3 | EEG + Eye tracking | DT, LDA, LR, SVM, KNN | 73–90% |
| Liu et al. [13] | 21 | 3 | EEG + fNIRS | LDA, NB | 65% |
| Yin and Zhang [15] | 7 | 2 | EEG | DL | 85.7% |
| Le et al. [30] | 5 | 3 | fNIRS | DT, LDA, LR, SVM, KNN | 81.3–95.4% |
| Lim et al. [26] | 48 | 3 | EEG | SVM | 69% |
| Jusas and Samuvel [14] | 9 | 4 | EEG | LDA | 64% |
| Plechawska-Wójcik et al. [29] | 11 | 3 | EEG | SVM, DT, KNN, RF | 70.4–91.5% |
| Wu et al. [20] | 39 | 2 | Eye tracking | ANN | 97% |
| Kaczorowska et al. [24] | 26 | 2 | Eye tracking | SVM, KNN, RF | 97% |
| Qu et al. [25] | 10 | 3 | EEG | SVM | 79.8% |
| Kaczorowska et al. [18] | 29 | 3 | Eye tracking | LR, RF | 97% |
| Pei et al. [16] | 7 | 3 | EEG | RF | 75.9–84.3% |
| Zhou et al. [28] | 45 | 7 | EEG | KNN, SVM, LDA, ANN | 56% |
| Li et al. [22] | 28 | 2–3 | EEG + Eye tracking | SVM, RF, ANN | 54.2–82.7% |
| Şaşmaz et al. [27] | 45 | 3 | EEG | SVM, RF, LDA, ANN | 83.4% |
| Current study | 15 | 2–3–4 | EEG + Eye tracking | KNN, SVM, ANN, RF, GBM, XGBoost, LightGBM | 76–90% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aksu, Ş.H.; Çakıt, E.; Dağdeviren, M. Mental Workload Assessment Using Machine Learning Techniques Based on EEG and Eye Tracking Data. Appl. Sci. 2024, 14, 2282. https://doi.org/10.3390/app14062282