1. Introduction

In modern society, when important information about enterprises is released online, it generally needs to be as complete as possible. To keep the transmission of important data secure and opaque, some unimportant data must be used to confuse the important data so that an adversary cannot tell them apart. The more information is used to confuse the important data, the better. Therefore, some papers (e.g., [1,2,3,4]) discussed the issue of maximum opaque sublanguages. However, in reality, data transmission has a cost, so less unimportant data and lower transmission costs are preferred. Therefore, this paper proposes a mathematical optimization model to find the most cost-effective information for confusing the important data to be released, and proposes an algorithm to obtain the optimal control strategy.

In 2004, opacity was first introduced to analyze cryptographic protocols in [5]. In 2005, the research in [6], which modeled systems as Petri nets, brought opacity to the field of discrete event systems (DES). Afterward, research on opacity in DES boomed. In DES models, the definition of opacity was divided into two cases: language-based opacity [1,7,8] (e.g., strong opacity [7], weak opacity [7], non-opacity [7]) and state-based opacity [6,9,10,11,12,13] (e.g., current-state opacity [6,9], initial-state opacity [6], initial-and-final-state opacity [13], K-step opacity [9,10,11], infinite-step opacity [12,14]). In Ref. [15], the work of [13] was extended, and it was shown that the various notions of opacity can be transformed into one another. Then, in Ref. [16], the existing notions of opacity were unified and a general framework was provided. Based on these definitions of opacity, verification approaches were investigated in previous works. When a system is not opaque, supervisory control theory [1,4,17] or the enforcement approach [18,19,20,21,22] is used to ensure opacity. In general, supervisory control theory restricts the behavior of the system to ensure opacity, whereas the enforcement approach does not restrict the behavior but modifies the output of the system. For example, in Ref. [1], fixed-point theory was used to check the opacity of the closed-loop system at every iteration to obtain the maximally permissive supervisor based on condensed state estimates. In Ref. [4], the maximally permissive supervisor was obtained by refining the plant and the observer instead of using condensed state estimates. In Ref. [17], strong infinite- and K-step opacity were transformed into a language, and an algorithm was presented to enforce infinite- and K-step opacity by a supervisor. In Ref. [18], the synthesis of insertion functions was extended from current-state opacity [23] to infinite-step opacity and K-step opacity. In Ref. [19], fictitious events were inserted at the output of the system to enforce opacity. Some works [20,21] extended the method of [19]: the authors of Ref. [20] discussed the problem of opacity enforcement under energy constraints, and the authors of Ref. [21] studied supervisory control under local mean payoff constraints. In Ref. [22], current-state opacity was verified and enforced based on an algebraic state space approach for partially observed finite automata.

To ensure opacity, in Ref. [24], some algorithms were proposed to design a controller that controls the information released to the public. Then, in Ref. [25], the work of [24] was extended, and an algorithm was presented for finding minimal information release policies for non-opacity. In Ref. [26], a reward was assigned for revealing the information of each transition, and the maximum guaranteed payoff policy was found for the weighted DES. In Ref. [27], a dynamic information release mechanism was proposed to verify current-state opacity where information is partially released and state-dependent.

To ensure opacity, the secret is preserved by enabling or disabling some events to restrict the behavior of the system. If cost functions are defined in DES, two types of optimal supervisory control problems arise: one concerns event cost, and the other concerns control cost. For example, in Ref. [28], the cost of events was defined to design a supervisor that minimizes the total cost of reaching the desired states. Then, in Ref. [29], the framework was extended to partially observed systems. Afterward, in Ref. [30], the mean payoff supervisory control problem was investigated for a system where each event has an integer associated with it. In [31,32], the costs of choosing a control input and of an event occurring were defined, and two optimal problems that minimize the maximal discounted total cost among all possible strings generated in the system were solved using Markov decision processes.

In contrast to the supremal opaque sublanguage of the plant in [1,2,3,4,8], we want to find a ‘smallest’ closed controllable sublanguage of the plant with respect to which the secret is not only opaque but also ‘largest’. Since the class of opaque languages is not closed under intersection [8], ‘smallest’ does not refer to set inclusion but to minimal discount total choosing cost. On the other hand, ‘largest’ refers to set inclusion, meaning the union of all the elements of the class of confused secrets. To describe the optimal problem, a non-linear optimal supervisory control model is proposed by introducing the concept of choosing cost.

The paper is organized as follows.

Section 2 establishes the background on supervisory control theory, opacity, and choosing cost of DES. In

Section 3, we present an optimal supervisory control problem that is modeled by non-linear programming with two constraint conditions. In

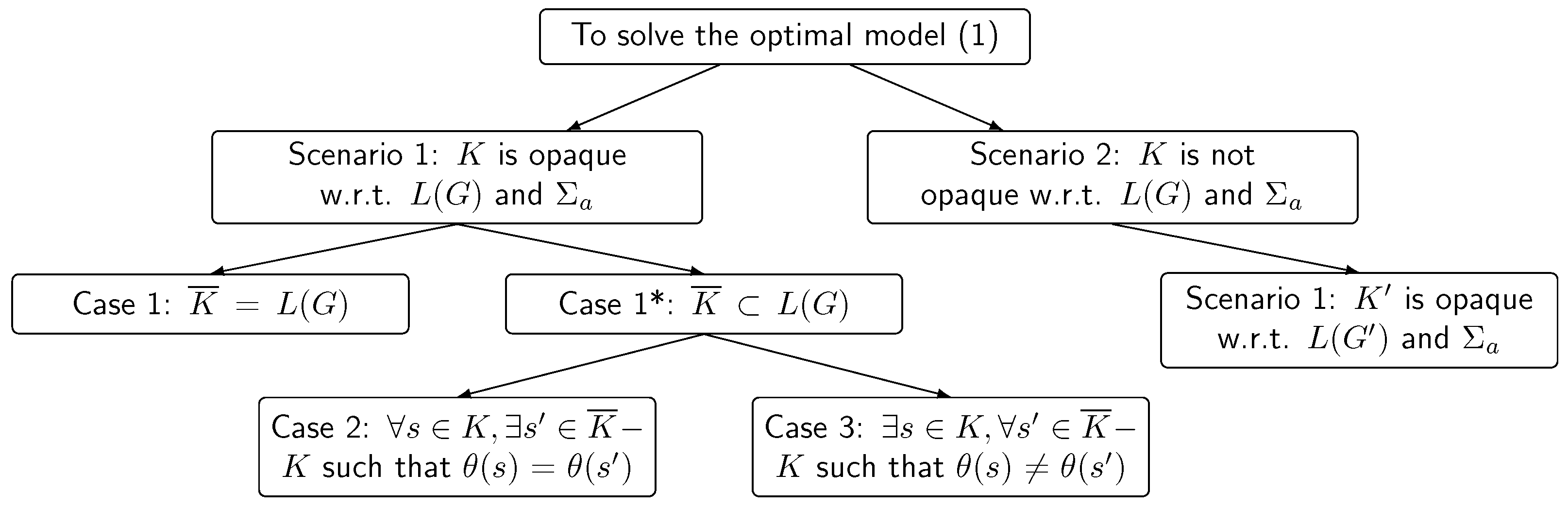

Section 4, we propose two scenarios to show the computational process of the optimal problem. The first scenario is divided into three cases in

Section 5, where we suppose that the plant can ensure the secret is opaque, and some algorithms and theorems are put forward to the solution of the optimal problem. In

Section 6, a generalized algorithm and theorem are proposed under the second scenario, where the plant cannot ensure the secret is opaque. Finally, the main contribution of the work is discussed in

Section 7.

3. Optimal Supervisory Control Model on Opacity

In this section, an optimal supervisory control model is constructed to minimize the choosing cost of the controlled system while keeping it opaque. Given system G and secret , as shown in [1], secret K is a regular language. For secret K, we suppose there exists a set of secret states which can recognize secret K, i.e., iff .

To show the cost of information released, we introduce the cost of choosing control input in [31,32] and define the total choosing cost as follows:

Definition 4. Given closed-loop behavior , the discount total choosing cost of is defined as , where f is a supervisor controlling system G and is a discount factor. For convenience, is simplified as .

For system G, if there exist two supervisors and such that , it is obvious that by Definition 4.

To obtain the discount total choosing cost, we define the discount cost as the sum of the choosing costs after s under f.

Definition 5. We consider s, and . Then, is said to be the discount cost of choosing after s under f, where and . Particularly, if , i.e., , then ; if , then and , where means that choosing at s has nothing to do with Σ.

In Definition 5, if a string can be divided into two pieces, the computation of the discount cost can be simplified as stated in the following conclusion:

Proposition 1. We consider . Then, we have .

Proof. For , we prove the following formula, where and :

□

To generalize the above Proposition 1, we have the following conclusion as Proposition 2:

Proposition 2. We consider . Then, we have .

Proof. The proof can proceed by induction.

Base case: If , then it holds that .

Inductive hypothesis: Suppose that we have if .

Inductive step: For , we prove that .

By Definition 5, we have the following, which completes the inductive step:

□
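The splitting property in Propositions 1 and 2 can be checked numerically. The sketch below is only an illustration under an assumed cost form, since the formula of Definition 5 is not reproduced here: the event at absolute position i of the whole string contributes `beta**i` times a per-step choosing cost. The names `discount_cost`, `step_cost`, `toy_cost`, and `beta` are hypothetical. Under this assumed form the split is purely additive; if the discount is instead counted from the start of the suffix, an extra power of the discount factor would appear in front of the second term.

```python
from typing import Callable, List


def discount_cost(prefix: List[str], suffix: List[str],
                  step_cost: Callable[[List[str], str], float],
                  beta: float) -> float:
    """Discounted cost of choosing `suffix` after `prefix`.

    ASSUMPTION (not taken from the paper): the event at absolute
    position i costs beta**i * step_cost(history, event).
    """
    total = 0.0
    history = list(prefix)
    for event in suffix:
        total += beta ** len(history) * step_cost(history, event)
        history.append(event)
    return total


def toy_cost(history: List[str], event: str) -> float:
    """Hypothetical per-event choosing cost used only for the demo."""
    return {"a": 1.0, "b": 2.0, "c": 0.5}[event]


# Split the suffix t = t1 t2 and compare both ways of computing the cost.
s, t1, t2 = ["a"], ["b", "c"], ["a", "b"]
whole = discount_cost(s, t1 + t2, toy_cost, 0.9)
split = discount_cost(s, t1, toy_cost, 0.9) + discount_cost(s + t1, t2, toy_cost, 0.9)
print(abs(whole - split) < 1e-12)
```

An empty suffix yields cost 0 in this sketch, which matches the special case mentioned in Definition 5.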

To obtain the discount total choosing cost, we formulate Algorithm 1 to obtain by the computation of .

Algorithm 1 Computing , the discount total choosing cost of

Input: ;

Output: .

- 1: Let root = .
- 2: Let .
- 3: for do
- 4: Compute the in-degree of state q.
- 5: if in-degree of state q is more than 1 then
- 6: Copy state q and its successor states and traces based on its in-degree.
- 7: Insert the states q and its successor into Q and .
- 8: end if
- 9: end for
- 10: for do
- 11: Compute the out-degree of state q.
- 12: if out-degree = 1 then
- 13: Concatenate the transitions from q’s parent to q’s child via q, i.e., achieve a transition () from the transitions ( and ).
- 14:
- 15: end if
- 16: end for
- 17: At some node q, if there exists a transition s from root to q and a transition from q to its child (i.e., root ’s child), we have .
- 18: By breadth-first search, we traverse all the nodes and have
- 19: return

As shown in Algorithm 1, the closed-loop system can be transformed into a tree automaton that is language-equivalent to it. To obtain the longest common prefix of some strings, we need to compute the out-degree of each node. In the tree automaton, if the out-degree of a node is greater than one, the string from the root to that node is the longest common prefix of the strings that run from the root to the leaves via that node.
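The effect of the tree unfolding can be illustrated on a finite set of strings: merging common prefixes in a prefix tree means that every shared transition is costed exactly once. The following sketch is not the paper's Algorithm 1; it assumes, purely for illustration, that an edge at depth d labelled with an event costs `beta**d * event_cost[event]`. The names `total_discount_cost`, `event_cost`, and `beta` are hypothetical.

```python
def total_discount_cost(strings, event_cost, beta):
    """Sum the discounted cost over the distinct edges of the prefix tree.

    ASSUMPTION: the edge reached at depth d (0-based) by event e costs
    beta**d * event_cost[e]; this form is illustrative only.
    """
    seen_prefixes = set()  # each non-empty prefix is one edge of the tree
    total = 0.0
    for s in strings:
        for depth, event in enumerate(s):
            prefix = tuple(s[:depth + 1])
            if prefix not in seen_prefixes:
                seen_prefixes.add(prefix)
                total += beta ** depth * event_cost[event]
    return total


# "ab" and "ac" share the prefix "a", so the edge "a" is counted once.
print(total_discount_cost(["ab", "ac"], {"a": 1.0, "b": 2.0, "c": 3.0}, 0.5))
```

Counting edges of the prefix tree rather than summing over strings is what prevents the longest common prefix from being charged more than once.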

To show the computational process of Algorithm 1, an example is given to obtain the discount total choosing cost.

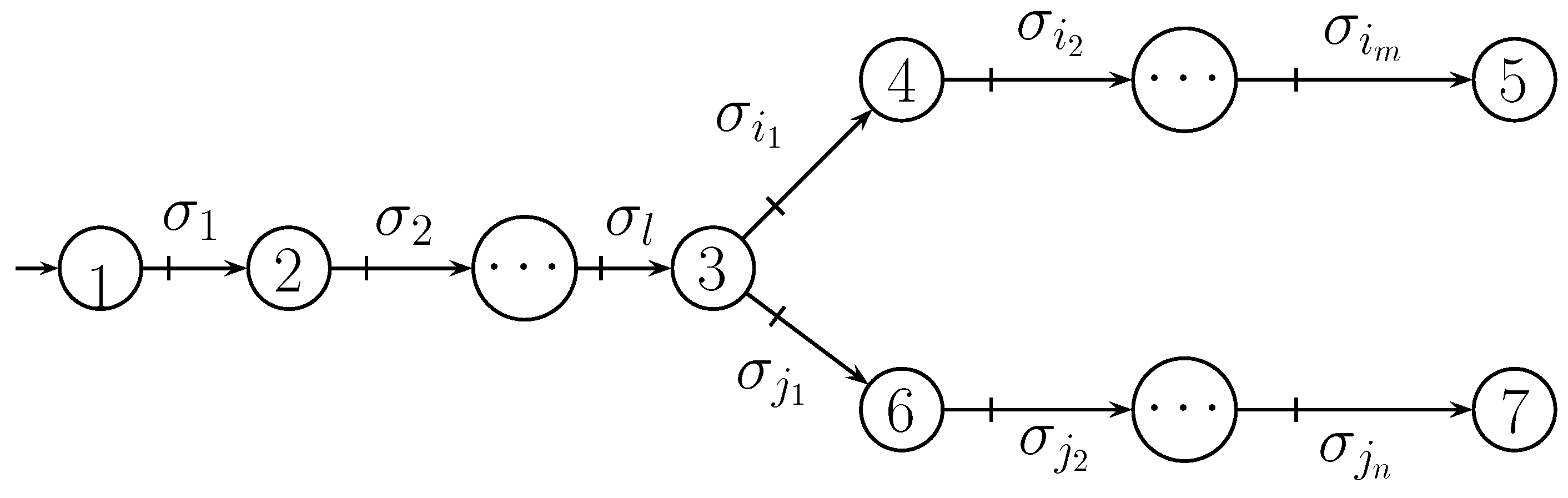

Example 1. Suppose that and t is the longest common prefix of and . To simplify the process of computation in Definition 4, we denote and such that and . By Definition 4, we have the following equation:

According to Algorithm 1, we transform in Figure 1 into a tree automaton shown in Figure 2. By Figure 2 and Algorithm 1, we have the following formula:

If there exists language K such that , the discount total choosing cost of can be denoted by and obtained as shown in Algorithm 1. If we denote by , we can also obtain the discount cost using Definition 5. means to compute the choosing cost outside of in . Next, we continue the above Example 1.

Example 2. In Figure 1, we assume there exists state subset such that . By Algorithm 1, we obtain a tree automaton based on shown in Figure 3. So, it holds that . According to the formulas of and , we have the following equation:

For the system and the secret, we propose an optimal problem to synthesize a supervisor such that the discount total choosing cost of the controlled system is minimal.

Optimal opacity-enforcing problem. Given system G and secret , we find supervisor f such that satisfies the following conditions:

- 1. K is opaque with respect to and ;

- 2. All the secret information that has not been leaked in G remains in the closed-loop system ;

- 3. For closed-loop behavior , discount total choosing cost is minimal.

In Condition 1 of the above problem, if K is opaque with respect to and , we have by Definition 3.
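For finite languages, Condition 1 can be checked directly. The sketch below assumes that Definition 3 (not reproduced here) is the usual language-based notion used in [1]: every secret string of the behavior must have the same observation as some non-secret string of the behavior. The function names `project` and `is_opaque`, and the representation of strings as Python `str` values with one character per event, are hypothetical choices for this illustration.

```python
def project(s, observable):
    """Natural projection: erase the events the adversary cannot observe."""
    return tuple(e for e in s if e in observable)


def is_opaque(secret, language, observable):
    """Check language-based opacity of `secret` w.r.t. a finite `language`.

    ASSUMPTION: opacity means that each secret string in the language
    shares its projection with at least one non-secret string.
    """
    non_secret_obs = {project(s, observable) for s in language if s not in secret}
    return all(project(s, observable) in non_secret_obs
               for s in language if s in secret)


language = {"", "a", "ab", "c", "cb"}
print(is_opaque({"ab"}, language, {"b"}))       # "cb" looks the same as "ab"
print(is_opaque({"ab"}, language, {"a", "b"}))  # no non-secret string looks like "ab"
```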

In Condition 2, if the supremal controllable and closed sublanguage of is denoted by [4], the largest secret K permitted by supervisor f is . So, all the secret information that is not leaked in G is . Therefore, the second condition implies that holds.

Condition 3 requires that the objective function be minimal.

Based on the above optimal problem and its analysis, a non-linear optimal model is formulated as follows:

For the above optimal Model (1), the objective function means that the supervised system’s discount total choosing cost is minimal. The first constraint condition means that K is opaque with respect to the controlled system. The second implies that the largest possible set of undisclosed secrets is retained in the controlled system.

5. Scenario 1: Secret K Is Opaque with Respect to L(G) and

We consider language and regular language (secret) . By [4], we have for the reason that is the supremal opaque sublanguage of . Therefore, the second condition of Model (1) is equivalent to formula , which implies that . Since is prefix-closed, it is obvious that is equivalent to formula . So, in Model (1), the second constraint condition can be equivalently reduced to . Then, optimal Model (1) can be written as the following Model (2):

To solve optimal Model (2), we consider the following three cases:

5.1. Case 1:

We have in Condition 2 of Model (2). To ensure the feasible region is not empty, we can obtain supervisor f such that . So, we have the following theorem:

Theorem 2. We have system G and secret . In Case 1 of Scenario 1, is an optimal solution of optimal Model (2).

Proof. From the above analysis, it is obvious that is the unique solution of the feasible set. So, is minimal. □

5.2. Case 2: and Such That

In Case 2, the condition means that any secret cannot be distinguished from some non-secret in closure .

For secret K and its closure , we have . If there exists a closed-loop system such that , it obviously can ensure the opacity of secret K. So, the feasible region of Model (2) is not empty.

To find supervisor f, we check whether is controllable with respect to .

If is controllable with respect to , there exists supervisor f such that . For closed-loop behavior , it satisfies the two constraint conditions of Model (2). So, the feasible region of Model (2) is not empty.

If is not controllable with respect to , we can find a controllable and closed superlanguage of . Superlanguage not only ensures the opacity of K (see Theorem A1 of Appendix A), but also maximizes secret K. So, the feasible region of Model (2) is not empty.
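The two sub-cases above can be made concrete for finite languages. The sketch below uses the standard supervisory-control notions rather than anything specific to this paper: a closed language is controllable when no uncontrollable event that the plant allows leads outside of it, and the infimal closed controllable superlanguage is obtained by adding all uncontrollable continuations that the plant allows. The function names and the encoding of strings as tuples of event names are hypothetical, and the plant is assumed to be a finite, prefix-closed set.

```python
def prefix_closure(strings):
    """All prefixes (as tuples of events) of the given strings."""
    return {tuple(s[:i]) for s in strings for i in range(len(s) + 1)}


def is_controllable(k_closed, plant, uncontrollable):
    """True if no uncontrollable event allowed by the plant leaves k_closed."""
    return all(s + (e,) in k_closed
               for s in k_closed for e in uncontrollable
               if s + (e,) in plant)


def inf_closed_controllable_superlanguage(k, plant, uncontrollable):
    """Extend the closure of k by uncontrollable continuations in the plant.

    ASSUMPTION: `plant` is a finite prefix-closed set of event tuples.
    """
    result = set(prefix_closure(k))
    frontier = list(result)
    while frontier:
        s = frontier.pop()
        for e in uncontrollable:
            t = s + (e,)
            if t in plant and t not in result:
                result.add(t)
                frontier.append(t)
    return result


plant = prefix_closure(["abu", "ac"])  # toy plant; "u" is uncontrollable
sup = inf_closed_controllable_superlanguage(["ab"], plant, {"u"})
print(is_controllable(prefix_closure(["ab"]), plant, {"u"}), is_controllable(sup, plant, {"u"}))
```

In this toy plant the closure of "ab" is not controllable because "u" can follow it, so the superlanguage has to include "abu".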

According to the above analysis, the following Theorem 3 states that an optimal solution of Model (2) can be obtained using the controllability of or superlanguage .

Theorem 3. We consider system G and secret . In Case 2 of Scenario 1, we have the following conclusions about the optimal solution of Model (2):

- 1. If is controllable with respect to , there exists supervisor f such that , which is the optimal solution of Model (2).

- 2. If is not controllable with respect to , there exists supervisor f such that , which is the optimal solution of Model (2).

Proof. Firstly, we prove that closed-loop behavior of Theorem 3 is a feasible solution of optimal Model (2).

If is controllable with respect to , is a closed and controllable sublanguage of . So, there exists supervisor f such that . If is not controllable with respect to , it is obvious that is the infimal closed and controllable superlanguage of . So, there exists supervisor f such that .

Therefore, we have which means constraint Condition 2 of Model (2) is true.

By Theorem A1 of Appendix A, we conclude that can ensure the opacity of K under Case 2 of Scenario 1, which implies that constraint Condition 1 of Model (2) is true.

From the above points, it is true that in Theorem 3 is a feasible solution of Model (2).

Next, we prove by contradiction that the discount total choosing cost of produced in Theorem 3 is minimal. We assume that there exists feasible solution of Model (2) such that .

According to constraint Condition 2, we have . Afterward, we consider the controllability of .

If is controllable, it holds that by Theorem 1, which means . So, we have , which contradicts .

If is not controllable, it holds that by Theorem 1. For any , there exists such that and hold. As shown in Proposition 2 and Assumption 1, we have . So, it holds that . According to formula , it is true that , which contradicts .

In summary, it is true that , which means that the discount total choosing cost of in Theorem 3 is minimal. □

According to the proof of Theorem 3, we have the following corollaries:

Corollary 1. We consider language L. If new language is considered as the concatenation of any string of L with an uncontrollable string (i.e., ), then the discount total choosing costs of L and are the same, that is, .

Corollary 2. We consider system G and secret . In Case 2 of Scenario 1, holds, where is the closed-loop system in Theorem 3.

Example 3. We consider finite transition system shown in Figure 5, where . Obviously, for system G, Assumption 1 is true. We suppose that secret , which can be recognized by . To show choosing cost , label means that if there is a transition from p to q by ·, notation n denotes choosing cost . For control input Γ, the cost of choosing is defined as . We assume that the adversary has complete knowledge of the supervisor’s control policy. From the adversary’s view, the adversary can see a partial set of events, denoted by . For secret K, it can be verified that K is opaque with respect to and (Scenario 1). To reduce the choosing cost, closed-loop system can be obtained in Theorem 3, where is shown in Figure 6. By Definition 5 and Algorithm 1, 1.726 is minimal.

5.3. Case 3: and Such That

In Case 3, the condition means that there exists some secret in K such that all the non-secrets confused with them are outside of .

For , we let be a set of some secret which cannot be confused by any string in , and be a set of strings which can confuse the secret of . For language L, we call the coset (or equivalence class) of s with respect to L and , where is said to be the equivalent string of s. And is defined as the quotient set of L with respect to coset . For a determined finite transition system, the number of strings in L is finite and the length of each string of L is finite, too. Obviously, coset and quotient set are also finite.
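For a finite language, the quotient set can be computed by grouping strings into classes. The sketch below assumes that two strings are equivalent when the adversary observes the same thing, i.e., when their projections onto the observable events coincide; the exact equivalence relation is not recoverable from the text above, so this is an illustrative reading. The name `quotient_set` and the one-character-per-event encoding are hypothetical.

```python
from collections import defaultdict


def quotient_set(language, observable):
    """Partition a finite language into classes of equal observation.

    ASSUMPTION: s and t are equivalent iff erasing the unobservable
    events from both yields the same sequence.
    """
    classes = defaultdict(set)
    for s in language:
        observation = tuple(e for e in s if e in observable)
        classes[observation].add(s)
    return [frozenset(c) for c in classes.values()]


# With only "b" observable, "ab" and "cb" fall into the same coset.
for coset in quotient_set({"ab", "cb", "a"}, {"b"}):
    print(sorted(coset))
```

Because every string lands in exactly one class, the result is a partition of the language, which is the property used later in Example 4.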

To solve Model (2), Algorithm 3 is proposed as follows, using Function 1 (see Algorithm 4) and Function 2 (see Algorithm 5).

Algorithm 3 Optimal supervisory control I

Input: Automaton G, secret K and choosing cost ;

Output: Closed-loop language .

- 1: Construct language
- 2:
- 3: for do
- 4:
- 5: if then
- 6: Continue
- 7: end if
- 8:
- 9:
- 10:
- 11: end for
- 12:
- 13:
- 14:
- 15: In Label, find and sort from to , i.e.,
- 16:
- 17: By Theorem 3, can be got from
- 18: return

In Line 13 of Algorithm 3, Function 1 (in Algorithm 4) shows how to compute the choosing cost outside of the closure of secret K. For a quotient set, we take any string of a coset and obtain a prefix with a maximal length in the closure of the secret. And then, we compute the discount cost of choosing the remaining string after the prefix. The specific process is shown in Algorithm 4.
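The prefix-splitting step described for Function 1 can be sketched as follows. This is an illustrative reading, not the paper's Algorithm 4: it finds the longest prefix of a string that lies in the prefix closure of the secret and then charges only the remaining suffix. The cost form (`beta` raised to the absolute position times a per-event cost) and the names `split_at_closure`, `suffix_cost`, `event_cost`, and `beta` are assumptions made for the example.

```python
def split_at_closure(s, secret):
    """Return (longest prefix of s inside the closure of secret, remaining suffix)."""
    if not secret:
        raise ValueError("secret must contain at least one string")
    closure = {k[:i] for k in secret for i in range(len(k) + 1)}
    # Try the longest candidate first; the empty prefix always succeeds.
    for cut in range(len(s), -1, -1):
        if s[:cut] in closure:
            return s[:cut], s[cut:]


def suffix_cost(prefix, suffix, event_cost, beta):
    """ASSUMED cost form: the event at absolute position i costs beta**i * event_cost[e]."""
    return sum(beta ** (len(prefix) + i) * event_cost[e]
               for i, e in enumerate(suffix))


prefix, suffix = split_at_closure("abcd", {"abx", "a"})
print(prefix, suffix, suffix_cost(prefix, suffix, {"c": 4.0, "d": 8.0}, 0.5))
```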

In Line 14 of Algorithm 3, Function 2 constructs a multi-stage weighted directed diagram and produces a path with minimal discount total choosing cost. For the diagram, the elements of a set H are regarded as the stages, and the elements of are defined as the nodes of each stage. Based on dynamic programming, the optimal weight between different nodes of adjacent stages is obtained in Function 3 (in Algorithm 6). Then, the weighted directed diagram is obtained. For every node of the diagram, an ordered pair is obtained by employing Function 3 (in Algorithm 6). For the ordered pair, the first element is the set of shortest paths with minimal discount total choosing cost from the starting node to the current node, and the second is the discount total choosing cost of the path. When the current node is the ending node, the path with minimal discount total choosing cost is obtained. The specific processes are shown in Algorithms 5 and 6.
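The stage-by-stage computation can be illustrated with a generic multi-stage shortest-path recursion. The sketch is a simplification: here the weight of an arc depends only on the two adjacent nodes, whereas the weight computed by Function 3 may also depend on the strings already on the path. The function `staged_shortest_path`, the callback `weight`, and the use of `None` for the virtual starting node are hypothetical.

```python
def staged_shortest_path(stages, weight):
    """Pick one node per stage so that the summed arc weight is minimal.

    stages: list of non-empty lists of nodes, one list per stage.
    weight(prev, node): arc weight; prev is None for the virtual start.
    Returns (minimal cost, chosen nodes in stage order).
    """
    best = {None: (0.0, [])}  # node -> (cost of best path to it, that path)
    for stage in stages:
        new_best = {}
        for node in stage:
            cost, path = min(
                ((c + weight(prev, node), p) for prev, (c, p) in best.items()),
                key=lambda item: item[0],
            )
            new_best[node] = (cost, path + [node])
        best = new_best
    return min(best.values(), key=lambda item: item[0])


table = {(None, "a"): 1, (None, "b"): 5, ("a", "c"): 10,
         ("a", "d"): 2, ("b", "c"): 1, ("b", "d"): 1}
print(staged_shortest_path([["a", "b"], ["c", "d"]], lambda p, n: table[(p, n)]))
```

Each node stores an ordered pair (cost, path), mirroring the ordered pair described above, and only the pairs of the previous stage are needed to compute those of the current stage.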

Algorithm 4 Calculation of the choosing cost outside of

Input: Quotient set R and secret K;

Output: Choosing cost V outside of .

- 1: for do
- 2: for do
- 3: let n be the length of s, and s is denoted by
- 4:
- 5: take , and suppose that
- 6: while do
- 7: if then
- 8:
- 9: Break
- 10: end if
- 11: if then
- 12: Break
- 13: end if
- 14:
- 15: end while
- 16: compute the cost t after , that is
- 17: end for
- 18: end for
- 19: return

Algorithm 5 Finding the set of strings whose choosing cost of the path from the starting node is minimal

Input: and V, where is the number of the elements of H;

Output: the set of strings on the shortest path from the starting node to the ending node.

- 1:
- 2:
- 3: for do
- 4: for do
- 5: if then
- 6:
- 7:
- 8:
- 9: else
- 10: for do
- 11:
- 12:
- 13: end for
- 14:
- 15:
- 16:
- 17: end if
- 18: end for
- 19: end for
- 20:
- 21: for do
- 22:
- 23: end for
- 24:
- 25:
- 26: return

Algorithm 6 Computing optimal weight value between and s

Input: ;

Output: Optimal weight value from node to node s.

- 1:
- 2: for do
- 3: compute the longest common prefix of s and such that and , and
- 4: if then
- 5: compute the cost after ,
- 6:
- 7: else
- 8: if then
- 9:
- 10: Break
- 11: else
- 12:
- 13: end if
- 14: end if
- 15: end for
- 16: return

According to the calculation process of Algorithm 3, we have the following theorem to show the solution of Model (2):

Theorem 4. We consider system G and secret . In Case 3 of Scenario 1, closed-loop behavior produced in Algorithm 3 is an optimal solution of Model (2).

Proof. We first show that closed-loop behavior produced in Algorithm 3 is a feasible solution of optimal Model (2).

- 1. Proof of the opacity (the first constraint condition).

As shown in Case 3, the secret of can be confused by the non-secret strings of . For , all the non-secrets in which cannot be distinguished from the secret in are in of Line 12. At Lines 14 and 15, string is from , where . At Lines 15 and 16, we have and . So, closed-loop behavior produced in Algorithm 3 can ensure the opacity of secret K.

- 2. Proof of the secret remaining in the closed-loop system being maximal (the second constraint condition).

According to Lines 15 and 16, it holds that . So, the second constraint condition is true.

To sum up, the closed-loop behavior obtained in Algorithm 3 is a feasible solution of optimal Model (2).

Secondly, we show that the discount total choosing cost of closed-loop behavior produced by Algorithm 3 is minimal.

Since it holds that , the discount total choosing cost of can be computed as follows:

is finite. To minimize the discount total choosing cost of , we need to show is minimal using Formula (3). As shown in Line 1 of Algorithm 3, it is obvious that language L contains all the non-secrets in , which cannot be distinguished from all the secrets of . So, if we want to make minimal, all the strings s in must come from L. And then holds. According to Lines 3–11 of Algorithm 3, we have . And all the strings in can confuse one secret of and its equivalent secret. Only one string is chosen in each set, of H, which is the necessary condition to minimize .

At Line 13 of Algorithm 3 (i.e., Function 1 of Algorithm 4), all the strings in L are traversed and choosing cost after can be obtained, where and .

At Line 14 of Algorithm 3, a multi-stage diagram is constructed in , where initial node and ending node are virtual, is the set of nodes in the jth stage. To pick only one string in each , we find a path from to . Then, Algorithm 6 is proposed to optimize the weight of the transition from node to node s between adjacent stages. For the optimal weight, it is obvious that the discount total choosing cost of each node (i.e., string) of the path is equal to the total weight of the path (at Line 12 of Algorithm 5). At Lines 3–19 of Algorithm 5, the shortest path and its minimal discount total choosing cost of the jth stage can be obtained by and of the th stage based on dynamic programming. As shown in the above analysis about Line 14 of Algorithm 3 (i.e., Function 2 in Algorithm 5), the first element of the ordered pair () is the shortest path (i.e., the set of strings) with minimal discount total choosing cost from starting node to current node , and the second is the discount total choosing cost of the path. When the current node is (i.e., Line 20 of Algorithm 5), is the shortest path from the initial to the ending node and is the minimal discount total choosing cost of the path (see Lines 21–25 of Algorithm 5). So, is the subset of L, whose discount total choosing cost is minimal and whose strings can confuse all the secrets of .

At Lines 16 and 17 of Algorithm 3, closed-loop behavior can be ensured to be controllable and closed by Corollary 2. The discount total choosing cost is minimal as shown in the above analysis.

All in all, closed-loop behavior produced in Algorithm 3 is an optimal solution of Model (2). □

Example 4. We consider finite transition system and secret shown in Figure 7, where and K can be recognized by . We suppose that the adversary has complete knowledge of the supervisor’s control policy, and the set of events observed by the adversary is . It is verified that K is opaque with respect to and . But K is not opaque with respect to and , i.e., secret cannot be confused by any non-secret of . Case 3 of Scenario 1 is fulfilled and Assumption 1 is true. Next, we construct closed-loop behavior using Algorithm 3. For system G and secret K, we obtain language , where the strings in cannot be confused by any strings of . From the opacity of , we can find sub-language , whose strings cannot be distinguished from the secret in . For language L, we offer the following computational process:

We take , and then we have and .

We take , and then we have and .

So, quotient set is a partition of L.

For , we can compute the choosing cost of the suffix of the non-secret string out of in the first stage (see Function 1 of Algorithm 4).

If , we have and .

If , we have and .

If , we have and .

For coset , we can similarly obtain the following in the second stage:

If , we have and .

If , we have and .

If , we have and .

If , we have and .

Based on and the choosing cost out of above, a weighted directed diagram shown in Figure 8 is constructed using Algorithm 5 calling Algorithm 6. In the diagram, every node denoted by ⊙ is shown as a fraction. For the fraction, its numerator is non-secret string in , and its denominator is , where and . To show the weight between nodes of adjacent stages, some weight is given as follows by Definition 5:

.

By Algorithm 5, label and minimal choosing cost of a path from initial node to current node s are computed in Table 1. In Table 1, we have for ending node . From Line 16 of Algorithm 3, we know that the shortest path is . So, it holds that . Since (by Algorithm 1) is finite, . So, in Line 17, we have shown in Figure 9. In Figure 9, it is verified that by Algorithm 1.

6. Scenario 2: Secret K Is Not Opaque with Respect to L(G) and

For system G and secret , if K is not opaque with respect to and , we need to design a supervisor that prevents any secret from being disclosed. To obtain the supervisor, we can use the method of [1,4] to obtain the maximally permissive sublanguage of which can ensure the opacity of K. Then, Scenario 1 is fulfilled. Next, we propose Algorithm 7 to solve Model (1).

Algorithm 7 Optimal supervisory control II

Input: Automaton G, secret K, and choosing cost ;

Output: Closed-loop behavior .

- 1: Compute the observer of G
- 2: Compute the parallel composition
- 3: For the parallel composition , find supervisor g such that is the maximal permissive closed-loop behavior which can ensure K opaque [1,4].
- 4:
- 5:
- 6: if then
- 7: Design supervisor such that
- 8: else
- 9: if then
- 10: Design supervisor from by Theorem 3, and then output closed-loop behavior
- 11: else
- 12: Perform Algorithm 3 to design supervisor , and then output closed-loop behavior
- 13: end if
- 14: end if
- 15:
- 16: return

According to the above algorithm, we first construct maximally permissive supervisor g to enforce the opacity of K. Then, it is verified that closed-loop behavior and restricted secret meet the requirements of Scenario 1. As shown in Theorems 2, 3, and 4, we can conclude that Algorithm 7 produces an optimal solution of Model (1).

Theorem 5. We consider system G and secret . In Scenario 2, closed-loop behavior obtained in Algorithm 7 is an optimal solution of Model (1).

Proof. Firstly, we prove that is a controllable and closed sublanguage of . As shown in Lines 7, 10, and 12, is a closed sublanguage of . Next, we prove is controllable with respect to .

is a controllable and closed sublanguage of . At Line 15, there exists supervisor f such that .

Secondly, we show that closed-loop behavior produced by Algorithm 7 is a feasible solution of Model (1).

- 1. Showing the opacity of .

In Lines 3–5, it is obvious that is opaque with respect to and . At Lines 6–14, by Theorems 2–4, it holds that is opaque with respect to and , which implies that . According to Lines 4, 5, and 15, we have . Since , we have . So, holds, which means is true. Therefore, K is opaque with respect to and .

- 2. Showing that closed-loop behavior can preserve the maximal secret information.

For system and secret , at Lines 4–14, it is obvious that is a feasible solution of Model (2), which implies that . Since at Line 5 and at Line 15, it holds that , which implies that . Since holds, it is true that . So, we have . Therefore, we have .

To conclude, closed-loop behavior produced by Algorithm 7 is a feasible solution of Model (1).

Finally, we show that the discount total choosing cost of produced by Algorithm 7 is minimal for Model (1).

We assume that closed-loop behavior produced by Algorithm 7 is not the optimal solution of Model (1). So, there exists supervisor such that is a feasible solution of Model (1) and holds. For Model (1), the two constraint conditions are satisfied for . The two conditions mean that is opaque with respect to and , and holds. As shown in Line 5, we have . Taking , it holds that . Based on the assumption about and , we have . Then, we consider the following two cases:

- Case 1. If , we discuss the relation between and .

- 1.1. If , it holds that at Lines 6 and 7, which contradicts the assumption that .

- 1.2. If , it holds that by Theorems 3 and 4 at Lines 9–13, which contradicts the assumption that .

- Case 2. If , it is true that for any because of formulas and , which implies that s is out of . Then, we discuss the relation between and again.

- 2.1. If , there exists such that for any , which means that all the secret strings in can be confused by the non-secret string in . By Corollary 2, it holds that . In Lines 9 and 10, it is true that . So, it holds that , which contradicts the assumption that .

- 2.2. If , we discuss the following two sub-cases:

- 2.2.1. If there exists such that for any , it is obvious that by Corollary 2. At Lines 9, 10 and 15 of Algorithm 7, it holds that . So, it is true that , which contradicts the assumption that .

- 2.2.2. If there exists such that for any , we have the following formulas:

Owing to the definition of feasible solution, we have and . So, it holds that . For the remaining part of Formulas (4) and (5), we construct two weighted directed diagrams and T, where (or T) is produced in Algorithm 5 (e.g., Line 14 of Algorithm 3) if (or ) is inputted in Algorithm 3.

By the constructions of L and H in Algorithm 3, it is obvious that (i.e., is a sub-diagram of T) and that , where (and ) is the weight of arc in diagram (and T, respectively). So, the sum of the weight of the shortest path of T is less than that of , which implies that . According to Formulas (4) and (5), we have , which contradicts the assumption that .

To conclude, it is true that , which implies that the discount total choosing cost of closed-loop behavior produced by Algorithm 7 is minimal for optimal Model (1). □

To show the effectiveness of Algorithm 7, we introduce the model of [1] to compute the optimal choosing control strategy.

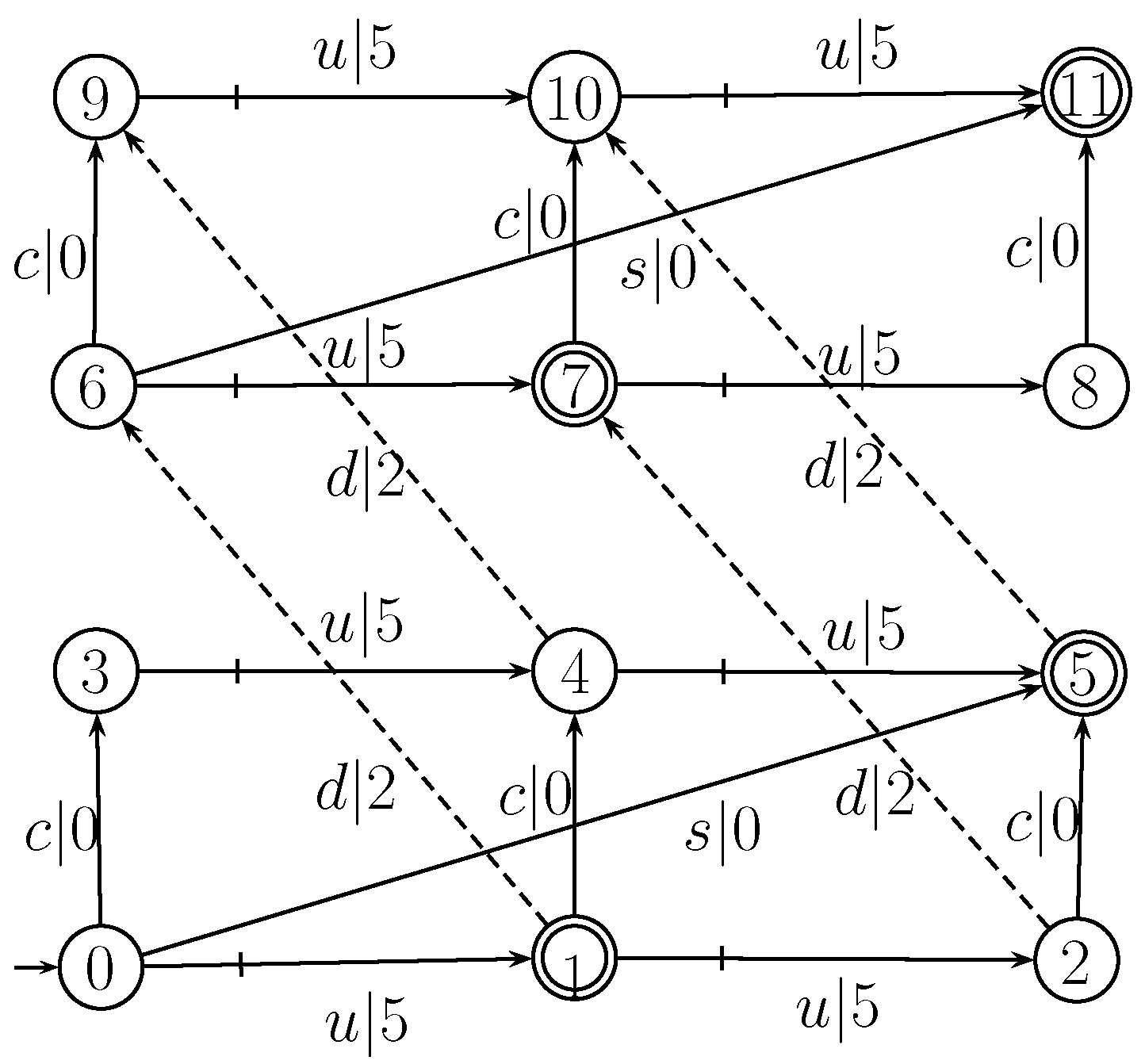

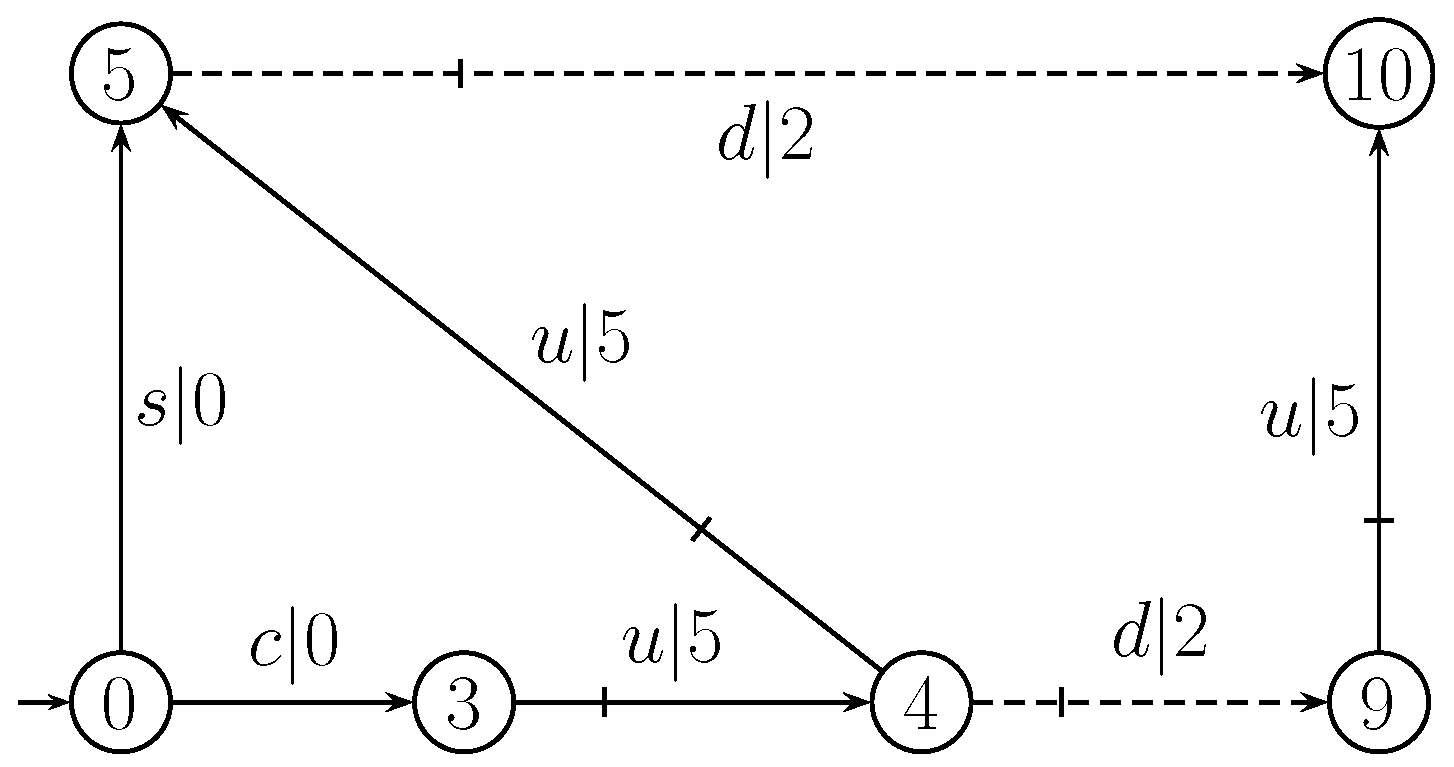

Example 5. We consider transition system G [1] shown in Figure 10, which models all sequences of possible moves of an agent in a three-storey building with a south wing and a north wing, both equipped with lifts and both connected by a corridor at each floor. Moreover, there is a staircase that leads from the first floor in the south wing to the third floor in the north wing. The agent starts from the first floor in the south wing. They can walk up the stairs (s) or walk through the corridors (c) from south to north without any control. The lifts can be used several times one floor upwards (u) and at most once one floor downwards (d) altogether. The moves of the lifts are controllable. Thus, . The secret is that the agent is either on the second floor in the south wing or on the third floor in the north wing, i.e., marked by a double circle. The adversary may gather the exact subsequence of moves in from sensors, but they cannot observe the downwards moves of the lifts. For every transition of system G, the choosing cost is shown in Figure 10. In [1], there is a unique supremal prefix-closed and controllable sublanguage (shown in Figure 11) of such that secret S is opaque with respect to and . So, . We suppose that choosing cost is inserted in G and , shown in Figure 10 and Figure 11, respectively. According to Line 12 of Algorithm 7 (i.e., Algorithm 3), and . By the process of Algorithm 3, we have and , which means there exists only one path (shown in Figure 12) from the starting node to the ending node. So, . At Lines 16 and 17 of Algorithm 3, it is obvious that is shown in Figure 13, which has the minimal discount total choosing cost . The optimal supervisory control defined by prevents the agent from using the lift of the south wing and the lift of the north wing from the second floor to the third floor at any time after they used this lift downwards, as well as the lift of the north wing downwards on the second floor.

7. Conclusions

When opacity-enforcing supervisory control is considered in discrete event systems, another problem arises, namely cost. In practice, we hope to reduce the cost while preserving the opacity of the supervised system. So, an optimal supervisory control model is formulated to enforce opacity by a supervisor with minimal discount total choosing cost. In the model, the objective function is to minimize the discount total choosing cost of the closed-loop behavior, and two constraint conditions are given: one is to enforce the opacity of the closed-loop behavior, and the other is to preserve the maximal part of the secret information in the closed-loop behavior. To solve the above optimal model, some algorithms and theorems are formulated, progressing from simple to complex cases.

In this paper, the plant is modeled by a finite transition system, because the coset and quotient set in Algorithms 3 and 4 may be infinite for a finite state machine. To remove this restriction, in future work we will use finite states in place of infinite event strings and extend the optimal control problem to finite state machines.