An Adaptive State Consistency Architecture for Distributed Software-Defined Network Controllers: An Evaluation and Design Consideration

Abstract

Featured Application

1. Introduction

- a. Static Inter-Controller Synchronization Approach

- b. Adaptive Inter-Controller Synchronization Approach

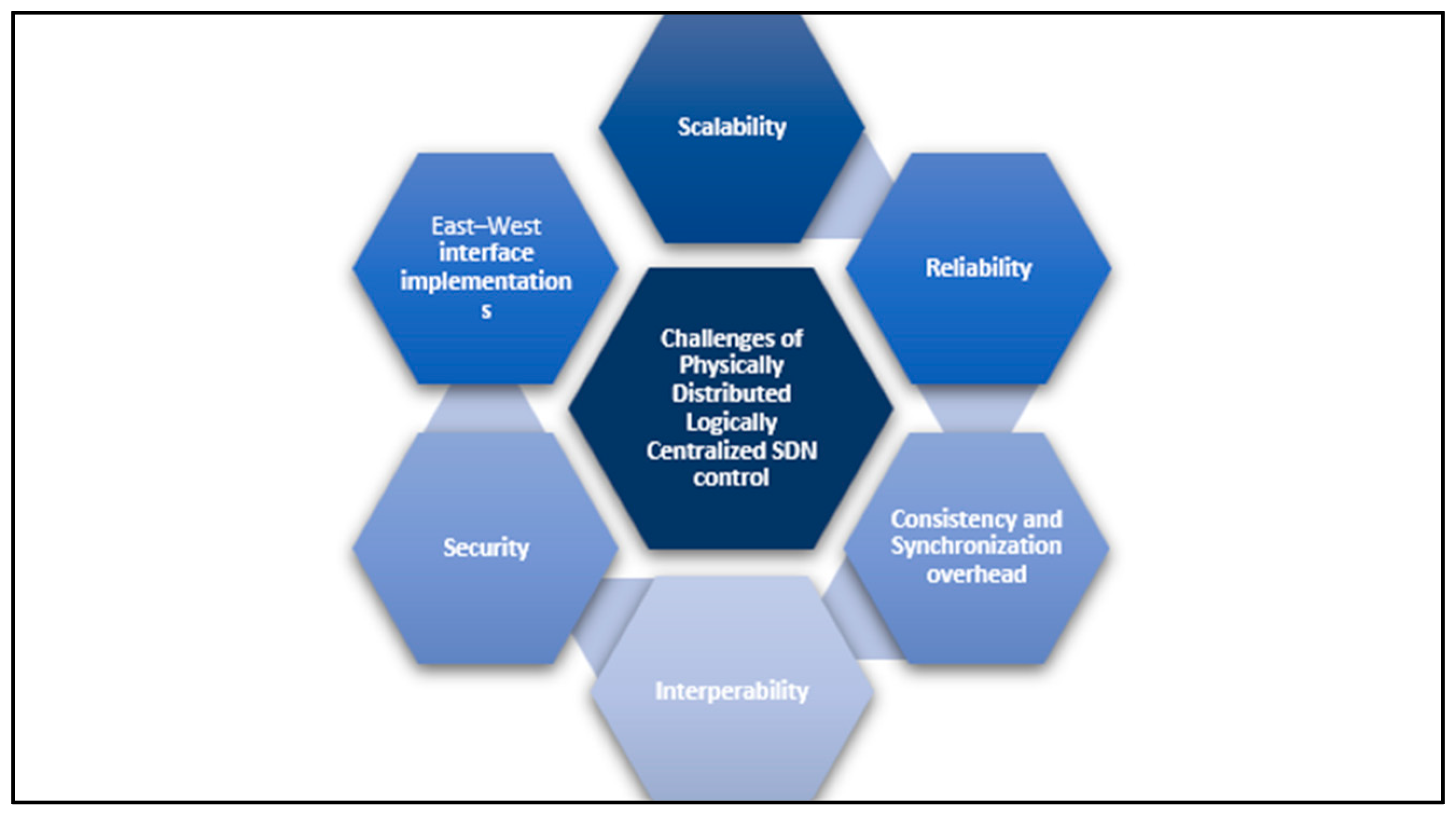

2. Physically Distributed Logically Centralized (PDLC) SDN Architecture and Components

2.1. Data Layer

2.2. Connectivity

2.3. Distributed Control Plane Instances

2.4. Control Logic

2.5. Database

3. Non-AI Consistency Solutions for PDLC SDN Controllers

3.1. Static Consistency Synchronization Techniques

| Architecture | Year | Distributed Architecture | Connectivity Model | Scalability | Robustness | Consistency | Synchronization Approach | Synchronization Overhead | East–West Comm. Protocol | Interoperability | Programming Language |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DSF [34] | 2021 | Log. Centralized | T-Model, Hierarchical, Flat | Medium | Medium | Strong | Static | High | RTPS | No | Java |
| WECAN [1] | 2019 | Log. Centralized | Flat | Low | Medium | Strong | Static | High | FLEX | No | NA |
| VNF-Consensus [8] | 2020 | Log. Centralized | Flat | Medium | Medium | Strong | Static | Low | REST | Yes | Python |

3.2. Adaptive Consistency Synchronization Techniques

3.3. Discussion and Open Issues

- Message passing: Controllers can exchange messages with each other to communicate updates and share information. This can be performed using various messaging protocols such as MQTT, AMQP, or custom protocols implemented over TCP/IP or UDP. For example, ElastiCon [25] employs message passing as the communication approach among the controllers in its pool.

- Publish-subscribe model: Controllers can subscribe to specific topics or events of interest and publish updates on those topics. Other controllers that are subscribed to those topics will receive the updates. This model allows for asynchronous communication and decoupling between controllers. Orion [26] utilizes a publish-subscribe approach for sharing state across its applications.

- Remote procedure calls (RPC): Controllers can expose remote procedures or methods that other controllers can invoke to trigger updates or request information, such as using RESTful APIs. Floodlight [42] is an open-source SDN controller that exposes RESTful APIs for remote procedure calls.

- Shared data store: Controllers can access a shared data store, such as a database or distributed key-value store, to read and write shared information. This shared data store can be a centralized repository where controllers update and retrieve data. HyperFlow [11] falls under both the publish-subscribe and the shared data store categories: it uses the WheelFS distributed file system [43] to read and write shared information for communication and coordination among controllers. In addition to the publish-subscribe model, Orion [26] also employs a centralized, in-memory data store called the Network Information Base (NIB), which acts as a shared state repository across the Orion applications.

- Event-based communication: Controllers can generate and listen to events to communicate updates. Events can be published by one controller and subscribed to by others, allowing for event-driven updates and notifications. ONOS (Open Network Operating System) [24] is an SDN controller that fits into multiple communication approaches. It supports both the “publish-subscribe model” and “event-based communication” approaches. Controllers in the ONOS framework can subscribe to specific topics or events of interest and publish updates on those topics, enabling asynchronous and event-driven communication.
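As a minimal sketch of the publish-subscribe and event-based approaches listed above, the following Python example keeps the local views of two controllers aligned through a shared topic. It is an in-process stand-in only: `EventBus`, `Controller`, `report_link`, and the `"topology"` topic are hypothetical names chosen for illustration and do not correspond to the API of ONOS, Orion, HyperFlow, or any other controller discussed here.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical handler signature: (sender name, state update) -> None
Handler = Callable[[str, dict], None]


class EventBus:
    """In-process stand-in for an east-west publish-subscribe channel."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, sender: str, update: dict) -> None:
        # Deliver the update to every handler registered on the topic.
        for handler in list(self._subscribers[topic]):
            handler(sender, update)


class Controller:
    """Illustrative controller that mirrors peers' updates into a local view."""

    def __init__(self, name: str, bus: EventBus) -> None:
        self.name = name
        self.bus = bus
        self.view: Dict[str, str] = {}  # local copy of the shared network state
        bus.subscribe("topology", self._on_update)

    def _on_update(self, sender: str, update: dict) -> None:
        # Ignore our own events; apply updates published by other controllers.
        if sender != self.name:
            self.view.update(update)

    def report_link(self, link: str, status: str) -> None:
        # Update the local view first, then notify subscribed peers.
        self.view[link] = status
        self.bus.publish("topology", self.name, {link: status})


if __name__ == "__main__":
    bus = EventBus()
    c1, c2 = Controller("c1", bus), Controller("c2", bus)
    c1.report_link("s1-s2", "up")
    print(c2.view)
```

In a real deployment the bus would be replaced by a network transport or a distributed store, and delivery would be asynchronous, which is where the consistency trade-offs discussed in this section arise.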

4. AI-Based Solutions for SDN Consistency Synchronization

4.1. AI-Based SDN-Related Work

4.2. Discussion and Open Issues

5. Future Direction: AI-Based State Consistency Architecture

- Synchronization Module: This module implements an algorithm that dynamically adjusts controllers’ synchronization rates based on network conditions and workload. This algorithm should be capable of learning and adapting over time using AI techniques. The distributed controllers work collaboratively under the guidance of the Synchronization Module to synchronize their activities.

- AI Techniques: Many recent studies, such as [44,45,59], have recommended reinforcement learning (RL) for SDN. There are several reasons for this, including the abundance of data that SDN switches can provide through the OpenFlow protocol and the high heterogeneity of distinct SDN domains. Moreover, SDN networks are complex and intricate systems, so modeling them accurately is mathematically complicated. Because model-free RL techniques place no restrictions on the structure or dynamics of the network, they are particularly appealing and can be applied to SDN networks in real-world scenarios.

- Synchronization Monitoring: Implement a mechanism to monitor the synchronization state between controllers. It can involve tracking performance metrics, latency measurements, and other relevant parameters to ensure effective coordination.

- Network State Collection: Incorporate mechanisms to monitor the network state, traffic patterns, and other relevant metrics. These data can be used as feedback for the AI synchronization algorithm, allowing it to make informed decisions based on the current network conditions.
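To make the interplay of these modules more concrete, the sketch below shows one way a model-free RL agent could select a synchronization rate from an observed network load. It uses tabular Q-learning with an epsilon-greedy policy, chosen here purely for brevity; the architecture above does not prescribe a specific algorithm. The load levels, candidate rates, `toy_reward` function, and alternating workload are all illustrative assumptions, not measurements or a design taken from the cited works.

```python
import random
from typing import Dict, List, Tuple


class SyncRateAgent:
    """Tabular Q-learning agent that maps a load level to a sync rate."""

    def __init__(self, states: List[str], actions: List[int],
                 alpha: float = 0.1, gamma: float = 0.9,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.actions = list(actions)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)  # seeded for repeatable runs
        self.q: Dict[Tuple[str, int], float] = {
            (s, a): 0.0 for s in states for a in self.actions
        }

    def greedy(self, state: str) -> int:
        # Exploitation: the action with the highest current Q-value.
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def choose(self, state: str) -> int:
        # Epsilon-greedy: explore with probability epsilon, else exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.greedy(state)

    def learn(self, state: str, action: int,
              reward: float, next_state: str) -> None:
        # Standard Q-learning temporal-difference update.
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (td_target - self.q[(state, action)])


def toy_reward(load: str, rate: int) -> float:
    """Hypothetical reward: penalize stale state and synchronization overhead."""
    staleness = {"low": 0.5, "high": 8.0}[load] / rate  # faster sync, fresher state
    overhead = 0.5 * rate                               # faster sync, more messages
    return -(staleness + overhead)


def train(agent: SyncRateAgent, steps: int = 20000) -> None:
    load = "low"
    for _ in range(steps):
        rate = agent.choose(load)
        next_load = "high" if load == "low" else "low"  # toy alternating workload
        agent.learn(load, rate, toy_reward(load, rate), next_load)
        load = next_load


if __name__ == "__main__":
    agent = SyncRateAgent(states=["low", "high"], actions=[1, 2, 4], gamma=0.5)
    train(agent)
    print({s: agent.greedy(s) for s in ("low", "high")})
```

In this toy setting the observed load plays the role of Network State Collection, `toy_reward` stands in for Synchronization Monitoring, and the agent corresponds to the Synchronization Module. A realistic design would need a richer state, a reward derived from measured inconsistency and overhead, and most likely function approximation rather than a table.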

5.1. Challenges of AI-Based SDN

5.1.1. New ML Techniques

5.1.2. Dataset Standardization

5.2. Reinforcement Learning (RL) Challenges

5.2.1. Trade-Off between Exploration and Exploitation

5.2.2. The Whole Problem as a Goal-Directed Agent

5.2.3. Continuous Control Problems

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yu, H.; Qi, H.; Li, K. WECAN: An Efficient West-East Control Associated Network for Large-Scale SDN Systems. Mob. Netw. Appl. 2020, 25, 114–124.

2. Keshari, S.K.; Kansal, V.; Kumar, S. A Systematic Review of Quality of Services (QoS) in Software Defined Networking (SDN). Wirel. Pers. Commun. 2021, 116, 2593–2614.

3. Tadros, C.N.; Mokhtar, B.; Rizk, M.R.M. Logically Centralized-Physically Distributed Software Defined Network Controller Architecture. In Proceedings of the 2018 IEEE Global Conference on Internet of Things, GCIoT 2018, Alexandria, Egypt, 5–7 December 2018; IEEE: Piscataway, NJ, USA, 2019.

4. Ahmad, S.; Mir, A.H. Scalability, Consistency, Reliability and Security in SDN Controllers: A Survey of Diverse SDN Controllers. J. Netw. Syst. Manag. 2021, 29, 9.

5. Hoang, N.T.; Nguyen, H.N.; Tran, H.A.; Souihi, S. A Novel Adaptive East–West Interface for a Heterogeneous and Distributed SDN Network. Electronics 2022, 11, 975.

6. Espinel Sarmiento, D.; Lebre, A.; Nussbaum, L.; Chari, A. Decentralized SDN Control Plane for a Distributed Cloud-Edge Infrastructure: A Survey. IEEE Commun. Surv. Tutor. 2021, 23, 256–281.

7. Blial, O.; Ben Mamoun, M.; Benaini, R. An Overview on SDN Architectures with Multiple Controllers. J. Comput. Netw. Commun. 2016, 2016, 9396525.

8. Venâncio, G.; Turchetti, R.C.; Camargo, E.T.; Duarte, E.P. VNF-Consensus: A virtual network function for maintaining a consistent distributed software-defined network control plane. Int. J. Netw. Manag. 2021, 31, e2124.

9. Informatique, S.; Informatique, G. Extending SDN Control to Large-Scale Networks: Taxonomy, Challenges and Solutions; Université Paris-Est Créteil: Créteil, France, 2021.

10. Hussein, A.; Chehab, A.; Kayssi, A.; Elhajj, I. Machine learning for network resilience: The start of a journey. In Proceedings of the 2018 5th International Conference on Software Defined Systems, Barcelona, Spain, 23–26 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 59–66.

11. Tootoonchian, A.; Ganjali, Y. HyperFlow: A distributed control plane for OpenFlow. In Proceedings of the 2010 Internet Network Management Workshop/Workshop on Research on Enterprise Networking, INM/WREN 2010, San Jose, CA, USA, 27 April 2010.

12. Ts, O.N.F. Reference Design SDN Enabled Broadband Access. 2019. Available online: http://www.opennetworking.org (accessed on 10 March 2023).

13. Aslan, M.; Matrawy, A. Adaptive consistency for distributed SDN controllers. In Proceedings of the 2016 17th International Telecommunications Network Strategy and Planning Symposium, Networks, Montreal, QC, Canada, 26–28 September 2016; pp. 150–157.

14. Panda, A.; Scott, C.; Ghodsi, A.; Koponen, T.; Shenker, S. CAP for networks. In Proceedings of the ACM SIGCOMM Workshop on Hot Topics in Software Defined Networking, HotSDN 2013, Hong Kong, China, 16 August 2013; pp. 91–96.

15. Oktian, Y.E.; Lee, S.G.; Lee, H.J.; Lam, J.H. Distributed SDN controller system: A survey on design choice. Comput. Netw. 2017, 121, 100–111.

16. Levin, D.; Wundsam, A.; Heller, B.; Handigol, N.; Feldmann, A. Logically centralized? State distribution trade-offs in software defined networks. In Proceedings of the 1st Workshop on Hot Topics in Software Defined Networks, HotSDN’12, Helsinki, Finland, 13 August 2012; pp. 1–6.

17. Foerster, K.T.; Schmid, S.; Vissicchio, S. Survey of Consistent Software-Defined Network Updates. IEEE Commun. Surv. Tutor. 2019, 21, 1435–1461.

18. Bannour, F.; Souihi, S.; Mellouk, A. Adaptive State Consistency for Distributed ONOS Controllers. In Proceedings of the 2018 IEEE Global Communications Conference, Abu Dhabi, United Arab Emirates, 9–13 December 2018.

19. Sakic, E.; Sardis, F.; Guck, J.W.; Kellerer, W. Towards adaptive state consistency in distributed SDN control plane. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017.

20. Akyildiz, I.F.; Lee, A.; Wang, P.; Luo, M.; Chou, W. A roadmap for traffic engineering in software defined networks. Comput. Netw. 2014, 71, 1–30.

21. Yao, H.; Jiang, C.; Qian, Y. (Eds.) Developing Networks Using Artificial Intelligence, 1st ed.; Springer International Publishing: Cham, Switzerland, 2019; p. 8. ISBN 978-3-030-15028-0.

22. Koponen, T.; Casado, M.; Gude, N.; Stribling, J.; Poutievski, L.; Zhu, M.; Ramanathan, R.; Iwata, Y.; Inoue, H.; Hama, T.; et al. Onix: A Distributed Control Platform for Large-Scale Production Networks. In Proceedings of the 9th USENIX Conference on Operating Systems Design and Implementation, Vancouver, BC, Canada, 4–6 October 2010; Volume 10, pp. 1–14. Available online: http://dl.acm.org/citation.cfm?id=1924943.1924968 (accessed on 26 June 2022).

23. Alowa, A.; Fevens, T. Towards minimum inter-controller delay time in software defined networking. Procedia Comput. Sci. 2020, 175, 395–402.

24. Open Network Operating System (ONOS). SDN Controller for SDN/NFV Solutions. In Proceedings of the ACM/IEEE Symposium on Architectures for Networking and Communications Systems, Los Angeles, CA, USA, 20–21 October 2014; Available online: https://opennetworking.org/onos/ (accessed on 26 June 2022).

25. Dixit, A.; Hao, F.; Mukherjee, S.; Lakshman, T.V.; Kompella, R.R. ElastiCon: An elastic distributed SDN controller. In Proceedings of the 10th ACM/IEEE Symposium on Architectures for Networking and Communications Systems, ANCS 2014, Marina del Rey, CA, USA, 20–21 October 2014; pp. 17–27.

26. Ferguson, A.D.; Gribble, S.; Hong, C.Y.; Killian, C.; Mohsin, W.; Muehe, H.; Ong, J.; Poutievski, L.; Singh, A.; Vicisano, L.; et al. Orion: Google’s Software-Defined Networking Control Plane. In Proceedings of the 2021 18th USENIX Symposium on Networked Systems Design and Implementation, NSDI 2021, Virtual, 12–14 April 2021; pp. 83–98.

27. Bannour, F.; Souihi, S.; Mellouk, A. Distributed SDN Control: Survey, Taxonomy, and Challenges. IEEE Commun. Surv. Tutor. 2018, 20, 333–354.

28. Hu, J.; Lin, C.; Li, X.; Huang, J. Scalability of control planes for software defined networks: Modeling and evaluation. In Proceedings of the IEEE 22nd International Symposium of Quality of Service (IWQoS), Hong Kong, China, 26–27 May 2014; pp. 147–152.

29. Remigio Da Silva, E.; Endo, P.T.; De Queiroz Albuquerque, E. Standardization for evaluating software-defined networking controllers. In Proceedings of the 2017 8th International Conference on the Network of the Future (NOF), London, UK, 22–24 November 2017; pp. 135–137.

30. European Commission. Technology Readiness Levels (TRL). Horizon 2020—Work Program. 2014–2015 Gen. Annex. Extr. from Part 19—Comm. Decis. C. 2014. Available online: http://ec.europa.eu/research/participants/data/ref/h2020/wp/2014_2015/annexes/h2020-wp1415 (accessed on 26 June 2022).

31. Jain, S.; Smith, T. Googles SDN. J. Netw. Eng. 2013, 5, 3–14.

32. Hong, C.-Y.; Lee, D.; Kim, E. SDWAN: Achieving High Utilization. In Proceedings of the ACM SIGCOMM 2013 Conference on SIGCOMM, SIGCOMM ’13, Hong Kong, China, 12–16 August 2013; ACM Press: New York, NY, USA, 2013; Volume 43, p. 15.

33. Qiu, T.; Qiao, R.; Wu, D.O. EABS: An event-aware backpressure scheduling scheme for emergency internet of things. IEEE Trans. Mob. Comput. 2018, 17, 72–84.

34. Almadani, B.; Beg, A.; Mahmoud, A. DSF: A Distributed SDN Control Plane Framework for the East/West Interface. IEEE Access 2021, 9, 26735–26754.

35. Cai, Z.; Cox, A.; Ng, E.T.S. Maestro: A System for Scalable OpenFlow Control. Cs.Rice.Edu. 2011. Available online: http://www.cs.rice.edu/~eugeneng/papers/TR10-11.pdf (accessed on 10 March 2024).

36. Rajsbaum, S. ACM SIGACT news distributed computing column 13. ACM SIGACT News 2003, 34, 53–56.

37. Benamrane, F.; Ben Mamoun, M.; Benaini, R. An East-West interface for distributed SDN control plane: Implementation and evaluation. Comput. Electr. Eng. 2017, 57, 162–175.

38. Adedokun, E.A.; Adekale, A. Development of a Modified East-West Interface for Distributed Control Plane Network. Arid. Zone J. Eng. Technol. Environ. 2019, 15, 242–254. Available online: www.azojete.com.ng (accessed on 12 January 2022).

39. Abdelsalam, M.A. Network Application Design Challenges and Solutions in SDN. Ph.D. Thesis, Carleton University, Ottawa, ON, Canada, 2018.

40. Aslan, M.; Matrawy, A. A Clustering-based Consistency Adaptation Strategy for Distributed SDN Controllers. In Proceedings of the 2018 4th IEEE Conference on Network Softwarization, NetSoft 2018, Montreal, QC, Canada, 25–29 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 257–261.

41. Aslan, M.; Matrawy, A. On the impact of network state collection on the performance of SDN applications. IEEE Commun. Lett. 2016, 20, 5–8.

42. Floodlight Controller—Confluence. Available online: https://floodlight.atlassian.net/wiki/spaces/floodlightcontroller/overview (accessed on 25 June 2022).

43. Stribling, J.; Sovran, Y.; Zhang, I.; Pretzer, X.; Li, J.; Kaashoek, M.F.; Morris, R.T. Flexible, wide-area storage for distributed systems with WheelFS. In Proceedings of the 6th USENIX Symposium on Networked Systems Design and Implementation, NSDI 2009, Boston, MA, USA, 22–24 April 2009; USENIX Association: Berkeley, CA, USA, 2009; pp. 43–58.

44. Zhang, Z.; Ma, L.; Poularakis, K.; Leung, K.K.; Wu, L. DQ Scheduler: Deep Reinforcement Learning Based Controller Synchronization in Distributed SDN. In Proceedings of the ICC 2019–2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019.

45. Zhang, Z.; Ma, L.; Poularakis, K.; Leung, K.K.; Tucker, J.; Swami, A. MACS: Deep reinforcement learning based SDN controller synchronization policy design. In Proceedings of the 2019 IEEE 27th International Conference on Network Protocols, Chicago, IL, USA, 7–10 October 2019; IEEE: Piscataway, NJ, USA, 2019.

46. Mestres, A.; Rodriguez-Natal, A.; Carner, J.; Barlet-Ros, P.; Alarcón, E.; Solé, M.; Muntés-Mulero, V.; Meyer, D.; Barkai, S.; Hibbett, M.J.; et al. Knowledge-defined networking. Comput. Commun. Rev. 2017, 47, 2–10.

47. Clark, D.D.; Partridge, C.; Christopher Ramming, J.; Wroclawski, J.T. A Knowledge Plane for the Internet. Comput. Commun. Rev. 2003, 33, 3–10.

48. Aouedi, O.; Piamrat, K.; Parrein, B. Intelligent Traffic Management in Next-Generation Networks. Futur. Internet 2022, 14, 44.

49. Mohmmad, S.; Shankar, K.; Chanti, Y. AI Based SDN Technology Integration with their Challenges and Opportunities. Asian J. Comput. Sci. Technol. 2019, 8, 165–169.

50. Andrew, A.M. Reinforcement Learning: An Introduction. Kybernetes 1998, 27, 1093–1096.

51. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

52. Li, Y.; Su, X.; Ding, A.Y.; Lindgren, A.; Liu, X.; Prehofer, C.; Riekki, J.; Rahmani, R.; Tarkoma, S.; Hui, P. Enhancing the Internet of Things with Knowledge-Driven Software-Defined Networking Technology: Future Perspectives. Sensors 2020, 20, 3459.

53. Huang, H.; Yin, H.; Min, G.; Jiang, H.; Zhang, J.; Wu, Y. Data-Driven Information Plane in Software-Defined Networking. IEEE Commun. Mag. 2017, 55, 218–224.

54. Fang, C.; Guo, S.; Wang, Z.; Huang, H.; Yao, H.; Liu, Y. Data-driven intelligent future network: Architecture, use cases, and challenges. IEEE Commun. Mag. 2019, 57, 34–40.

55. Chemalamarri, V.D.; Braun, R.; Lipman, J.; Abolhasan, M. A Multi-agent Controller to enable Cognition in Software Defined Networks. In Proceedings of the 28th International Telecommunication Networks and Application Conference, ITNAC 2018, Sydney, Australia, 21–23 November 2018; IEEE: Piscataway, NJ, USA, 2019.

56. Hussein, A.; Salman, O.; Chehab, A.; Elhajj, I.; Kayssi, A. Machine learning for network resiliency and consistency. In Proceedings of the 2019 6th International Conference on Software Defined Systems, SDS 2019, Rome, Italy, 10–13 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 146–153.

57. Wang, Z.; Schaul, T.; Hessel, M.; Van Hasselt, H.; Lanctot, M.; De Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning, ICML 2016, New York, NY, USA, 20–22 June 2016; Volume 4, pp. 2939–2947.

58. Tavakoli, A.; Pardo, F.; Kormushev, P. Action branching architectures for deep reinforcement learning. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4131–4138.

59. Sun, P.; Guo, Z.; Wang, G.; Lan, J.; Hu, Y. MARVEL: Enabling controller load balancing in software-defined networks with multi-agent reinforcement learning. Comput. Netw. 2020, 177, 107230.

60. Konda, V.R.; Tsitsiklis, J.N. Actor-Critic Algorithms; Laboratory for Information and Decision Systems, Massachusetts Institute of Technology: Cambridge, MA, USA, 2001.

61. Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 2000, 12, 1057–1063.

62. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, 2–4 May 2016.

| Distributed Controller | Year | Distributed Architecture | Connectivity Model | Scalability | Robustness | Consistency Model | Sync. Approach | Sync. Overhead | Interoperability | TRL | East–West Comm. Protocol | Programming Language |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HyperFlow [11] | 2010 | Log. Centralized | Flat | Medium | Low | Eventual | Static | Medium | No | TRL3 | Broker-based P2P | C++ |
| ONIX [22] | 2010 | Log. Centralized | Flat | Medium/Low | Medium | Weak/Strong | Static | Medium/High | No | TRL7 | ZooKeeper API | Python or C |
| ONOS [24] | Dec. 2014 | Log. Centralized | Flat | Medium/Low | Medium | Medium | Static | Medium/High | No | TRL9 | Atomix DB (RAFT Algorithm) | Java |
| ElastiCon [25] | 2014 | Log. Centralized | Mesh | Medium | Undefined | Eventual | Static | Medium | No | TRL3 | DB and TCP Channel | Java |
| Orion [26] | 2021 | Log. Centralized | Hybrid | High | Undefined | Eventual | Static | Medium | No | TRL3 | Publish-Subscribe DB (NIB) | C++ |

| Architecture | Year | Distributed Architecture | Connectivity Model | Scalability | Robustness | Consistency | Synchronization Approach | Synchronization Overhead | East–West Comm. Protocol | Interoperability | Programming Language |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Adaptive State Consistency for Distributed ONOS Controllers | 2018 | Log. Centralized | Flat | Medium | Medium | Eventual | Continuous Adaptive | Medium | Events shared by the distributed core of ONOS | No (Homogeneous) | Java |
| Towards Adaptive State Consistency in Distributed SDN Control Plane | 2017 | Log. Centralized | Flat | High | Medium | Eventual | Continuous Adaptive | Low | Event-based | NA | NA |
| Adaptive Consistency for Distributed SDN Controllers | 2016 | Log. Centralized | Flat | High | Medium | Eventual | Adaptive | Low | Not specified | Yes | Python |

| Architecture | Year | Distributed Architecture | Connectivity Model | Scalability | Robustness | Consistency | Synchronization Approach | Synchronization Overhead | East–West Comm. Protocol | Interoperability | Programming Language |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A Multi-agent Controller to enable Cognition in Software Defined Networks | 2018 | Log. Centralized (single controller) | Single controller | Very low (one SDN controller) | Low | Eventual | Adaptive | NA | No (single controller) | No | GOAL agent programming language; GOAL uses Prolog to represent knowledge |
| Machine Learning for Network Resiliency and Consistency | 2019 | Log. Centralized (single controller) | Flat | Very low (one SDN controller) | Low | Strong (for security architecture) | Adaptive | No overhead (only one controller) | No | Not between different controllers (a REST interface provides interoperability between the SDN controller and the ARS system, allowing it to handle heterogeneous SDN systems with controllers from various vendors) | NA |
| DQ Scheduler: Deep Reinforcement Learning Based Controller Synchronization in Distributed SDN | 2018 | Log. Centralized | Flat | High | Medium | Eventual | Adaptive | Low | NA | Yes | Python |
| MACS: Deep Reinforcement Learning Based SDN Controller Synchronization Policy Design | 2019 | Log. Centralized | Flat | High | Medium | Eventual | Adaptive | Very Low | Domain controllers broadcast or receive control plane messages carrying the selected up-to-date synchronization information | Yes | Python |
| MARVEL: Enabling controller load balancing in software-defined networks with multi-agent reinforcement learning | 2020 | Log. Centralized | Mesh | High | High | Eventual | Adaptive | Low | Event-based: as in ONOS, local controller state data are sent throughout the cluster via shared events | Yes | Python |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alsheikh, R.; Fadel, E.; Akkari, N. An Adaptive State Consistency Architecture for Distributed Software-Defined Network Controllers: An Evaluation and Design Consideration. Appl. Sci. 2024, 14, 2627. https://doi.org/10.3390/app14062627
