An Introduction to Machine and Deep Learning Methods for Cloud Masking Applications

Abstract

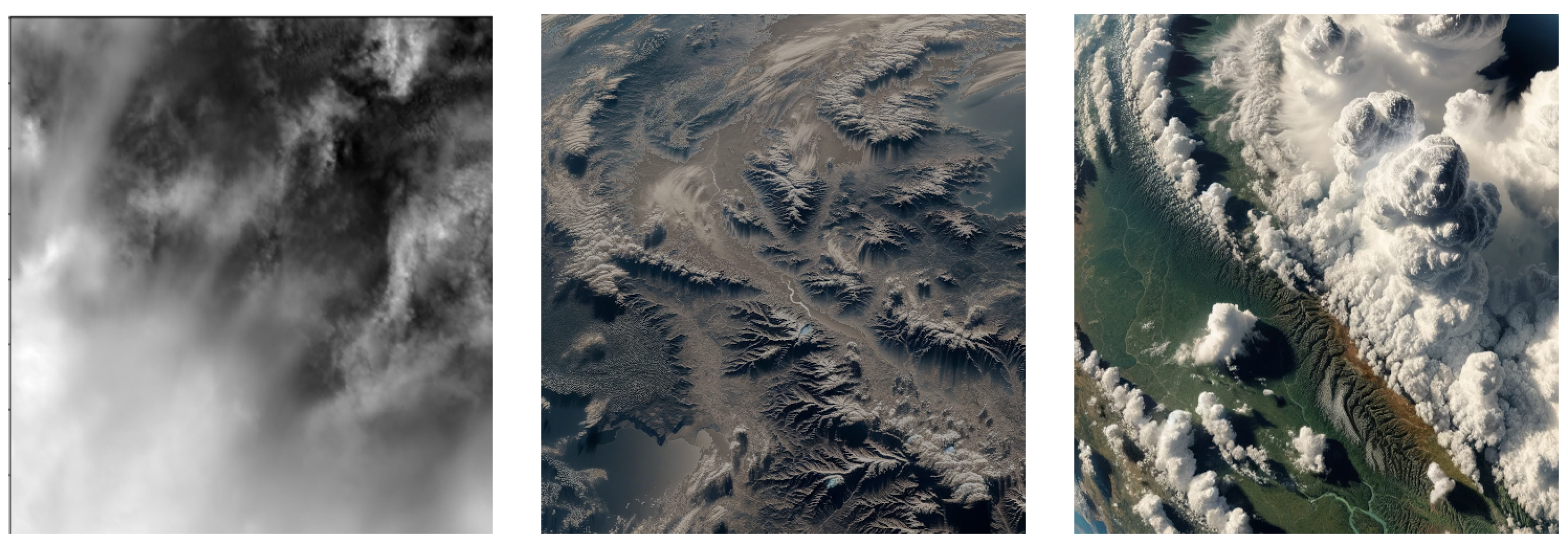

1. Introduction

2. Thresholding Methods

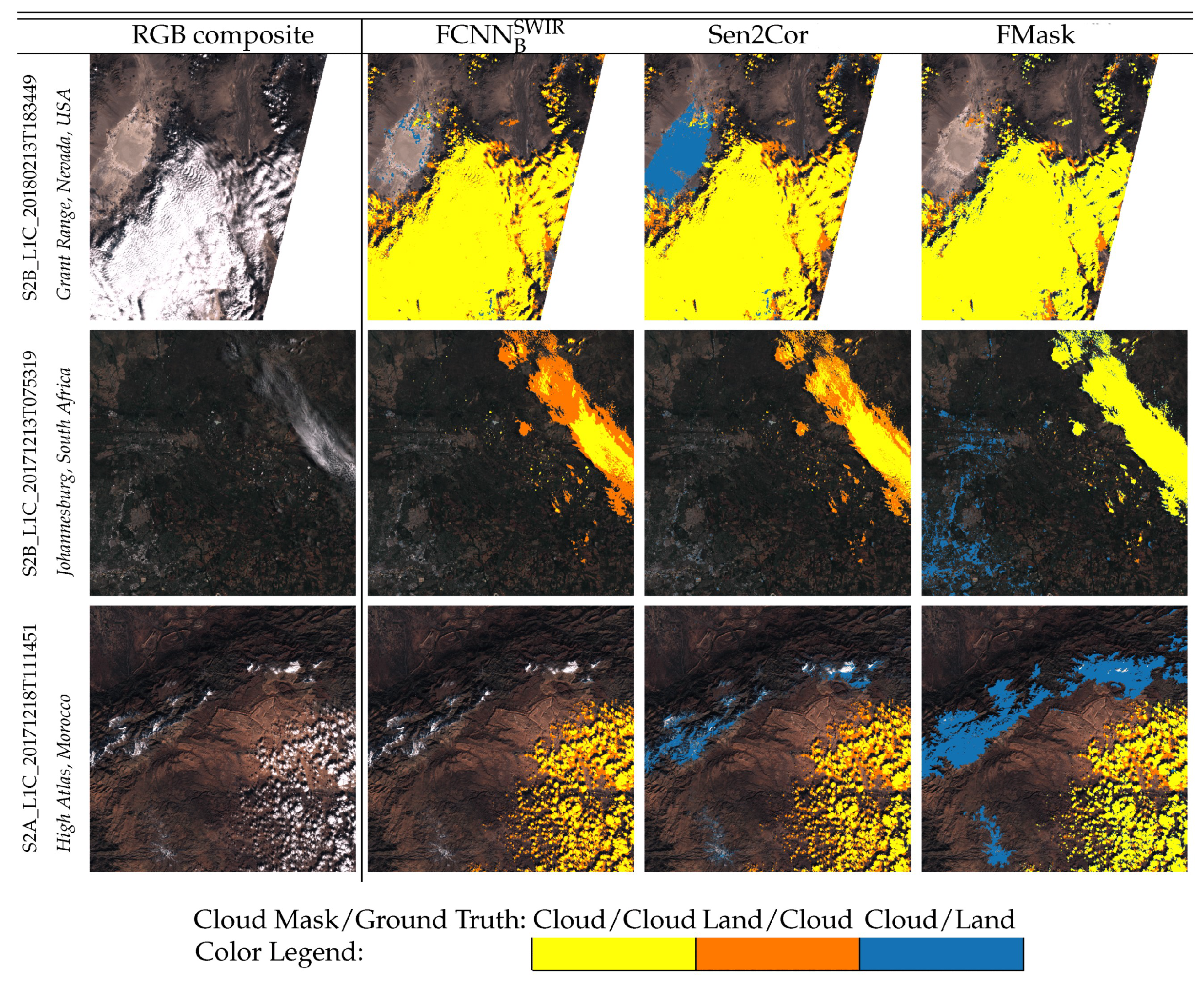

3. Machine Learning Methods

3.1. Machine Learning Algorithms

3.2. Advantages and Challenges of Traditional Machine Learning for Cloud Masking

4. Deep Learning Methods

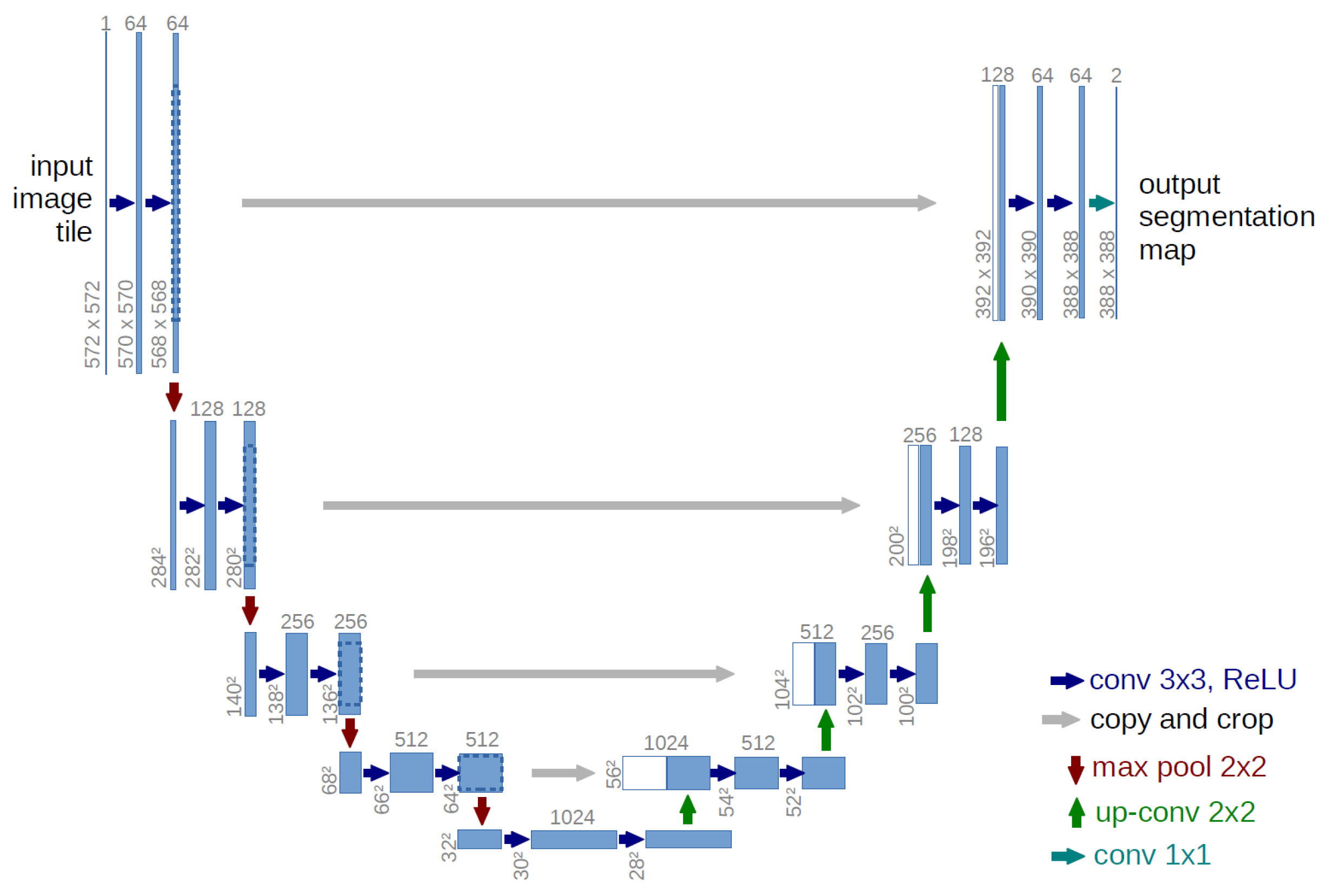

4.1. Deep Learning for Semantic Segmentation

4.2. Deep Learning for Cloud Masking

4.3. Datasets and Performance Metrics

5. Most Significant Challenges Related to Data Quality and Labelled Data

- Variability of the publicly available annotated datasets: Publicly available annotated datasets are used to train and test models. Building large, high-quality datasets requires expertise and time-consuming processes, and inconsistent labeling, especially in ambiguous situations, complicates model training and evaluation [95]. In addition, the resulting annotated masks are generally specific to a particular sensor. Most currently available annotated masks differ in format, resolution, class types, and data volume, cover a non-exhaustive set of geographic scenes, and often lack labels for thin clouds or cloud shadows. These differences make it difficult to exploit the available data jointly. Finally, not all the reference data used by some developers are freely available.

- Diversity of labelled data: The diversity of the labelled data is another important factor that affects the accuracy and generalization performance of the model. The labelled data should be diverse enough to capture the variability and complexity of cloud patterns and atmospheric conditions. However, it can be challenging to obtain diverse labelled data, especially for rare or extreme cloud events.

- Data imbalance: Another challenge is the imbalance between cloud and non-cloud regions in the input data. Cloud regions are often less frequent than non-cloud regions, which can bias the model toward the majority class and degrade its performance. Techniques such as data augmentation and resampling can help to address this issue, but they can also introduce new sources of error and uncertainty.

- Sensor-dependent data: Each dataset is tied to the spatial and spectral characteristics of the sensor that acquired it.
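
The resampling strategy mentioned under data imbalance can be illustrated with a minimal Python sketch. It assumes binary masks stored as NumPy arrays (1 = cloud, 0 = clear) and draws training patches with a probability that grows with their cloud content. The helper names and the `floor` parameter are hypothetical and chosen only for illustration; this is not a method described in the text.

```python
import numpy as np


def cloud_fraction(masks: np.ndarray) -> np.ndarray:
    """Per-patch share of cloud pixels; `masks` has shape (N, H, W) with 1 = cloud, 0 = clear."""
    return masks.reshape(masks.shape[0], -1).mean(axis=1)


def sampling_probabilities(masks: np.ndarray, floor: float = 0.05) -> np.ndarray:
    """Probability of drawing each patch, increasing with its cloud share.

    `floor` is a hypothetical tuning knob: it keeps fully clear patches drawable,
    so the sampler rebalances the classes without discarding clear scenes.
    """
    weights = cloud_fraction(masks) + floor
    return weights / weights.sum()


def expected_cloud_share(masks: np.ndarray, probs: np.ndarray) -> float:
    """Expected share of cloud pixels in a patch drawn with probabilities `probs`."""
    return float(np.dot(probs, cloud_fraction(masks)))


if __name__ == "__main__":
    # Toy set of ten 4x4 patches: nine fully clear, one half covered by cloud.
    masks = np.zeros((10, 4, 4), dtype=np.uint8)
    masks[9, :2, :] = 1

    uniform = np.full(len(masks), 1 / len(masks))
    weighted = sampling_probabilities(masks)
    print(round(expected_cloud_share(masks, uniform), 3))   # 0.05
    print(round(expected_cloud_share(masks, weighted), 3))  # 0.275

    # Indices of patches for one training batch, drawn with replacement.
    rng = np.random.default_rng(seed=0)
    batch = rng.choice(len(masks), size=8, replace=True, p=weighted)
    print(batch)
```

In the toy example the expected cloud share rises from 5% to 27.5%. Because sampling is done with replacement, the single cloudy patch is repeated many times, which illustrates how resampling can itself introduce new errors (for example, overfitting to a few cloudy scenes); it is therefore often combined with augmentation of the repeated patches.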

6. Challenges and Future Prospects in Cloud Masking Applications

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lefebvre, A.; Sannier, C.; Corpetti, T. Monitoring Urban Areas with Sentinel-2A Data: Application to the Update of the Copernicus High Resolution Layer Imperviousness Degree. Remote Sens. 2016, 8, 606. [Google Scholar] [CrossRef]

- Zhao, S.; Liu, M.; Tao, M.; Zhou, W.; Lu, X.; Xiong, Y.; Li, F.; Wang, Q. The role of satellite remote sensing in mitigating and adapting to global climate change. Sci. Total Environ. 2023, 904, 166820. [Google Scholar] [CrossRef] [PubMed]

- Li, Z.; Shen, H.; Weng, Q.; Zhang, Y.; Dou, P.; Zhang, L. Cloud and cloud shadow detection for optical satellite imagery: Features, algorithms, validation, and prospects. ISPRS J. Photogramm. Remote Sens. 2022, 188, 89–108. [Google Scholar] [CrossRef]

- King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and Temporal Distribution of Clouds Observed by MODIS Onboard the Terra and Aqua Satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852. [Google Scholar] [CrossRef]

- Pierre Auger Observatory. Available online: https://www.auger.org/ (accessed on 1 March 2024).

- JEM-EUSO Joint Experiment Missions for Extreme Universe Space Observatory. Available online: https://www.jemeuso.org/ (accessed on 1 March 2024).

- Mini-EUSO. Available online: http://jem-euso.roma2.infn.it (accessed on 1 March 2024).

- Aielli, G.; Bacci, C.; Bartoli, B.; Bernardini, P.; Bi, X.J.; Bleve, C.; Branchini, P.; Budano, A.; Bussino, S.; Melcarne, A.C.; et al. Highlights from the ARGO-YBJ experiment. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2012, 661 (Suppl. S1), S50–S55. [Google Scholar] [CrossRef]

- Vercellone, S.; Bigongiari, C.; Burtovoi, A.; Cardillo, M.; Catalano, O.; Franceschini, A.; Lombardi, S.; Nava, L.; Pintore, F.; Stamerra, A.; et al. ASTRI Mini-Array core science at the Observatorio del Teide. J. High Energy Astrophys. 2022, 35, 1–42. [Google Scholar] [CrossRef]

- Anzalone, A.; Bertaina, M.E.; Briz, S.; Cassardo, C.; Cremonini, R.; de Castro, A.J.; Ferrarese, S.; Isgrò, F.; López, F.; Tabone, I. Methods to Retrieve the Cloud-Top Height in the Frame of the JEM-EUSO Mission. IEEE Trans. Geosci. Remote Sens. 2019, 57, 304–318. [Google Scholar] [CrossRef]

- Anzalone, A.; Bruno, A.; Isgrò, F. Measurements of High Energy Cosmic Rays and Cloud presence: A method to estimate Cloud Coverage in Space and Ground-Based Infrared Images. Nucl. Part. Phys. Proc. 2019, 306–308, 116–123. [Google Scholar] [CrossRef]

- Fu, C.L.; Cheng, H.Y. Predicting solar irradiance with all-sky image features via regression. Sol. Energy 2013, 97, 537–550. [Google Scholar] [CrossRef]

- Park, S.; Kim, Y.; Ferrier, N.J.; Collis, S.M.; Sankaran, R.; Beckman, P.H. Prediction of Solar Irradiance and Photovoltaic Solar Energy Product Based on Cloud Coverage Estimation Using Machine Learning Methods. Atmosphere 2021, 12, 395. [Google Scholar] [CrossRef]

- Mustaza, M.S.; Latip, M.F.A.; Zaini, N.; Asmat, A.; Norhazman, H. Cloud Cover Profile using Cloud Detection Algorithms towards Energy Forecasting in Photovoltaic (PV) Systems. In Proceedings of the 2019 IEEE 7th Conference on Systems, Process and Control (ICSPC), Melaka, Malaysia, 13–14 December 2019; pp. 102–107. [Google Scholar] [CrossRef]

- Wulder, M.A.; Masek, J.G.; Cohen, W.B.; Loveland, T.R.; Woodcock, C.E. Opening the archive: How free data has enabled the science and monitoring promise of Landsat. Remote Sens. Environ. 2012, 122, 2–10. [Google Scholar] [CrossRef]

- Rossow, W.B.; Garder, L.C. Cloud detection using satellite measurements of infrared and visible radiances for ISCCP. J. Clim. 1993, 6, 2341–2369. [Google Scholar] [CrossRef]

- Saunders, R.W.; Kriebel, K.T. An improved method for detecting clear sky and cloudy radiances from AVHRR data. Int. J. Remote Sens. 1988, 9, 123–150. [Google Scholar] [CrossRef]

- Ackerman, S.A.; Strabala, K.I.; Menzel, W.P.; Frey, R.A.; Moeller, C.C.; Gumley, L.E. Discriminating clear sky from clouds with MODIS. J. Geophys. Res. 1998, 103, 32141–32157. [Google Scholar] [CrossRef]

- Ackerman, S.A.; Frey, R.A.; Strabala, K.; Liu, Y.; Gumley, L.E.; Baum, B.; Menzel, P. Discriminating Clear-Sky from Cloud with MODIS Algorithm Theoretical Basis Document (MOD35 v.6.1). Available online: https://atmosphere-imager.gsfc.nasa.gov/sites/default/files/ModAtmo/MOD35_ATBD_Collection6_1.pdf (accessed on 1 March 2024).

- Sakaida, F.; Hosoda, K.; Moriyama, M.; Murakami, H.; Mukaida, A.; Kawamura, H. Sea surface temperature observation by Global Imager (GLI)/ADEOS-II: Algorithm and accuracy of the product. J. Oceanogr. 2006, 62, 311–319. [Google Scholar] [CrossRef]

- Inoue, T. On the Temperature and Effective Emissivity Determination of Semi-Transparent Cirrus Clouds by Bi-Spectral Measurements in the 10 μm Window Region. J. Meteorol. Soc. Jpn. 1985, 63, 88–99. [Google Scholar] [CrossRef]

- Heidinger, A.K.; Pavolonis, M.J. Gazing at Cirrus Clouds for 25 Years through a Split Window. Part I: Methodology. J. Appl. Meteorol. Climatol. 2009, 48, 1100. [Google Scholar] [CrossRef]

- Jiao, Y.; Zhang, M.; Wang, L.; Qin, W. A New Cloud and Haze Mask Algorithm from Radiative Transfer Simulations Coupled with Machine Learning. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16. [Google Scholar] [CrossRef]

- Qiu, S.; Zhu, Z.; Woodcock, C.E. Cirrus clouds that adversely affect Landsat 8 images: What are they and how to detect them? Remote Sens. Environ. 2020, 246, 111884. [Google Scholar] [CrossRef]

- Istomina, L.; Marks, H.; Huntemann, M.; Heygster, G.; Spreen, G. Improved cloud detection over sea ice and snow during Arctic summer using MERIS data. Atmos. Meas. Tech. 2020, 13, 6459–6472. [Google Scholar] [CrossRef]

- Zhu, Z.; Wang, S.; Woodcock, C.E. Improvement and expansion of the Fmask algorithm: Cloud, cloud shadow, and snow detection for Landsats 4–7, 8, and Sentinel 2 images. Remote Sens. Environ. 2015, 159, 269–277. [Google Scholar] [CrossRef]

- Foga, S.; Scaramuzza, P.L.; Guo, S.; Zhu, Z.; Dilley, R.D.; Beckmann, T.; Schmidt, G.L.; Dwyer, J.L.; Hughes, M.J.; Laue, B. Cloud detection algorithm comparison and validation for operational Landsat data products. Remote Sens. Environ. 2017, 194, 379–390. [Google Scholar] [CrossRef]

- Stillinger, T.; Roberts, D.A.; Collar, N.M.; Dozier, J. Cloud Masking for Landsat 8 and MODIS Terra Over Snow-Covered Terrain: Error Analysis and Spectral Similarity between Snow and Cloud. Water Resour. Res. 2019, 55, 6169–6184. [Google Scholar] [CrossRef] [PubMed]

- Melchiorre, A.; Boschetti, L.; Roy, D.P. Global evaluation of the suitability of MODIS-Terra detected cloud cover as a proxy for Landsat 7 cloud conditions. Remote Sens. 2020, 12, 202. [Google Scholar] [CrossRef]

- Landsat Satellites. Available online: https://landsat.gsfc.nasa.gov/satellites/ (accessed on 1 March 2024).

- Sentinel Satellites. Available online: https://sentinels.copernicus.eu/web/sentinel/ (accessed on 1 March 2024).

- Zhu, Z.; Woodcock, C.E. Object-based cloud and cloud shadow detection in Landsat imagery. Remote Sens. Environ. 2012, 118, 83–94. [Google Scholar] [CrossRef]

- Frantz, D.; Haß, E.; Uhl, A.; Stoffels, J.; Hill, J. Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects. Remote Sens. Environ. 2018, 215, 471–481. [Google Scholar] [CrossRef]

- Qiu, S.; Zhu, Z.; He, B. Fmask 4.0: Improved cloud and cloud shadow detection in Landsats 4–8 and Sentinel-2 imagery. Remote Sens. Environ. 2019, 231, 111205. [Google Scholar] [CrossRef]

- Main-Knorn, M.; Pflug, B.; Louis, J.M.B.; Debaecker, V.; Müller-Wilm, U.; Gascon, F. Sen2Cor for Sentinel-2. Remote Sens. 2017, 10, 3. Available online: https://api.semanticscholar.org/CorpusID:64430252 (accessed on 1 March 2024).

- Hagolle, O.; Huc, M.; Pascual, D.V.; Dedieu, G. A multi-temporal method for cloud detection, applied to FORMOSAT-2, VENµS, LANDSAT and SENTINEL-2 images. Remote Sens. Environ. 2010, 114, 1747–1755. [Google Scholar] [CrossRef]

- MAJA-Github. 2023. Available online: https://github.com/CNES/MAJA (accessed on 1 March 2024).

- Baetens, L.; Desjardins, C.; Hagolle, O. Validation of Copernicus Sentinel-2 Cloud Masks Obtained from MAJA, Sen2Cor, and FMask Processors Using Reference Cloud Masks Generated with a Supervised Active Learning Procedure. Remote Sens. 2019, 11, 433. [Google Scholar] [CrossRef]

- Li, L.; Li, X.; Jiang, L.; Su, X.; Chen, F. A review on Deep Learning techniques for cloud detection methodologies and challenges. SIViP 2021, 15, 1527–1535. [Google Scholar] [CrossRef]

- Kristollari, V.; Karathanassi, V. Artificial neural networks for cloud masking of Sentinel-2 ocean images with noise and sunglint. Int. J. Remote Sens. 2020, 41, 4102–4135. [Google Scholar] [CrossRef]

- Poulsen, C.; Egede, U.; Robbins, D.; Sandeford, B.; Tazi, K.; Zhu, T. Evaluation and comparison of a Machine Learning cloud identification algorithm for the SLSTR in polar regions. Remote Sens. Environ. 2020, 248, 111999. [Google Scholar] [CrossRef]

- Liu, C.; Yang, S.; Di, D.; Yang, Y.; Zhou, C.; Hu, X.; Sohn, B.J. A Machine Learning-based Cloud Detection Algorithm for the Himawari-8 Spectral Image. Adv. Atmos. Sci. 2022, 39, 1994–2007. [Google Scholar] [CrossRef]

- Joshi, P.P.; Wynne, R.H.; Thomas, V.A. Cloud detection algorithm using SVM with SWIR2 and tasseled cap applied to Landsat 8. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101898. [Google Scholar] [CrossRef]

- Lee, K.-Y.; Lin, C.-H. Cloud Detection of Optical Satellite Images using Support Vector Machine. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B7, 289–293. [Google Scholar] [CrossRef]

- Bai, T.; Li, D.; Sun, K.; Chen, Y.; Li, W. Cloud Detection for High-Resolution Satellite Imagery Using Machine Learning and Multi-Feature Fusion. Remote Sens. 2016, 8, 715. [Google Scholar] [CrossRef]

- Ishida, H.; Oishi, Y.; Morita, K.; Moriwaki, K.; Nakajima, T.Y. Development of a support vector machine based cloud detection method for MODIS with the adjustability to various conditions. Remote Sens. Environ. 2018, 205, 390–407. [Google Scholar] [CrossRef]

- Ghasemian, N.; Akhoondzadeh, M. Introducing two Random Forest based methods for cloud detection in remote sensing images. Adv. Space Res. 2018, 62, 288–303. [Google Scholar] [CrossRef]

- Thampi, B.V.; Wong, T.; Lukashin, C.; Loeb, N.G. Determination of CERES TOA fluxes using Machine Learning algorithms. Part I: Classification and retrieval of CERES cloudy and clear scenes. J. Atmos. Oceanic Technol. 2017, 34, 2329–2345. [Google Scholar] [CrossRef]

- Sentinel Hub Cloud Detector for Sentinel-2 Images in Python. Available online: https://github.com/sentinel-hub/sentinel2-cloud-detector (accessed on 1 June 2023).

- Li, P.; Dong, L.; Xiao, H.; Xu, M. A cloud image detection method based on SVM vector machine. Neurocomputing 2015, 169, 34–42. [Google Scholar] [CrossRef]

- Gomez-Chova, L.; Camps-Valls, G.; Bruzzone, L.; Calpe-Maravilla, J. Mean Map Kernel Methods for Semisupervised Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 207–220. [Google Scholar] [CrossRef]

- Shiffman, S.; Nemani, R. Evaluation of decision trees for cloud detection from AVHRR data. In Proceedings of the 2005 IEEE International Geoscience and Remote Sensing Symposium, IGARSS ’05, Seoul, Korea, 29 July 2005; Volume 8, pp. 5610–5613. [Google Scholar] [CrossRef]

- De Colstoun, E.C.B.; Story, M.H.; Thompson, C.; Commisso, K.; Smith, T.G.; Irons, J.R. National Park vegetation mapping using multi-temporal Landsat 7 data and a decision tree classifier. Remote Sens. Environ. 2003, 85, 316–327. [Google Scholar] [CrossRef]

- Hollstein, A.; Segl, K.; Guanter, L.; Brell, M.; Enesco, M. Ready-to-Use Methods for the Detection of Clouds, Cirrus, Snow, Shadow, Water and Clear Sky Pixels in Sentinel-2 MSI Images. Remote Sens. 2016, 8, 666. [Google Scholar] [CrossRef]

- Hughes, M.J.; Hayes, D.J. Automated detection of cloud and cloud shadow in single-date Landsat imagery using neural networks and spatial post-processing. Remote Sens. 2014, 6, 4907–4926. [Google Scholar] [CrossRef]

- Cilli, R.; Monaco, A.; Amoroso, N.; Tateo, A.; Tangaro, S.; Bellotti, R. Machine Learning for Cloud Detection of Globally Distributed Sentinel-2 Images. Remote Sens. 2020, 12, 2355. [Google Scholar] [CrossRef]

- Singh, R.; Biswas, M.; Pal, M. Cloud detection using sentinel 2 imageries: A comparison of XGBoost, RF, SVM, and CNN algorithms. Geocarto Int. 2022, 38, 1–32. [Google Scholar] [CrossRef]

- Gómez-Chova, L.; Mateo-García, G.; Muñoz-Marí, J.; Camps-Valls, G. Cloud detection Machine Learning algorithms for PROBA-V. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2251–2254. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar] [CrossRef] [PubMed]

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36. [Google Scholar] [CrossRef]

- Le Goff, M.; Tourneret, J.; Wendt, H.; Ortner, M.; Spigai, M. Deep learning for cloud detection. In Proceedings of the ICPRS 8th International Conference of Pattern Recognition Systems, Madrid, Spain, 11–13 July 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542. [Google Scholar] [CrossRef]

- Soylu, B.E.; Guzel, M.S.; Bostanci, G.E.; Ekinci, F.; Asuroglu, T.; Acici, K. Deep-Learning-Based Approaches for Semantic Segmentation of Natural Scene Images: A Review. Electronics 2023, 12, 2730. [Google Scholar] [CrossRef]

- Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Box-driven class-wise region masking and filling rate guided loss for weakly supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3136–3145. [Google Scholar]

- Sun, K.; Shi, H.; Zhang, Z.; Huang, Y. ECS-Net: Improving weakly supervised semantic segmentation by using connections between class activation maps. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 7283–7292. [Google Scholar]

- Ma, T.; Wang, Q.; Zhang, H.; Zuo, W. Delving deeper into pixel prior for box-supervised semantic segmentation. IEEE Trans. Image Process. 2022, 31, 1406–1417. [Google Scholar] [CrossRef] [PubMed]

- Hung, W.-C.; Tsai, Y.-H.; Liou, Y.-T.; Lin, Y.-Y.; Yang, M.-H. Adversarial learning for semi-supervised semantic segmentation. arXiv 2018, arXiv:1802.07934. [Google Scholar]

- Xue, Y.; Xu, T.; Zhang, H.; Long, L.R.; Huang, X. SegAN: Adversarial network with multi-scale L1 loss for medical image segmentation. Neuroinformatics 2018, 16, 383–392. [Google Scholar] [CrossRef] [PubMed]

- Toldo, M.; Maracani, A.; Michieli, U.; Zanuttigh, P. Unsupervised Domain Adaptation in Semantic Segmentation: A Review. arXiv 2020, arXiv:2005.10876. [Google Scholar] [CrossRef]

- Huo, X.; Xie, L.; Zhou, W.; Li, H.; Tian, Q. Focus on Your Target: A Dual Teacher-Student Framework for Domain-adaptive Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 18981–18992. [Google Scholar]

- Hoyer, L.; Dai, D.; Van Gool, L. DAFormer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9924–9935. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Weng, X.; Yan, Y.; Chen, S.; Xue, J.-H.; Wang, H. Stage-aware feature alignment network for real-time semantic segmentation of street scenes. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4444–4459. [Google Scholar] [CrossRef]

- Tang, Q.; Liu, F.; Zhang, T.; Jiang, J.; Zhang, Y.; Zhu, B.; Tang, X. Compensating for Local Ambiguity With Encoder-Decoder in Urban Scene Segmentation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19224–19235. [Google Scholar] [CrossRef]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A nested U-Net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11045, pp. 3–11. [Google Scholar]

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Xiao, B. Deep High-Resolution Representation Learning for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364. [Google Scholar] [CrossRef]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef]

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125. [Google Scholar]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890. [Google Scholar]

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 7242–7252. [Google Scholar] [CrossRef]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030. [Google Scholar]

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 21–24 June 2021; pp. 6881–6890. [Google Scholar]

- Everingham, M.; Winn, J. The PASCAL visual object classes challenge 2012 (VOC2012) development kit. Pattern Anal. Stat. Model. Comput. Learn. Tech. Rep. 2012, 2007, 1–45. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223. [Google Scholar]

- Mateo-García, G.; Gómez-Chova, L.; Camps-Valls, G. Convolutional neural networks for multispectral image cloud masking. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2255–2258. [Google Scholar] [CrossRef]

- Xie, F.; Shi, M.; Shi, Z.; Yin, J.; Zhao, D. Multi-level Cloud Detection in Remote Sensing Images Based on Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3631–3640. [Google Scholar] [CrossRef]

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282. [Google Scholar] [CrossRef] [PubMed]

- Zi, Y.; Xie, F.; Jiang, Z. A Cloud Detection Method for Landsat 8 Images Based on PCANet. Remote Sens. 2018, 10, 877. [Google Scholar] [CrossRef]

- López-Puigdollers, D.; Mateo-García, G.; Gómez-Chova, L. Benchmarking Deep Learning Models for Cloud Detection in Landsat 8 and Sentinel-2 Images. Remote Sens. 2021, 13, 992. [Google Scholar] [CrossRef]

- Drönner, J.; Korfhage, N.; Egli, S.; Mühling, M.; Thies, B.; Bendix, J.; Freisleben, B.; Seeger, B. Fast Cloud Segmentation Using Convolutional Neural Networks. Remote Sens. 2018, 10, 1782. [Google Scholar] [CrossRef]

- Zhan, Y.; Wang, J.; Shi, J.; Cheng, G.; Yao, L.; Sun, W. Distinguishing Cloud and Snow in Satellite Images via Deep Convolutional Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1785–1789. [Google Scholar] [CrossRef]

- Wieland, M.; Li, Y.; Martinis, S. Multi-sensor cloud and cloud shadow segmentation with a convolutional neural network. Remote Sens. Environ. 2019, 230, 111203. [Google Scholar] [CrossRef]

- Jeppesen, J.H.; Jacobsen, R.H.; Inceoglu, F.; Toftegaard, T.S. A cloud detection algorithm for satellite imagery based on Deep Learning. Remote Sens. Environ. 2019, 229, 247–259. [Google Scholar] [CrossRef]

- Mohajerani, S.; Saeedi, P. Cloud-Net: An end-to-end Cloud Detection Algorithm for Landsat 8 Imagery. arXiv 2019, arXiv:1901.10077. [Google Scholar]

- Francis, A.; Sidiropoulos, P.; Muller, J.-P. CloudFCN: Accurate and Robust Cloud Detection for Satellite Imagery with Deep Learning. Remote Sens. 2019, 11, 2312. [Google Scholar] [CrossRef]

- L8 Biome Cloud Validation Masks. U.S. Geological Survey. 2016. Available online: https://landsat.usgs.gov/landsat-8-cloud-cover-assessment-validation-data (accessed on 1 March 2024).

- Domnich, M.; Sünter, I.; Trofimov, H.; Wold, O.; Harun, F.; Kostiukhin, A.; Järveoja, M.; Veske, M.; Tamm, T.; Voormansik, K.; et al. KappaMask: AI-Based Cloudmask Processor for Sentinel-2. Remote Sens. 2021, 13, 4100. [Google Scholar] [CrossRef]

- Francis, A.; Mrziglod, J.; Sidiropoulos, P.; Muller, J.-P. Sentinel-2 Cloud Mask Catalogue. 2020. Available online: https://zenodo.org/records/4172871 (accessed on 1 March 2024).

- Hu, K.; Zhang, D.; Xia, M. CDUNet: Cloud Detection UNet for Remote Sensing Imagery. Remote Sens. 2021, 13, 4533. [Google Scholar] [CrossRef]

- Caraballo-Vega, J.A.; Carroll, M.L.; Neigh, C. Optimizing WorldView-2,-3 cloud masking using Machine Learning approaches. Remote Sens. Environ. 2023, 284, 113332. [Google Scholar] [CrossRef]

- Chai, D.; Newsam, S.; Zhang, H.K.; Qiu, Y.; Huang, J. Cloud and cloud shadow detection in Landsat imagery based on deep convolutional neural networks. Remote Sens. Environ. 2019, 225, 307–316. [Google Scholar] [CrossRef]

- Li, Z.; Shen, H.; Cheng, Q.; Liu, Y.; You, S.; He, Z. Deep learning based cloud detection for medium and high resolution remote sensing images of different sensors. ISPRS J. Photogramm. Remote Sens. 2019, 150, 197–212. [Google Scholar] [CrossRef]

- Shao, Z.; Pan, Y.; Diao, C.; Cai, J. Cloud Detection in Remote Sensing Images Based on Multiscale Features-Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4062–4076. [Google Scholar] [CrossRef]

- Li, J.; Wu, Z.; Hu, Z.; Jian, C.; Luo, S.; Mou, L.; Zhu, X.X.; Molinier, M. A lightweight deep learning-based cloud detection method for Sentinel-2A imagery fusing multiscale spectral and spatial features. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5401219. [Google Scholar] [CrossRef]

- Chen, G.; Li, C.; Wei, W.; Jing, W.; Woźniak, M.; Blažauskas, T.; Damaševičius, R. Fully Convolutional Neural Network with Augmented Atrous Spatial Pyramid Pool and Fully Connected Fusion Path for High Resolution Remote Sensing Image Segmentation. Appl. Sci. 2019, 9, 1816. [Google Scholar] [CrossRef]

- Wang, Z.; Zhao, J.; Zhang, R.; Li, Z.; Lin, Q.; Wang, X. UATNet: U-Shape Attention-Based Transformer Net for Meteorological Satellite Cloud Recognition. Remote Sens. 2022, 14, 104. [Google Scholar] [CrossRef]

- Mateo-García, G.; Laparra, V.; López-Puigdollers, D.; Gómez-Chova, L. Transferring Deep Learning models for cloud detection between Landsat 8 and Proba-V. ISPRS J. Photogramm. Remote Sens. 2020, 160, 1–17. [Google Scholar] [CrossRef]

- Mateo-García, G.; Laparra, V.; López-Puigdollers, D.; Gómez-Chova, L. Cross-Sensor Adversarial Domain Adaptation of Landsat-8 and Proba-V Images for Cloud Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 747–761. [Google Scholar] [CrossRef]

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Pang, S.; Sun, L.; Tian, Y.; Ma, Y.; Wei, J. Convolutional Neural Network-Driven Improvements in Global Cloud Detection for Landsat 8 and Transfer Learning on Sentinel-2 Imagery. Remote Sens. 2023, 15, 1706. [Google Scholar] [CrossRef]

- Skakun, S.; Wevers, J.; Brockmann, C.; Doxani, G.; Aleksandrov, M.; Batič, M.; Žust, L. Cloud Mask Intercomparison eXercise (CMIX): An evaluation of cloud masking algorithms for Landsat 8 and Sentinel-2. Remote Sens. Environ. 2022, 274, 112990. [Google Scholar] [CrossRef]

- Mateo-García, G.; Laparra, V.; Gómez-Chova, L. Domain Adaptation of Landsat-8 and Proba-V Data Using Generative Adversarial Networks for Cloud Detection. In Proceedings of the IGARSS 2019—IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 712–715. [Google Scholar] [CrossRef]

- Francis, A.; Mrziglod, J.; Sidiropoulos, P.; Muller, J.P. SEnSeI: A Deep Learning Module for Creating Sensor Independent Cloud Masks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5406121. [Google Scholar] [CrossRef]

- Mohajerani, S.; Saeedi, P. Cloud-Net+: A cloud segmentation CNN for Landsat 8 remote sensing imagery optimized with filtered Jaccard loss function. arXiv 2020, arXiv:2001.08768. [Google Scholar]

- Baetens, L.; Hagolle, O. Sentinel-2 Reference Cloud Masks Generated by an Active Learning Method. 2018. Available online: https://zenodo.org/records/1460961 (accessed on 1 March 2024).

- Aybar, C.; Ysuhuaylas, L.; Loja, J.; Gonzales, K.; Herrera, F.; Bautista, L.; Gómez-Chova, L. CloudSEN12, a global dataset for semantic understanding of cloud and cloud shadow in Sentinel-2. Sci. Data 2022, 9, 782. [Google Scholar] [CrossRef] [PubMed]

- Wu, Z.; Li, J.; Wang, Y.; Hu, Z.; Molinier, M. Self-attentive generative adversarial network for cloud detection in high resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1792–1796. [Google Scholar] [CrossRef]

- Li, Z.; Shen, H.; Li, H.; Xia, G.; Gamba, P.; Zhang, L. Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery. Remote Sens. Environ. 2017, 191, 342–358. [Google Scholar] [CrossRef]

- Wu, X.; Shi, Z.; Zou, Z. A geographic information-driven method and a new large scale dataset for remote sensing cloud/snow detection. ISPRS J. Photogramm. Remote Sens. 2021, 174, 87–104. [Google Scholar] [CrossRef]

- Mrziglod, J.; Francis, A. Intelligently Reinforced Image Segmentation graphical user interface (IRIS). 2019.

- Czerkawski, M.; Atkinson, R.; Michie, C.; Tachtatzis, C. SatelliteCloudGenerator: Controllable Cloud and Shadow Synthesis for Multi-Spectral Optical Satellite Images. Remote Sens. 2023, 15, 4138. [Google Scholar] [CrossRef]

- Alhassan, M.; Fuseini, M. Data augmentation: A comprehensive survey of modern approaches. Array 2022, 16, 100258. [Google Scholar] [CrossRef]

- Ghasemi, A.; Rabiee, H.R.; Fadaee, M.; Manzuri, M.T.; Rohban, M.H. Active Learning from Positive and Unlabelled Data. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining Workshops, Vancouver, BC, Canada, 11 December 2011. [Google Scholar] [CrossRef]

- Zeng, Y.; Hao, D.; Park, T.; Zhu, P.; Huete, A.; Myneni, R.; Knyazikhin, Y.; Qi, J.; Nemani, R.; Fa, L.; et al. Structural complexity biases vegetation greenness measures. Nat. Ecol. Evol. 2023, 7, 1790–1798. [Google Scholar] [CrossRef]

- Dozier, J.; Bair, E.; Baskaran, L.; Brodrick, P.; Carmon, N.; Kokaly, R.; Miller, C.; Miner, K.R.; Painter, T.; Thompson, D. Error and Uncertainty Degrade Topographic Corrections of Remotely Sensed Data. J. Geophys. Res. Biogeosci. 2022, 127, e2022JG007147. [Google Scholar] [CrossRef]

- Lazzaro, D.; Cinà, A.E.; Pintor, M.; Demontis, A.; Biggio, B.; Roli, F.; Pelillo, M. Minimizing Energy Consumption of Deep Learning Models by Energy-Aware Training. arXiv 2023, arXiv:2307.00368. [Google Scholar]

- Desislavov, R.; Martínez-Plumed, F.; Hernández-Orallo, J. Trends in AI inference energy consumption: Beyond the performance-vs-parameter laws of deep learning. Sustain. Comput. Inform. Syst. 2023, 38, 100857. [Google Scholar] [CrossRef]

| Dataset | Geo-Distribution | Number of Scenes |
|---|---|---|
| Landsat 8 (30 m) | | |
| L8_SPARCS [55] | Worldwide | 80 |
| L8_Biome [27] | Worldwide | 96 |
| L8-38Cloud [100] | USA | 38 |
| L8-95Cloud [120] | USA | 95 |
| Sentinel-2 (10 m) | | |
| S2-Hollstein [54] | Europe | 59 |
| S2-BaetensHagolle [121] | Worldwide | 35 |
| CESBIO [38] | Europe | 30 |
| S2-CloudCatalogue (20 m) [104] | Worldwide | 513 |
| KappaZeta [103] | Northern Europe | 150 |
| CloudSEN12 [122] | Worldwide | 49,400 |
| WHUS2-CD [123] | China | 32 |
| Gaofen1 (16 m) | | |
| GF1_WHU [124] | Worldwide | 108 |
| Levir_CS [125] | Worldwide | 4168 |

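| Metric | Formula | Description |
|---|---|---|
| Overall accuracy (OA) | $\frac{TP + TN}{TP + TN + FP + FN}$ | Proportion of TP and TN detected out of all the predictions made by the model. |
| Balanced OA | $\frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right)$ | Index of the model’s ability to predict both classes. |
| Precision (UA) | $\frac{TP}{TP + FP}$ | Proportion of TP out of all positive predictions made by the model. |
| Recall (PA) | $\frac{TP}{TP + FN}$ | Proportion of all actual positives that have been detected as TP. |
| F1-score | $\frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | Harmonic mean of precision and recall. |
| Intersection over Union (IoU) or Jaccard Index | $\frac{TP}{TP + FP + FN}$ | Widely used for semantic segmentation; measures the overlap between predicted and actual positives. |
| Omission Error | $\frac{FN}{TP + FN}$ | Proportion of actual positives missed by the model out of all actual positives. |
| Commission Error | | Proportion of negative instances incorrectly identified as positive by the model (overdetection). |

TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives; in cloud masking, the cloud class is usually taken as the positive class.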
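
All of the metrics in the table derive from the four confusion-matrix counts. The following minimal Python sketch assumes binary NumPy masks with 1 = cloud (positive class) and 0 = clear; the function names are hypothetical. The table does not give a formula for the commission error, so the sketch returns both conventions found in practice: false positives over actual negatives, as the table's wording suggests, and 1 − precision, as in user's-accuracy-based reporting. Which one a given benchmark uses should be checked against its own documentation.

```python
import math

import numpy as np


def _ratio(num: float, den: float) -> float:
    """Safe division: returns NaN when the denominator is zero (e.g. a cloud-free scene)."""
    return num / den if den else math.nan


def confusion_counts(pred, ref):
    """TP, TN, FP, FN for binary masks, with 1 = cloud (positive class) and 0 = clear."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    tp = int(np.sum(pred & ref))
    tn = int(np.sum(~pred & ~ref))
    fp = int(np.sum(pred & ~ref))
    fn = int(np.sum(~pred & ref))
    return tp, tn, fp, fn


def cloud_mask_metrics(pred, ref) -> dict:
    """Pixel-wise accuracy metrics of a predicted cloud mask against a reference mask."""
    tp, tn, fp, fn = confusion_counts(pred, ref)
    precision = _ratio(tp, tp + fp)     # user's accuracy (UA)
    recall = _ratio(tp, tp + fn)        # producer's accuracy (PA)
    specificity = _ratio(tn, tn + fp)   # recall of the clear class
    return {
        "OA": _ratio(tp + tn, tp + tn + fp + fn),
        "balanced_OA": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "F1": _ratio(2 * precision * recall, precision + recall),
        "IoU": _ratio(tp, tp + fp + fn),
        "omission_error": _ratio(fn, tp + fn),
        # Assumption: two common conventions for the commission error.
        "commission_error_fpr": _ratio(fp, fp + tn),  # false positives over actual negatives
        "commission_error_ua": _ratio(fp, tp + fp),   # equals 1 - precision
    }


if __name__ == "__main__":
    ref = np.array([[1, 1, 0, 0],
                    [1, 1, 0, 0]])
    pred = np.array([[1, 0, 0, 0],
                     [1, 1, 1, 0]])
    print(confusion_counts(pred, ref))  # (3, 3, 1, 1)
    for name, value in cloud_mask_metrics(pred, ref).items():
        print(f"{name}: {value:.3f}")
```

In this toy case OA, balanced OA, precision, recall, and F1 all equal 0.75, IoU is 0.6, and both error rates are 0.25. The two commission-error conventions coincide here only because the toy mask has as many true positives as true negatives; on real scenes, where clear pixels usually dominate, they can differ substantially.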

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Anzalone, A.; Pagliaro, A.; Tutone, A. An Introduction to Machine and Deep Learning Methods for Cloud Masking Applications. Appl. Sci. 2024, 14, 2887. https://doi.org/10.3390/app14072887
