Using Transfer Learning and Radial Basis Function Deep Neural Network Feature Extraction to Upgrade Existing Product Fault Detection Systems for Industry 4.0: A Case Study of a Spring Factory

Abstract

1. Introduction

2. Related Works

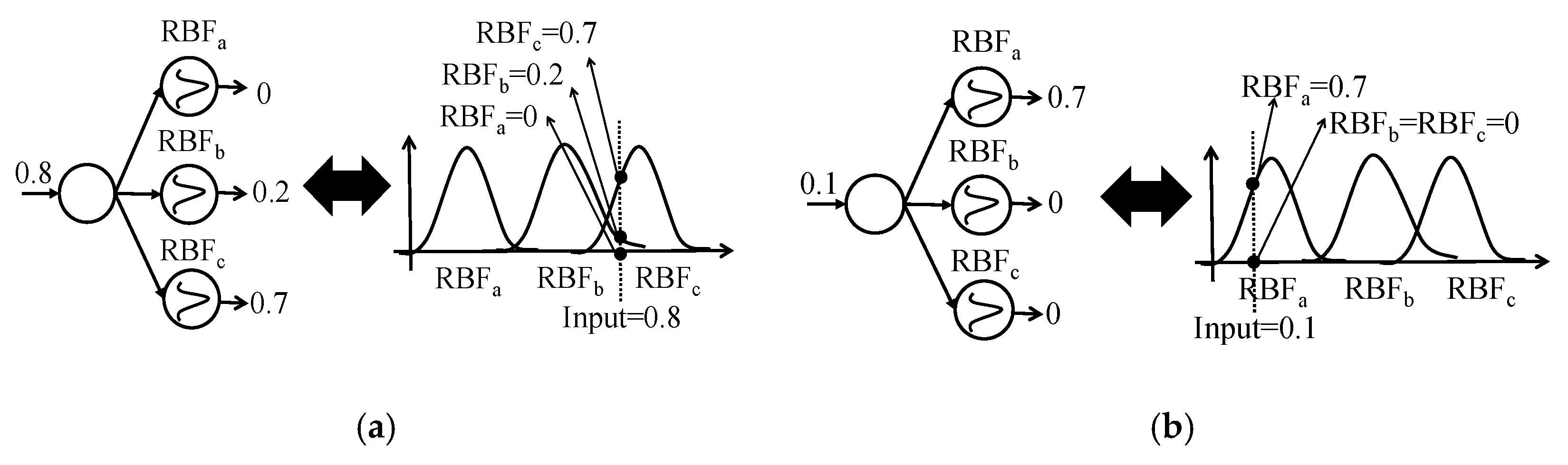

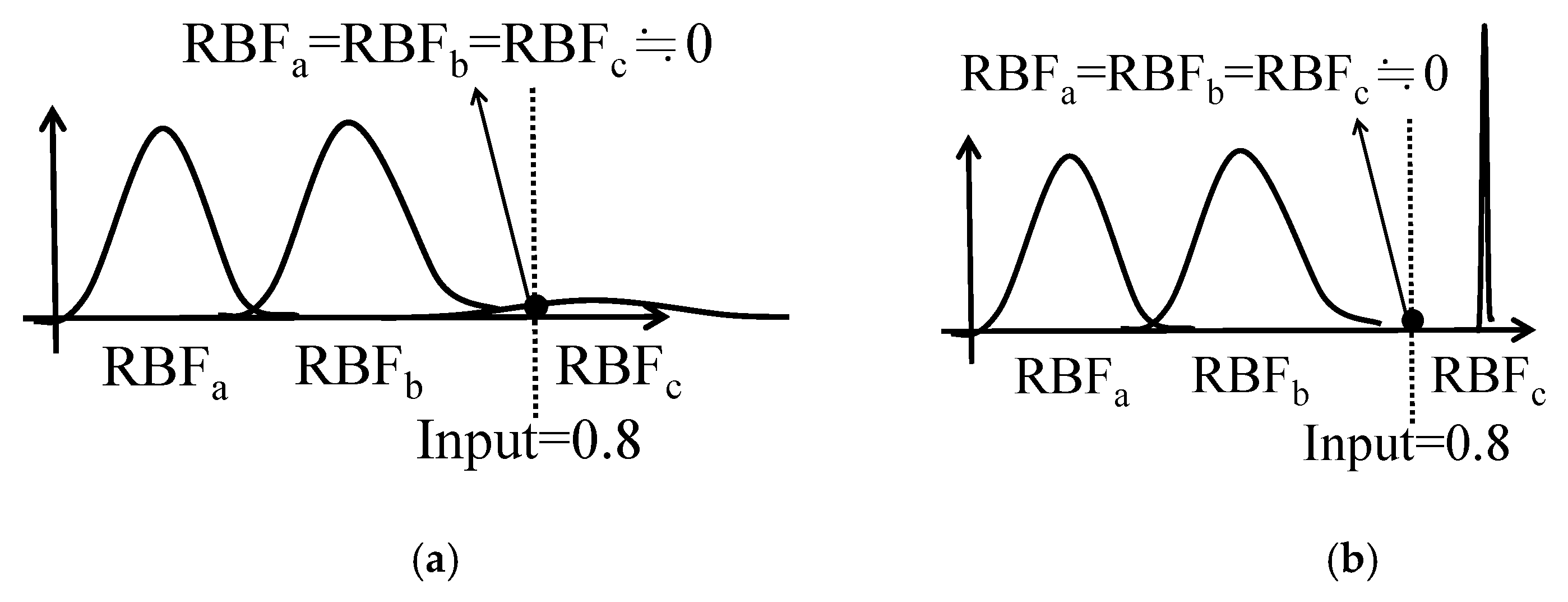

3. Algorithms

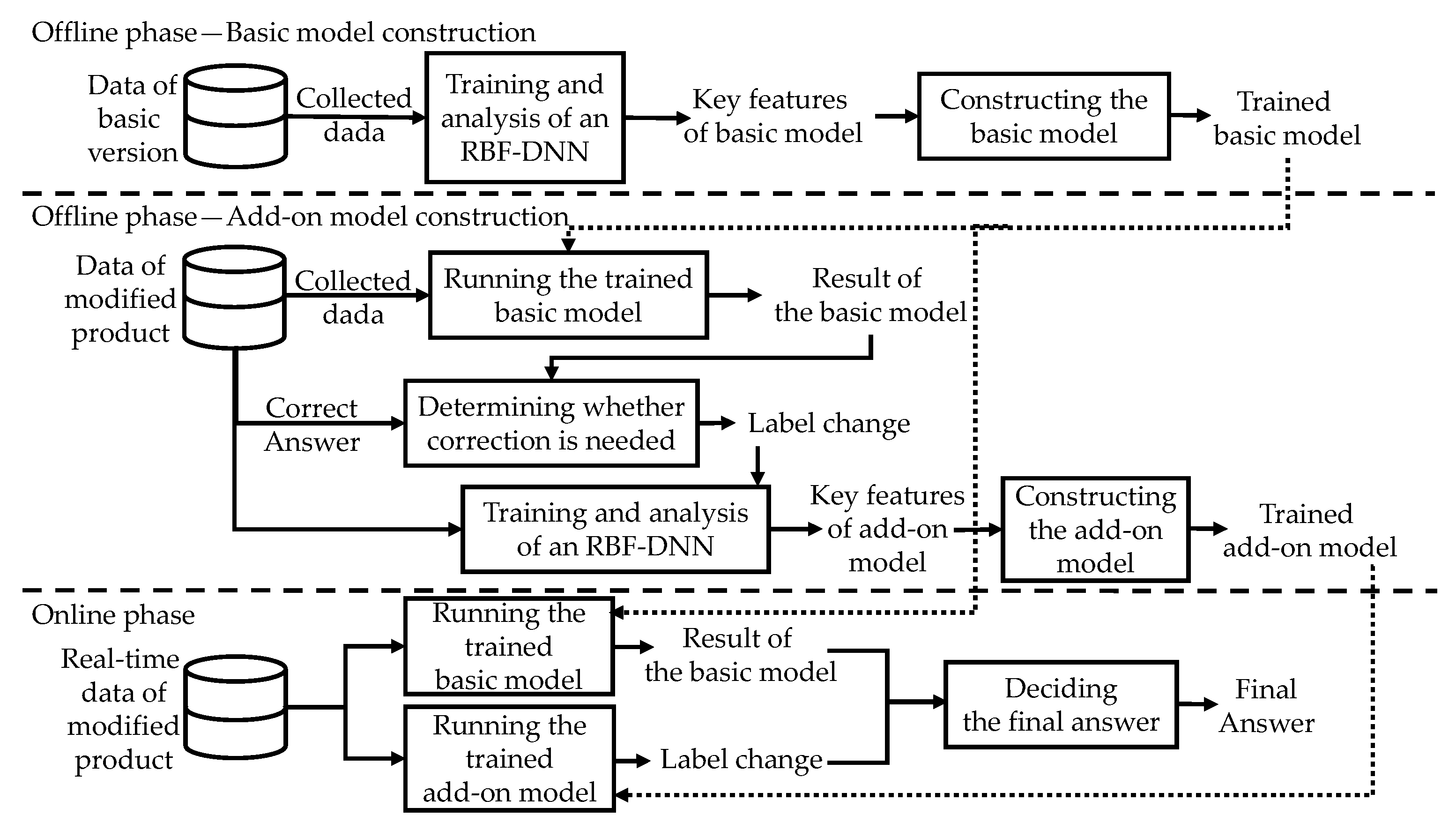

3.1. Algorithm for Offline Basic Model Construction

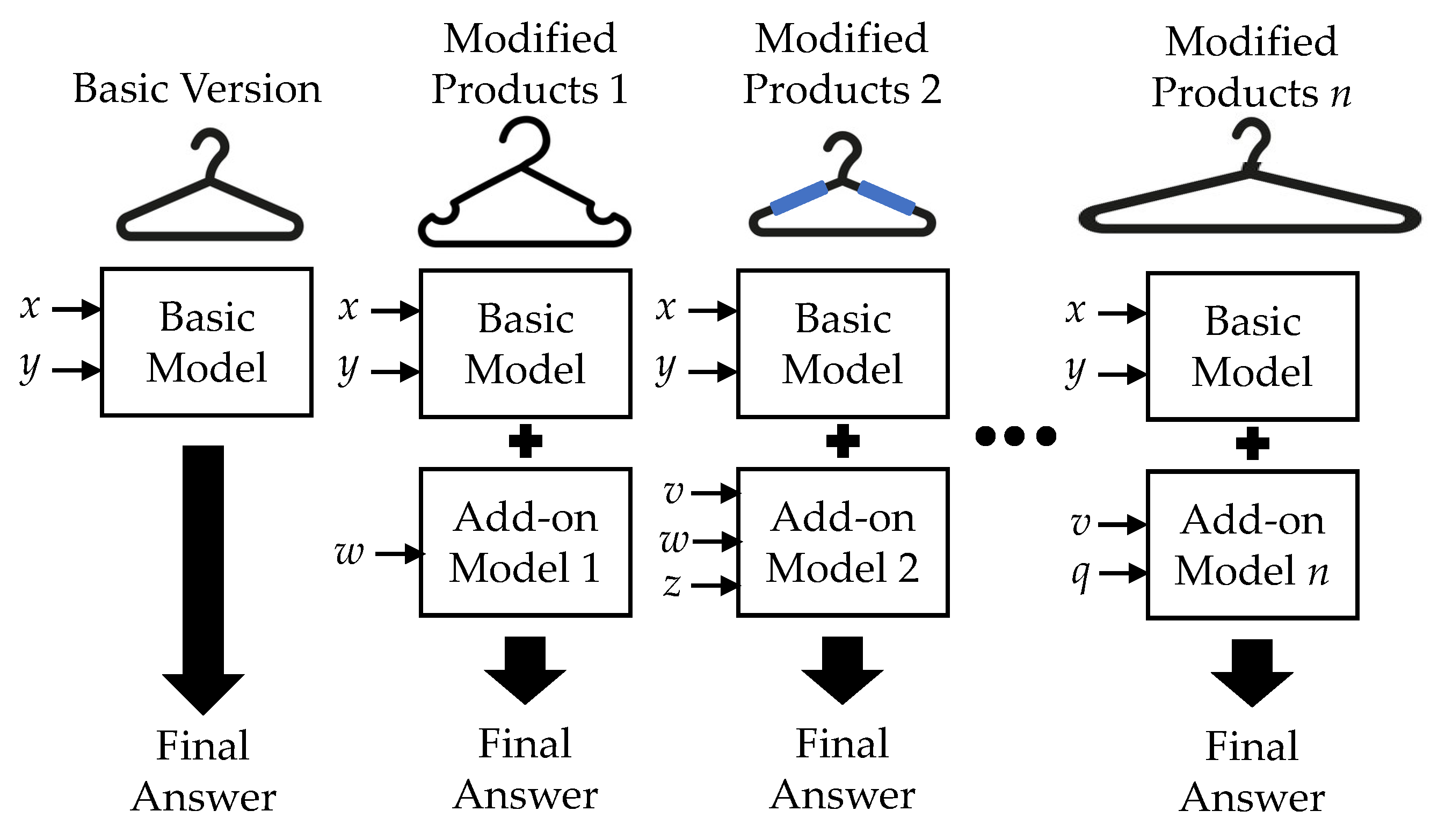

3.2. Algorithm for Offline Add-on Model Construction

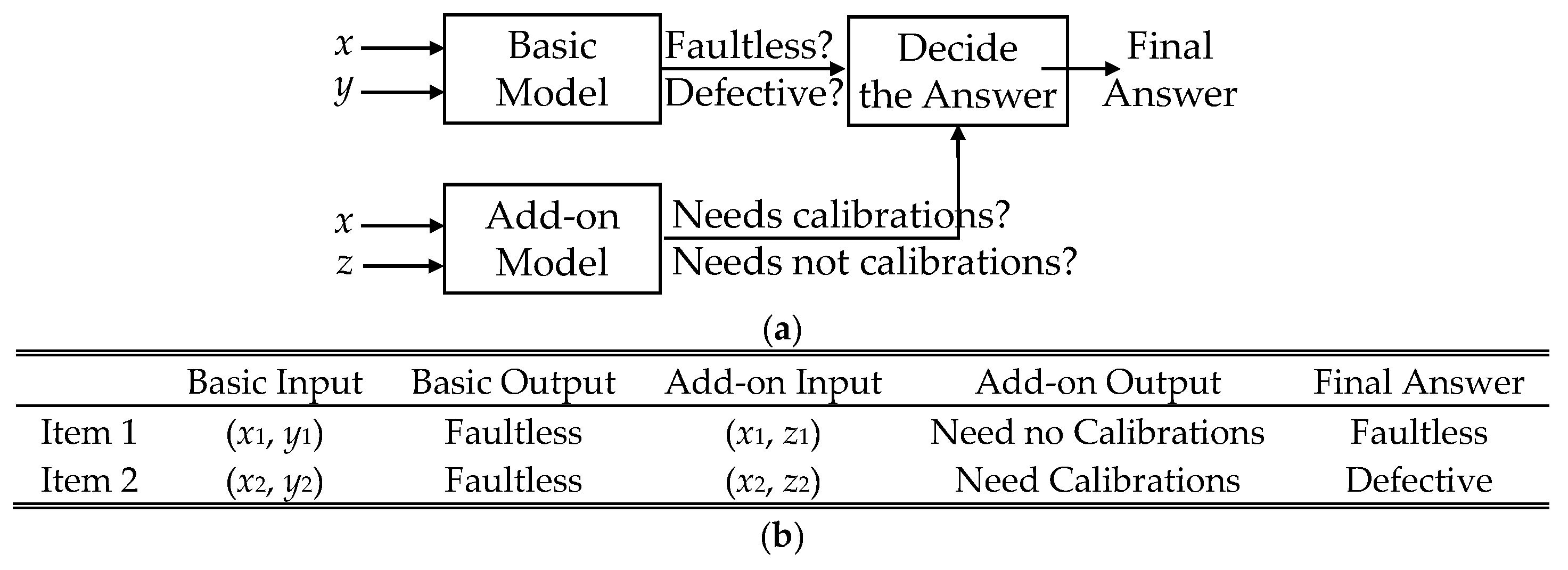

3.3. Simultaneous Operation of Basic and Add-On Models Online

4. Simulations

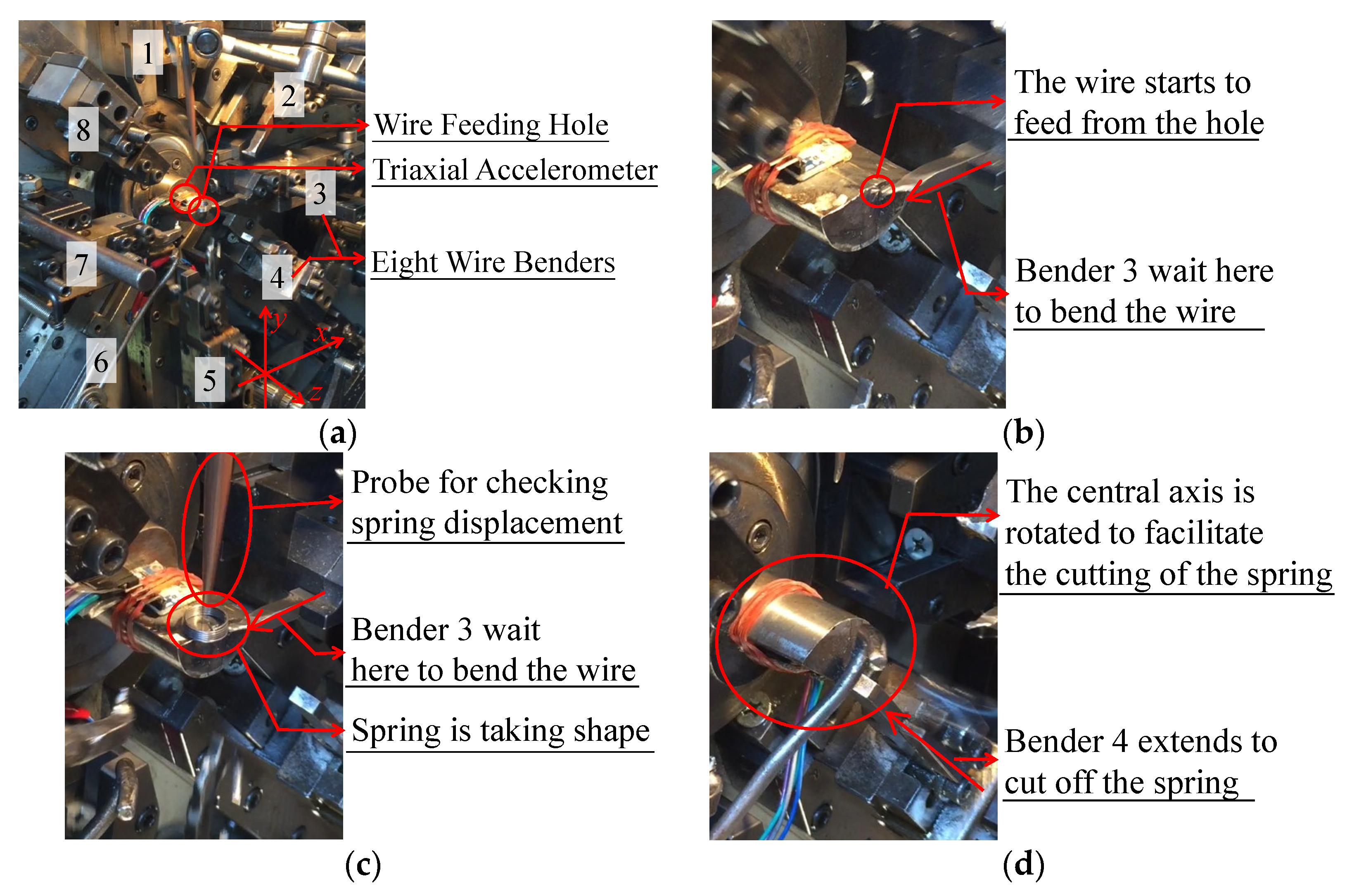

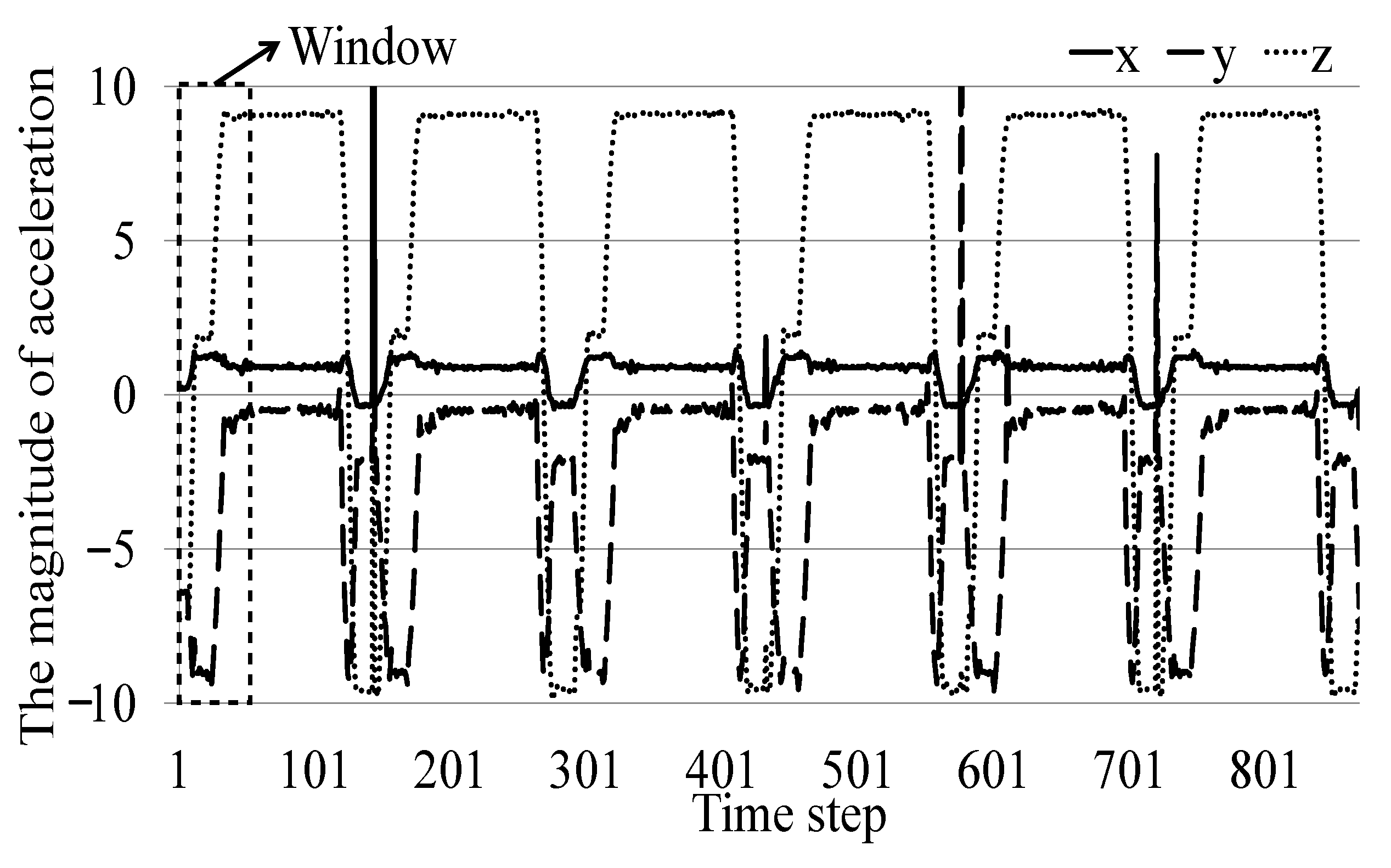

4.1. Introduction to Dataset and Experiment Parameters

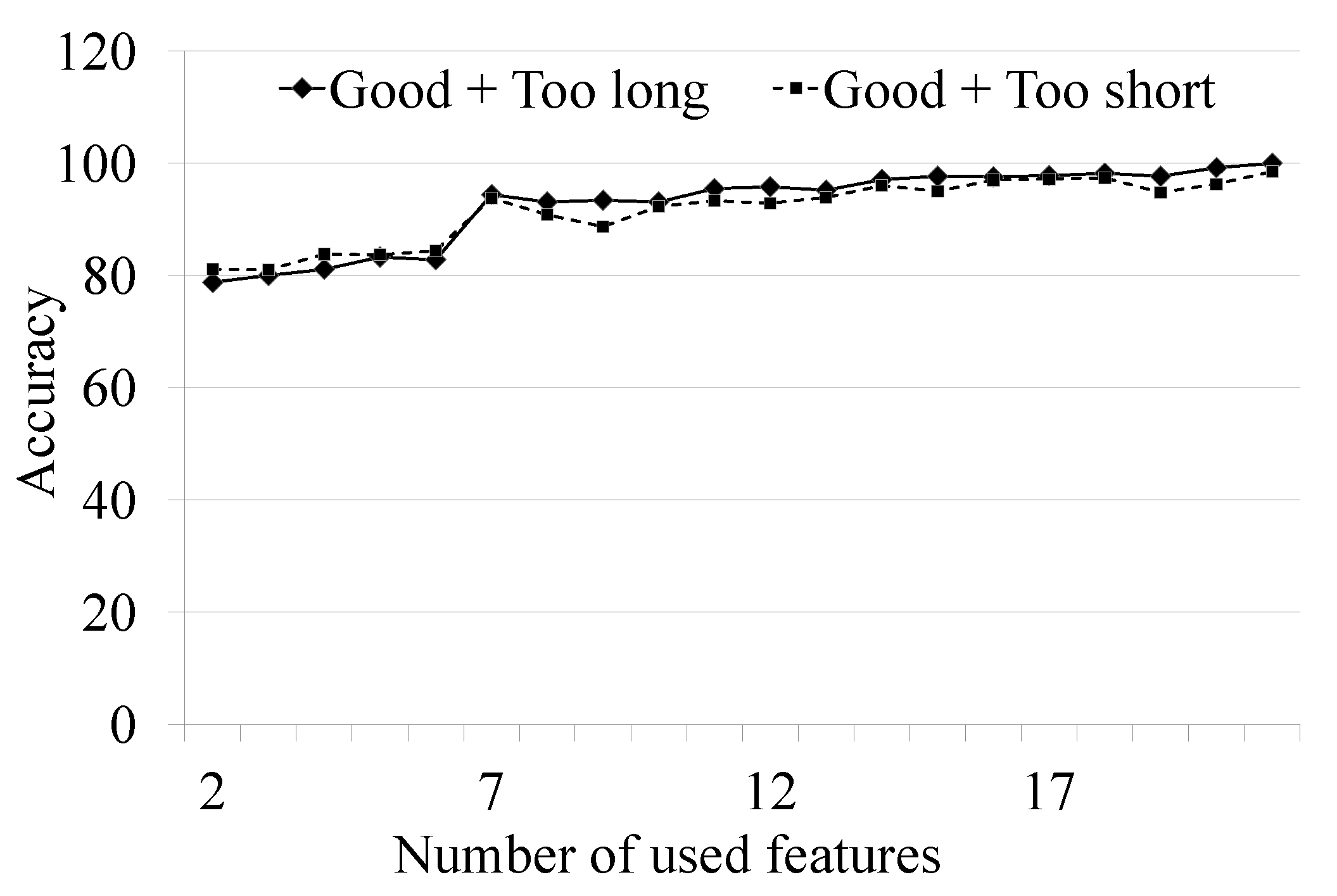

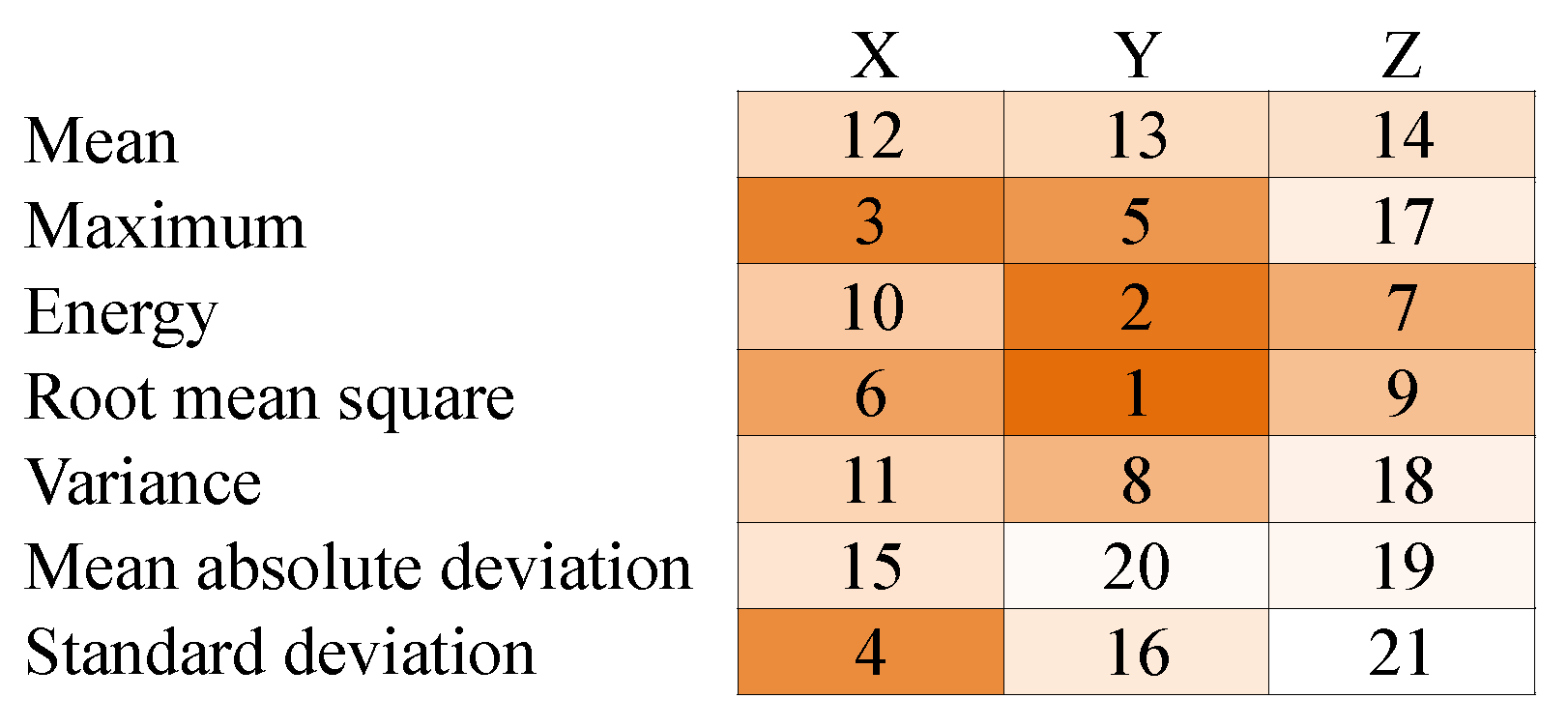

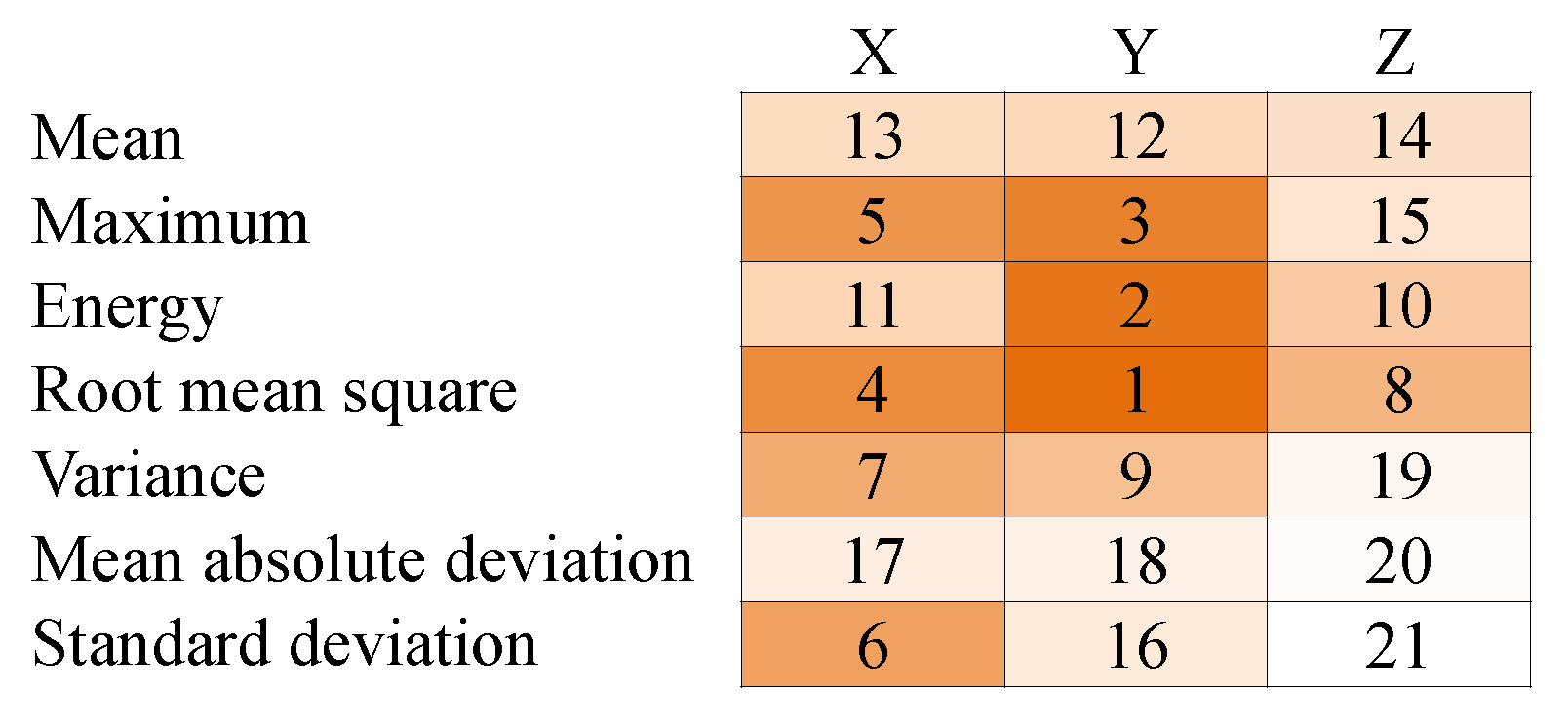

4.2. Verification of Basic Model

4.3. Verification of Add-On Model Construction

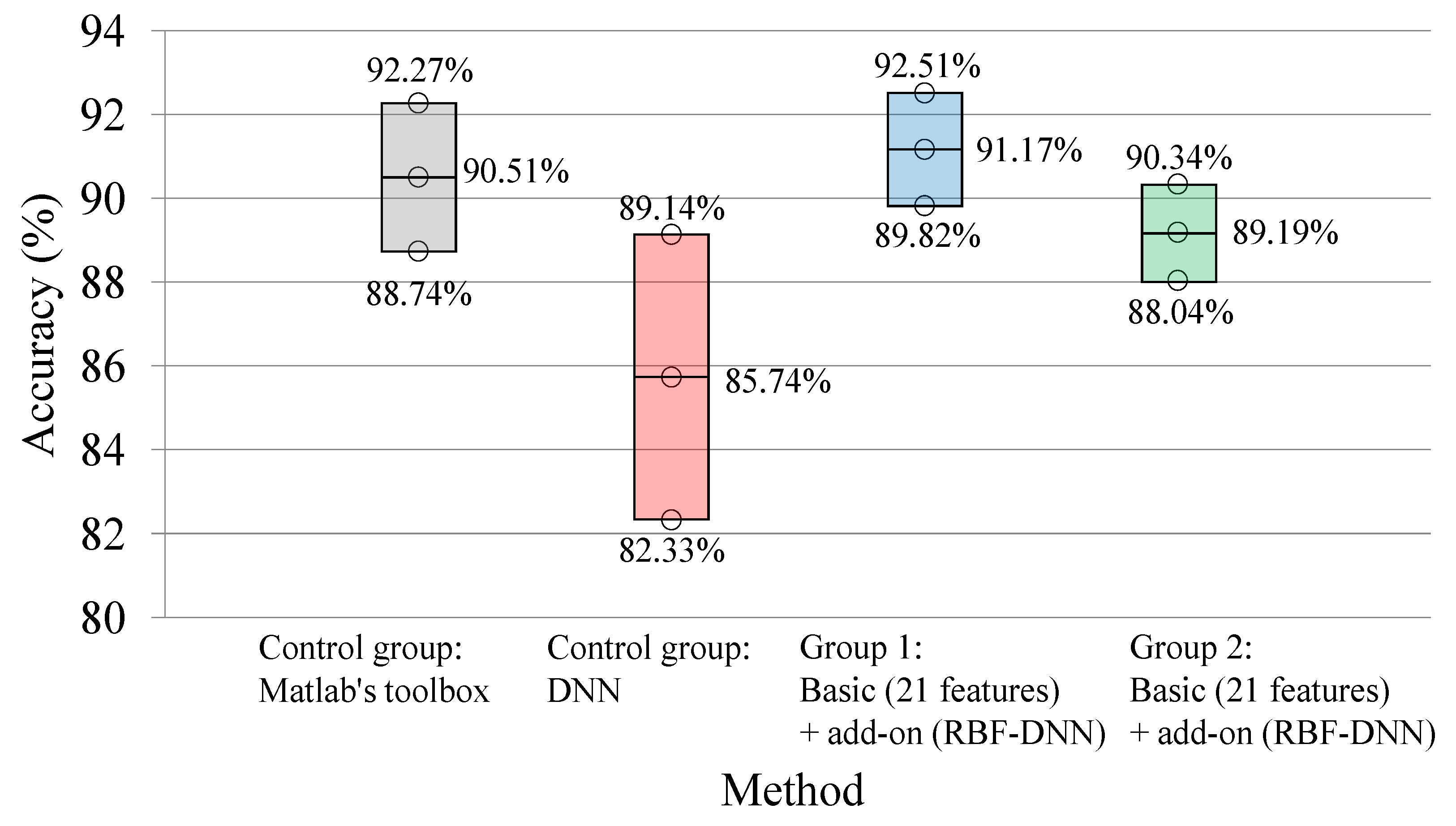

4.4. Verification of Transfer Learning for the Combination of Basic and Add-On Models

4.5. Applicability of Proposed Method in Practice

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input Features of the Basic Model | fb1 | fb2 | fb3 | … | fbm | - | - | Basic Judgment | Real Condition of the Item | Label Change | Add-on Judgment | Basic + Add-on Judgment (Final Judgment) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Features from Modified Products | fe1 | - | fe2 | … | fek-2 | fek-1 | fek | | | | | |
| Item 1 | 0.9 | 0 | 0.4 | … | 1.2 | 2.5 | 1.3 | Good | Good | do not change | do not change | Good |
| Item 2 | 0.7 | 0 | 0.6 | … | 1.1 | 2.9 | 4.1 | Bad | Bad | do not change | change | Good |
| Item 3 | 0.2 | 0 | 1.3 | … | 0.5 | 2.7 | 3.5 | Good | Bad | change | change | Bad |
| Item 4 | 0.1 | 0 | 1.8 | … | 0.6 | 2.6 | 2.7 | Bad | Good | change | change | Good |
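
The relationship between the judgment columns in the table above can be sketched in a few lines of Python. This is a minimal illustration of one reading of the table, not the authors' implementation: it assumes that the label is "change" whenever the basic judgment disagrees with the real condition, and that the final judgment is the basic judgment flipped whenever the add-on judgment is "change". The function names `label_change` and `final_judgment` are hypothetical.

```python
GOOD, BAD = "Good", "Bad"
CHANGE, KEEP = "change", "do not change"


def label_change(basic_judgment: str, real_condition: str) -> str:
    """Training label for the add-on model (assumed rule):
    'change' when the basic judgment disagrees with the real condition."""
    return KEEP if basic_judgment == real_condition else CHANGE


def final_judgment(basic_judgment: str, add_on_judgment: str) -> str:
    """Final judgment (assumed rule): keep the basic judgment unless
    the add-on model says 'change', in which case flip it."""
    if add_on_judgment == KEEP:
        return basic_judgment
    return BAD if basic_judgment == GOOD else GOOD


# Rows copied from the table: (basic judgment, real condition, add-on judgment)
rows = {
    "Item 1": (GOOD, GOOD, KEEP),
    "Item 2": (BAD, BAD, CHANGE),
    "Item 3": (GOOD, BAD, CHANGE),
    "Item 4": (BAD, GOOD, CHANGE),
}

labels = {item: label_change(basic, real) for item, (basic, real, _) in rows.items()}
finals = {item: final_judgment(basic, add_on) for item, (basic, _, add_on) in rows.items()}

for item in rows:
    print(item, labels[item], finals[item])
```

Under these assumed rules the sketch reproduces the Label Change and Final Judgment columns for all four items. Item 2 is the case where the add-on judgment differs from its label, so the final judgment (Good) does not match the real condition (Bad).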

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 100% |
| 1st to 7th most important features identified using the random forest | 90.2% |
| 8th to 14th most important features identified using the random forest | 85.3% |
| 15th to 21st most important features identified using the random forest | 83.7% |
| 1st to 7th most important features identified using RBF-DNN | 94.4% |
| 8th to 14th most important features identified using RBF-DNN | 84.1% |
| 15th to 21st most important features identified using RBF-DNN | 81.1% |
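
The row labels above group the 21 features into three tiers of seven by importance rank. A minimal sketch of that grouping step is shown here, assuming an importance score per feature is already available. The scores and feature names below are placeholders invented for illustration, not values from the study, and the ranking method itself (random forest or RBF-DNN) is not reproduced.

```python
def split_into_tiers(importance, tier_size=7):
    """Rank features by descending importance and cut the ranking
    into consecutive tiers of `tier_size` features each."""
    ranked = sorted(importance, key=importance.get, reverse=True)
    return [ranked[i:i + tier_size] for i in range(0, len(ranked), tier_size)]


# Hypothetical importance scores for 21 features (placeholders only).
importance = {f"f{i}": 1.0 / i for i in range(1, 22)}

tiers = split_into_tiers(importance)
for rank_start, tier in zip((1, 8, 15), tiers):
    print(f"ranks {rank_start}-{rank_start + len(tier) - 1}: {tier}")
```

Each tier could then be used as the input set of a separate model, which is how the three rows per ranking method in the table are organised.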

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 98.5% |
| 1st to 7th most important features identified using the random forest | 90.2% |
| 8th to 14th most important features identified using the random forest | 87.9% |
| 15th to 21st most important features identified using the random forest | 87.7% |
| 1st to 7th most important features identified using RBF-DNN | 93.8% |
| 8th to 14th most important features identified using RBF-DNN | 88.1% |
| 15th to 21st most important features identified using RBF-DNN | 85.5% |

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 92.3% |
| 1st to 7th most important features identified using the random forest | 86.3% |
| 8th to 14th most important features identified using the random forest | 85.1% |
| 15th to 21st most important features identified using the random forest | 85.9% |
| 1st to 7th most important features identified using RBF-DNN | 91.7% |
| 8th to 14th most important features identified using RBF-DNN | 87.3% |
| 15th to 21st most important features identified using RBF-DNN | 84% |

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 90.1% |
| 1st to 7th most important features identified using the random forest | 84.7% |
| 8th to 14th most important features identified using the random forest | 83.9% |
| 15th to 21st most important features identified using the random forest | 83.5% |
| 1st to 7th most important features identified using RBF-DNN | 88.7% |
| 8th to 14th most important features identified using RBF-DNN | 84.3% |
| 15th to 21st most important features identified using RBF-DNN | 82.7% |

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 93.7% |
| 1st to 7th most important features identified using the random forest | 91.7% |
| 8th to 14th most important features identified using the random forest | 89.2% |
| 15th to 21st most important features identified using the random forest | 88.6% |
| 1st to 7th most important features identified using RBF-DNN | 92.8% |
| 8th to 14th most important features identified using RBF-DNN | 90% |
| 15th to 21st most important features identified using RBF-DNN | 88.3% |

| Inputs of the Basic Model | Accuracy |
|---|---|
| All 21 features | 94% |
| 1st to 7th most important features identified using the random forest | 91.4% |
| 8th to 14th most important features identified using the random forest | 89.1% |
| 15th to 21st most important features identified using the random forest | 88.1% |
| 1st to 7th most important features identified using RBF-DNN | 91.8% |
| 8th to 14th most important features identified using RBF-DNN | 90.2% |
| 15th to 21st most important features identified using RBF-DNN | 84.6% |

Control group: one model (good + too long + too short)

| Method | Accuracy |
|---|---|
| All 21 features + MATLAB’s NNstart toolbox | 94.5% |
| All 21 features + deep neural network kit | 93.6% |

Group 1: Basic model (good + too long) → add-on model (too short)

| Method | Accuracy |
|---|---|
| Basic (random forest’s 7 features) + add-on (random forest’s 7 features) | 83.1% |
| Basic (all 21 features) + add-on (random forest’s 7 features) | 91.7% |
| Basic (RBF-DNN’s 7 features) + add-on (RBF-DNN’s 7 features) | 89% |
| Basic (all 21 features) + add-on (RBF-DNN’s 7 features) | 92.8% |

Group 2: Basic model (good + too short) → add-on model (too long)

| Method | Accuracy |
|---|---|
| Basic (random forest’s 7 features) + add-on (random forest’s 7 features) | 83.1% |
| Basic (all 21 features) + add-on (random forest’s 7 features) | 89.3% |
| Basic (RBF-DNN’s 7 features) + add-on (RBF-DNN’s 7 features) | 88.3% |
| Basic (all 21 features) + add-on (RBF-DNN’s 7 features) | 91.9% |

| Phase | Offline | Offline | Online |
|---|---|---|---|
| Action | Construction of an RBF-DNN | Construction of a neural network using MATLAB | Running a neural network in MATLAB |
| Time cost | About 30 s | <5 s | <1 s |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Loh, C.-H.; Chen, Y.-C.; Su, C.-T. Using Transfer Learning and Radial Basis Function Deep Neural Network Feature Extraction to Upgrade Existing Product Fault Detection Systems for Industry 4.0: A Case Study of a Spring Factory. Appl. Sci. 2024, 14, 2913. https://doi.org/10.3390/app14072913
