A Knowledge Graph Framework to Support Life Cycle Assessment for Sustainable Decision-Making

Abstract

1. Introduction and Motivation

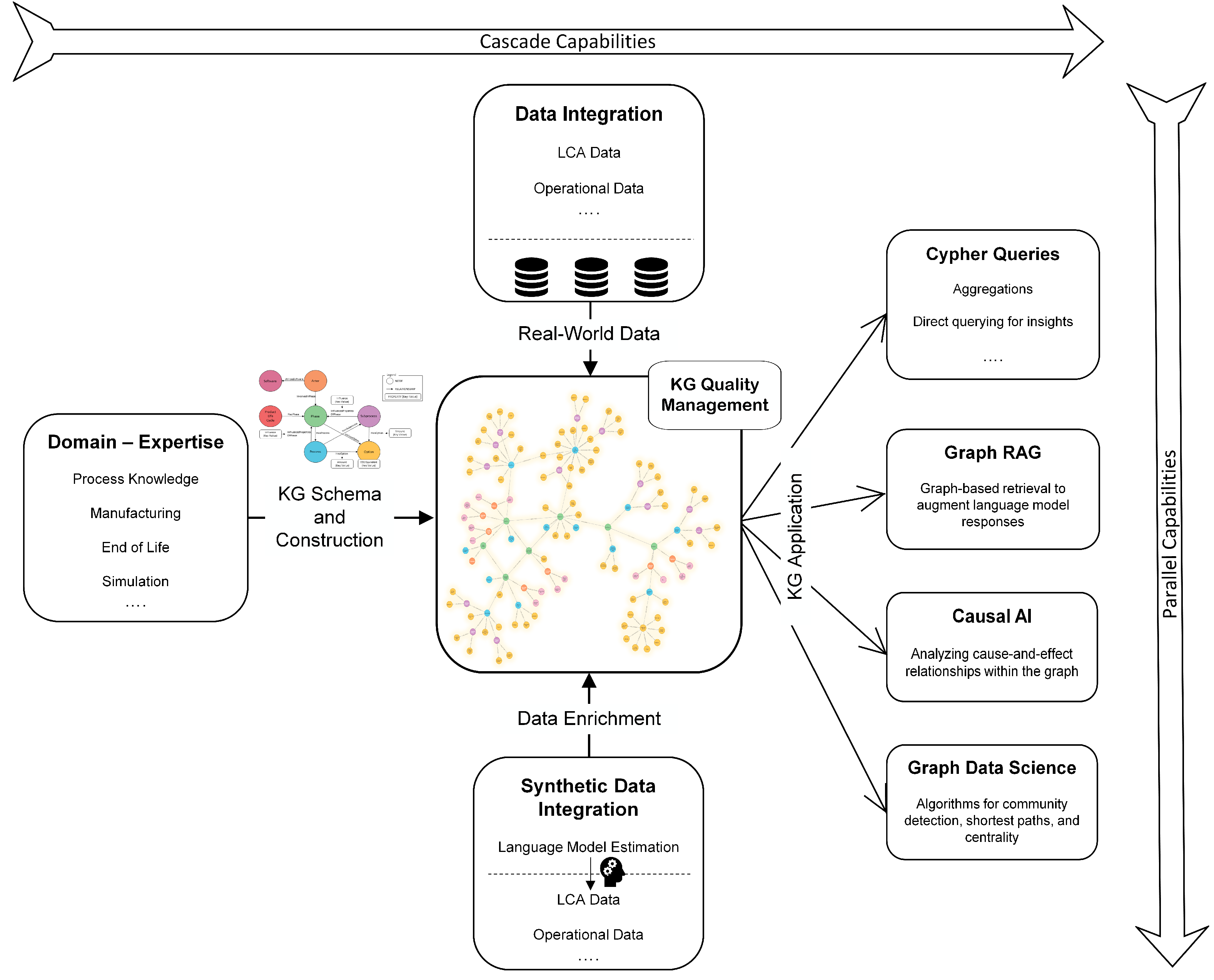

- Develop a comprehensive methodology that uses knowledge graphs (KGs) to integrate, enrich, and analyze heterogeneous data sources, including domain expertise, databases, and language models, to support life cycle assessment (LCA).

- Enable the incorporation of early-stage design decisions into the LCA process by modeling the entire product life cycle within a KG, thereby highlighting dependencies and influences across different phases.

- Facilitate the LCA of products that are traditionally difficult to analyze due to data scarcity or complexity by using language models to estimate missing data and incorporating the estimates into the KG.

- Demonstrate how the constructed KG can support analytical applications to provide actionable insights for decision-making.

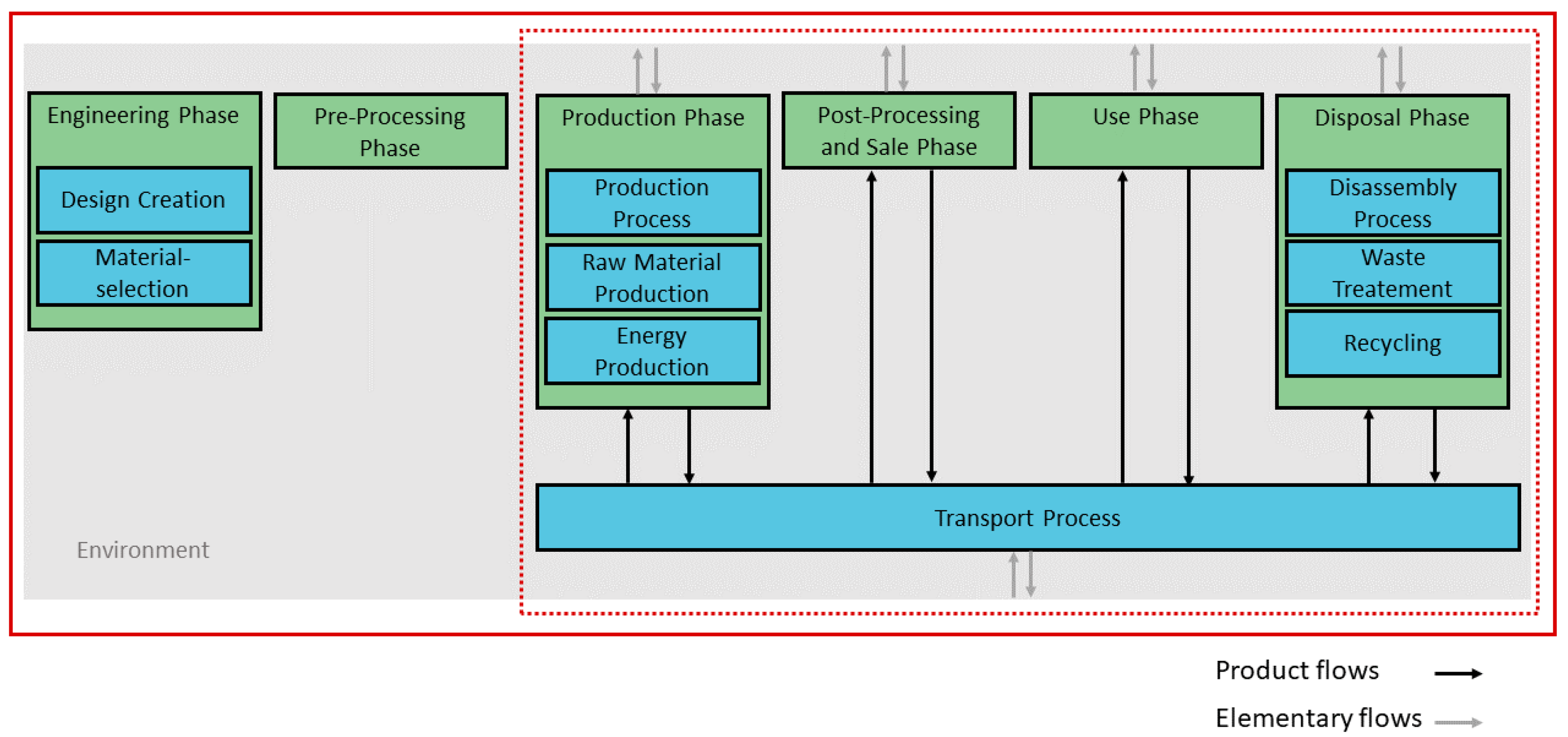

2. Theoretical Foundations and Related Works

2.1. Life-Cycle Assessments

2.2. Knowledge Graphs

- $V$ is a finite set of vertices (representing entities, concepts, or objects).

- $E \subseteq V \times V$ is a finite set of directed edges (representing relationships between entities).

- $L$ is a finite set of labels.

- $\lambda: V \cup E \rightarrow \mathcal{P}(L)$ is a labeling function assigning a (possibly empty) set of labels from $L$ to each vertex or edge, where $\mathcal{P}(L)$ denotes the power set of $L$.

- $\pi: (V \cup E) \times K \rightharpoonup D$ is a partial function that assigns properties, where $K$ is a set of property keys and $D$ is a domain of data values (e.g., strings, integers, and floats). If $\pi(x, k)$ is defined for some $x \in V \cup E$ and $k \in K$, then $\pi(x, k) \in D$.

- Each vertex, $v \in V$, represents an entity, object, or concept. A vertex may have zero, one, or multiple labels indicating its type or category: $\lambda(v) \subseteq L$.

- Each edge, $e = (u, v) \in E$, is a directed relationship between vertices $u, v \in V$, and may have zero, one, or multiple labels: $\lambda(e) \subseteq L$. Labels typically denote the semantic role of the relationship (e.g., isPartOf, producedBy).

- Both vertices and edges may have properties, defined as key–value pairs. For a vertex, $v \in V$, or an edge, $e \in E$, and a key, $k \in K$, $\pi(v, k)$ or $\pi(e, k)$ returns a literal value in $D$ if defined. For example, a node representing a product might have properties like weight = 5.3 (in kilograms) and material = “steel”.
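The definition above can be made concrete with a minimal Python sketch of a labeled property graph. The class `PropertyGraph`, its method names, and the node identifiers `bracket`, `housing`, and `printer` are illustrative assumptions; only the relationship labels (isPartOf, producedBy) and the example properties (weight = 5.3, material = "steel") are taken from the text.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, Iterable, Optional, Set, Tuple, Union

Vertex = str
Edge = Tuple[str, str]  # directed edge stored as (source, target)
Element = Union[Vertex, Edge]


@dataclass
class PropertyGraph:
    """Hypothetical in-memory labeled property graph (illustration only)."""

    vertices: Set[Vertex] = field(default_factory=set)
    edges: Set[Edge] = field(default_factory=set)
    # Labeling function: maps each vertex or edge to a set of labels.
    labels: Dict[Element, FrozenSet[str]] = field(default_factory=dict)
    # Partial property function: defined only for stored (element, key) pairs.
    properties: Dict[Tuple[Element, str], Any] = field(default_factory=dict)

    def add_vertex(self, vertex: Vertex, labels: Iterable[str] = (), **props: Any) -> None:
        self.vertices.add(vertex)
        self._annotate(vertex, labels, props)

    def add_edge(self, source: Vertex, target: Vertex,
                 labels: Iterable[str] = (), **props: Any) -> None:
        # Edges may only connect vertices that already exist in the graph.
        if source not in self.vertices or target not in self.vertices:
            raise ValueError("both endpoints must already be vertices")
        edge = (source, target)
        self.edges.add(edge)
        self._annotate(edge, labels, props)

    def _annotate(self, element: Element, labels: Iterable[str], props: Dict[str, Any]) -> None:
        self.labels[element] = frozenset(labels)
        for key, value in props.items():
            self.properties[(element, key)] = value

    def labels_of(self, element: Element) -> FrozenSet[str]:
        return self.labels.get(element, frozenset())

    def prop(self, element: Element, key: str) -> Optional[Any]:
        # Returns None where the property function is undefined.
        return self.properties.get((element, key))


g = PropertyGraph()
g.add_vertex("bracket", labels={"Product"}, weight=5.3, material="steel")
g.add_vertex("housing", labels={"Product"})
g.add_vertex("printer", labels={"Machine"})
g.add_edge("bracket", "housing", labels={"isPartOf"})
g.add_edge("bracket", "printer", labels={"producedBy"})
```

Storing edges as a set of `(source, target)` pairs allows at most one edge per ordered pair of vertices. A graph database such as Neo4j permits parallel relationships, so a production model would need edge identifiers.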

2.3. Related Works and Research Gap

3. Methodology

3.1. Domain Expertise

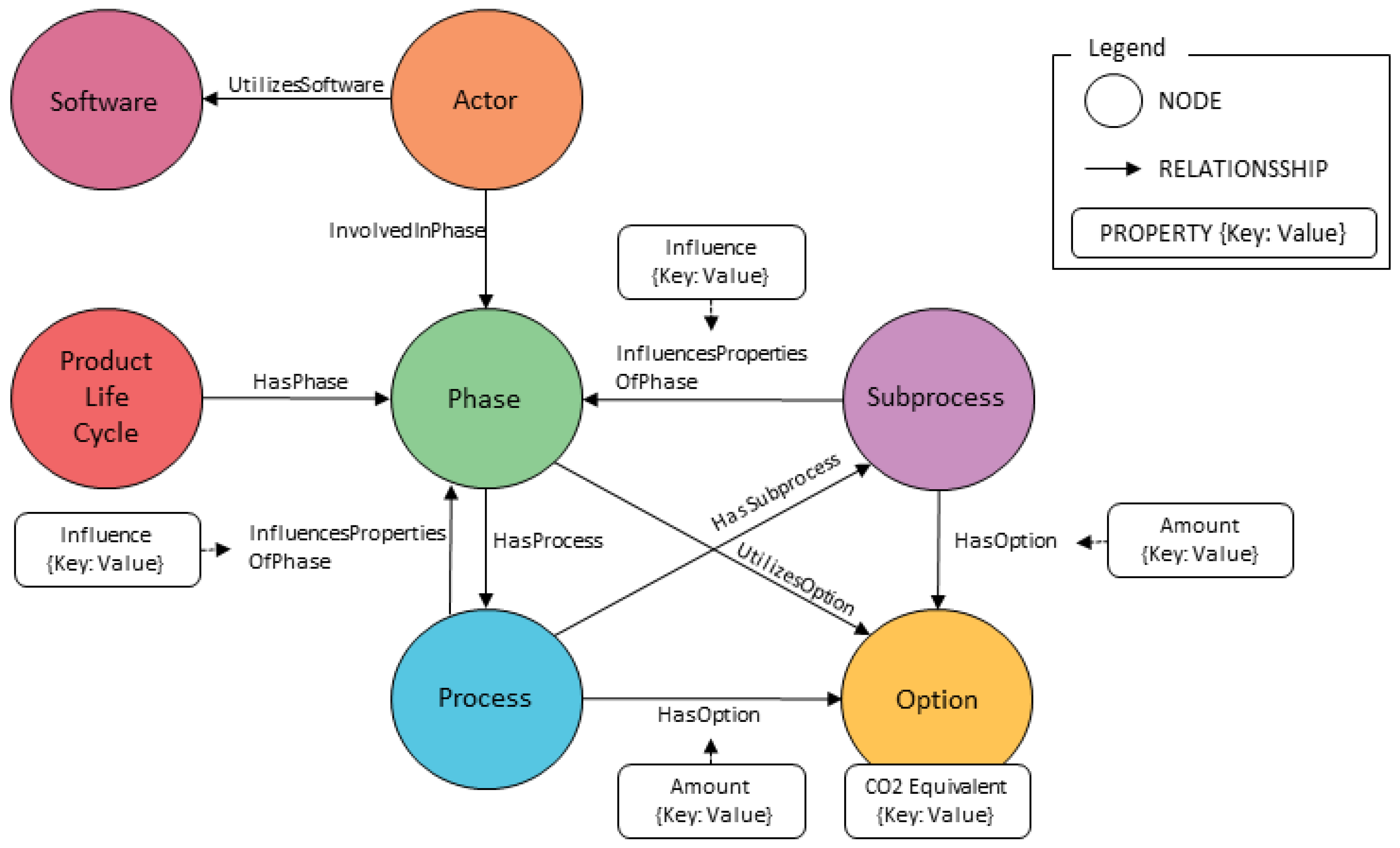

3.2. KG Schema and KG Construction

3.3. Data Integration

3.4. Synthetic Data Integration

3.5. KG Quality Management

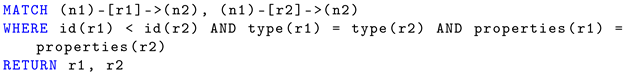

Listing 1. Cypher Query for Identifying Duplicate Nodes.

Listing 2. Cypher Query for Identifying Duplicate Relationships.
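The duplicate checks named in Listings 1 and 2 can be illustrated outside the database with a short Python sketch. It assumes that two nodes are duplicates when they share the same label and name, and that two relationships are duplicates when they share source, type, and target. These grouping keys, the record layout, and the `PART_OF` relationship type are assumptions made for illustration.

```python
from collections import Counter


def duplicate_nodes(nodes):
    """Return {(label, name): count} for node keys that occur more than once."""
    counts = Counter((n["label"], n["name"]) for n in nodes)
    return {key: count for key, count in counts.items() if count > 1}


def duplicate_relationships(relationships):
    """Return {(source, type, target): count} for relationships stored more than once."""
    counts = Counter((r["source"], r["type"], r["target"]) for r in relationships)
    return {key: count for key, count in counts.items() if count > 1}


# Hypothetical sample records; ids 1 and 2 describe the same process twice.
nodes = [
    {"id": 1, "label": "Process", "name": "3D printing process"},
    {"id": 2, "label": "Process", "name": "3D printing process"},
    {"id": 3, "label": "Phase", "name": "Production phase"},
]
relationships = [
    {"source": 1, "type": "PART_OF", "target": 3},
    {"source": 1, "type": "PART_OF", "target": 3},
    {"source": 2, "type": "PART_OF", "target": 3},
]

print(duplicate_nodes(nodes))
print(duplicate_relationships(relationships))
```

Grouping and counting in this way mirrors an aggregate-then-filter query. Which properties make up the duplicate key is a modeling decision that depends on the KG schema.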

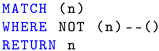

Listing 3. Cypher Query for Finding Isolated Nodes.
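As a rough illustration of the isolated-node check, the following Python sketch treats a node as isolated when it appears in no relationship, either as source or as target. The function name and the node `Orphan option` are hypothetical; the phase names are borrowed from the case study tables.

```python
def isolated_nodes(node_ids, edges):
    """Return the sorted list of nodes that take part in no relationship."""
    connected = set()
    for source, target in edges:
        connected.add(source)
        connected.add(target)
    return sorted(set(node_ids) - connected)


node_ids = ["Production phase", "Use phase", "Disposal phase", "Orphan option"]
edges = [
    ("Production phase", "Use phase"),
    ("Use phase", "Disposal phase"),
]

print(isolated_nodes(node_ids, edges))
```

Under this definition a node whose only relationship is a self-loop counts as connected. Whether that is desirable depends on the quality rules chosen for the KG.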

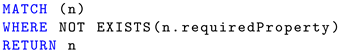

Listing 4. Cypher Query for Nodes with Missing Properties.
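A completeness check of this kind can be sketched in Python as follows. The required keys `name` and `gwp`, the node identifiers, and the dictionary layout are assumptions for illustration. The material names and GWP values are taken from the materials table in this article.

```python
def nodes_missing_properties(nodes, required_keys):
    """Map each node id to the required property keys that are absent or None."""
    report = {}
    for node_id, props in nodes.items():
        missing = [key for key in required_keys if props.get(key) is None]
        if missing:
            report[node_id] = missing
    return report


nodes = {
    "cement_ii": {"name": "Cement (CEM II 42.5)", "gwp": 0.795},
    "fly_ash": {"name": "Fly ash", "gwp": 0.0},       # zero is a valid value
    "clay_plaster": {"name": "Clay plaster", "gwp": None},
    "unnamed": {"gwp": 0.042},
}

print(nodes_missing_properties(nodes, ("name", "gwp")))
```

The check tests for `None` and not for falsy values, so a legitimate GWP of 0.0 is not reported as missing.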

3.6. KG Application

4. Case Study: 3D Printing

4.1. Relevance of 3DP in Advancing Sustainability

- Production on demand: 3DP enables the production of only the necessary quantities of a product when needed, thus minimizing the need for large inventories and reducing the risk of overproduction [50].

- Efficient use of resources: by promoting more efficient use of resources, as well as enabling recycling and remanufacturing processes, 3DP aligns closely with the principles of a circular economy [49].

4.2. Domain Expertise of 3DP

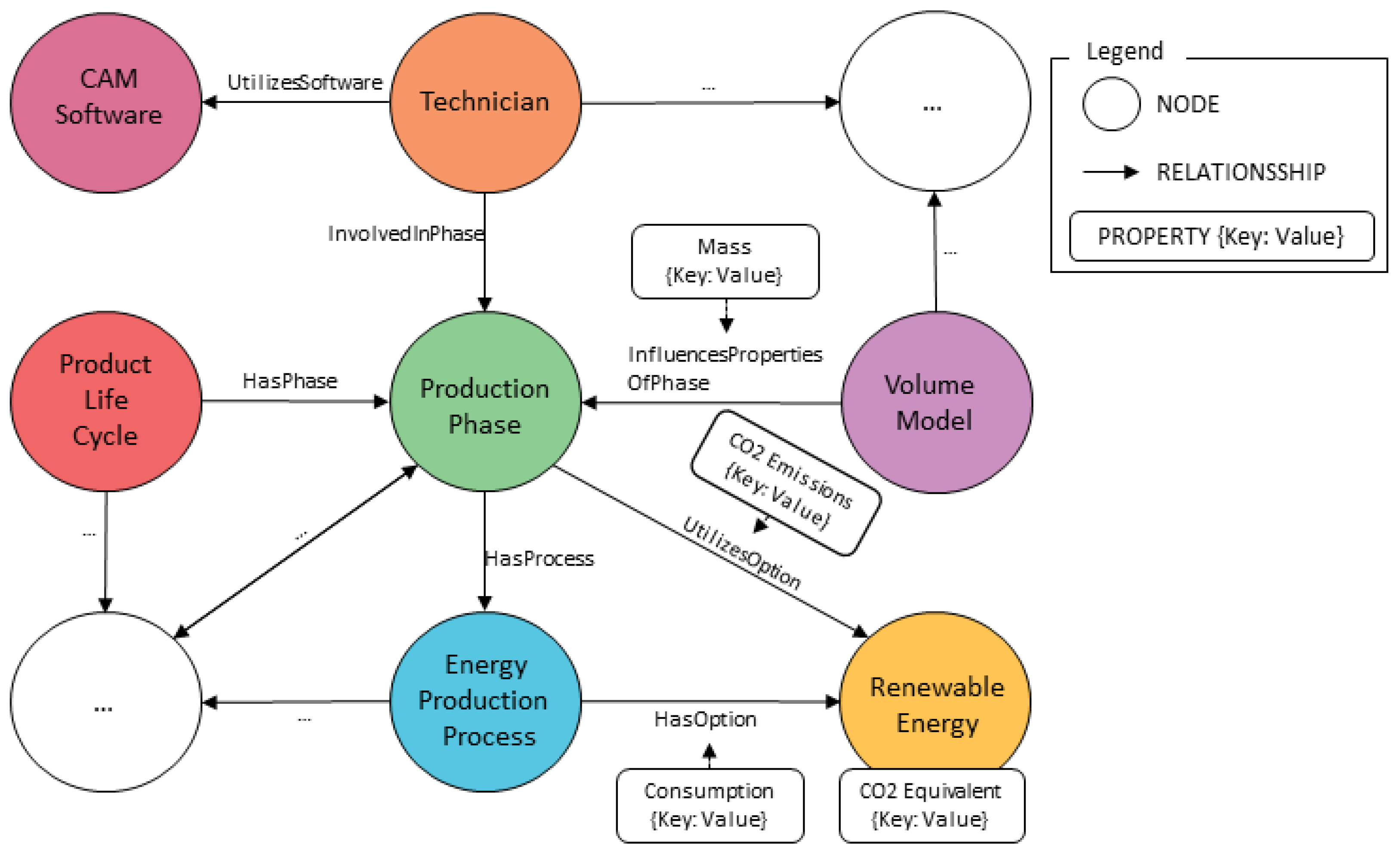

4.3. KG Schema and KG Construction

4.4. Data Integration Through Databases

4.5. Synthetic Data Integration

4.6. KG Quality Management

4.7. Developed KG

4.8. KG Application: Cypher Queries

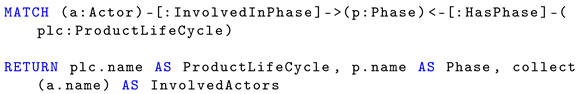

4.8.1. Roles Involved in Life Cycle Phases

Listing 5. Cypher Query for Retrieving the Roles Involved in Each Product Life Cycle Phase.

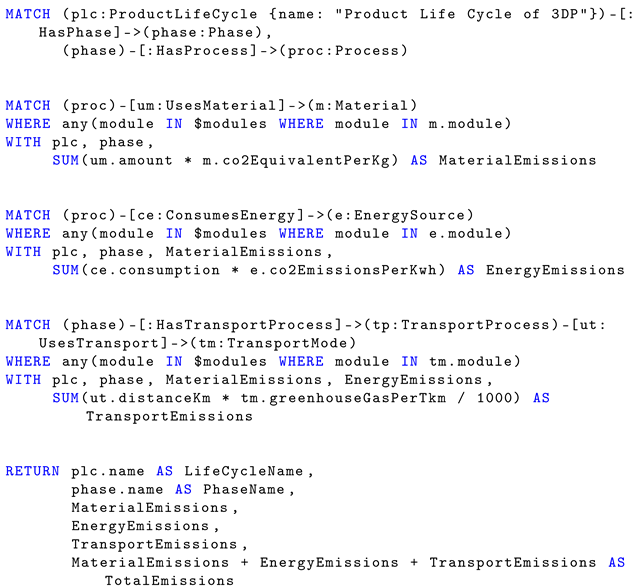

4.8.2. Environmental Impact Assessment

Listing 6. Cypher Query for Calculating Emissions.

4.8.3. Influence of Engineering Decisions on Production Phase

Listing 7. Cypher Query for Identifying Influences in 3D Printing.

5. Discussion

6. Limitations

7. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- European Commission. The European Green Deal; European Commission: Brussels, Belgium, 2019.

- Laurent, A.; Clavreul, J.; Bernstad, A.; Bakas, I.; Niero, M.; Gentil, E.; Christensen, T.H.; Hauschild, M.Z. Review of LCA studies of solid waste management systems—Part II: Methodological guidance for a better practice. Waste Manag. 2014, 34, 589–606.

- Saade, M.R.M.; Yahia, A.; Amor, B. How has LCA been applied to 3D printing? A systematic literature review and recommendations for future studies. J. Clean. Prod. 2020, 244, 118803.

- Vilches, A.; Garcia-Martinez, A.; Sanchez-Montanes, B. Life cycle assessment (LCA) of building refurbishment: A literature review. Energy Build. 2017, 135, 286–301.

- ISO 14040:2006; Environmental Management–Life Cycle Assessment–Principles and Framework. ISO: Geneva, Switzerland, 2006.

- ISO 14044:2006; Environmental Management—Life Cycle Assessment—Requirements and Guidelines. ISO: Geneva, Switzerland, 2006.

- Curran, M.A. Overview of Goal and Scope Definition in Life Cycle Assessment. In Goal and Scope Definition in Life Cycle Assessment; Springer: Dordrecht, The Netherlands, 2017; pp. 1–62.

- Suh, S.; Huppes, G. Methods for life cycle inventory of a product. J. Clean. Prod. 2005, 13, 687–697.

- Hauschild, M.Z.; Bonou, A.; Olsen, S.I. Life cycle interpretation. In Life Cycle Assessment: Theory and Practice; Springer Nature: Cham, Switzerland, 2018; pp. 323–334.

- Curran, M.A.; Mann, M.; Norris, G. The international workshop on electricity data for life cycle inventories. J. Clean. Prod. 2005, 13, 853–862.

- Finnveden, G.; Hauschild, M.Z.; Ekvall, T.; Guinée, J.; Heijungs, R.; Hellweg, S.; Koehler, A.; Pennington, D.; Suh, S. Recent developments in life cycle assessment. J. Environ. Manag. 2009, 91, 1–21.

- Soimakallio, S.; Cowie, A.; Brandão, M.; Finnveden, G.; Ekvall, T.; Erlandsson, M.; Koponen, K.; Karlsson, P.E. Attributional life cycle assessment: Is a land-use baseline necessary? Int. J. Life Cycle Assess. 2015, 20, 1364–1375.

- Ekvall, T.; Azapagic, A.; Finnveden, G.; Rydberg, T.; Weidema, B.P.; Zamagni, A. Attributional and consequential LCA in the ILCD handbook. Int. J. Life Cycle Assess. 2016, 21, 293–296.

- Yang, Y. Two sides of the same coin: Consequential life cycle assessment based on the attributional framework. J. Clean. Prod. 2016, 127, 274–281.

- Weidema, B.P.; Pizzol, M.; Schmidt, J.; Thoma, G. Attributional or consequential life cycle assessment: A matter of social responsibility. J. Clean. Prod. 2018, 174, 305–314.

- Schaubroeck, S.; Schaubroeck, T.; Baustert, P.; Gibon, T.; Benetto, E. When to replace a product to decrease environmental impact?—A consequential LCA framework and case study on car replacement. Int. J. Life Cycle Assess. 2020, 25, 1500–1521.

- Akroyd, J.; Mosbach, S.; Bhave, A.; Kraft, M. Universal Digital Twin—A Dynamic Knowledge Graph. Data-Centric Eng. 2021, 2, e14.

- Ehrlinger, L.; Wöß, W. Towards a Definition of Knowledge Graphs. In Proceedings of the International Conference on Semantic Systems, Leipzig, Germany, 12–15 September 2016.

- Yuan, J.; Jin, Z.; Guo, H.; Jin, H.; Zhang, X.; Smith, T.H.; Luo, J. Constructing biomedical domain-specific knowledge graph with minimum supervision. Knowl. Inf. Syst. 2019, 62, 317–336.

- Agrawal, G.; Deng, Y.; Park, J.; Liu, H.; Chen, Y. Building Knowledge Graphs from Unstructured Texts: Applications and Impact Analyses in Cybersecurity Education. Information 2022, 13, 526.

- MacLean, F. Knowledge graphs and their applications in drug discovery. Expert Opin. Drug Discov. 2021, 16, 1057–1069.

- Zhang, W.; Liu, Y.; Jiang, L.; Shah, N.; Fei, X.; Cai, H. The Construction of a Domain Knowledge Graph and Its Application in Supply Chain Risk Analysis. In Proceedings of the Advances in E-Business Engineering for Ubiquitous Computing, ICEBE 2019; Lecture Notes on Data Engineering and Communications Technologies; Springer: Berlin/Heidelberg, Germany, 2019; Volume 41, pp. 464–478.

- Saad, M.; Zhang, Y.; Tian, J.; Jia, J. A graph database for life cycle inventory using Neo4j. J. Clean. Prod. 2023, 393, 136344.

- Robinson, I.; Webber, J.; Eifrem, E. Graph Databases: New Opportunities for Connected Data; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015.

- Purohit, S.; Van, N.; Chin, G. Semantic Property Graph for Scalable Knowledge Graph Analytics. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 2672–2677.

- Sharma, C.; Sinha, R. A schema-first formalism for labeled property graph databases: Enabling structured data loading and analytics. In Proceedings of the 6th IEEE/ACM International Conference on Big Data Computing, Applications and Technologies, Auckland, New Zealand, 2–5 December 2019; pp. 71–80.

- Baken, N. Linked data for smart homes: Comparing RDF and labeled property graphs. In Proceedings of the LDAC2020—8th Linked Data in Architecture and Construction Workshop, Dublin, Ireland, 17–19 June 2020; pp. 23–36.

- Mynarz, J.; Haniková, K.; Svátek, V. Test-driven Knowledge Graph Construction. In Proceedings of the KGCW’23: 4th International Workshop on Knowledge Graph Construction, Hersonissos, Greece, 28 May 2023.

- Zhong, L.; Wu, J.; Li, Q.; Peng, H.; Wu, X. A Comprehensive Survey on Automatic Knowledge Graph Construction. arXiv 2023, arXiv:2302.05019.

- Nicholson, D.N.; Greene, C. Constructing knowledge graphs and their biomedical applications. Comput. Struct. Biotechnol. J. 2020, 18, 1414–1428.

- Chen, H.; Shen, X.; Lv, Q.; Wang, J.; Ni, X.; Ye, J. SAC-KG: Exploiting Large Language Models as Skilled Automatic Constructors for Domain Knowledge Graph. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Kerrville, TX, USA, 2024.

- Chen, H.; Cao, G.; Chen, J.; Ding, J. A Practical Framework for Evaluating the Quality of Knowledge Graph. In Proceedings of the International Conference on Knowledge Management, Zamora, Spain, 15–18 July 2019; pp. 111–122.

- Padia, A.; Ferraro, F.; Finin, T. Enhancing Knowledge Graph Consistency through Open Large Language Models: A Case Study. In Proceedings of the AAAI Symposium, Stanford, CA, USA, 25–27 March 2024; pp. 203–208.

- Yang, Y.; Huang, C.; Xia, L.; Li, C. Knowledge Graph Contrastive Learning for Recommendation. In Proceedings of the 45th International ACM SIGIR Conference, Madrid, Spain, 11–15 July 2022.

- Tran, T.; Gad-Elrab, M.H.; Stepanova, D.; Kharlamov, E.; Strötgen, J. Fast Computation of Explanations for Inconsistency in Large-Scale Knowledge Graphs. In Proceedings of the Web Conference, Taipei, Taiwan, 20–24 April 2020.

- Xue, B.; Zou, L. Knowledge Graph Quality Management: A Comprehensive Survey. IEEE Trans. Knowl. Data Eng. 2023, 35, 4969–4988.

- Zhang, Y.; Luo, X.; Buis, J.J.; Sutherland, J. LCA-oriented semantic representation for the product life cycle. J. Clean. Prod. 2015, 86, 146–162.

- Wang, Y.; Tao, J.; Liu, W.; Peng, T. A Knowledge-enriched Framework for Life Cycle Assessment in Manufacturing. Procedia CIRP 2022, 105, 55–60.

- Shaw, C.; Hoare, C.; de Riet, M.; de Andrade Pereira, F.; O’Donnell, J. An end-to-end Asset Life Cycle Knowledge Graph. In Proceedings of the 2024 W78 Conference, CIB W78, Marrakech, Morocco, 2–3 October 2024; pp. 81–90.

- Kuczenski, B.; Davis, C.; Rivela, B.; Janowicz, K. Semantic catalogs for life cycle assessment data. J. Clean. Prod. 2016, 137, 1109–1117.

- Bertin, B.; Scuturici, V.M.; Risler, E.; Pinon, J.M. A semantic approach to life cycle assessment applied on energy environmental impact data management. In Proceedings of the 2012 Joint EDBT/ICDT Workshops, Berlin, Germany, 26–30 March 2012; pp. 87–94.

- Di, F.; Wu, J.; Li, J.; Wang, Y. Research on the knowledge graph based life cycle carbon footprint labeling representation model for electromechanical products. In Proceedings of the 5th International Conference on Computer Information Science and Application Technology (CISAT 2022), International Society for Optical Engineering (SPIE), Chongqing, China, 20 October 2022; Volume 12451, p. 124511O.

- Marconnet, B.; Gaha, R.; Eynard, B. Context-Aware Sustainable Design: Knowledge Graph-Based Methodology for Proactive Circular Disassembly of Smart Products. In Proceedings of the Technological Systems, Sustainability and Safety, Jeddah, Saudi Arabia, 15–16 May 2024.

- Peng, T.; Gao, L.; Agbozo, R.S.; Xu, Y.; Svynarenko, K.; Wu, Q.; Li, C.; Tang, R. Knowledge graph-based mapping and recommendation to automate life cycle assessment. Adv. Eng. Inform. 2024, 62, 102752.

- Kim, S.; Dale, B. Life Cycle Inventory Information of the United States Electricity System (11/17 pp). Int. J. Life Cycle Assess. 2005, 10, 294–304.

- Nadagouda, M.N.; Ginn, M.; Rastogi, V. A review of 3D printing techniques for environmental applications. Curr. Opin. Chem. Eng. 2020, 28, 173–178.

- Chen, X.; Chen, G.; Wang, G.; Zhu, P.; Gao, C. Recent Progress on 3D-Printed Polylactic Acid and Its Applications in Bone Repair. Adv. Eng. Mater. 2019, 22, 1901065.

- Yin, H.; Qu, M.; Zhang, H.; Lim, Y. 3D Printing and Buildings: A Technology Review and Future Outlook. Technol. + Des. 2018, 2, 94–111.

- Wang, D.; Zhang, T.; Guo, X.; Ling, D.; Hu, L.; Jiang, G. The potential of 3D printing in facilitating carbon neutrality. J. Environ. Sci. 2023, 130, 85–91.

- Annibaldi, V.; Rotilio, M. Energy consumption consideration of 3D printing. In Proceedings of the 2019 II Workshop on Metrology for Industry 4.0 and IoT (MetroInd 4.0 & IoT), Naples, Italy, 4–6 June 2019; pp. 243–248.

- Jandyal, A.; Chaturvedi, I.; Wazir, I.; Raina, A.; Ul Haq, M.I. 3D printing—A review of processes, materials and applications in industry 4.0. Sustain. Oper. Comput. 2022, 3, 33–42.

- Gebler, M.; Schoot Uiterkamp, A.J.; Visser, C. A global sustainability perspective on 3D printing technologies. Energy Policy 2014, 74, 158–167.

- Elbadawi, M.; Basit, A.W.; Gaisford, S. Energy consumption and carbon footprint of 3D printing in pharmaceutical manufacture. Int. J. Pharm. 2023, 639, 122926.

- Cucchiella, F.; Gastaldi, M.; Trosini, M. Investments and cleaner energy production: A portfolio analysis in the Italian electricity market. J. Clean. Prod. 2017, 142, 121–132.

- Wei, C.; Liu, F.; Zhou, X.; Xie, J. Fine energy consumption allowance of workpieces in the mechanical manufacturing industry. Energy 2016, 114, 623–633.

- Hauck, S.; Greif, L. Facilitating the Preparation of Life Cycle Assessment Through Subject-Oriented Process Modeling: A Methodological Framework. In Proceedings of the Subject-Oriented Business Process Management. Models for Designing Digital Transformations; Elstermann, M., Lederer, M., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 173–188.

- MacDonald, E.; Wicker, R. Multiprocess 3D printing for increasing component functionality. Science 2016, 353, aaf2093.

- Wesselak, V.; Voswinckel, S. Photovoltaik: Wie Sonne zu Strom Wird; Springer Vieweg: Berlin/Heidelberg, Germany, 2012.

- Goedert, J.D.; Bonsell, J.; Samura, F. Integrating Laser Scanning and Rapid Prototyping to Enhance Construction Modeling. J. Archit. Eng. 2005, 11, 71–74.

- Yao, A. Applications of 3D scanning and reverse engineering techniques for quality control of quick response products. Int. J. Adv. Manuf. Technol. 2005, 26, 1284–1288.

- Kokkinis, D.; Schaffner, M.; Studart, A. Multimaterial magnetically assisted 3D printing of composite materials. Nat. Commun. 2015, 6, 8643.

- Lee, J.Y.; An, J.; Chua, C. Fundamentals and applications of 3D printing for novel materials. Appl. Mater. Today 2017, 7, 120–133.

- Zhang, Y.; Zhang, F.; Yan, Z.; Ma, Q.; Li, X.; Huang, Y.; Rogers, J. Printing, folding and assembly methods for forming 3D mesostructures in advanced materials. Nat. Rev. Mater. 2017, 2, 17019.

- Rother, H. 3D-Drucken… und Dann?: Weiterbearbeitung, Verbindung & Veredelung von 3D-Druck-Teilen; Carl Hanser Verlag GmbH Co. KG: Munich, Germany, 2017.

- Rapp, P.; Hotz, F. Perfekte 3D-Drucke mit Simplify3D; Carl Hanser Verlag GmbH Co. KG: Munich, Germany, 2022.

- Kabir, S.; Mathur, K.; Seyam, A. A critical review on 3D printed continuous fiber-reinforced composites: History, mechanism, materials and properties. Compos. Struct. 2020, 232, 111476.

- Ngo, T.; Kashani, A.; Imbalzano, G.; Nguyen, K.; Hui, D. Additive manufacturing (3D printing): A review of materials, methods, applications and challenges. Compos. Part B Eng. 2018, 143, 172–196.

- Zhang, J.; Wang, J.; Dong, S.; Yu, X.; Han, B. A review of the current progress and application of 3D printed concrete. Compos. Part A Appl. Sci. Manuf. 2019, 125, 105533.

- Lemu, H. Study of capabilities and limitations of 3D printing technology. AIP Conf. Proc. 2012, 1431, 857–865.

- Leach, N. 3D Printing in Space. Archit. Des. 2014, 84, 108–113.

- Boon, W.; van Wee, B. Influence of 3D printing on transport: A theory and experts judgment based conceptual model. Transp. Rev. 2018, 38, 556–575.

- Birtchnell, T.; Urry, J. Fabricating Futures and the Movement of Objects. Mobilities 2013, 8, 388–405.

- Gorji, N.; O’Connor, R.; Brabazon, D. XPS and SEM characterization for powder recycling within 3d printing process. In Proceedings of the ESAFORM 2021, 24th International Conference on Material Forming, Liège, Belgium, 14–16 April 2021.

- Patterson, B.; Cordes, N.; Henderson, K.; Pacheco, R.; Herman, M.; James, C.E.; Mertens; Xiao, X.; Williams, J.J.; Chawla, N. In situ Synchrotron X-ray Tomographic Imaging of 3D Printed Materials During Uniaxial Loading. Microsc. Microanal. 2016, 22, 1760–1761.

- Byard, D.J.; Woern, A.L.; Oakley, R.; Fiedler, M.J.; Snabes, S.L.; Pearce, J.M. Green fab lab applications of large-area waste polymer-based additive manufacturing. Addit. Manuf. 2019, 27, 515–525.

- Beltrán, F.; Arrieta, M.; Moreno, E.; Gaspar, G.; Muneta, L.M.; Carrasco-Gallego, R.; Yáñez, S.; Hidalgo-Carvajal, D.; de la Orden, M.U.; Urreaga, J.M. Evaluation of the Technical Viability of Distributed Mechanical Recycling of PLA 3D Printing Wastes. Polymers 2021, 13, 1247.

- Vidakis, N.; Petousis, M.; Tzounis, L.; Maniadi, A.; Velidakis, E.; Mountakis, N.; Papageorgiou, D.; Liebscher, M.; Mechtcherine, V. Sustainable Additive Manufacturing: Mechanical Response of Polypropylene over Multiple Recycling Processes. Sustainability 2020, 13, 159.

- Kumar, R.; Singh, R.; Farina, I. On the 3D printing of recycled ABS, PLA and HIPS thermoplastics for structural applications. PSU Res. Rev. 2018, 2, 115–137.

- Anderson, I. Mechanical Properties of Specimens 3D Printed with Virgin and Recycled Polylactic Acid. Procedia CIRP 2017, 4, 110–115.

- Zhu, C.; Li, T.; Mohideen, M.M.; Hu, P.; Gupta, R.; Ramakrishna, S.; Liu, Y. Realization of Circular Economy of 3D Printed Plastics: A Review. Polymers 2021, 13, 744.

- EN 15804:2012+A2:2019; Sustainability of Construction Works—Environmental Product Declarations—Core Rules for the Product Category of Construction Products. CEN (European Committee for Standardization): Brussels, Belgium, 2019.

| Material | Module | GWP (kg CO₂-eq) | ODP (kg CFC-11-eq) |
|---|---|---|---|
| Wood fiber insulation | A2 | 0.367 | $7.33 \times 10^{-10}$ |
| Fibre cement facade panel | C2 | 0.042 | $1.08 \times 10^{-15}$ |
| Clay plaster | A5 | 7.926 | $2.30 \times 10^{-15}$ |
| Fly ash | A1–A3 | 0.000 | 0.000 |
| Cement (CEM IV 42.5) | A1–A3 | 0.688 | $3.79 \times 10^{-15}$ |
| Cement (CEM II 42.5) | A1–A3 | 0.795 | $3.86 \times 10^{-15}$ |

| Metric | Original GWP Values | Estimated GWP Values |
|---|---|---|
| Count | 637 | 637 |
| Mean | 39.13 | 45.32 |
| Standard deviation | 486.45 | 417.73 |
| Minimum | −5674.33 | −1768.63 |
| Median | 0.12 | 0.11 |
| Maximum | 19,900.00 | 6110.47 |

| Attribute | Example 1: Facade Paint (Dispersion Paint) in Module C2 | Example 2: Asphalt Base Layer in Module D |
|---|---|---|
| GPT-4o Estimation | “Given that this product is a type of paint in Modul C2 and based on the GWP patterns observed for similar products, I estimate the GWP for ‘facade paint (dispersion paint)’ to be approximately 0.004.” | “Considering that the ‘asphalt base layer’ is a type of asphalt layer used in Modul D, and noting that similar materials in end-of-life stages often have GWP values close to zero or slightly negative due to recycling credits, I estimate the GWP for the ‘asphalt base layer’ to be approximately −0.025.” |
| Actual GWP | 0.0032 | −0.0263 |
| Estimated GWP | 0.004 | −0.025 |
| AE | 0.0008 | 0.0013 |
| REP | 25.19% | 4.96% |
| Comment | High relative error due to the low actual GWP magnitude; minor deviations yield significant percentage differences. | Low relative error shows a close match between the estimate and the actual GWP value. |
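The two error measures in the table can be reproduced with a short Python sketch, assuming that AE denotes the absolute error |estimated − actual| and REP the relative error in percent, 100 · AE / |actual|. Both definitions and the function names are assumptions made for illustration. Applied to the rounded values shown in the table, the formulas give 25.00% and about 4.94%, slightly below the reported 25.19% and 4.96%. One possible explanation, not confirmed here, is that the reported figures were computed from unrounded GWP values.

```python
def absolute_error(actual, estimated):
    """Absolute difference between an estimate and the reference value."""
    return abs(estimated - actual)


def relative_error_percent(actual, estimated):
    """Absolute error relative to the magnitude of the reference value, in percent."""
    if actual == 0:
        raise ValueError("relative error is undefined for an actual value of zero")
    return 100.0 * absolute_error(actual, estimated) / abs(actual)


# Rounded values from the table (kg CO2-eq).
examples = {
    "Facade paint, C2": (0.0032, 0.004),
    "Asphalt base layer, D": (-0.0263, -0.025),
}

for name, (actual, estimated) in examples.items():
    ae = absolute_error(actual, estimated)
    rep = relative_error_percent(actual, estimated)
    print(f"{name}: AE = {ae:.4f}, REP = {rep:.2f}%")
```

The guard against a zero reference value matters for this data set, since some materials (for example fly ash in modules A1–A3) have a listed GWP of 0.000.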

Top 5 Node Frequency

| Node Name | Count |
|---|---|
| Option | 76 |
| Process | 14 |
| Software | 14 |
| Subprocess | 13 |
| Actor | 8 |

Top 5 In-Degree

| Node Name | Count |
|---|---|
| Production phase | 10 |
| Transport process | 4 |
| Post-processing and sale phase | 4 |
| Use phase | 2 |
| Disposal phase | 2 |

Top 5 Out-Degree

| Node Name | Count |
|---|---|
| 3D printing process | 14 |
| Material option | 8 |
| Post-processing | 7 |
| Recycling | 6 |

| Product Life Cycle | Phase | Involved Actors |
|---|---|---|
| 3DP Component A | Production phase | Technician, operator |
| 3DP Component A | Energy production process | Energy manager, technician |
| 3DP Component A | Use phase | User, maintenance engineer |
| 3DP Component A | Disposal phase | Recycling specialist, waste manager |
| 3DP Prototype B | Design creation | CAD designer, engineer |
| 3DP Prototype B | Pre-processing phase | Material specialist, technician |
| 3DP Prototype B | Post-processing phase | Quality inspector, technician |

Scenario 1: Cement (CEM IV 42.5)

| Phase Name | Emissions (kg CO₂-eq) |
|---|---|
| Production phase—material | 68.8 |
| Production phase—energy | 40.0 |
| Transport phase | 15.0 |
| Total emissions | 123.8 |

Scenario 2: Cement (CEM II 42.5)

| Phase Name | Emissions (kg CO₂-eq) |
|---|---|
| Production phase—material | 79.5 |
| Production phase—energy | 40.0 |
| Transport phase | 10.0 |
| Total emissions | 129.5 |
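The arithmetic behind the two scenario totals can be checked with a small Python sketch. The material contributions of 68.8 and 79.5 kg CO₂-eq are consistent with the A1–A3 GWP factors from the materials table (0.688 and 0.795) multiplied by 100 units of cement. That quantity is inferred from the numbers and is an assumption, as are the function and variable names.

```python
# GWP factors for modules A1-A3 from the materials table (kg CO2-eq per unit).
GWP_PER_UNIT = {
    "CEM IV 42.5": 0.688,
    "CEM II 42.5": 0.795,
}


def scenario_emissions(material, quantity, energy, transport):
    """Sum material, energy, and transport emissions for one scenario."""
    material_emissions = GWP_PER_UNIT[material] * quantity
    total = material_emissions + energy + transport
    return {
        "material": round(material_emissions, 1),
        "energy": energy,
        "transport": transport,
        "total": round(total, 1),
    }


# Assumed quantity of 100 units; energy and transport values from the tables.
scenario_1 = scenario_emissions("CEM IV 42.5", quantity=100, energy=40.0, transport=15.0)
scenario_2 = scenario_emissions("CEM II 42.5", quantity=100, energy=40.0, transport=10.0)

print(scenario_1)
print(scenario_2)
print(round(scenario_2["total"] - scenario_1["total"], 1))
```

In these figures the lower material factor of CEM IV 42.5 outweighs its higher transport emissions, so Scenario 1 ends up 5.7 kg CO₂-eq below Scenario 2.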

| Start Node | Influence | End Node |
|---|---|---|
| Volume model | Mass | Production phase |
| Material selection | Transport distance | Transport process |
| CAM software | Support structure | Post-processing and sale process |
| CAM software | Printing time, material consumption, energy consumption | Production phase |
| Volume model | Assembly complexity | Post-processing and sale process |
| Volume model | Disassembly complexity | Disposal phase |
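The influence table can be read as a set of (start node, influence, end node) triples. The following Python sketch, with a hypothetical helper `influences_on`, shows how such triples could be filtered to find every engineering decision that affects a given phase. Splitting the combined CAM software row into three separate triples is a modeling choice made here for illustration.

```python
from collections import defaultdict

# Triples taken from the influence table; the combined row is split into three.
INFLUENCES = [
    ("Volume model", "Mass", "Production phase"),
    ("Material selection", "Transport distance", "Transport process"),
    ("CAM software", "Support structure", "Post-processing and sale process"),
    ("CAM software", "Printing time", "Production phase"),
    ("CAM software", "Material consumption", "Production phase"),
    ("CAM software", "Energy consumption", "Production phase"),
    ("Volume model", "Assembly complexity", "Post-processing and sale process"),
    ("Volume model", "Disassembly complexity", "Disposal phase"),
]


def influences_on(end_node, triples=INFLUENCES):
    """Group the influences that reach `end_node` by their start node."""
    grouped = defaultdict(list)
    for start, influence, end in triples:
        if end == end_node:
            grouped[start].append(influence)
    return dict(grouped)


for start, influence_names in influences_on("Production phase").items():
    print(f"{start}: {', '.join(influence_names)}")
```

Filtering by end node corresponds to following incoming relationships in the graph. The same triples could be grouped by start node to list everything a single design decision affects.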


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Greif, L.; Hauck, S.; Kimmig, A.; Ovtcharova, J. A Knowledge Graph Framework to Support Life Cycle Assessment for Sustainable Decision-Making. Appl. Sci. 2025, 15, 175. https://doi.org/10.3390/app15010175