Abstract

With the dynamic development of imaging technologies and increasing demands in various industrial fields, neural networks are playing a crucial role in advanced design, monitoring, and analysis techniques. This review article presents the latest research advancements in neural network-based imaging, thermal, infrared, and X-ray technologies from 2005 to 2024. It focuses on two main research categories: ‘Technology’ and ‘Application’. The ‘Technology’ category includes neural network-enhanced image sensors, thermal imaging, infrared detectors, and X-ray technologies, while the ‘Application’ category is divided into image processing, robotics and design, object recognition, medical imaging, and security systems. In image processing, significant progress has been made in classification, segmentation, digital image storage, and information classification using neural networks. Robotics and design have seen advancements in mobile robots, navigation, and machine design through neural network integration. Object recognition technologies include neural network-based object detection, face recognition, and pattern recognition. Medical imaging has benefited from innovations in diagnosis, imaging techniques, and disease detection using neural networks. Security systems have improved in terms of monitoring and efficiency through neural network applications. This review aims to provide a comprehensive understanding of the current state and future directions of neural network-based imaging, thermal, infrared, and X-ray technologies.

1. Introduction

Today, image recognition is becoming increasingly important in areas such as facial identification, card registration number reading, searching for anomalies in medical images, and many more, and significant improvements have been made in this field. The present article has therefore been prepared to organise and summarise the state of the art.

Over the past 20 years, image processing technologies have seen significantly increased capabilities in relation to improved quality of recorded images, extension of the recorded spectrum, and greater computing power on the part of digital devices. The development of new image processing methods is important as well. The most popular of these is the use of Artificial Neural Networks (ANNs), which are analysed in this work.

ANNs are among the artificial intelligence technologies that have been developing dynamically since the 1950s. ANNs are based on the concept of the perceptron (1958). Their development has been enabled by backpropagation algorithms and multilayer architectures, which allow for application in increasingly complex tasks. Since the beginning of the 21st century, the increase in computing power and the availability of large datasets have allowed for great progress in the field of deep learning, as expressed by architectures such as Convolutional Neural Networks (LeNet, AlexNet, VGG, ResNet) [1,2,3].

To date, neural networks have been used for recognition, segmentation, reconstruction, and classification of image data, including in non-standard spectral bands such as thermal, infrared, and X-ray images. In medical imaging, neural networks have enabled automatic disease detection (e.g., detection of neoplastic lesions), tissue recognition, and segmentation [4,5]. In the security sector, these technologies have been used for recognising people in low-light conditions [6], for weapon detection [7], and in intelligent systems for monitoring critical infrastructure [8], among others.

The main properties of neural networks include the ability to automatically extract features, flexibility in modelling nonlinear dependencies, and the ability to transfer trained networks between different problems (transfer learning). In recent years, optimisation of algorithms and dedicated hardware systems have made it possible to implement neural networks on embedded devices and to operate them in real time [9]. However, there are continuing challenges, including high demand for training data, sensitivity to interference and variability in the input data, and difficulty in interpreting model decisions.

The purpose of this paper is to review the technological advances and key applications of neural networks in image processing over the last two decades (2005–2024), particularly thermal, infrared, and X-ray imaging.

Section 2 presents the method used to select articles and their division according to the purposes of the proposed solution, then describes the current state-of-the-art. This is followed by a summary and analysis of the collected results in Section 3. A critical evaluation taking into account both the research and knowledge gaps is presented in Section 4. Finally, the full findings are synthesised in Section 5.

2. Literature Review Methodology and State-of-the-Art

The first element in this review is the selection and categorisation of appropriate source material. The next part takes into account the division of the selected materials.

2.1. Article Selection and Research Category Definition

The approach described below was used to identify articles from the Scopus database relating to specific types of image processing using ANNs.

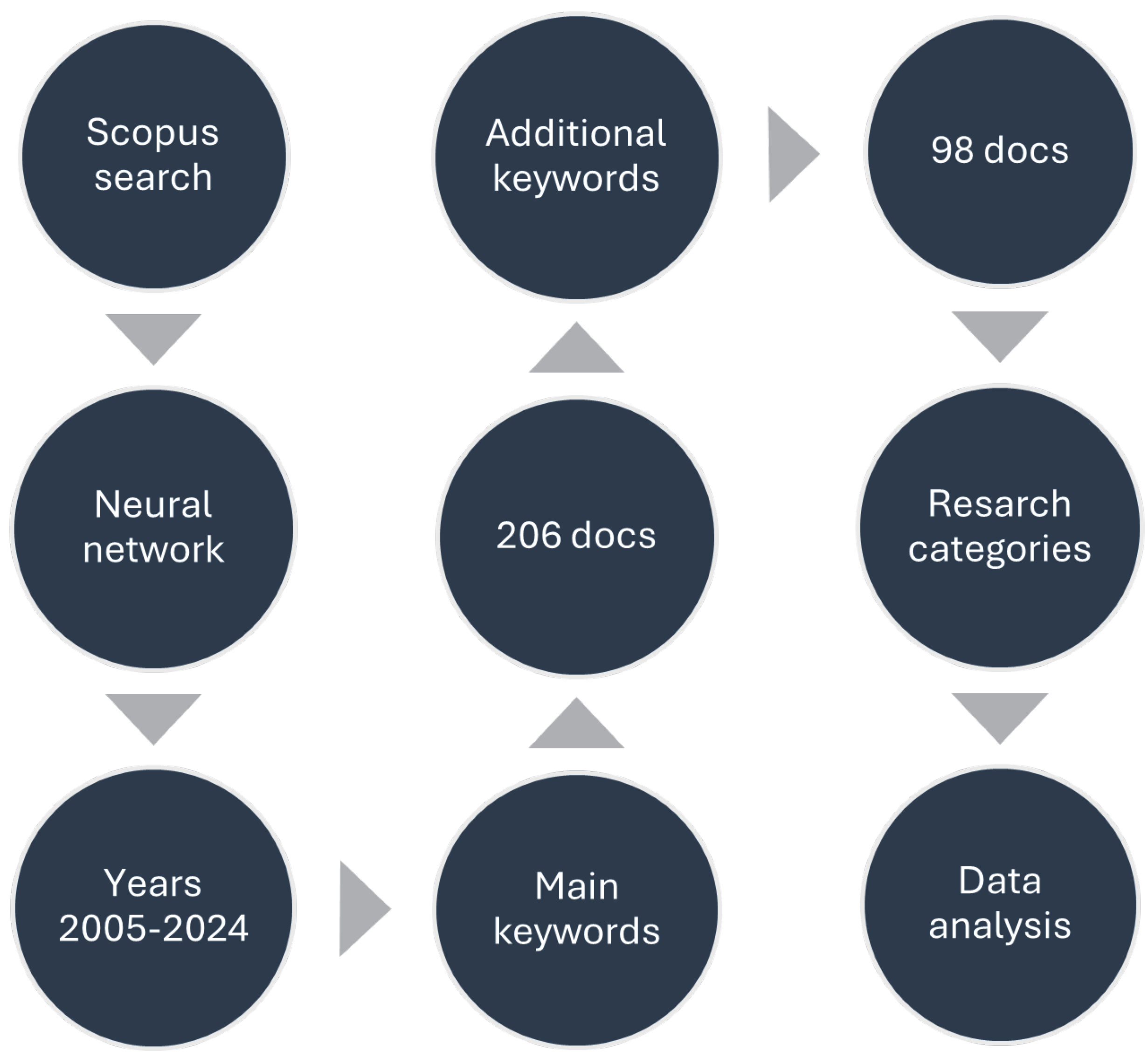

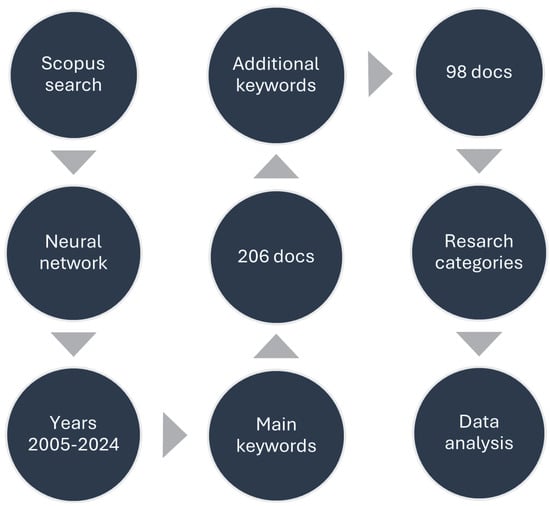

The selection of appropriate categories (domains) was preceded by an extensive search combining specific words in the title, abstract, and keywords of the publications according to the scheme presented in Figure 1.

Figure 1.

The methodology used for data preparation.

In the first step, a search in the Scopus database was applied with the following limitations, all of which needed to be fulfilled:

- A combined field that searches abstracts by: neural network

- Years: between 2005 and 2024

- Limited by language: English

- Limited by publication stage: final

- Limited by subject areas: computer science, engineering

- Limited by main keywords: image sensors OR thermal imaging OR infrared detectors OR X-ray.
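The criteria above can be read as a single conjunctive filter. The following is a minimal sketch of that reading, not the query actually submitted to Scopus; the record layout and field names (`abstract`, `year`, `language`, `stage`, `subject_areas`, `keywords`) are hypothetical, and it is assumed that matching any one listed subject area or main keyword is sufficient.

```python
# Hypothetical record layout: the field names are illustrative assumptions,
# not the actual Scopus export schema.
MAIN_KEYWORDS = {"image sensors", "thermal imaging", "infrared detectors", "x-ray"}
SUBJECT_AREAS = {"computer science", "engineering"}


def matches_first_step(record):
    """Return True when a record satisfies every criterion of the first step."""
    keywords = {k.lower() for k in record["keywords"]}
    areas = {a.lower() for a in record["subject_areas"]}
    return (
        "neural network" in record["abstract"].lower()
        and 2005 <= record["year"] <= 2024
        and record["language"] == "English"
        and record["stage"] == "final"
        and bool(areas & SUBJECT_AREAS)      # assumed: any listed area is enough
        and bool(keywords & MAIN_KEYWORDS)   # keywords are combined with OR
    )


# Made-up record used only to exercise the filter.
example = {
    "abstract": "A convolutional neural network for thermal images.",
    "year": 2019,
    "language": "English",
    "stage": "final",
    "subject_areas": ["Engineering"],
    "keywords": ["Thermal Imaging", "Deep learning"],
}
print(matches_first_step(example))
```

Because all conditions are joined with `and`, a record failing any single criterion (for example, a publication year of 2004) is excluded.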

As a result of this search, a list of 206 articles was obtained. However, because some of the articles were incorrectly classified, we increased the precision of the search by applying an additional filter to check whether at least one of the following keywords was present:


After applying the above constraints, a list of 100 articles was obtained. However, after manual verification it turned out that two articles [10,11] were incorrectly classified by the Scopus database; thus, these were removed from further analysis. On the basis of the remaining articles, the second step divided them into research categories. The first category consisted of a group based on basic keywords, with additional keywords assigned to subsequent categories to build the second group.

Information about the selected publications was exported to a CSV (Comma-Separated Values) file containing data such as title, authors, year of publication, etc., which was then imported into a PostgreSQL 16.2 database. This enabled later analysis through SQL queries for data mining and aggregation. The process was automated by a programme written in Python 3.12.2, which was also used to prepare tables and graphs. These data summaries allowed further analysis to be conducted. The statistical information is presented in Section 3.
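The kind of pipeline described above can be sketched as follows. This is an illustration under stated assumptions rather than the programme used in the study: an in-memory SQLite database from the Python standard library stands in for PostgreSQL so that the example is self-contained, and the column names and sample rows are made up.

```python
import csv
import io
import sqlite3

# Made-up rows standing in for the exported file; a real export has more columns.
SAMPLE_CSV = """title,authors,year
Paper A,Author One,2007
Paper B,Author Two,2016
Paper C,Author Three,2021
"""


def load_publications(csv_text):
    """Load CSV rows into an in-memory SQLite table (a stand-in for PostgreSQL)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE publication (title TEXT, authors TEXT, year INTEGER)")
    rows = csv.DictReader(io.StringIO(csv_text))
    conn.executemany(
        "INSERT INTO publication VALUES (:title, :authors, :year)", rows
    )
    return conn


def count_by_decade(conn):
    """Aggregate the number of publications in each of the two decades."""
    query = """
        SELECT CASE WHEN year <= 2014 THEN '2005-2014' ELSE '2015-2024' END AS decade,
               COUNT(*)
        FROM publication
        GROUP BY decade
        ORDER BY decade
    """
    return conn.execute(query).fetchall()


conn = load_publications(SAMPLE_CSV)
print(count_by_decade(conn))
```

Keeping the aggregation in SQL means the same query pattern can be reused for other groupings, such as document type or location, by changing the grouped column.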

2.2. Artificial Neural Networks Overview

This section provides some basic information before giving an overview of the current state-of-the-art. The starting point is a comparison of the tasks to which Artificial Neural Networks (ANNs) can be applied with the alternative methods of carrying them out. The data are summarised in Table 1.

Table 1.

Comparison of classical methods and neural networks for selected image processing tasks.

Although the idea of an ANN itself is not new, due to their diverse applications it has been necessary to develop new structures. Depending on the purpose, ANNs have been developed in different directions and have had different features. A summary of popular ANN architectures is presented in Table 2.

Table 2.

Examples of neural network architectures for image analysis and processing.

2.3. State-of-the-Art: A Technology Review from 2005 to 2024

Four technology categories were identified during category extraction. Thus, the review of the state-of-the-art is divided into four parts.

2.3.1. Image Sensors

Comparing existing image sensor technologies is complicated because they are very often applied to narrowly defined problems. Thus, there are not enough source data relating to the same case. Despite this, it is still possible to identify techniques that are used for particular classes of problems [27].

One of the most frequently cited motivations for improving image sensors is energy efficiency [8,9,28,29,30,31,32,33]. This is connected to the use of these sensors in battery-powered devices; for cable-powered devices, it means a reduction in operating costs. The next important reason is to increase data processing performance [9,29,34,35]. In [36], reducing memory usage was mentioned as an additional objective. Several techniques are used to address these problems. One of the most common solutions is to implement the neural network in hardware [28,29,30,34,36,37,38,39,40,41], which could be a separate device, an on-chip module, or an FPGA component. Another approach is to introduce techniques that train the neural network more effectively [9,32,42,43,44,45,46]. These vary considerably and include additional training of the first layer, image preparation, the use of multisource remote sensing images, and the inclusion of signals from other sensors.

Another significant feature is the ability to detect important elements of an image [8,38,40,44,47]. This limits the amount of data that must be processed; as a result, the system performs better and is more energy-efficient. The use of fuzzy logic to increase the sensitivity for a wider spectrum of signals was presented in [48]. This is not the only case in which a hybrid approach is used. In expert systems for the detection of skin disease, a combination of neural and Bayesian networks has been applied [4,5], leading to better diagnosis and more effective treatment.

Among the most important features is image correction. The reasons for it vary: dark environments, objects that are only partially visible, sensor resolution lower than that of modern sensors, or, as in electrical charge tomography, an object that is not seen directly [4,5,31,38,39,40,41,49]. Using a neural network in an image sensor allows an object to be reconstructed at runtime and gives better results than interpolation [37,40]. To strengthen this effect, information from other sensors is sometimes used [32,45].

Image analysis is not limited to static pictures. Some devices are designed to detect movement. Comparing changes across a series of pictures is possible, but using neural networks gives better results [35]. Movement detection can be used, for example, to recognise workers at a construction site [50], in particular to monitor whether they are in danger from construction machinery. This is one of the reasons why the execution time of detection should be predictable. In [34], the authors presented how to make the network computation time more deterministic. This may play a crucial role in some applications related to autonomous vehicles in particular. This segment is still growing and is not limited to parking assistance [48], recognising items surrounding vehicles [51], or recognising road signs [52]. Although these technologies are developing separately, their combination could bring a new level of quality to this field.
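For reference, the classical frame-comparison baseline mentioned above can be sketched in a few lines. This is a generic illustration, not the method of any cited work; frames are assumed to be greyscale images stored as nested lists of intensities in the range 0 to 255, and the `threshold` and `min_fraction` values are arbitrary assumptions.

```python
def changed_fraction(prev_frame, next_frame, threshold=25):
    """Fraction of pixels whose absolute intensity change exceeds the threshold."""
    changed = 0
    total = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for before, after in zip(prev_row, next_row):
            total += 1
            if abs(before - after) > threshold:
                changed += 1
    return changed / total


def motion_detected(prev_frame, next_frame, threshold=25, min_fraction=0.05):
    """Flag motion when enough pixels changed between two consecutive frames."""
    return changed_fraction(prev_frame, next_frame, threshold) >= min_fraction


# Tiny made-up 2x2 frames: one pixel brightens sharply in the second frame.
frame_a = [[0, 0], [0, 0]]
frame_b = [[0, 0], [0, 200]]
print(changed_fraction(frame_a, frame_b), motion_detected(frame_a, frame_b))
```

Such a baseline reacts to any intensity change, including lighting changes and sensor noise, which is one plausible reason why learned detectors are preferred in practice.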

A key aspect is the application of image sensors in production technologies. To reduce the cost of transport, solid particles are very often conveyed through pipelines. To control this process, electrical charge tomography images are analysed [49]. Another example of image sensor usage, here with edge detection, is control of the welding process, which is challenging due to the large number of variables that influence it [53]. The quality of this control is increased by adding signals from other detectors [54].

A feature added more and more frequently to image sensors is face recognition. Implementing it on the camera saves energy while maintaining the same recognition quality [30]. Furthermore, pretrained neural networks can be used to identify people wearing a surgical mask [46]. Identification based on fingerprints has also been improved [47]. Tissue recognition is similar but heads in a different direction [4]; in this case, the main goal is not to identify a person but to identify a pressure ulcer.

The use of neural networks in image sensors that enable text recognition has made it possible to develop an add-on module for standard energy and water meters. After reading the meter, the module sends the value through a ZigBee network, which allows remote reading of meters that were not originally adapted for it [55,56].

Image processing can also be used by wearable devices. For example, ref. [57] presented a system for eye tracking and real-time object recognition in various lighting conditions.

2.3.2. Thermal Imaging

A separate problem is the processing of thermal images. The input material differs considerably from that obtained in visible light because it is recorded in the infrared range [6,58,59,60,61,62,63,64,65,66]. Thus, ANNs pretrained on pictures taken in daylight are not sufficient. Moreover, the resolution of thermal images is lower than that of classical images.

The most frequently investigated application of thermal images relates to medical issues [58,59,60,61,65,67,68,69,70,71]. In this scope, the main goal is to detect different kinds of disease. The spectrum of applications is wide, starting from problems as general as detecting sick newborns in thermal images [70]. During the COVID-19 pandemic, it was very important to detect potentially ill people in a contactless manner [67,68]. In addition, thermal images were used to detect the sick even when they were wearing masks [67]. Moreover, observing people’s behaviour, especially coughing and shortness of breath, was helpful in detecting COVID-19.

However, detecting infectious diseases is not the only application of thermal images. They can also be used to recognise rheumatoid arthritis [59,65], breast cancer [58], peripheral arterial disease [69], or diabetic foot ulcers [60,71]. In the latter case, thermal images can also be used to classify the stage of disease progression, which helps in choosing the most suitable treatment.

Although thermal image processing can provide a lot of information, there is still room for further improvement. This is achieved by combining this information with data taken from other sources. One of the most obvious is the combination of thermal and classical images, which can be used to calibrate important points [67] or to recognise persons or objects in visible and invisible light [6,66]. In [72], the authors presented the addition of sensors to increase the safety of the space surrounding mining machines. Furthermore, adding an RFID detector to a drone can allow identification of friend or foe on a battlefield [73]. For these purposes, different machine learning methods such as linear regression, decision trees, random forest, neural networks, XGBoost, LightGBM, and the fuzzy c-means segmentation algorithm are applied [62,64,65,69,74,75]. The number of methods is also increasing because the input data include not only static images but also thermal camera video [68,73,76].

Thermal imaging is also a useful method for increasing the accuracy of classifying a person’s emotions. For example, using face thermography makes emotion detection 10% better than without it [63]. It could support emotional communication with autistic children, where this kind of cooperation is difficult [77]. Recognition can also concern person identification. In [62], it was shown that thermal facial images can be used for identification, which is especially important when light conditions are not optimal [6]. In addition, in security systems the ability to detect a human in an open area when light is very weak is crucial [73,76]. If necessary, protection can be extended to human detection [73,76], person identification [6,62,64], or weapon detection [7]. The latter is also an example of object detection from thermal images, a capability that makes it possible to apply this technology to the identification of electrical equipment [66] and faults [74]. For all of these reasons, thermal image processing should be performed in real time [7,62,63,70,72,73,74,75,76], especially when it is used to protect people working close to machines in a mine [72] or pedestrians near autonomous vehicles, even if the images are of low resolution [75], or to monitor the condition of working machines [66,74].

2.3.3. Infrared Detectors

The spectrum of applications of infrared detectors is very wide. It starts from basic usage, such as supporting the operation of other sensors. In [78], detectors were used to calibrate an RGB camera to take a correct photo. However, infrared detectors often work in combination with different data sources. The main domains of this integration are mobile robot navigation [79,80,81,82,83,84,85,86], object recognition [87,88,89,90], position detection [85,91], human measurement [91,92], and improvement of machine operation [93].

Integrating a multi-source navigation system into mobile robots gives them a robust control mechanism. The first application of infrared detectors is to build a map of the environment in which a robot operates [85]. In connection with ANNs, this is a more economical solution than a laser scanner [94]. The next example is using them to navigate a robot around detected obstacles [80,95]. In addition, navigation quality can be improved using sonar data to measure distance and an RGB camera to detect road signs [79]. To prepare a robot for this more quickly, neuroevolution techniques are used [96,97]. These set the weights of the neural network, meaning that training is faster.
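To make the idea of neuroevolution concrete, the sketch below evolves the weights of a single neuron by random mutation and selection instead of gradient descent. It is a generic (1+1) evolution strategy chosen for brevity, not the algorithm of [96,97]; the training cases, the meaning of the two infrared readings, and all parameter values are hypothetical.

```python
import math
import random

# Hypothetical cases: (left IR reading, right IR reading) -> desired steering.
# A higher reading means a closer obstacle; +1 steers right, -1 steers left.
CASES = [((0.9, 0.1), 1.0), ((0.1, 0.9), -1.0), ((0.8, 0.3), 1.0), ((0.2, 0.7), -1.0)]


def steer(weights, inputs):
    """Single tanh neuron with weights = [w_left, w_right, bias]."""
    left, right = inputs
    return math.tanh(weights[0] * left + weights[1] * right + weights[2])


def fitness(weights):
    """Negative squared error over all cases (higher is better)."""
    return -sum((steer(weights, x) - y) ** 2 for x, y in CASES)


def evolve(generations=200, sigma=0.3, seed=0):
    """Mutate the current best weights and keep the child if it is no worse."""
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in range(3)]
    best_fit = fitness(best)
    history = [best_fit]
    for _ in range(generations):
        child = [w + rng.gauss(0, sigma) for w in best]
        child_fit = fitness(child)
        if child_fit >= best_fit:
            best, best_fit = child, child_fit
        history.append(best_fit)
    return best, history


best, history = evolve()
print(history[0], history[-1])
```

Because a child replaces the parent only when its fitness is at least as high, the recorded fitness never decreases from one generation to the next. Population-based variants follow the same mutate-and-select pattern with many candidates per generation.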

The retro-reflective characteristics of lane demarcations on roadways allow them to be easily detected in infrared light [98], which simplifies their tracking. This problem is frequently investigated in several configurations: driving along a line [81,99], measuring a distance [81], or planning a path to drive [82].

Because the use of mobile robots with AI is becoming more and more common, there is a need to educate new robot programmers. An educational training platform which provides an opportunity to learn how to train neural and Bayesian networks using infrared detectors, among others, was described in [86].

Another important field in the use of infrared detectors with artificial intelligence is positioning, which involves several aspects. The first is to detect all nearby objects and build a map [85,94]. This can also include ground properties [100] or tracking a navigation path [101]. Positioning is not limited to open spaces and can also be applied within another object such as the human body [78]. Finally, placing objects at the proper distance and position relative to each other can be achieved in the same way, which helps to obtain better-quality data for other sensors [91].

RGB images are used very often as input data to recognise an object. Infrared detectors can also play a crucial role within this scope. As mentioned previously, they can recognise an object in the path of a mobile robot [87,99]. To achieve a better result, these data can be combined with information from other sensors, e.g., radars [88]. In addition, the detection of lines [98] or cars [90,102] becomes more accurate. Particularly when light conditions are weak, such as at night [98,103], this method makes it possible to detect and classify ships [89]. Light intensity is not always the only problem. Sometimes objects look very similar in visible light, and infrared can help to separate them, such as during the detection of foreign fibres in cotton [104]. This ability is also used for recognising cars seen from a plane, where resolution is poor [90,102], or for recognising humans at night [105,106]. At night, recognition of a ship is more difficult, and infrared processing is applied. As a result, classification of ships is possible even though the training and real images have different colour palettes [103]. Another way to solve this problem is to combine these data with those from an RGB camera [89].

Detecting a human in infrared light is only one example of a whole group of applications. Another is the classification of human activity [106]. In this case, avoiding visible light increases privacy because it does not allow a specific person to be identified. The next is the diagnosis of illness, for example, COVID-19 [92]. Although it is only a screening test, it allows people with a respiratory infection to be identified. Observing the deformation of the platform on which a patient walks is used to classify ambulatory patterns [107]. Such investigations also increase the comfort of the patient. Gathering data such as temperature from a patient’s foot allows ANNs to detect places of higher blood pressure. This information can be used to prepare shoes that are better fitted and more comfortable for these people [108]. Doctors may find a mechanism for tracking the position of the ultrasound head during abdominal diagnostics useful. This improves the quality of classification and diagnosis of difficult-to-identify cases [91].

Position detection is also important when navigating special robots that are used to inspect ventilation pipes. Using infrared detectors together with recorded microphone data allows them to detect damage in this installation [83]. Robots that use peristalsis to move in pipes and quickly overcome elbows are also being designed. By analysing data from various sources, including infrared detectors, such robots can locate damage, attempt to repair it, or bypass it [84].

It is worth mentioning two more areas in which infrared detectors have been analysed relatively little. In [93], they were used together with touch sensors and others to improve robot grip. In this way, the accidental crushing and slipping of objects was reduced and grip stability was increased. In [100], the focus was on the use of infrared to assess the temperature of the monitored ground. In this way, the degree of its moisture was estimated. This information can be particularly useful in agriculture.

2.3.4. X-Rays

Similar to image processing, AI can segment X-ray images by identifying objects depicted in them. This is most often used to recognise parts of the human body [109,110]. The main application of AI in X-ray processing is disease detection. For radiologists, it can be helpful in detecting pneumonia [111], detecting and staging breast cancer [110], or generally helping to detect 14 different diseases [112]. Most of them are concentrated around the chest [109,110,111,112,113,114,115].

Automated analysis of chest radiograph images can range from detecting whether a given patient is healthy or sick [113,115] to detecting a specific infection. Due to the recent COVID-19 pandemic, the main focus is on the detection of this disease [113,114,115,116,117]. Because it appears very similar in X-ray images, the second most frequently studied disease for AI recognition is pneumonia [111,113,114,115,118]. These investigations allow for better differentiation of the pathogens involved in a given case [113,114,115]. Another disease recognised by X-ray using AI is breast cancer [110]. This method allows not only for its detection but also for classification of the stage of advancement.

When analysing the type of solution, it is worth noting that the majority of cases use Convolutional Neural Networks (CNNs) [109,112,113,114,115,116,117,118]. In only one case were Generative Adversarial Networks (GANs) used as a complement to the convolutional network [116]. The Keras framework was used for the implementation in [112].

2.4. Neural Network Algorithms

Section 2.3 describes the current state of knowledge within various technologies related to neural networks. Therefore, it focuses mainly on technological aspects. For a complete picture, it is worth looking at how neural network algorithms relate to these technologies.

Table 3 summarises the common algorithms used in neural networks. They are similar in each of the technologies discussed in the previous section. The main difference is in the way the algorithm is implemented and in the training data. For example, networks that analyse colour or greyscale data may have a different number of inputs in the first layer. In addition, the same objects in visible, infrared, and X-rays may look different. Therefore, each of these algorithms requires a different set of training data. However, this does not fundamentally change the idea of how the algorithm works.
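As a small worked illustration of the point about the first layer, the sketch below counts first-layer inputs and parameters for single-channel data (greyscale, thermal, or X-ray) and three-channel colour data. The image size, number of filters, and kernel size are arbitrary assumptions chosen only to make the arithmetic visible.

```python
def first_layer_inputs(height, width, channels):
    """Number of input values when an image is fed to a fully connected layer."""
    return height * width * channels


def conv_parameters(in_channels, out_channels=16, kernel_size=3):
    """Weights plus biases of a 2D convolution layer."""
    weights = out_channels * in_channels * kernel_size * kernel_size
    return weights + out_channels


# Assumed 28 x 28 images: one channel (greyscale) versus three channels (RGB).
print(first_layer_inputs(28, 28, 1), first_layer_inputs(28, 28, 3))
print(conv_parameters(1), conv_parameters(3))
```

Only the first layer depends on the number of channels; the deeper layers can keep the same shape, which is consistent with the observation that the algorithm itself does not fundamentally change between imaging modalities.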

Table 3.

Summary of examples of neural network algorithms.

3. Results

To track changes in the studied area, the time period considered was divided into two decades: 2005–2014 and 2015–2024. Table 4 presents a summary of changes in subsequent decades, taking into account the division into document type, technology used, solution used, and research method used. To detect and assess the changes that occurred in these categories, a chi-square test of independence was performed. The null hypothesis ($H_0$) assumed that there was no relationship between the publication period and the frequency of a given category. In contrast, the alternative hypothesis ($H_1$) assumed that there is a relationship between the period and the number of publications in a given category.
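A chi-square test of independence of this kind can be sketched with the Python standard library alone. This is an illustration, not the script used in the study: the contingency table in the example is made up, and the p-value routine relies on closed-form series that are valid only for integer degrees of freedom.

```python
import math


def chi2_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def chi2_sf(x, dof):
    """Upper-tail probability (p-value) for an integer number of degrees of freedom."""
    half = x / 2.0
    if dof % 2 == 0:
        series = sum(half ** k / math.factorial(k) for k in range(dof // 2))
        return math.exp(-half) * series
    tail = sum(half ** (k - 0.5) / math.gamma(k + 0.5) for k in range(1, dof // 2 + 1))
    return math.erfc(math.sqrt(half)) + math.exp(-half) * tail


# Made-up counts: rows are the two decades, columns are two categories.
stat, dof = chi2_statistic([[10, 20], [20, 10]])
print(stat, dof, chi2_sf(stat, dof))
```

With this routine, a statistic of 14.72 at three degrees of freedom gives a p-value of about 0.002, and a statistic of 2.84 at three degrees of freedom gives about 0.42. When the null hypothesis is not rejected, the result indicates only that the data provide insufficient evidence of a relationship.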

Table 4.

Publications by year in all categories.

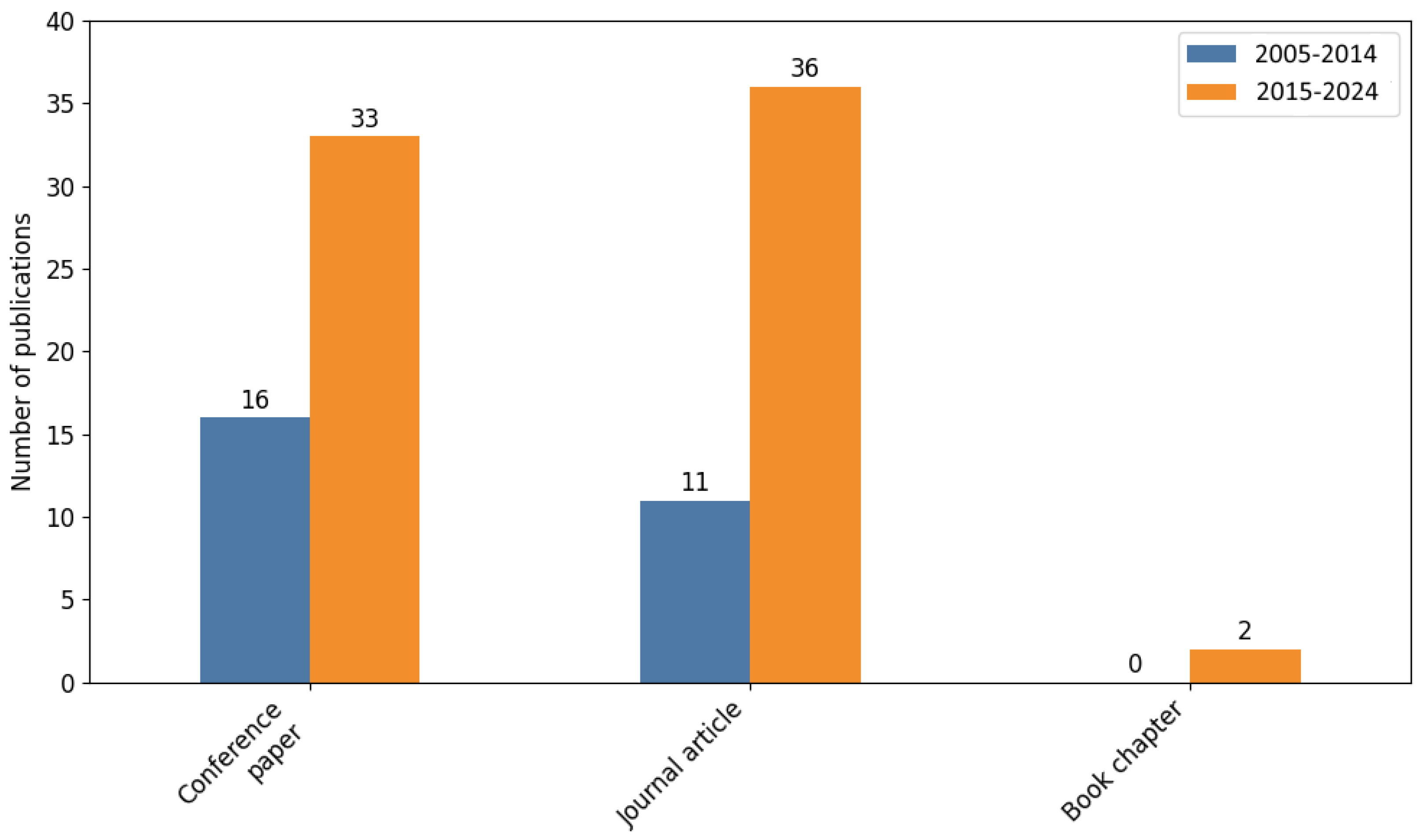

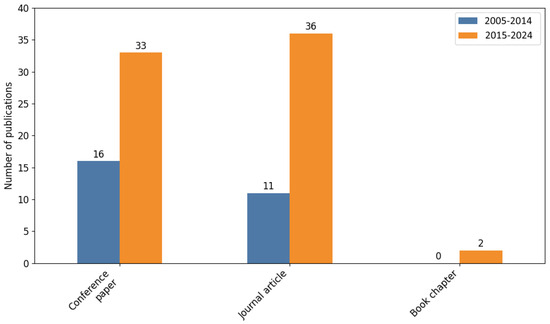

Figure 2 shows the change in the publication of documents of different types. Despite the overall increase in the number of publications, the proportions between conference publications, press publications, and book chapters did not change significantly. Therefore, with a chi-square test value of and three degrees of freedom, the p-value is equal to . This means that the null hypothesis, namely that the proportions between publication types did not change, cannot be rejected.

Figure 2.

Change in the number of different types of documents.

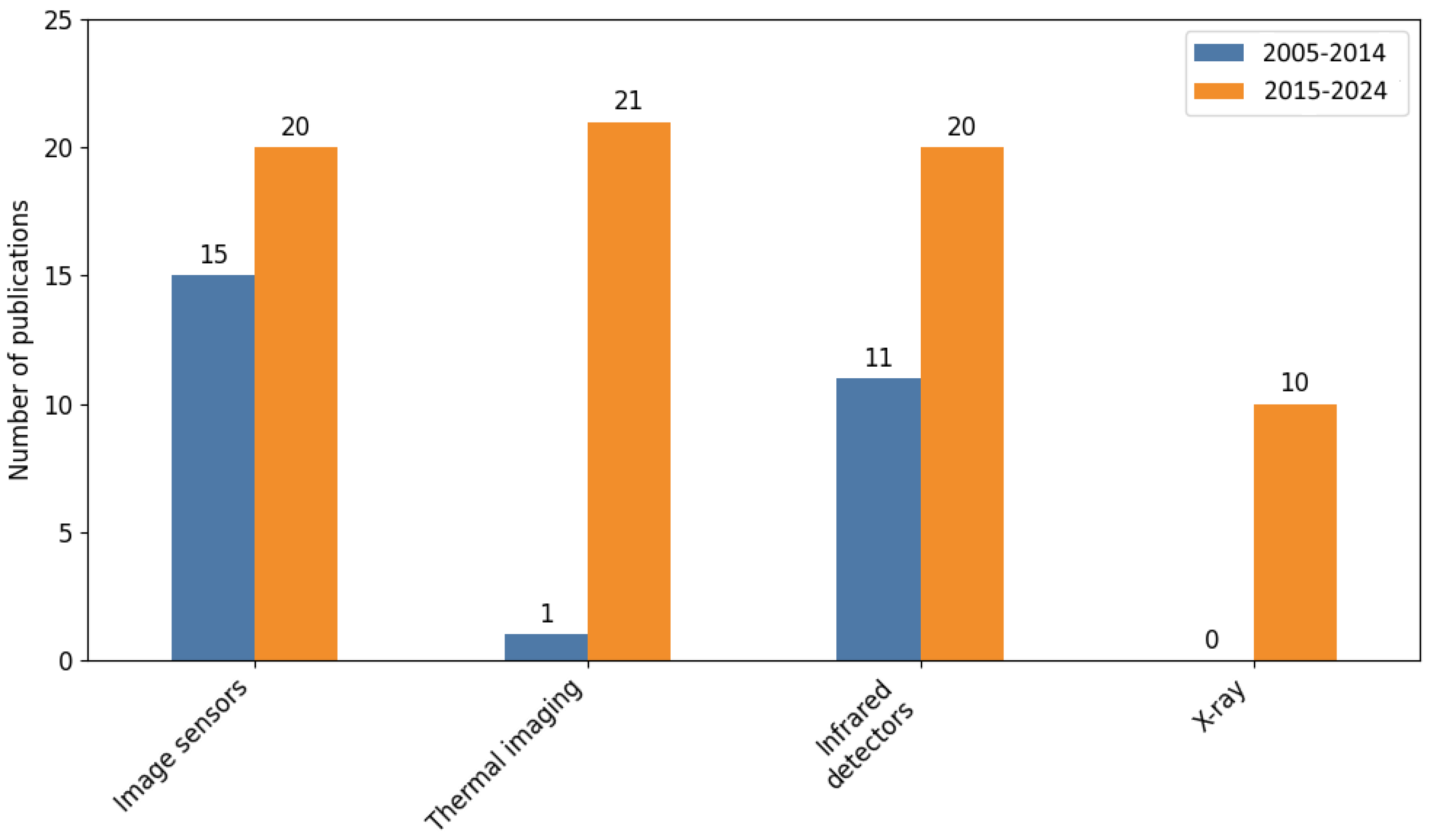

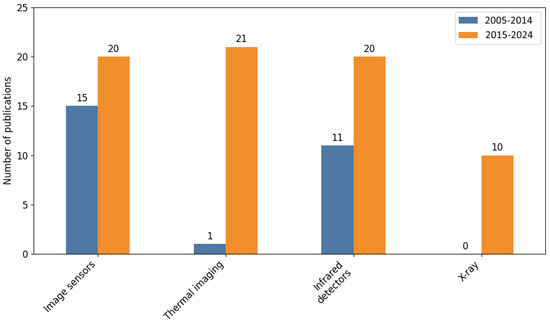

The next group studied is applied technologies. A summary of the changes is presented in Figure 3. In general, an increase in the number of publications using each technology can be observed. However, the test value is $\chi^2$ = 14.72, which with three degrees of freedom gives a p-value of approximately 0.002. The null hypothesis should therefore be rejected for this group in favour of the alternative hypothesis, meaning that statistically significant changes have occurred. In this case, a disproportionately large increase was observed within thermal imaging technology, which suggests that a significant number of new studies have appeared in this area.

Figure 3.

Change in the number of different technologies.

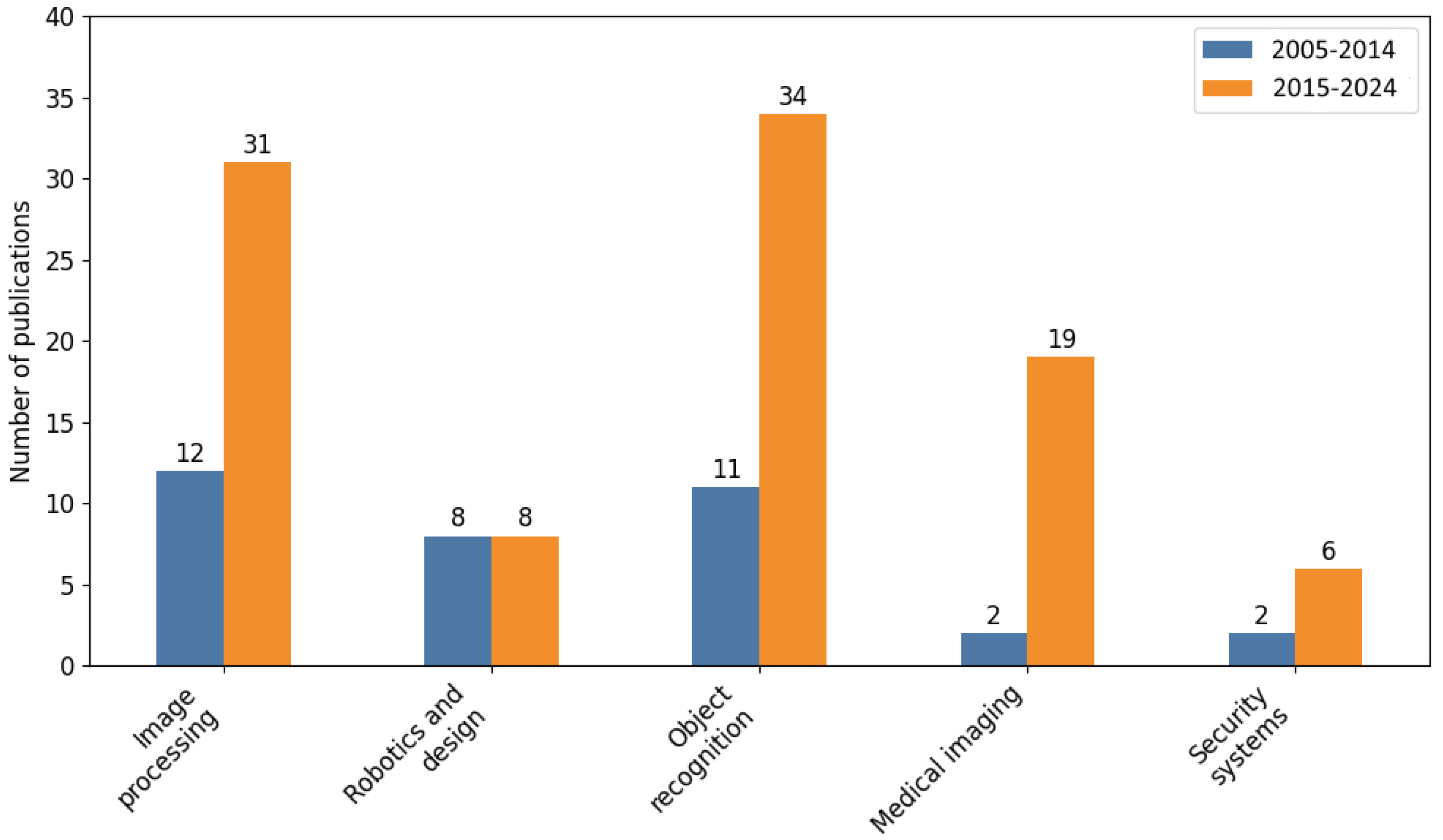

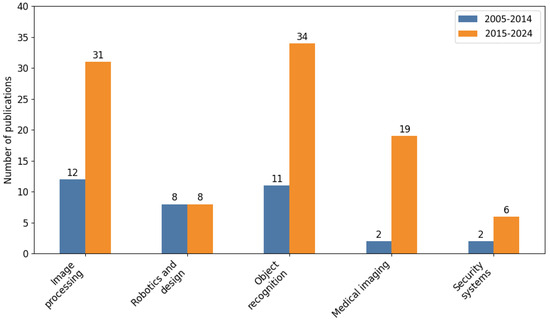

Analysing the set of areas in which neural networks were used for image processing, a general increase in the number of publications can be noticed (Figure 4). However, the increase is not uniform. Robotics and design stands out negatively here, as its number of publications remained constant. This is reflected in the calculated value of $\chi^2$ = 7.83, for which a p-value of 0.1 was obtained with four degrees of freedom. Considering that the assumed level of significance is 0.05, the null hypothesis cannot be rejected. The changes that have occurred here are therefore not yet statistically significant, although the value is relatively close to the threshold and the changes constitute a certain trend. This suggests that the topic may have been exhausted in the field of robotics and design or that it is not currently popular.

Figure 4.

Change in the number of different applications.

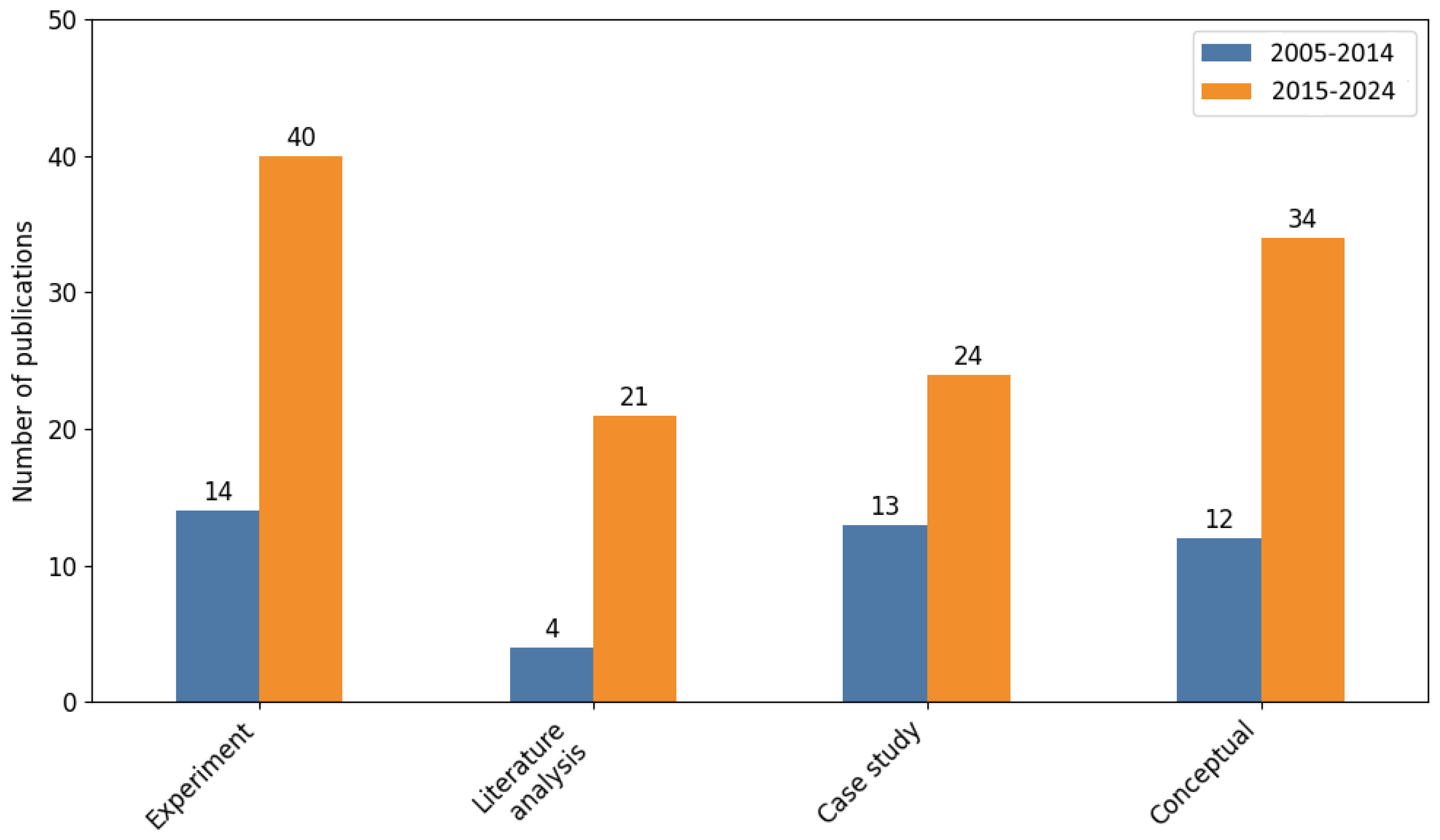

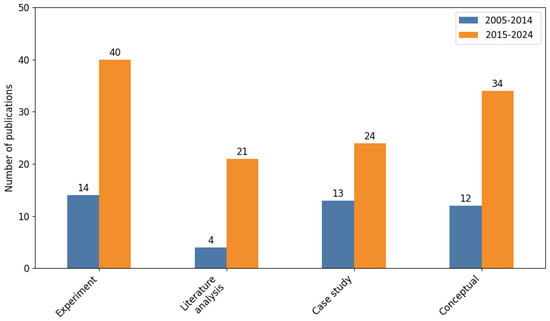

Figure 5 presents changes in applied research methods. As can be seen, the test value is $\chi^2$ = 2.84, which with three degrees of freedom gives a p-value of 0.42. This means that the null hypothesis cannot be rejected and that there were no significant differences in the distribution of the selected research methods. However, it should be noted that the total number of publications has increased significantly in the last decade.

Figure 5.

Change in the number of different research methodologies.

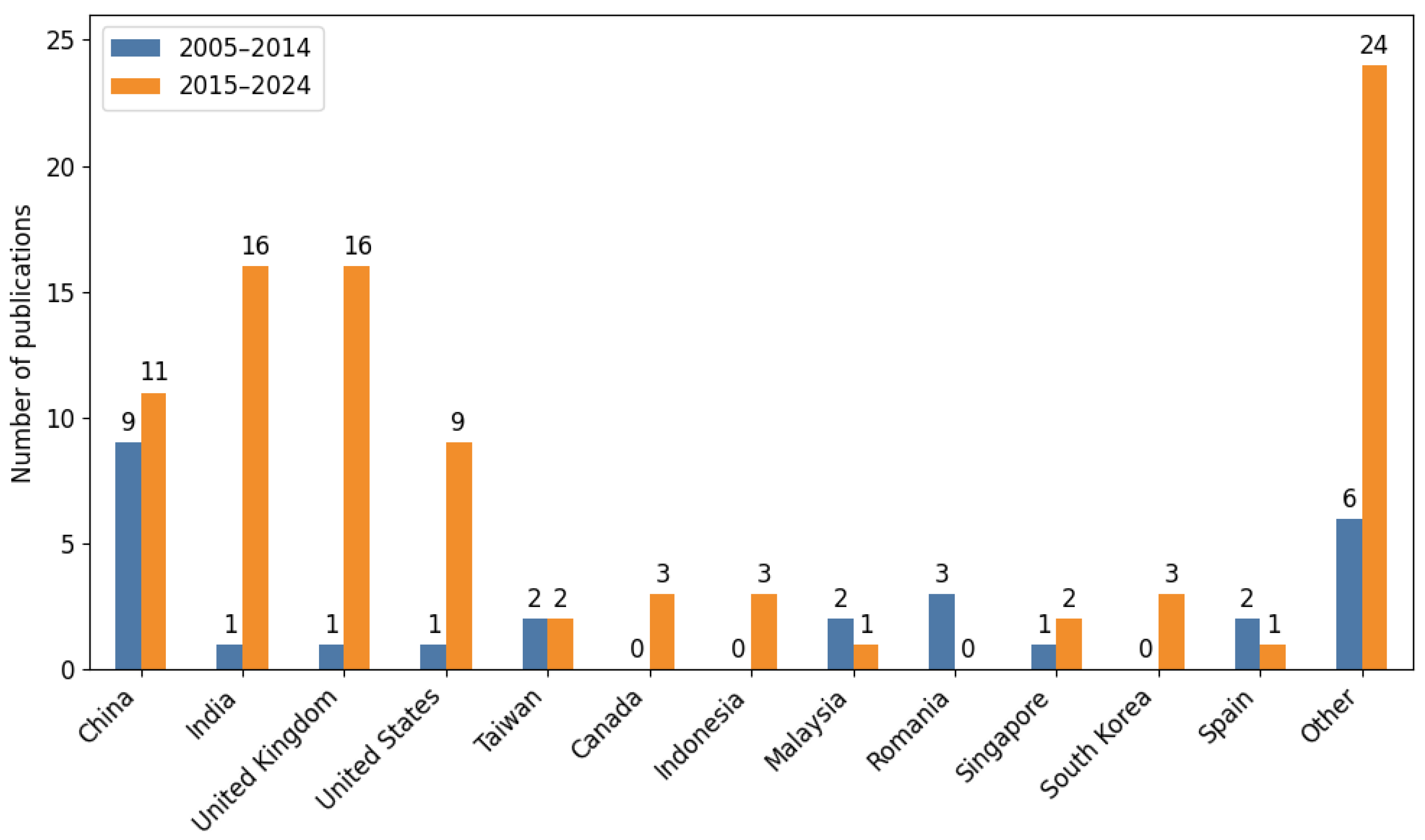

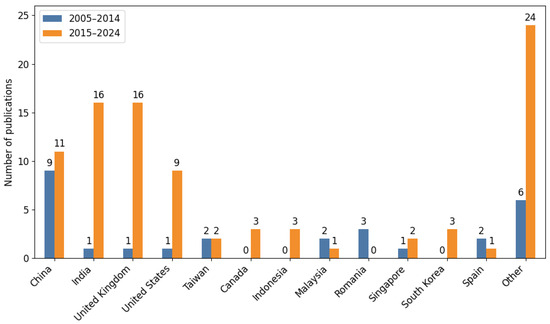

Table 5 and Figure 6 present a summary of the number of publications in the studied area divided by publication location and decade. The null hypothesis here is that there were no significant changes in this area. However, the test result of $\chi^2$ = 31.53 for 13 degrees of freedom gives a p-value below 0.01. Therefore, the alternative hypothesis is accepted, stating that the structure of the places where research is conducted on the analysed topic has changed significantly in the past decade. Considering that in some locations the increase was several-fold, it should be recognised that the topic is still needed and is still being developed.

Table 5.

Publications by year in locations.

Figure 6.

Publications by year in locations—visualisation.

It is worth noting a few locations here. The first group is India and the United Kingdom, where the increase in the number of publications is the largest. This may indicate that this technology is perceived as future-orientated. This hypothesis may also be supported by the fact that the second group of locations, the United States and China, may not have recorded such a large increase in the number of publications but nevertheless still maintain a large number of them. In total, 65% of the published works on the studied topic in the years 2005–2024 come from these locations. However, the 35% from the remaining locations shows that scientists there are also looking at this technology.

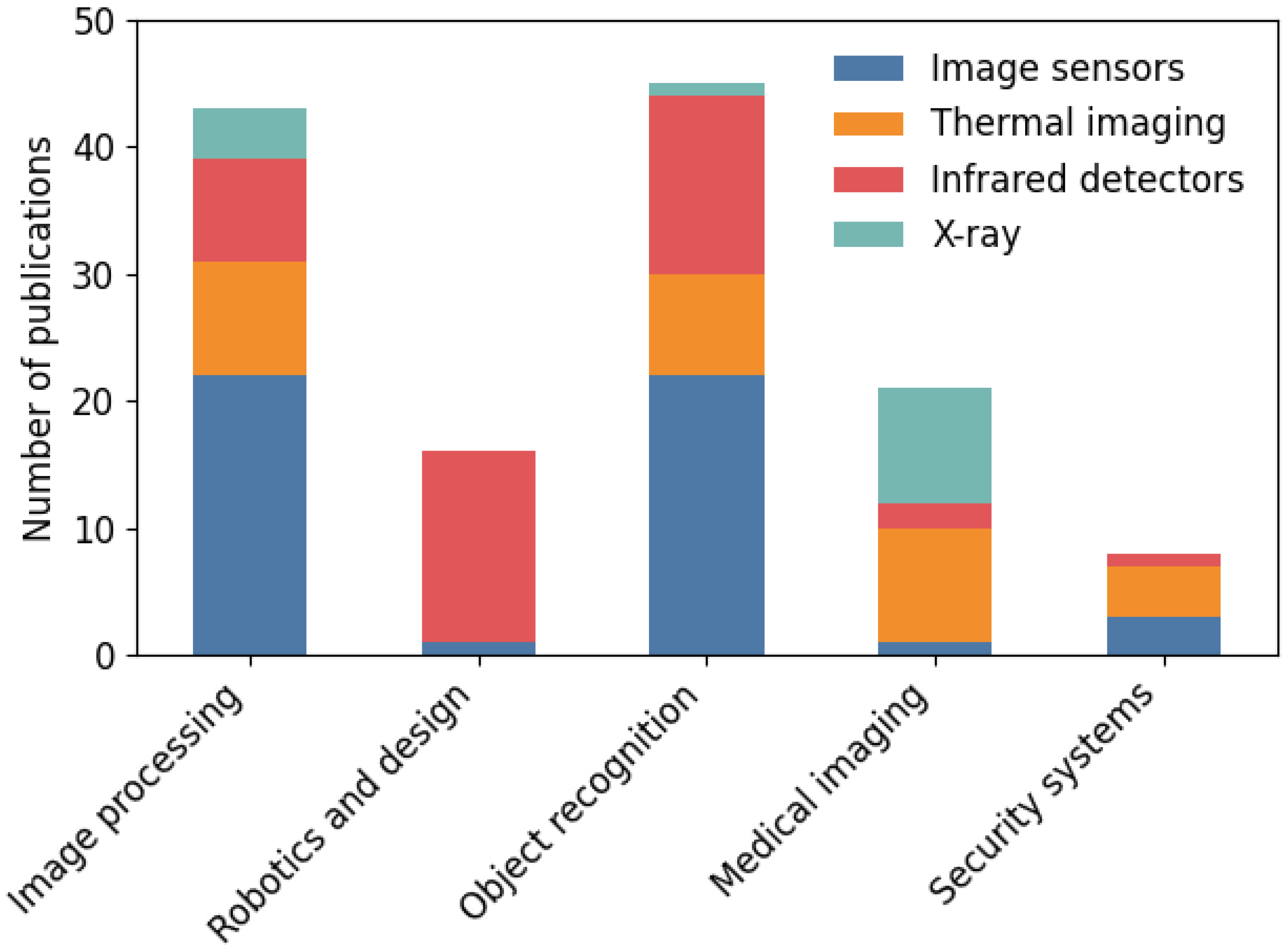

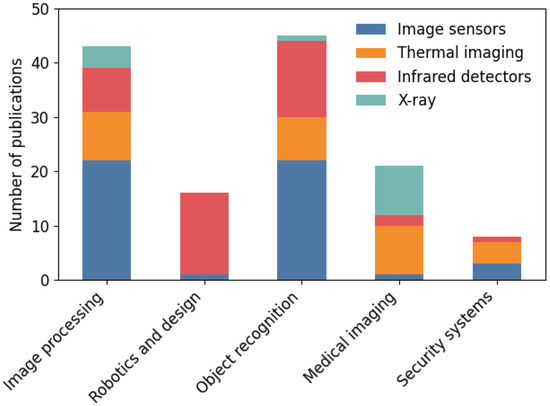

A summary of the technologies used in relation to various applications and research methods is presented in Table 6. Figure 7 presents a cumulative picture of the technologies used for the five most frequently described applications. It can be seen that for image processing and object recognition, the most frequently chosen technology is image sensors, which are used in half of the described solutions. On the other hand, infrared detection has a dominant share in robotics and design. For medical imaging, the most important are thermal imaging and X-rays. For the data in the Application section of Table 6, the null hypothesis is that there is no relationship between technology and application category, while the alternative hypothesis is that such a relationship exists. Because the test result is $\chi^2$ = 78.35 with 16 degrees of freedom, the p-value is below 0.001. The alternative hypothesis should therefore be accepted, which confirms the observation in Figure 7.
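A cross-tabulation such as the technology-by-application summary can be built from per-publication records in a few lines. The sketch below is illustrative only: the record fields `technology` and `application` and the sample records are hypothetical and do not reproduce the data of Table 6.

```python
from collections import Counter


def cross_tabulate(records, row_key, col_key):
    """Count records for every (row, column) pair and return a dense table."""
    counts = Counter((rec[row_key], rec[col_key]) for rec in records)
    rows = sorted({row for row, _ in counts})
    cols = sorted({col for _, col in counts})
    # Counter returns 0 for combinations that never occur.
    table = [[counts[(row, col)] for col in cols] for row in rows]
    return rows, cols, table


# Made-up records used only to show the layout of the result.
SAMPLE = [
    {"technology": "thermal imaging", "application": "medical imaging"},
    {"technology": "thermal imaging", "application": "medical imaging"},
    {"technology": "infrared detectors", "application": "robotics and design"},
    {"technology": "infrared detectors", "application": "medical imaging"},
]

rows, cols, table = cross_tabulate(SAMPLE, "technology", "application")
print(rows, cols, table)
```

The resulting list of rows is the observed-count table in the row-by-column layout that a chi-square test of independence takes as input.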

Table 6.

Publications by technology in other categories.

Figure 7.

Publications by technology in Application.

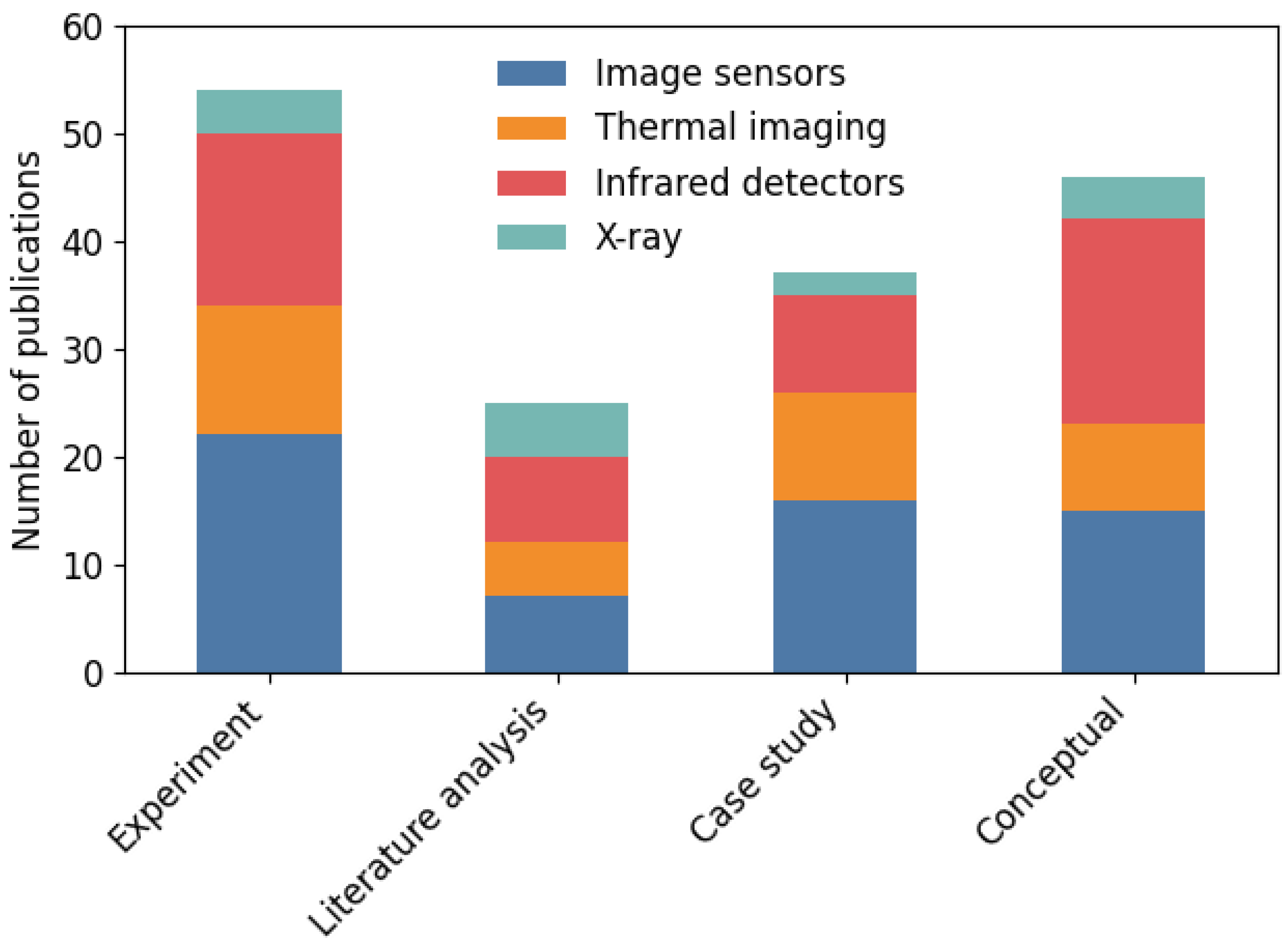

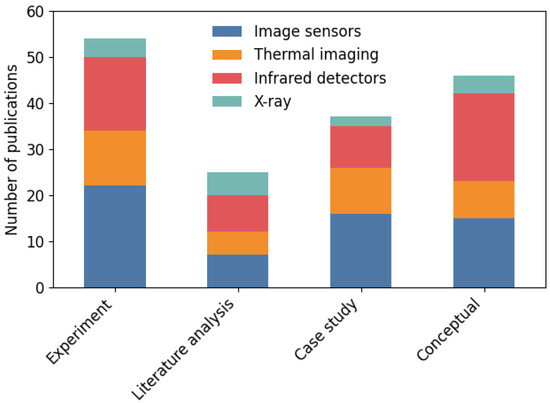

The analysis of Table 6 is complemented by a review of the applied research methods for each of the considered technologies. The null hypothesis proposes that there is no relationship between technology and research methodology. The alternative hypothesis is that such a relationship exists. After calculating the test value of $\chi^2$ = 8.24 with 12 degrees of freedom, a p-value of 0.77 was obtained. Therefore, at the assumed significance level of 0.05, the null hypothesis cannot be rejected. Furthermore, this result is consistent with Figure 8, where each of the analysed technologies uses each of the research methods in comparable proportions.

Figure 8.

Publications by technology in Research Methodology.
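The two test results quoted for Table 6 (78.35 with 16 degrees of freedom and 8.24 with 12 degrees of freedom) can be checked numerically. The sketch below assumes that the reported statistics follow a chi-square distribution under the null hypothesis, as is usual for a test of independence on a contingency table; the function name `chi2_sf_even_df` is introduced here purely for illustration. It relies on the closed-form upper-tail probability that holds for an even number of degrees of freedom, which covers both cases.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X >= x) of a chi-square variable with even df.

    For df = 2m the survival function has the closed form
    exp(-x/2) * sum_{k=0}^{m-1} (x/2)^k / k!.
    """
    if x < 0:
        raise ValueError("the statistic must be non-negative")
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only holds for positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0          # k = 0 term of the sum
    for k in range(1, df // 2):     # k = 1 .. m - 1
        term *= half / k            # (x/2)^k / k! built incrementally
        total += term
    return math.exp(-half) * total


# Statistics as reported in the text (assumed to be chi-square statistics).
p_application = chi2_sf_even_df(78.35, 16)
p_methodology = chi2_sf_even_df(8.24, 12)

print(f"technology vs application: p = {p_application:.2e}")
print(f"technology vs methodology: p = {p_methodology:.2f}")
```

Under this assumption, the first p-value is many orders of magnitude below the 0.05 threshold, while the second is well above it, which matches the conclusions drawn from Figures 7 and 8.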

4. Discussion

Undoubtedly, the use of artificial neural networks has recently become increasingly common. This is also the case for image processing, as confirmed by the growing number of publications on the subject. It should be noted that over the last decade there has been no decline in interest in this subject. Moreover, as shown in Table 4, in most cases it has actually increased.

4.1. Current Trends in Technologies Powered by Artificial Neural Networks

The most frequently studied issues, whose prominence reflects both their importance and a considerably deepened state of knowledge, include the following:

- Energy efficiency is improved by adjusting data collection and processing [8,9,28,29,30,31,32].

- Hardware implementation of neural networks allows for their acceleration as well as improvements in energy efficiency. It also allows for their use in embedded systems [28,29,30,34,36,37,38,39,40,41].

- Image quality improvement allows images with much higher resolution and quality to be obtained than with simple interpolation [4,5,31,38,39,40,41,49].

- Infrared image analysis uses images invisible in visible light for in-depth analysis of reality [6,58,59,60,61,62,63,64,65,66].

- Detection of human diseases by image analysis allows changes associated with various conditions to be recognised, including anthropometric and dermatological changes and diabetic foot [58,59,60,61,65,67,68,69,70,71].

- Object recognition covers road signs, route markers, and obstacles on the road [78,87,88,89,90,98,99,102,104].

- Combining multiple data sources through multi-source analysis has been used to identify hidden dependencies [79,80,81,82,83,84,85,86,87,88,89,90,91,92,93].

- Mobile robot navigation is very widely analysed due to its potentially wide application, ranging from special-purpose robots that need to be able to navigate on their own to solutions that can be used in autonomous cars [79,80,81,82,83,84,85,86,94,95,96,97,98,99,101].

- Convolutional Neural Networks (CNNs) are the most frequently mentioned type of neural network in image processing [109,112,113,114,115,116,117,118].

- Pneumonia and COVID-19 were the most frequently studied infections in images, owing to the recent SARS-CoV-2 pandemic. This is mostly due to their similarity, which makes it necessary to distinguish between them and diagnose them rapidly [111,113,114,115,116,117,118].

4.2. Hybrid Networks

In recent years, hybrid neural network architectures have also been used, which combine different types of models to better exploit their individual properties. The most common combination is the integration of Convolutional Neural Networks (CNNs) with recurrent neural networks (RNNs) such as LSTM or GRU. CNNs are used to extract spatial features from images or video frames, while RNNs allow modelling temporal or sequential dependencies. Combining both types of networks allows for the analysis of image sequences, for example in recognising actions on recorded video, tracking objects, or analysing a series of medical images. CNNs are first used to extract features, then RNNs/LSTMs are used to model their changes over time [3,123].
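The CNN-then-RNN data flow described above can be illustrated with a deliberately small, dependency-free sketch. It is not taken from any of the cited works: the two edge kernels, the weights `w_h` and `w_x`, and all function names are hypothetical, and a plain Elman-style recurrence with a scalar state stands in for an LSTM or GRU cell. The point is only to show the order of operations: spatial features are extracted per frame, then a recurrent state accumulates them over time.

```python
import math

# Two hypothetical 2x2 kernels: responses to vertical and horizontal edges.
KERNELS = [
    [[-1, 1], [-1, 1]],
    [[-1, -1], [1, 1]],
]


def conv2d_valid(frame, kernel):
    """'Valid' 2D cross-correlation of a 2D list with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(frame) - kh + 1, len(frame[0]) - kw + 1
    return [[sum(frame[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def frame_features(frame, kernels):
    """CNN-like stage: convolve, apply ReLU, global-average-pool each map."""
    feats = []
    for kernel in kernels:
        fmap = conv2d_valid(frame, kernel)
        vals = [max(0.0, v) for row in fmap for v in row]
        feats.append(sum(vals) / len(vals))
    return feats


def rnn_step(h, x, w_h, w_x):
    """Elman-style update: h_t = tanh(w_h * h_{t-1} + w_x . x_t)."""
    return math.tanh(w_h * h + sum(w * xi for w, xi in zip(w_x, x)))


def encode_sequence(frames, kernels, w_h=0.5, w_x=(1.0, 1.0)):
    """Run the per-frame feature extractor, then the recurrence, over time."""
    h, states = 0.0, []
    for frame in frames:
        h = rnn_step(h, frame_features(frame, kernels), w_h, w_x)
        states.append(h)
    return states


frames = [
    [[0, 0, 1, 1]] * 4,  # vertical edge in the middle
    [[0, 1, 1, 1]] * 4,  # edge shifted one pixel to the left
    [[1, 1, 1, 1]] * 4,  # uniform frame, no edges
]
states = encode_sequence(frames, KERNELS)
print(states)
```

In this toy run, the last state remains non-zero even though the last frame contains no edges, because the recurrence carries information forward from earlier frames. A trained LSTM or GRU would learn both the kernels and the recurrent weights instead of using fixed values.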

Although the literature review did not take this into account due to the adopted criteria, it is possible to find integrations of CNNs with self-attention mechanisms known from transformer networks, as exemplified by Vision Transformer (ViT) architectures and their various modifications. The aim of such solutions is to combine local feature analysis (typical of CNNs) with global modelling of dependencies enabled by transformers. This approach improves results in classification, segmentation, and object detection tasks, especially when global relations between objects placed in the image are important [26].

The combination of generative architectures such as GANs with other models is also important. For example, U-Net can act as a generator in supervised GANs for tasks such as segmentation or image translation between domains (e.g., CycleGAN, pix2pix). The advantage of such solutions is the ability to generate realistic images in new styles or improve the quality of image data for reconstruction or super-resolution [19,124]. This is especially relevant in recent times due to the development of detection systems using imaging techniques, in particular those combining multispectral imaging with deep neural networks to identify fire sources in different environments [125,126,127,128,129,130,131,132,133].

4.3. Optimisation of Neural Networks for a Class of Images

The architecture of a CNN is often tailored to the type of image. For example, visible light images are typically represented as three-channel (RGB) inputs; thus, networks are designed with a three-channel input layer. In contrast, X-ray, infrared, or thermal images are single-channel, meaning that the input layer of the network often has only one channel. In addition, the specific characteristics of each image type, such as noise patterns, dynamic range, and resolution, affect the choice of network depth, filter size, and normalisation strategy, and the networks need to be trained on separate datasets. Therefore, although the network architecture may remain similar, individual implementations use a neural network optimised for each type of image [14,17].
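The effect of the channel count on the input layer can be made concrete with a short calculation. The sketch below is illustrative only: the layer size (64 filters of 7 × 7) and the helper names are assumptions, not values taken from the cited works. It counts the learnable parameters of a first convolutional layer for three-channel and single-channel input, and shows one common way of reusing an RGB kernel on single-channel data, namely summing it over the channel axis, which gives the same response as feeding the grey image replicated into three channels.

```python
def conv_params(in_channels, out_channels, kernel_size, bias=True):
    """Learnable parameters of one 2D convolution layer with square kernels."""
    weights = out_channels * in_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)


def collapse_rgb_kernel(kernel_rgb):
    """Sum a [channels][k][k] kernel over its channel axis into a [k][k] kernel."""
    rows, cols = len(kernel_rgb[0]), len(kernel_rgb[0][0])
    return [[sum(channel[i][j] for channel in kernel_rgb)
             for j in range(cols)]
            for i in range(rows)]


# Hypothetical first layer: 64 filters of size 7x7.
rgb_params = conv_params(in_channels=3, out_channels=64, kernel_size=7)
mono_params = conv_params(in_channels=1, out_channels=64, kernel_size=7)
print(f"RGB input layer:            {rgb_params} parameters")
print(f"single-channel input layer: {mono_params} parameters")

# Toy 3-channel 2x2 kernel collapsed for single-channel (e.g. thermal) input.
toy_kernel = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
    [[100, 200], [300, 400]],
]
print(collapse_rgb_kernel(toy_kernel))
```

Only the first layer changes with the channel count; the deeper layers keep the same shapes, which is why the overall architecture can stay similar while the input layer and the training data differ between image types.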

4.4. Neural Networks and Platforms

To summarise the review in Section 2.3 in terms of the implementation of neural networks on various devices, the following groups can be distinguished:

- Edge devices:

  - Applications: Fast recognition (e.g., number of people, simple monitoring, mobile robots, data acquisition directly from sensors).

  - Solutions: Lightweight CNN models (e.g., small, optimised networks), simplified machine learning algorithms, classifiers with low resource requirements.

  - Hardware architectures: Microcontrollers, DSP (Digital Signal Processor), low-power ARM processors, dedicated PCB systems (e.g., energy meters, simple IoT sensors), often with ZigBee or WiFi wireless communication.

  - Example: Reading physical meters using a CMOS image sensor + DSP + neural network, sending results through ZigBee to the concentrator [55].

- Embedded industrial systems:

  - Applications: Advanced monitoring systems, autonomous robots, real-time control, industrial inspection systems (e.g., production lines, mobile robots, autonomous vehicles).

  - Solutions: Medium-sized CNN models, sensor fusion (e.g., IR, cameras, radar), low-latency processing, sometimes recurrent networks or Spiking Neural Networks (SNNs).

  - Hardware architectures: FPGA, System-on-Chip (SoC) systems with their own hardware acceleration for AI, sometimes embedded GPU (e.g., Nvidia Jetson).

  - Example: FPGA + CMOS for vehicle parking and orientation systems [48], mobile robots using data from multiple sensors and neural networks [79,80,81,82,83,84,85,86].

- Stand-alone desktop computers:

  - Applications: Advanced image processing, data mining, scientific experiments, simulations, algorithm development and testing, more complex analyses (e.g., segmentation, multiclass classification, transfer learning).

  - Solutions: Full-scale CNN architectures (e.g., ResNet, U-Net), GAN for image quality improvement, advanced autoencoder networks, classic MLP networks for signal analysis.

  - Hardware architectures: PC-class CPU, GPU (Nvidia, AMD), workstations, standard laboratory equipment.

  - Example: Training and testing networks in the Matlab environment on a PC with CPU/GPU [12].

- Cloud computing:

  - Applications: Complex operations on large datasets, large-scale modelling and prediction, remote medical diagnostics, big data analysis, large-scale deep learning, model servicing for clients.

  - Solutions: Very large deep networks (Deep CNN, GAN, transformers), high-performance transfer learning techniques, hybrid and multi-type models, training and inference on distributed clusters.

  - Hardware architectures: GPU clusters, TPU (Google), distributed cloud environments (AWS, Azure, Google Cloud), containerisation (Docker, Kubernetes).

  - Example: Medical applications using remotely trained and hosted models in the cloud for image data analysis (e.g., X-ray) [119,120].

- Supercomputers:

  - Applications: Training very large AI models, processing massive datasets from many sources (e.g., genetic projects, medical imaging studies), multiscale simulations, data mining from many sensors simultaneously.

  - Solutions: Very complex deep networks, hybrid networks, advanced classifiers and segmenters, multi-stage simulations using many models.

  - Hardware architectures: Multithreaded CPU+GPU/TPU clusters, dedicated AI supercomputers (e.g., El Capitan, Summit, Fugaku) (top500.org, accessed on 18 June 2025).

  - Example: Analysis and training of networks for large-scale diagnostics, large-scale data fusion from multiple sources [119,120].

The above analysis shows that in edge devices more emphasis is placed on energy efficiency, simple design, and real-time operation. As platform performance increases (desktop, cloud computing), so do the scale and complexity of the algorithms, which become more multi-layered and more demanding of computing power. It can be seen that a significant part of the solutions described in the article can be scaled depending on need.

4.5. Noticeable New Trends

Despite the very extensive research in this field, there are still many knowledge gaps. This is due to several factors. One is the development of technology that allows for miniaturisation and the use of ANNs in places that were previously inaccessible. Another is demand, such as that created by the COVID-19 pandemic. A third is the lack of greater interest in a given topic so far; here, changes can be expected wherever the technology allows services to be improved or costs to be reduced. Issues that seem interesting but have not yet been sufficiently researched include:

- Face identification: The main problem here is the reliability of such a solution and its ability to identify a face correctly despite the lack of access to the full data [30,46].

- Expert systems: Interesting results have been obtained using a combination of Bayesian and neural networks [4,5].

- Fingerprint identification: Only one article describes this solution, stating that much better results were obtained compared to previous methods [47].

- Identification of tissues: It is important not only to identify the tissue type, but also to assess its condition and suggest possible treatment [4].

- Identification of human diseases: Until recently, due to the COVID-19 pandemic, this was the main focus of research. However, there are many other diseases which can also be successfully diagnosed, such as joint diseases, breast cancer, or artery diseases [58,59,65,69].

- Assistance in agriculture: In [100], a method was presented to check the proper level of soil moisture. However, it can be expected that this technology can be used to detect the presence of pests or parasites as well.

- Hybrid approaches involving ANNs are finding more and more practical applications and are one of the most important fields of deep learning development in image processing.

5. Conclusions

In the last two decades, the application of neural networks in imaging technologies, including thermal, infrared, and X-ray imaging, has developed rapidly. The literature review confirms that methods based on deep networks, such as Convolutional Neural Networks (CNNs), have contributed to significant improvements in the quality of image detection, segmentation, and classification in varied fields from medicine to engineering and agriculture to public safety. As presented in Table 4, this development has been particularly pronounced in the last decade. Growth in importance is observed in almost every field, especially in image processing (43%) and object recognition (46%). This topic is being taken up by scientists from all over the world, with the most active located in China, India, the United Kingdom, and the United States.

A key property of ANNs is their ability to automatically extract features and detect complex patterns even in low-resolution or highly noisy images. This enables automatic diagnosis of diseases (e.g., cancers based on mammographic images or ischemic changes in the diabetic foot on thermal images), detection of people and objects in low-light conditions, or identification of the presence of hidden threats such as weapons. For this reason, in recent years research has focused mainly on the following areas:

- Object recognition

- Person security and detection

- Road sign recognition

- Respiratory disease detection.

Despite significant progress, many gaps remain open. These include the need to obtain large, representative, and well-described training datasets as well as issues related to energy efficiency and implementation in embedded systems, real-time systems, and the Internet of Things (IoT). An important branch of development will be hybrid applications combining images of many types (e.g., combining data from IR, visible, and X-ray imaging), which will allow for better use of the potential of neural networks in classification and detection tasks. Filling the gaps will therefore focus on the following categories:

- Detection of various diseases, especially rare ones

- Development of expert systems

- Improvement of security systems

- Improvement of autonomous systems.

Filling these gaps should be one of the priorities in both basic and applied research.

In summary, neural networks are an important tool in image analysis systems from various areas of life and economy. The prospects for their development include further increasing the efficiency of models, reducing the demand for data and energy, and implementing them on mobile platforms and devices with limited computing power.

In light of the above, it can be said that neural networks will play an increasingly important role in the development of modern imaging technologies, contributing to increased automation, improved diagnostics and safety, and reduced operating costs of many systems.

Author Contributions

Conceptualization, G.W.-J.; methodology, G.W.-J.; software, Ł.P.; validation, J.W.-J., Ł.P. and L.C.; formal analysis, Ł.P.; investigation, Ł.P.; resources, Ł.P.; data curation, L.C.; writing—original draft preparation, L.C.; writing—review and editing, L.C.; visualization, Ł.P.; supervision, J.W.-J.; project administration, J.W.-J., Ł.P. and L.C.; funding acquisition, J.W.-J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 63–79.

- Donahue, J.; Hendricks, L.A.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Darrell, T.; Saenko, K. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Veredas, F.J.; Mesa, H.; Morente, L. A hybrid learning approach to tissue recognition in wound images. Int. J. Intell. Comput. Cybern. 2009, 2, 327–347.

- Smith, L.; Smith, M.; Farooq, A.; Sun, J.; Ding, Y.; Warr, R. Machine vision 3D skin texture analysis for detection of melanoma. Sens. Rev. 2011, 31, 111–119.

- Kristo, M.; Ivasic-Kos, M. An overview of thermal face recognition methods. In Proceedings of the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 21–25 May 2018; pp. 1098–1103.

- Veranyurt, O.; Sakar, C.O. Concealed pistol detection from thermal images with deep neural networks. Multimed. Tools Appl. 2023, 82, 44259–44275.

- Ko, J.H.; Na, T.; Mukhopadhyay, S. An Energy-Quality Scalable Wireless Image Sensor Node for Object-Based Video Surveillance. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 591–602.

- Datta, G.; Liu, Z.; Yin, Z.; Sun, L.; Jaiswal, A.R.; Beerel, P.A. Enabling ISPless Low-Power Computer Vision. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 2429–2438.

- Li, Y.; Hussain, H.; Yang, C.; Hu, S.; Zhao, J. High-Resolution Image Reconstruction Array of Based on Low-Resolution Infrared Sensor; Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST (LNICST, Volume 303); Springer: Cham, Switzerland, 2019; pp. 118–132.

- Kamal, U.; Ahmed, S.; Toha, T.R.; Islam, N.; Alim Al Islam, A. Intelligent Human Counting through Environmental Sensing in Closed Indoor Settings. Mob. Netw. Appl. 2020, 25, 474–490.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25, pp. 1097–1105.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Jin, K.H.; McCann, M.T.; Froustey, E.; Unser, M. Deep Convolutional Neural Network for Inverse Problems in Imaging. IEEE Trans. Image Process. 2017, 26, 4509–4522.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 4 May 2021.

- Pan, C.; Xia, B. Comparison of pixels unmixing approaches and application to MODIS. In Proceedings of the 2009 International Conference on Artificial Intelligence and Computational Intelligence, Shanghai, China, 7–8 November 2009; Volume 2, pp. 65–68.

- Chen, Z.; Liu, X.; Yang, H.; Zhu, H.; Ren, E.; Liu, Z.; Jia, K.; Luo, L.; Zhang, X.; Wei, Q.; et al. Processing near sensor architecture in mixed-signal domain with CMOS image sensor of convolutional-kernel-readout method. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 389–400.

- Kisku, W.; Kaur, A.; Mishra, D. On-chip Pixel Reconstruction using Simple CNN for Sparsely Read CMOS Image Sensor. In Proceedings of the 2021 IEEE 3rd International Conference on Artificial Intelligence Circuits and Systems (AICAS), Washington, DC, USA, 6–9 June 2021.

- Jeong, B.; Lee, J.; Lee, S.; Lee, S.; Son, Y.; Kim, S.Y. A 240-FPS In-Column Binarized Neural Network Processing in CMOS Image Sensors. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 3907–3911.

- Rojas, R.A.; Luo, W.; Murray, V.; Lu, Y.M. Learning optimal parameters for binary sensing image reconstruction algorithms. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 2791–2795.

- Chen, H.G.; Jayasuriya, S.; Yang, J.; Stephen, J.; Sivaramakrishnan, S.; Veeraraghavan, A.; Molnar, A. ASP vision: Optically computing the first layer of convolutional neural networks using angle sensitive pixels. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 903–912.

- Guo, J.; Gu, H.; Potkonjak, M. Efficient image sensor subsampling for DNN-based image classification. In Proceedings of the ISLPED ’18: International Symposium on Low Power Electronics and Design, Seattle, WA, USA, 23–25 July 2018.

- Ahmad, J.; Warren, A. FPGA based Deterministic Latency Image Acquisition and Processing System for Automated Driving Systems. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018.

- Camuñas-Mesa, L.; Zamarreño-Ramos, C.; Linares-Barranco, A.; Acosta-Jiménez, A.J.; Serrano-Gotarredona, T.; Linares-Barranco, B. An event-driven multi-kernel convolution processor module for event-driven vision sensors. IEEE J. Solid-State Circuits 2012, 47, 504–517.

- Benjilali, W.; Guicquero, W.; Jacques, L.; Sicard, G. Hardware-Compliant Compressive Image Sensor Architecture Based on Random Modulations and Permutations for Embedded Inference. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 1218–1231.

- Kaur, A.; Mishra, D.; Amogh, K.; Sarkar, M. On-Array Compressive Acquisition in CMOS Image Sensors Using Accumulated Spatial Gradients. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 523–532.

- Lai, J.L.; Guan, Z.X.; Chen, Y.T.; Tai, C.F.; Chen, R.J. Implementation of fuzzy cellular neural network with image sensor in CMOS technology. In Proceedings of the 2008 International Conference on Communications, Circuits and Systems, Fujian, China, 25–27 May 2008; pp. 982–986.

- Yang, X.; Yao, C.; Kang, L.; Luo, Q.; Qi, N.; Dou, R.; Yu, S.; Feng, P.; Wei, Z.; Liu, J.; et al. A Bio-Inspired Spiking Vision Chip Based on SPAD Imaging and Direct Spike Computing for Versatile Edge Vision. IEEE J. Solid-State Circuits 2024, 59, 1883–1898.

- Stow, E.; Ahsan, A.; Li, Y.; Babaei, A.; Murai, R.; Saeedi, S.; Kelly, P.H.J. Compiling CNNs with Cain: Focal-plane processing for robot navigation. Auton. Robot. 2022, 46, 893–910.

- Micheloni, C.; Foresti, G.L. Active tuning of intrinsic camera parameters. IEEE Trans. Autom. Sci. Eng. 2009, 6, 577–587.

- Hofmann, M.; Mader, P. Synaptic Scaling—An Artificial Neural Network Regularization Inspired by Nature. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 3094–3108.

- Costilla-Reyes, O.; Scully, P.; Ozanyan, K.B. Deep Neural Networks for Learning Spatio-Temporal Features From Tomography Sensors. IEEE Trans. Ind. Electron. 2018, 65, 645–653.

- Cao, D.; Guo, P. Residual error based approach to classification of multisource remote sensing images. In Proceedings of the Sixth International Conference on Intelligent Systems Design and Applications, Jian, China, 16–18 October 2006; Volume 2, pp. 406–411.

- Cracan, A.M.; Teodoru, C.; Dobrea, D.M. Techniques to implement an embedded laser sensor for pattern recognition. In Proceedings of the EUROCON 2005—The International Conference on “Computer as a Tool”, Belgrade, Serbia, 21–24 November 2005; Volume II, pp. 1417–1420.

- Alonso-Fernandez, F.; Hernandez-Diaz, K.; Ramis, S.; Perales, F.J.; Bigun, J. Facial masks and soft-biometrics: Leveraging face recognition CNNs for age and gender prediction on mobile ocular images. IET Biom. 2021, 10, 562–580.

- Lin, C.; Kumar, A. A CNN-Based Framework for Comparison of Contactless to Contact-Based Fingerprints. IEEE Trans. Inf. Forensics Secur. 2019, 14, 662–676.

- Chen, C.Y.; Feng, H.M. Hybrid intelligent vision-based car-like vehicle backing systems design. Expert Syst. Appl. 2009, 36, 7500–7509.

- Rahmat, M.; Abdul Rahim, R.; Sabit, H. Application of neural network and electrodynamic sensor as flow pattern identifier. Sens. Rev. 2010, 30, 137–141.

- Son, H.; Kim, C. Integrated worker detection and tracking for the safe operation of construction machinery. Autom. Constr. 2021, 126, 103670.

- Massoud, Y.; Laganiere, R. Learnable fusion mechanisms for multimodal object detection in autonomous vehicles. IET Comput. Vis. 2024, 18, 499–511.

- Nguwi, Y.Y.; Kouzani, A.Z. Automatic road sign recognition using neural networks. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006; pp. 3955–3962.

- Wang, J.; Lin, T.; Chen, S. Obtaining weld pool vision information during aluminium alloy TIG welding. Int. J. Adv. Manuf. Technol. 2005, 26, 219–227.

- Chen, B.; Wang, J.; Chen, S. Modeling of pulsed GTAW based on multi-sensor fusion. Sens. Rev. 2009, 29, 223–232.

- Zhao, L.; Zhang, Y.; Bai, Q.; Qi, Z.; Zhang, X. Research of digital meter identifier based on DSP and neural network. In Proceedings of the 2009 IEEE International Workshop on Imaging Systems and Techniques, Shenzhen, China, 11–12 May 2009; pp. 402–406.

- Zhao, L.; Zhang, Y.; Bai, Q.; Qi, Z.; Zhang, X. Design and research of digital meter identifier based on image and wireless communication. In Proceedings of the 2009 International Conference on Industrial Mechatronics and Automation, Chengdu, China, 15–16 May 2009; pp. 101–104.

- El Hafi, L.; Ding, M.; Takamatsu, J.; Ogasawara, T. STARE: Realtime, wearable, simultaneous gaze tracking and object recognition from eye images. SMPTE Motion Imaging J. 2017, 126, 37–46.

- Torghabeh, F.A.; Modaresnia, Y.; Hosseini, S.A. An Efficient Approach for Breast Abnormality Detection through High-Level Features of Thermography Images. In Proceedings of the 2023 13th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 1–2 November 2023; pp. 54–59.

- Naz, M.; Sakarkar, G. Arthritis Detection Using Thermography and Artificial Intelligence. In Proceedings of the 2022 10th International Conference on Emerging Trends in Engineering and Technology - Signal and Information Processing (ICETET-SIP-22), Nagpur, India, 29–30 April 2022.

- Sharma, N.; Mirza, S.; Rastogi, A.; Singh, S.; Mahapatra, P.K. Region-wise severity analysis of diabetic plantar foot thermograms. Biomedizinische Technik 2023, 68, 607–615.

- Selvathi, D.; Suganya, K.; Menaka, M.; Venkatraman, B. Deep convolutional neural network-based diabetic eye disease detection and classification using thermal images. Int. J. Reason.-Based Intell. Syst. 2021, 13, 106–114.

- Kyal, C.; Poddar, H.; Reza, M. Thermal Biometric Face Recognition (TBFR): A Noncontact Face Biometry; Elsevier: Amsterdam, The Netherlands, 2022; pp. 29–46.

- Pons, G.; El Ali, A.; Cesar, P. ET-CycleGAN: Generating thermal images from images in the visible spectrum for facial emotion recognition. In Proceedings of the ICMI ’20: International Conference On Multimodal Interaction, Virtual Event, The Netherlands, 25–29 October 2020; pp. 87–91.

- Lin, S.; Chen, L.; Chen, W.; Chang, B. Face Recognition via Thermal Imaging: A Comparative Study of Traditional and CNN-Based Approaches. In Proceedings of the ICMLSC ’24: 2024 8th International Conference on Machine Learning and Soft Computing, Singapore, 26–28 January 2024; pp. 132–138.

- Rathore, S.; Bhalerao, S. Implementation of neuro-fuzzy based portable thermographic system for detection of Rheumatoid Arthritis. In Proceedings of the 2015 Global Conference on Communication Technologies (GCCT), Thuckalay, India, 23–24 April 2015; pp. 902–905.

- Nascimento, J.P.; Araujo, B.V.; Rodrigues, G.A.; Xavier, G.V.; Freire, E.O.; Sales, G.; Ferreira, T.V. Detection of electrical equipment using an artificial neural network with visual spectrum images and thermal images. IET Conf. Proc. 2023, 2023, 177–183.

- Muthu, V.; Kavitha, S. Detection of Covid-19 Using an Infrared Fever Screening System (IFSS) Based on Deep Learning Technology. Lect. Notes Netw. Syst. 2023, 672, 217–228.

- Liyanarachchi, R.; Wijekoon, J.; Premathilaka, M.; Vidhanaarachchi, S. COVID-19 symptom identification using Deep Learning and hardware emulated systems. Eng. Appl. Artif. Intell. 2023, 125, 106709.

- Kostadinov, G. AI-Enabled Infrared Thermography: Machine Learning Approaches in Detecting Peripheral Arterial Disease; Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST (LNICST, Volume 514); Springer: Cham, Switzerland, 2023; pp. 159–170.

- Senalp, F.M.; Ceylan, M. Deep learning based super resolution and classification applications for neonatal thermal images. Trait. Signal 2021, 38, 1361–1368.

- Bouallal, D.; Douzi, H.; Harba, R. Diabetic foot thermal image segmentation using Double Encoder-ResUnet (DE-ResUnet). J. Med. Eng. Technol. 2022, 46, 378–392. [Google Scholar] [CrossRef]

- Dickens, J.; Van Wyk, M.; Green, J. Pedestrian detection for underground mine vehicles using thermal images. In Proceedings of the IEEE Africon ’11, Victoria Falls, Zambia, 13–15 September 2011. [Google Scholar] [CrossRef]

- Sneha, M.; Aravindakshan, G.; Varsha Vardini, S.; Akshayaa Rajeshwari, D.; Sastika Var, R.; Selvi, J.T.; Sathiyanarayanan, M. An effective drone surveillance system using thermal imaging. In Proceedings of the 2020 International Conference on Smart Technologies in Computing, Electrical and Electronics (ICSTCEE), Bengaluru, India, 9–10 October 2020; pp. 477–482. [Google Scholar] [CrossRef]

- Elgohary, A.A.; Badr, M.M.; Elmalhy, N.A.; Hamdy, R.A.; Ahmed, S.; Mordi, A.A. Transfer of learning in convolutional neural networks for thermal image classification in Electrical Transformer Rooms. Alex. Eng. J. 2024, 105, 423–436. [Google Scholar] [CrossRef]

- Gorska, A.; Guzal, P.; Namiotko, I.; Wedolowska, A.; Wloszczynska, M.; Ruminski, J. Pedestrian detection in low-resolution thermal images. In Proceedings of the 2022 15th International Conference on Human System Interaction (HSI), Melbourne, Australia, 28–31 July 2022. [Google Scholar] [CrossRef]

- Ivašić-Kos, M.; Krišto, M.; Pobar, M. Human detection in thermal imaging using YOLO. In Proceedings of the ICCTA 2019: 2019 5th International Conference on Computer and Technology Applications, Istanbul, Turkey, 16–17 April 2019; pp. 20–24. [Google Scholar] [CrossRef]

- Ganesh, K.; Umapathy, S.; Thanaraj Krishnan, P. Deep learning techniques for automated detection of autism spectrum disorder based on thermal imaging. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2021, 235, 1113–1127. [Google Scholar] [CrossRef]

- Kuo, H.H.; Barik, D.S.; Zhou, J.Y.; Hong, Y.K.; Yan, J.J.; Yen, M.H. Design and Implementation of AI aided Fruit Grading Using Image Recognition. In Proceedings of the 2022 IEEE/ACIS 23rd International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Taichung, Taiwan, 7–9 December 2022; pp. 194–199. [Google Scholar] [CrossRef]

- Purcaru, C.; Precup, R.E.; Iercan, D.; Fedorovici, L.O.; Dohangie, B.; Dragan, F. Nrobotic mobile robot navigation using traffic signs in unknown indoor environments. In Proceedings of the 2013 IEEE 8th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 23–25 May 2013; pp. 29–34. [Google Scholar] [CrossRef]

- Adarsh, S.; Ramachandran, K. Design of Sensor Data Fusion Algorithm for Mobile Robot Navigation Using ANFIS and Its Analysis Across the Membership Functions. Autom. Control Comput. Sci. 2018, 52, 382–391. [Google Scholar] [CrossRef]

- Crnokić, B. Use of Artificial Neural Networks for Fusion of Infrared and Vision Sensors in a Mobile Robot Navigation System; DAAAM International: Vienna, Austria, 2020; Volume 31, pp. 80–87. [Google Scholar] [CrossRef]

- Gavrilut, I.; Tiponut, V.; Gacsadi, A. Mobile robot navigation based on CNN images processing—An experimental setup. WSEAS Trans. Syst. 2009, 8, 947–956. [Google Scholar]

- He, R.; Xu, P.; Chen, Z.; Luo, W.; Su, Z.; Mao, J. A non-intrusive approach for fault detection and diagnosis of water distribution systems based on image sensors, audio sensors and an inspection robot. Energy Build. 2021, 243, 110967. [Google Scholar] [CrossRef]

- Zhang, X.X.; Zhang, Y.H.; Sun, H.X.; Nian, S.C. Detection and control of flexible peristaltic pipeline robot in elbow. Appl. Mech. Mater. 2013, 389, 789–795. [Google Scholar] [CrossRef]

- Koval, V.; Adamiv, O.; Kapura, V. The local area map building for mobile robot navigation using ultrasound and infrared sensors. In Proceedings of the 2007 4th IEEE Workshop on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications, Dortmund, Germany, 6–8 September 2007; pp. 454–459. [Google Scholar] [CrossRef]

- Greenwald, L.; Artz, D.; Mehta, Y.; Shirmohammadi, B. Using educational robotics to motivate complete AI solutions. AI Mag. 2006, 27, 83–95. [Google Scholar]

- Nigam, P.; Biswas, K.; Gupta, S.; Khandagre, Y.; Dubey, A.K. Radar-infrared sensor track correlation algorithm based on neural network fusion system. In Proceedings of the 2011 3rd International Conference on Electronics Computer Technology, Kanyakumari, India, 8–10 April 2011; Volume 3, pp. 202–206. [Google Scholar] [CrossRef]

- Kim, T.; Kim, S.; Lee, E.; Park, M. Comparative analysis of RADAR-IR sensor fusion methods for object detection. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; pp. 1576–1580. [Google Scholar] [CrossRef]

- Liu, W.; Qiao, Y.; Zhao, Y.; Xing, Z.; He, H. Maritime vessel classification based on a dual network combining EfficientNet with a hybrid network MPANet. IET Image Process. 2024, 18, 3093–3107. [Google Scholar] [CrossRef]

- He, Z.; Zhuge, X.; Wang, J.; Yu, S.; Xie, Y.; Zhao, Y. Half space object classification via incident angle based fusion of radar and infrared sensors. J. Syst. Eng. Electron. 2022, 33, 1025–1031. [Google Scholar] [CrossRef]

- Lawley, A.; Hampson, R.; Worrall, K.; Dobie, G. Using positional tracking to improve abdominal ultrasound machine learning classification. Mach. Learn. Sci. Technol. 2024, 5, 025002. [Google Scholar] [CrossRef]

- Avuthu, B.; Yenuganti, N.; Kasikala, S.; Viswanath, A.; Sarath. A Deep Learning approach for detection and analysis of respiratory infections in covid-19 patients using RGB and infrared images. In Proceedings of the IC3-2022: 2022 Fourteenth International Conference on Contemporary Computing, Noida, India, 4–6 August 2022; pp. 367–371. [Google Scholar] [CrossRef]

- Zhang, J.; Song, C.; Hu, Y.; Yu, B. Improving robustness of robotic grasping by fusing multi-sensor. In Proceedings of the 2012 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Hamburg, Germany, 13–15 September 2012; pp. 126–131. [Google Scholar] [CrossRef]

- Yatim, N.M.; Jamaludin, A.; Noh, Z.M.; Buniyamin, N. Rao-Blackwellized Particle Filter with Neural Network using Low-Cost Range Sensor in Indoor Environment. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 840–848. [Google Scholar] [CrossRef]

- Jeffril, M.A.; Sariff, N. The integration of fuzzy logic and artificial neural network methods for mobile robot obstacle avoidance in a static environment. In Proceedings of the 2013 IEEE 3rd International Conference on System Engineering and Technology, Shah Alam, Malaysia, 19–20 August 2013; pp. 325–330. [Google Scholar] [CrossRef]

- Savage, J.; Cruz, J.; Matamoros, M.; Rosenblueth, D.A.; Muñoz, S.; Negrete, M. Configurable Mobile Robot Behaviors Implemented on FPGA Based Architectures. In Proceedings of the 2016 International Conference on Autonomous Robot Systems and Competitions (ICARSC), Bragança, Portugal, 4–6 May 2016; pp. 317–322. [Google Scholar] [CrossRef]

- Fouladvand, S.; Salavati, S.; Masajedi, P.; Ghanbarzadeh, A. A modified neuro-evolutionary algorithm for mobile robot navigation: Using fuzzy systems and combination of artificial neural networks. Int. J. Knowl.-Based Intell. Eng. Syst. 2015, 19, 125–133. [Google Scholar] [CrossRef]

- Hofer, E.; Rahman, T.; Myers, R.; Hamieh, I. Training a Neural Network for Lane Demarcation Detection in the Infrared Spectrum. In Proceedings of the 2020 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), London, ON, Canada, 30 August–2 September 2020. [Google Scholar] [CrossRef]

- Roy, A.; Noel, M.M. Design of a high-speed line following robot that smoothly follows tight curves. Comput. Electr. Eng. 2016, 56, 732–747. [Google Scholar] [CrossRef]

- Sobayo, R.; Wu, H.H.; Ray, R.; Qian, L. Integration of convolutional neural network and thermal images into soil moisture estimation. In Proceedings of the 2018 1st International Conference on Data Intelligence and Security (ICDIS), South Padre Island, TX, USA, 8–10 April 2018; pp. 207–210. [Google Scholar] [CrossRef]

- Farooq, U.; Arif, H.; Amar, M.; Tahir, B.M.; Qasim, M.; Ahmad, N. Comparative analysis of fuzzy logic and neural network based controllers for line tracking task in mobile robots. In Proceedings of the 2010 2nd International Conference on Signal Processing Systems, Dalian, China, 5–7 July 2010; Volume 2, pp. V2733–V2739. [Google Scholar] [CrossRef]

- Liu, X.; Yang, T.; Li, J.; Wang, M.; Zhang, Y. Rapid ground car detection on aerial infrared images. In Proceedings of the ICIGP 2018: 2018 International Conference on Image and Graphics Processing, Hong Kong, 24–26 February 2018; pp. 33–37. [Google Scholar] [CrossRef]

- Feng, H.; Tang, W.; Xu, H.; Jiang, C.; Ge, S.S.; He, J. Meta-learning based infrared ship object detection model for generalization to unknown domains. Appl. Soft Comput. 2024, 159, 111633. [Google Scholar] [CrossRef]

- He, W.; Han, L.; Zhang, X. Study on characteristics analysis and recognition for infrared absorption spectrum of foreign fibers in cotton. In Proceedings of the 2008 IEEE International Conference on Automation and Logistics, Qingdao, China, 1–3 September 2008; pp. 397–400. [Google Scholar] [CrossRef]

- He, F.; Guo, Y.; Gao, C. Human segmentation of infrared image for mobile robot search. Multimed. Tools Appl. 2018, 77, 10701–10714. [Google Scholar] [CrossRef]

- Yin, C.; Miao, X.; Chen, J.; Jiang, H.; Chen, D.; Tong, Y.; Zheng, S. Human Activity Recognition With Low-Resolution Infrared Array Sensor Using Semi-Supervised Cross-Domain Neural Networks for Indoor Environment. IEEE Internet Things J. 2023, 10, 11761–11772. [Google Scholar] [CrossRef]

- Elliott, M.; Ma, X.; Brett, P. A smart sensing platform for the classification of ambulatory patterns. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2009, 223, 567–575. [Google Scholar] [CrossRef] [PubMed]

- Liao, X.; Wang, D.; Li, Z.; Dey, N.; Sherratt, R.S.; Shi, F. Infrared imaging segmentation employing an explainable deep neural network. Turk. J. Electr. Eng. Comput. Sci. 2023, 31, 1021–1038. [Google Scholar] [CrossRef]

- Saifullah, S.; Drezewski, R. Modified Histogram Equalization for Improved CNN Medical Image Segmentation; Elsevier B.V.: Amsterdam, The Netherlands, 2023; Volume 225, pp. 3021–3030. [Google Scholar] [CrossRef]

- Srivastava, K.; Garg, P.; Sharma, V.; Gupta, N. Comparative Analysis of Various Machine Learning Techniques to Predict Breast Cancer. In Proceedings of the 2022 3rd International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT), Ghaziabad, India, 11–12 November 2022. [Google Scholar] [CrossRef]

- Nessipkhanov, D.; Davletova, V.; Kurmanbekkyzy, N.; Omarov, B. Deep CNN for the Identification of Pneumonia Respiratory Disease in Chest X-Ray Imagery. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 652–661. [Google Scholar] [CrossRef]

- Hemalatha, T.; Barve, A.; Sivakumar, T. Automatic Chest X Ray Pathology Detection using Convolutional Neural Network. In Proceedings of the 2023 3rd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA), Bengaluru, India, 21–23 December 2023; pp. 331–340. [Google Scholar] [CrossRef]

- Zainab, A.S.N.; Soesanti, I.; Utomo, D.R. Detection of COVID-19 using CNN’s Deep Learning Method: Review. In Proceedings of the 2022 4th International Conference on Biomedical Engineering (IBIOMED), Yogyakarta, Indonesia, 18–19 October 2022; pp. 59–64. [Google Scholar] [CrossRef]

- Dzhaynakbaev, N.; Kurmanbekkyzy, N.; Baimakhanova, A.; Mussatayeva, I. 2D-CNN Architecture for Accurate Classification of COVID-19 Related Pneumonia on X-Ray Images. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 905–917. [Google Scholar] [CrossRef]

- Novamizanti, L.; Rajab, T.L.E. A Survey of Deep Learning on COVID-19 Identification Through X-Ray Images. Lect. Notes Electr. Eng. 2022, 898, 35–57. [Google Scholar] [CrossRef]

- Elghamrawy, S. An H2O’s Deep Learning-Inspired Model Based on Big Data Analytics for Coronavirus Disease (COVID-19) Diagnosis. Stud. Big Data 2020, 78, 263–279. [Google Scholar] [CrossRef]

- Yan, S.; Chen, B. Coronavirus Disease (COVID-19) X-Ray Film Classification Based on Convolutional Neural Network. Lect. Notes Electr. Eng. 2021, 653, 508–515. [Google Scholar] [CrossRef]

- Saraiva, A.; Fonseca Ferreira, N.; De Sousa, L.L.; Costa, N.C.; Sousa, J.V.M.; Santos, D.; Valente, A.; Soares, S. Classification of Images of Childhood Pneumonia Using Convolutional Neural Networks; SciTePress: Setúbal, Portugal, 2019; pp. 112–119. [Google Scholar] [CrossRef]

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298. [Google Scholar] [CrossRef] [PubMed]

- Valada, A.; Oliveira, G.L.; Brox, T.; Burgard, W. Deep Multispectral Semantic Scene Understanding of Forested Environments Using Multimodal Fusion. In Proceedings of the 2016 International Symposium on Experimental Robotics, Tokyo, Japan, 3–6 October 2016; Kulić, D., Nakamura, Y., Khatib, O., Venture, G., Eds.; Springer: Cham, Switzerland, 2017; pp. 465–477. [Google Scholar] [CrossRef]

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114. [Google Scholar] [CrossRef]