FreeMix: Personalized Structure and Appearance Control Without Finetuning

Abstract

1. Introduction

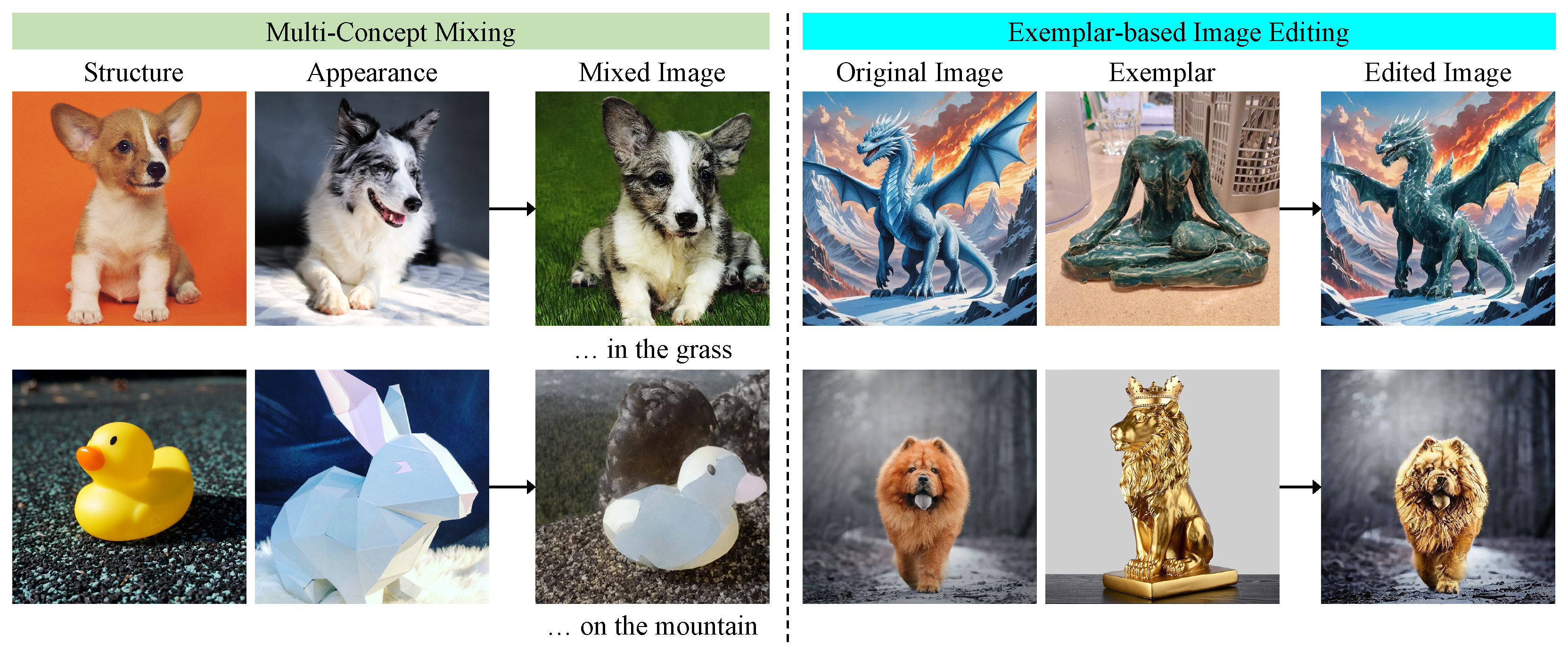

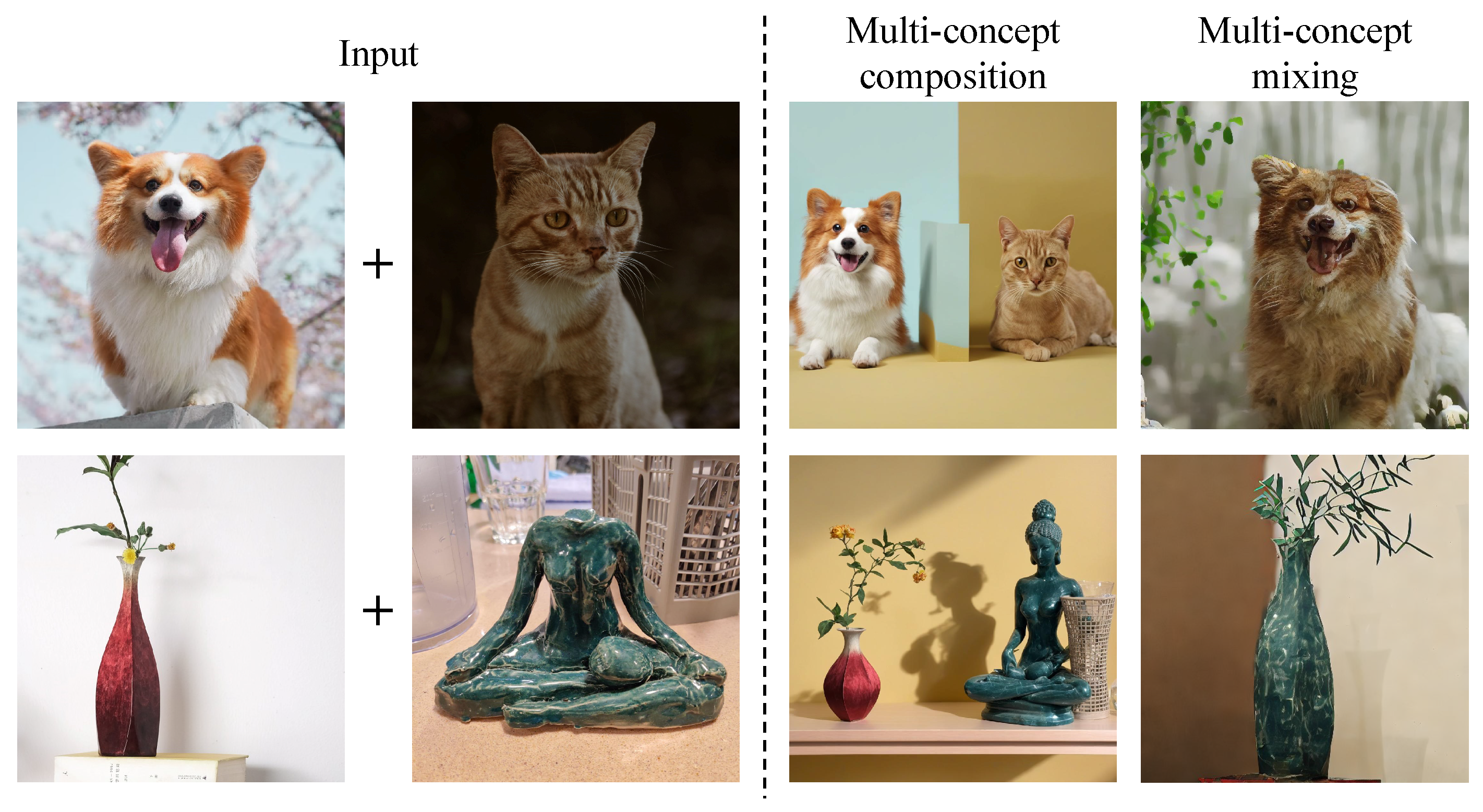

- We propose a method that generates a new image by combining the structure of a single object image and the appearance of an exemplar without requiring additional model training.

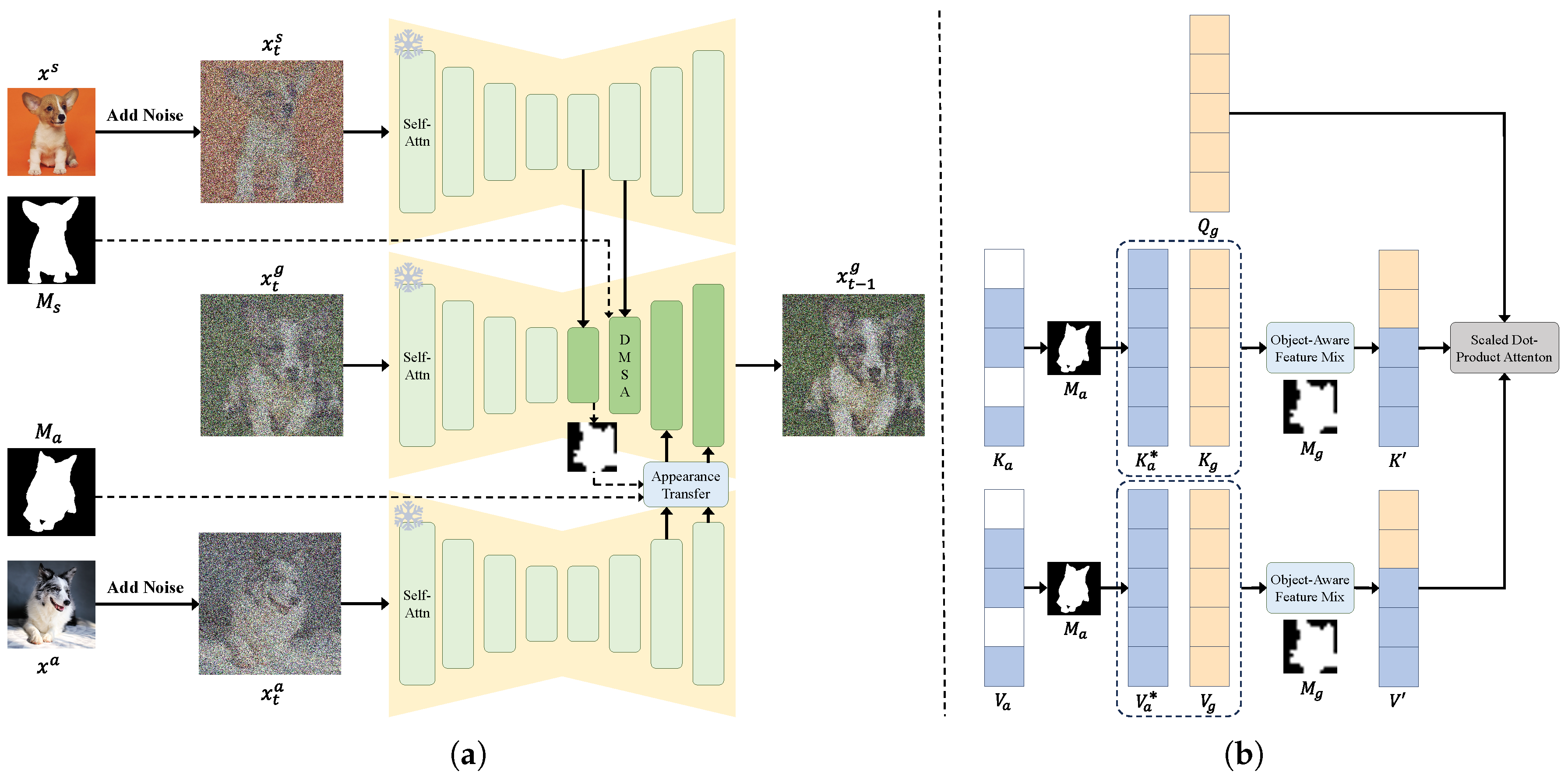

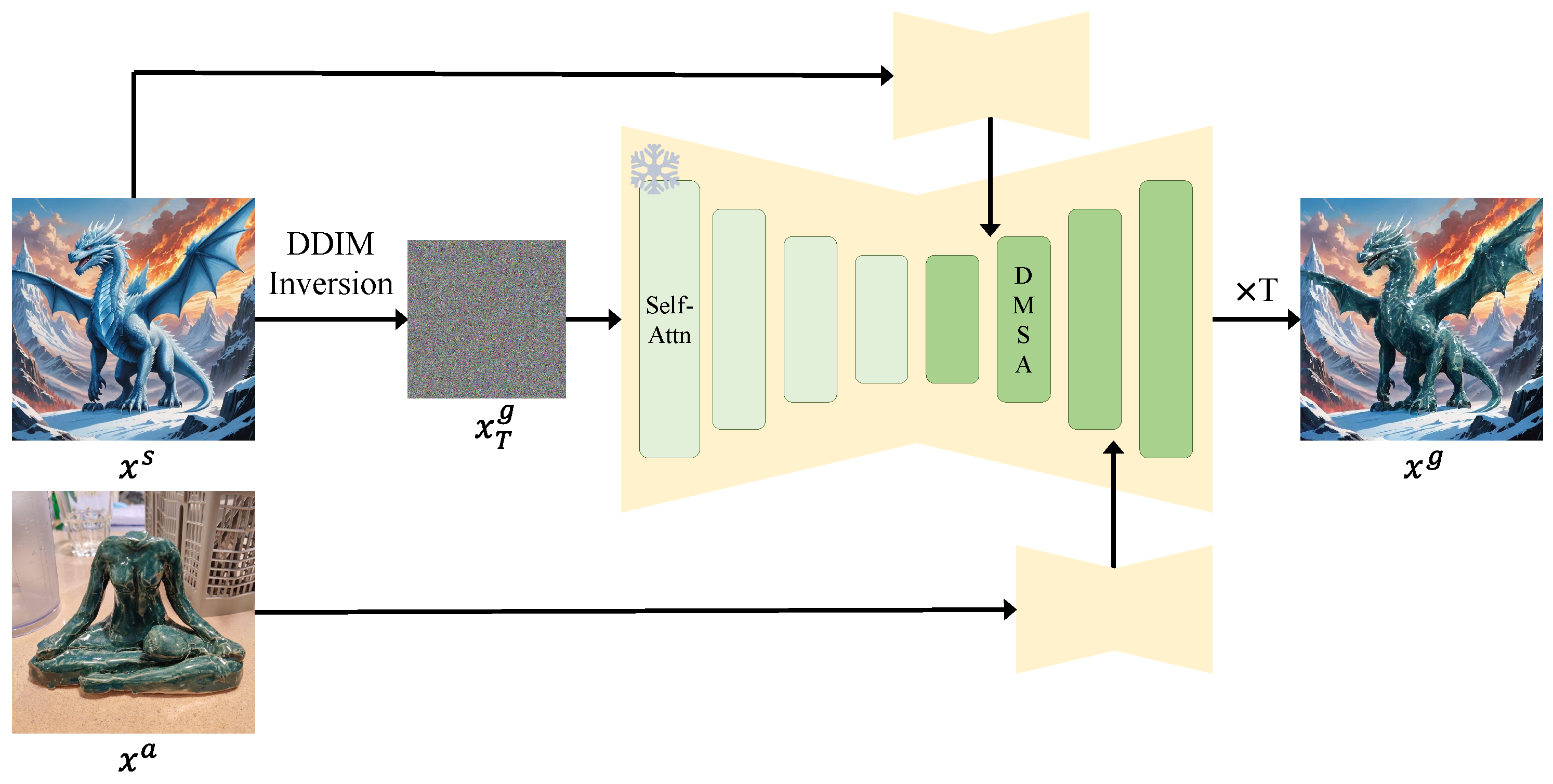

- We introduce Disentangle-Mixing Self-Attention (DMSA), a self-attention mechanism that disentangles structure and appearance features and then integrates them, enabling precise concept mixing.

- Experiments show that our method outperforms existing methods on most reported metrics in multi-concept mixing and on all reported metrics in exemplar-based image editing.
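To make the idea of mixing structure and appearance inside self-attention more concrete, the following is a minimal sketch of a generic key/value-sharing attention step in plain Python. It is not the DMSA formulation itself, which this outline does not spell out. It assumes only that queries come from a structure branch and that keys and values from an appearance branch are appended to the structure branch's own. The function and argument names (`mixed_self_attention`, `q_struct`, `k_app`, and so on) are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        out.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return out


def mixed_self_attention(q_struct, k_struct, v_struct, k_app, v_app):
    """Assumed mixing rule (illustrative only): structure queries attend
    to the concatenation of structure and appearance keys/values."""
    return attention(q_struct, k_struct + k_app, v_struct + v_app)


# Toy usage: one structure token and one appearance token with identical keys,
# so the query splits its attention evenly between the two value vectors.
mixed = mixed_self_attention(
    q_struct=[[1.0, 0.0]],
    k_struct=[[1.0, 0.0]],
    v_struct=[[0.0, 0.0]],
    k_app=[[1.0, 0.0]],
    v_app=[[2.0, 4.0]],
)
```

Because the queries come only from the structure branch, the output keeps one token per structure position, while the appended appearance values can contribute to what each position looks like. A real implementation would operate on batched tensors inside the diffusion U-Net and would likely use masks or weights that this sketch omits.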

2. Related Work

2.1. Text-to-Image Generation

2.2. Personalized Image Generation

2.3. Image Editing

3. Preliminaries

3.1. Diffusion Models

3.2. Attention in Stable Diffusion

3.2.1. Self-Attention

3.2.2. Cross-Attention

4. Methodology

4.1. Disentangle-Mixing Self-Attention

4.2. Structure Generation

4.3. Appearance Transfer

4.4. Application to Image Editing

5. Experiments

5.1. Experiment Setting

5.1.1. Dataset

5.1.2. Evaluation Metrics

5.1.3. Compared Methods

5.1.4. Implementation Settings

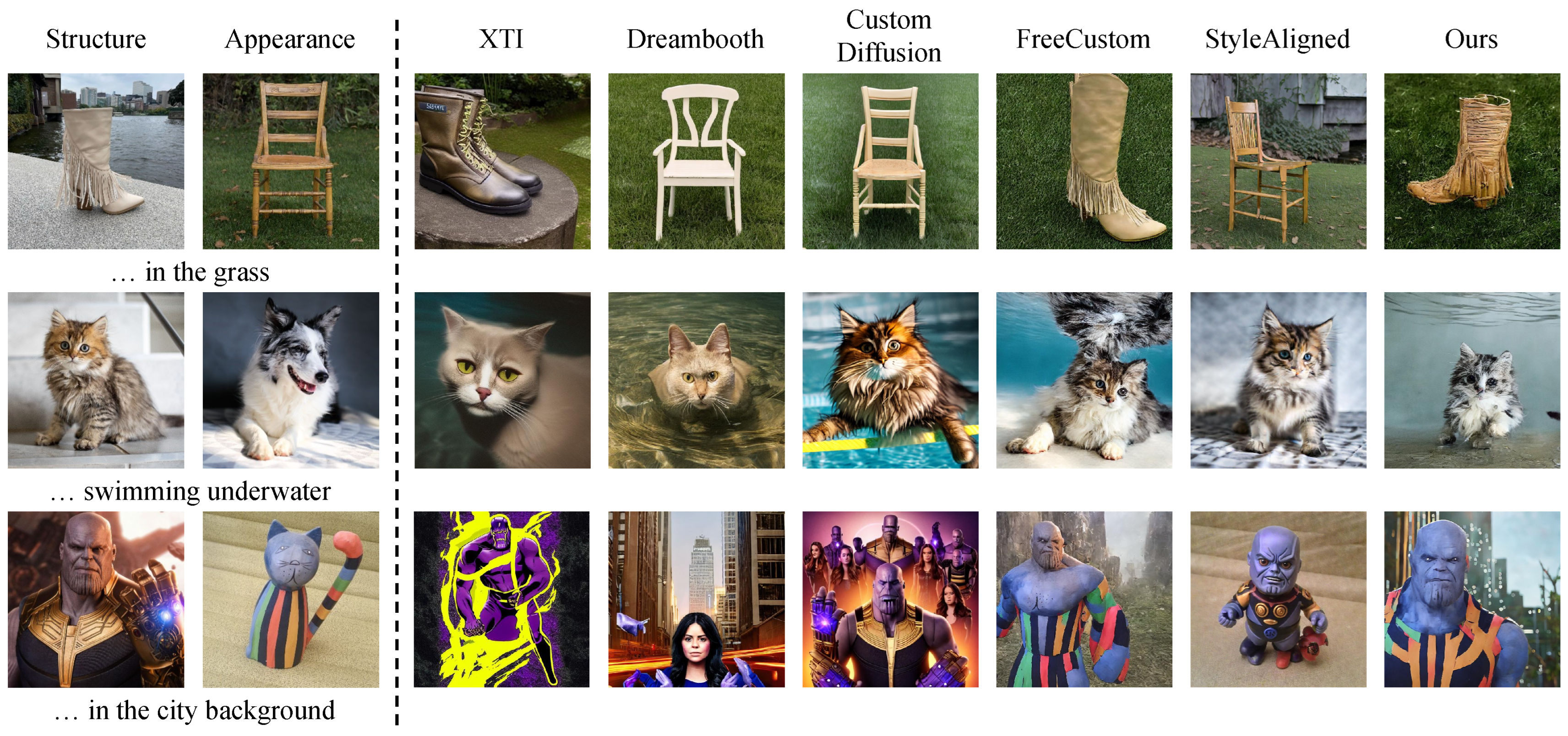

5.2. Multi-Concept Mixing

5.2.1. Quantitative Comparisons

5.2.2. Qualitative Comparisons

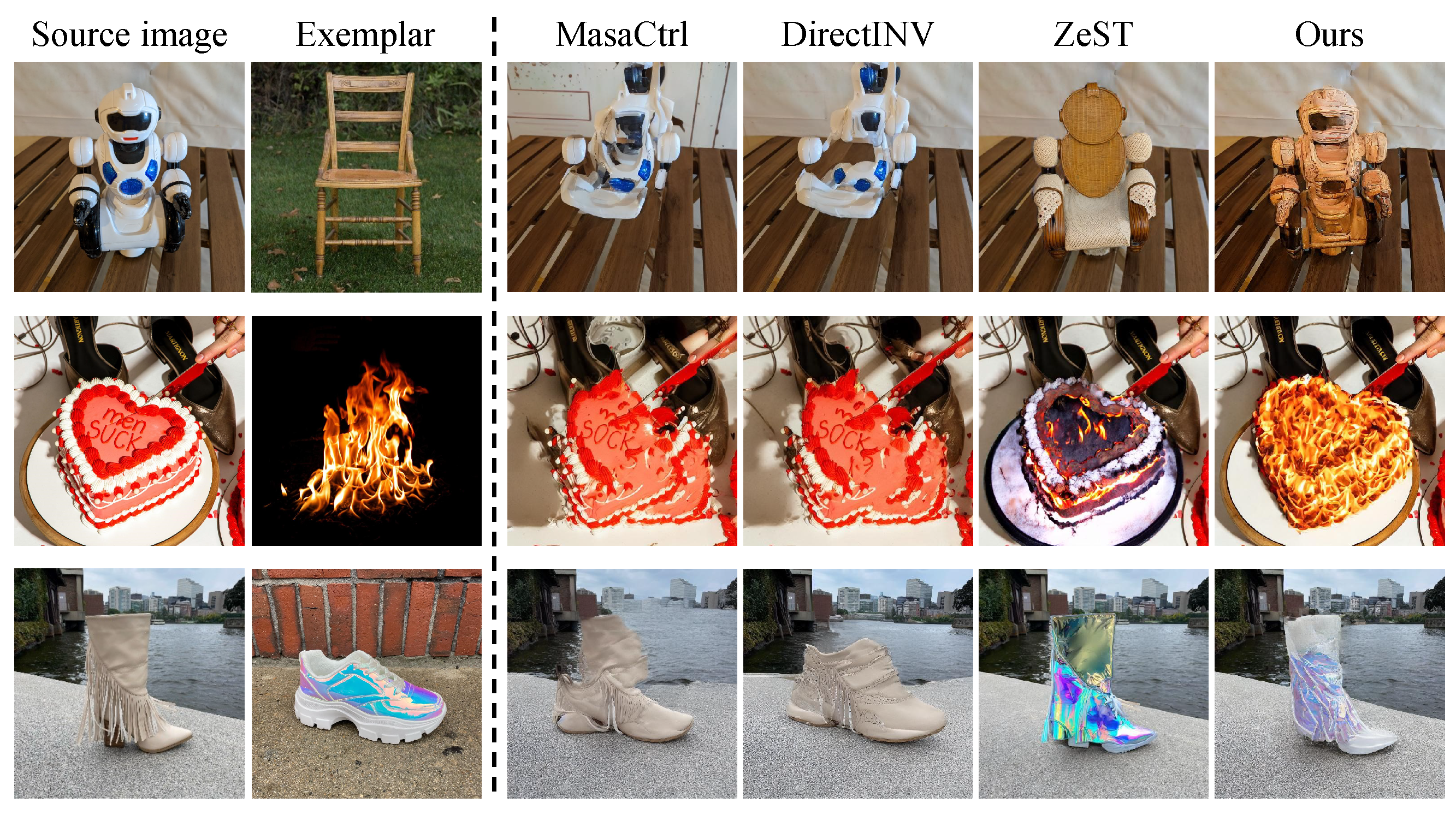

5.3. Exemplar-Based Image Editing

5.3.1. Quantitative Comparisons

5.3.2. Qualitative Comparisons

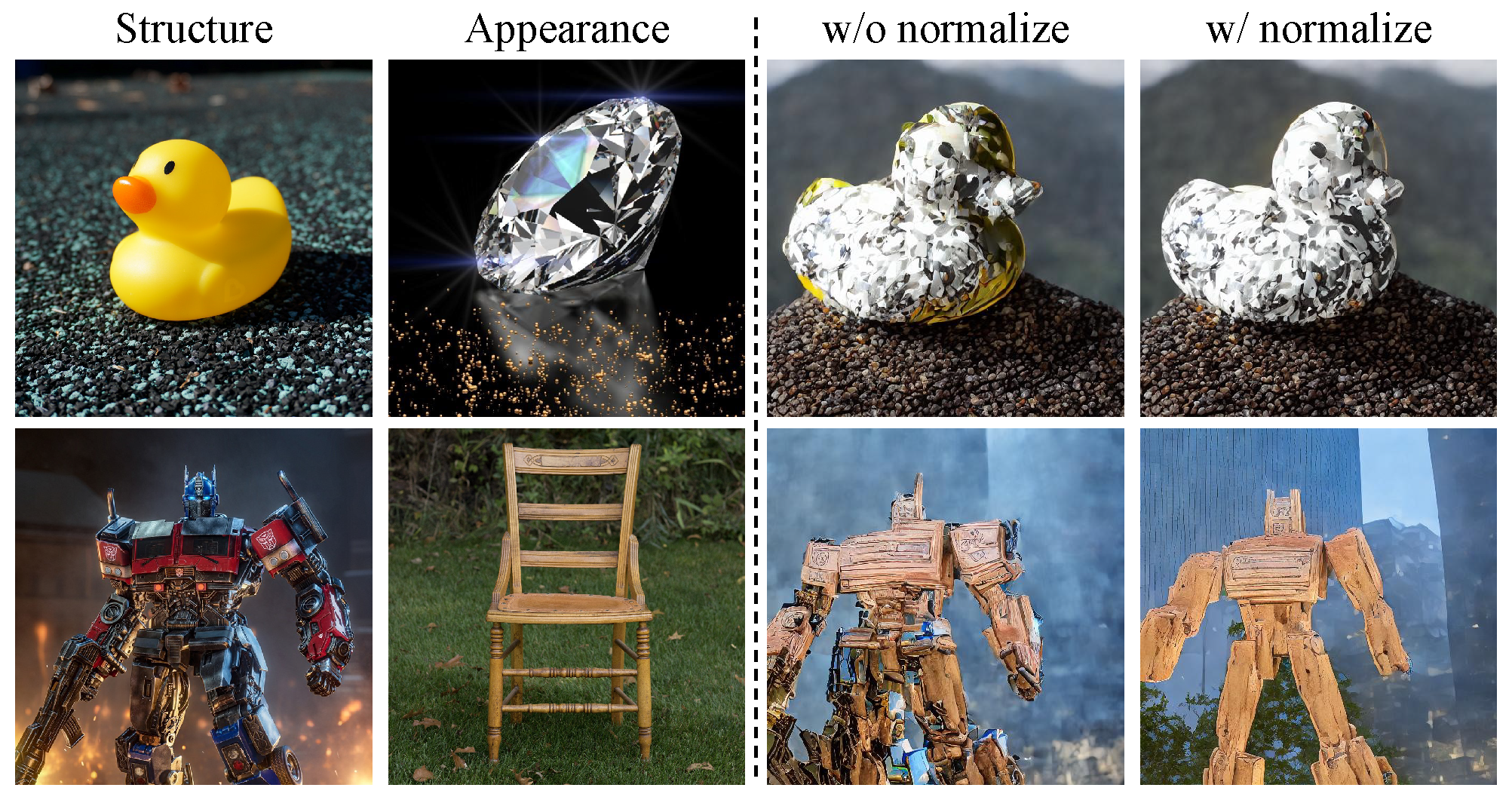

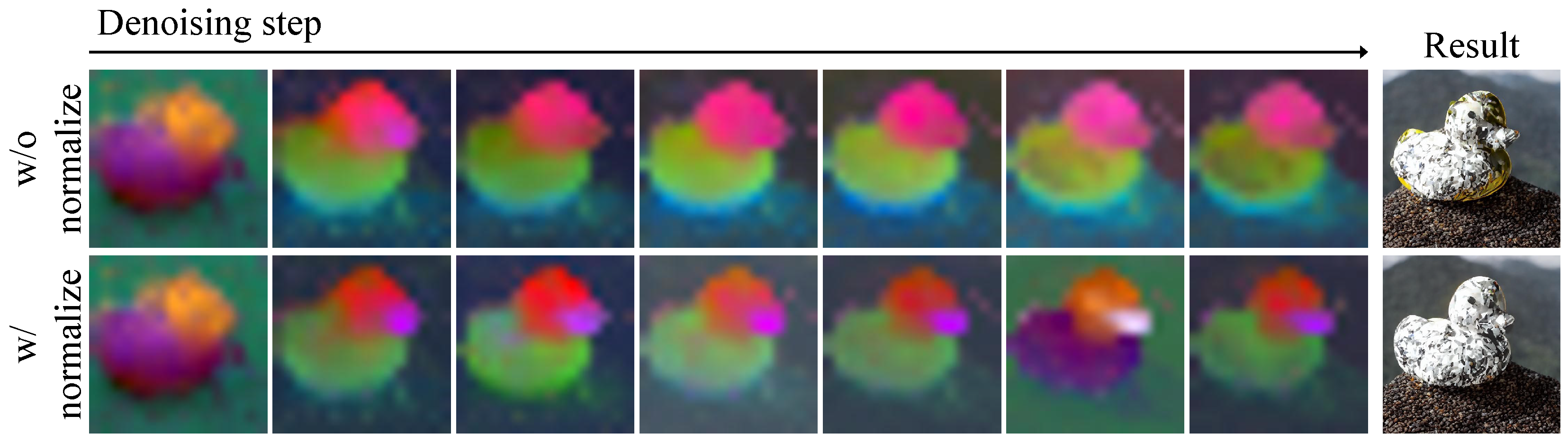

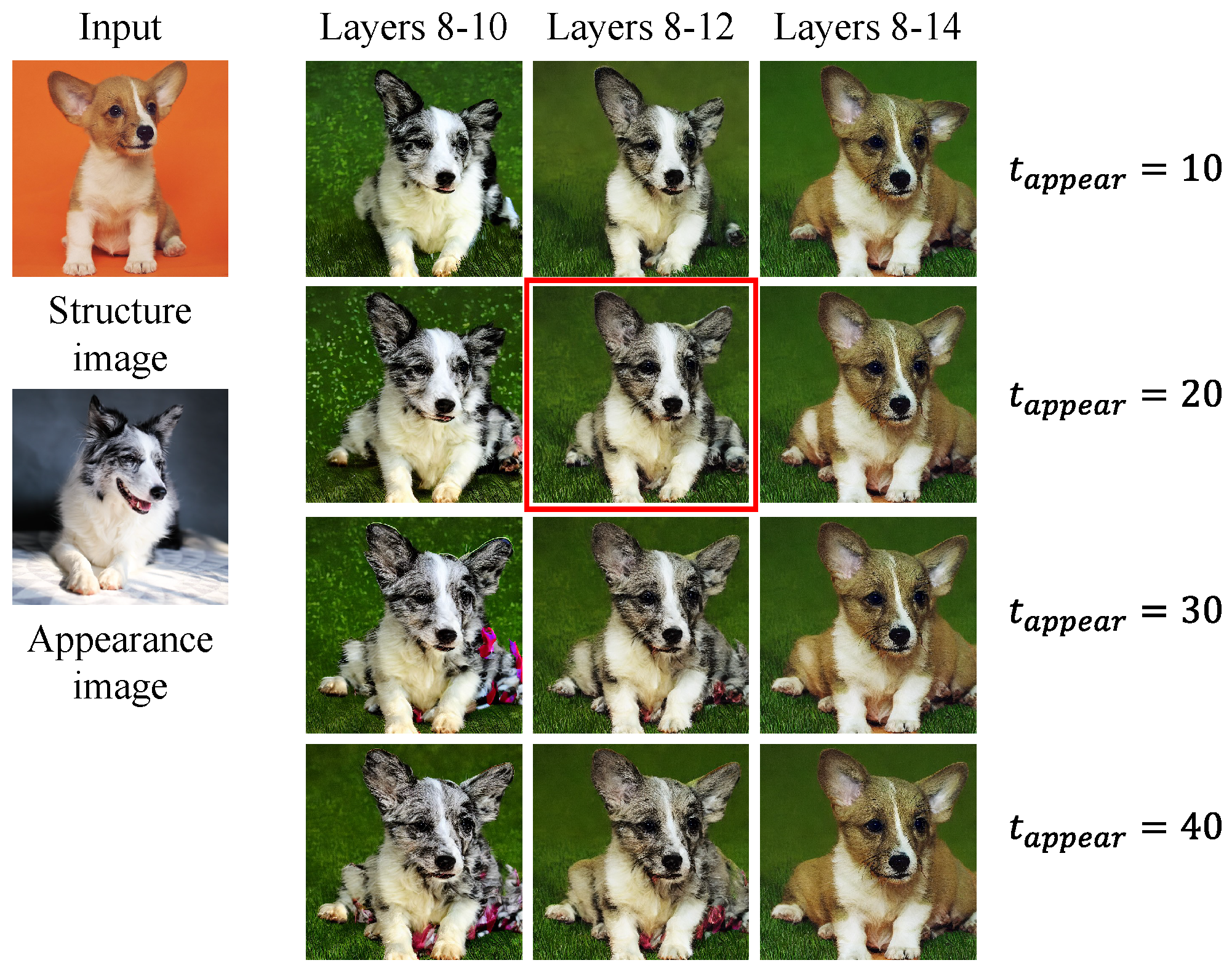

5.4. Ablation Study

5.5. Additional Results

5.5.1. Customization

5.5.2. Appearance Control

5.5.3. Results with ControlNet

6. Conclusions and Future Work

7. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Method | Base Model | CLIP-I ↑ | DINO ↑ | CLIP-T ↑ | PRES ↓ | DIV ↑ |
|---|---|---|---|---|---|---|
| XTI | SDv1.5 | 0.5425 | 0.6827 | 24.57 | 0.6745 | 0.3394 |
| DreamBooth | SDv1.5 | 0.6110 | 0.6393 | 23.58 | 0.5721 | 0.3734 |
| Custom Diffusion | SDv1.5 | 0.6133 | 0.7595 | 25.65 | 0.5583 | 0.3876 |
| FreeCustom | SDv1.5 | 0.6220 | 0.7691 | 27.32 | 0.4936 | 0.3947 |
| StyleAligned | SDv1.5 | 0.6034 | 0.7109 | 21.48 | 0.5636 | 0.3681 |
| StyleAligned | SDXL | 0.6260 | 0.7245 | 22.01 | 0.4803 | 0.3846 |
| Ours | SDv1.5 | 0.6377 | 0.7605 | 27.42 | 0.4869 | 0.4038 |

| Method | Base Model | StruD ↓ | LPIPS ↓ | CLIP-I ↑ |
|---|---|---|---|---|
| MasaCtrl * | SDv1.5 | 0.0350 | 0.1435 | 0.7119 |
| DirectINV * | SDv1.5 | 0.0335 | 0.1273 | 0.7353 |
| ZeST | SDXL | 0.0390 | 0.0734 | 0.7519 |
| Ours | SDv1.5 | 0.0245 | 0.0712 | 0.8101 |

| | XTI | DreamBooth | Custom Diffusion | FreeCustom | StyleAligned | Ours |
|---|---|---|---|---|---|---|
| Generation Time (s) | 1183 | 675 | 342 | 37 | 680 | 34 |
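The generation times in the table above can be turned into relative speedups with a few lines of arithmetic. The sketch below copies the reported times and divides each baseline by the time reported for "Ours". The helper name `speedup_over` is hypothetical, and the ratios say nothing about hardware or settings, which this table does not state.

```python
# Generation times in seconds, copied from the table above.
generation_time_s = {
    "XTI": 1183,
    "DreamBooth": 675,
    "Custom Diffusion": 342,
    "FreeCustom": 37,
    "StyleAligned": 680,
    "Ours": 34,
}


def speedup_over(baseline, times=generation_time_s, reference="Ours"):
    """Return how many times faster `reference` is than `baseline`."""
    return times[baseline] / times[reference]


# Speedup of "Ours" relative to every other method, rounded to one decimal.
speedups = {
    name: round(speedup_over(name), 1)
    for name in generation_time_s
    if name != "Ours"
}

for name, factor in sorted(speedups.items(), key=lambda item: -item[1]):
    print(f"{name}: {factor}x")
```

Read this way, the gap to the finetuning-based baselines is one order of magnitude or more, while the gap to FreeCustom, the other tuning-free method in the table, is small.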

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, M.; Choi, Y.S. FreeMix: Personalized Structure and Appearance Control Without Finetuning. Appl. Sci. 2025, 15, 9889. https://doi.org/10.3390/app15189889
