The Effectiveness of Unimodal and Multimodal Warnings on Drivers’ Response Time: A Meta-Analysis

Abstract

1. Introduction

1.1. The Traffic Hazards

1.2. Unimodal Warning Systems

1.3. Multimodal Warning Systems and Related Theories

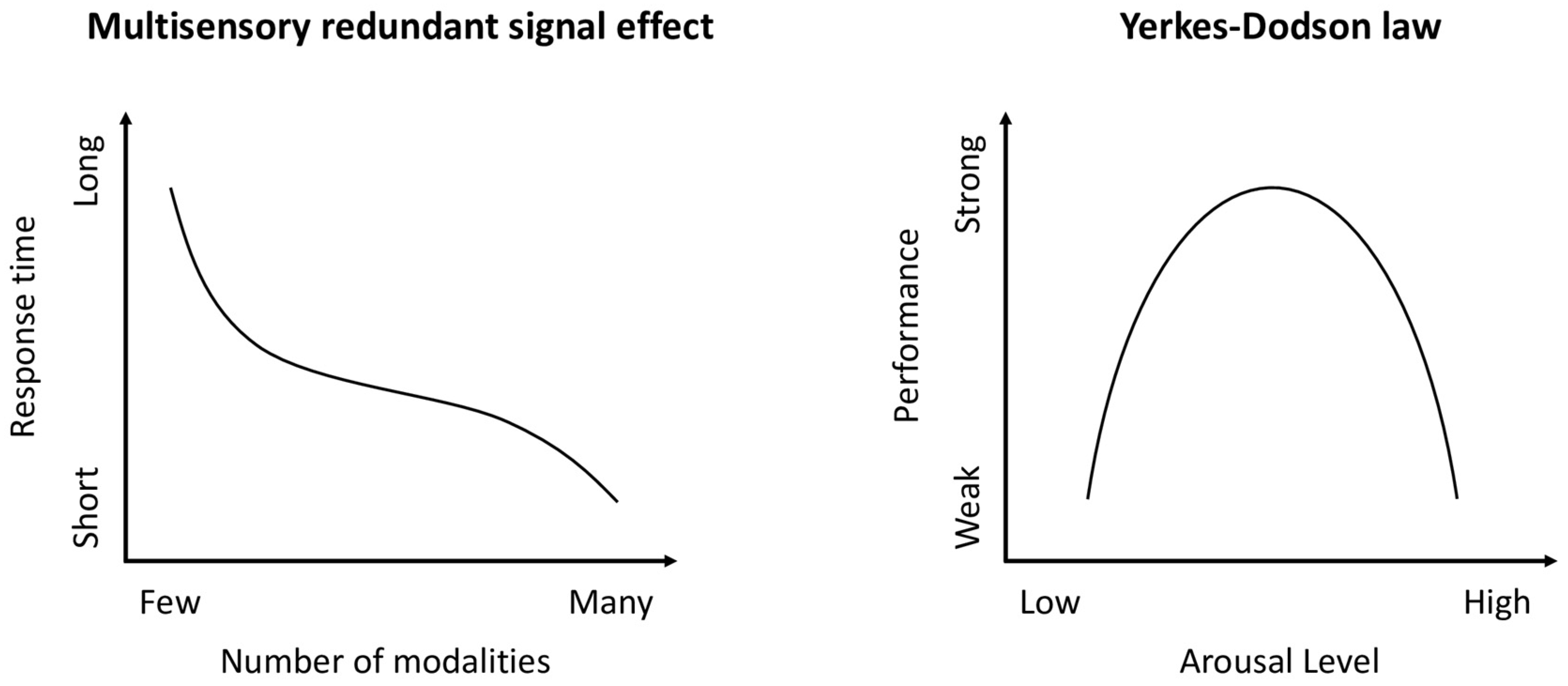

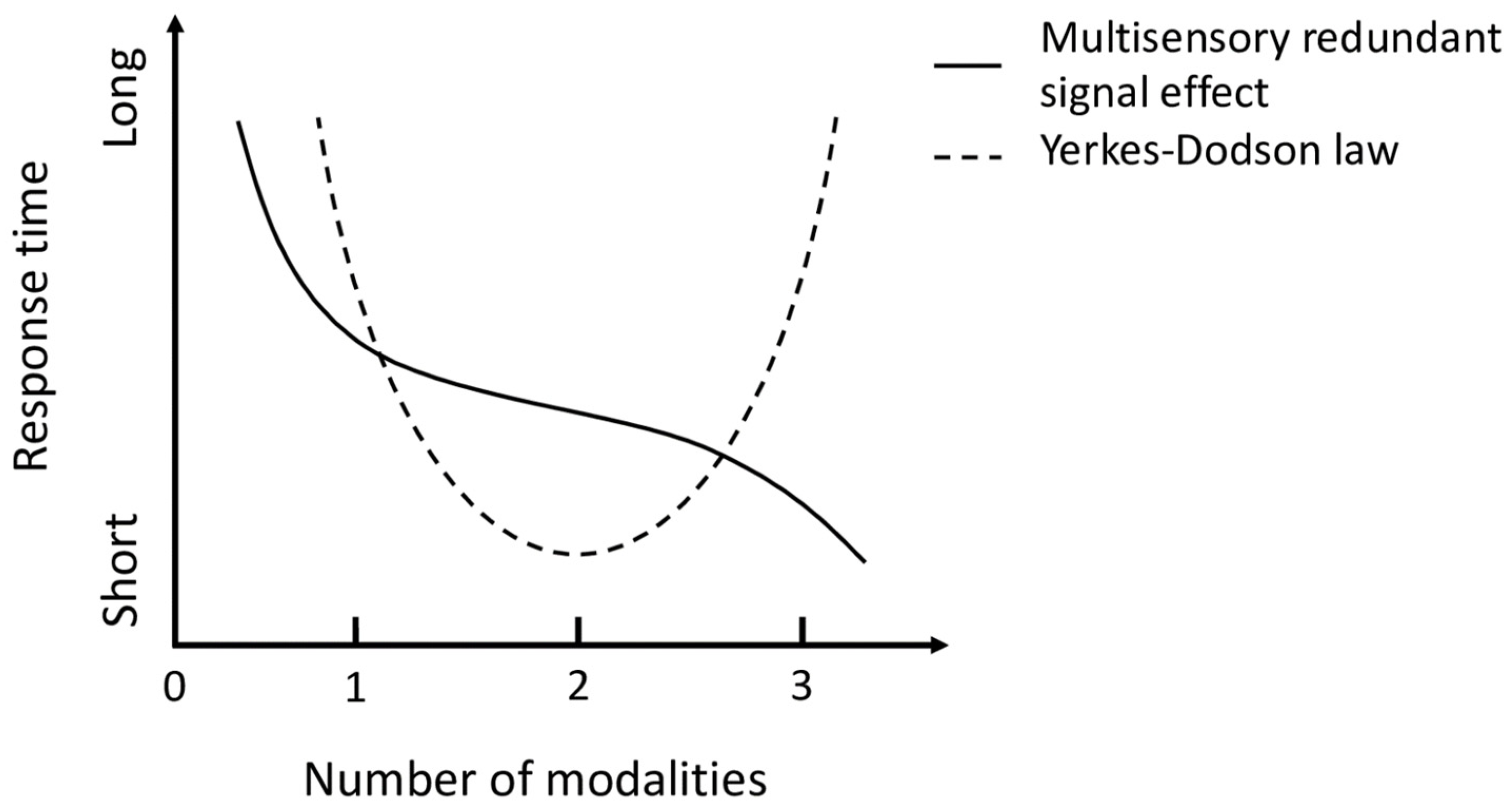

1.3.1. Redundant Signal Effect
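The redundant signal effect is usually analysed by asking whether responses to two simultaneous signals are faster than statistical facilitation alone can explain. Miller [31] frames this as a contrast between race models and coactivation models. As an illustration only, the sketch below checks the race model inequality, F_AB(t) ≤ F_A(t) + F_B(t), on empirical response-time distributions. The function names, the time grid, and the response times are hypothetical and are not data from this meta-analysis, and the sketch reports raw violations without any significance test.

```python
from bisect import bisect_right


def ecdf(samples, t):
    """Empirical CDF: proportion of response times less than or equal to t."""
    ordered = sorted(samples)
    return bisect_right(ordered, t) / len(ordered)


def race_model_violations(rt_a, rt_b, rt_ab, grid):
    """Return grid points where the redundant-signal CDF exceeds the race bound.

    Race model inequality: F_AB(t) <= F_A(t) + F_B(t) for every t.
    Points listed here are where the observed bimodal distribution is
    faster than any race between the two unimodal channels allows.
    """
    violations = []
    for t in grid:
        # The bound can never exceed 1, because it is a probability.
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_b, t))
        if ecdf(rt_ab, t) > bound:
            violations.append(t)
    return violations


# Hypothetical response times in milliseconds (not data from this meta-analysis).
auditory = [420, 450, 480, 510, 540]
tactile = [430, 460, 490, 520, 550]
auditory_tactile = [300, 320, 340, 500, 560]
print(race_model_violations(auditory, tactile, auditory_tactile, [300, 350, 400, 450, 500]))
```

With these made-up numbers the fastest bimodal responses violate the bound at 300, 350, and 400 ms, which is the pattern usually read as evidence for coactivation rather than a pure race.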

1.3.2. Yerkes-Dodson Law

1.4. Central Research Questions

2. Method

2.1. Search Strategy and Selection Criteria

2.2. Data Extraction

2.3. Statistical Analysis Strategies

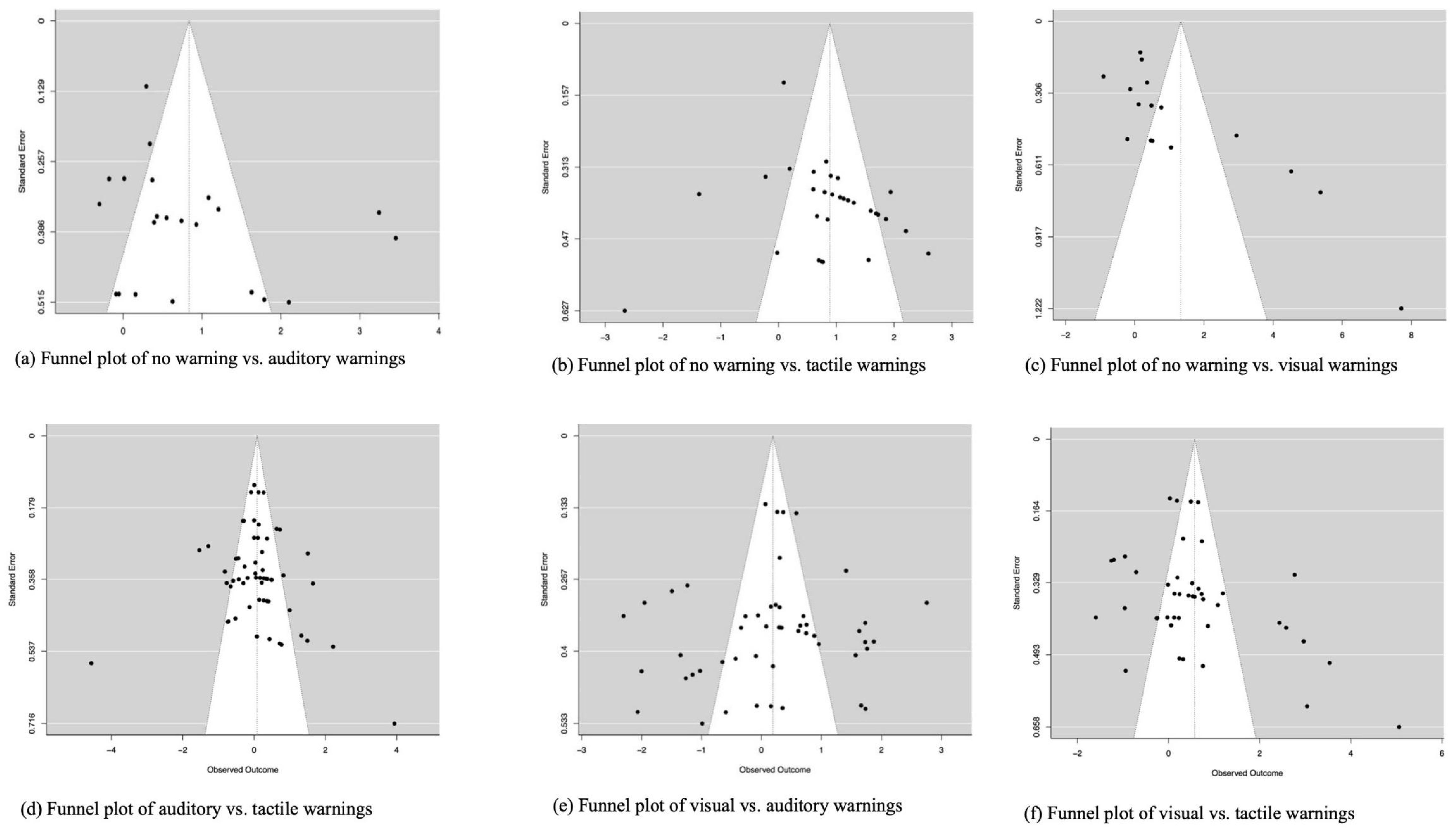

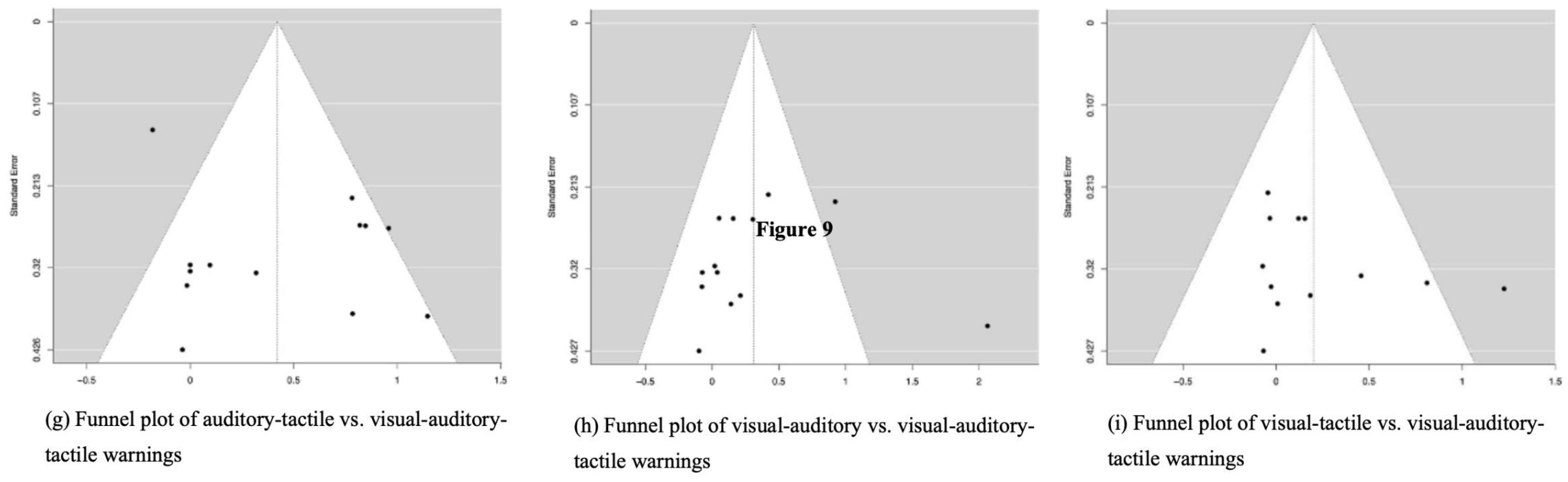
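Effect sizes in this meta-analysis are expressed as Hedges' g [92,93]. A minimal sketch of the standard two-independent-groups computation follows. It assumes per-condition summary statistics (mean, SD, n) and a sign convention in which a positive g means faster responses with the warning; the function name and the example numbers are hypothetical. Repeated-measures designs need a different standardizer and variance, so this is not necessarily the exact formula the authors applied to every study.

```python
import math


def hedges_g(mean_ctrl, sd_ctrl, n_ctrl, mean_warn, sd_warn, n_warn):
    """Bias-corrected standardized mean difference (Hedges' g) and its variance.

    Sign convention (an assumption of this sketch): positive g means the
    warning condition produced a shorter mean response time than the control.
    Formulas assume two independent groups.
    """
    df = n_ctrl + n_warn - 2
    pooled_sd = math.sqrt(((n_ctrl - 1) * sd_ctrl**2 + (n_warn - 1) * sd_warn**2) / df)
    d = (mean_ctrl - mean_warn) / pooled_sd          # Cohen's d
    j = 1 - 3 / (4 * (n_ctrl + n_warn) - 9)          # small-sample correction, 1 - 3/(4*df - 1)
    var_d = (n_ctrl + n_warn) / (n_ctrl * n_warn) + d**2 / (2 * (n_ctrl + n_warn))
    return j * d, j**2 * var_d


# Hypothetical brake response times (ms): control vs. warning, 20 drivers per group.
g, var_g = hedges_g(1000, 200, 20, 900, 200, 20)
print(round(g, 3), round(var_g, 4))
```

The correction factor j shrinks Cohen's d slightly, which matters most for the small samples typical of driving-simulator studies.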

2.4. Publication Bias
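Egger's regression test [100,101] is one common check for funnel-plot asymmetry. The sketch below shows the classic single-level version: the standardized effect (g / SE) is regressed on precision (1 / SE) by ordinary least squares, and an intercept far from zero suggests small-study effects such as publication bias. It ignores the dependence among effect sizes from the same study that the robust variance estimation framework [96] handles, so it is an illustration under that simplifying assumption rather than the article's exact procedure. The function name and the example data are hypothetical.

```python
import math


def egger_test(effects, std_errors):
    """Classic Egger regression: standardized effect on precision (OLS).

    Returns (intercept, standard error of intercept, t statistic, df).
    Compare t with a t distribution on df degrees of freedom.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three effect sizes")
    x = [1 / se for se in std_errors]                      # precision
    y = [g / se for g, se in zip(effects, std_errors)]     # standardized effect
    x_bar = sum(x) / k
    y_bar = sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must vary across effect sizes")
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r**2 for r in residuals) / (k - 2)        # residual variance
    se_intercept = math.sqrt(sigma2 * (1 / k + x_bar**2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t_stat, k - 2


# Hypothetical effect sizes and standard errors, constructed so the intercept is 1.0.
b0, se_b0, t, df = egger_test([0.95, 0.575, 0.64, 0.5], [0.5, 0.25, 0.2, 0.1])
print(round(b0, 3), round(t, 2), df)
```

With only a handful of effect sizes per comparison, as in several rows of the summary table, the test has little power, so a non-significant intercept is weak evidence that bias is absent.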

3. Results

3.1. Effectiveness of Unimodal Warnings

3.2. Comparisons Between Unimodal Warnings

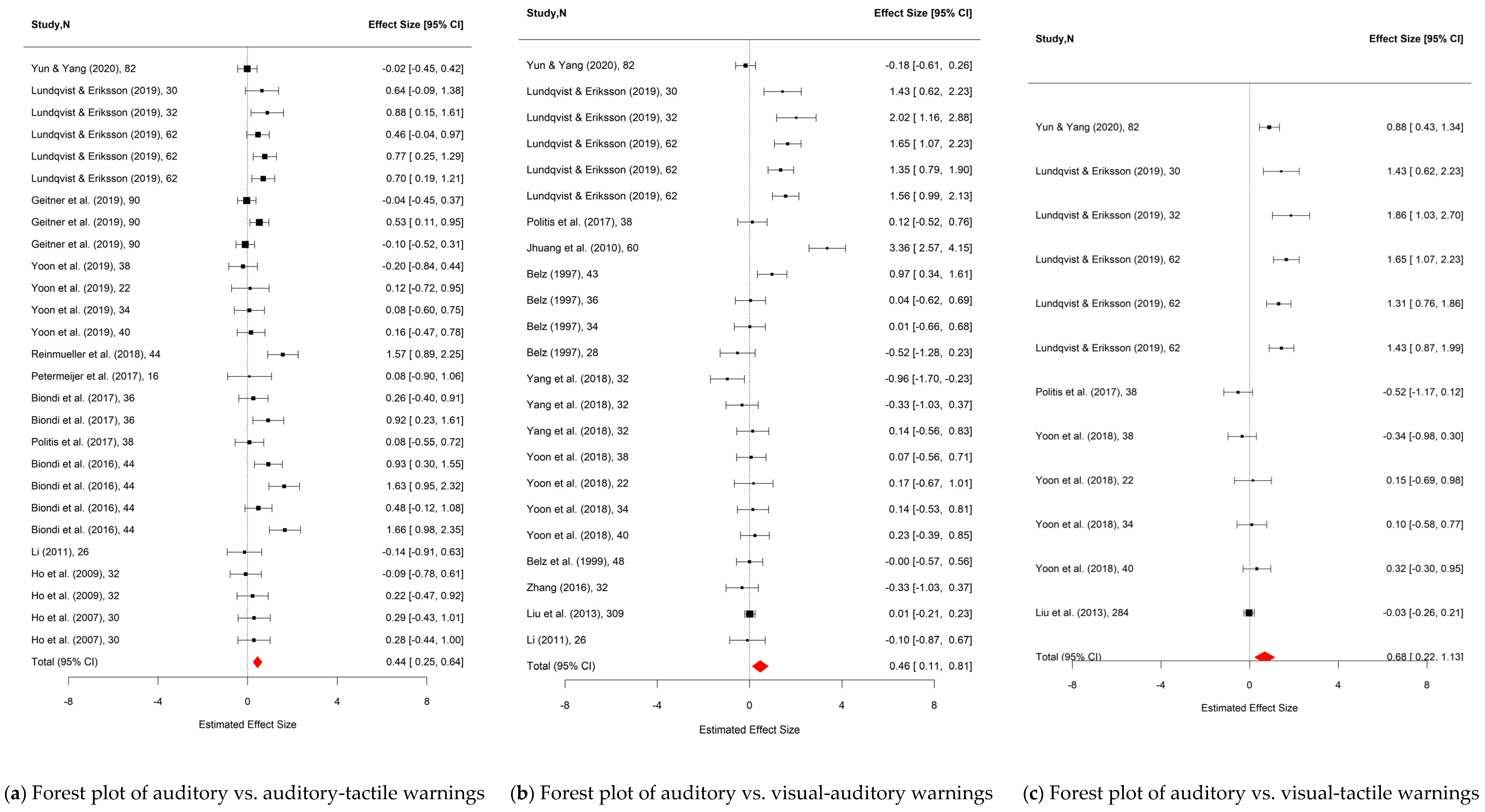

3.3. Comparisons Between Unimodal and Multimodal Warnings

3.3.1. Unimodal Versus Bimodal Warnings

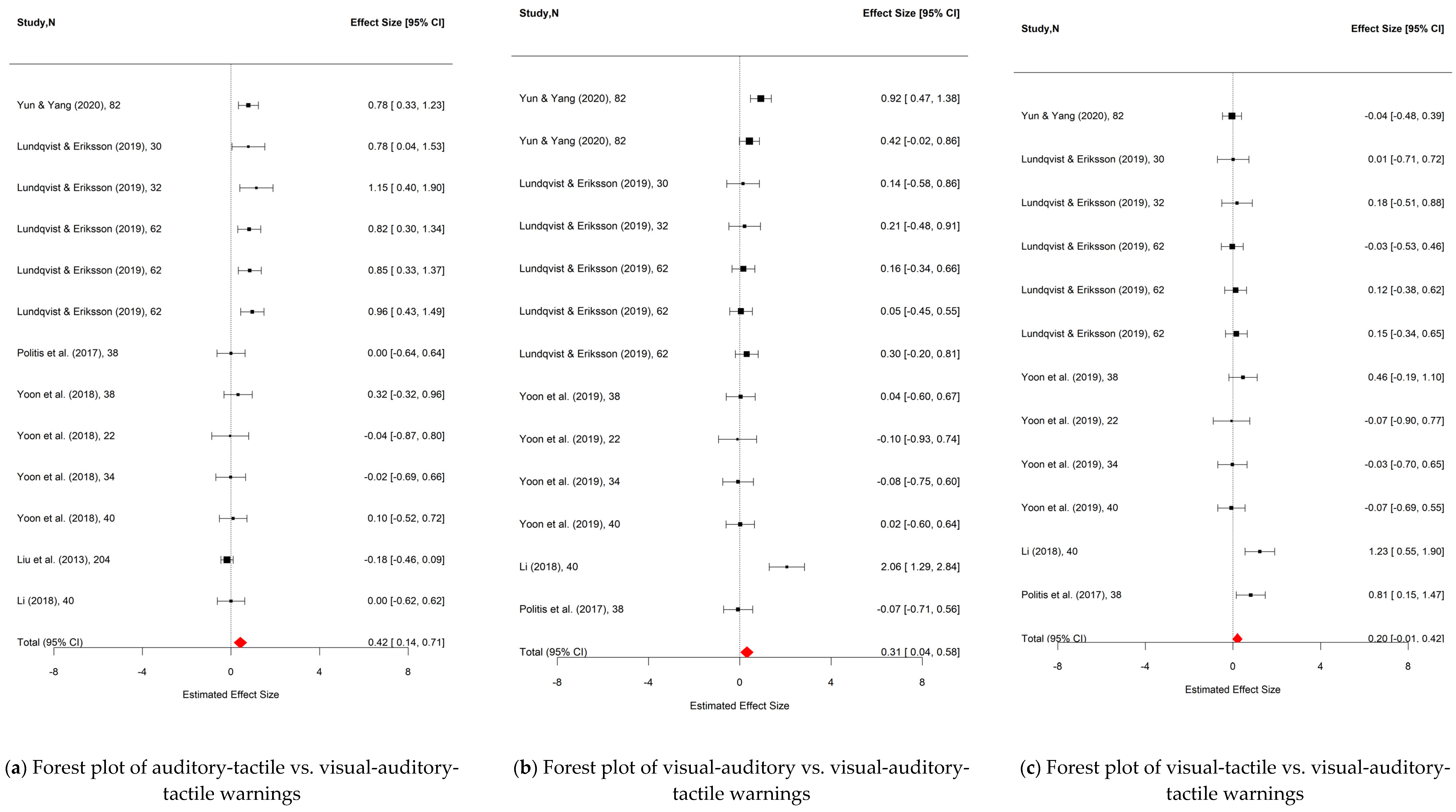

3.3.2. Bimodal Versus Trimodal Warnings

4. Discussion

4.1. The Benefit of Unimodal Warnings

4.2. Tactile Warnings as the Best Potential Unimodal Warnings

4.3. MIE of Driving Warnings Reveals a Positive Trend with the Number of Modalities

4.3.1. Comparisons Between Unimodal and Bimodal Warnings

4.3.2. Comparisons Between Bimodal and Trimodal Warnings

4.3.3. MIE in Driving Warning Systems

4.4. Implications

4.5. Limitations and Future Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Road Traffic Injuries. 2022. Available online: https://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries (accessed on 6 February 2023).

- National Highway Traffic Safety Administration. 2020 Fatality Data Show Increased Traffic Fatalities during Pandemic. 2021. Available online: https://www.nhtsa.gov/press-releases/2020-fatality-data-show-increased-traffic-fatalities-during-pandemic (accessed on 6 February 2023).

- Edmonds, E. AAA Recommends Common Naming for ADAS Technology. Newsroom. 2019. Available online: https://newsroom.aaa.com/2019/01/common-naming-for-adas-technology (accessed on 6 February 2023).

- Hegeman, G.; Brookhuis, K.; Hoogendoorn, S. Opportunities of advanced driver assistance systems towards overtaking. Eur. J. Transp. Infrastruct. Res. 2005, 5, 281–296. [Google Scholar] [CrossRef]

- Van der Heijden, R.; van Wees, K. Introducing advanced driver assistance systems: Some legal issues. Eur. J. Transp. Infrastruct. Res. 2001, 1, 1–18. [Google Scholar] [CrossRef]

- Horowitz, A.D.; Dingus, T.A. Warning signal design: A key human factors issue in an in-vehicle front-to-rear-end collision warning system. In Proceedings of the Human Factors Society Annual Meeting; Sage CA: Atlanta, GA, USA, 1992; Volume 36, pp. 1011–1013. [Google Scholar] [CrossRef]

- Gaspar, J.G.; Brown, T.L. Matters of state: Examining the effectiveness of lane departure warnings as a function of driver distraction. Transp. Res. Part F Traffic Psychol. Behav. 2020, 71, 1–7. [Google Scholar] [CrossRef]

- Lundqvist, L.-M.; Eriksson, L. Age, cognitive load, and multimodal effects on driver response to directional warning. Appl. Ergon. 2019, 76, 147–154. [Google Scholar] [CrossRef] [PubMed]

- Scott, J.J.; Gray, R. A comparison of tactile, visual, and auditory warnings for rear-end collision prevention in simulated driving. Hum. Factors 2008, 50, 264–275. [Google Scholar] [CrossRef] [PubMed]

- Yun, H.; Yang, J.H. Multimodal warning design for take-over request in conditionally automated driving. Eur. Transp. Res. Rev. 2020, 12, 34. [Google Scholar] [CrossRef]

- Spence, C.; Ho, C. Tactile and multisensory spatial warning signals for drivers. IEEE Trans. Haptics 2008, 1, 121–129. [Google Scholar] [CrossRef] [PubMed]

- Mack, A.; Rock, I. Inattentional Blindness; MIT Press: Cambridge, MA, USA, 1998. [Google Scholar]

- Rock, I.; Linnett, C.M.; Grant, P.; Mack, A. Perception without attention: Results of a new method. Cogn. Psychol. 1992, 24, 502–534. [Google Scholar] [CrossRef]

- Simons, D.J.; Chabris, C.F. Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception 1999, 28, 1059–1074. [Google Scholar] [CrossRef] [PubMed]

- Macdonald, J.S.P.; Lavie, N. Visual perceptual load induces inattentional deafness. Atten. Percept. Psychophys. 2011, 73, 1780–1789. [Google Scholar] [CrossRef] [PubMed]

- Scheer, M.; Buelthoff, H.H.; Chuang, L.L. Auditory task irrelevance: A basis for inattentional deafness. Hum. Factors 2018, 60, 428–440. [Google Scholar] [CrossRef]

- Sabic, E.; Chen, J.; MacDonald, J.A. Toward a better understanding of in-vehicle auditory warnings and background noise. Hum. Factors 2021, 63, 312–335. [Google Scholar] [CrossRef] [PubMed]

- Gaffary, Y.; Lécuyer, A. The use of haptic and tactile information in the car to improve driving safety: A review of current technologies. Front. ICT 2018, 5, 5. [Google Scholar] [CrossRef]

- Biral, F.; Da Lio, M.; Lot, R.; Sartori, R. An intelligent curve warning system for powered two wheel vehicles. Eur. Transp. Res. Rev. 2010, 3, 147–156. [Google Scholar] [CrossRef]

- Lee, J.D.; Hoffman, J.D.; Hayes, E. Collision warning design to mitigate driver distraction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; ACM Press: New York, NY, USA, 2004; pp. 65–72. [Google Scholar] [CrossRef]

- Zhu, A.; Choi, A.; Ma, K.X.; Cao, S.; Yao, H.; Wu, J.; He, J. A tactile toolkit and driving simulation platform to evaluate the effectiveness of collision warning systems for drivers. J. Vis. Exp. 2020, 166, 61408. [Google Scholar] [CrossRef]

- Meng, F.; Spence, C. Tactile warning signals for in-vehicle systems. Accid. Anal. Prev. 2015, 75, 333–346. [Google Scholar] [CrossRef] [PubMed]

- Diederich, A.; Colonius, H. Bimodal and trimodal multisensory enhancement: Effects of stimulus onset and intensity on reaction time. Atten. Percept. Psychophys. 2004, 66, 1388–1404. [Google Scholar] [CrossRef] [PubMed]

- Hughes, H.C.; Reuter-Lorenz, P.A.; Nozawa, G.; Fendrich, R. Visual-auditory interactions in sensorimotor processing: Saccades versus manual responses. J. Exp. Psychol. Hum. Percept. Perform. 1994, 20, 131–153. [Google Scholar] [CrossRef] [PubMed]

- Lunn, J.; Sjoblom, A.; Ward, J.; Soto-Faraco, S.; Forster, S. Multisensory enhancement of attention depends on whether you are already paying attention. Cognition 2019, 187, 38–49. [Google Scholar] [CrossRef] [PubMed]

- Pannunzi, M.; Perez-Bellido, A.; Pereda-Banos, A.; Lopez-Moliner, J.; Deco, G.; Soto-Faraco, S. Deconstructing multisensory enhancement in detection. J. Neurophysiol. 2015, 113, 1800–1818. [Google Scholar] [CrossRef] [PubMed]

- Politis, I.; Brewster, S.; Pollick, F. Using multimodal displays to signify critical handovers of control to distracted autonomous car drivers. Int. J. Mob. Hum. Comput. Interact. 2017, 9, 1–16. [Google Scholar] [CrossRef]

- Yang, Z.; Shi, J.L.; Zhang, Y.; Wang, D.M.; Li, H.T.; Wu, C.X.; Zhang, Y.Q.; Wan, J.Y. Head-up display graphic warning system facilitates simulated driving performance. Int. J. Hum.–Comput. Interact. 2019, 35, 796–803. [Google Scholar] [CrossRef]

- Yoon, S.H.; Kim, Y.W.; Ji, Y.G. The effects of takeover request modalities on highly automated car control transitions. Accid. Anal. Prev. 2019, 123, 150–158. [Google Scholar] [CrossRef]

- Kinchla, R.A. Detecting target elements in multielement arrays: A confusability model. Atten. Percept. Psychophys. 1974, 15, 149–158. [Google Scholar] [CrossRef]

- Miller, J. Statistical facilitation and the redundant signals effect: What are race and coactivation models? Atten. Percept. Psychophys. 2016, 78, 516–519. [Google Scholar] [CrossRef] [PubMed]

- Meredith, M.A.; Stein, B.E. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J. Neurophysiol. 1986, 56, 640–662. [Google Scholar] [CrossRef] [PubMed]

- Stein, B.E.; Meredith, M.A. The Merging of the Senses; MIT Press: Cambridge, MA, USA, 1993. [Google Scholar]

- Marks, L.E. Multimodal perception. In Perceptual Coding; Carterette, E.C., Friedman, M.P., Eds.; Academic Press, Inc.: New York, NY, USA, 1978; pp. 321–339. [Google Scholar] [CrossRef]

- Peiffer, A.M.; Mozolic, J.L.; Hugenschmidt, C.E.; Laurienti, P.J. Age-related multisensory enhancement in a simple audiovisual detection task. NeuroReport 2007, 18, 1077–1081. [Google Scholar] [CrossRef] [PubMed]

- Ho, C.; Reed, N.; Spence, C. Multisensory in-car warning signals for collision avoidance. Hum. Factors 2007, 49, 1107–1114. [Google Scholar] [CrossRef]

- Jeong, H.; Green, P. Forward Collision Warning Modality and Content: A Summary of Human Factors Experiments; Technical Report UMTRI-2012-35; University of Michigan Transportation Research Institute: Ann Arbor, MI, USA, 2012; Available online: https://deepblue.lib.umich.edu/bitstream/handle/2027.42/134038/103247.pdf (accessed on 6 February 2023).

- Yerkes, R.M.; Dodson, J.D. The relation of strength of stimulus to rapidity of habit-formation. J. Comp. Neurol. Psychol. 1908, 18, 459–482. [Google Scholar] [CrossRef]

- Belz, S.M.; Robinson, G.S.; Casali, J.G. A new class of auditory warning signals for complex systems: Auditory icons. Hum. Factors 1999, 41, 608–618. [Google Scholar] [CrossRef] [PubMed]

- Ferrier, D. The Functions of the Brain; Smith, Elder, and Company: London, UK, 1886. [Google Scholar]

- Geitner, C.; Biondi, F.; Skrypchuk, L.; Jennings, L.; Birrell, S. The comparison of auditory, tactile, and multimodal warnings for the effective communication of unexpected events during an automated driving scenario. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 23–33. [Google Scholar] [CrossRef]

- Atwood, J.R.; Guo, F.; Blanco, M. Evaluate driver response to active warning system in level-2 automated vehicles. Accid. Anal. Prev. 2019, 128, 132–138. [Google Scholar] [CrossRef] [PubMed]

- Zeeb, K.; Buchner, A.; Schrauf, M. Is take-over time all that matters? The impact of visual-cognitive load on driver take-over quality after conditionally automated driving. Accid. Anal. Prev. 2016, 92, 230–239. [Google Scholar] [CrossRef] [PubMed]

- Thalheimer, W.; Cook, S. How to calculate effect sizes from published research: A simplified methodology. Work.-Learn. Res. 2002, 1, 1–9. [Google Scholar]

- Cohen, J. A power primer. Psychol. Bull. 1992, 112, 155–159. [Google Scholar] [CrossRef] [PubMed]

- Brown, S.B. Effects of Haptic and Auditory Warnings on Driver Intersection Behavior and Perception. Ph.D. Thesis, Virginia Polytechnic Institute and State University, Blacksburg, VA, USA, 2005. [Google Scholar]

- Reinmueller, K.; Koehler, L.; Steinhauser, M. Adaptive warning signals adjusted to driver passenger conversation: Impact of system awareness on behavioral adaptations. Transp. Res. Part F Traffic Psychol. Behav. 2018, 58, 242–252. [Google Scholar] [CrossRef]

- Ruscio, D.; Ciceri, M.R.; Biassoni, F. How does a collision warning system shape driver’s brake response time? The influence of expectancy and automation complacency on real-life emergency braking. Accid. Anal. Prev. 2015, 77, 72–81. [Google Scholar] [CrossRef]

- Ho, C.; Gray, R.; Spence, C. Reorienting driver attention with dynamic tactile cues. IEEE Trans. Haptics 2013, 7, 86–94. [Google Scholar] [CrossRef] [PubMed]

- Lylykangas, J.; Surakka, V.; Salminen, K.; Farooq, A.; Raisamo, R. Responses to visual, tactile and visual–tactile forward collision warnings while gaze on and off the road. Transp. Res. Part F Traffic Psychol. Behav. 2016, 40, 68–77. [Google Scholar] [CrossRef]

- Wu, X.; Boyle, L.N.; Marshall, D.; O’Brien, W. The effectiveness of auditory forward collision warning alerts. Transp. Res. Part F Traffic Psychol. Behav. 2018, 59, 164–178. [Google Scholar] [CrossRef]

- Zhang, Y. Study on the Influence of Head-up Display Graphic Warning Display Methods on Simulated Driving. Master’s Thesis, Zhejiang Sci-Tech University, Hangzhou, China, 2017. [Google Scholar]

- Ahtamad, M.; Gray, R.; Ho, C.; Reed, N.; Spence, C. Informative collision warnings: Effect of modality and driver age. In Proceedings of the Eighth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Salt Lake City, UT, USA, 22–25 June 2015; University of Iowa: Iowa City, IA, USA, 2015; Volume 8. [Google Scholar]

- Ahtamad, M.; Spence, C.; Ho, C.; Gray, R. Warning drivers about impending collisions using vibrotactile flow. IEEE Trans. Haptics 2015, 9, 134–141. [Google Scholar] [CrossRef] [PubMed]

- Aksan, N.; Sager, L.; Hacker, S.; Marini, R.; Dawson, J.; Anderson, S.; Rizzo, M. Forward collision warning: Clues to optimal timing of advisory warnings. SAE Int. J. Transp. Saf. 2016, 4, 107–112. [Google Scholar] [CrossRef]

- Fitch, G.M.; Hankey, J.M.; Kleiner, B.M.; Dingus, T.A. Driver comprehension of multiple haptic seat alerts intended for use in an integrated collision avoidance system. Transp. Res. Part F Traffic Psychol. Behav. 2011, 14, 278–290. [Google Scholar] [CrossRef]

- Gray, R.; Ho, C.; Spence, C. A comparison of different informative vibrotactile forward collision warnings: Does the warning need to be linked to the collision event? PLoS ONE 2014, 9, e87070. [Google Scholar] [CrossRef] [PubMed]

- Ho, C.; Reed, N.; Spence, C. Assessing the effectiveness of “intuitive” vibrotactile warning signals in preventing front-to-rear-end collisions in a driving simulator. Accid. Anal. Prev. 2006, 38, 988–996. [Google Scholar] [CrossRef] [PubMed]

- Lewis, B.A.; Eisert, J.L.; Baldwin, C.L. Validation of essential acoustic parameters for highly urgent in-vehicle collision warnings. Hum. Factors 2018, 60, 248–261. [Google Scholar] [CrossRef] [PubMed]

- Li, J.Z. Study on the Stress Response of Drivers under Different Warning Methods. Master’s Thesis, South China University of Technology, Guangzhou, China, 2018. [Google Scholar]

- Meng, F.; Gray, R.; Ho, C.; Ahtamad, M.; Spence, C. Dynamic vibrotactile signals for forward collision avoidance warning systems. Hum. Factors 2015, 57, 329–346. [Google Scholar] [CrossRef] [PubMed]

- Mohebbi, R.; Gray, R.; Tan, H.Z. Driver reaction time to tactile and auditory rear-end collision warnings while talking on a cell phone. Hum. Factors 2009, 51, 102–110. [Google Scholar] [CrossRef] [PubMed]

- Jhuang, J.W.; Liu, Y.C.; Ou, Y.K. A comparison study of five in-vehicle warning information displays with or without spatial compatibility. In Proceedings of the 2010 IEEE International Conference on Industrial Engineering and Engineering Management, Macao, China, 7–10 December 2010; pp. 827–831. [Google Scholar]

- Li, J.W. Research on Driver Fatigue State and Adaptive Warning Method of Traffic Environment. Ph.D. Thesis, Tsinghua University, Beijing, China, 2011. [Google Scholar]

- Liu, Y.J.; Jin, L.S.; Zheng, Y.; Li, Y.J. Research on methods of warning trigger for vehicle active safety warning system. Automot. Technol. 2013, 3, 33–37. [Google Scholar]

- Shi, J.L. Research on the Effectiveness of Head-up-Displays Warning System Based on User Research and Simulated Driving. Master’s Thesis, Zhejiang Sci-Tech University, Hangzhou, China, 2020. [Google Scholar]

- Schwarz, F.; Fastenmeier, W. Augmented reality warnings in vehicles: Effects of modality and specificity on effectiveness. Accid. Anal. Prev. 2017, 101, 55–66. [Google Scholar] [CrossRef]

- Xue, Q.W. Characteristics of Driver Rear-End Collision Avoidance Behavior and Effectiveness of Different Warning Methods Based on Driving Simulation. Ph.D. Thesis, Beijing Jiaotong University, Beijing, China, 2019. [Google Scholar]

- Zhang, Y.; Yan, X.; Li, X.; Wu, J. Changes of drivers’ visual performances when approaching a signalized intersection under different collision avoidance warning conditions. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 584–597. [Google Scholar] [CrossRef]

- Belz, S.M. A Simulator-Based Investigation of Visual, Auditory, and Mixed-Modality Display of Vehicle Dynamic State Information to Commercial Motor Vehicle Operators. Ph.D. Thesis, Virginia Polytechnic Institute and State University, Blacksburg, VA, USA, 1997. [Google Scholar]

- Girbes, V.; Armesto, L.; Dols, J.; Tornero, J. An active safety system for low-speed bus braking assistance. IEEE Trans. Intell. Transp. Syst. 2016, 18, 377–387. [Google Scholar] [CrossRef]

- McKeown, D.; Isherwood, S.; Conway, G. Auditory displays as occasion setters. Hum. Factors 2010, 52, 54–62. [Google Scholar] [CrossRef] [PubMed]

- Yan, X.; Xue, Q.; Ma, L.; Xu, Y. Driving-simulator-based test on the effectiveness of auditory red-light running vehicle warning system based on time-to-collision sensor. Sensors 2014, 14, 3631–3651. [Google Scholar] [CrossRef]

- Biondi, F.; Strayer, D.L.; Rossi, R.; Gastaldi, M.; Mulatti, C. Advanced driver assistance systems: Using multimodal redundant warnings to enhance road safety. Appl. Ergon. 2017, 58, 238–244. [Google Scholar] [CrossRef]

- Murata, A.; Kuroda, T.; Karwowski, W. Effects of auditory and tactile warning on response to visual hazards under a noisy environment. Appl. Ergon. 2017, 60, 58–67. [Google Scholar] [CrossRef] [PubMed]

- Lin, C.T.; Huang, T.Y.; Liang, W.C.; Chiu, T.T.; Chao, C.F.; Hsu, S.H.; Ko, L.W. Assessing effectiveness of various auditory warning signals in maintaining drivers’ attention in virtual reality-based driving environments. Percept. Mot. Ski. 2009, 108, 825–835. [Google Scholar] [CrossRef] [PubMed]

- Petermeijer, S.; Bazilinskyy, P.; Bengler, K.; De Winter, J. Take-over again: Investigating multimodal and directional TORs to get the driver back into the loop. Appl. Ergon. 2017, 62, 204–215. [Google Scholar] [CrossRef] [PubMed]

- Becker, M. Effects of Looming Auditory FCW on Brake Reaction Time under Conditions of Distraction. Master’s Thesis, Arizona State University, Tempe, AZ, USA, 2016. [Google Scholar]

- Murata, A.; Doi, T.; Karwowski, W. Enhanced performance for in-vehicle display placed around back mirror by means of tactile warning. Transp. Res. Part F Traffic Psychol. Behav. 2018, 58, 605–618. [Google Scholar] [CrossRef]

- Cao, Y.; Van Der Sluis, F.; Theune, M.; op den Akker, R.; Nijholt, A. Evaluating informative auditory and tactile cues for in-vehicle information systems. In Proceedings of the 2nd International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Pittsburgh, PA, USA, 11–12 November 2010; ACM Press: New York, NY, USA, 2010; pp. 102–109. [Google Scholar]

- Halabi, O.; Bahameish, M.A.; Al-Naimi, L.T.; Al-Kaabi, A.K. Response times for auditory and vibrotactile directional cues in different immersive displays. Int. J. Hum.–Comput. Interact. 2019, 35, 1578–1585. [Google Scholar] [CrossRef]

- Petermeijer, S.; Doubek, F.; De Winter, J. Driver response times to auditory, visual, and tactile take-over requests: A simulator study with 101 participants. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1505–1510. [Google Scholar]

- Pitts, B.J.; Sarter, N. What you don’t notice can harm you: Age-related differences in detecting concurrent visual, auditory, and tactile cues. Hum. Factors 2018, 60, 445–464. [Google Scholar] [CrossRef] [PubMed]

- Straughn, S.M.; Gray, R.; Tan, H.Z. To go or not to go: Stimulus-response compatibility for tactile and auditory pedestrian collision warnings. IEEE Trans. Haptics 2009, 2, 111–117. [Google Scholar] [CrossRef]

- Navarro, J.; Mars, F.; Forzy, J.F.; El-Jaafari, M.; Hoc, J.M. Objective and subjective evaluation of motor priming and warning systems applied to lateral control assistance. Accid. Anal. Prev. 2010, 42, 904–912. [Google Scholar] [CrossRef]

- Wu, Y.W. Research on Key Technologies of Lane Departure Assistance Driving under Human-Machine Collaboration. Ph.D. Thesis, Hunan University, Changsha, China, 2013. [Google Scholar]

- Ho, C.; Spence, C. Using peripersonal warning signals to orient a driver’s gaze. Hum. Factors 2009, 51, 539–556. [Google Scholar] [CrossRef] [PubMed]

- Biondi, F.; Leo, M.; Gastaldi, M.; Rossi, R.; Mulatti, C. How to drive drivers nuts: Effect of auditory, vibrotactile, and multimodal warnings on perceived urgency, annoyance, and acceptability. Transp. Res. Rec. 2017, 2663, 34–39. [Google Scholar] [CrossRef]

- Ho, C.; Santangelo, V.; Spence, C. Multisensory warning signals: When spatial correspondence matters. Exp. Brain Res. 2009, 195, 261–272. [Google Scholar] [CrossRef] [PubMed]

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988. [Google Scholar]

- Cumming, G.; Fidler, F.; Kalinowski, P.; Lai, P. The statistical recommendations of the American Psychological Association Publication Manual: Effect sizes, confidence intervals, and meta-analysis. Aust. J. Psychol. 2012, 64, 138–146. [Google Scholar] [CrossRef]

- Hedges, L.V. Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Stat. 1981, 6, 107–128. [Google Scholar] [CrossRef]

- Lakens, D. Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Front. Psychol. 2013, 4, 863. [Google Scholar] [CrossRef] [PubMed]

- Glen, S. Hedges’ g: Definition, Formula. Statistics How To. 2016. Available online: https://www.statisticshowto.com/hedges-g/ (accessed on 6 February 2023).

- Hedberg, E. ROBUMETA: Stata Module to Perform Robust Variance Estimation in Meta-Regression with Dependent Effect Size Estimates. Statistical Software Components, Boston College Department of Economics. 2011. Available online: https://ideas.repec.org/c/boc/bocode/s457219.html (accessed on 6 February 2023).

- Hedges, L.V.; Tipton, E.; Johnson, M.C. Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 2010, 1, 39–65. [Google Scholar] [CrossRef] [PubMed]

- Higgins, J.P.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558. [Google Scholar] [CrossRef] [PubMed]

- Erbeli, F.; Peng, P.; Rice, M. No evidence of creative benefit accompanying dyslexia: A meta-analysis. J. Learn. Disabil. 2022, 55, 242–253. [Google Scholar] [CrossRef] [PubMed]

- Lipsey, M.W.; Wilson, D.B. Practical Meta-Analysis; Sage Publications, Inc.: Thousand Oaks, CA, USA, 2001. [Google Scholar]

- Egger, M.; Davey Smith, G.; Schneider, M.; Minder, C. Bias in meta-analysis detected by a simple, graphical test. BMJ 1997, 315, 629–634. [Google Scholar] [CrossRef] [PubMed]

- Sterne, J.A.; Egger, M. Regression methods to detect publication and other bias in meta-analysis. In Publication Bias in Meta-Analysis; Rothstein, H.R., Sutton, A.J., Borenstein, M., Eds.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2006. [Google Scholar] [CrossRef]

- Owsley, C.; McGwin, G., Jr. Vision and driving. Vis. Res. 2010, 50, 2348–2361. [Google Scholar] [CrossRef] [PubMed]

- National Highway Traffic Safety Administration. Human Factors Design Guidance for Driver-Vehicle Interfaces; Report No. DOT HS 812 360; National Highway Traffic Safety Administration: Washington, DC, USA, 2016. Available online: https://www.nhtsa.gov/sites/nhtsa.gov/files/documents/812360_humanfactorsdesignguidance.pdf (accessed on 6 February 2023).

- Hirst, S.; Graham, R. The format and presentation of collision warnings. In Ergonomics and Safety of Intelligent Driver Interfaces; CRC Press: Boca Raton, FL, USA, 2020; pp. 203–219. [Google Scholar]

- Herslund, M.B.; Jørgensen, N.O. Looked-but-failed-to-see-errors in traffic. Accid. Anal. Prev. 2003, 35, 885–891. [Google Scholar] [CrossRef]

- Alain, C.; Izenberg, A. Effects of attentional load on auditory scene analysis. J. Cogn. Neurosci. 2003, 15, 1063–1073. [Google Scholar] [CrossRef] [PubMed]

- Murphy, S.; Fraenkel, N.; Dalton, P. Perceptual load does not modulate auditory distractor processing. Cognition 2013, 129, 345–355. [Google Scholar] [CrossRef] [PubMed]

- Wiese, E.E.; Lee, J.D. Auditory alerts for in-vehicle information systems: The effects of temporal conflict and sound parameters on driver attitudes and performance. Ergonomics 2004, 47, 965–986. [Google Scholar] [CrossRef] [PubMed]

- Ruscio, D.; Bos, A.J.; Ciceri, M.R. Distraction or cognitive overload? Using modulations of the autonomic nervous system to discriminate the possible negative effects of advanced assistance system. Accid. Anal. Prev. 2017, 103, 105–111. [Google Scholar] [CrossRef] [PubMed]

- Boyle, P.A.; Yu, L.; Wilson, R.S.; Leurgans, S.E.; Schneider, J.A.; Bennett, D.A. Person-specific contribution of neuropathologies to cognitive loss in old age. Ann. Neurol. 2018, 83, 74–83. [Google Scholar] [CrossRef] [PubMed]

- Howieson, D.B.; Camicioli, R.; Quinn, J.; Silbert, L.C.; Care, B.; Moore, M.M.; Dame, A.; Sexton, G.; Kaye, J.A. Natural history of cognitive decline in the old old. Neurology 2003, 60, 1489–1494. [Google Scholar] [CrossRef] [PubMed]

- Lipowski, Z.J. Sensory and information inputs overload: Behavioral effects. Compr. Psychiatry 1975, 16, 199–221. [Google Scholar] [CrossRef] [PubMed]

- Scheydt, S.; Müller Staub, M.; Frauenfelder, F.; Nielsen, G.H.; Behrens, J.; Needham, I. Sensory overload: A concept analysis. Int. J. Ment. Health Nurs. 2017, 26, 110–120. [Google Scholar] [CrossRef] [PubMed]

- Wickens, C.D. Multiple resources and performance prediction. Theor. Issues Ergon. Sci. 2002, 3, 159–177. [Google Scholar] [CrossRef]

- Okazaki, S.; Haramaki, T.; Nishino, H. A safe driving support method using olfactory stimuli. Adv. Intell. Syst. Comput. 2018, 772, 958–967. [Google Scholar] [CrossRef]

| Driving Task Descriptions | Simulation Fidelity | Reference |
|---|---|---|
| Driving task with potential collision events | Real car | Brown (2005) [46] |
| Driving task with a stimulus-response task | Real car | Reinmueller et al. (2018) [47]; Ruscio et al. (2015) [48] |
| Driving and braking task | Simulator | Geitner et al. (2019) [41]; Ho et al. (2014) [49]; Lylykangas et al. (2016) [50]; Wu et al. (2018) [51]; Zhang (2017) [52] |
| Car following | Simulator | Ahtamad et al. (2015) [53]; Ahtamad et al. (2016) [54]; Aksan et al. (2016) [55]; Fitch et al. (2011) [56]; Gaspar & Brown (2020) [7]; Gray et al. (2014) [57]; Ho et al. (2006) [58]; Lewis et al. (2018) [59]; Li (2018) [60]; Meng et al. (2015) [61]; Mohebbi et al. (2009) [62]; Scott & Gray (2008) [9]; Zhu et al. (2020) [21] |
| Driving task with a stimulus-response task | Simulator | Jhuang et al. (2010) [63]; Li (2011) [64]; Liu et al. (2013) [65]; Politis et al. (2017) [27]; Shi (2020) [66]; Schwarz & Fastenmeier (2017) [67]; Xue (2019) [68]; Zhang et al. (2019) [69] |
| Driving task with potential collision events | Simulator | Belz (1997) [70]; Belz et al. (1999) [39]; Girbes et al. (2016) [71]; McKeown et al. (2010) [72]; Yan et al. (2014) [73] |
| Braking task with lead car deceleration events | Simulator | Biondi et al. (2017) [74] |
| Lane and speed keeping while reacting to warnings or hazards | Simulator | Lundqvist & Eriksson (2019) [8]; Murata et al. (2017) [75] |
| Lane keeping | Simulator | Lin et al. (2009) [76]; Yang et al. (2019) [28] |
| Take-over request task | Simulator | Petermeijer et al. (2017) [77]; Yoon et al. (2019) [29] |
| Car following task with a secondary texting task | Simulator | Becker (2016) [78] |
| Driving task with a secondary task to detect warning signals | Simulator | Murata et al. (2018) [79] |
| Cue identification tasks with perceptual and cognitive load | Simulator | Cao et al. (2010) [80] |
| Lane change task | Simulator | Halabi et al. (2019) [81]; Petermeijer et al. (2017) [82]; Pitts & Sarter (2018) [83]; Straughn et al. (2009) [84]; Yun & Yang (2020) [10] |
| Steering task | Simulator | Navarro et al. (2010) [85]; Wu (2013) [86] |
| Head-turning response | Simulator | Ho & Spence (2009) [87] |
| Surrogate driving tasks (e.g., speeded discrimination) | Surrogate tasks | Biondi et al. (2017) [88]; Ho et al. (2006) [58]; Ho et al. (2007) [36]; Ho et al. (2009) [89] |

| Comparison Category | Pairwise Comparison | Pooled Hedges’ g (95% CI) | τ² | Fail-Safe N |
|---|---|---|---|---|
| Unimodal vs. control condition | Visual vs. control | 0.83 (−0.50, 2.16) | 0.85 | 440 |
| | Auditory vs. control | 0.98 ** (0.34, 1.61) | 0.88 | 344 |
| | Tactile vs. control | 0.77 ** (0.22, 1.32) | 0.72 | 518 |
| Unimodal vs. unimodal | Visual vs. tactile | 0.74 * (0.11, 1.37) | 0.92 | 468 |
| | Auditory vs. tactile | 0.11 (−0.40, 0.61) | 0.71 | 64 |
| | Visual vs. auditory | 0.42 (−0.12, 0.97) | 1.18 | 141 |
| Unimodal vs. bimodal | Auditory vs. auditory-tactile | 0.38 * (0.04, 0.72) | 0.20 | 211 |
| | Tactile vs. auditory-tactile | 0.46 * (0.10, 0.82) | 0.21 | 268 |
| | Auditory vs. visual-auditory | 0.37 (−0.34, 1.08) | 0.64 | 189 |
| | Tactile vs. visual-auditory | 0.39 (−0.22, 1.00) | 0.22 | 144 |
| | Auditory vs. visual-tactile | 0.38 (−0.60, 1.36) | 0.44 | 149 |
| | Tactile vs. visual-tactile | 0.36 (−0.04, 0.76) | 0.12 | 153 |
| Bimodal vs. trimodal | Visual-auditory vs. visual-auditory-tactile | 0.54 (−0.49, 1.57) | 0.48 | 67 |
| | Visual-tactile vs. visual-auditory-tactile | 0.40 (−0.29, 1.00) | 0.21 | 41 |
| | Auditory-tactile vs. visual-auditory-tactile | 0.26 (−0.25, 0.77) | 0.12 | 95 |
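Because the table reports τ² next to each pooled estimate, a rough 95% prediction interval for the effect in a new study can be derived from it. The sketch below assumes that each confidence interval is symmetric and normal-based, so the standard error can be recovered as its width divided by 2 × 1.96; robust variance estimation uses t-based small-sample corrections, so the recovered value is only approximate. The function names are hypothetical.

```python
import math

Z_95 = 1.959963984540054  # two-sided 95% quantile of the standard normal


def se_from_ci(lower, upper, z=Z_95):
    """Standard error implied by a symmetric, normal-based 95% CI."""
    return (upper - lower) / (2 * z)


def prediction_interval(g, lower, upper, tau2, z=Z_95):
    """Rough 95% prediction interval for the true effect in a new study.

    Adds the between-study variance (tau2) to the squared standard error of
    the pooled mean and uses a normal quantile; Higgins-style intervals use a
    t quantile instead, which is wider when few studies are pooled.
    """
    se = se_from_ci(lower, upper, z)
    half_width = z * math.sqrt(tau2 + se**2)
    return g - half_width, g + half_width


# Auditory vs. control row of the table above: g = 0.98, 95% CI (0.34, 1.61), tau^2 = 0.88.
low, high = prediction_interval(0.98, 0.34, 1.61, 0.88)
print(f"{low:.2f} to {high:.2f}")
```

For the auditory vs. control row this gives roughly −0.97 to 2.93: under these assumptions, the average benefit of auditory warnings is reliably positive, yet the large τ² means an individual study could plausibly show little or no benefit.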

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, A.; Ma, K.-H.; Choi, A.T.H.; Hu, D.; Hu, C.-P.; Peng, P.; He, J. The Effectiveness of Unimodal and Multimodal Warnings on Drivers’ Response Time: A Meta-Analysis. Appl. Sci. 2025, 15, 527. https://doi.org/10.3390/app15020527
