Fall Detection Based on Continuous Wave Radar Sensor Using Binarized Neural Networks

Abstract

1. Introduction

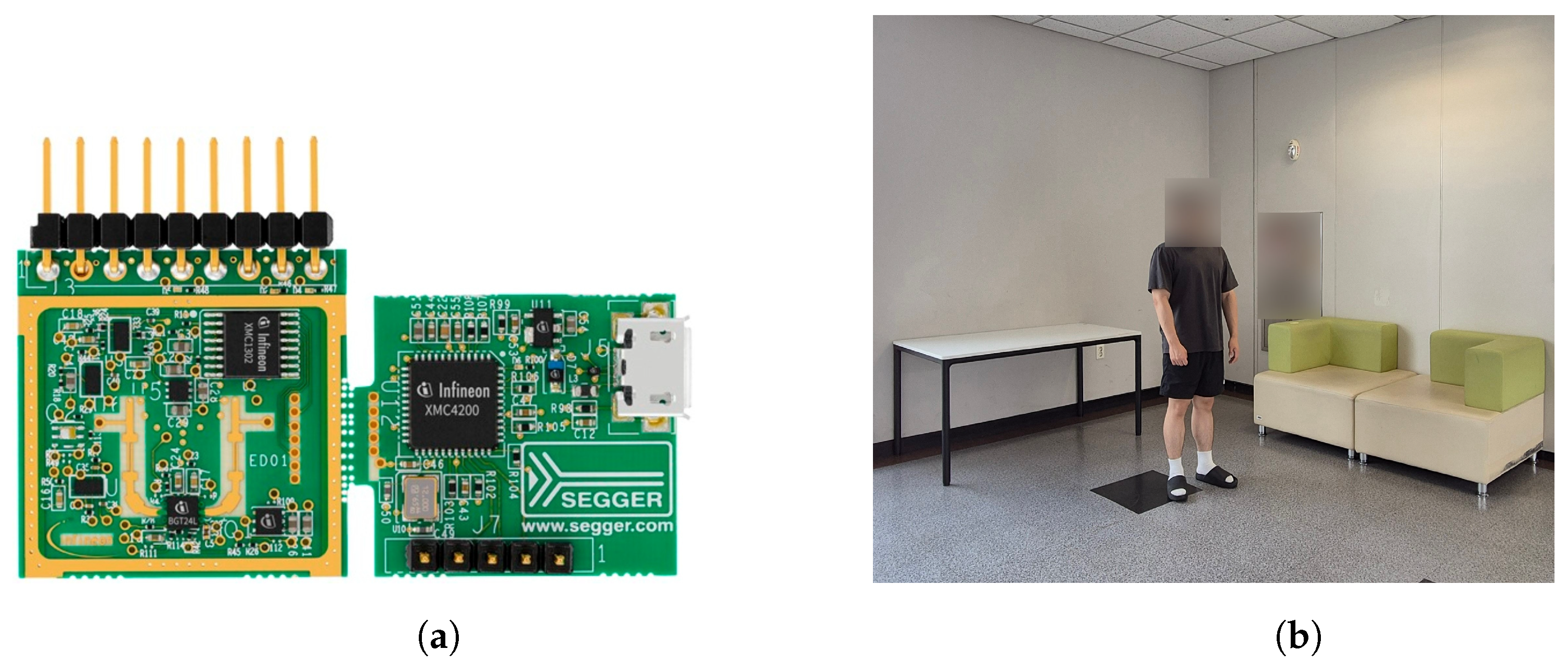

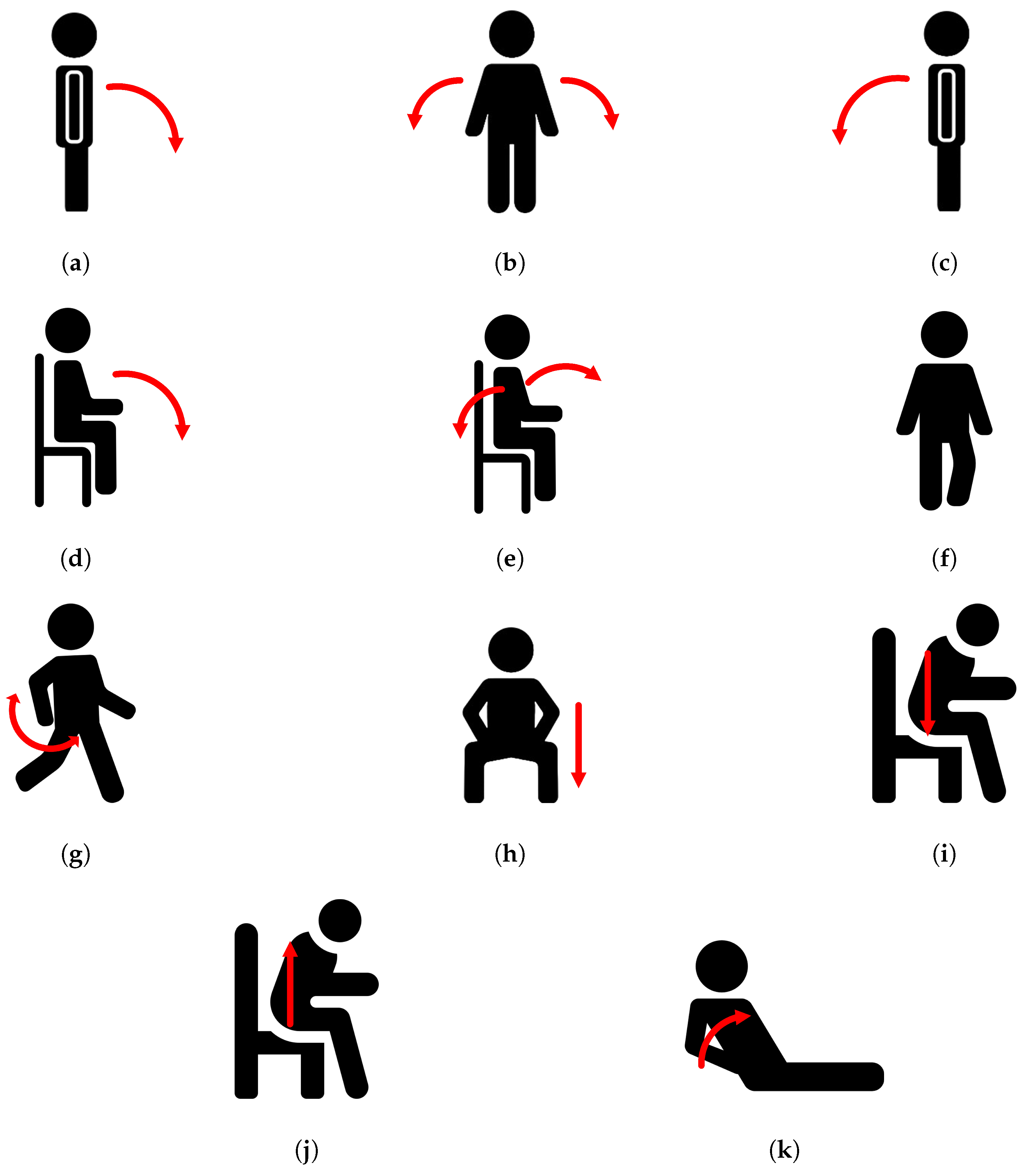

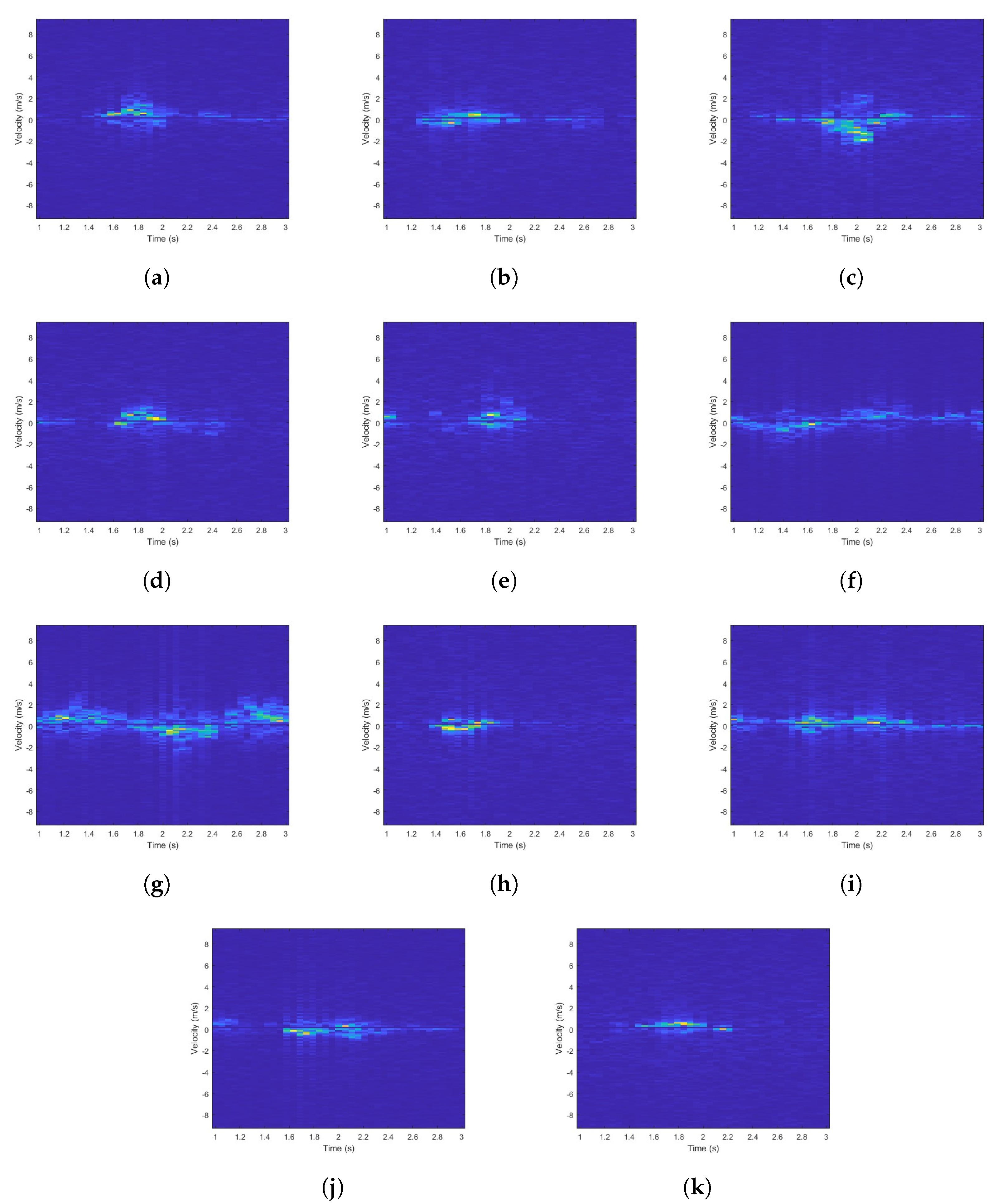

2. Experimental Setup and Measurement

3. Proposed Method

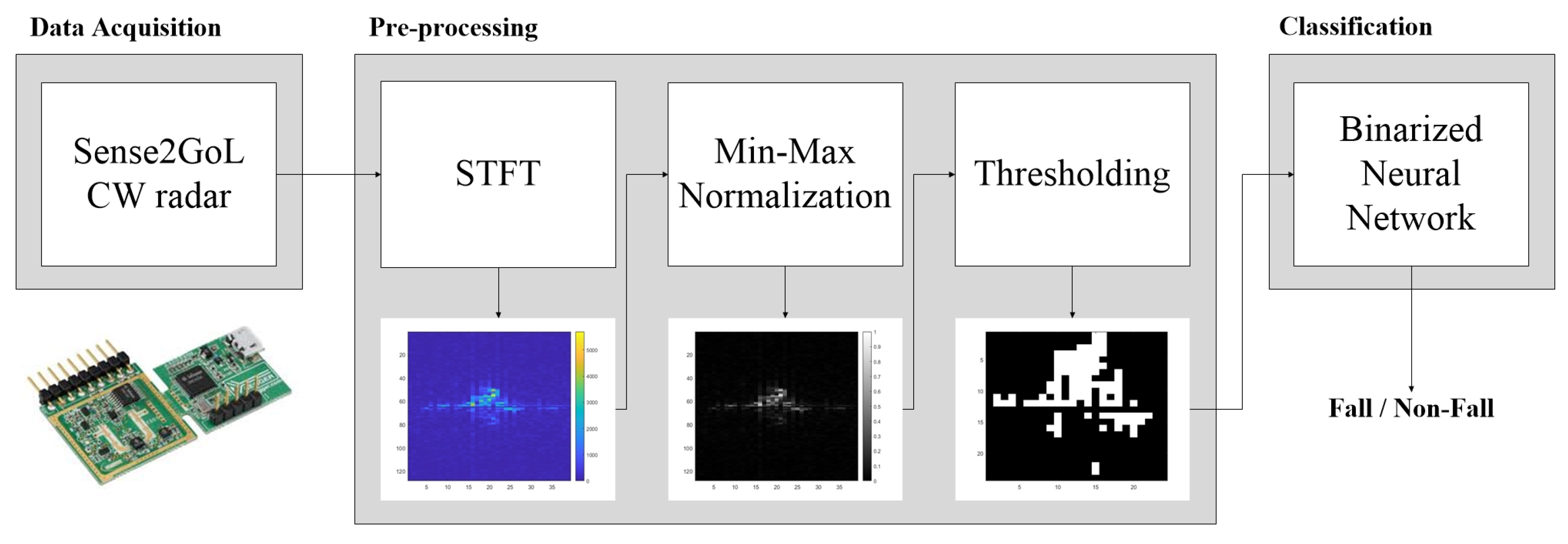

3.1. Overview

3.2. Pre-Processing

3.2.1. Short-Time Fourier Transform

3.2.2. Min–Max Normalization

3.2.3. Thresholding

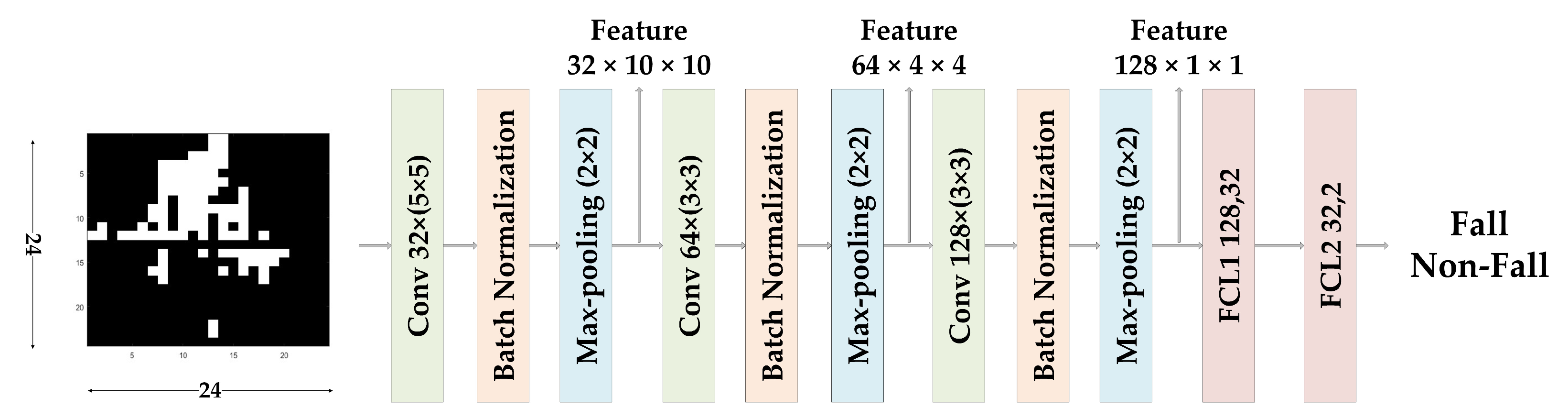

3.3. Network

4. Experiment Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Frequency | 24.125 GHz |
| Minimum speed | 0.5 km/h |
| Maximum speed | 30 km/h |
| Maximum distance | 15 m |
| Horizontal −3 dB beamwidth | 80° |
| Elevation −3 dB beamwidth | 29° |
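For orientation, the speed limits in the table above can be translated into Doppler frequencies with the standard continuous-wave radar relation $f_d = 2 v f_0 / c$. The sketch below is only an illustration of that arithmetic: the function name `doppler_shift_hz` is hypothetical, and it assumes purely radial motion and the vacuum speed of light, none of which is stated in the table.

```python
# Illustrative only: converts the radar's speed limits into Doppler shifts
# using the standard monostatic CW relation f_d = 2 * v * f0 / c.
# Assumptions (not from the article): radial motion, vacuum speed of light.
SPEED_OF_LIGHT = 299_792_458.0  # m/s
CARRIER_HZ = 24.125e9           # carrier frequency taken from the table


def doppler_shift_hz(speed_kmh: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Return the Doppler shift in Hz for a target moving at speed_kmh."""
    speed_ms = speed_kmh / 3.6  # km/h -> m/s
    return 2.0 * speed_ms * carrier_hz / SPEED_OF_LIGHT


if __name__ == "__main__":
    for label, kmh in (("minimum", 0.5), ("maximum", 30.0)):
        print(f"{label} speed {kmh} km/h -> {doppler_shift_hz(kmh):.1f} Hz")
```

Under these assumptions the listed speed range corresponds to Doppler shifts from roughly 22 Hz up to about 1.34 kHz.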

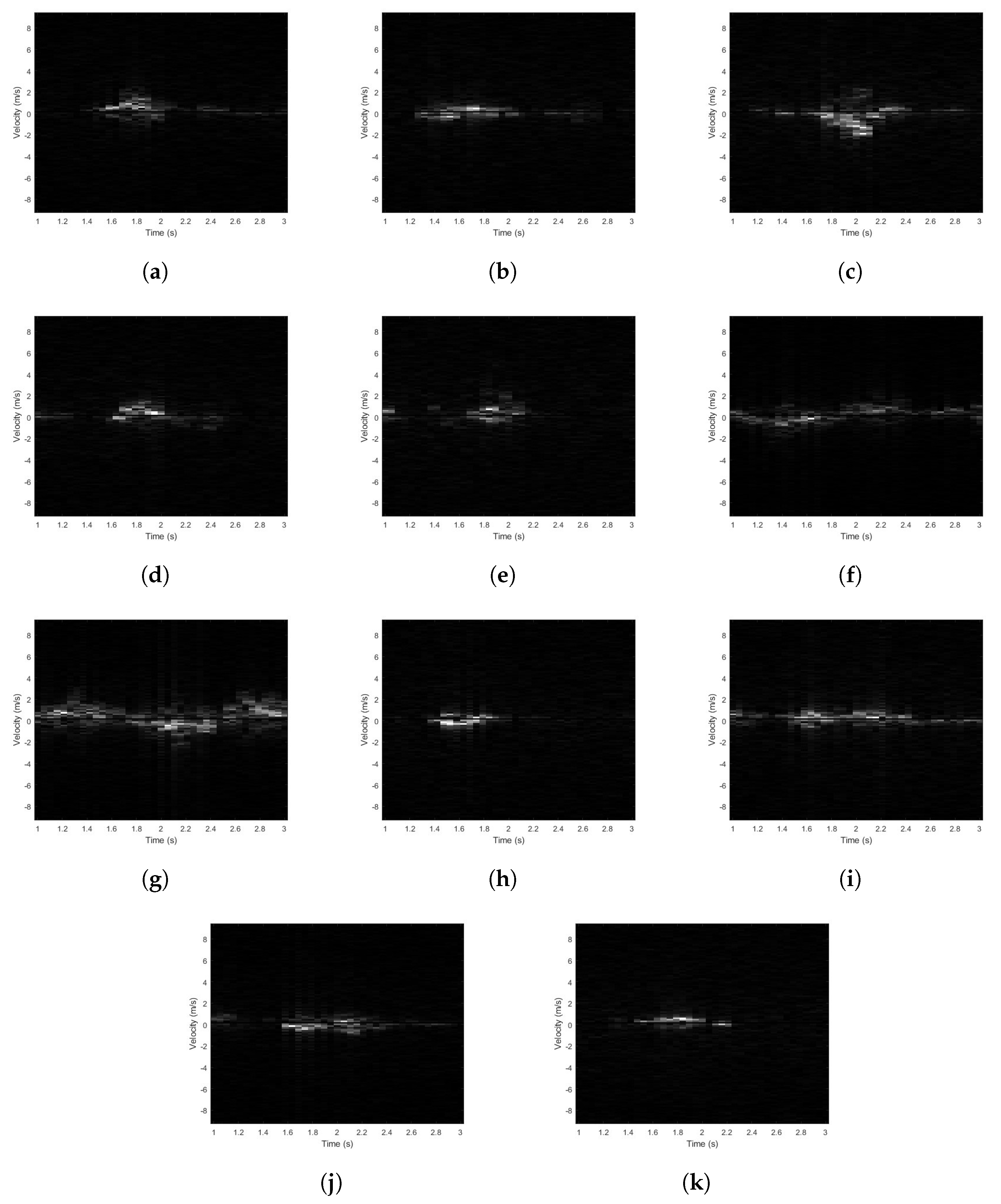

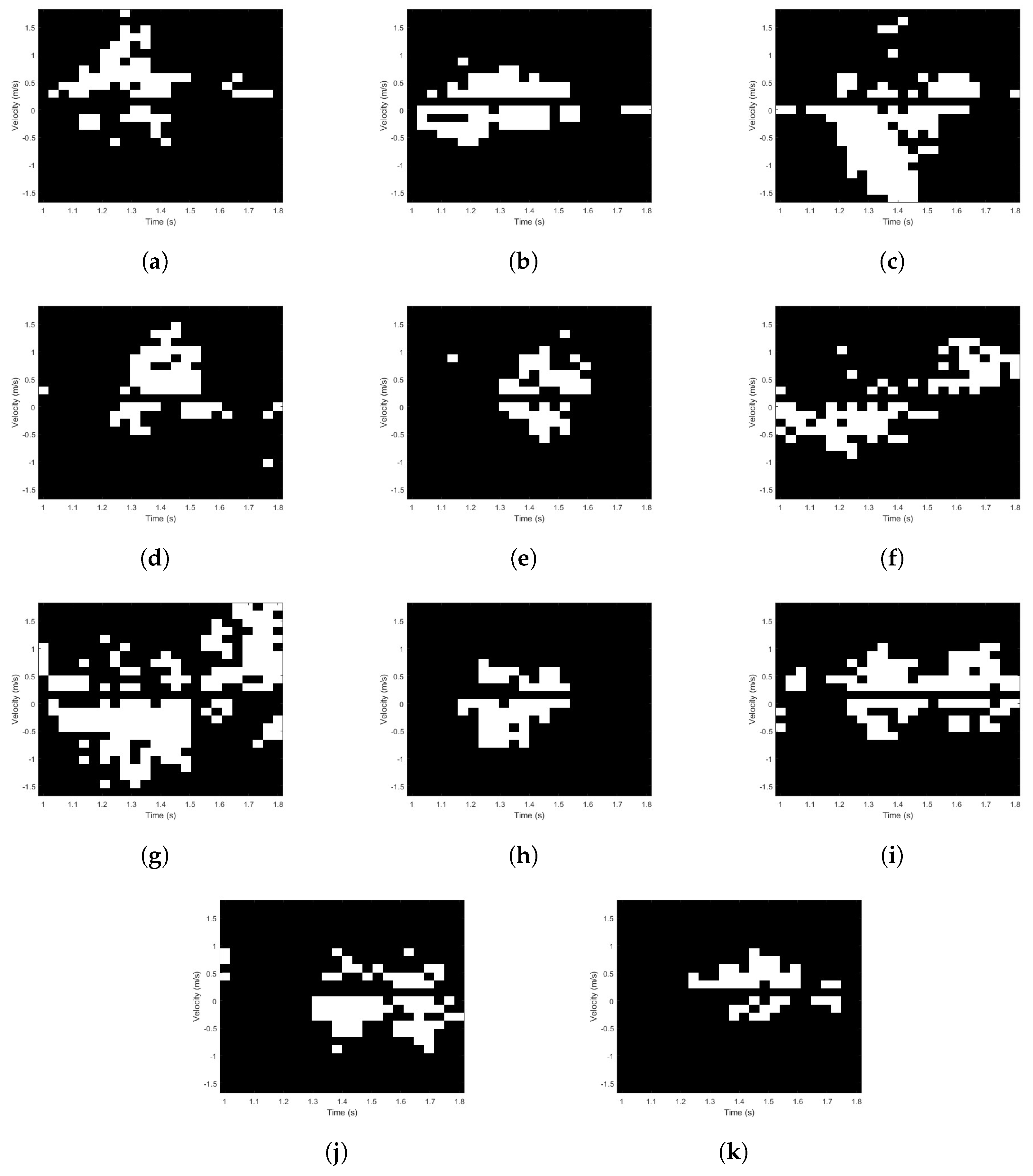

| Class | Action | No. of Data |
|---|---|---|
| Fall | (a) standing and then falling forward | 153 |
| Fall | (b) standing and then falling to the left/right | 306 |
| Fall | (c) standing and then falling backward | 153 |
| Fall | (d) sitting and then falling forward | 135 |
| Fall | (e) sitting and then falling to the left/right | 270 |
| Non-Fall | (f) walking slowly without moving arms | 145 |
| Non-Fall | (g) walking quickly while swinging arms | 145 |
| Non-Fall | (h) squatting | 265 |
| Non-Fall | (i) sitting on a chair | 190 |
| Non-Fall | (j) standing up from sitting on a chair | 190 |
| Non-Fall | (k) lying down and then lifting the upper body | 240 |

| Threshold | 0.1 | 0.125 | 0.15 | 0.175 | 0.2 |
|---|---|---|---|---|---|
| Accuracy (%) | 91.8 | 92.0 | 93.1 | 92.4 | 92.2 |
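The pre-processing headings name min–max normalization followed by thresholding, and the table above reports accuracy for thresholds between 0.1 and 0.2. A minimal sketch of those two steps is given below. It rests on assumptions not confirmed by the text available here: that normalization uses the global minimum and maximum of each spectrogram, and that a value is set to 1 when it is greater than or equal to the threshold. The function names and the toy input are hypothetical, and the preceding short-time Fourier transform step is not shown.

```python
from typing import List

Matrix = List[List[float]]


def min_max_normalize(spec: Matrix) -> Matrix:
    """Scale all values to [0, 1] using the global min and max of spec."""
    flat = [v for row in spec for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant input: avoid division by zero
        return [[0.0 for _ in row] for row in spec]
    return [[(v - lo) / (hi - lo) for v in row] for row in spec]


def binarize(spec: Matrix, threshold: float = 0.15) -> List[List[int]]:
    """Map each normalized value to 1 if >= threshold, else 0 (assumed rule)."""
    return [[1 if v >= threshold else 0 for v in row] for row in spec]


if __name__ == "__main__":
    # Made-up magnitudes standing in for a spectrogram; not measured data.
    toy = [[0.0, 2.0, 10.0], [1.0, 4.0, 20.0]]
    print(binarize(min_max_normalize(toy)))
```

The default of 0.15 is simply the threshold with the highest reported accuracy in the table (93.1%).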

Columns CL1 to FCL2 give the number of output channels of each layer.

| Network | CL1 | CL2 | CL3 | CL4 | FCL1 | FCL2 | No. of Parameters | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | 32 | 64 | - | - | 2 | - | 21,472 | 84.1 |
| 2 | 64 | 64 | - | - | 16 | 2 | 55,168 | 88.9 |
| 3 | 64 | 64 | 64 | - | 16 | 2 | 76,800 | 91.6 |
| 4 | 32 | 64 | 128 | - | 2 | - | 93,664 | 92.4 |
| 5 | 32 | 64 | 128 | - | 32 | 2 | 97,632 | 93.1 |
| 6 | 64 | 128 | 128 | - | 2 | - | 223,680 | 92.2 |
| 7 | 128 | 128 | 128 | - | 2 | - | 299,136 | 93.1 |
| 8 | 64 | 128 | 256 | - | 64 | 2 | 387,776 | 93.8 |
| 9 | 32 | 64 | 128 | 256 | 64 | 2 | 454,624 | 93.8 |

| | Predicted: Fall | Predicted: Non-Fall |
|---|---|---|
| True: Fall | 193 | 14 |
| True: Non-Fall | 17 | 228 |
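The counts in the confusion matrix above are enough to derive the usual summary metrics. The short sketch below does that arithmetic, treating Fall as the positive class. The helper name `metrics` and the choice of which metrics to report are assumptions for illustration, not something the article specifies.

```python
# Counts copied from the confusion matrix (rows: true label, columns: predicted).
TP, FN = 193, 14   # true Fall predicted as Fall / as Non-Fall
FP, TN = 17, 228   # true Non-Fall predicted as Fall / as Non-Fall


def metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Return standard binary-classification metrics as fractions in [0, 1]."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),        # sensitivity for the Fall class
        "specificity": tn / (tn + fp),
    }


if __name__ == "__main__":
    for name, value in metrics(TP, FN, FP, TN).items():
        print(f"{name}: {100 * value:.1f}%")
```

The accuracy works out to 421/452, about 93.1%, which is consistent with the 93.1% entries reported in the threshold and network tables.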


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cho, H.; Kang, S.; Sim, Y.; Lee, S.; Jung, Y. Fall Detection Based on Continuous Wave Radar Sensor Using Binarized Neural Networks. Appl. Sci. 2025, 15, 546. https://doi.org/10.3390/app15020546