Vocal Communication Between Cobots and Humans to Enhance Productivity and Safety: Review and Discussion

Abstract

1. Introduction

2. Literature Review

- Visual communications: Graphical displays, AR, and vision systems are key components of visual interfaces that enable workers to comprehend and interpret information from cobots efficiently [14,15]. Graphical displays can provide real-time feedback on cobot actions, task progress, and system status, enhancing transparency and situational awareness [16]. AR overlays digital information onto the physical workspace, offering intuitive guidance for tasks and aiding in error prevention [17]. Vision systems, equipped with cameras and sensors, enable cobots to recognize and respond to human gestures, further fostering natural and fluid interaction [18].

- Auditory communications: Human–cobot vocal communication has been a topic of intensive research in recent years [19]. Auditory cues are valuable in environments where visual attention may be divided or compromised [20]. Sound alerts, spoken instructions, and auditory feedback mechanisms contribute to effective communication between human workers and cobots [21]. For instance, audible signals can indicate the initiation or completion of a task, providing workers with real-time information without requiring constant visual focus [22]. Speech recognition technology enables cobots to understand verbal commands, fostering a more intuitive and dynamic interaction [23]. The thoughtful use of auditory interfaces between humans and cobots helps create a collaborative environment where information is conveyed promptly, enhancing overall responsiveness and coordination [24]. Several recent papers have proposed novel interfaces and platforms to facilitate this type of interaction. Rusan and Mocanu [25] introduced a framework that detects and recognizes speech messages, converting them into spoken commands for operating system instructions. Carr, Wang, and Wang [26] proposed a network-independent verbal communication platform for multi-robot systems, which can function in environments lacking network infrastructures. McMillan et al. [27] highlighted the importance of conversation as a natural method of communication between humans and robots, promoting inclusivity in human–robot interaction. Lee et al. [28] conducted a user study to understand the impact of robot attributes on team dynamics and collaboration performance, finding that vocalizing robot intentions can decrease team performance and perceived safety. Ionescu and Schlund [29] found that voice-activated cobot programming is more efficient than typing and other programming techniques. These papers collectively contribute to the development of human–robot vocal communication systems and highlight the challenges and opportunities in this field.

- Tactile communications: The incorporation of tactile feedback mechanisms enhances the haptic dimension of human–cobot collaboration [30]. Tactile interfaces, such as force sensors and haptic feedback devices, enable cobots to perceive and respond to variations in physical interactions [31]. Force sensors can detect unexpected resistance, triggering immediate cessation of movement to prevent collisions or accidents [32]. Haptic feedback devices provide physical sensations to human operators, conveying information about the cobot’s state or impending actions [33]. This tactile dimension contributes to a more nuanced and sophisticated collaboration, allowing for a greater degree of trust and coordination between human workers and cobots.

3. Main Assistive Scenarios

- Standard pick and place (known locations): There may be several different tasks, and their names and locations should be clearly defined for the human and cobot. The cobot trajectory of a given pick and place is relatively easy to compute. As an input for computing the trajectory, it only needs the coordinates in the space of the origin (pick), the destination (place), and the cobot’s end effector (the edge of the cobot’s arm). The end effector must be properly equipped for the “grasp” at the pick location and the “release” at the place location.

- Fetch distinct tool/part/material: Visual search may be needed for identifying the object and corresponding grasping strategy. On the other hand, the destination location must be either predetermined or determined based on the type of tool/part and the context (such as the operation stage or the worker’s location). Besides the need to identify the origin and destination coordinates, the trajectory computation carries a striking resemblance to the pick and place task.

- Return tool/part/material: A predetermined pickup place typically facilitates finding and picking the tool/part/material. Returning the tool to its dedicated storage location may be easy if there is only one possible location for this tool/part. However, having several identical tools may require a visual search for an empty placing location. Additionally, tool placement may require some manipulation and an aligning strategy.

- Dispose of defective tool/part/material: The cobot must identify the defective tool/part/material and plan a trajectory for grasping it. Then, it should identify the closest or correct dispose location, and plan a trajectory to reach it.

- Turn object: “Turn object” requires specifying the object, its location, the turn direction, and the turn extent as a part of a circle (e.g., half, third…tenth of a circle) or in degrees. For example, “Turn the left box clockwise three-quarters of a circle” or “Turn the left box clockwise 270 degrees”.

- Clear away: This task is required in cases where the cobot is in the path of the main activity or the human worker, and should move away to clear the path. This command must be accompanied by a direction of movement. For example, “Clear away left” turns the whole robot arm left.

- Move and align: The input to this task must include a specification of the object to move/align and the destination of the object movement. The end effector camera should identify the object and the area up to the destination, and plan the trajectory for the move.

- Drill: This task must be accompanied by the drill coordinates and orientation, and the specification of the drill bit type, drilling speed, and depth. The cobot end effector must first be equipped with a drill with the right drill bit. Then, the trajectory to the drilling location is computed. Once the drill reaches its location and orientation, the drilling operation starts. Finally, the drill returns to its initial position.

- Screw: The screw location must be defined (or predefined). The “Screw” task assumes that a screw is already in place waiting to be screwed, so a pick and place of a screw usually precedes this task. To execute this task, the cobot must first locate and fetch a screwdriver. Then, it has to move and align the screwdriver with the screw, and rotate clockwise to tighten the screw or counterclockwise to release it.

- Solder/glue-point: The location must be predefined.

- Inspect: The robot has to bring the camera to a given location. Typically, the camera is an integral part of the cobot, located near its end effector. Either the coordinates or the object to be inspected must be specified. In the latter case, dynamic analysis of the camera screenshots has to identify the object and find a good inspection point.

- Push/Pull: Care must be taken to clarify the command, as this task may be vague or ambiguous. For example, a command to push/pull the box must be accompanied by a direction and a distance: “push/pull the box left 10 cm”.

- Press/Detach: These commands come with either an object or coordinates. In the case of an object (e.g., “press a button” and “detach the handle”), the location of the object must be predefined. Otherwise, the coordinates of where to press must be specified.

- Hold (reach and grasp): The object must be identified in order to plan the reach and grasp trajectory.
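Several of the tasks above (for example, “Turn object” and “Push/Pull”) are only executable once every parameter has been spoken. As a minimal sketch of how a transcribed turn command could be checked for completeness and converted to degrees, consider the following. The function name `parse_turn`, the fraction vocabulary, and the command grammar are illustrative assumptions, not part of any cobot API or of the reviewed works.

```python
import re

# Hypothetical vocabulary: spoken fraction -> (numerator, denominator) of a circle.
FRACTIONS = {
    "half": (1, 2),
    "third": (1, 3),
    "quarter": (1, 4),
    "three-quarters": (3, 4),
    "tenth": (1, 10),
}

# Assumed grammar: "turn the <object> <direction> <N degrees | fraction of a circle>".
TURN_PATTERN = re.compile(
    r"turn the (?P<obj>.+?) (?P<dir>clockwise|counterclockwise) "
    r"(?:(?P<deg>\d+(?:\.\d+)?) degrees|(?:a )?(?P<frac>[a-z-]+) of a circle)$"
)


def parse_turn(command: str) -> dict:
    """Extract object, direction, and turn extent (in degrees) from a command."""
    text = command.strip().rstrip(".").lower()
    match = TURN_PATTERN.match(text)
    if match is None:
        # A missing object, direction, or extent makes the command ambiguous.
        raise ValueError(f"incomplete or unrecognized turn command: {command!r}")
    if match["deg"] is not None:
        degrees = float(match["deg"])
    else:
        if match["frac"] not in FRACTIONS:
            raise ValueError(f"unknown fraction: {match['frac']!r}")
        numerator, denominator = FRACTIONS[match["frac"]]
        degrees = 360.0 * numerator / denominator
    return {"object": match["obj"], "direction": match["dir"], "degrees": degrees}
```

With this sketch, both example phrasings of the turn command resolve to the same extent, while a command that omits the direction or extent is rejected so that the cobot can ask the worker to repeat it.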

4. Main Strategies for Optimizing Vocal Communication

- Workstation map: Generate a map of the workstation with location-identifying labels of various important points that the cobot arm may need to reach. Store the coordinates of each point in the cobot’s control system and hang the map in front of the worker.

- Dedicated space for placing tools and parts (for both the worker and the cobot): Dedicate a convenient place, close to the worker, for the cobot to place or take tools, parts, or materials that the worker asked for. This place could serve several tools or parts to be placed from left to right and top to bottom. Store the coordinates of the place in the cobot’s control system, and make sure the worker knows this place and understands the expected trajectory of the cobot for reaching it.

- Dedicated storage place for tool/part: Dedicate a unique storage place for each tool and for part supply that would be easy to reach for both the human worker and the cobot.

- Define names for mutual use: Make sure both the cobot and workers are using the same name for each tool and for each part.

- Predefined trajectories: Define in advance the trajectories of the cobot from the tool/part areas to the placement area, so that the worker knows what movements to expect from the cobot.
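The workstation map and the mutually agreed names can be thought of as a single lookup structure: spoken labels on one side, stored coordinates on the other. The following is a minimal sketch under that assumption. The class names, the alias mechanism, and the sample coordinates are hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MapPoint:
    label: str                       # label printed on the workstation map
    xyz: tuple[float, float, float]  # coordinates in the cobot frame (units assumed: metres)


class WorkstationMap:
    """Maps the names used by the worker to coordinates stored for the cobot."""

    def __init__(self) -> None:
        self._points: dict[str, MapPoint] = {}
        self._aliases: dict[str, str] = {}

    @staticmethod
    def _normalize(name: str) -> str:
        # Ignore case and repeated whitespace in transcribed speech.
        return " ".join(name.lower().split())

    def add_point(self, label: str, xyz: tuple[float, float, float], aliases=()) -> None:
        key = self._normalize(label)
        self._points[key] = MapPoint(label, xyz)
        for alias in aliases:
            self._aliases[self._normalize(alias)] = key

    def resolve(self, spoken_name: str) -> MapPoint:
        key = self._normalize(spoken_name)
        key = self._aliases.get(key, key)
        if key not in self._points:
            raise KeyError(f"unknown location: {spoken_name!r}")
        return self._points[key]


# Example with made-up coordinates:
workstation = WorkstationMap()
workstation.add_point("Bin A3", (0.40, -0.25, 0.10), aliases=["resistor bin"])
workstation.add_point("Tool Rack B", (0.10, 0.55, 0.30))
```

Rejecting unknown names, rather than guessing the nearest match, keeps the cobot from moving to a location the worker did not intend.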

5. Illustrative Example

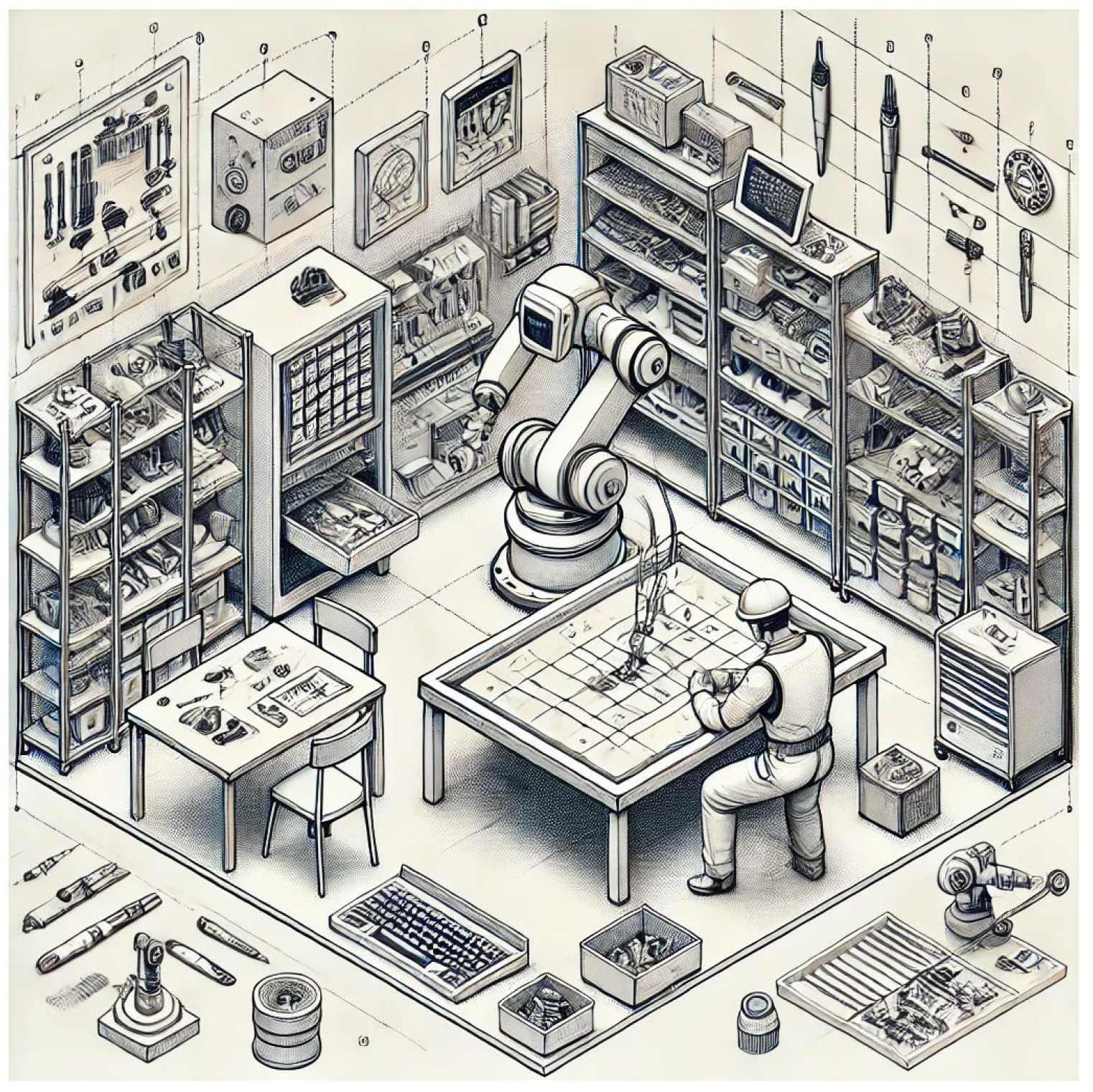

5.1. Scenario 1: Pick and Place

- Initial Setup: The workstation map is displayed, showing the locations of various components in storage bins. The cobot’s control system stores the coordinates of each bin.

- Task Execution:

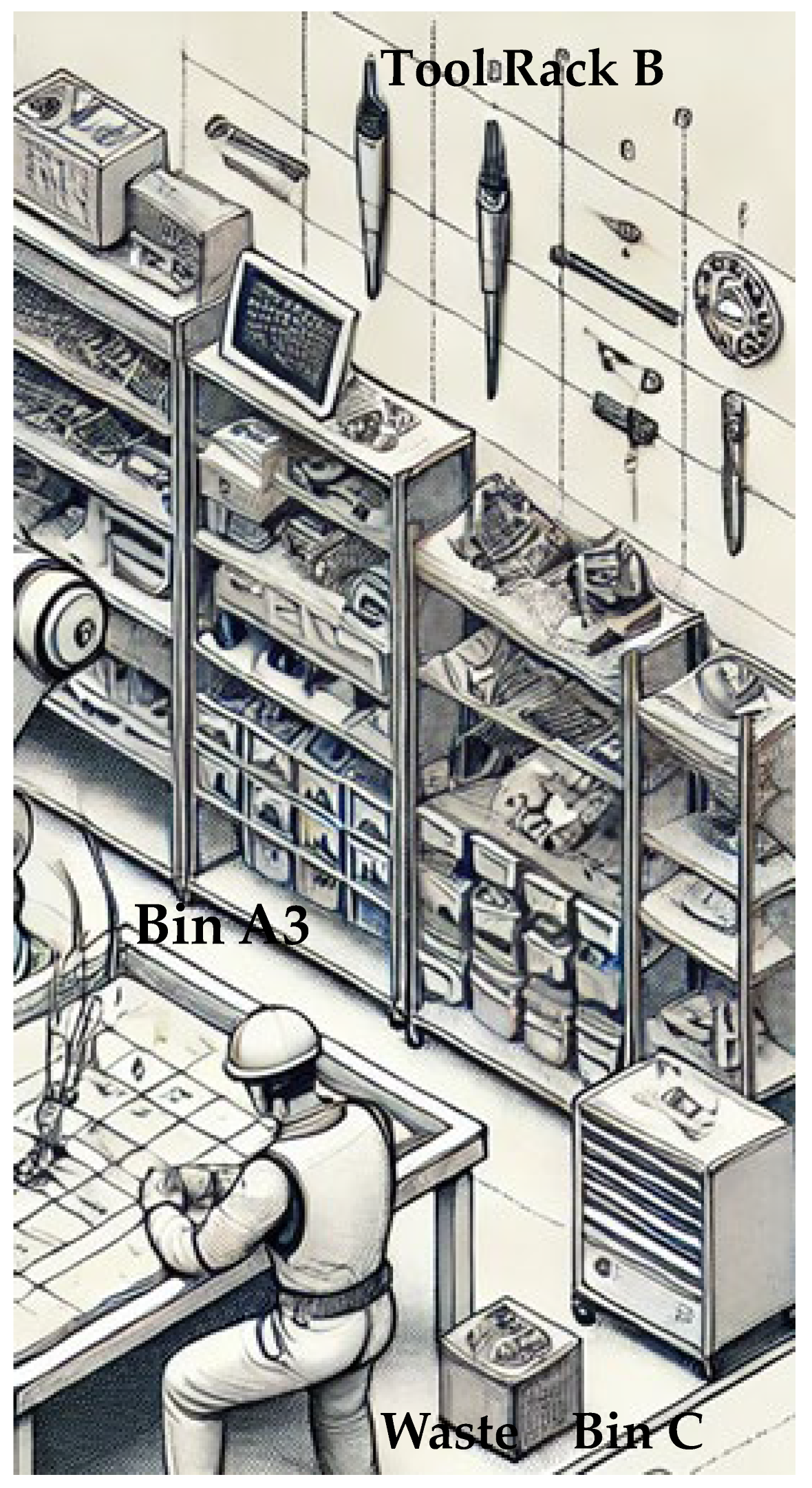

- Worker: “Cobot, use trajectory ‘1A’ to pick resistor R1 from Bin A3” (Figure 2).

- Cobot: “Picking resistor R1 from Bin A3”.

- Worker: “Cobot, use trajectory ‘1B’ to place it on spot 1” (on the table).

- The cobot moves and places it on the designated spot on the assembly table.

- Cobot: “Resistor R1 placed on the table”.

- Follow-up: The worker continues to assemble the board, instructing the cobot to pick and place other components as needed.
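The exchange in this scenario follows a command, confirmation, and completion pattern. A minimal sketch of such a dialogue handler is shown below. It assumes that the speech recognizer delivers plain text without quotation marks, and the function name `handle`, the command patterns, and the fallback replies are illustrative assumptions rather than a description of an existing system.

```python
import re

# Assumed phrasing of the two commands used in Scenario 1.
PICK = re.compile(
    r"cobot, use trajectory (?P<traj>\w+) to pick (?P<item>.+) from (?P<source>.+)",
    re.IGNORECASE,
)
PLACE = re.compile(
    r"cobot, use trajectory (?P<traj>\w+) to place it on (?P<target>.+)",
    re.IGNORECASE,
)


def handle(utterance: str, state: dict) -> str:
    """Return the cobot's spoken reply and track which item is being held."""
    text = utterance.strip().rstrip(".")
    match = PICK.fullmatch(text)
    if match:
        state["holding"] = match["item"]
        return f"Picking {match['item']} from {match['source']}"
    match = PLACE.fullmatch(text)
    if match:
        if state.get("holding") is None:
            # "it" has no referent unless something was picked first.
            return "I am not holding anything"
        item = state.pop("holding")
        return f"{item[0].upper() + item[1:]} placed on {match['target']}"
    return "Command not understood, please repeat"
```

Keeping track of the held item lets the handler resolve the pronoun “it” in the place command and refuse a place request when nothing has been picked.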

5.2. Scenario 2: Fetch

- Initial Setup: Dedicated spaces for frequently used tools are identified and labeled on the workstation map. The cobot’s control system stores the coordinates for these storage locations.

- Task Execution:

- Worker: “Cobot, fetch the soldering iron from Tool Rack B”. (Figure 2). The cobot has the soldering iron coordinates stored in its memory.

- Cobot: “Fetching soldering iron from Tool Rack B”.

- The cobot moves to Tool Rack B, identifies the soldering iron, and picks it from Rack B.

- The cobot identifies an empty space on the table near the worker and places the soldering iron there.

- Cobot: “Soldering iron delivered”.

- Follow-up: The worker uses the soldering iron to solder components onto the board, instructing the cobot to fetch other tools as needed.
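The step in which the cobot identifies an empty space near the worker can be combined with the left-to-right, top-to-bottom placing convention described in Section 4. The sketch below assumes that the dedicated placing space is divided into a grid of slots and that an occupancy flag is available for each slot (for example, from the vision system). The grid representation and the function name `first_free_slot` are assumptions made for illustration.

```python
from typing import Optional


def first_free_slot(occupied: list[list[bool]]) -> Optional[tuple[int, int]]:
    """Return (row, column) of the first empty slot, scanning rows top to
    bottom and, within each row, left to right; None if every slot is taken."""
    for row_index, row in enumerate(occupied):
        for col_index, taken in enumerate(row):
            if not taken:
                return (row_index, col_index)
    return None


# Example: the two leftmost slots of the top row already hold tools.
placing_space = [
    [True, True, False],
    [False, False, False],
]
```

A fixed scanning order means the worker can predict where the next delivered tool will appear, which is the purpose of the dedicated placing space.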

5.3. Scenario 3: Return

- Initial Setup:

- Storage locations for each tool are predefined and stored in the cobot’s control system.

- The workstation map includes these locations for easy reference.

- Task Execution:

- Worker: “Cobot, return the soldering iron to Tool Rack B” (see Figure 2).

- Cobot: “Returning soldering iron to Tool Rack B”.

- The cobot takes the soldering iron from the table and returns it to its designated spot in Tool Rack B.

- Cobot: “Soldering iron returned to Tool Rack B”.

- Follow-up: The worker continues with the assembly, knowing that the workspace remains organized and tools are readily available for future use.

5.4. Scenario 4: Dispose

- Initial Setup:

- Waste disposal bins are identified and labeled on the workstation map.

- The cobot’s control system stores the coordinates for these bins.

- Task Execution:

- Worker: “Cobot, use trajectory ‘2’ to dispose of this defective capacitor in Waste Bin C” (Figure 2).

- Cobot: “Disposing of defective capacitor in Waste Bin C”.

- The cobot identifies the capacitor and moves its arm above the table, picks up the defective capacitor, and disposes of it in Waste Bin C.

- Cobot: “Defective capacitor disposed of in Waste Bin C”.

- Follow-up: The worker continues assembling the electronic board, confident that defective parts are properly disposed of, maintaining a safe and clean workspace.
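In this scenario the worker names the bin explicitly. Section 3 also mentions the case in which the cobot itself should identify the closest disposal location. A minimal sketch of that selection, assuming that bin coordinates are stored in the control system as in the initial setup, could look as follows. The function name `closest_bin`, the second bin, and all coordinates are hypothetical.

```python
import math


def closest_bin(position: tuple[float, float, float],
                bins: dict[str, tuple[float, float, float]]) -> str:
    """Return the name of the disposal bin nearest to the given position,
    using straight-line (Euclidean) distance."""
    if not bins:
        raise ValueError("no disposal bins defined")
    return min(bins, key=lambda name: math.dist(position, bins[name]))


# Made-up coordinates for illustration only.
waste_bins = {
    "Waste Bin C": (0.60, 0.40, 0.00),
    "Waste Bin D": (-0.50, 0.40, 0.00),
}
```

Straight-line distance is only a stand-in here; an actual system would more likely compare the lengths of collision-free trajectories to each bin.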

6. Discussion

6.1. Discussion for Scenario 1: Pick and Place

- Hardware:

- Software:

6.2. Discussion for Scenario 2: Fetch

- Hardware:

- Software:

6.3. Discussion for Scenario 3: Return

- Hardware:

- Software:

6.4. Discussion on Social and Psychological Impact of Cobots on Society and Workers

7. Conclusions

- Most cobot tasks could be classified into a small group of task types, where each type has characteristic movements (14 main types identified by [41]).

- A three-dimensional map of the workstation that is understood by a human and enables the translation of places and trajectories to 3D coordinates is a key enabler of human–robot collaboration.

- Common expressions between the human worker and the digital system are essential for collaboration. These expressions could be task type, points in the workstation, pre-agreed-upon trajectories, gripping instructions, etc.

- Setting dedicated places for tools and intermediate storage facilitates human–cobot collaboration.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Faccio, M.; Cohen, Y. Intelligent Cobot Systems: Human-Cobot Collaboration in Manufacturing. J. Intell. Manuf. 2023, 35, 1905–1907.

- Faccio, M.; Granata, I.; Menini, A.; Milanese, M.; Rossato, C.; Bottin, M.; Minto, R.; Pluchino, P.; Gamberini, L.; Boschetti, G. Human Factors in Cobot Era: A Review of Modern Production Systems Features. J. Intell. Manuf. 2023, 34, 85–106.

- Liu, L.; Schoen, A.J.; Henrichs, C.; Li, J.; Mutlu, B.; Zhang, Y.; Radwin, R.G. Human Robot Collaboration for Enhancing Work Activities. Hum. Factors 2024, 66, 158–179.

- Papetti, A.; Ciccarelli, M.; Scoccia, C.; Palmieri, G.; Germani, M. A Human-Oriented Design Process for Collaborative Robotics. Int. J. Comput. Integr. Manuf. 2023, 36, 1760–1782.

- Gross, S.; Krenn, B. A Communicative Perspective on Human–Robot Collaboration in Industry: Mapping Communicative Modes on Collaborative Scenarios. Int. J. Soc. Robot. 2023, 16, 1–18.

- Moore, B.A.; Urakami, J. The Impact of the Physical and Social Embodiment of Voice User Interfaces on User Distraction. Int. J. Hum. Comput. Stud. 2022, 161, 102784.

- Marklin, R.W., Jr.; Toll, A.M.; Bauman, E.H.; Simmins, J.J.; LaDisa, J.F., Jr.; Cooper, R. Do Head-Mounted Augmented Reality Devices Affect Muscle Activity and Eye Strain of Utility Workers Who Do Procedural Work? Studies of Operators and Manhole Workers. Hum. Factors 2022, 64, 305–323.

- Heydaryan, S.; Suaza Bedolla, J.; Belingardi, G. Safety Design and Development of a Human-Robot Collaboration Assembly Process in the Automotive Industry. Appl. Sci. 2018, 8, 344.

- Petzoldt, C.; Harms, M.; Freitag, M. Review of Task Allocation for Human-Robot Collaboration in Assembly. Int. J. Comput. Integr. Manuf. 2023, 36, 1675–1715.

- Schmidbauer, C.; Zafari, S.; Hader, B.; Schlund, S. An Empirical Study on Workers’ Preferences in Human–Robot Task Assignment in Industrial Assembly Systems. IEEE Trans. Hum. Mach. Syst. 2023, 53, 293–302.

- Jacomini Prioli, J.P.; Liu, S.; Shen, Y.; Huynh, V.T.; Rickli, J.L.; Yang, H.-J.; Kim, S.-H.; Kim, K.-Y. Empirical Study for Human Engagement in Collaborative Robot Programming. J. Integr. Des. Process Sci. 2023, 26, 159–181.

- Scalise, R.; Rosenthal, S.; Srinivasa, S. Natural Language Explanations in Human-Collaborative Systems. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 377–378.

- Kontogiorgos, D. Utilising Explanations to Mitigate Robot Conversational Failures. arXiv 2023, arXiv:2307.04462.

- Zieliński, K.; Walas, K.; Heredia, J.; Kjærgaard, M.B. A Study of Cobot Practitioners Needs for Augmented Reality Interfaces in the Context of Current Technologies. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, Canada, 8–12 August 2021; pp. 292–298.

- Pascher, M.; Kronhardt, K.; Franzen, T.; Gruenefeld, U.; Schneegass, S.; Gerken, J. My Caregiver the Cobot: Comparing Visualization Techniques to Effectively Communicate Cobot Perception to People with Physical Impairments. Sensors 2022, 22, 755.

- Eimontaite, I.; Cameron, D.; Rolph, J.; Mokaram, S.; Aitken, J.M.; Gwilt, I.; Law, J. Dynamic Graphical Instructions Result in Improved Attitudes and Decreased Task Completion Time in Human–Robot Co-Working: An Experimental Manufacturing Study. Sustainability 2022, 14, 3289.

- Carriero, G.; Calzone, N.; Sileo, M.; Pierri, F.; Caccavale, F.; Mozzillo, R. Human-Robot Collaboration: An Augmented Reality Toolkit for Bi-Directional Interaction. Appl. Sci. 2023, 13, 11295.

- Sauer, V.; Sauer, A.; Mertens, A. Zoomorphic Gestures for Communicating Cobot States. IEEE Robot. Autom. Lett. 2021, 6, 2179–2185.

- Deuerlein, C.; Langer, M.; Seßner, J.; Heß, P.; Franke, J. Human-robot-interaction using cloud-based speech recognition systems. Procedia CIRP 2021, 97, 130–135.

- Turri, S.; Rizvi, M.; Rabini, G.; Melonio, A.; Gennari, R.; Pavani, F. Orienting Auditory Attention through Vision: The Impact of Monaural Listening. Multisensory Res. 2021, 35, 1–28.

- Resing, M. Industrial Cobot Sound Design Study: Audio Design Principles for Industrial Human-Robot Interaction. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2023.

- Tran, N. Exploring Mixed Reality Robot Communication under Different Types of Mental Workload; Colorado School of Mines: Golden, CO, USA, 2020; ISBN 9798645495275.

- Telkes, P.; Angleraud, A.; Pieters, R. Instructing Hierarchical Tasks to Robots by Verbal Commands. In Proceedings of the 2024 IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 8–11 January 2024; pp. 1139–1145.

- Salehzadeh, R.; Gong, J.; Jalili, N. Purposeful Communication in Human–Robot Collaboration: A Review of Modern Approaches in Manufacturing. IEEE Access 2022, 10, 129344–129361.

- Rusan, H.-A.; Mocanu, B. Human-Computer Interaction Through Voice Commands Recognition. In Proceedings of the 2022 International Symposium on Electronics and Telecommunications, Timisoara, Romania, 10–11 November 2022; pp. 1–4.

- Carr, C.; Wang, P.; Wang, S. A Human-Friendly Verbal Communication Platform for Multi-Robot Systems: Design and Principles. In UK Workshop on Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2023; pp. 580–594.

- McMillan, D.; Jaber, R.; Cowan, B.R.; Fischer, J.E.; Irfan, B.; Cumbal, R.; Zargham, N.; Lee, M. Human-Robot Conversational Interaction (HRCI). In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; pp. 923–925.

- Lee, K.M.; Krishna, A.; Zaidi, Z.; Paleja, R.; Chen, L.; Hedlund-Botti, E.; Schrum, M.; Gombolay, M. The Effect of Robot Skill Level and Communication in Rapid, Proximate Human-Robot Collaboration. In Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023; pp. 261–270.

- Ionescu, T.B.; Schlund, S. Programming Cobots by Voice: A Human-Centered, Web-Based Approach. Procedia CIRP 2021, 97, 123–129.

- Sorgini, F.; Farulla, G.A.; Lukic, N.; Danilov, I.; Roveda, L.; Milivojevic, M.; Pulikottil, T.B.; Carrozza, M.C.; Prinetto, P.; Tolio, T. Tactile Sensing with Gesture-Controlled Collaborative Robot. In Proceedings of the 2020 IEEE International Workshop on Metrology for Industry 4.0 & IoT, Rome, Italy, 3–5 June 2020; pp. 364–368.

- Guda, V.; Mugisha, S.; Chevallereau, C.; Zoppi, M.; Molfino, R.; Chablat, D. Motion Strategies for a Cobot in a Context of Intermittent Haptic Interface. J. Mech. Robot. 2022, 14, 041012.

- Zurlo, D.; Heitmann, T.; Morlock, M.; De Luca, A. Collision Detection and Contact Point Estimation Using Virtual Joint Torque Sensing Applied to a Cobot. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 7533–7539.

- Costes, A.; Lécuyer, A. Inducing Self-Motion Sensations with Haptic Feedback: State-of-the-Art and Perspectives on “Haptic Motion”. IEEE Trans. Haptics 2023, 16, 171–181.

- Keshvarparast, A.; Battini, D.; Battaia, O.; Pirayesh, A. Collaborative Robots in Manufacturing and Assembly Systems: Literature Review and Future Research Agenda. J. Intell. Manuf. 2023, 35, 2065–2118.

- Calzavara, M.; Faccio, M.; Granata, I.; Trevisani, A. Achieving productivity and operator well-being: A dynamic task allocation strategy for collaborative assembly systems in Industry 5.0. Int. J. Adv. Manuf. Technol. 2024, 134, 3201–3216.

- Schreiter, T.; Morillo-Mendez, L.; Chadalavada, R.T.; Rudenko, A.; Billing, E.; Magnusson, M.; Arras, K.O.; Lilienthal, A.J. Advantages of Multimodal versus Verbal-Only Robot-to-Human Communication with an Anthropomorphic Robotic Mock Driver. In Proceedings of the 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, South Korea, 28–31 August 2023; pp. 293–300.

- Rautiainen, S.; Pantano, M.; Traganos, K.; Ahmadi, S.; Saenz, J.; Mohammed, W.M.; Martinez Lastra, J.L. Multimodal Interface for Human–Robot Collaboration. Machines 2022, 10, 957.

- Urakami, J.; Seaborn, K. Nonverbal Cues in Human–Robot Interaction: A Communication Studies Perspective. ACM Trans. Hum. Robot Interact. 2023, 12, 1–21.

- Park, K.-B.; Choi, S.H.; Lee, J.Y.; Ghasemi, Y.; Mohammed, M.; Jeong, H. Hands-Free Human–Robot Interaction Using Multimodal Gestures and Deep Learning in Wearable Mixed Reality. IEEE Access 2021, 9, 55448–55464.

- Nagrani, A.; Yang, S.; Arnab, A.; Jansen, A.; Schmid, C.; Sun, C. Attention Bottlenecks for Multimodal Fusion. Adv. Neural Inf. Process. Syst. 2021, 34, 14200–14213.

- Javaid, M.; Haleem, A.; Singh, R.P.; Rab, S.; Suman, R. Significant Applications of Cobots in the Field of Manufacturing. Cogn. Robot. 2022, 2, 222–233.

- Rahman, M.M.; Khatun, F.; Jahan, I.; Devnath, R.; Bhuiyan MA, A. Cobotics: The Evolving Roles and Prospects of Next-Generation Collaborative Robots in Industry 5.0. J. Robot. 2024, 2024, 2918089.

- Keshvarparast, A.; Katiraee, N.; Finco, S.; Calzavara, M. Integrating collaboration scenarios and workforce individualization in collaborative assembly line balancing. Int. J. Prod. Econ. 2024, 279, 109450.

- Ragil, S.M.; Tieling, Z.; Kiridena, S. Overview of Ergonomics and Safety Aspects of Human-Cobot Interaction in the Manufacturing Industry. Int. Conf. Inform. Technol. Eng. 2023, 21, 401–405.

- Adamini, R.; Antonini, N.; Borboni, A.; Medici, S.; Nuzzi, C.; Pagani, R.; Tonola, C. User-friendly human-robot interaction based on voice commands and visual systems. In Proceedings of the 2021 24th International Conference on Mechatronics Technology (ICMT), Singapore, 18–22 December 2021; p. 1.

- Ionescu, T.B.; Schlund, S. Programming cobots by voice: A pragmatic, web-based approach. Int. J. Comput. Integr. Manuf. 2023, 36, 86–109.

- Rouillard, J.; Vannobel, J.M. Multimodal Interaction for Cobot Using MQTT. Multimodal Technol. Interact. 2023, 7, 78.

- Su, H.; Qi, W.; Chen, J.; Yang, C.; Sandoval, J.; Laribi, M.A. Recent advancements in multimodal human–robot interaction. Front. Neurorobotics 2023, 17, 1084000.

- Ferrari, D.; Pupa, A.; Signoretti, A.; Secchi, C. Safe Multimodal Communication in Human-Robot Collaboration. In International Workshop on Human-Friendly Robotics; Springer Nature: Cham, Switzerland, 2023; pp. 151–163.

- D’Attanasio, S.; Alabert, T.; Francis, C.; Studzinska, A. Exploring Multimodal Interactions with a Robot Assistant in an Assembly Task: A Human-Centered Design Approach. In Proceedings of the 8th International Conference on Human Computer Interaction Theory and Applications, Rome, Italy, 27–29 February 2024; pp. 549–556.

- Abdulrazzaq, A.Z.; Ali, Z.G.; Al-Ani AR, M.; Khaleel, B.M.; Alsalame, S.; Snovyda, V.; Kanbar, A.B. Evaluation of Voice Interface Integration with Arduino Robots in 5G Network Frameworks. In Proceedings of the 2024 36th Conference of Open Innovations Association (FRUCT), Lappeenranta, Finland, 24–26 April 2024; pp. 44–55.

- Schwarz, D.; Zarcone, A.; Laquai, F. Talk to your Cobot: Faster and more efficient error-handling in a robotic system with a multi-modal Conversational Agent. Proc. Mensch und Comput. 2024, 2024, 520–532.

- Asha, C.S.; D’Souza, J.M. Voice-Controlled Object Pick and Place for Collaborative Robots Employing the ROS2 Framework. In Proceedings of the 2024 International Conference on Advances in Modern Age Technologies for Health and Engineering Science (AMATHE), Shivamogga, India, 16–17 May 2024; pp. 1–7.

- Younes, R.; Elisei, F.; Pellier, D.; Bailly, G. Impact of verbal instructions and deictic gestures of a cobot on the performance of human coworkers. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Nancy, France, 22–24 November 2024.

- Siwach, G.; Li, C. Enhancing Human Cobot Interaction using Natural Language Processing. In Proceedings of the 2023 IEEE 4th International Multidisciplinary Conference on Engineering Technology (IMCET), Beirut, Lebanon, 12–14 December 2023; pp. 21–26.

- Siwach, G.; Li, C. Unveiling the Potential of Natural Language Processing in Collaborative Robots (Cobots): A Comprehensive Survey. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2024; pp. 1–6.

- Gkournelos, C.; Konstantinou, C.; Angelakis, P.; Michalos, G.; Makris, S. Enabling Seamless Human-Robot Collaboration in Manufacturing Using LLMs. In European Symposium on Artificial Intelligence in Manufacturing; Springer Nature: Cham, Switzerland, 2023; pp. 81–89.

- Lakhnati, Y.; Pascher, M.; Gerken, J. Exploring a GPT-based large language model for variable autonomy in a VR-based human-robot teaming simulation. Front. Robot. AI 2024, 11, 1347538.

- Kim, C.Y.; Lee, C.P.; Mutlu, B. Understanding large-language model (LLM)-powered human-robot interaction. In Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–15 March 2024; pp. 371–380.

- Ye, Y.; You, H.; Du, J. Improved trust in human-robot collaboration with ChatGPT. IEEE Access 2023, 11, 55748–55754.

- Wang, T.; Fan, J.; Zheng, P. An LLM-based vision and language cobot navigation approach for Human-centric Smart Manufacturing. J. Manuf. Syst. 2024, 75, 299–305.

- Kim, Y.; Kim, D.; Choi, J.; Park, J.; Oh, N.; Park, D. A survey on integration of large language models with intelligent robots. Intell. Serv. Robot. 2024, 17, 1091–1107.

- Zhang, C.; Chen, J.; Li, J.; Peng, Y.; Mao, Z. Large language models for human-robot interaction: A review. Biomim. Intell. Robot. 2023, 3, 100131.

- Jeong, H.; Lee, H.; Kim, C.; Shin, S. A Survey of Robot Intelligence with Large Language Models. Appl. Sci. 2024, 14, 8868.

- Shi, Z.; Landrum, E.; O’Connell, A.; Kian, M.; Pinto-Alva, L.; Shrestha, K.; Zhu, X.; Matarić, M.J. How Can Large Language Models Enable Better Socially Assistive Human-Robot Interaction: A Brief Survey. Proc. AAAI Symp. Ser. 2024, 3, 401–404.

- Kawaharazuka, K.; Matsushima, T.; Gambardella, A.; Guo, J.; Paxton, C.; Zeng, A. Real-world robot applications of foundation models: A review. Adv. Robot. 2024, 38, 1232–1254.

- Liao, S.; Lin, L.; Chen, Q. Research on the acceptance of collaborative robots for the industry 5.0 era—The mediating effect of perceived competence and the moderating effect of robot use self-efficacy. Int. J. Ind. Ergon. 2023, 95, 103455.

- Nenna, F.; Zanardi, D.; Maria Orlando, E.; Mingardi, M.; Buodo, G.; Gamberini, L. Addressing Trust and Negative Attitudes Toward Robots in Human-Robot Collaborative Scenarios: Insights from the Industrial Work Setting. In Proceedings of the 17th International Conference on PErvasive Technologies Related to Assistive Environments, Crete, Greece, 26–28 June 2024; pp. 259–264.

- Pietrantoni, L.; Favilla, M.; Fraboni, F.; Mazzoni, E.; Morandini, S.; Benvenuti, M.; De Angelis, M. Integrating collaborative robots in manufacturing, logistics, and agriculture: Expert perspectives on technical, safety, and human factors. Front. Robot. AI 2024, 11, 1342130.

- Weiss, A.; Wortmeier, A.-K.; Kubicek, B. Cobots in Industry 4.0: A Roadmap for Future Practice Studies on Human–Robot Collaboration. IEEE Trans. Hum. Mach. Syst. 2021, 51, 335–345.

- Paliga, M. The relationships of human-cobot interaction fluency with job performance and job satisfaction among Cobot operators—The moderating role of workload. Int. J. Environ. Res. Public Health 2023, 20, 5111.

- Fraboni, F.; Brendel, H.; Pietrantoni, L. Evaluating organizational guidelines for enhancing psychological well-being, safety, and performance in technology integration. Sustainability 2023, 15, 8113.

- Liu, L.; Guo, F.; Zou, Z.; Duffy, V.G. Application, development and future opportunities of collaborative robots (cobots) in manufacturing: A literature review. Int. J. Hum. Comput. Interact. 2024, 40, 915–932.

- Yenjai, N.; Dancholvichit, N. Optimizing pick-place operations: Leveraging k-means for visual object localization and decision-making in collaborative robots. J. Appl. Res. Sci. Tech (JARST) 2024, 23, 254153.

- Cohen, Y.; Shoval, S.; Faccio, M.; Minto, R. Deploying cobots in collaborative systems: Major considerations and productivity analysis. Int. J. Prod. Res. 2022, 60, 1815–1831.

- De Simone, V.; Di Pasquale, V.; Giubileo, V.; Miranda, S. Human-Robot Collaboration: An analysis of worker’s performance. Procedia Comput. Sci. 2022, 200, 1540–1549.

- Bi, Z.M.; Luo, C.; Miao, Z.; Zhang, B.; Zhang, W.J.; Wang, L. Safety assurance mechanisms of collaborative robotic systems in manufacturing. Robot. Comput. Integr. Manuf. 2021, 67, 102022.

- Vemuri, N.; Thaneeru, N. Enhancing Human-Robot Collaboration in Industry 4.0 with AI-driven HRI. Power Syst. Tech. 2023, 47, 341–358.

| Communication Method | Advantages | Disadvantages |
|---|---|---|
| Text Communication | | |
| Visual Communication | | |
| Auditory Communication | | |
| Tactile Communication | | |
| Multimodal Interaction | | |

Related Strategies (abbreviated titles; see legend below):

| Main Scenarios | 1 Map | 2 Placing Space | 3 Tool/Part Storage | 4 Defined Names | 5 Path |
|---|---|---|---|---|---|
| Pick and place | V | V | V | | |
| Fetch | V | V | V | V | V |
| Return | V | V | V | V | V |
| Dispose | V | V | V | V | |
| Turn predefined | V | V | | | |
| Turn degree | V | V | | | |
| Align | V | V | V | | |
| Drill | V | V | V | | |
| Screw | V | V | V | V | |
| Solder | V | V | V | | |
| Inspect | V | V | V | | |
| Push/press | V | V | V | | |
| Pull/detach | V | V | V | | |
| Hold | V | V | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cohen, Y.; Faccio, M.; Rozenes, S. Vocal Communication Between Cobots and Humans to Enhance Productivity and Safety: Review and Discussion. Appl. Sci. 2025, 15, 726. https://doi.org/10.3390/app15020726
