Toward Predictive Maintenance of Biomedical Equipment in Moroccan Public Hospitals: A Data-Driven Structuring Approach

Abstract

1. Introduction

- To analyze the state of the art in biomedical maintenance strategies, with a focus on predictive approaches and supporting digital architectures.

- To identify structural constraints encountered in low-resource hospital environments, particularly with respect to data governance, digital infrastructure, and organizational maturity.

- To demonstrate the feasibility and relevance of a multi-institutional prototype pipeline for data structuring, as a foundation for predictive maintenance models that are contextualized, reproducible, and scalable within the Moroccan healthcare system, and transferable to other low- and middle-income country (LMIC) settings facing similar challenges.

2. Related Work

2.1. Theoretical Foundations of Biomedical Maintenance

2.2. Advances in Predictive Maintenance and Artificial Intelligence

2.3. Specific Constraints in Low-Resource Settings

2.4. Strategic Positioning of the Present Study

3. Materials and Methods

3.1. Study Context and Data Sources

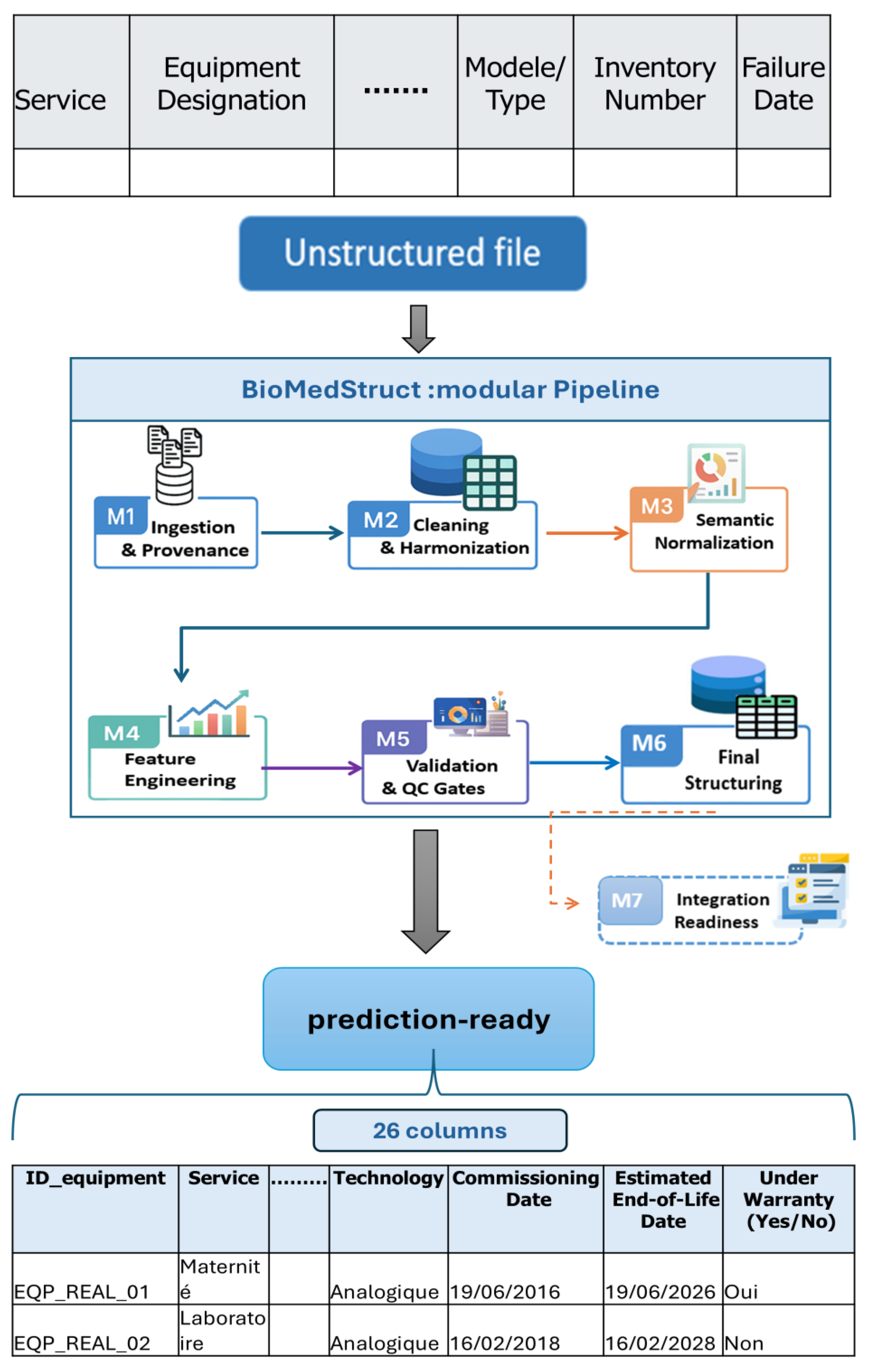

3.2. Structuring Prototype Pipeline (M1–M7)

3.3. Reliability, Maintainability, and Availability Indicators

- Failure Rate (FR)

- Mean Time Between Failures (MTBF)

- Mean Time To Repair (MTTR)

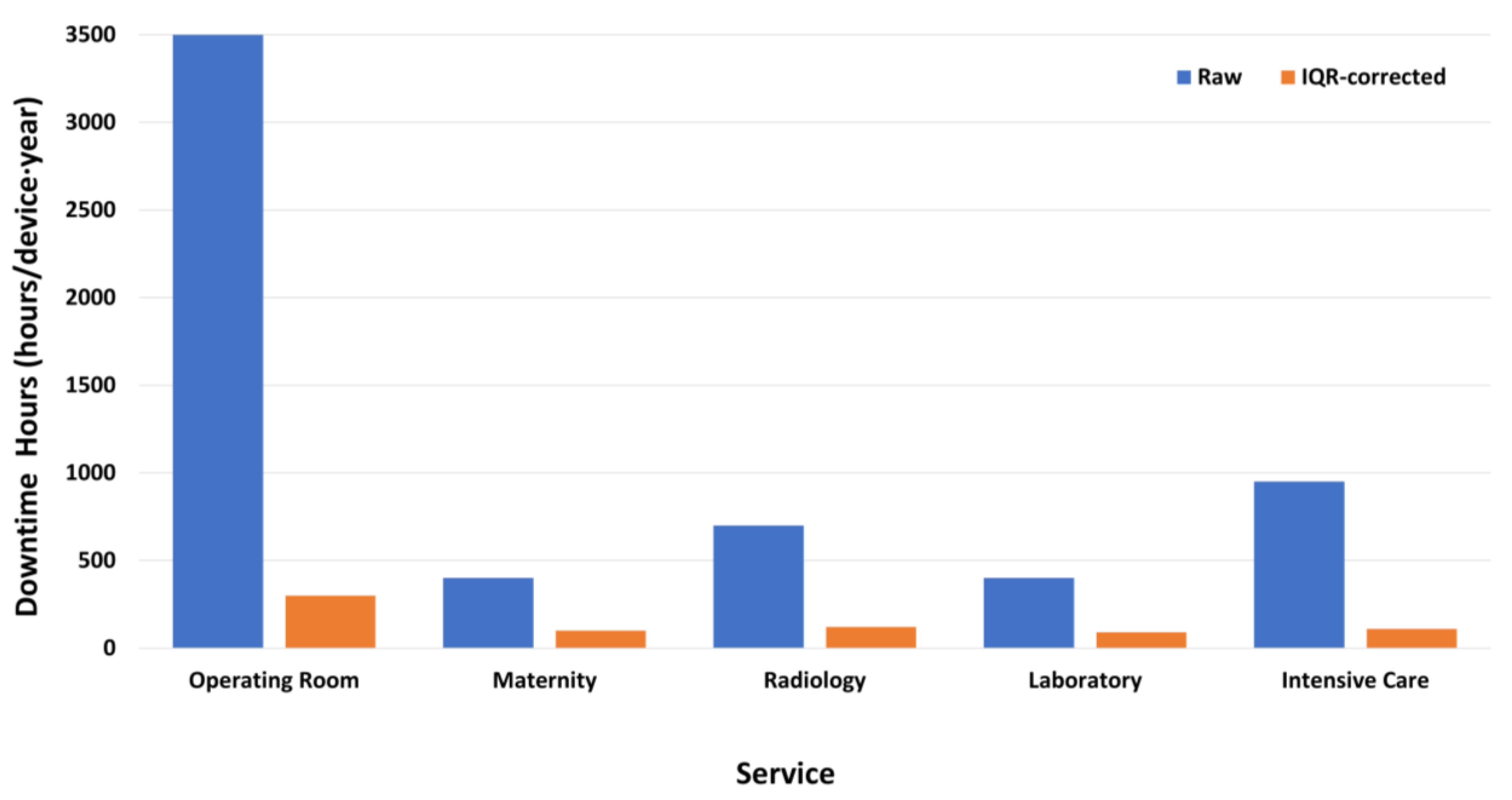

- Downtime Hours (DH)
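As an illustration, the four indicators listed above could be computed from a per-device intervention log along the following lines. This is a minimal sketch based on common textbook definitions, not necessarily the exact formulas of this study. The event log, the `observation_years` parameter, and the function name `reliability_indicators` are hypothetical. MTBF is approximated as the mean gap between consecutive failure dates, and repair time is assumed equal to downtime.

```python
from datetime import datetime

# Hypothetical intervention log for one device: (failure_time, repair_completion_time).
# Values are illustrative only and are not taken from the study's dataset.
events = [
    (datetime(2021, 1, 10, 8, 0), datetime(2021, 1, 11, 8, 0)),   # 24 h of downtime
    (datetime(2021, 4, 20, 9, 0), datetime(2021, 4, 22, 9, 0)),   # 48 h of downtime
    (datetime(2021, 9, 1, 12, 0), datetime(2021, 9, 1, 18, 0)),   # 6 h of downtime
]
observation_years = 1.0  # assumed length of the observation window, in years


def reliability_indicators(events, observation_years):
    """Return simplified FR, MTBF, MTTR and DH values for a single device."""
    events = sorted(events)
    n_failures = len(events)
    # Downtime per event in hours (repair completion minus failure occurrence).
    downtimes = [(end - start).total_seconds() / 3600 for start, end in events]
    # Whole days elapsed between consecutive failure occurrences.
    gaps = [(events[i + 1][0] - events[i][0]).days for i in range(n_failures - 1)]
    return {
        "FR_per_year": n_failures / observation_years,            # failures per device-year
        "MTBF_days": sum(gaps) / len(gaps) if gaps else None,      # mean gap between failures
        "MTTR_hours": sum(downtimes) / n_failures if n_failures else None,
        "DH_hours": sum(downtimes),                                # total downtime in the window
    }


indicators = reliability_indicators(events, observation_years)
print(indicators)
```

A device with fewer than two recorded failures has no inter-failure gap, so the sketch returns `None` for MTBF rather than a misleading number.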

Robust Correction of MTTR

3.4. Predictive Modeling Protocol

- Prediction task

- Validation protocol

- Subset definition

- Model configuration and baselines

4. Results

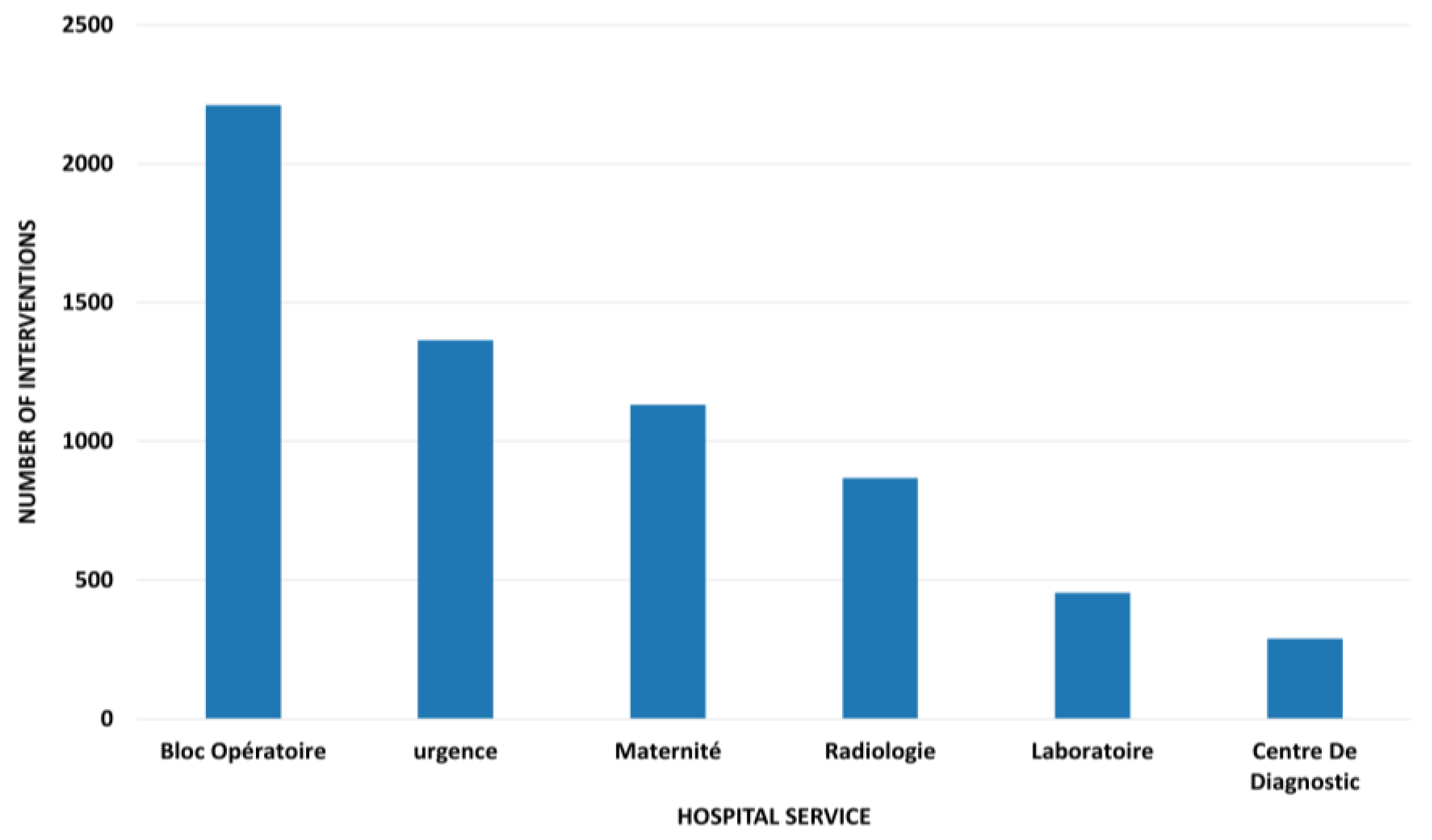

4.1. Dataset Overview and Descriptive Statistics

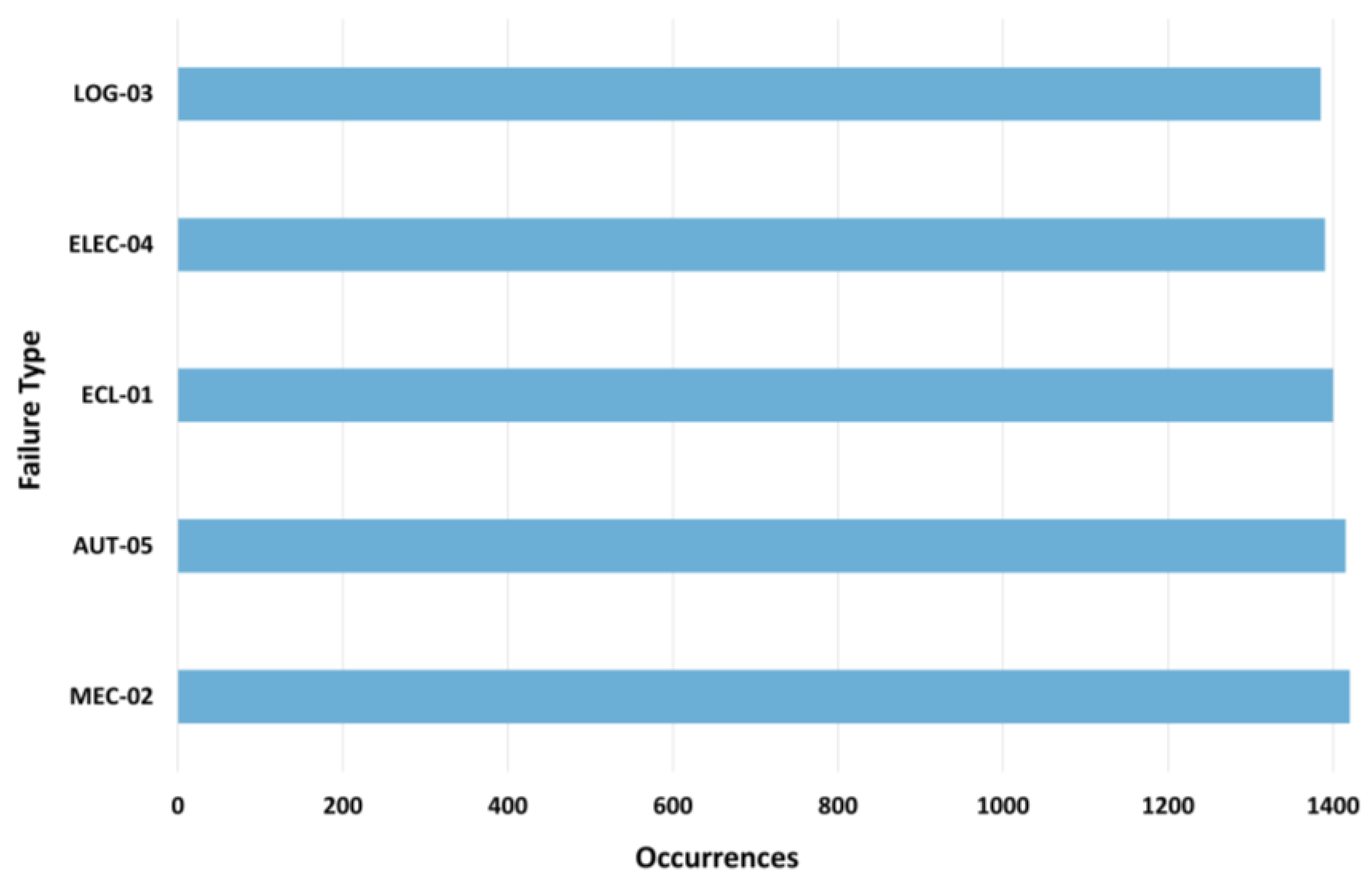

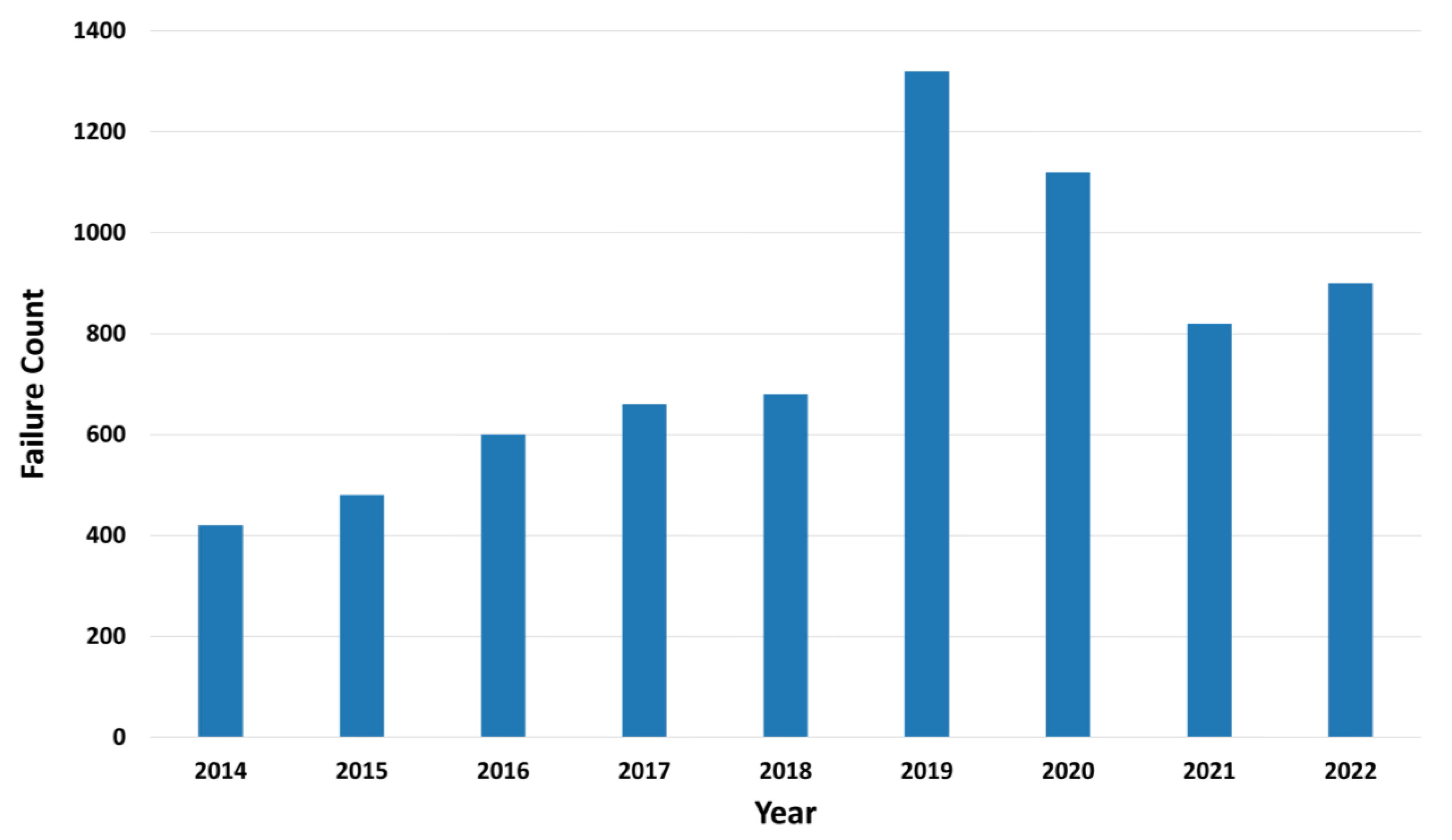

4.2. Failure Characterization and Temporal Patterns

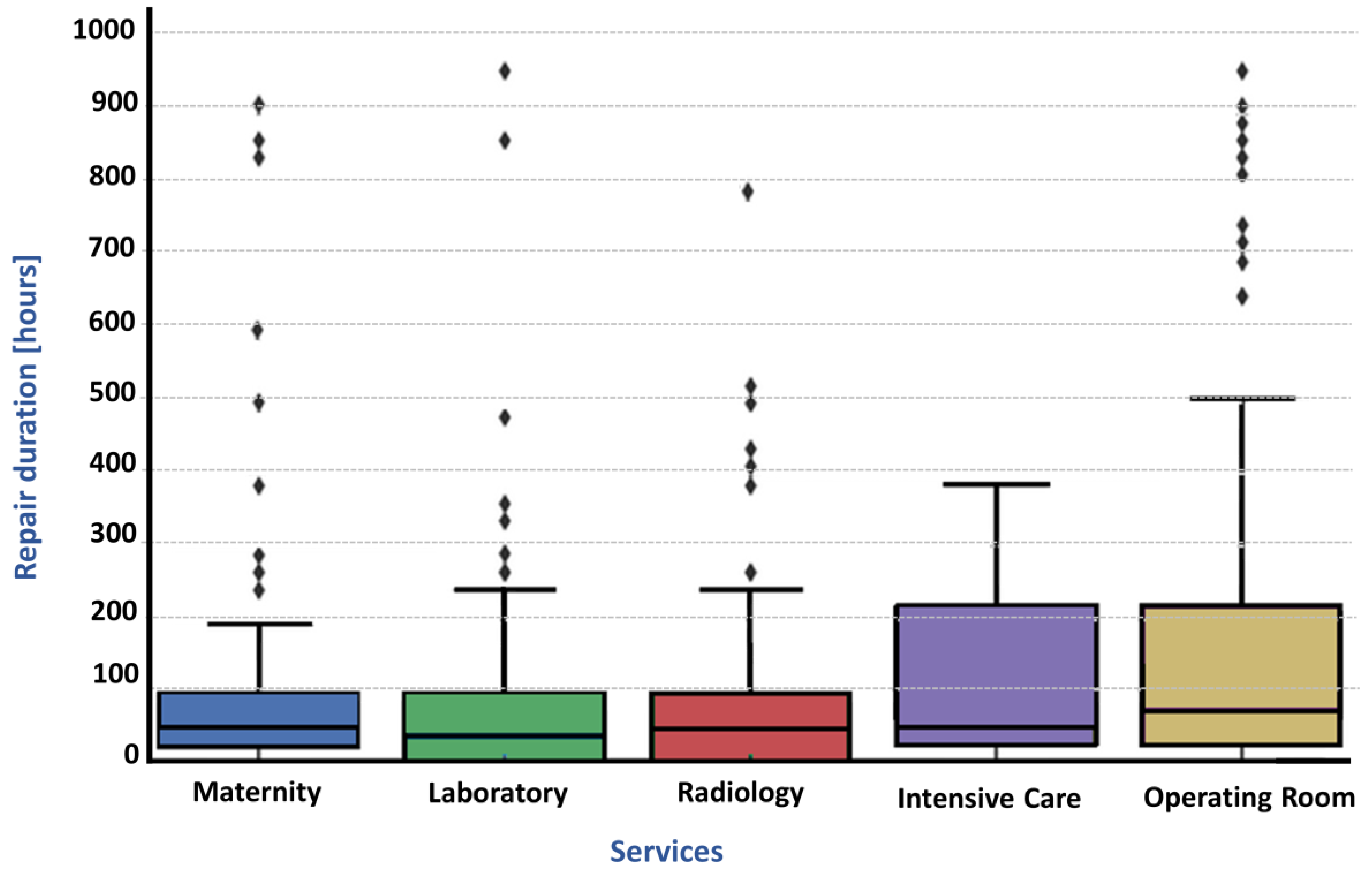

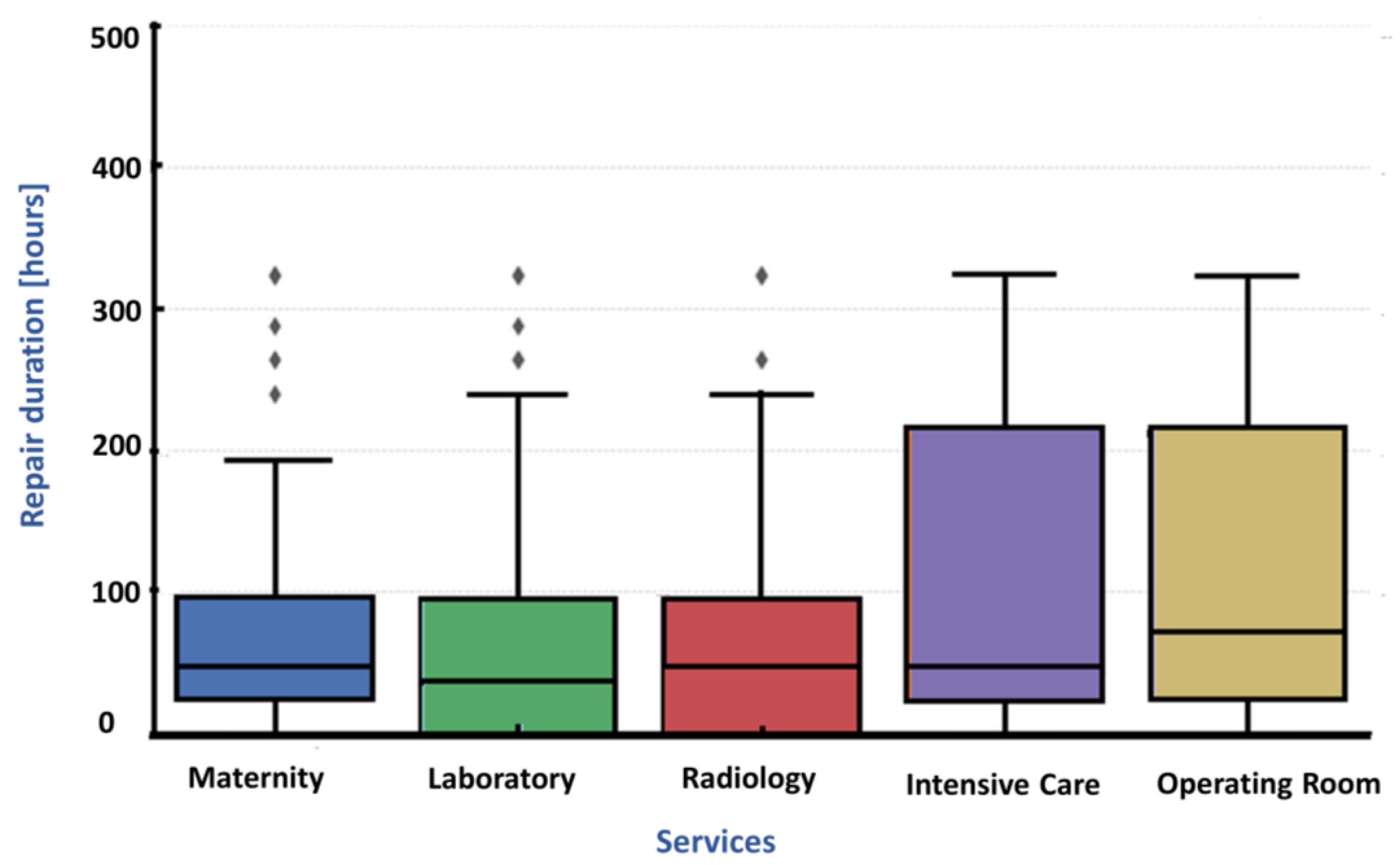

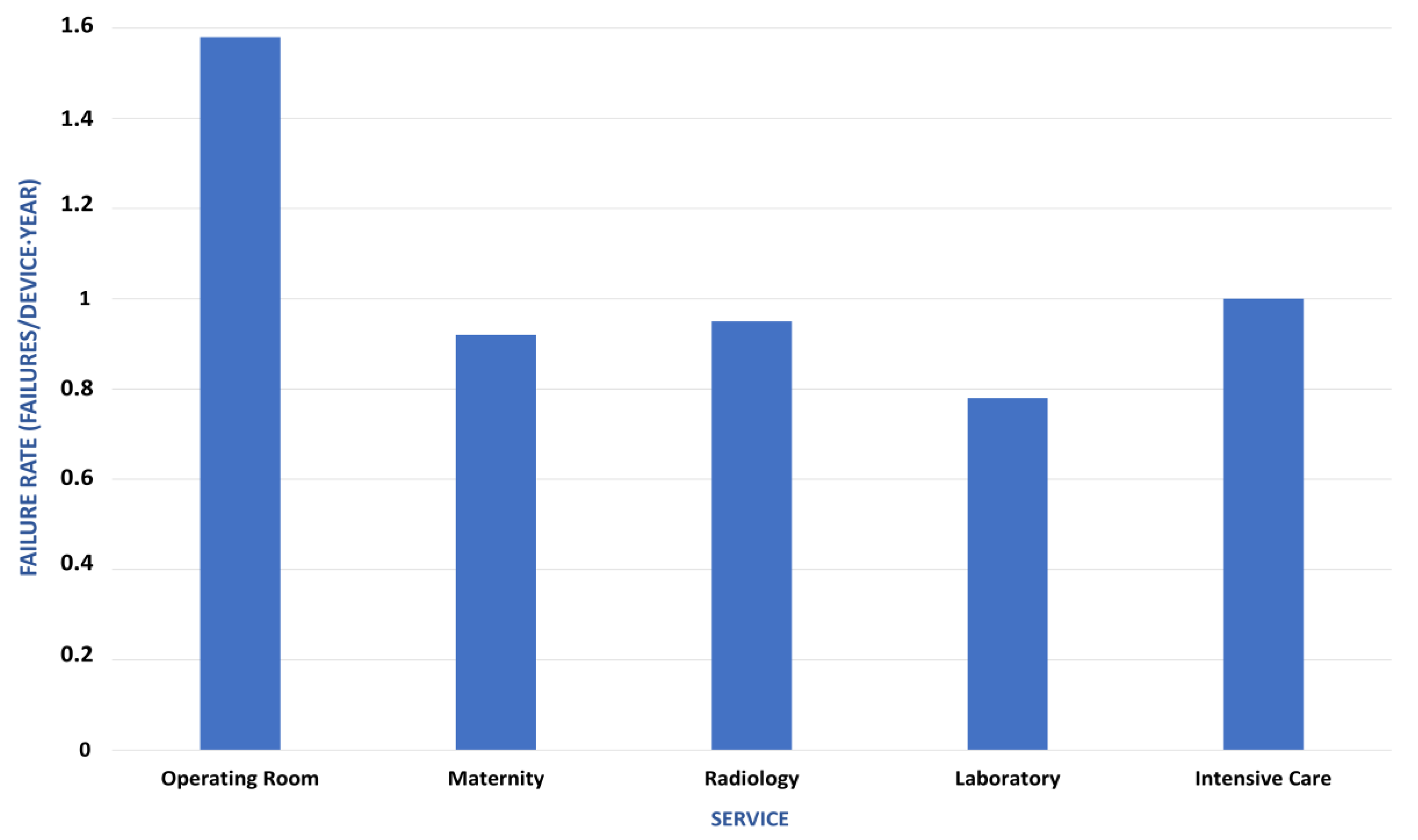

4.3. Reliability Indicators

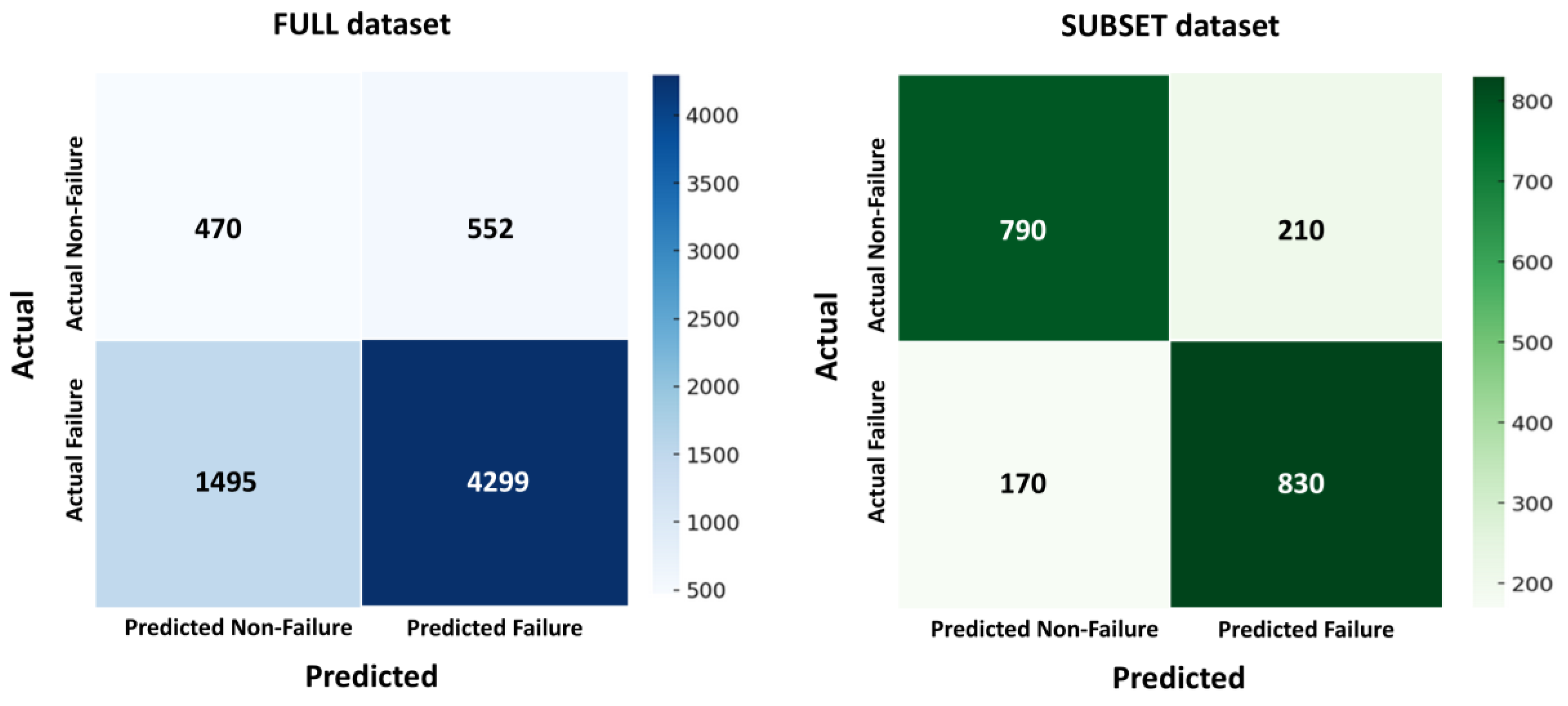

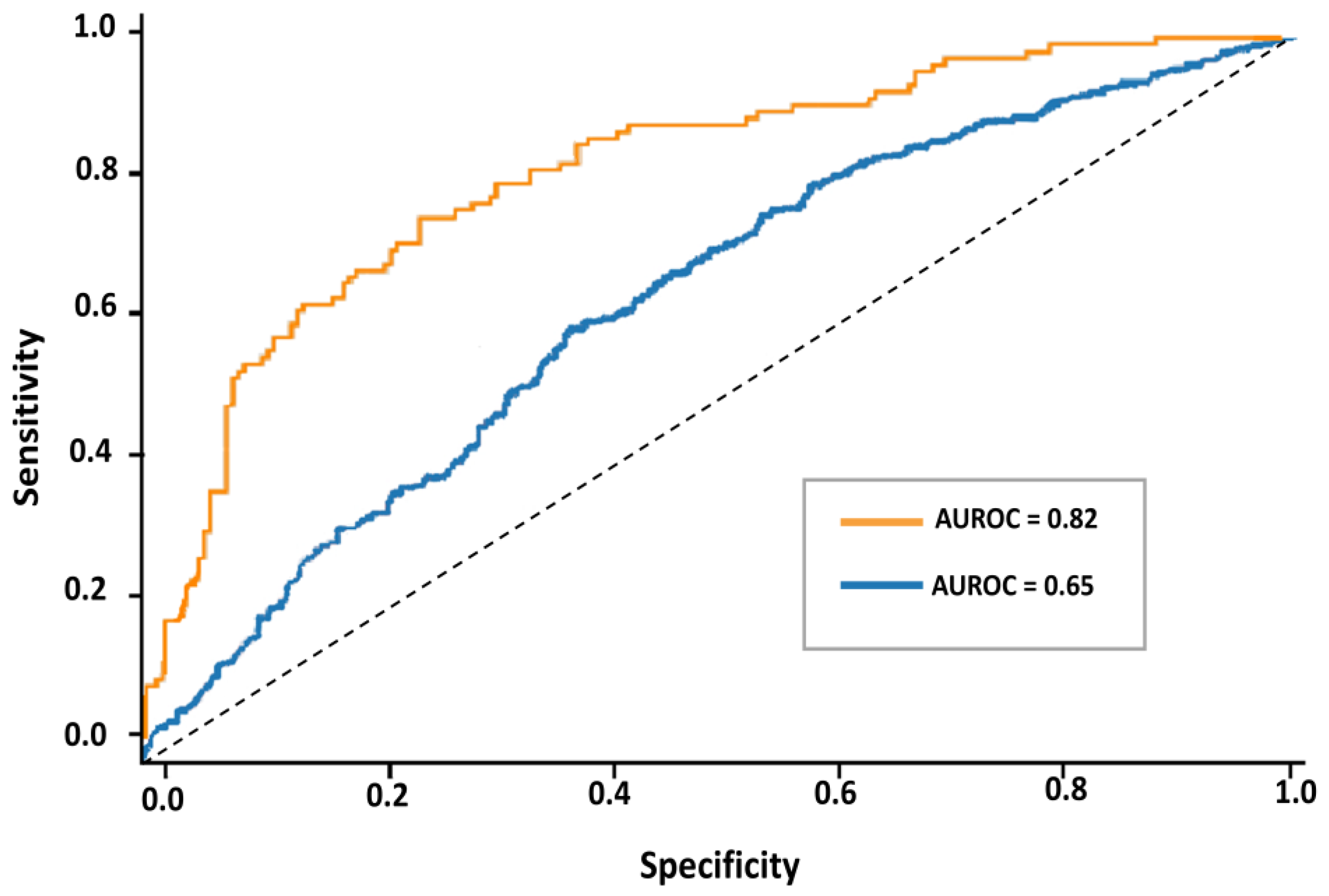

4.4. Predictive Modeling Results

4.5. Analytical Interpretation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANCFCC | Agence Nationale de la Conservation Foncière du Cadastre et de la Cartographie |
| AUC | Area Under the Curve |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| BI | Business Intelligence |
| CMMS | Computerized Maintenance Management Systems |
| CNN | Convolutional Neural Networks |
| EHRs | Electronic Health Records |
| FDA | Food and Drug Administration |
| FHIR | Fast Healthcare Interoperability Resources |
| FMEA | Failure Mode and Effects Analysis |
| IoMT | Internet of Medical Things |
| IoT | Internet of Things |
| k-NN | k-Nearest Neighbors |
| LDA | Latent Dirichlet Allocation |
| LMICs | Low- and Middle-Income Countries |
| MTBF | Mean Time Between Failures |
| MTTR | Mean Time To Repair |
| NLP | Natural Language Processing |
| PdM | Predictive Maintenance |
| RCM | Reliability-Centered Maintenance |
| RUL | Remaining Useful Life |
| SaMD | Software as a Medical Device |
| SVM | Support Vector Machines |

Appendix A

| Category | N° | Variable Name | Type | Unit | Description | Missing (%) Before | Missing (%) After | Included in Core Dataset |
|---|---|---|---|---|---|---|---|---|
| Identifiers & Provenance | 1 | internal_id | Categorical | Label | Internal identifier generated by the pipeline (e.g., iq_real_001) | 0% | 0% | Yes |
| | 2 | equipment_id | Categorical | Identifier | Official hospital inventory number (e.g., 9608/19) | 0% | 0% | Yes |
| | 3 | hospital_id | Categorical | Code | Hospital code | 0% | 0% | Yes |
| | 4 | department | Categorical | Label | Clinical department where the device is used | 3% | 0% | Yes |
| | 5 | file_source | Categorical | Label | Excel file name used as data source | 0% | 0% | No (merged) |
| | 6 | sha256_checksum | Categorical | Hash | SHA-256 checksum ensuring data provenance and integrity | 0% | 0% | Yes |
| | 7 | file_uid | Categorical | Hash | Unique identifier automatically assigned to each imported Excel source file | 0% | 0% | No (technical) |
| | 8 | ingestion_timestamp | Temporal | ISO-Datetime | Exact time of ingestion (YYYY-MM-DD HH:MM:SS) logged for auditability | 0% | 0% | No (technical) |
| | 9 | last_update_timestamp | Temporal | ISO-Datetime | Last modification or validation timestamp of each record | NA | 0% | No (technical) |
| | 10 | checksum_verified | Categorical | Boolean | Indicates whether checksum integrity was verified (True/False) | NA | 0% | No (technical) |
| | 11 | data_source | Categorical | Label | Source of data (manual entry, CMMS (GMAO) export, external file, etc.) | 0% | 0% | No (technical) |
| Contextual/Technical | 12 | equipment_designation | Categorical | Label | Designation or common name of the device | 2% | 0% | Yes |
| | 13 | technology | Categorical | Label | Equipment technology (Analog/Digital/Hybrid) | 6% | 0% | Yes |
| | 14 | brand | Categorical | Label | Manufacturer brand | 7% | 0% | No (merged) |
| | 15 | model | Categorical | Label | Model or type | 9% | 0% | No (merged) |
| | 16 | brand_model | Categorical | Label | Unified brand–model label | NA | 0% | Yes |
| | 17 | acquisition_date | Temporal | DD/MM/YYYY | Date of acquisition | 10% | 0% | Yes |
| | 18 | commissioning_date | Temporal | DD/MM/YYYY | Date of commissioning (first use) | 10% | 0% | Yes |
| | 19 | Operational_Age | Numerical | Years | Operational age (time elapsed since commissioning) | NA | 0% | Yes |
| | 20 | warranty_status | Categorical | Yes/No | Indicates if the device is under warranty | 12% | 0% | Yes |
| | 21 | warranty_end_date | Temporal | DD/MM/YYYY | End date of warranty period | 12% | 0% | Yes |
| | 22 | estimated_end_of_life_date | Temporal | DD/MM/YYYY | Estimated end-of-life date of the equipment | 25% | NA | Yes |
| | 23 | service_status | Categorical | Label | Operational/Under repair/Retired | 5% | 0% | Yes |
| | 24 | spare_parts_used | Categorical | Label | Spare parts replaced | 18% | 5% | No |
| | 25 | CIₙ | Numerical (ordinal) | Scale 1–5 | Internal Criticality Index combining downtime, failure frequency, and clinical importance | NA | 0% | Yes |
| | 26 | location | Categorical | Label | Room or unit location | 20% | 8% | No |
| | 27 | supplier_name | Categorical | Label | Supplier or vendor name | 18% | NA | No |
| Temporal Variables | 28 | intervention_date | Temporal | DD/MM/YYYY | Maintenance intervention date | 2% | 0% | Yes |
| | 29 | failure_date | Temporal | DD/MM/YYYY | Failure occurrence date | 7% | 0% | Yes |
| | 30 | repair_date | Temporal | DD/MM/YYYY | Repair completion date | 9% | 0% | Yes |
| | 31 | downtime_hours | Numerical | Hours | Total hours of downtime | 12% | 0% | Yes |
| | 32 | repair_duration | Numerical | Hours | Duration of the repair | 15% | 0% | Yes |
| | 33 | intervention_year | Numerical | Year | Extracted year of intervention (for temporal grouping) | NA | 0% | Yes |
| Maintenance/Failure | 34 | intervention_type | Categorical | Label | Curative/Preventive/Minor adjustment/External | 0% | 0% | Yes |
| | 35 | failure_type | Categorical | Label | Failure category (electrical, mechanical, software…) | 5% | 0% | Yes |
| | 36 | failure_criticality | Categorical | Low/Med/High | Failure severity level | 20% | 0% | Yes |
| | 37 | intervention_status | Categorical | Label | Completed/Ongoing/Abandoned | 4% | 0% | Yes |
| Derived Indicators | 38 | MTBF | Numerical | Days | Mean Time Between Failures | NA | 0% | Derived |
| | 39 | MTTR | Numerical | Hours | Mean Time to Repair | NA | 0% | Derived |
| | 40 | FR | Numerical | %/year | Annualized Failure Rate normalized by equipment and time | NA | 0% | Derived |
| | 41 | DH | Numerical | Hours | Downtime Hours per intervention cycle | NA | 0% | Derived |


| Stage (M) | Transformation | Description |

|---|---|---|

| M1 | Removal of non-tabular rows | Elimination of administrative headers and unstructured elements in rows 1 to 5 at the top of source files. |

| M1 | Provenance logging | Recording file name, sheet, UTC timestamp, and the SHA-256 file fingerprint, plus parser version and mapping version, for auditability. |

| M2 | Date format harmonization | Systematic conversion of dates to the DD/MM/YYYY format (as used in Moroccan hospitals), with explicit parsing of legacy formats. |

| M2 | Inventory ID normalization | Standardization of equipment and inventory identifiers with consistent casing and padding. |

| M2 | Unique identifier assignment | Assignment of standardized equipment IDs (e.g., EQP_REAL_00001) to ensure a one-to-one mapping between physical units and records; IDs are generated sequentially and linked to hospital and legacy inventory codes to preserve traceability. |

| M2 | Duplicate elimination | Removal of exact or near-duplicates using the composite key {equipment ID, failure date, intervention type}. |

| M2 | Column disambiguation | Splitting vague fields such as Summary, Model/Type, and Room into distinct columns. |

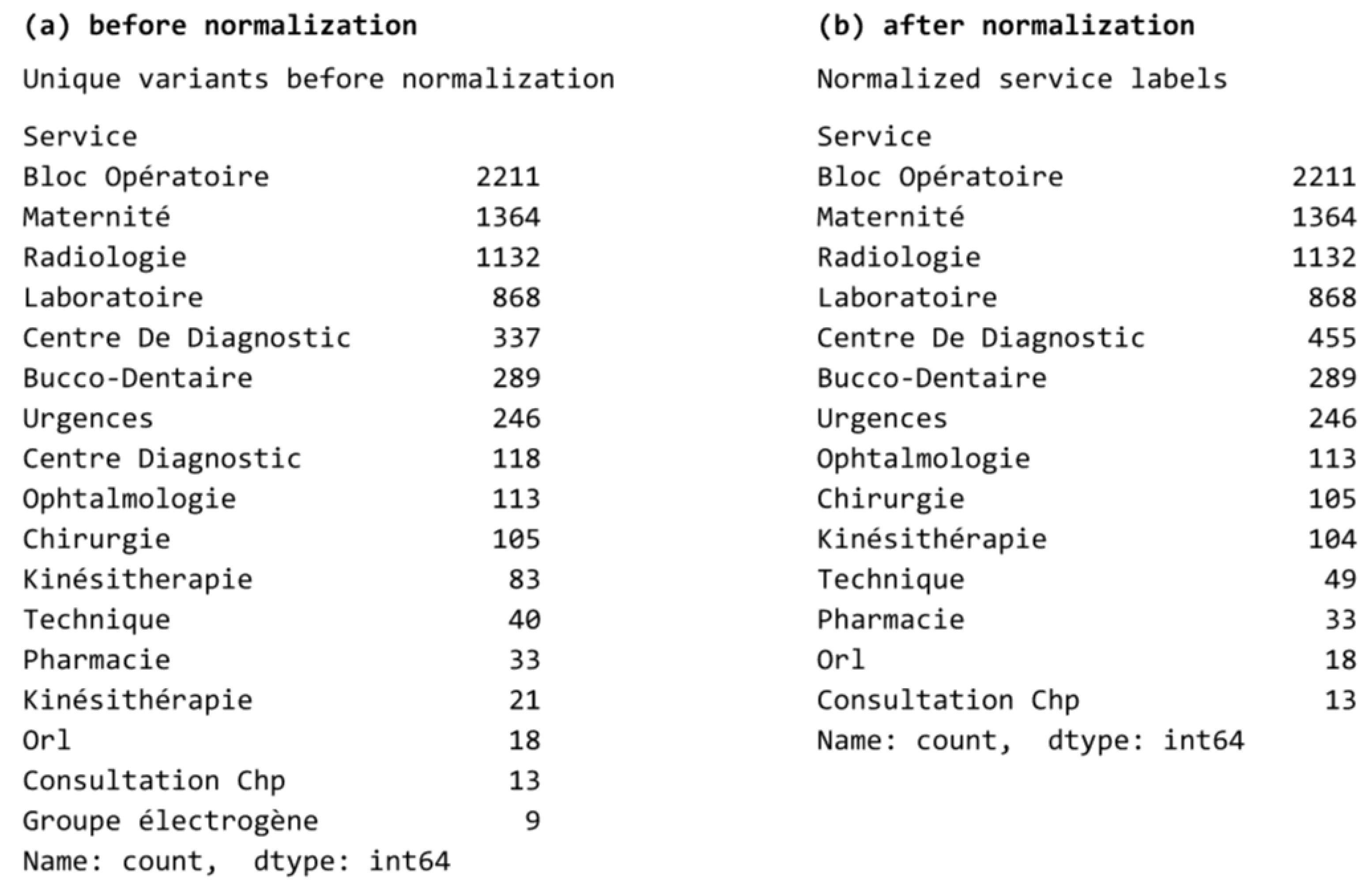

| M3 | Label normalization | Unification of department names, with FR variants mapped to canonical labels to reduce label variance. |

| M3 | Failure type categorization | Grouping of raw descriptions into homogeneous classes such as electrical, mechanical, and software. |

| M4 | Derivation of analytical variables | Computation of normalized reliability indicators including Failure Rate (FR), Mean Time Between Failures (MTBF), Mean Time to Repair (MTTR), and Downtime Hours (DH). |

| M4 | Time aware computation | Features computed on right-closed windows to avoid temporal information leakage. |

| M5 | Variable type definition | Explicit classification of variables into temporal, categorical, or quantitative types. |

| M5 | Missing value handling | Simple imputations or informed deletions based on business rules. |

| M6 | Final data structuring | Assembly of a normalized tabular dataset with 26 interoperable variables and a fixed column order; schema versioning (e.g., BioMedStruct_Schema_CST v1.0.0). |

| M6 | Documentation assets | Delivery of a prediction-ready dataset, a data dictionary, a validation report, and provenance logs. |

| M7 | Integration readiness deliverables | Packaging of the prediction-ready dataset into standardized distribution formats (CSV/Parquet) with fixed schema and versioned releases, complemented by interoperability assets to support adoption in biomedical maintenance workflows, including CMMS integration, inter-hospital data sharing, IoT connectivity, and risk-scoring dashboards. |
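Some of the M1 and M2 transformations listed above (provenance fingerprinting, date harmonization, identifier normalization, and duplicate elimination) could look roughly as follows. This is a minimal sketch using only the Python standard library. The function names, the list of legacy date formats, and the sample rows are assumptions made for illustration and do not describe the actual implementation of the pipeline. Only exact duplicates on the composite key are handled; near-duplicate matching would need additional rules.

```python
import hashlib
from datetime import datetime

# Legacy date layouts to try, in order. This list is an assumption for illustration.
LEGACY_FORMATS = ("%d/%m/%Y", "%Y-%m-%d", "%d-%m-%Y", "%d.%m.%Y")


def sha256_fingerprint(raw_bytes):
    """M1: SHA-256 fingerprint of the raw source file contents, for provenance logs."""
    return hashlib.sha256(raw_bytes).hexdigest()


def harmonize_date(text):
    """M2: parse a known legacy format and re-emit the date as DD/MM/YYYY."""
    for fmt in LEGACY_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%d/%m/%Y")
        except ValueError:
            continue
    return None  # unparseable dates are left to later missing-value rules


def normalize_inventory_id(raw_id):
    """M2: consistent casing and no stray whitespace (hypothetical rule)."""
    return raw_id.strip().upper().replace(" ", "")


def drop_duplicates(records):
    """M2: keep the first record per {equipment ID, failure date, intervention type}."""
    seen, unique = set(), []
    for rec in records:
        key = (rec["equipment_id"], rec["failure_date"], rec["intervention_type"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Hypothetical raw rows: the first two describe the same event in different notations.
raw_rows = [
    {"equipment_id": " 9608/19 ", "failure_date": "2019-03-05", "intervention_type": "Curative"},
    {"equipment_id": "9608/19", "failure_date": "05/03/2019", "intervention_type": "Curative"},
    {"equipment_id": "9608/19", "failure_date": "12.07.2020", "intervention_type": "Preventive"},
]
normalized = [
    {**row,
     "equipment_id": normalize_inventory_id(row["equipment_id"]),
     "failure_date": harmonize_date(row["failure_date"])}
    for row in raw_rows
]
cleaned = drop_duplicates(normalized)
print(len(raw_rows), "rows in,", len(cleaned), "rows out")
```

Normalizing identifiers and dates before deduplication matters here: the first two sample rows only collapse into one record once both fields share a canonical form.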

| N° | Variable Name | Type | Unit | Description | Missing (%) Before | Missing (%) After |
|---|---|---|---|---|---|---|
| 1 | internal_id | Categorical | Label | Internal identifier generated by the pipeline (e.g., iq_real_001) | 0% | 0% |
| 2 | equipment_id | Categorical | Identifier | Official hospital inventory number (e.g., 9608/19) | 0% | 0% |
| 3 | hospital_id | Categorical | Code | Hospital code | 0% | 0% |
| 4 | department | Categorical | Label | Clinical department where the device is used | 3% | 0% |
| 5 | sha256_checksum | Categorical | Hash | SHA-256 checksum ensuring data provenance and integrity | 0% | 0% |
| 6 | equipment_designation | Categorical | Label | Designation or common name of the device | 2% | 0% |
| 7 | technology | Categorical | Label | Equipment technology (Analog/Digital/Hybrid) | 6% | 0% |
| 8 | brand_model | Categorical | Label | Unified brand–model label | NA | 0% |
| 9 | acquisition_date | Temporal | DD/MM/YYYY | Date of acquisition | 10% | 0% |
| 10 | commissioning_date | Temporal | DD/MM/YYYY | Date of commissioning (first use) | 10% | 0% |
| 11 | Operational_Age | Numerical | Years | Operational age (time elapsed since commissioning) | NA | 0% |
| 12 | warranty_status | Categorical | Yes/No | Indicates if the device is under warranty | 12% | 0% |
| 13 | warranty_end_date | Temporal | DD/MM/YYYY | End date of warranty period | 12% | 0% |
| 14 | estimated_end_of_life_date | Temporal | DD/MM/YYYY | Estimated end-of-life date of the equipment | 25% | NA |
| 15 | service_status | Categorical | Label | Operational/Under repair/Retired | 5% | 0% |
| 16 | CIₙ | Numerical (ordinal) | Scale 1–5 | Internal Criticality Index combining downtime, failure frequency, and clinical importance | NA | 0% |
| 17 | intervention_date | Temporal | DD/MM/YYYY | Maintenance intervention date | 2% | 0% |
| 18 | failure_date | Temporal | DD/MM/YYYY | Failure occurrence date | 7% | 0% |
| 19 | repair_date | Temporal | DD/MM/YYYY | Repair completion date | 9% | 0% |
| 20 | downtime_hours | Numerical | Hours | Total hours of downtime | 12% | 0% |
| 21 | repair_duration | Numerical | Hours | Duration of the repair | 15% | 0% |
| 22 | intervention_year | Numerical | Year | Extracted year of intervention (for temporal grouping) | NA | 0% |
| 23 | intervention_type | Categorical | Label | Curative/Preventive/Minor adjustment/External | 0% | 0% |
| 24 | failure_type | Categorical | Label | Failure category (electrical, mechanical, software…) | 5% | 0% |
| 25 | failure_criticality | Categorical | Low/Med/High | Failure severity level | 20% | 0% |
| 26 | intervention_status | Categorical | Label | Completed/Ongoing/Abandoned | 4% | 0% |

| Indicator | Estimated Value |
|---|---|
| Total number of records | 6816 |
| Total number of tracked equipment | 780 |
| Number of equipment categories | 410 |
| Number of identified failure types | 2300 |
| Total number of clinical departments covered | 30 |

| Indicator | Raw Value | Corrected Value | Unit |
|---|---|---|---|
| MTTR | 67 | 42 | hours |
| DH | 102 | 68 | hours/device·year |

| Dataset | AUROC Mean ± SD (95% CI) | F1-Macro Mean ± SD | Accuracy Mean ± SD |
|---|---|---|---|
| Full (6816) | 0.65 ± 0.04 (0.61–0.69) | 0.47 ± 0.05 | 0.71 ± 0.03 |
| Subset (2000) | 0.82 ± 0.03 (0.76–0.87) | 0.66 ± 0.04 | 0.79 ± 0.02 |

| Dataset | AUROC [95% CI] | F1-Macro | Accuracy |
|---|---|---|---|
| Full (6816) | 0.63 [0.61–0.67] | 0.46 | 0.70 |
| Subset (2000) | 0.80 [0.77–0.83] | 0.65 | 0.78 |

| Classifier | Evaluation Metric | Full (6816) | Subset (2000) | Δ (Subset−Full) |
|---|---|---|---|---|
| Random Forest | AUROC | 0.65 [0.63–0.67] | 0.80 [0.77–0.83] | +0.15 |
| Random Forest | F1-macro | 0.44 | 0.78 | +0.34 |
| Random Forest | Accuracy | 0.70 | 0.81 | +0.11 |
| Logistic Regression | AUROC | 0.61 [0.58–0.64] | 0.72 [0.69–0.76] | +0.11 |
| Logistic Regression | F1-macro | 0.41 | 0.66 | +0.25 |
| Logistic Regression | Accuracy | 0.68 | 0.74 | +0.06 |

| Training Period → Testing Period | Full (6816) | Subset (2000) |
|---|---|---|
| 2014–2017 → 2018 | 0.64 | 0.79 |
| 2014–2018 → 2019 | 0.65 | 0.80 |
| 2014–2019 → 2020 | 0.62 | 0.81 |
| 2014–2020 → 2021 | 0.63 | 0.80 |
| 2014–2021 → 2022 | 0.65 | 0.79 |
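The train/test periods in the table above follow an expanding-window pattern: the training window always starts in 2014 and grows by one year, and the following year is held out for testing. A minimal sketch of how such splits could be generated is shown below. The function names and the toy records are hypothetical; `intervention_year` is the variable of that name from the structured dataset.

```python
def expanding_window_splits(first_year, first_test_year, last_test_year):
    """Yield (train_years, test_year) pairs with a training window that grows each step."""
    for test_year in range(first_test_year, last_test_year + 1):
        # Training years stop strictly before the test year, so no future data leaks in.
        yield list(range(first_year, test_year)), test_year


def split_records(records, train_years, test_year, year_key="intervention_year"):
    """Partition records into train and test sets according to their intervention year."""
    train = [r for r in records if r[year_key] in train_years]
    test = [r for r in records if r[year_key] == test_year]
    return train, test


splits = list(expanding_window_splits(2014, 2018, 2022))
for train_years, test_year in splits:
    print(f"{train_years[0]}-{train_years[-1]} -> {test_year}")

# Toy records (illustrative only) to show the partitioning on the first split.
toy_records = [{"intervention_year": y} for y in (2014, 2016, 2017, 2018, 2019)]
train, test = split_records(toy_records, *splits[0])
```

Keeping the test year strictly after every training year mirrors how a deployed model would be used, since it only ever sees past interventions when scoring new ones.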

| Dataset | TP | FN | TN | FP |
|---|---|---|---|---|
| Full (6816) | 0.12 | 0.73 | 0.18 | 0.07 |
| Subset (2000) | 0.38 | 0.12 | 0.37 | 0.13 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Moufid, J.; Koulali, R.; Moussaid, K.; Abghour, N. Toward Predictive Maintenance of Biomedical Equipment in Moroccan Public Hospitals: A Data-Driven Structuring Approach. Appl. Sci. 2025, 15, 10983. https://doi.org/10.3390/app152010983

Moufid J, Koulali R, Moussaid K, Abghour N. Toward Predictive Maintenance of Biomedical Equipment in Moroccan Public Hospitals: A Data-Driven Structuring Approach. Applied Sciences. 2025; 15(20):10983. https://doi.org/10.3390/app152010983

Chicago/Turabian Style: Moufid, Jihanne, Rim Koulali, Khalid Moussaid, and Noreddine Abghour. 2025. "Toward Predictive Maintenance of Biomedical Equipment in Moroccan Public Hospitals: A Data-Driven Structuring Approach" Applied Sciences 15, no. 20: 10983. https://doi.org/10.3390/app152010983

APA Style: Moufid, J., Koulali, R., Moussaid, K., & Abghour, N. (2025). Toward Predictive Maintenance of Biomedical Equipment in Moroccan Public Hospitals: A Data-Driven Structuring Approach. Applied Sciences, 15(20), 10983. https://doi.org/10.3390/app152010983