Evaluating Pupillometry as a Tool for Assessing Facial and Emotional Processing in Nonhuman Primates

Abstract

1. Introduction

- (1) This study investigates the potential of pupil size variations as indicators of emotional responses in nonhuman primates (NHPs). Furthermore, it aims to evaluate the effectiveness of changes in pupil size as a method for interpreting the emotional states of animals.

- (2) By analyzing the pupillary reactions of NHPs to facial imagery, the study can differentiate their emotional responses to faces from those of humans.

- (3) Ultimately, this research seeks to explore the viability of pupil responses as a biomarker for deficits in face processing, as such impairments are a significant hallmark of several neurological disorders.

2. Related Works

3. Materials and Methods

3.1. Animals

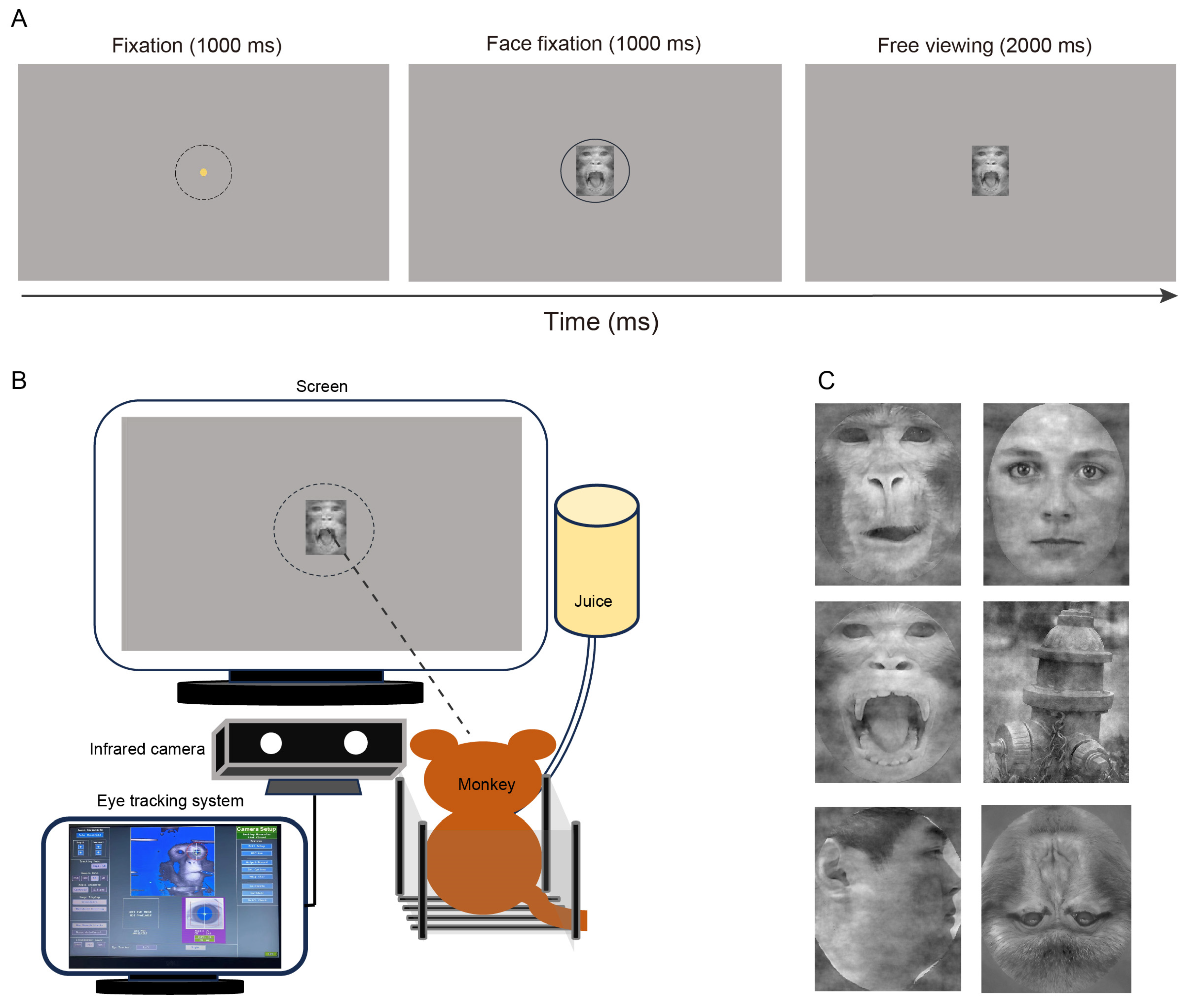

3.2. Experimental Procedures

3.3. Experiment 1: Responses to Different Animals and Objects

3.4. Experiment 2: Responses to Monkey and Human Facial Expressions

3.5. Experiment 3: Responses to Faces of Different Viewing Angles

3.6. Experiment 4: Responses to Faces of Different Orientations

3.7. Data Analysis

3.8. Statistical Analysis

4. Results

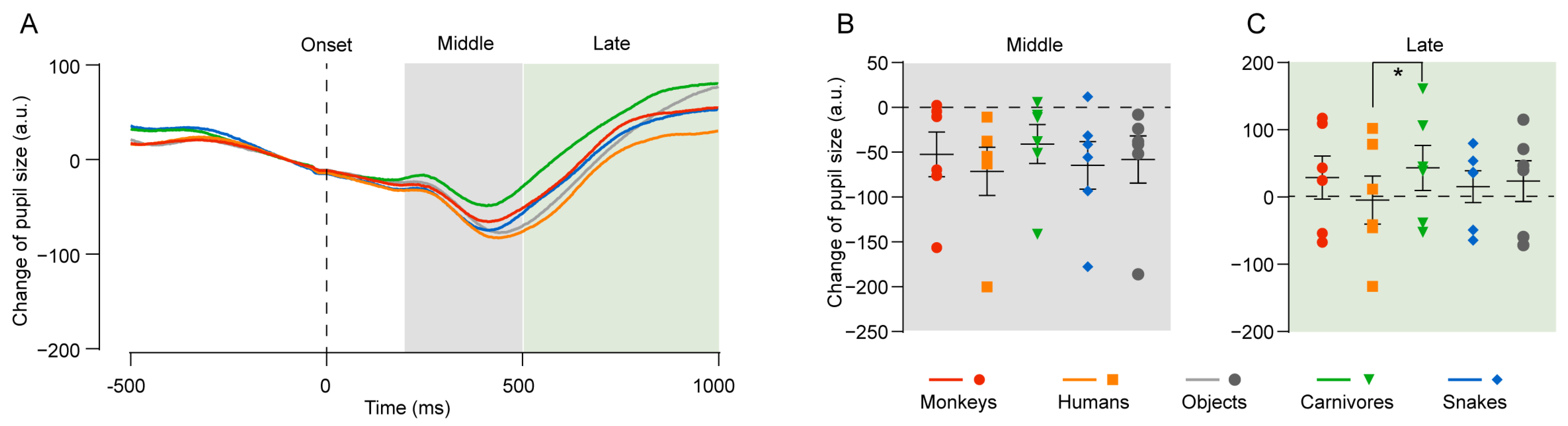

4.1. Experiment 1: Pupil Change in Response to Face Images of Different Species

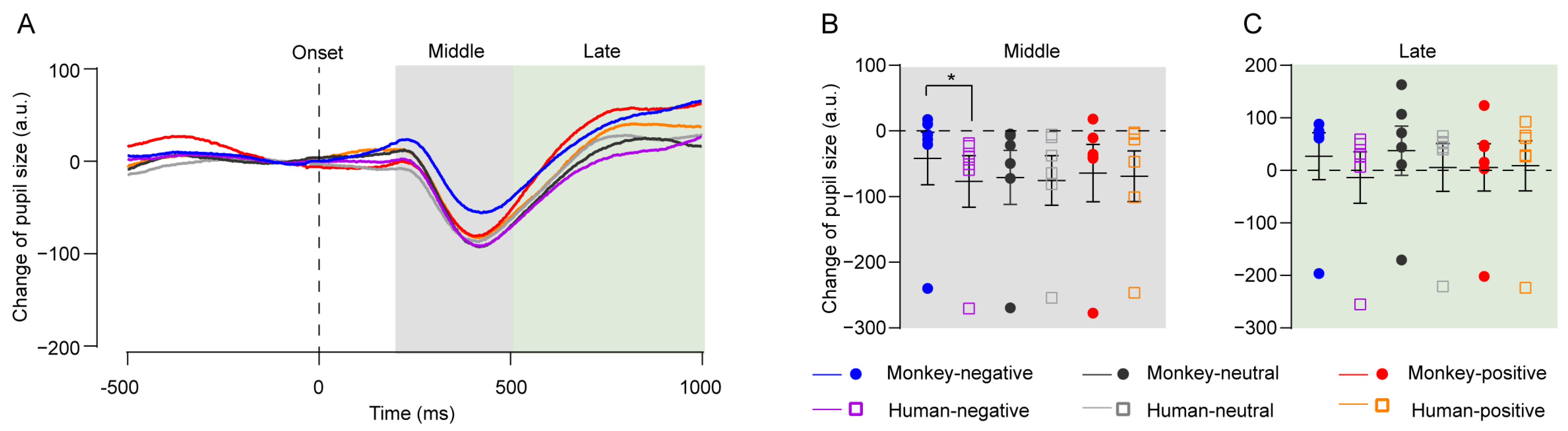

4.2. Experiment 2: Pupil Change to Facial Expressions of Humans and Monkeys

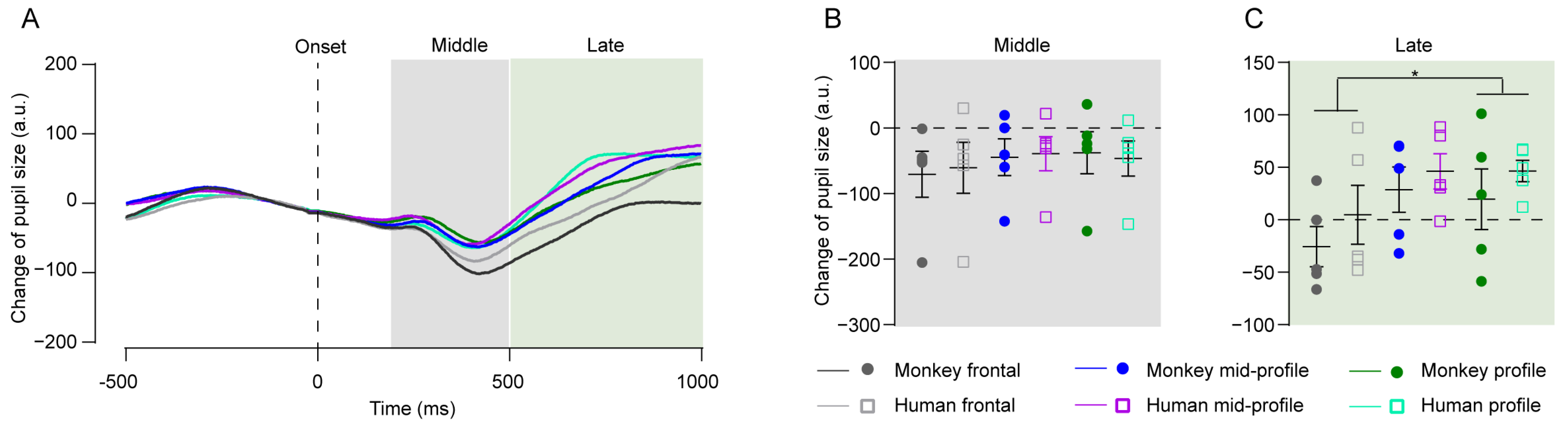

4.3. Experiment 3: Pupil Size Change in Response to Face Images with Different Viewing Angles

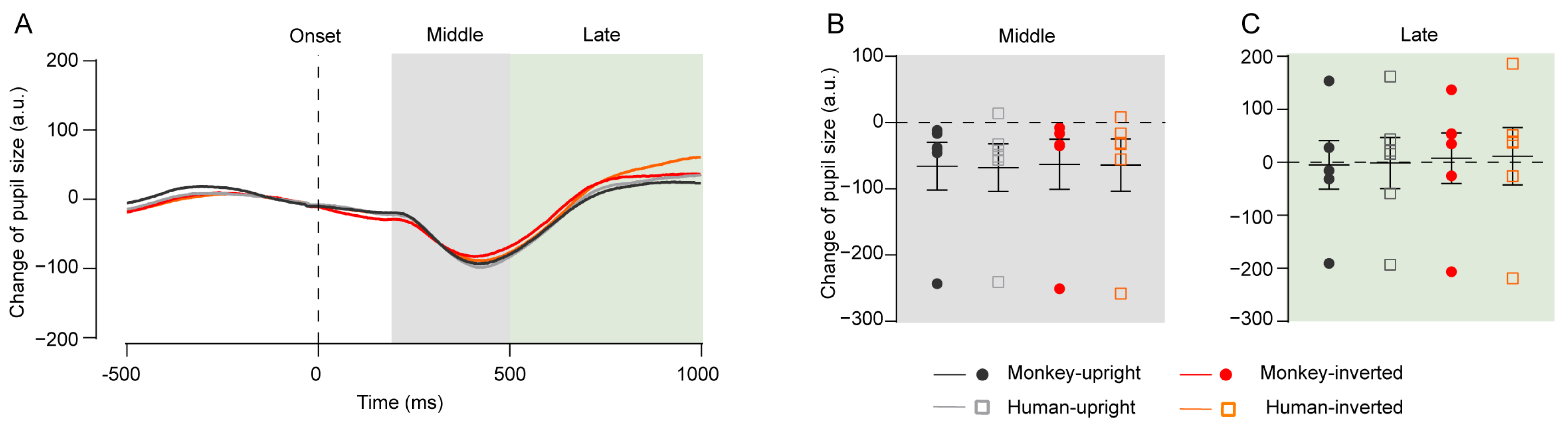

4.4. Experiment 4: Pupil Size Change in Response to Upright and Inverted Faces

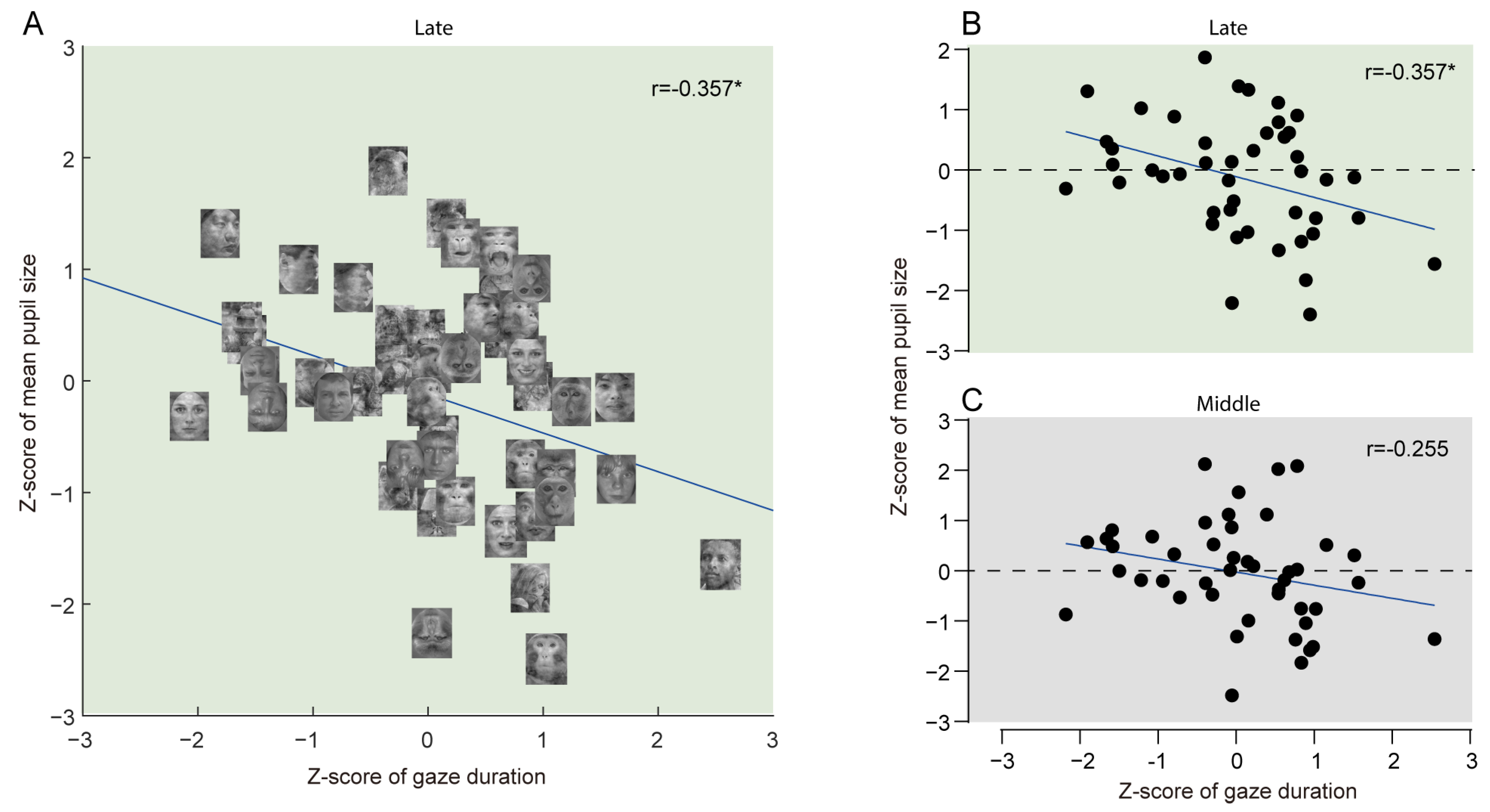

4.5. Correlation Between Gaze Duration and Mean Pupil Size

5. Discussion

5.1. Pupil Size Changes in Face Processing

5.2. Limitations of Pupillometry in Exploring Face Processing

5.3. Conclusions and Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hesse, J.K.; Tsao, D.Y. The macaque face patch system: A turtle’s underbelly for the brain. Nat. Rev. Neurosci. 2020, 21, 695–716.

- Dahl, C.D.; Wallraven, C.; Bülthoff, H.H.; Logothetis, N.K. Humans and macaques employ similar face-processing strategies. Curr. Biol. 2009, 19, 509–513.

- Shepherd, S.V.; Steckenfinger, S.A.; Hasson, U.; Ghazanfar, A.A. Human-monkey gaze correlations reveal convergent and divergent patterns of movie viewing. Curr. Biol. 2010, 20, 649–656.

- Dinh, H.T.; Nishimaru, H.; Matsumoto, J.; Takamura, Y.; Le, Q.V.; Hori, E.; Maior, R.S.; Tomaz, C.; Tran, A.H.; Ono, T.; et al. Superior Neuronal Detection of Snakes and Conspecific Faces in the Macaque Medial Prefrontal Cortex. Cereb. Cortex 2018, 28, 2131–2145.

- Gothard, K.M.; Battaglia, F.P.; Erickson, C.A.; Spitler, K.M.; Amaral, D.G. Neural responses to facial expression and face identity in the monkey amygdala. J. Neurophysiol. 2007, 97, 1671–1683.

- Sugase-Miyamoto, Y.; Matsumoto, N.; Ohyama, K.; Kawano, K. Face inversion decreased information about facial identity and expression in face-responsive neurons in macaque area TE. J. Neurosci. 2014, 34, 12457–12469.

- Desimone, R.; Albright, T.; Gross, C.; Bruce, C. Stimulus-selective properties of inferior temporal neurons in the macaque. J. Neurosci. 1984, 4, 2051–2062. Available online: https://www.jneurosci.org/content/jneuro/4/8/2051.full.pdf (accessed on 1 August 2024).

- Perrett, D.I.; Oram, M.W.; Harries, M.H.; Bevan, R.; Hietanen, J.K.; Benson, P.J.; Thomas, S. Viewer-centred and object-centred coding of heads in the macaque temporal cortex. Exp. Brain Res. 1991, 86, 159–173. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-0025734432&doi=10.1007%2fBF00231050&partnerID=40&md5=f116a3a935f8853eb7f2b018e3bbf82a (accessed on 1 January 2024).

- Perrett, D.I.; Mistlin, A.J.; Chitty, A.J. Visual neurones responsive to faces. Trends Neurosci. 1987, 10, 358–364. Available online: https://www.sciencedirect.com/science/article/pii/0166223687900713 (accessed on 1 September 2024).

- Perrett, D.I.; Rolls, E.T.; Caan, W. Visual neurones responsive to faces in the monkey temporal cortex. Exp. Brain Res. 1982, 47, 329–342.

- Joshi, S.; Gold, J.I. Pupil Size as a Window on Neural Substrates of Cognition. Trends Cogn. Sci. 2020, 24, 466–480.

- Ferencová, N.; Višňovcová, Z.; Bona Olexová, L.; Tonhajzerová, I. Eye pupil—A window into central autonomic regulation via emotional/cognitive processing. Physiol. Res. 2021, 70, S669–S682.

- Ebitz, R.B.; Moore, T. Both a Gauge and a Filter: Cognitive Modulations of Pupil Size. Front. Neurol. 2019, 9, 1190.

- Gamlin, P.D.; McDougal, D.H.; Pokorny, J.; Smith, V.C.; Yau, K.W.; Dacey, D.M. Human and macaque pupil responses driven by melanopsin-containing retinal ganglion cells. Vis. Res. 2007, 47, 946–954.

- Arnsten, A.F.; Goldman-Rakic, P.S. Selective prefrontal cortical projections to the region of the locus coeruleus and raphe nuclei in the rhesus monkey. Brain Res. 1984, 306, 9–18.

- Porrino, L.J.; Goldman-Rakic, P.S. Brainstem innervation of prefrontal and anterior cingulate cortex in the rhesus monkey revealed by retrograde transport of HRP. J. Comp. Neurol. 1982, 205, 63–76.

- Morrison, J.H.; Foote, S.L.; O’Connor, D.; Bloom, F.E. Laminar, tangential and regional organization of the noradrenergic innervation of monkey cortex: Dopamine-beta-hydroxylase immunohistochemistry. Brain Res. Bull. 1982, 9, 309–319.

- Steele, G.E.; Weller, R.E. Subcortical connections of subdivisions of inferior temporal cortex in squirrel monkeys. Vis. Neurosci. 1993, 10, 563–583. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-0027603502&doi=10.1017%2fS0952523800004776&partnerID=40&md5=11acec2b634f23922c4ae5c7d37ebb22 (accessed on 31 May 2024).

- Leichnetz, G.R. Preoccipital cortex receives a differential input from the frontal eye field and projects to the pretectal olivary nucleus and other visuomotor-related structures in the rhesus monkey. Vis. Neurosci. 1990, 5, 123–133. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-0025477761&doi=10.1017%2fS095252380000016X&partnerID=40&md5=2faad4f2ef1de2448a4727adec881e17 (accessed on 15 August 2024).

- Joshi, S.; Li, Y.; Kalwani, R.M.; Gold, J.I. Relationships between Pupil Diameter and Neuronal Activity in the Locus Coeruleus, Colliculi, and Cingulate Cortex. Neuron 2016, 89, 221–234. Available online: https://www.sciencedirect.com/science/article/pii/S089662731501034X (accessed on 6 January 2024).

- Ebitz, R.B.; Moore, T. Selective Modulation of the Pupil Light Reflex by Microstimulation of Prefrontal Cortex. J. Neurosci. 2017, 37, 5008–5018. Available online: https://www.jneurosci.org/content/jneuro/37/19/5008.full.pdf (accessed on 10 May 2024).

- Lehmann, S.J.; Corneil, B.D. Transient Pupil Dilation after Subsaccadic Microstimulation of Primate Frontal Eye Fields. J. Neurosci. 2016, 36, 3765–3776.

- Murphy, P.R.; O’Connell, R.G.; O’Sullivan, M.; Robertson, I.H.; Balsters, J.H. Pupil diameter covaries with BOLD activity in human locus coeruleus. Hum. Brain Mapp. 2014, 35, 4140–4154.

- Kuraoka, K.; Nakamura, K. Facial temperature and pupil size as indicators of internal state in primates. Neurosci. Res. 2022, 175, 25–37.

- Liu, N.; Hadj-Bouziane, F.; Jones, K.B.; Turchi, J.N.; Averbeck, B.B.; Ungerleider, L.G. Oxytocin modulates fMRI responses to facial expression in macaques. Proc. Natl. Acad. Sci. USA 2015, 112, E3123–E3130.

- Xu, P.; Peng, S.; Luo, Y.J.; Gong, G. Facial expression recognition: A meta-analytic review of theoretical models and neuroimaging evidence. Neurosci. Biobehav. Rev. 2021, 127, 820–836.

- Adolphs, R.; Tranel, D.; Damasio, H.; Damasio, A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 1994, 372, 669–672.

- Barat, E.; Wirth, S.; Duhamel, J.R. Face cells in orbitofrontal cortex represent social categories. Proc. Natl. Acad. Sci. USA 2018, 115, E11158–E11167.

- Moeller, S.; Crapse, T.; Chang, L.; Tsao, D.Y. The effect of face patch microstimulation on perception of faces and objects. Nat. Neurosci. 2017, 20, 743–752.

- Tsao, D.Y.; Freiwald, W.A.; Tootell, R.B.; Livingstone, M.S. A cortical region consisting entirely of face-selective cells. Science 2006, 311, 670–674.

- Chang, Y.H.; Yep, R.; Wang, C.A. Pupil size correlates with heart rate, skin conductance, pulse wave amplitude, and respiration responses during emotional conflict and valence processing. Psychophysiology 2024, 62, e14726.

- Lee, C.L.; Pei, W.; Lin, Y.C.; Granmo, A.; Liu, K.H. Emotion Detection Based on Pupil Variation. Healthcare 2023, 11, 322.

- Pan, J.; Sun, X.; Park, E.; Kaufmann, M.; Klimova, M.; McGuire, J.T.; Ling, S. The effects of emotional arousal on pupil size depend on luminance. Sci. Rep. 2024, 14, 21895.

- Yuan, T.; Wang, L.; Jiang, Y. Multi-level processing of emotions in life motion signals revealed through pupil responses. eLife 2024, 12, RP89873.

- Yu, P.; Yu, L.; Li, Y.; Qian, C.; Hu, J.; Zhu, W.; Liu, F.; Wang, Q. Emotional and visual responses to trypophobic images with object, animal, or human body backgrounds: An eye-tracking study. Front. Psychol. 2024, 15, 1467608.

- Bonino, G.; Mazza, A.; Capiotto, F.; Berti, A.; Pia, L.; Dal Monte, O. Pupil dilation responds to the intrinsic social characteristics of affective touch. Sci. Rep. 2024, 14, 24297.

- Sun, J.; Zhu, L.; Fang, X.; Tang, Y.; Xiao, Y.; Jiang, S.; Lin, J.; Li, Y. Pupil dilation and behavior as complementary measures of fear response in Mice. Cogn. Neurodyn. 2024, 18, 4047–4054.

- Keene, P.A.; deBettencourt, M.T.; Awh, E.; Vogel, E.K. Pupillometry signatures of sustained attention and working memory. Atten. Percept. Psychophys. 2022, 84, 2472–2482.

- Zokaei, N.; Board, A.G.; Manohar, S.G.; Nobre, A.C. Modulation of the pupillary response by the content of visual working memory. Proc. Natl. Acad. Sci. USA 2019, 116, 22802–22810. Available online: https://www.pnas.org/doi/abs/10.1073/pnas.1909959116 (accessed on 5 November 2024).

- Zhang, Z.; Shan, L.; Wang, Y.; Li, W.; Jiang, M.; Liang, F.; Feng, S.; Lu, Z.; Wang, H.; Dai, J. Primate preoptic neurons drive hypothermia and cold defense. Innovation 2023, 4, 100358. Available online: https://www.ncbi.nlm.nih.gov/pubmed/36583100 (accessed on 5 December 2023).

- Liu, X.H.; Gan, L.; Zhang, Z.T.; Yu, P.K.; Dai, J. Probing the processing of facial expressions in monkeys via time perception and eye tracking. Zool. Res. 2023, 44, 882–893. Available online: https://www.ncbi.nlm.nih.gov/pubmed/37545418 (accessed on 18 September 2023).

- Shan, L.; Yuan, L.; Zhang, B.; Ma, J.; Xu, X.; Gu, F.; Jiang, Y.; Dai, J. Neural Integration of Audiovisual Sensory Inputs in Macaque Amygdala and Adjacent Regions. Neurosci. Bull. 2023, 39, 1749–1761. Available online: https://www.ncbi.nlm.nih.gov/pubmed/36920645 (accessed on 15 March 2023).

- Wang, C.A.; Baird, T.; Huang, J.; Coutinho, J.D.; Brien, D.C.; Munoz, D.P. Arousal Effects on Pupil Size, Heart Rate, and Skin Conductance in an Emotional Face Task. Front. Neurol. 2018, 9, 1029.

- Finke, J.B.; Deuter, C.E.; Hengesch, X.; Schächinger, H. The time course of pupil dilation evoked by visual sexual stimuli: Exploring the underlying ANS mechanisms. Psychophysiology 2017, 54, 1444–1458.

- Suzuki, T.W.; Kunimatsu, J.; Tanaka, M. Correlation between Pupil Size and Subjective Passage of Time in Non-Human Primates. J. Neurosci. 2016, 36, 11331–11337.

- Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; University of Florida: Gainesville, FL, USA, 2008.

- Tottenham, N.; Tanaka, J.W.; Leon, A.C.; McCarry, T.; Nurse, M.; Hare, T.A.; Marcus, D.J.; Westerlund, A.; Casey, B.J.; Nelson, C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Res. 2009, 168, 242–249.

- Maréchal, L.; Levy, X.; Meints, K.; Majolo, B. Experience-based human perception of facial expressions in Barbary macaques (Macaca sylvanus). PeerJ 2017, 5, e3413.

- Micheletta, J.; Whitehouse, J.; Parr, L.A.; Waller, B.M. Facial expression recognition in crested macaques (Macaca nigra). Anim. Cogn. 2015, 18, 985–990.

- Morozov, A.; Parr, L.A.; Gothard, K.; Paz, R.; Pryluk, R. Automatic Recognition of Macaque Facial Expressions for Detection of Affective States. eNeuro 2021, 8, 1–16.

- Wang, L.; Zhang, B.; Lu, X.; Wang, R.; Ma, J.; Chen, Y.; Zhou, Y.; Dai, J.; Jiang, Y. Genetic and Neuronal Basis for Facial Emotion Perception in Humans and Macaques. Natl. Sci. Rev. 2024, 11, nwae381.

- Willenbockel, V.; Sadr, J.; Fiset, D.; Horne, G.O.; Gosselin, F.; Tanaka, J.W. Controlling low-level image properties: The SHINE toolbox. Behav. Res. Methods 2010, 42, 671–684.

- Isbell, L.A. Snakes as agents of evolutionary change in primate brains. J. Hum. Evol. 2006, 51, 1–35.

- Isbell, L.A. Predation on primates: Ecological patterns and evolutionary consequences. Evol. Anthropol. Issues News Rev. 1994, 3, 61–71.

- Parr, L.A.; Waller, B.M.; Vick, S.J.; Bard, K.A. Classifying chimpanzee facial expressions using muscle action. Emotion 2007, 7, 172–181.

- Swystun, A.G.; Logan, A.J. Quantifying the effect of viewpoint changes on sensitivity to face identity. Vis. Res. 2019, 165, 1–12.

- Lee, Y.; Matsumiya, K.; Wilson, H.R. Size-invariant but viewpoint-dependent representation of faces. Vis. Res. 2006, 46, 1901–1910.

- Hill, H.; Schyns, P.G.; Akamatsu, S. Information and viewpoint dependence in face recognition. Cognition 1997, 62, 201–222.

- Guo, K.; Shaw, H. Face in profile view reduces perceived facial expression intensity: An eye-tracking study. Acta Psychol. 2015, 155, 19–28.

- Ewbank, M.P.; Smith, W.A.; Hancock, E.R.; Andrews, T.J. The M170 reflects a viewpoint-dependent representation for both familiar and unfamiliar faces. Cereb. Cortex 2008, 18, 364–370.

- Magnuski, M.; Gola, M. It’s not only in the eyes: Nonlinear relationship between face orientation and N170 amplitude irrespective of eye presence. Int. J. Psychophysiol. 2013, 89, 358–365.

- Jacques, C.; Rossion, B. Misaligning face halves increases and delays the N170 specifically for upright faces: Implications for the nature of early face representations. Brain Res. 2010, 1318, 96–109.

- Orczyk, J.; Schroeder, C.E.; Abeles, I.Y.; Gomez-Ramirez, M.; Butler, P.D.; Kajikawa, Y. Comparison of Scalp ERP to Faces in Macaques and Humans. Front. Syst. Neurosci. 2021, 15, 667611.

- Kardon, R. Pupillary light reflex. Curr. Opin. Ophthalmol. 1995, 6, 20–26.

- Menzel, C.; Hayn-Leichsenring, G.U.; Langner, O.; Wiese, H.; Redies, C. Fourier power spectrum characteristics of face photographs: Attractiveness perception depends on low-level image properties. PLoS ONE 2015, 10, e0122801.

- Barros, F.; Soares, S.C.; Rocha, M.; Bem-Haja, P.; Silva, S.; Lundqvist, D. The angry versus happy recognition advantage: The role of emotional and physical properties. Psychol. Res. 2023, 87, 108–123.

- Huang, T.; Xu, H.; Wang, H.; Huang, H.; Xu, Y.; Li, B.; Hong, S.; Feng, G.; Kui, S.; Liu, G.; et al. Artificial intelligence for medicine: Progress, challenges, and perspectives. Innov. Med. 2023, 1, 100030. Available online: https://www.the-innovation.org/medicine//article/doi/10.59717/j.xinn-med.2023.100030 (accessed on 15 September 2023).

- Feng, G.; Xu, H.; Wan, S.; Wang, H.; Chen, X.; Magari, R.; Han, Y.; Wei, Y.; Gu, H. Twelve practical recommendations for developing and applying clinical predictive models. Innov. Med. 2024, 2, 100105. Available online: https://www.the-innovation.org/medicine//article/doi/10.59717/j.xinn-med.2024.100105 (accessed on 6 December 2024).

| Study | Objective Measures | Subjects | Experimental Materials | Explores Cross-Species Effects? | Controls Low-Level Properties? | Compares Effects of Viewpoint? | Compares Correlation with Gaze Duration? |
|---|---|---|---|---|---|---|---|
| Chang YH et al., 2024 [31] | Pupil size | Human | Images and words | No | Not mentioned | No | No |
| Lee CL et al., 2023 [32] | Pupil size | Human | Videos | No | Not mentioned | No | No |
| Pan J et al., 2024 [33] | Pupil size | Human | Auditory stimuli | No | Yes | No | No |
| Yuan T et al., 2024 [34] | Pupil size | Human | Motion visual stimuli | No | Yes | No | No |
| Yu P et al., 2024 [35] | Pupil size | Human | Images | Yes | Yes | No | No |
| Bonino G et al., 2024 [36] | Pupil size | Human | Touch | No | Not mentioned | No | No |
| Current study | Pupil size | NHP | Images | Yes | Yes | Yes | Yes |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Zhang, Z.; Dai, J. Evaluating Pupillometry as a Tool for Assessing Facial and Emotional Processing in Nonhuman Primates. Appl. Sci. 2025, 15, 3022. https://doi.org/10.3390/app15063022