Abstract

This review addresses the rapidly developing area of integrating robotic technology with extended reality techniques. It analyzes existing extended reality technologies, categorizing them as virtual reality, augmented reality, and mixed reality. These technologies differ in the graphics they produce but share similar hardware, software, and functional issues. The research methodology is presented in a separate section, which states the scope of the research, the keywords used, and the criteria for paper selection. A brief classification of extended reality issues provides a systematic approach to these techniques and reveals their applications in robotics, with a focus on mobile robotic technology. Each section of the reviewed field gives an original issue classification in table format. Finally, the general outcome of the review is summarized in the discussion, and conclusions are drawn.

1. Introduction

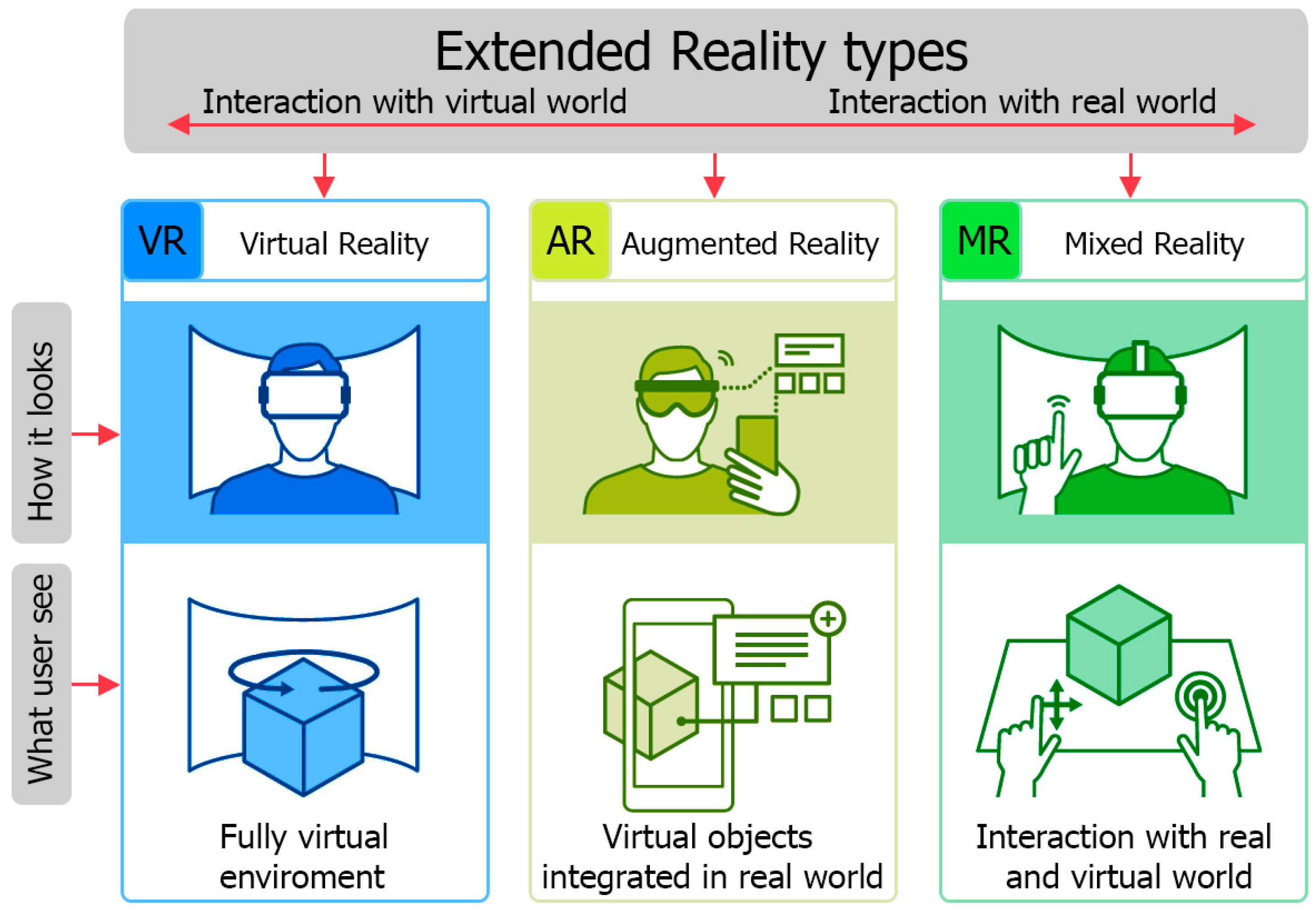

Extended reality (XR) is a rapidly developing technology that provides useful tools for displaying and interacting with information in both the real and virtual worlds [1]. Extended reality includes virtual reality (VR), where the user sees only the virtual world and not the real one; augmented reality (AR), where the user sees computer graphics superimposed on the real world; and mixed reality (MR), where the user can additionally see and interact with both real and virtual objects simultaneously. All of these use computer graphics to visualize information. As these technologies develop, they are finding applications in various fields, including in combination with robots. They offer new opportunities for visualizing equipment before installation and for simulating and controlling its operation, which puts them in demand in industries ranging from manufacturing to entertainment. One of the key areas of application is education and training [2]. XR technologies make it possible to create interactive environments in the virtual world and to complement the real one, allowing safe simulation of real conditions for practical training. In robotics, such approaches are used to train operators and engineers to work with complex systems. For example, XR can improve the operator’s understanding of assigned tasks in remote work [3], support robot path planning to find the shortest route that avoids all obstacles [4], and simulate robot movements in a virtual environment, making the human–machine interface (HMI) more user-friendly and natural [5]. This can improve the speed, quality, and safety of work, including tasks with robots and their path planning, which is particularly relevant for modern factories [6]. Augmented reality can improve the training process for a new employee.
For example, it can show the assembly instructions for mechanisms and their settings, as well as all the necessary documentation [7], which increases the speed of training and reduces the likelihood of errors. In production or service centers, these systems can show equipment parameters such as temperature, pressure, percentage of part wear, the date of the previous or predicted replacement, and which part will need maintenance. This can speed up maintenance and diagnostics through interactive instructions, pre-ordered parts or consumables, and clear maintenance planning, which is part of Industry 4.0 systems [7]. The implementation of augmented reality systems, together with systems that improve safety in production, brings us closer to Industry 5.0 systems, where a person is part of the working system and interacts with an automated production line and robots. The use of a VR head-mounted display (HMD) improves spatial awareness when teleoperating a robot [5], since the operator sees a stereo image and controls the view simply by turning their head, leaving both hands free for control [6,8]. Teleoperation is particularly relevant in areas dangerous to humans, such as deep-sea work, work in space, and demining or rescue operations. It is used in bomb disposal robots and reconnaissance robots, where the safety of the mission must be ensured [9], as well as in robotic surgery, where the surgeon remotely controls the robot that performs the operation [10]. The main problem is that programming robots often takes a lot of time and requires high qualifications [11]. The usual approach is to control the robot manually or to input coordinates step by step, teaching the robot a path. Some mobile robots can use a 2D floor plan to create a map for navigation. Navigation is very important for the correct localization of mobile robots.
Navigation is often used in industrial autonomous mobile robots (AMRs) for fast and accurate internal logistics in production, as well as in home robotic vacuum cleaners for bypassing obstacles and cleaning the entire area without missing any spots [12]. However, relying on a pre-made map means that not all the data about the real state of the environment are collected, and every part of the robot’s path needs to be checked. This process is time-consuming and does not always give the right result the first time. It is particularly important to check whether the robot can follow the created path, or whether some obstacles will block robots with certain characteristics while other models can pass. XR can also detect and display more information about the environment to create a real-time map or to control the mobile robot remotely [5,8]. Creating a map using XR can provide more detailed and exact information about the environment, including all obstacles and the real locations of objects. This technology can help improve the quality of mobile robot control, improve the robot’s path, and prevent accidents or added costs.
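
The path check described above can be illustrated with a minimal sketch. It is not a method taken from the reviewed papers: it assumes a hypothetical 2D floor plan stored as an occupancy grid (`1` = obstacle, `0` = free), approximates the robot’s size by a radius in grid cells, and uses breadth-first search as a stand-in for whatever planner a real robot uses. The names `FLOOR_PLAN`, `inflate`, and `shortest_path` are illustrative assumptions.

```python
from collections import deque

# Hypothetical floor plan: a wall (column 3) with a one-cell-wide doorway in row 2.
FLOOR_PLAN = [
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0],
]

def inflate(grid, radius):
    """Block every cell within `radius` cells of an obstacle (robot footprint)."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                for dr in range(-radius, radius + 1):
                    for dc in range(-radius, radius + 1):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < rows and 0 <= cc < cols:
                            out[rr][cc] = 1
    return out

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None

# A small robot (radius 0) fits through the doorway; a larger one (radius 1) does not.
small_robot_path = shortest_path(inflate(FLOOR_PLAN, 0), (2, 0), (2, 6))
large_robot_path = shortest_path(inflate(FLOOR_PLAN, 1), (2, 0), (2, 6))
```

In this toy example the same floor plan yields a route for the smaller robot and no route for the larger one, which is the kind of model-dependent result that otherwise has to be verified on site.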

Augmented reality is also used in other areas. In medicine, it visualizes the surgical process for surgeons in real time and supports patient rehabilitation after surgery and staff training. In architecture and design, XR visualizes virtual buildings and interiors using BIM systems, helping with the development, simulation, and presentation of the project to the customer for approval. In navigation and tourism, augmented reality improves spatial orientation for visitors and provides interactive guides to attractions. The entertainment and multimedia industry is already actively implementing XR in applications ranging from virtual worlds for video games to interactive quests and fully immersive films. In military and rescue operations, XR helps train personnel, simulate extreme scenarios, and support the execution of operations, for example, in conditions of limited visibility or in a disaster area. Despite this enormous potential, the development and application of XR technologies face several challenges. One of the main challenges is the high cost of hardware and of developing specialized applications, which limits mass adoption. Ergonomic issues such as the usability of headsets and the risk of user fatigue during long-term operation are also relevant. Further implementation challenges include real-time synchronization of virtual and real data, high computational demands, and the size and weight of the device. Short battery life should also be mentioned as a technical limitation. Some application areas pose technically difficult problems that call for better solutions, such as navigation, path planning, monitoring, and troubleshooting of mobile ground robots, to ensure efficient, comfortable, and safe work with robots [12]. This review aims to identify effective modern methods of implementing extended reality systems, as well as the advantages and disadvantages of these systems.

This review covers the technical characteristics of augmented reality systems, their main software, mobile ground robot navigation applications, and related issues. It also describes the purpose and benefits of using mixed reality technology with mobile ground robots. The focus is on current methods of using augmented reality and its application in robotics. This information can help with further developments in this area.

2. Materials and Methods

The review was prepared by analyzing articles in the broader areas related to extended reality. It includes papers describing the implementation of extended reality in different areas, including robotics and manufacturing, with a focus on mobile robotics. The review covers research published from 2020 to 2024.

The references for the analysis were taken from public sources. Well-known databases were searched: Scopus, Google Scholar, Engineering Village, and IEEE Xplore.

The search was performed using these keywords: robot, mobile robot, cobot, collaborative robot, virtual reality, augmented reality, mixed reality, extended reality, digital twin, manufacturing, education, medicine, military, SLAM, navigation, simulation, and visualization.

The number of articles published in this field was significant. In total, 536 articles were analyzed.

The criteria for selecting papers in this review were as follows:

- (a) Papers were chosen based on keywords due to related content;

- (b) Papers were chosen based on quality parameters, which include indexing in databases like Web of Science (WoS), Scopus, and IEEE; regular papers have priority over proceedings and conference papers;

- (c) Papers were chosen based on the importance of paper content to discuss issues within the review.

After the analysis, 162 articles were selected. Duplicates were then excluded, and where several versions of a work existed, the one covering the topic most completely was retained. As a result, 102 sources were used in this review.

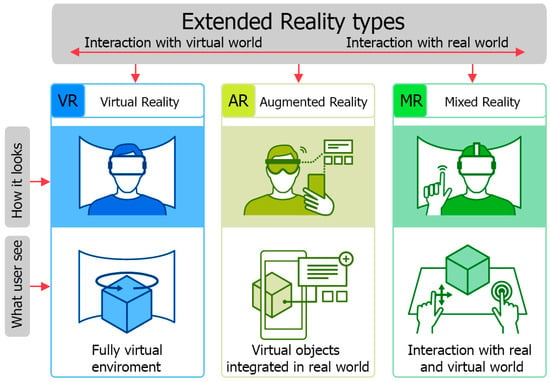

3. Applications of Extended Reality

Extended reality, XR or X reality, is a general name for several technologies, where X stands for current or future visualization systems that connect the real world with virtual visualization using computer graphics, head-mounted displays, sensors, and cameras. Together, these give the user a more natural perception of information by visualizing it with virtual and augmented methods. The differences among the XR systems are illustrated in Figure 1, which is adapted from [13]. The illustration shows a scale indicating the degree of interaction of each system with the real and virtual worlds. VR systems immerse the user in a completely virtual environment that is unrelated to the real world, apart from functions that warn the user about collisions with real-world obstacles, which are needed because the device completely blocks the view of the real world. AR systems, on the contrary, allow the user to see the real world with computer graphics superimposed on it, either through augmented reality glasses or through the camera and screen of a smartphone or tablet. MR is a combination of technologies that most often uses a head-mounted display like VR, but with cameras that capture the real world and show the picture with superimposed computer graphics like AR. In addition, hand motion capture or spatial controllers are used to interact with real and virtual objects. Of the systems presented, mixed reality is the most interactive with the real world. The areas of application of extended reality presented below were found using keyword searches of scientific databases, such as Scopus, Google Scholar, Engineering Village, and IEEE Xplore.

Figure 1. Extended reality types. Adapted from [13].

3.1. Extended Reality and Its Usage

Extended reality (XR) includes virtual reality (VR), augmented reality (AR), and mixed reality (MR) [7]. These systems cover a variety of applications, most commonly in education, architecture, design, entertainment, and media, where detailed and interactive visualization gives a better perception of information. In science, education, and engineering, XR is also used to simulate various processes, such as analyzing robot trajectories before programming a real robot, as well as for correction and safety testing. It is also used as the visual part of a digital twin system [14]. Examples of integrating each type of extended reality into technical solutions are provided below.

3.1.1. Virtual Reality and Its Usage

Virtual reality tools allow technical visualization of processes in 3D space, so they can be applied in different areas, ranging from displaying a virtual room on a regular monitor to a virtual world with interaction through a headset with spatial controllers [15]. Some examples of the use of virtual reality systems in different application areas are provided below.

- Education—A virtual workspace is especially useful because the user can see and interact intuitively. VR is used to create a virtual classroom, an environment that gives a better perception of the subject [16]. Gamification of educational material using VR engages students, which improves perception and learning [17]. Examples include visual demonstrations of processes in biology, chemistry, and technical subjects; virtual presence at important historical events; interactive travel to any corner of the earth when studying geography; and the study of astronomy with visualizations of the solar system and other planetary systems [2]. VR makes lessons more exciting and memorable using step-by-step methods without leaving the classroom [18]. Students can see and interact with virtual objects and conduct modeling experiments in virtual laboratories [19]. This can save money on equipment and consumables and allows safe experimentation. In the professional field, VR is used for visual safety training in industries such as construction, fire safety, aviation, and mining. This type of visualization leads to a better understanding and perception of safety measures [20].

- Medicine—VR can also be used for professional purposes. In medicine, it accounts for 81% of the XR technologies used [21]. These systems are often used to train doctors and to visualize the patient’s anatomical features when preparing and planning an operation, based on 3D models created from magnetic resonance imaging (MRI) and computed tomography (CT) scans. VR simulators are used to simulate operations and train surgeons without risk to patients, and the simulated operations can then be shown to an audience during the educational process. Thus, future doctors can safely study anatomy, physiology, and complex medical procedures in an interactive 3D environment. Such solutions make the educational process more visual and interactive [22]. Virtual reality is also used in robotic surgery, where it increases the accuracy of the operation and allows it to be carried out remotely, for example, in remote areas or in the case of an urgent operation [23].

Rehabilitation using VR, as one option for physical therapy, makes it easier for patients to recover from surgery by replacing standard exercises with engaging tasks that encourage patients to take an active part in rehabilitation. This technology has proven itself in the treatment of mental disorders, such as phobias and post-traumatic stress disorder [24], and is especially useful after amputation and for those who need to adapt to prostheses [25].

- Architecture and Design—Modern design almost always uses computer-aided design (CAD) systems that allow you to create structures in virtual space and perform simulations. One of these systems is called Building Information Modeling (BIM). This system is used in many architectural software packages, such as Autodesk Revit 2024 and ArchiCAD 28 [26]. The convenience of this system is that it creates a standard 3D model of the future project and allows one to show it on a computer or tablet screen, as well as using virtual reality glasses, which allows designers to virtually walk in the 3D models of buildings they have created and check them at the design stage. This simplifies the development process, making it more interactive and visual. Such visualization allows a better understanding of spaces’ scale, proportions, and functionality [27]. It also allows you to evaluate the design, functionality, and appearance of buildings when choosing apartments or houses, for example, for demonstration to buyers or tenants. This makes viewing objects quick and eliminates the need to go to them. Some clients may not have the skills to read drawings and may find it difficult to imagine how the building will look after construction or correctly estimate the scale of the building [28]. A virtual presentation of the project using a virtual reality headset can easily demonstrate how the project will look, and in case of additional wishes of the customer, slightly change the project on the fly, for example, change the texture of the walls, floor material, move sockets and switches, rearrange furniture, etc. [29].

- Navigation and Information—Modern navigation apps like Google Maps have a street view mode [30] that allows virtually walking around places all over the world, such as streets, museums, hotels, or natural attractions, to decide whether a place is worth visiting. This is useful for planning trips and finding unfamiliar places. Users can also add their own photos and videos to help future travelers. Some hotels and tour operators provide VR presentations of rooms, restaurants, attractions, and other infrastructure facilities to attract more customers [31]. Some museums, such as the Louvre Museum [32], also use virtual reality to show part of their exhibits, thus enticing tourists to visit and view the full collection. Museums also very often use virtual reality technologies to add interactivity to exhibitions and installations [33].

- Entertainment and Media—Three-dimensional computer games are a type of virtual simulation, as the gameplay takes place in a simulated virtual environment displayed on a regular monitor. HMD headsets allow for even greater immersion by tracking the user’s head [34], enabling hands-free control of the view. Adding HMD support to a 3D game and turning it into a full-fledged VR application thus allows for additional interactivity, such as the use of spatial controllers and/or hand motion capture, which transfers the player’s movements into the game and improves the gaming experience and immersion. Recently, many games have been developed with virtual reality support, and some were created only for VR, for example, Half-Life: Alyx [35]. Also, the modern sedentary lifestyle often leads to health problems, and VR can be one of the solutions. In some rhythm games, the player has to move in order to play, which can help people increase their activity. An example of such a game is Beat Saber, which positively affects players [36]. The use of additional human interface devices, such as a steering wheel with pedals or a joystick, allows VR to be applied not only to games but also to training with various simulators, for example, training forklift drivers or car driving instructors in a virtual environment [37].

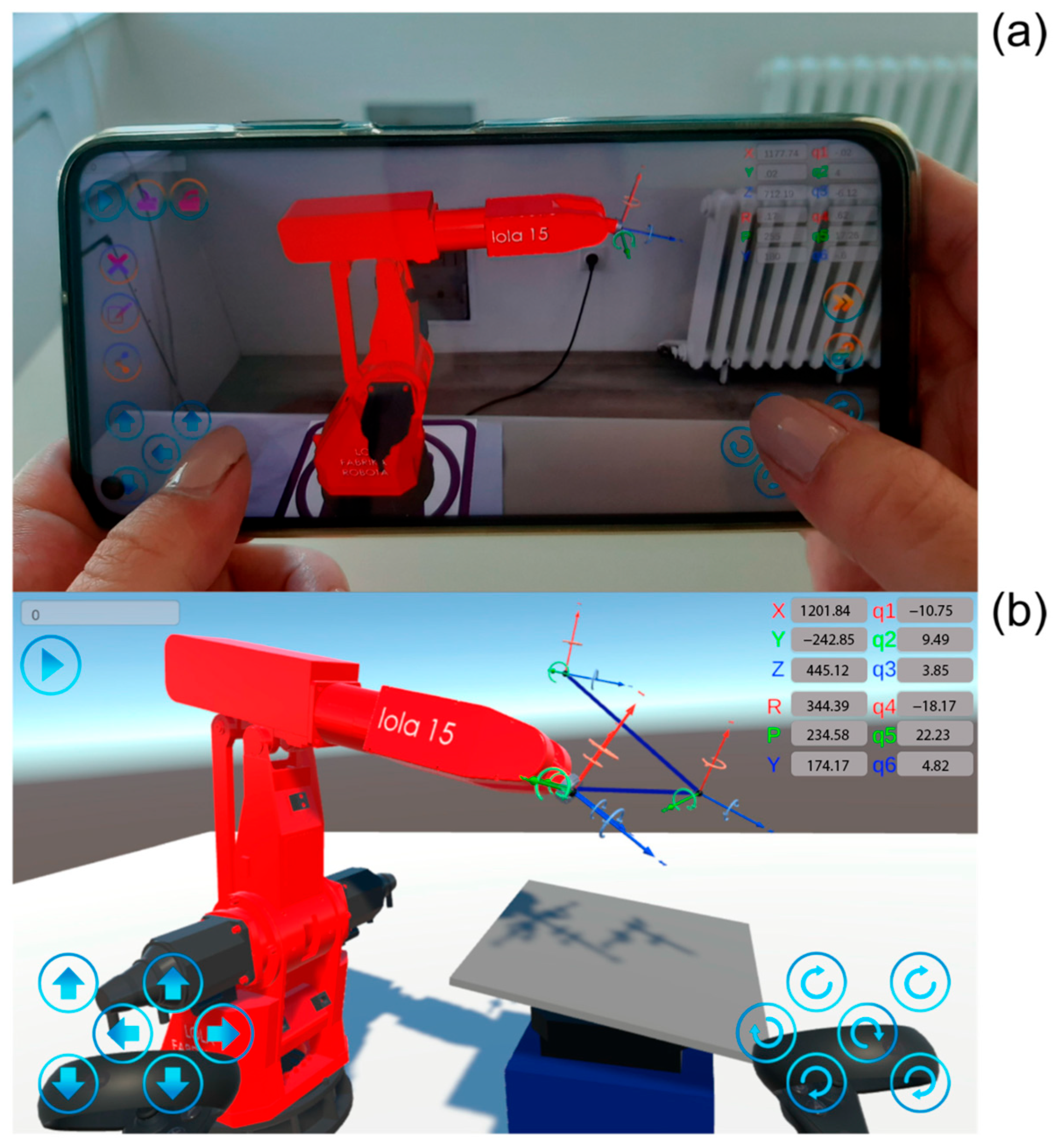

- Robotics and Manufacturing—Stationary robots are one of the main tools in manufacturing. They are often used for repetitive work. With added sensors, they can sort objects and place them correctly. A stationary robot can perform complex technological processes, and its configuration and program can be changed to perform new tasks.

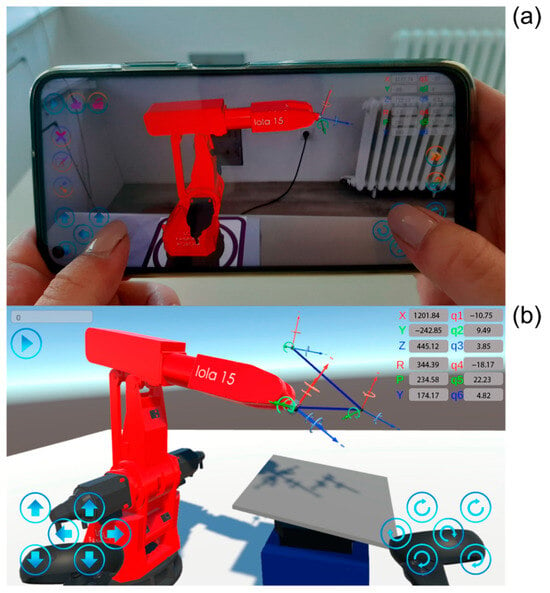

XR is a useful tool for monitoring robot tasks, controlling robot operation in real time, programming new tasks, and safely simulating the movements of stationary robots (Figure 2). This is useful for on-site or remote training of new staff to work with stationary robots [38,39]. Using VR, they can safely practice with a simulated robot in a virtual room, where the cost of an error is reduced to zero because all simulations are virtual.

Figure 2. (a) Augmented reality and (b) virtual reality robot programming and simulation [11].

Nowadays, mobile robots are widely used in various areas for transportation and for combined transportation and manipulation. They are used to deliver goods in workshops and warehouses and for automated parcel delivery. Recently, autonomous vehicles such as trucks, cars, and buses have also come to be classified as mobile robots. Automated transport, such as an automated subway or city bus, can already operate in autonomous mode. In household applications, robotic vacuum cleaners and robotic lawn mowers clean the floor or mow the lawn while people are at work or relaxing.

Controlling a mobile robot with an XR headset can provide more real-time information about the environment and the robot’s sensors than conventional methods. A virtual control room for controlling a legged mobile robot was tested and gave the operator a better and faster perception of space, which benefits remote control performance [8]. Tools have also been developed to create digital maps that are useful for operator and robot navigation [40]. During the COVID-19 pandemic, mobile robots were used in hospitals to monitor the condition of patients and deliver food and medications. Public areas and the interiors of public buildings were disinfected using various mobile robots carrying UV light sources, spray jets, and other tools. Some models were automatic, but most were controlled remotely. Some models used a virtual reality system for better orientation and interaction between the operator and the client [41].

- Military and Rescue Applications—Virtual reality technologies from games have found application in training soldiers and rescue teams. Soldiers can train using VR simulations of combat actions [20]. This technology is also relevant for rescuers, for example, for first aid training [42]. Bomb disposal robots and small reconnaissance robots help ensure the safety of soldiers and rescuers during reconnaissance in a rescue operation or when checking suspicious objects for explosives. Adding simultaneous localization and mapping (SLAM) systems to the robot and visualizing the collected data in VR can help the operator better understand a previously unknown situation and make better decisions [40].

In conclusion, virtual reality is an excellent method of visualizing virtual worlds, which allows learning, experimenting, and conducting simulations interactively and safely, even without experimental testing in real conditions.

3.1.2. Augmented Reality and Its Usage

Augmented reality technology visualizes virtual objects overlaid on the real world. The real environment is captured by a camera, and the camera’s position is tracked using sensors [34]. The computer system analyzes the images of the real world, adds the virtual object to the image, and shows the result on the screen of a computer, phone, or HMD headset. Additionally, modern HMD headsets can track hand and leg gestures to increase the usability of the AR interface [43]. Typical examples of AR applications in various fields are given below.
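
As a rough illustration of the overlay step, the sketch below projects a virtual 3D point into the camera image using a standard pinhole camera model. This is a simplified assumption for illustration, not the pipeline of any specific AR system cited here: lens distortion is ignored, the tracked camera pose is assumed to be given as a world-to-camera rotation matrix and translation vector, and the function name `project_point` and all numeric values are hypothetical.

```python
def project_point(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates (pinhole model, no distortion).

    rotation (3x3 nested list) and translation (3 values) map world coordinates
    to camera coordinates, as estimated by the device's tracking sensors.
    Returns (u, v), or None if the point lies behind the camera.
    """
    x, y, z = (
        sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # not visible: the virtual object is behind the image plane
    return (fx * x / z + cx, fy * y / z + cy)

# Hypothetical example: camera at the world origin looking along +Z,
# 640x480 image with a focal length of 500 pixels.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_point((0.2, -0.1, 2.0), IDENTITY, (0.0, 0.0, 0.0),
                      fx=500.0, fy=500.0, cx=320.0, cy=240.0)
behind = project_point((0.0, 0.0, -1.0), IDENTITY, (0.0, 0.0, 0.0),
                       fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

With these assumed values, the virtual point lands at roughly pixel (370, 215), where the graphics would be drawn on top of the camera frame. Each time the tracking updates the camera pose, the projection is recomputed so that the virtual object appears fixed in the real scene.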

- Education—AR can improve the quality of learning by showing more information in an interactive way than a regular book can provide. Some books or magazines may have special markers printed on them. By installing an app on your phone, you can see more information or “bring to life” the book with animated visualization. In school education, this technology can improve academic performance and make students more interested in learning a subject by explaining complex material in an engaging and interactive way [44].

- Navigation and Tourism—AR is gradually being introduced for navigation, much like projection (head-up) displays that help with navigation. Leading AR frameworks such as Google ARCore make it relatively easy to create augmented reality applications, which encourages the emergence of new ones. Based on this framework, the Google Maps application has an AR navigation mode. As with projection displays, Google Maps shows the direction of travel, but with the visualization tied to the real world. This makes navigation as clear as possible.

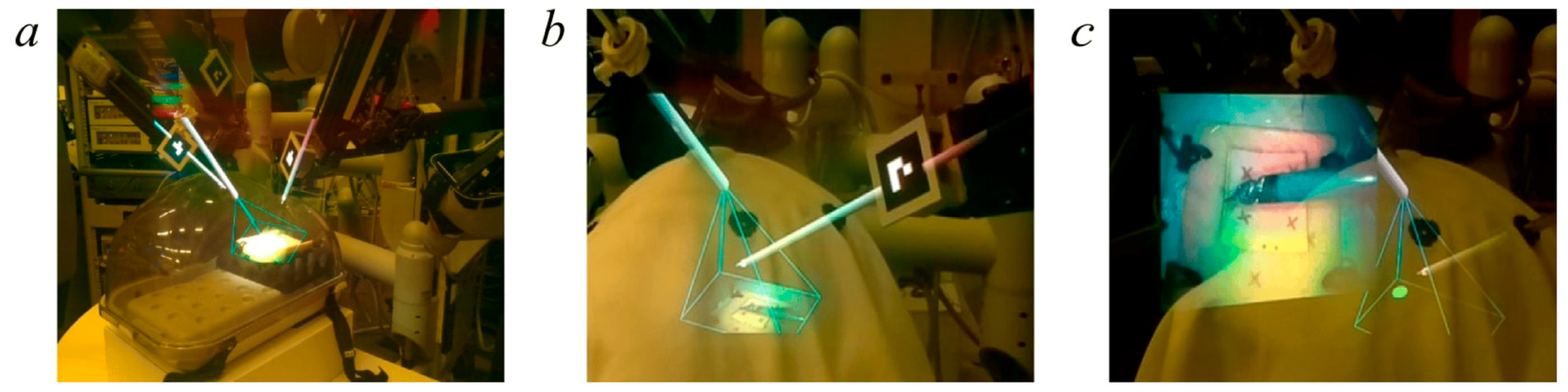

- Medicine—AR has proven effective in surgery, especially in ultrasound-guided interventions. It allows doctors to learn faster and reduces cognitive load, thereby making their work easier [45]. Surgery preparation can also be simplified using detailed 3D visualizations of the patient’s anatomical features [23] or by using a projector to visualize the internal operation process on top of the body [46], as shown in Figure 3. AR can also be used for direct control of robotic systems, remote surgery, or study [47].

Figure 3. AR visualization of an internal operation process on top of the body [46]. (a) Visualization of a transparent body phantom in ARssist. (b,c) Examples of AR-based visualization of endoscopy in ARssist.

- Assistance—Using an AR assistance system (Figure 4), non-qualified workers can complete the task while a qualified specialist is not on-site, for example, while on vacation or sick leave. Specialists or service help can provide AR instructions, like “go here”, “open this door”, “press that button”, and “enter these commands”. This helps in machine maintenance or fast problem solving [38].

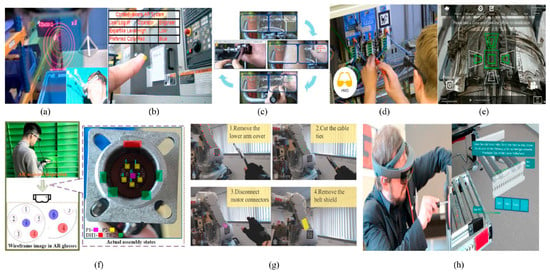

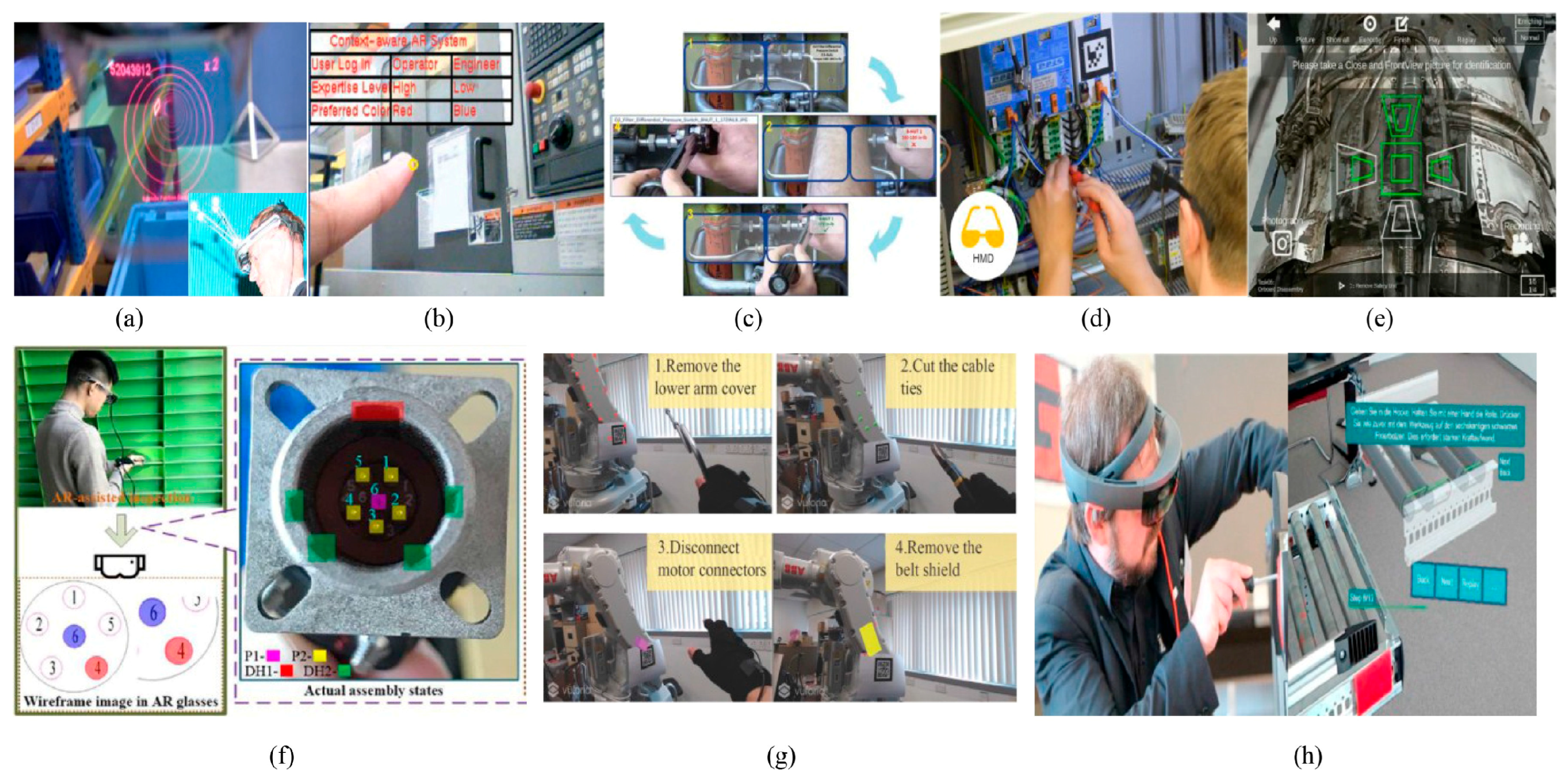

Figure 4. Operative assistance as well as manufacturing planning and maintenance using augmented reality [39]. (a) Pick-by-vision with HMD AR glasses. (b) Context-aware HMD AR-assisted maintenance. (c) Reducing maintenance error with AR glasses. (d) HMD AR-supported collaborative repair operations of industrial robots. (e) Adaptive AR instructions for complex industrial operations. (f) AR-assisted deep learning-based approach for automatic inspection. (g) HMD AR-based human-centric approach for maintenance. (h) AR in intralogistics.

- Manufacturing Planning and Maintenance—During the planning of a factory floor, augmented reality can visualize how an empty room will look with equipment installed, to check how it fits and whether it will be comfortable to use. Augmented reality is used with a tablet camera and integrated lidar to track a mobile robot’s position for planning and visual simulation of mobile robot routes or the operation of other equipment [48]. AR helps to see a virtual robot in conditions that are close to reality (Figure 2). It is also useful in technical support for quick problem solving. A modern use of XR is in collaboration between robots and humans [38]. Furthermore, in manufacturing, AR can help employees understand new tasks more easily and quickly by showing virtual schemes or task instructions overlaid on the real world [39]. A maintenance assistance system can show the remaining lifetime of equipment or provide personnel with the necessary repair plan, using predictive fault finding (Figure 5) [49].

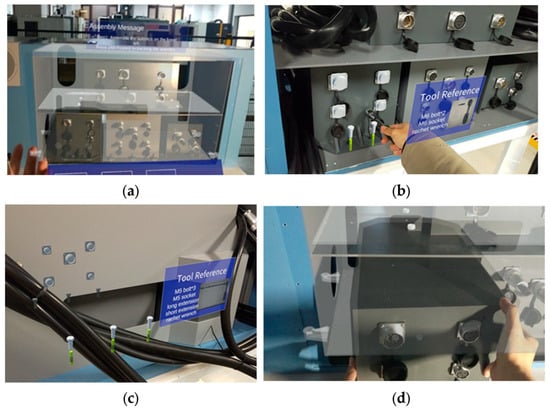

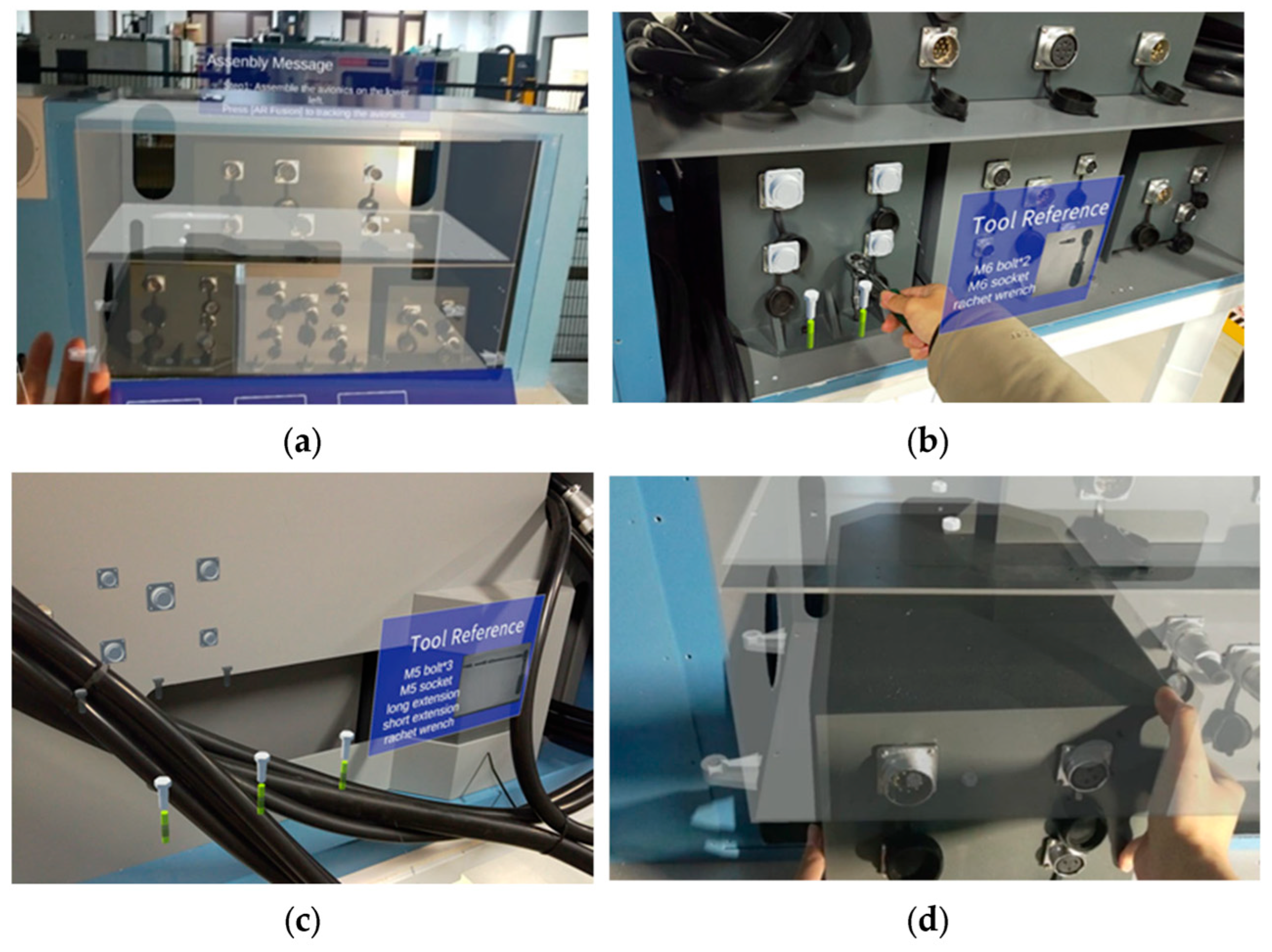

Figure 5. Example of AR-assisted visualization of guidance information for machine maintenance [50]. (a) Overlay of virtual information on the actual equipment bay; (b) bolt holes to be assembled are highlighted in yellow; (c) bolt holes that are occluded are highlighted in yellow; (d) the occluded area can be seen through the virtual model.

- Data Display—Head-up displays are also an element of augmented reality. This method of visualization is often found in modern fighters to display information about the combat situation, targets, targeting systems, radars, and parameters of internal systems [51]. Some models of cars are equipped with a head-up display from the factory, which projects information onto the windshield or a transparent reflector screen to indicate the speed of the car, the direction of movement according to navigation, data on multimedia, phone calls, and various notifications. This allows you to move your gaze minimally, without losing sight of the traffic situation, making driving safer and more comfortable [52]. Holographic displays are sometimes used in advertising, allowing you not only to display an image but to superimpose it on an object standing behind the screen, thereby supplementing it with effects or a description and attracting the attention of potential buyers with an unusual appearance [53].

- Architecture and Design—In architecture, AR is used to visualize structures, arrange furniture, and design based on a real room. This allows engineers and designers to clearly show the customer or designer how the construction process will look at different stages or the final result, namely to evaluate the project before construction begins [54]. This method allows the customer to better understand the project and avoid alterations at the construction stage. An interesting solution was presented in an article [55] where the researchers used a mobile robot that navigates in space and has a built-in projector that projects the necessary drawings from a 3D BIM model in real scale onto the wall. The system automatically adjusts the image to the surface, even a curved one, regardless of where the robot with the projector is placed. It can show the electrical wiring diagram; the location of pipes for water and sewage; and the heating, air conditioning, and ventilation systems. This system allows information to be visualized without the need to wear or hold the device. Furthermore, information can be seen by several people simultaneously. For workers, the use of AR helps them navigate large spaces and check tasks that need to be completed in a specific area to avoid errors and improve the use of time and resources. It also offers the ability to watch the construction work process remotely [56].

In conclusion, augmented reality is an excellent method of visualizing virtual objects in the real world, which is used in many applications such as architecture, navigation, tourism, education, medicine, assistance, and production. As an assistance tool, AR simplifies and accelerates the understanding of tasks, learning, experimenting, and conducting simulations interactively.

3.1.3. Mixed Reality and Its Usage

Mixed reality is similar to augmented reality but offers more interaction between the virtual and real environments. Augmented reality usually only displays content and tracks the position of the viewing device. Mixed reality usually involves an HMD headset with a set of color and time-of-flight (ToF) cameras and handheld spatial controllers [57]. Overall, mixed reality systems can make a 3D scan of the environment [58] and recognize real objects, which allows users to interact with them with virtual assistance.

- Education—MR systems can be used to train new employees quickly and to check their work for errors [59,60]. Using a digital twin of a robot or other machine, it is possible to study in a half-real environment, for example, a simulation of a virtual production line with a real robotic manipulator or a simulation of human safety and ergonomics [61].

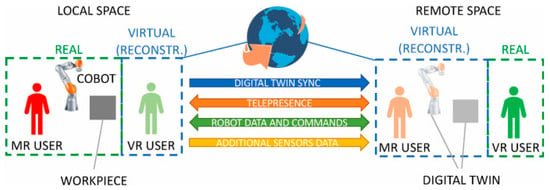

- Production Planning and Maintenance—The use of mixed reality technology has found its application in Industry 5.0 concepts, where the emphasis is on the interaction of humans and machines (Figure 6). This concept has become an incentive for the development of collaborative robots (cobots). They are increasingly used in modern production to improve and ensure the necessary safety of workers during work [62].

Figure 6.

Digital twin and collaborative robot work diagram [9].

Conventional robots used in manufacturing are often dangerous because they may run at high speeds, handle dangerous attachments, work in hostile environments, or lack sensors to detect human actions that could put the person at risk. Collaborative robot control systems are similar to regular robot control systems but add safety and XR features that make it possible to work together with people [63]. These systems can use an HMD or projector to show the operator work instructions, what the robot plans to do, and its status, in order to prevent accidents [64]. For example, conventional robots in production simply run a pre-programmed routine, possibly corrected using sensor data. Adding a system of cameras and sensors, as well as a special controller, allows artificial intelligence to track human actions and facilitates safe interactions between humans and robots [61]. Robots used for such tasks usually have lower speed and power. This allows them to be used for simple tasks that can help a person, such as easing the movement of objects or packaging. One example of MR usage is the digital twin method of communication between robot and user.

- Military and Rescue Applications—Mixed reality can be used for reconnaissance, automatic creation of a map in real-time, and interactive control of a robot for military purposes or for rescue operations when it is necessary to safely explore a location with rubble and find people, for example, after earthquakes. The article [40] shows a method for interactively visualizing a real-time map and the robot’s position, as well as an intuitive way to specify points for the robot to move.

Research performed on the subject reveals that mixed reality is an excellent method of visualizing virtual objects in the real world and interacting with both worlds using hand gestures or spatial controllers. In addition, it has many applications, such as education, production, and military use. MR helps in collaboration with cobots by simplifying and accelerating the understanding of robot tasks, learning, experimenting, and conducting simulations interactively.

3.2. Implementation of Extended Reality Systems in Robotics

Extended reality is a convenient tool for understanding information about robot operations easily and intuitively through visualization. It makes it possible to see the robot’s movement trajectory during simulation. Such a simulation can run at natural or accelerated speed, which saves time when preparing a robot program before real field testing.
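As a minimal sketch of this idea, the snippet below integrates a simple unicycle model of a mobile robot and estimates how long a visualization of the resulting trajectory would take at a given playback speed. The motion model, the function names, and all numeric values are illustrative assumptions and are not taken from the reviewed systems.

```python
import math


def simulate_unicycle(v, omega, duration, dt=0.01):
    """Integrate a unicycle model with constant linear speed v (m/s) and
    angular speed omega (rad/s). Returns a list of (t, x, y, heading)."""
    x = y = heading = 0.0
    path = [(0.0, x, y, heading)]
    steps = round(duration / dt)
    for i in range(1, steps + 1):
        # Explicit Euler step: move along the current heading, then turn.
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        heading += omega * dt
        path.append((i * dt, x, y, heading))
    return path


def playback_time(sim_duration, speed_factor):
    """Wall-clock time needed to replay sim_duration seconds of simulation
    when the visualization runs speed_factor times faster than real time."""
    return sim_duration / speed_factor


path = simulate_unicycle(v=1.0, omega=0.0, duration=2.0)
print(path[-1])                  # final pose after driving straight for 2 s
print(playback_time(60.0, 4.0))  # a 60 s task replayed at 4x speed
```

Replaying the same trajectory at a higher speed factor shortens the review time without changing the simulated path, which is the time saving referred to above.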

4. Hardware in Extended Reality

4.1. Main Types of Hardware in Extended Reality

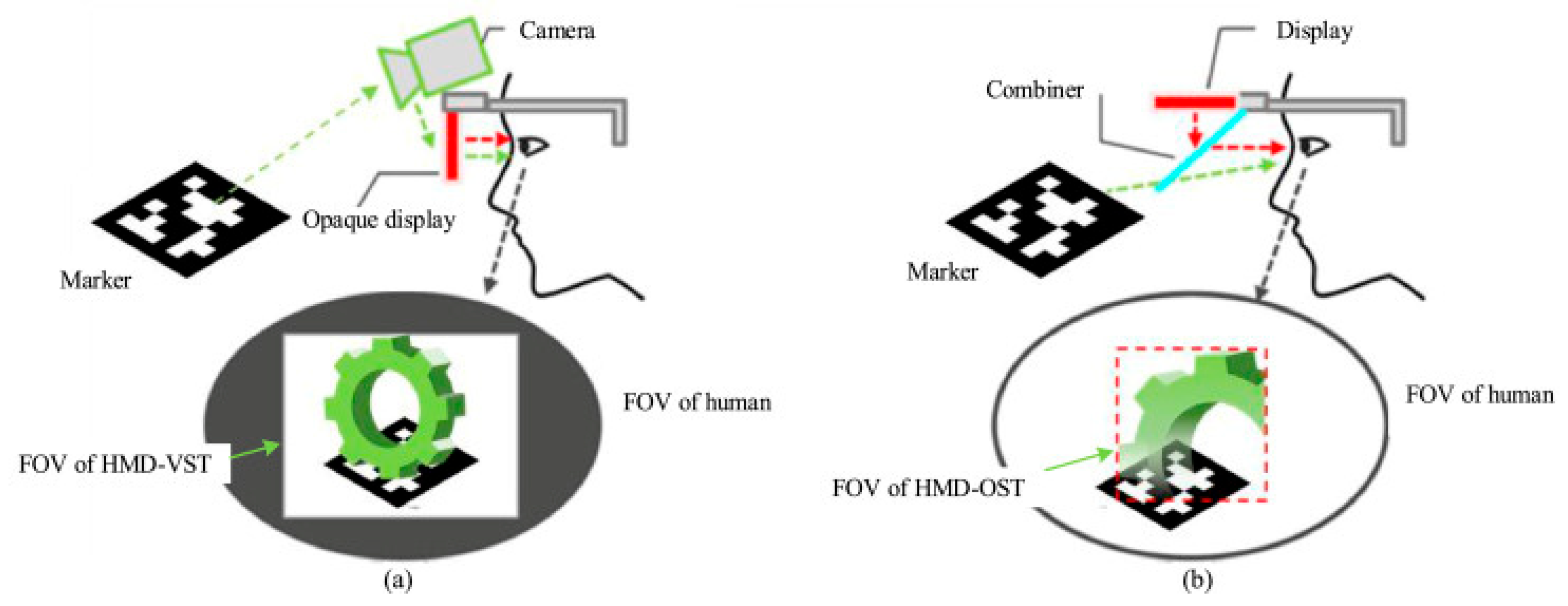

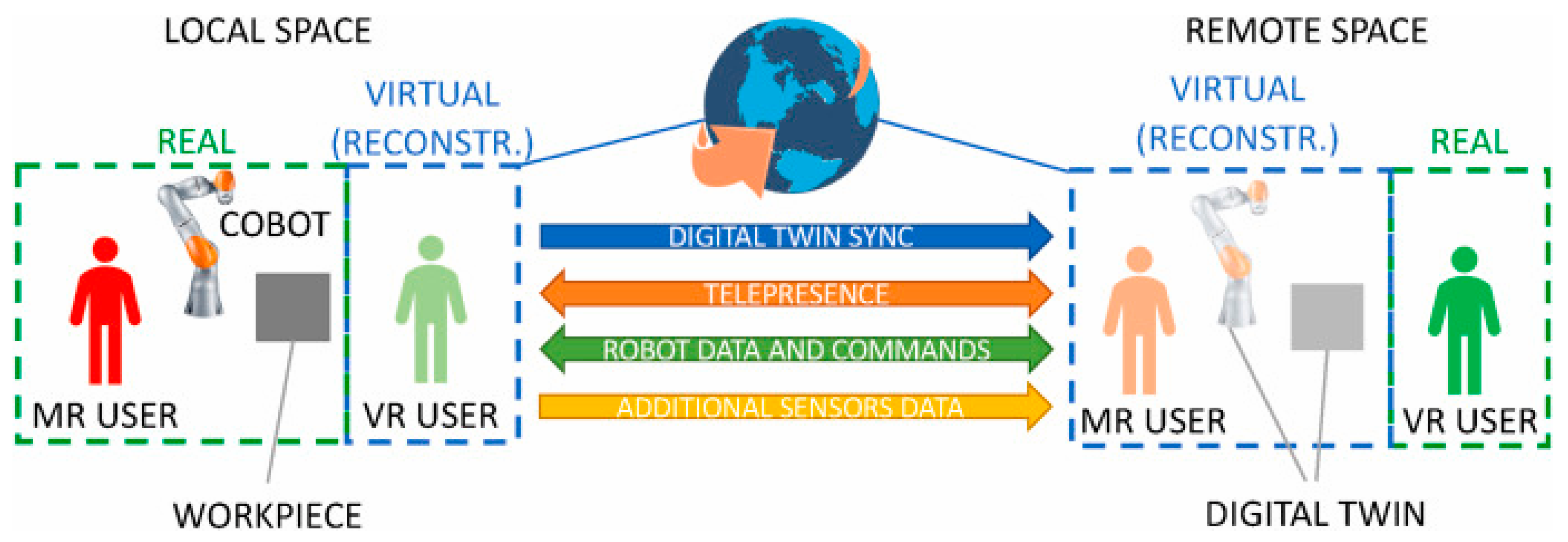

From the previously analyzed articles, information was obtained about the design features of the XR headsets. Extended reality includes virtual, augmented, and mixed reality technologies, but the method of visualization is different. Figure 7 graphically explains these differences.

Figure 7.

Differences in methods of visualization. (a) VR and MR headset with HMD. (b) AR and MR headset with optical combiner [39].

Virtual reality graphics are typically realized using head-mounted displays (HMDs), which show the simulation or game in a virtual room or space not connected to the real world. This is comparable to the camera view in 3D computer games: when the user moves their head, the sensors of the HMD detect the movement and send the coordinates to the computer, and the virtual camera that views the virtual game world or computer simulation is rotated by the same angle. The user therefore sees a view that is not connected with the real world but is shown in virtual reality. VR HMDs can be just a binocular display without movement tracking, or they can include tracking, which adds interactive features to visualizations. VR is widely used in computer games and simulators, for example, with VR helmets with spatial controllers such as PlayStation VR (Sony Interactive Entertainment, Tokyo, Japan) or Oculus Rift (Oculus VR, Menlo Park, CA, USA). These helmets can track head and hand movements, which improves the game’s interactivity and immersion in virtual space. Examples of VR headset units are the Oculus Rift, Samsung Gear VR (Samsung Electronics, Suwon, Republic of Korea), and Google Cardboard (Google Inc., Mountain View, CA, USA). The main hardware components are provided below:

- Main VR hardware: Head-mounted display (HMD).

- Additional VR hardware: Motion tracking of the HMD, spatial controllers, gamepad, joystick, steering wheel, keyboard, or mouse.
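The head-tracking principle described above for VR headsets, where the virtual camera is rotated by the same angle as the user’s head, can be sketched as follows. The axis convention (x right, y up, z forward) and the function name are assumptions made for illustration; real engines and headsets usually work with quaternions and also track position.

```python
import math


def view_direction(yaw_deg, pitch_deg):
    """Unit forward vector of the virtual camera for a given head yaw and
    pitch. Assumed convention: x right, y up, z forward; positive yaw turns
    the head to the right and positive pitch tilts it upward."""
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (
        math.sin(yaw) * math.cos(pitch),
        math.sin(pitch),
        math.cos(yaw) * math.cos(pitch),
    )


# The headset reports the head orientation; the camera simply copies it.
print(view_direction(0.0, 0.0))   # looking straight ahead
print(view_direction(90.0, 0.0))  # head turned a quarter turn to the right
```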

Augmented reality is a technology that shows additional information on top of the real-world environment, either on a device with a camera and screen (smartphone, tablet) or on an HMD headset with a camera and a projector with a special prism, as shown in Figure 7b. A well-known example of an AR headset is Google Glass; any mixed-reality headset can also be used. In basic versions of AR, the camera and special markers are used to position a virtual object on the screen of the device [15].

- Main AR hardware: Head-mounted display or projector and RGB camera.

- Additional AR hardware: Fiducial markers, ToF camera, or LiDAR.

Mixed reality is a combination of augmented reality and a body tracking system. For visualization, it can use a projector with a prism like in AR glasses or an HMD headset with integrated cameras to capture the real world. Both are shown in Figure 7. The best examples of MR headsets are Microsoft HoloLens, Meta Quest, HTC Vive, and Apple Vision Pro.

- Main MR hardware: Head-mounted display or projector and RGB camera.

- Additional AR hardware: Fiducial markers, ToF camera or LiDAR.

- Additional VR hardware: Motion tracking of HMD, spatial controllers, gamepad, joystick, steering wheel, keyboard, or mouse.

Extended reality gives the operator many ways to interact with virtual environments and objects. Headset tracking usually relies on sensor fusion of various types of sensors or sensor arrays. For example, the motion tracking system of the HTC Vive headset works through sensor fusion of inertial measurement units (IMU) and motion tracking using the Vive tracker system. The system defines the position using the time of arrival of the infrared (IR) signals, which are emitted as laser pulses from the base stations and detected by the photodiodes on the tracker [65].
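The timing principle can be illustrated with a short sketch that converts the delay between a synchronization pulse and the moment a sweeping laser hits a photodiode into an angle, and then turns two such angles into a ray direction. The rotation rate of 60 Hz, the function names, and the pinhole-style ray model are assumptions for illustration; the actual system also needs calibration offsets and several photodiodes to recover a full pose.

```python
import math


def sweep_angle(t_sync, t_hit, rotation_hz=60.0):
    """Angle (rad) swept by a rotating laser between the sync pulse at
    t_sync and the photodiode hit at t_hit (both in seconds).
    rotation_hz is an assumed rotor speed, not a value from the source."""
    return 2.0 * math.pi * rotation_hz * (t_hit - t_sync)


def ray_direction(horizontal, vertical):
    """Unit vector from the base station toward the photodiode, given the
    horizontal and vertical sweep angles (rad) measured from the optical
    axis, i.e. after subtracting a device-specific offset (not modelled)."""
    x, y, z = math.tan(horizontal), math.tan(vertical), 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)


angle = sweep_angle(t_sync=0.0, t_hit=1.0 / 240.0)
print(angle)                    # a quarter of a rotation at 60 Hz
print(ray_direction(0.0, 0.0))  # sensor exactly on the optical axis
```

In a sensor fusion setup, such optical measurements would typically correct the drift of the faster IMU estimate.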

The main disadvantages of standalone headsets are their moderate performance and limited battery life. Modern standalone XR headsets have a working time of about 2 h. It can be extended with a larger battery, but this negatively affects the weight and ergonomics of the device. A possible solution is to use an external battery connected to the headset via a cable. Another pressing problem is that the equipment, especially the processor, quickly becomes obsolete and periodically requires updating. However, since most devices are made as all-in-one units, the entire device needs to be replaced. A possible solution is to use devices that only display the visualization while the data are processed by a computer or server; the headset then acts only as a screen with sensors. In this way, it is possible to upgrade the computer, improve the quality of the virtual world, and continue using the same XR device. In such systems, communication plays a significant role [66]. The latency of an XR-based system depends on the speed of the connection, the calculation time, and the detection latency [14,60].
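A rough way to reason about these three latency contributions is a simple additive budget, sketched below. The additive model, the function names, and the example numbers are assumptions for illustration only; real pipelines overlap stages and add encoding, decoding, and display delays.

```python
def transmission_ms(frame_bits, link_mbps):
    """Time in milliseconds to send one frame of frame_bits bits over a
    link with a throughput of link_mbps megabits per second."""
    return frame_bits / (link_mbps * 1e6) * 1e3


def total_latency_ms(detection_ms, compute_ms, frame_bits, link_mbps):
    """Additive latency estimate: sensor detection + remote computation
    + transmission of the resulting frame to the headset."""
    return detection_ms + compute_ms + transmission_ms(frame_bits, link_mbps)


# Hypothetical example: 5 ms tracking, 8 ms rendering on the server,
# a 4 Mbit compressed frame sent over a 400 Mbps wireless link.
print(total_latency_ms(5.0, 8.0, 4_000_000, 400.0))
```

Under this model, a faster link only helps until the detection and computation terms dominate, which is why all three factors are usually considered together.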

Existing hardware types and configurations are similar among all types of extended reality; nevertheless, virtual reality headsets often have no cameras and provide no image fusion to date.

4.2. Existing Main Forms of Interface

After analyzing the articles, the most popular devices for XR visualization were identified; they are described and compared below. Extended reality offers a variety of ways to interact with graphical, auditory, or sensory information. Examples of XR headsets that are most often used as hardware are shown in Figure 8.

All-In-One HMD—The Meta Quest is a standalone mixed reality HMD device with an integrated IMU and camera system for visual SLAM localization. The cameras can detect user hand gestures to control visualization without controllers. The integrated CPU is powerful enough to run medium-quality mixed reality simulations and games, but the battery life is about 2.5 h. Also, the headset supports connection to the computer via Wi-Fi. Connecting via cable removes the battery limitations, but limits the user’s movements [67]. This device is quite advanced, especially for the price of USD 500. It is also quite compact and ergonomic, weighing 515 g [68].

HMD with Sensors—HTC Vive Pro 2 is also an HMD device with an integrated IMU and camera system. It also works in MR, AR, and VR modes, but it does not have an integrated CPU. This headset always needs to be connected to a PC and provides high-quality visualizations or precisely calculated simulations. Its only disadvantage is that the user is always tethered to a PC and limited in movement. The device costs USD 800 and is quite large, weighing 850 g, which is heavy for the head [69].

HMD without Sensors—The Oculus Rift is only an HMD headset. This device only has a screen and uses infrared (IR) LEDs distributed over its entire body for tracking, so it only provides a VR experience. To track its position, an external IR camera follows the IR points on the headset. Examples of such devices are the first versions of the Oculus Rift DK1 and DK2. The weight of the device is 470 g, and the price is USD 300.

Standalone with Prismatic Combiner—Microsoft HoloLens is an AR and MR headset where the user always sees the real world, and virtual data are projected through a special prism. The two views are combined so that the user sees the real world with virtual objects in it. It uses cameras and an IMU to localize the position of the headset [67,70]. The headset is often used in scientific papers and experiments due to its good support and costs USD 3500. The device is compact and ergonomic and weighs 566 g [71].

Smartphones—Modern smartphones are also suitable for VR and AR visualization. They have sufficiently powerful processors and high-quality cameras to process data in real time. For AR, a smartphone alone is enough. For VR, however, the smartphone needs a special adapter with lenses, like Google Cardboard or Samsung Gear VR, for comfortable use. Due to their low weight, about 100–300 g, such devices are ergonomic and comfortable to use. This is the cheapest way to get a VR experience, starting from EUR 10 not including the smartphone [2], but it has the disadvantages of low screen resolution and short battery life due to the heavy load on the phone’s processor. There are also usually no spatial controllers, or there is only one, as with Samsung Gear VR, which worsens control and immersion in the VR environment.

Regular Monitor and Camera—This is an old and straightforward way to get an AR experience. Usually, a graphic marker printed on paper was read by a program using a web camera. The computer processed everything and displayed the camera picture on the monitor with a virtual object superimposed on it.

Figure 8.

Examples of XR headsets: 1. Meta Quest 3 [68], 2. HTC Vive [69], 3. Microsoft HoloLens [70], 4. Samsung Gear VR [72].

Extended reality systems have several types of user interfaces with headsets. Examples of interfaces used in XR headsets are provided in Table 1.

Table 1.

Classification of interface types in XR.

In conclusion, modern devices include various sensors, high-quality screens, and data processing algorithms, which allow them to navigate in space quite accurately, recognize hand gestures, and scan rooms in 3D. Each type of equipment is good for certain purposes. Phones are portable and always at hand for AR. All-in-one HMDs are suitable for most XR tasks, and prismatic devices are used when it is very important to see the real world without changes. Devices powered by a computer are necessary for high-quality detailed visualizations requiring high computing power.

5. Software in XR

5.1. Types of Software by Implemented Platform

Many types of extended reality HMDs exist, either standalone or connected to a computer. Standalone devices like Meta Quest usually run a modified version of Android OS. This includes a special algorithm for visual “Simultaneous Localization and Mapping” (vSLAM) [73,74]. This algorithm gives the headset the ability to create a map of the working environment using cameras and to track the user’s position in a room. It can also detect objects and markers. The created map allows the user to navigate in that environment using virtual coordinates and rotation angles. With each additional scanning pass through the same place, the detail of the map improves.
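Why repeated passes improve the map can be illustrated with a deliberately simplified sketch in which each map point is refined by averaging repeated noisy observations. This is not how production vSLAM systems work internally (they typically use filtering or bundle adjustment); the class name and the averaging rule are assumptions chosen only to show the effect.

```python
class Landmark:
    """Running estimate of one 3D map point from repeated observations."""

    def __init__(self):
        self.count = 0
        self.position = (0.0, 0.0, 0.0)

    def observe(self, measurement):
        """Fold one new (x, y, z) measurement into the running mean."""
        self.count += 1
        self.position = tuple(
            p + (m - p) / self.count
            for p, m in zip(self.position, measurement)
        )
        return self.position


corner = Landmark()
corner.observe((1.0, 0.0, 0.0))         # first pass: a single noisy sample
print(corner.observe((3.0, 0.0, 0.0)))  # second pass pulls the estimate to the mean
```

With independent measurement noise, the error of such an averaged estimate shrinks as more passes are accumulated, which matches the qualitative behavior described above.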

There are many platforms for creating augmented reality applications, mostly based on real-time rendering engines. The most well-known are game engines such as Unity 3D and Unreal Engine [38]. Both provide tools to create XR programs and support various platforms, such as Windows, Mac OS, Android, iOS, PlayStation, and others. The two engines differ in focus. Unity is more open and focused on the rapid development of small games and applications, which is possible due to its exceptionally large developer community. In research, Unity 3D is usually used because it offers very flexible settings and many add-ons and makes it possible to create combined software and hardware systems easily and quickly. Thus, it is often used in various experiments [59,75]. The Unreal Engine is more focused on high-quality graphics and large, detailed virtual worlds, which can make it difficult to develop small applications. A big advantage of the Unreal Engine is its Blueprints, which allow the virtual objects in use to be programmed graphically. This makes it easier to understand the program being created and to create simple projects without much knowledge of programming. An overall comparison of the most popular 3D engines is shown in Table 2.

Table 2.

Classification of 3D engines for creating XR software.

The provided comparison of the 3D engines and the analysis of their capabilities and implementation offer some highlights. First, the Unreal Engine, whose source code is available for modification, is much more suitable for further modernization and can be explicitly integrated into a robotic system using ROS or by direct programming. In contrast, the Unity 3D engine is ready for the end user, but customization of the engine itself is hardly possible. As an outcome of the comparison, it can be said that both graphical engines have their own place in the field and do not replace each other.

5.2. Software Classification by Facilities and XR Toolkits

A toolkit is needed to create 3D applications, in addition to a 3D engine. An XR toolkit is a set of specialized software tools that are already configured and simplify the creation of various interactive visualizations from scratch.

Toolkits are used to interact with the equipment, interchange data from the headset sensors to the 3D engine, and then receive feedback in the form of an image. It is necessary to correctly interpret the data between the equipment and the 3D engine. The main XR toolkit features are provided below in Table 3.

Table 3.

XR toolkits classification by facilities and opportunities.

ARtoolkitX is an open-source toolkit that supports the most popular platforms and can be adapted for different tasks and prototypes. It is a continuation of ARToolKit, one of the first tools for creating and working with AR [76,77].

Google ARCore is a platform created by Google for building AR applications for Android devices. The platform can also detect planes and estimate depth, which allows AR to be used without graphic markers and virtual objects to be visualized on any surface [78].

Apple ARKit is a framework developed by Apple for creating AR applications for iOS devices. Like ARCore, this framework works using an object tracking method and does not need graphic markers [81].

Vuforia is a popular platform for creating AR, which integrates with Unity, a game engine that supports Windows, Android, and iOS. It is often used to create educational applications [94].

Unity XR is a comprehensive solution for VR, AR, and MR. Unity XR is a built-in module in Unity. It has an exceptionally large community and support for many devices and platforms, from Windows and Android to gaming consoles and augmented reality headsets. It has a powerful physics engine, which allows the movement of objects in XR to be processed realistically [84].

Unreal Engine XR is a powerful game engine integration that supports various platforms of AR, VR, and MR technologies. The distinctive feature of this engine is high-quality, photorealistic visualization, which is sometimes used in the creation of films, but the main application is games. The platform is quite flexible but requires significant knowledge of C++ to be used for non-gaming purposes. It has great hardware and community support [86].

Mixed Reality Toolkit (MRTK) is a set of tools developed by Microsoft that was created to accelerate the creation of AR applications for mixed reality headsets. It uses the game engine Unity as a base. MRTK is customized for the Microsoft HoloLens headset but also supports other headsets [88].

Magic Leap SDK (MLSDK) is used to speed up the development of AR applications for the Magic Leap headset only [95].

OpenXR is a free, open standard developed by the Khronos Group for creating high-performance applications for VR and AR. It aims to simplify and speed up the development of apps by providing a unified interface and code for various hardware platforms. There is also a plugin available for Unity [90].

RViz is open-source visualization software that is a part of the ROS ecosystem. Using this tool, it is possible to simulate robot movements in virtual environments. It is very flexible and has a lot of plugins, including AR/VR plugins [93].

Having analyzed the existing development tools in the field of augmented reality, it is evident that not only the foundational game engine is important but also the extensions that significantly speed up and simplify the development of such applications. Each solution has its own direction and is customized for certain hardware or operating systems. The variety of extensions and the openness of some solutions allow them to be used not only for games and entertainment but also in other areas of activity, including robotics, medicine, and navigation.

The analysis leads to one main outcome: systems that have good compatibility with robots use software packages equipped with object tracking features. Other parameters, including the operating system and SDK integration support, did not reveal significant compatibility issues.

5.3. Software Based Improvements of XR Technology

The development and falling prices of augmented reality devices make them accessible and popular, thereby increasing the need for faster network and wireless interfaces with low latency. XR headsets have serious hardware limitations such as dimensions, weight, heat generation, and autonomy. Modern VR headsets use high-resolution displays (2064 × 2208 pixels per eye in Meta Quest 3) that are refreshed 75 to 120 times per second [96]; working with such a resolution requires a bandwidth of approximately 0.3–1 Gbps [97]. Using modern AI algorithms, it is possible to reduce the load on the network and the integrated GPU. AMD and NVIDIA currently offer solutions for improving game graphics in real time with minimal latency, such as AMD FidelityFX Super Resolution (FSR) and NVIDIA Deep Learning Super Sampling (DLSS) [98]. Super-resolution techniques, closely related to DLSS, are used to enhance the resolution of images, videos, and games. Therefore, in a system with a wireless connection to a computer, and even more so with cloud computing, lowering the transmitted image resolution can help: the XR device can then upscale the image with enough detail, which offloads the communication channel. One article proposes a real-time super-resolution (RTSR) framework for XR HD video transmission that reduces data traffic and latency by transmitting low-definition frames and upscaling them locally using deep learning models. This approach resembles the working principle of DLSS: the content is rendered at a lower resolution and sent together with a trained neural network super-resolution (SR) model, which the XR device then uses to reconstruct HD images.
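The scale of the problem can be checked with simple arithmetic. The sketch below computes the uncompressed video bitrate for the display resolution quoted above and compares it with the quoted link bandwidth; the color depth of 24 bits per pixel, the refresh rate of 90 Hz (within the quoted 75 to 120 Hz range), and the function names are assumptions for illustration.

```python
def raw_bitrate_gbps(width, height, eyes, bits_per_pixel, refresh_hz):
    """Uncompressed video bitrate in gigabits per second."""
    return width * height * eyes * bits_per_pixel * refresh_hz / 1e9


def required_compression(raw_gbps, link_gbps):
    """Compression ratio needed to fit the raw stream into the link."""
    return raw_gbps / link_gbps


full = raw_bitrate_gbps(2064, 2208, eyes=2, bits_per_pixel=24, refresh_hz=90)
print(round(full, 2))                             # raw stream in Gbps
print(round(required_compression(full, 1.0), 1))  # ratio needed for a 1 Gbps link

# Sending frames at half the width and height, then upscaling on the headset,
# cuts the number of transmitted pixels by a factor of four.
half = raw_bitrate_gbps(1032, 1104, eyes=2, bits_per_pixel=24, refresh_hz=90)
print(full / half)
```

Under these assumptions, the raw stream is roughly 20 times larger than a 1 Gbps link can carry, so the stream must be compressed heavily; transmitting at a lower resolution and upscaling locally reduces the amount of data before any codec is applied.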

Another modern technology is the generation of intermediate frames: AMD has Fluid Motion Frames, and NVIDIA has DLSS Frame Generation. These methods generate frames to increase the number of frames per second [99] and reduce the load on the GPU, which in turn reduces energy consumption and increases battery life. An increased frame rate is also necessary for smooth movement in XR. However, this technology adds significant input lag due to the added delay. In regular computer games this may be unnoticeable, but in XR it can cause dizziness or nausea. Technologies like NVIDIA Reflex can be used to reduce the latency [99,100].
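The trade-off between smoothness and delay can be sketched with a naive interpolation example. The linear blend below is only a stand-in: commercial frame generation relies on motion vectors, optical flow, and neural networks, and the latency formula is a first-order estimate that ignores the cost of generating the frame. All names and values are illustrative assumptions.

```python
def blend_frames(prev_frame, next_frame, t=0.5):
    """Naive intermediate frame: per-pixel linear blend of two grayscale
    frames given as lists of rows. Real frame generation is far more
    sophisticated; this only illustrates the idea of an in-between frame."""
    return [
        [(1.0 - t) * a + t * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(prev_frame, next_frame)
    ]


def added_latency_ms(base_fps):
    """Interpolation needs the next rendered frame before the in-between
    frame can be shown, so display is held back by about one base frame."""
    return 1000.0 / base_fps


frame_a = [[0, 10], [20, 30]]
frame_b = [[10, 20], [30, 40]]
print(blend_frames(frame_a, frame_b))  # frame halfway between a and b
print(added_latency_ms(50))            # extra delay at a 50 fps base rate
```

The estimate shows why the penalty matters more at low base frame rates: the lower the rendered frame rate, the longer each real frame has to wait.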

To further reduce the load, the foveated rendering method is used. In this method, the part of the image at the center of the user’s gaze is rendered at maximum quality, and everything beyond its boundaries is rendered in lower detail. Because people have lower visual acuity in their peripheral vision, this method has a negligible effect on perceived quality. There are two types of foveated rendering: fixed foveated rendering (FFR) and tracked foveated rendering (TFR). FFR does not track the user’s eye direction but simply renders a high-quality image in the center and a lower-quality image at the edges, on the assumption that the user mostly looks at the center of the image. TFR uses an eye tracking system and improves the image in the place where the user is looking. Thus, the point of gaze is rendered in maximum quality, and everything that falls into the peripheral vision is rendered with lower quality and dynamically adjusted to eye movements [101]. Compared to FFR, TFR has a smaller high-quality area. However, because this area is dynamic, the perceived quality stays good. At the same time, a smaller area needs to be processed, which is used to increase the frame rate and the responsiveness of the device [102]. In conclusion, combining eye tracking technology with an image upscaling algorithm can theoretically reduce the load on the equipment and extend the battery life of the device at the cost of a slight increase in latency.

In general, a combination of the listed technologies or their analogs, including TFR, super resolution, intermediate frame generation, and latency reduction technology, can theoretically provide the greatest optimization for extended reality in terms of performance, battery life, and load on communication channels. Such combinations may appear in future XR headsets.
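The saving from foveated rendering can be estimated with a small tile-based sketch. Tiles whose center lies within a chosen radius of the gaze point are shaded at full resolution, and all other tiles at a reduced linear scale. The tile size, radius, peripheral scale, and function name are illustrative assumptions and do not describe any specific headset; with FFR the gaze point is fixed at the image center, while with TFR it comes from the eye tracker.

```python
import math


def foveated_cost(width, height, gaze, fovea_radius,
                  periphery_scale=0.5, tile=32):
    """Fraction of full-resolution shading work that remains when tiles
    outside fovea_radius (pixels) of the gaze point are rendered at
    periphery_scale of the linear resolution."""
    full_pixels = 0
    total_pixels = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            w = min(tile, width - tx)    # edge tiles may be narrower
            h = min(tile, height - ty)
            cx, cy = tx + w / 2, ty + h / 2
            total_pixels += w * h
            if math.hypot(cx - gaze[0], cy - gaze[1]) <= fovea_radius:
                full_pixels += w * h
    peripheral = total_pixels - full_pixels
    # Reducing the linear scale by s reduces shaded pixels by s squared.
    return (full_pixels + peripheral * periphery_scale ** 2) / total_pixels


# FFR: gaze assumed at the image center, so a larger safe radius is needed.
print(foveated_cost(2064, 2208, gaze=(1032, 1104), fovea_radius=700))
# TFR: gaze taken from the eye tracker, so a smaller radius can be used.
print(foveated_cost(2064, 2208, gaze=(600, 900), fovea_radius=400))
```

In this model, the smaller full-quality region that eye tracking permits translates directly into a lower shading cost, which is consistent with the comparison of TFR and FFR above.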

6. Discussion

This literature review analyzed modern technologies in the field of extended reality, including the following:

- Application in various fields;

- Design features;

- Capabilities of popular XR devices;

- Software used in the development of applications and in the XR devices themselves;

- Possible improvements based on modern algorithms.

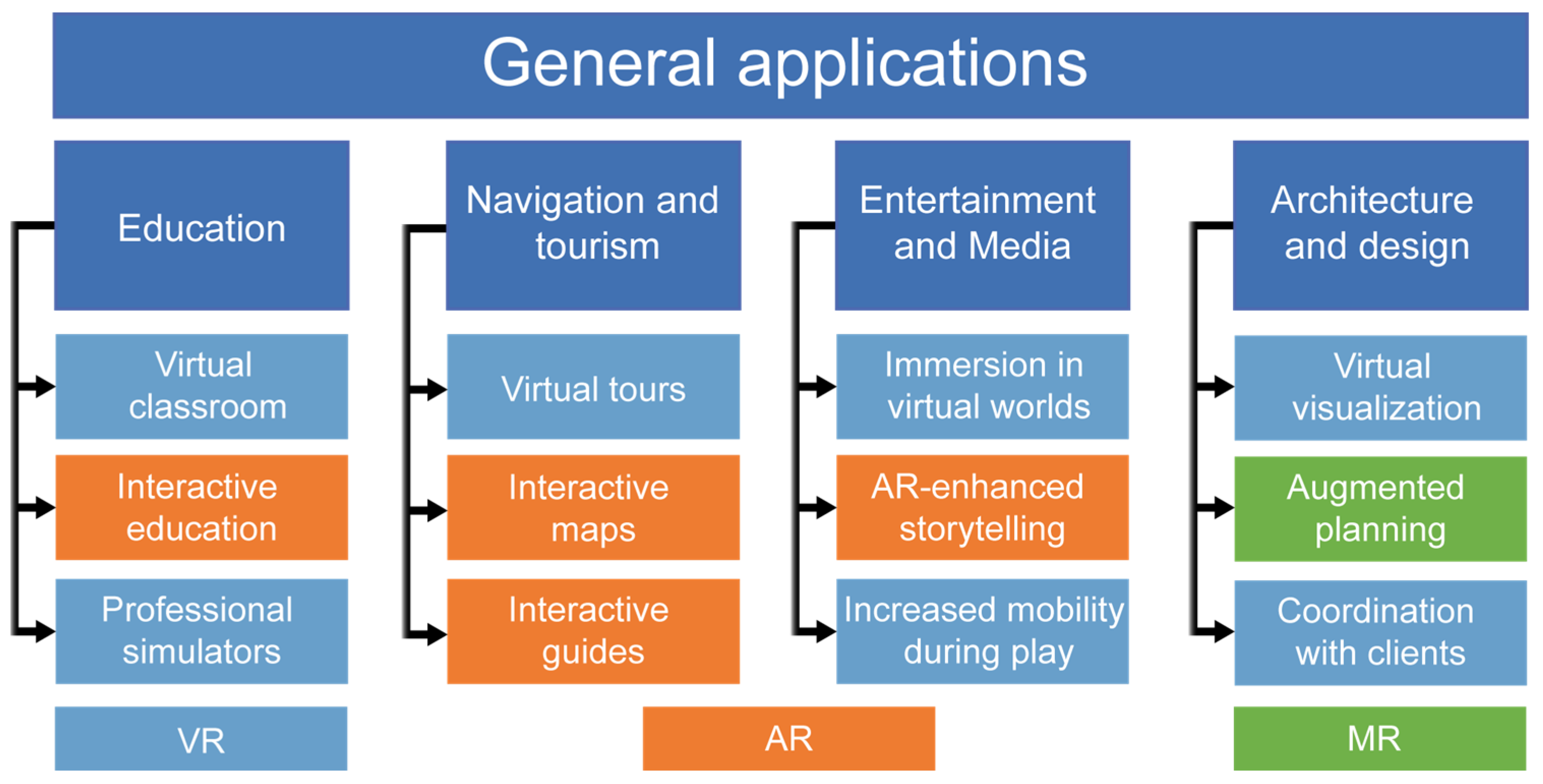

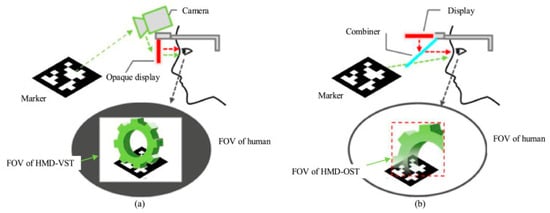

The use of XR systems finds applications in various fields of activity. The classification of applications shown in Table 4 splits the identified studies into general categories without detail, colored according to the intensity of the research. The references were drawn from publicly accessible databases, including Scopus, Web of Science, Engineering Village 2, and Google Scholar. The items were sorted by relevant keywords. The filtered references were counted and presented under their section in Table 4. This approach cannot assess the depth of research issues, such as the implementation mode, covered activities (Figure 9 and Figure 10), and many others.

Table 4.

XR classification based on facilities and opportunities.

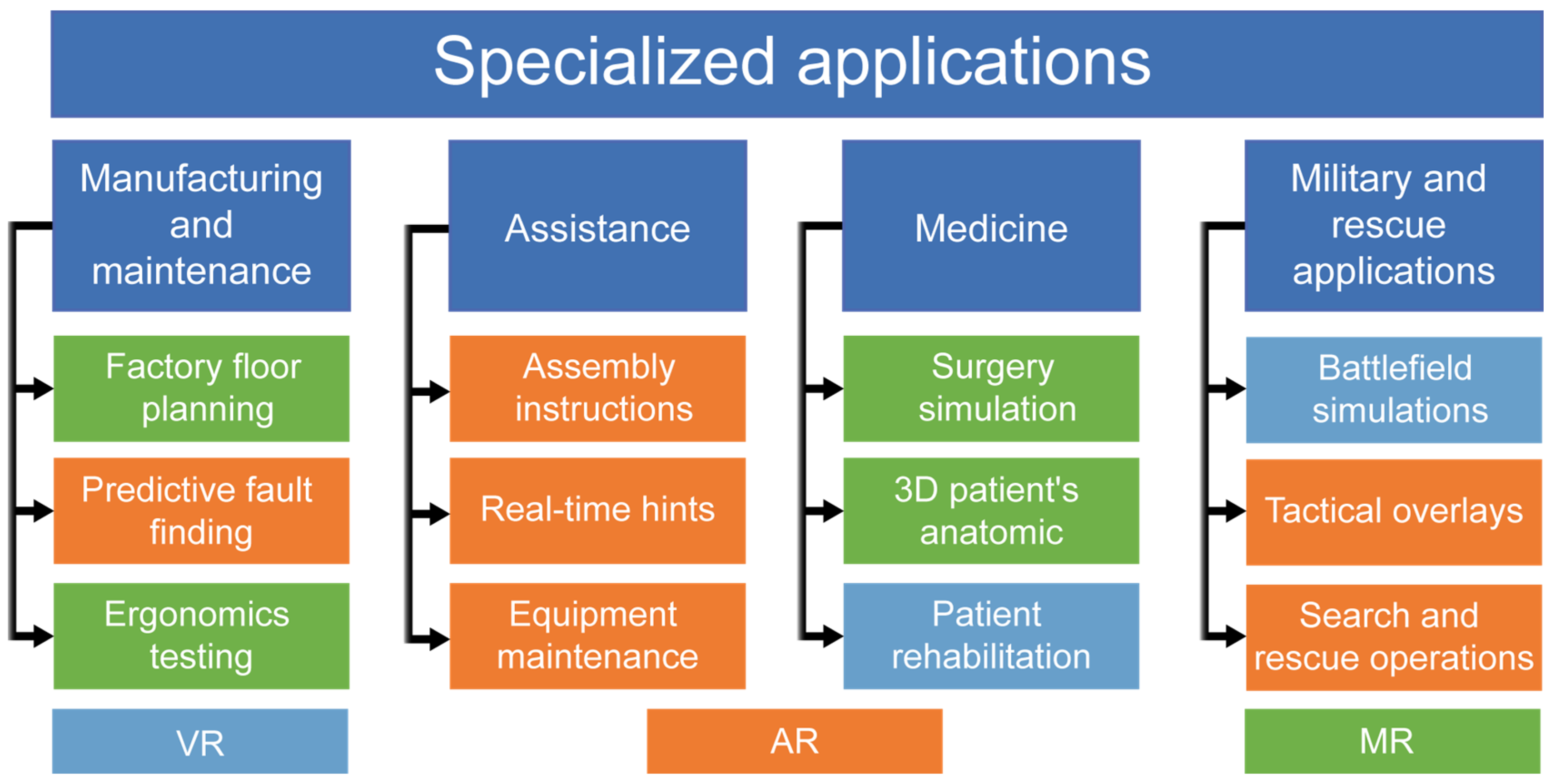

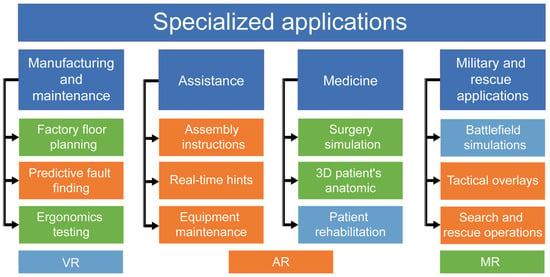

Figure 9.

General applications in extended reality.

Figure 10.

Specialized applications in extended reality.

However, such an approach provides a measure of research intensity, which shows the interest in each area within the scope of the technology used. The uneven distribution of research between technologies indicates how convenient each technology is for a given implementation field. For example, the use of headset devices limits the implementation of technology in media. However, in the assistance area, this limitation is absent.

The low activity of VR, MR, and XR technologies in military and rescue areas signals the underdevelopment of applications and a possible lack of devices suited to field conditions: devices with long battery life, shock resistance, and the ability to operate autonomously without an Internet connection. The prevailing office-based implementation areas, such as education, planning, architecture, and entertainment, mostly rely on PC screen-based technologies, but XR headsets are still attractive for some entertainment and gaming applications. The discovered shortage of hardware shows a gap between the possibilities and the real needs, especially in military and rescue applications. The classification of specialized applications is provided in Figure 10.

Comparison of designs and capabilities used in popular VR, AR, and MR headsets showed that MR devices that use a screen for visualization and cameras to capture the view of the real world are the most versatile, since they can work in all three XR modes. The most suitable and popular device was Meta Quest 3, due to its ability to work in three XR modes, good support for content creation programs, and a low price. In cases where it is important to always see the real world, a good and popular option would be Microsoft HoloLens, since its visualization system uses optics that allow the user to see the real world with superimposed computer graphics.

Having analyzed modern toolkits supporting XR (ARtoolkitX, Google ARCore, Apple ARKit, Vuforia, Unity XR, Unreal Engine XR, MRTK, MLSDK, OpenXR, and RViz), differences in functionality and compatibility with equipment and the possibility of applications in various tasks were revealed. As an example, Google ARCore and Apple ARKit are used to create mobile applications, and MRTK and OpenXR are used for cross-platform development.

The popularity of 3D engines remains distributed approximately proportionally among all virtual technologies, as shown in Table 5. Unreal Engine has higher graphic quality, which is key in some professional applications, but Unity is more popular for XR app development due to its user-friendly tools and extensive hardware support. On the other hand, the difference in programming language (Unity applications are scripted in C#, whereas Unreal Engine uses C++) sometimes opens broader possibilities for user applications and speed of implementation.

Table 5.

Popularity of 3D engines for XR based on the number of articles from 2020 to 2024.

It is proposed to implement modern technologies in XR headsets, such as eye tracking, foveated rendering, super resolution, and intermediate frame generation. In combination, these solutions can increase the autonomy of devices and reduce the load on the data channel by reducing the computing load on the processor built into the headset.

7. Conclusions

The performed analysis of accessible publications and research reports in extended reality issues related to robotics revealed the following main findings:

- Virtual reality (VR) systems are mostly used in entertainment and education based on the established hardware conditions.

- The education area is predominant in terms of the number of studies on extended reality technologies; this is a definite marker of the underdevelopment of the industrial and military areas in XR.

- The development of user-friendly 3D engines attracts a greater amount of research and a wider use of specialized applications in industry, medicine, and all other areas of activities.

- The development of technologies allows us to make affordable and technologically advanced devices, such as Meta Quest 3. Using these, it is possible to develop XR technologies and expand the scope of application.

- The use of modern technologies and algorithms, such as eye tracking for foveated rendering, frame generation, DLSS, and FSR, can reduce the computing load on the device and the network load and increase battery life through device optimization.

- The future development of XR technologies can be aimed at optimizing computing processes, reducing delays, and increasing the autonomy of devices, which can expand the scope of application of this technology.

Author Contributions

Conceptualization, O.S. and V.B.; methodology, A.D. and M.P.; validation, A.D., O.S. and M.P.; formal analysis, O.S. and A.D.; investigation, O.S. and V.B.; resources, V.B. and A.D.; data curation, M.P.; writing—original draft preparation, O.S.; writing—review and editing, V.B., A.D. and O.S.; visualization, O.S.; supervision, V.B.; project administration, A.D.; funding acquisition, V.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the European Commission as part of the European Union’s Horizon 2020 research innovation program, through the ECSEL Joint Undertaking project AI4CSM, under Grant Agreement No. 101007326.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the study’s design; the collection, analysis, or interpretation of data; the writing of the manuscript; or the decision to publish the results.

References

- Gupta, P.; Bhadani, K.; Bandyopadhyay, A.; Banik, D.; Swain, S. Impact of Metaverse in the Near “Future”. In Proceedings of the 4th International Conference on Intelligent Engineering and Management (ICIEM), London, UK, 9–11 May 2023.

- Wang, Z.; Chan, M.-T. A Systematic Review of Google Cardboard Used in Education. Comput. Educ. X Real. 2024, 4, 100046.

- Xue, C.; Qiao, Y.; Murray, N. Enabling Human-Robot-Interaction for Remote Robotic Operation via Augmented Reality. In Proceedings of the 21st IEEE International Symposium on a World of Wireless, Mobile and Multimedia Networks, WoWMoM 2020, Cork, Ireland, 31 August–3 September 2020; pp. 194–196.

- Path Planning. MATLAB & Simulink. Available online: https://se.mathworks.com/discovery/path-planning.html (accessed on 5 March 2025).

- Livatino, S.; Guastella, D.C.; Muscato, G.; Rinaldi, V.; Cantelli, L.; Melita, C.D.; Caniglia, A.; Mazza, R.; Padula, G. Intuitive Robot Teleoperation through Multi-Sensor Informed Mixed Reality Visual Aids. IEEE Access 2021, 9, 25795–25808.

- Batistute, A.; Santos, E.; Takieddine, K.; Lazari, P.M.; Da Rocha, L.G.; Vivaldini, K.C.T. Extended Reality for Teleoperated Mobile Robots. In Proceedings of the 2021 Latin American Robotics Symposium, 2021 Brazilian Symposium on Robotics, and 2021 Workshop on Robotics in Education, LARS-SBR-WRE, Natal, Brazil, 11–15 October 2021; pp. 19–24.

- Adriana Cárdenas-Robledo, L.; Hernández-Uribe, Ó.; Reta, C.; Antonio Cantoral-Ceballos, J. Extended Reality Applications in Industry 4.0.—A Systematic Literature Review. Telemat. Inform. 2022, 73, 101863.

- Walker, M.E.; Gramopadhye, M.; Ikeda, B.; Burns, J.; Szafir, D. The Cyber-Physical Control Room: A Mixed Reality Interface for Mobile Robot Teleoperation and Human-Robot Teaming. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–14 March 2024; pp. 762–771.

- Illing, B.; Westhoven, M.; Gaspers, B.; Smets, N.; Bruggemann, B.; Mathew, T. Evaluation of Immersive Teleoperation Systems Using Standardized Tasks and Measurements. In Proceedings of the 29th IEEE International Conference on Robot and Human Interactive Communication, RO-MAN 2020, Naples, Italy, 31 August–4 September 2020; pp. 278–285.

- Jiang, L.; Chen, G.; Li, L.; Chen, Z.; Yang, K.; Wang, X. Remote Teaching System for Robotic Surgery and Its Validation: Results of a Randomized Controlled Study. Surg. Endosc. 2023, 37, 9190–9200.

- Devic, A.; Vidakovic, J.; Zivkovic, N. Development of Standalone Extended-Reality-Supported Interactive Industrial Robot Programming System. Machines 2024, 12, 480.

- Niloy, M.A.K.; Shama, A.; Chakrabortty, R.K.; Ryan, M.J.; Badal, F.R.; Tasneem, Z.; Ahamed, M.H.; Moyeen, S.I.; Das, S.K.; Ali, M.F.; et al. Critical Design and Control Issues of Indoor Autonomous Mobile Robots: A Review. IEEE Access 2021, 9, 35338–35370.

- VR, AR, MR, and XR: The Metaverse Market and XR-Related Technologies. Available online: https://techtimes.dexerials.jp/en/elemental-technologies/applied-technology-for-xr/ (accessed on 27 November 2024).

- Calandra, D.; Pratticò, F.G.; Cannavò, A.; Casetti, C.; Lamberti, F. Digital Twin- and Extended Reality-Based Telepresence for Collaborative Robot Programming in the 6G Perspective. Digit. Commun. Netw. 2024, 10, 315–327.

- Arena, F.; Collotta, M.; Pau, G.; Termine, F. An Overview of Augmented Reality. Computers 2022, 11, 28.

- Jung, K.; Nguyen, V.T.; Lee, J. BlocklyXR: An Interactive Extended Reality Toolkit for Digital Storytelling. Appl. Sci. 2021, 11, 1073.

- Zolezzi, D.; Iacono, S.; Martini, L.; Vercelli, G.V. Comunicazione Digitale XR: Assessing the Impact of Extended Reality Technologies on Learning. Comput. Educ. X Real. 2024, 5, 100077.

- Vergara, D.; Extremera, J.; Rubio, M.P.; Dávila, L.P. Meaningful Learning Through Virtual Reality Learning Environments: A Case Study in Materials Engineering. Appl. Sci. 2019, 9, 4625.

- Tan, Y.; Xu, W.; Li, S.; Chen, K. Augmented and Virtual Reality (AR/VR) for Education and Training in the AEC Industry: A Systematic Review of Research and Applications. Buildings 2022, 12, 1529.

- Scorgie, D.; Feng, Z.; Paes, D.; Parisi, F.; Yiu, T.W.; Lovreglio, R. Virtual Reality for Safety Training: A Systematic Literature Review and Meta-Analysis. Saf. Sci. 2024, 171, 106372.

- Asoodar, M.; Janesarvatan, F.; Yu, H.; de Jong, N. Theoretical Foundations and Implications of Augmented Reality, Virtual Reality, and Mixed Reality for Immersive Learning in Health Professions Education. Adv. Simul. 2024, 9, 36.

- Einloft, J.; Meyer, H.L.; Bedenbender, S.; Morgenschweis, M.L.; Ganser, A.; Russ, P.; Hirsch, M.C.; Grgic, I. Immersive Medical Training: A Comprehensive Longitudinal Study of Extended Reality in Emergency Scenarios for Large Student Groups. BMC Med. Educ. 2024, 24, 978.

- Magalhães, R.; Oliveira, A.; Terroso, D.; Vilaça, A.; Veloso, R.; Marques, A.; Pereira, J.; Coelho, L. Mixed Reality in the Operating Room: A Systematic Review. J. Med. Syst. 2024, 48, 76.

- Sasu, V.G.; Cîrneci, D.; Goga, N.; Popa, R.; Neacșu, R.F.; Goga, M.; Podina, I.; Bratosin, I.A.; Bordea, C.A.; Pomana, L.N.; et al. Enhancing Multisensory Virtual Reality Environments through Olfactory Stimuli for Autobiographical Memory Retrieval. Appl. Sci. 2024, 14, 8826.

- Lento, B.; Leconte, V.; Bardisbanian, L.; Doat, E.; Segas, E.; De Rugy, A. Bioinspired Head-To-Shoulder Reference Frame Transformation for Movement-Based Arm Prosthesis Control. IEEE Robot. Autom. Lett. 2024, 9, 7875–7882.

- Wong, J.Y.; Yip, C.C.; Yong, S.T.; Chan, A.; Kok, S.T.; Lau, T.L.; Ali, M.T.; Gouda, E. BIM-VR Framework for Building Information Modelling in Engineering Education. Int. J. Interact. Mob. Technol. 2020, 14, 15–39.

- Strand, I. Virtual Reality in Design Processes-a Literature Review of Benefits, Challenges, and Potentials. FormAkademisk 2020, 13, 1–19.

- Ahmed, K.G. Integrating VR-Enabled BIM in Building Design Studios, Architectural Engineering Program, UAEU: A Pilot Study. In Proceedings of the 2020 Advances in Science and Engineering Technology International Conferences, ASET 2020, Dubai, United Arab Emirates, 4 February–9 April 2020.

- Ventura, S.M.; Castronovo, F.; Ciribini, A.L.C. A Design Review Session Protocol for the Implementation of Immersive Virtual Reality in Usability-Focused Analysis. J. Inf. Technol. Constr. 2020, 25, 233–253.

- Google Maps Information. Available online: https://www.google.com/streetview/ (accessed on 1 December 2024).

- Lodhi, R.N.; Asif, M.; Del Gesso, C.; Cobanoglu, C. Exploring the Critical Success Factors of Virtual Reality Adoption in the Hotel Industry. Int. J. Contemp. Hosp. Manag. 2024, 36, 3566–3586.

- Louvre Virtual Tours Information. Available online: https://www.louvre.fr/en/online-tours (accessed on 26 November 2024).

- Sylaiou, S.; Kasapakis, V.; Dzardanova, E.; Gavalas, D. Leveraging Mixed Reality Technologies to Enhance Museum Visitor Experiences. In Proceedings of the 9th International Conference on Intelligent Systems 2018: Theory, Research and Innovation in Applications, IS 2018, Funchal, Portugal, 25–27 September 2018; pp. 595–601.

- Nwobodo, O.J.; Wereszczynski, K.; Cyran, K. A Review on Tracking Head Movement in Augmented Reality Systems. Procedia Comput. Sci. 2023, 225, 4344–4353.

- Half-Life: Alyx Information on Steam. Available online: https://store.steampowered.com/app/546560/HalfLife_Alyx/ (accessed on 1 December 2024).

- Albert, I.; Burkard, N.; Queck, D.; Herrlich, M. The Effect of Auditory-Motor Synchronization in Exergames on the Example of the VR Rhythm Game BeatSaber. Proc. ACM Hum. Comput. Interact. 2022, 6, 1–26.

- Alonso, F.; Faus, M.; Riera, J.V.; Fernandez-Marin, M.; Useche, S.A. Effectiveness of Driving Simulators for Drivers’ Training: A Systematic Review. Appl. Sci. 2023, 13, 5266.

- Pan, M.; Wong, M.O.; Lam, C.C.; Pan, W. Integrating Extended Reality and Robotics in Construction: A Critical Review. Adv. Eng. Inform. 2024, 62, 102795.

- Fang, W.; Chen, L.; Zhang, T.; Chen, C.; Teng, Z.; Wang, L. Head-Mounted Display Augmented Reality in Manufacturing: A Systematic Review. Robot. Comput. Integr. Manuf. 2023, 83, 102567.

- Chen, J.; Sun, B.; Pollefeys, M.; Blum, H. A 3D Mixed Reality Interface for Human-Robot Teaming. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 11327–11333.

- Kaiser, M.S.; Al Mamun, S.; Mahmud, M.; Tania, M.H. Healthcare Robots to Combat COVID-19. In Lecture Notes on Data Engineering and Communications Technologies; Springer: Berlin/Heidelberg, Germany, 2021; Volume 60, pp. 83–97.

- Del Carmen Cardós-Alonso, M.; Otero-Varela, L.; Redondo, M.; Uzuriaga, M.; González, M.; Vazquez, T.; Blanco, A.; Espinosa, S.; Cintora-Sanz, A.M. Extended Reality Training for Mass Casualty Incidents: A Systematic Review on Effectiveness and Experience of Medical First Responders. Int. J. Emerg. Med. 2024, 17, 99.

- Austin, C.R.; Ens, B.; Satriadi, K.A.; Jenny, B. Elicitation Study Investigating Hand and Foot Gesture Interaction for Immersive Maps in Augmented Reality. Cartogr. Geogr. Inf. Sci. 2020, 47, 214–228.

- Ntagiantas, A.; Konstantakis, M.; Aliprantis, J.; Manousos, D.; Koumakis, L.; Caridakis, G. An Augmented Reality Children’s Book Edutainment through Participatory Content Creation and Promotion Based on the Pastoral Life of Psiloritis. Appl. Sci. 2022, 12, 1339.

- Liao, S.C.; Shao, S.C.; Gao, S.Y.; Lai, E.C.C. Augmented Reality Visualization for Ultrasound-Guided Interventions: A Pilot Randomized Crossover Trial to Assess Trainee Performance and Cognitive Load. BMC Med. Educ. 2024, 24, 1058.

- Makhataeva, Z.; Varol, H.A. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.

- Sinha, A.; West, A.; Vasdev, N.; Sooriakumaran, P.; Rane, A.; Dasgupta, P.; McKirdy, M. Current Practises and the Future of Robotic Surgical Training. Surgeon 2023, 21, 314–322.

- Zea, A.; Fennel, M.; Hanebeck, U.D. Robot Joint Tracking With Mobile Depth Cameras for Augmented Reality Applications. In Proceedings of the 2022 25th International Conference on Information Fusion, FUSION, Linköping, Sweden, 4–7 July 2022.

- Konstantinidis, F.K.; Kansizoglou, I.; Santavas, N.; Mouroutsos, S.G.; Gasteratos, A. MARMA: A Mobile Augmented Reality Maintenance Assistant for Fast-Track Repair Procedures in the Context of Industry 4.0. Machines 2020, 8, 88.

- Xue, Z.; Yang, J.; Chen, R.; He, Q.; Li, Q.; Mei, X. AR-Assisted Guidance for Assembly and Maintenance of Avionics Equipment. Appl. Sci. 2024, 14, 1137.

- Kumar, J.; Saini, S.S.; Agrawal, D.; Karar, V.; Kataria, A. Human Factors While Using Head-Up-Display in Low Visibility Flying Conditions. Intell. Autom. Soft Comput. 2023, 36, 2411–2423.

- Zhou, C.; Qiao, W.; Hua, J.; Chen, L. Automotive Augmented Reality Head-Up Displays. Micromachines 2024, 15, 442.

- Gao, F.; Ge, X.; Li, J.; Fan, Y.; Li, Y.; Zhao, R. Intelligent Cockpits for Connected Vehicles: Taxonomy, Architecture, Interaction Technologies, and Future Directions. Sensors 2024, 24, 5172.

- Zollmann, S.; Hoppe, C.; Kluckner, S.; Poglitsch, C.; Bischof, H.; Reitmayr, G. Augmented Reality for Construction Site Monitoring and Documentation. Proc. IEEE 2014, 102, 137–154.

- Xiang, S.; Wang, R.; Feng, C. Mobile Projective Augmented Reality for Collaborative Robots in Construction. Autom. Constr. 2021, 127, 103704.

- Alekhya, V.; Jose, S.; Lakhanpal, S.; Khan, I.; Paul, S.; Mohammad, Q. Integrating Augmented Reality in Architectural Design: A New Paradigm. E3S Web Conf. 2024, 505, 03009.

- Sheng, X.; Mao, S.; Yan, Y.; Yang, X. Review on SLAM Algorithms for Augmented Reality. Displays 2024, 84, 102806.

- Michiels, N.; Jorissen, L.; Put, J.; Liesenborgs, J.; Vandebroeck, I.; Joris, E.; Van Reeth, F. Tracking and Co-Location of Global Point Clouds for Large-Area Indoor Environments. Virtual Real. 2024, 28, 106.

- Xie, J.; Liu, Y.; Wang, X.; Fang, S.; Liu, S. A New XR-Based Human-robot Collaboration Assembly System Based on Industrial Metaverse. J. Manuf. Syst. 2024, 74, 949–964.

- Calandra, D.; Prattico, F.G.; Fiorenza, J.; Lamberti, F. Exploring the Suitability of a Digital Twin- and EXtended Reality-Based Telepresence Platform for a Collaborative Robotics Training Scenario over next-Generation Mobile Networks. In Proceedings of the EUROCON 2023—20th International Conference on Smart Technologies, Torino, Italy, 6–8 July 2023; pp. 701–706.

- Weistroffer, V.; Keith, F.; Bisiaux, A.; Andriot, C.; Lasnier, A. Using Physics-Based Digital Twins and Extended Reality for the Safety and Ergonomics Evaluation of Cobotic Workstations. Front. Virtual Real. 2022, 3, 781830.

- Fragapane, G.; de Koster, R.; Sgarbossa, F.; Strandhagen, J.O. Planning and Control of Autonomous Mobile Robots for Intralogistics: Literature Review and Research Agenda. Eur. J. Oper. Res. 2021, 294, 405–426.

- Kumar, A.A.; Uz Zaman, U.K.; Plapper, P. Collaborative Robots. In Handbook of Manufacturing Systems and Design: An Industry 4.0 Perspective; CRC Press: Boca Raton, FL, USA, 2023; pp. 90–106.