Abstract

A Knowledge Graph (KG), which structurally represents entities (nodes) and relationships (edges), offers a powerful and flexible approach to knowledge representation in the field of Artificial Intelligence (AI). KGs have been increasingly applied in various domains—such as natural language processing (NLP), recommendation systems, knowledge search, and medical diagnostics—spurring continuous research on effective methods for their construction and maintenance. Recently, efforts to combine large language models (LLMs), particularly those aimed at managing hallucination symptoms, with KGs have gained attention. Consequently, new approaches have emerged in each phase of KG development, including Extraction, Learning Paradigm, and Evaluation Methodology. In this paper, we focus on major publications released after 2022 to systematically examine the process of KG construction along three core dimensions: Extraction, Learning Paradigm, and Evaluation Methodology. Specifically, we investigate (1) large-scale data preprocessing and multimodal extraction techniques in the KG Extraction domain, (2) the refinement of traditional embedding methods and the application of cutting-edge techniques—such as Graph Neural Networks, Transformers, and LLMs—in the KG Learning domain, and (3) both intrinsic and extrinsic metrics in the KG Evaluation domain, as well as various approaches to ensure interpretability and reliability.

1. Introduction

1.1. Background

Knowledge Graphs (KGs) were initially popularized by Google as a means to enhance search capabilities. Subsequently, the rise of Large Language Models (LLMs) has positioned KGs as a robust method for organizing large-scale heterogeneous data, and they have been applied in semantic search, recommendation systems, and question answering [,,]. By representing entities (nodes) and their interlinked relationships (edges), KGs improve data retrieval efficiency and the interpretability of trained models, and even mitigate the hallucination effects often associated with LLMs []. However, constructing high-quality KGs remains complex due to diverse data sources, nuanced relationship learning, and the need for rigorous enhancement and evaluation.

This survey provides an overview of research from 2022 to 2024 on KG construction—covering extraction, learning, and evaluation—and examines recent work addressing hallucinations and knowledge gaps in various LLM-driven tasks. Through this exploration, we identify key challenges and unresolved issues in the current knowledge graph landscape, offering insights for future innovation.

1.2. Survey Taxnomy

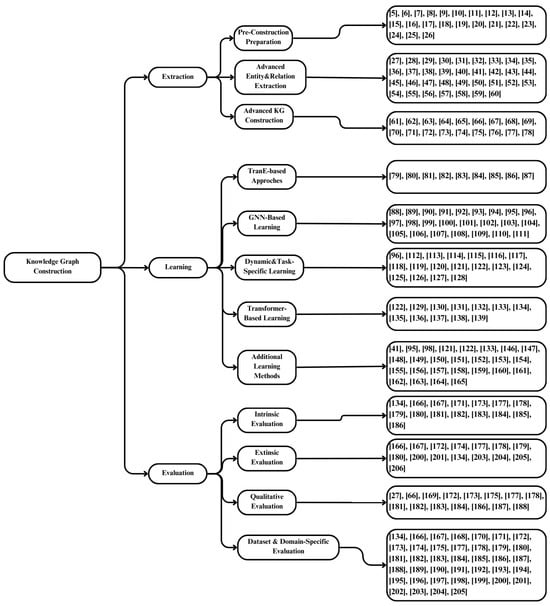

Figure 1 provides a high-level overview of the proposed research framework for knowledge graph construction, visually representing the three core phases—Extraction, Learning, and Evaluation—along with their key sub-components. This figure is crucial not only for understanding the logical progression of each phase but also for situating the various methodologies discussed in this paper. The diverse methodologies presented in the main text are sometimes reiterated to help the readers better comprehend the content. By aligning the surveyed taxonomy with the structure shown in Figure 1, readers can easily understand how foundational extraction techniques, advanced machine learning approaches, and rigorous evaluation protocols collectively form a cohesive pipeline. In particular, the sub-stages within each phase (e.g., Pre-Construction Preparation for Extraction, Graph Neural Network-Based Learning, Qualitative Evaluation methods, etc.) reflect the positioning of the various research threads synthesized in this paper, thereby clarifying how each method and reference discussed in the following sections fits into the broader knowledge graph construction roadmap.

Figure 1.

A systematic overview of the key stages of knowledge graph construction (Extraction, Learning, Evaluation) and the reference numbers allocated to each sub-section.

Extraction. Section 2 discusses the various processes involved in collecting and transforming raw data into structured data to construct knowledge graphs. This includes a range of extraction techniques, from rule-based and machine learning-based methods for foundational entity and relationship extraction to approaches that enhance extraction accuracy using LLMs (e.g., transformer-based models). By analyzing diverse methods, from traditional rule-based approaches to deep learning architectures, this section aims to provide an understanding of both conventional techniques and the latest advancements.

Learning. Section 3 focuses on machine learning techniques that infer and refine relational patterns within knowledge graphs. The discussion begins by exploring traditional embedding models and relation learning algorithms—such as TransE and DistMult—that provide a foundational framework for representing entities and their interconnections in a low-dimensional space. Building on these established methods, this section then delves into recent developments that utilize Graph Neural Networks, attention-based architectures, and contrastive learning techniques to capture complex interactions and higher-order structures. Additionally, it highlights advanced strategies, including transfer learning and various few-shot, one-shot, and zero-shot approaches that address data sparsity and enhance the generalization and robustness of the knowledge graph models.

Evaluation. Section 4 examines the various metrics and evaluation frameworks used to assess the performance of Knowledge Graphs (KGs). The evaluation process consists of intrinsic and extrinsic evaluations. Intrinsic evaluation measures the internal quality of a knowledge graph by focusing on three key aspects: First, accuracy evaluates whether the entities and relationships are correctly identified. Second, coverage indicates the extent to which a KG represents a relevant domain. Third, consistency ensures that the information in the KG is maintained without contradictions. In contrast, extrinsic evaluation measures how effectively a KG can be utilized in practical downstream tasks such as information retrieval and question answering. Furthermore, this section provides a comparative analysis of various evaluation benchmarks, including widely used datasets and challenge tasks, to comprehensively highlight the strengths and limitations of current approaches.

In summary, this survey aims to integrate the recent research landscape surrounding knowledge graph construction into a coherent roadmap, providing a reference for researchers and practitioners seeking to transcend the current limitations of the field. By comprehensively exploring extraction, learning, enhancement, and evaluation, we aim to lay a foundation for future innovations. The following sections will delve into each aspect in detail, thoroughly reviewing the methodologies, challenges, and emerging trends in this domain.

1.3. Semi-Automatic Paper Selection Process

In this paper, a semi-automatic survey process was implemented to rapidly and systematically synthesize research trends on Knowledge Graphs spanning 2022 to 2024. The overall procedure comprises four key steps.

Collection Articles. The Web of Science (WOS) database was utilized to automatically collect approximately 4000 articles related to Knowledge Graphs. This approach enabled the systematic acquisition of a vast body of literature, providing a robust foundation for subsequent evaluation and analysis.

Relevance Assessment. Each collected article was evaluated for alignment with the survey objectives using a predefined evaluation prompt. Specifically, GPT-4o mini and GPT-4o were employed to automatically generate relevance scores based on the content and research direction of the articles. This objective assessment facilitated the selection of articles most pertinent to the survey, ensuring an unbiased and systematic review process.

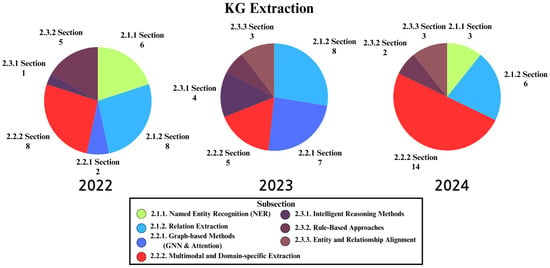

As a result of this scoring process, the number of papers in Knowledge Graph (KG) research was determined across three major categories: Extraction, Learning, and Evaluation. Figure 2 presents the distribution of these papers, with 74, 87, and 40 papers in each category, respectively. These values reflect the assessed relevance and suitability of each paper for this study, providing a structured overview of the research landscape.

Figure 2.

Yearly paper distribution in KG extraction subsections (2022–2024). This figure presents the annual number of research papers across different subsections within the KG extraction domain from 2022 to 2024.

Category Generation. Guided by the relevance scores, the articles were initially clustered into three primary themes—Extraction, Learning, and Evaluation. Subsequent analysis refined these broad categories, yielding three distinct sub-categories within each theme. While automated methods—such as keyword matching and topic modeling—helped identify candidate labels, researchers manually finalized and validated these sub-themes, ensuring their conceptual alignment with the broader survey goals.

By incorporating these semi-automated and human-centered measures, this study efficiently handled a large volume of literature while maintaining methodological rigor and transparency. Automated scoring (via GPT-4o mini and GPT-4o) proved valuable for initial filtering; however, the writing phase prioritized manual composition and expert review. Consequently, the final survey manuscript combines the speed and breadth of automation with the depth, reliability, and nuanced perspectives of human oversight.

2. KGs Extraction

This illustration details the annual distribution of research papers across various subfields of KG extraction from 2022 to 2024. This highlights that while some areas—such as relation extraction and multimodal/domain-specific extraction—maintain steady, high levels of scholarly activity, other subsections, like graph-based methods and intelligent reasoning, experience significant surges in certain years.

Steady or Growing Areas. Multimodal and Domain-Specific Extraction (Section 2.2.2) and Relation Extraction (Section 2.1.2) appear to maintain consistently high interest. This sustained attention could be driven by the increasing need to handle diverse data formats—such as images, videos, and specialized domain texts—and the growing importance of accurate entity-to-entity linking in complex applications.

Peaks and Surges in Specific Years. Topics like Graph-based Methods (Section 2.2.1) and Intelligent Reasoning (Section 2.3.1) show marked surges in certain years. These spikes may be attributed to breakthroughs in neural architectures (e.g., attention-based GNNs) or new reasoning paradigms that have temporarily shifted the research focus. Additionally, improvements in computational hardware and libraries can accelerate development in these areas, causing sudden increases in publication volume.

Fluctuations and Declines. Some subsections experienced sporadic peaks followed by rapid declines, suggesting that research efforts in those areas either reached a saturation point or were eclipsed by more innovative methods. Shifting priorities—such as the rise of end-to-end extraction pipelines or more advanced reasoning techniques—can lead to a decrease in traditional rule-based or less flexible approaches.

Emerging Use Cases and Practical Constraints. Real-world applications requiring scalable, domain-specific extraction solutions often motivate the research community to refine existing methods and explore novel frameworks. For instance, heightened interest in industry adoption might sustain certain topics for longer, whereas academic research might pivot quickly if a method shows diminishing returns or new challenges arise.

Overall, the trends in KG extraction highlight how some techniques remain stable focal points, whereas others gain or lose prominence in response to new methodological breakthroughs, changing computational capabilities, and domain-driven requirements.

2.1. Pre-Construction Preparation

2.1.1. Named Entity Recognition (NER)

In the initial stage of KG construction, it is essential to identify key terms (entities) from unstructured text and consistently map them to predefined ontologies or schemas. Approaches that leverage pretrained language models, such as BERT, have been shown to effectively improve NER precision when processing large corpora []. By combining statistical distributions with language graphs, these methods can extend not only commonsense knowledge but also domain-specific information.

In the relation extraction stage, techniques such as dependency parsing, semantic feature extraction, multi-head stacked graph convolution networks (GCN), and attention mechanisms are widely used []. For example, the entities identified by NER are embedded using a Bi-LSTM model, and methods such as Simplified Graph Convolution Networks (SGC) are then applied to optimize relational information, thereby enhancing the accuracy of relation extraction. Research has also been conducted on combining BERT-based NER with rule-learning methods (e.g., the Open Path or OPRL algorithms) to generate extraction queries and complete the KG [].

Another study applied a supervised information extraction model based on a BERT transformer to perform tokenization, contextual embedding, and entity classification, and then fine-tuned it on a domain-specific dataset to improve its accuracy []. BERT’s core strength lies in its exceptional contextual understanding at the token level, which surpasses traditional approaches.

The entities identified by NER are then mapped to a predefined ontology or set of labels, which is a critical process for ensuring the consistency of KG creation []. This mapping is further reinforced by combining the statistical distributions with the language graph information. In addition, some studies have optimized KG performance using multi-relational graph attention networks or embedding-based models that reflect the importance or attention weights of adjacent nodes [].

2.1.2. Relation Extraction

Relation extraction identifies the semantic connections between entities to generate triple structures (subject–relation–object), which form the basic structure of the knowledge graph. Techniques such as bidirectional relation-guided attention have been used to create a complementary effect between entity recognition and relation extraction []. In the cybersecurity domain, a method combining BERT-based NER with a GCN to extract attack behaviors significantly improved the accuracy of threat behavior recognition []. In various domains (e.g., healthcare, security, software, and education), BERT-based NER has become a core technology for KG construction.

Recent approaches have incorporated Retrieval-Augmented Generation (RAG) into KG applications to integrate engineering knowledge from texts. This method employs token classification and sequence-to-sequence (Seq2Seq) models to extract explicit engineering facts from the KG []. In contrast, the AutoAlign technique leverages large language models to automate the alignment process of knowledge graphs, significantly reducing the need for manual intervention [].

Domain-specific studies include methods for extracting the relationships between ketamine and the gut microbiota from the literature and pathway data [], approaches that capture diverse features using multi-channel convolutions in triple embeddings [], methods for constructing a processed knowledge graph [], approaches based on hierarchical transformers and dual quaternions [], and triple extraction via sentence transformers utilizing dependency parsing [].

Generative approaches extend and refine the KG by directly generating triple structures from learned data distributions, while sampling techniques effectively extract sub-structures from large graphs to balance accuracy and computational cost. For example, a multi-information preprocessing event extraction approach using BiLSTM-CRF with attention extracts events from academic texts to construct a KG []. Document-level dynamic graph attention integrates distributed information from an entire document using a two-stage graph strategy to improve the relation accuracy []. Seq2EG employs a sequence-to-sequence transformer to generate event graphs and incorporate complex event structures into the KG []. In addition, methods such as biomedical relation comparison, cognitively inspired multi-task frameworks [], and KG linking through self-supervised learning have been proposed. SelfKG [] leverages a novel self-supervised contrastive learning strategy that does not require labeled entity pairs by focusing on pushing away many negative samples. By employing a relative similarity metric along with self-negative sampling and multiple negative queues (inspired by momentum contrast techniques), SelfKG demonstrates that accurate entity alignment across different KGs can be achieved without any manual supervision. This approach not only reduces the labeling cost, but also achieves performance comparable to that of state-of-the-art supervised methods. along with the ERGM method—a multi-stage joint extraction technique that includes global entity matching []—and KG-based methods for automating complex scenarios, such as emergency planning or water resource management [].

While Large Language Models (LLMs) have demonstrated state-of-the-art performance in extracting entity-relation tuples, they can occasionally generate “hallucinated” relationships that are not grounded in the source text. For example, a model may infer a causal link between two entities that are merely co-mentioned but lack any actual causal or logical connection. To mitigate such issues, recent studies have explored automated validation steps—such as cross-referencing extracted relationships against an external knowledge base or domain-specific rules—to filter out spurious links. Furthermore, incorporating fine-grained attention mechanisms and contrastive learning approaches can help ensure that relation extraction remains faithful to the available evidence, thereby reducing the risk of erroneously generated triples.

2.2. Advanced Entity and Relation Extraction

In some domain-specific corpora, LLM-driven extraction pipelines have generated “hallucinated” triples, such as attributing non-existent functions to certain genes or synthesizing medical relations (e.g., drug–condition links) that are not supported by clinical evidence. These errors typically arise when the training data are sparse, ambiguous or contradictory. To address this, researchers have proposed post-processing heuristics—using either knowledge graph consistency checks or multimodal cross-validation (e.g., text plus imaging data)—to detect and remove unsubstantiated relationships before finalizing the KG.

2.2.1. Graph-Based Methods (GNN and Attention)

Graph-based extraction methods organize the analysis results (entities and relations) from text into a graph structure and use Graph Neural Networks (GNN) and attention mechanisms to infer and validate information. For example, one approach achieved high precision by combining NER with a deep learning transformer to verify the entities and relations extracted at the sentence level [].

In the biological domain, studies have combined BERT-based NER with topological clustering to learn the gene interaction structures within protein networks. In addition, research has been conducted on extracting drug–drug interactions by integrating heterogeneous knowledge graphs [] and methods that extract relationships by merging web tables []. Other approaches include graph-based methods using multimodal learning [], research on expanding KGs from large heterogeneous data sources [], and comprehensive information extraction pipelines, where BERT-based NER plays a core role [].

Studies have reported the use of multi-task graph convolution networks (MGCN) to extract entities and relations. For example, by integrating dependency trees into a GCN, structure information can be captured while simultaneously learning node and edge features, effectively handling overlapping or complex relation labels []. Furthermore, various methods have been employed, such as combining BERT-based NER with translation-based frameworks like TransE for accurate extraction from domain datasets [] and constructing KGs using multimodal educational data [].

Other approaches include using BiLSTM-CRF models to extract named entities and adding entity type information through unsupervised learning to reduce false positives [] and employing TrellisNet-CRF to complement the accuracy of BERT-based NER []. Strategies that integrate graph structure learning, attention-based BERT NER, and automated feature generation (combining deep learning with graph learning) further enhance KG extraction performance []. Finally, a strategy was developed that uses BERT-based NER to automatically extract triple structures from geological reports and link them to a predefined ontology [].

2.2.2. Multimodal and Domain-Specific Extraction

Multimodal and domain-specific approaches are designed to handle various data types—not only text but also images, audio, and sensor data—thus enabling the high-precision extraction of entities and relations in specific industries. For instance, the GridOnto system integrates fault events from power grids into a KG, demonstrating its effective application in the energy sector []. In the security domain, LLM-TIKG utilizes large language models to construct a threat intelligence KG that enhances the extraction of security-related events []. Additionally, causal relation extraction methods focus on deriving deep causal relationships from long texts, thereby enriching the KG with explicit engineering and domain-specific facts []. Knowledge Guided Attention focuses on chemical–disease relationships by combining attention mechanisms with GCN to reduce noise []. Methods that use distant supervision to incorporate the global context and suppress errors have also been proposed []. The Experiencer-Driven Graph approach captures deep semantic information in emotion-cause relationships [], and dual-fusion models that combine text with equipment sensor data have been developed to simultaneously analyze logs and documents []. An automated clinical knowledge graph framework that integrates clinical records and literature to support evidence-based medicine (EBM) is also included [].

Additional domain-specific improvement techniques include progressive entity type assignment methods to effectively learn domain-specific ontologies [] and the CG-JKNN model, which integrates image and graph data to form medical relations for tuberculosis diagnosis []. In the industrial and healthcare sectors, systems such as the Novel Rational Medicine Use System [], semantic-spatial aware data integration for place KGs [], relation labeling in product KGs for e-commerce [], pipelines for extracting relations using transportation authority data [], and task-centric KG construction based on multimodal representation learning for industrial maintenance automation [] have been proposed. For geological data, approaches include deep learning-based methods that combine text and images to extract entities [], methods that fuse entity descriptions with type information to enhance the completeness of a medical KG [], joint extraction approaches for geological reports [], BDCore, which uses bidirectional decoding and joint graph representations [], prompt learning for biomedical relation extraction using GCN [], and NER for equipment fault diagnosis that combines RoBERTa-wwm-ext with deep learning [].

2.3. Advanced Knowledge Graph Construction

2.3.1. Intelligent Reasoning Methods

KG construction is increasingly leveraging advanced techniques to automatically extend complex domain knowledge. In particular, methods such as multi-hop reasoning, causal reasoning, and quantum embeddings are being integrated to enhance knowledge representation. For example, PharmKE is a knowledge extraction platform that applies transfer learning to pharmaceutical texts to automatically extract various medical entities and relations (e.g., drug names, drug–drug interactions (DDI), adverse drug reactions (ADR), and indications) within an integrated environment []. By fine-tuning a BERT-like model on pharmaceutical corpora, it accurately recognizes specialized terms, such as drug codes and clinical names, and integrates them into the KG using subsequent pipelines. Similarly, an event ontology-based knowledge-enhanced event relation method actively leverages domain ontologies [].

In addition, the Learning Relation Prototype maps long-tail relations with few labels in an unsupervised manner using prototype embeddings []. In software project KGs, the CAJP technique has been utilized [], and Query Path Generation—performing bidirectional inference to handle complex question-answering tasks—has been applied []. The MHlinker model, which integrates the extraction of fault entity relations in mine hoists, has also been reported [], as has a novel joint extraction approach that combines interactive encoding with visual attention to extract entities and relations simultaneously [].

2.3.2. Rule-Based Approaches

Rule-based approaches achieve high precision by utilizing predefined rules and patterns established by domain experts, although they may be somewhat vulnerable to new variations. For example, the Heterogeneous Affinity Graph Inference method proposes a way to reduce noise when constructing document-level entity relations []. In addition, Image2Triplets combines BERT-based NER with computer vision techniques to extract relations simultaneously from images and text, applying specific rules (e.g., focus targets) to improve accuracy [].

In the medical field, joint models that combine sequence labeling, GCN, and transformers have been proposed to extract adverse drug event (ADE) relationships from complex pharmaceutical texts []. QLogicE integrates BERT-based NER with quantum embeddings and translation-based models to enhance the efficiency of entity and relationship representations []. A generative model called KGGen manages the quality of the generated triple structures through adversarial learning, which combines negative sampling and quality control rules []. Furthermore, an improved attention network that combines deep learning with rule-based techniques enhances the accuracy of equipment used for KG construction []. AtenSy-SNER utilizes syntactic features and semantic augmentation for entity extraction in the software domain and reduces false positives using rule-based validation [].

2.3.3. Entity and Relationship Alignment

Recent studies addressing the problem of entity and relation alignment have proposed various approaches aimed at effectively modeling complex contextual information and mitigating noise. One notable model is the Dual Attention Graph Convolutional Network (DAGCN) [], which combines local attention and global attention to capture both local structural information around entities (e.g., neighboring nodes, adjacent words, attributes, etc.) and long-range dependencies arising from global contextual and syntactic relationships within sentences. By modeling both local and global contexts, DAGCN achieves high accuracy for complex sentences, as shown by benchmarks like TACRED and SemEval.

The Contextual Dependency-Aware GCN [] integrates dependency-based graph structures with contextual information by segmenting sentences at the token level to build and process a dependency graph with a GCN. Unlike earlier methods that only consider simple dependency relations, it incorporates contextual embeddings to resolve polysemy and ambiguity, thereby enhancing entity and relation extraction, even in complex sentences.

Another notable line of research focuses on extracting and aligning relationships between objects and their corresponding actions and states in knowledge graphs. A proposed approach [] automatically identifies and connects object-action and object-state relationships in knowledge graphs—such as linking “cup” with “drink” or “cup” with “fragile”. This method applies a sophisticated matching algorithm that enables the effective association of actions or states with objects, even in noisy or structurally complex contexts. By enriching the knowledge graph information with object-centric features and their associated attributes and actions, this approach enhances the graph’s semantic richness and utility.

Furthermore, an Interactive Optimization approach [] has been introduced to incrementally improve model performance by incorporating continuous user or expert feedback during the relation extraction and alignment process. In this framework, misclassified cases or additional information identified during human inspection are fed back into the model, which adjusts its knowledge graph representation learning based on the feedback. Through repeated cycles of interaction and adjustment, the model gradually refines its alignment capabilities, enhancing its accuracy and reliability, even in complex domains where fully automated systems might struggle.

In summary, approaches based on attention-based modeling, dependency graph integration, object-action/state extraction, and interactive feedback have significantly improved the accuracy and robustness of entity alignment and relationship alignment. Specifically, the DAGCN [] and Contextual Dependency-Aware GCN [] effectively integrate diverse contextual information, achieving superior performance even in complex sentence structures. The object-action/state extraction technique [] robustly captures intricate relationships in noisy environments, while the Interactive Optimization approach [] leverages continuous feedback to iteratively refine model performance, ensuring higher reliability in challenging domains.

2.4. Final Summary

In the Pre-Construction Preparation phase, the focus is on laying a robust foundation for KG construction through two key steps. First, Named Entity Recognition employs advanced techniques such as BERT-based models, Bi-LSTM, CRF, and graph-based methods to accurately identify key entities and map them to predefined ontologies [,,,,,]. Next, Relation Extraction leverages methods like dependency parsing, semantic feature extraction, multi-head graph convolution networks, and attention mechanisms to extract subject–relation–object triples from unstructured text [,,,,,,,,,,,,,,,]

Building on these initial tasks, the Advanced Extraction stage further refines the process. In this stage, Graph-based Methods—using graph neural networks (GNNs) and attention mechanisms—organize entities and relations into structured graphs [,,,,,,,,,,,,]. Additionally, Multimodal and Domain-Specific Extraction integrates diverse data sources (including text, images, and sensor inputs) to enhance precision in specialized fields such as healthcare, cybersecurity, and industrial applications [,,,,,,,,,,,,,,,,,,,,].

Finally, during the Construction phase, the process is extended and automated by incorporating Intelligent Reasoning Methods—such as multi-hop reasoning, causal inference, and quantum embeddings—to support complex inference tasks [,,,,]. This is complemented by Rule-based Approaches that utilize predefined rules, knowledge-guided attention, and logic-based models to further refine extraction accuracy in specialized domains [,,,,,,]. Moreover, advanced techniques for Entity and Relationship Alignment ensure consistency and seamless integration across heterogeneous knowledge graphs [,,,,,].

3. KGs Learning

This section provides a structured guide to various methodologies in Knowledge Graph (KG) learning, covering foundational techniques, advanced models, and other learning methods. The emphasis lies on how these approaches contribute to tasks such as link prediction, relational inference, and structured data analysis. In the subsections that follow, key models and enhancements are described with both theoretical insights and practical examples drawn from diverse application domains.

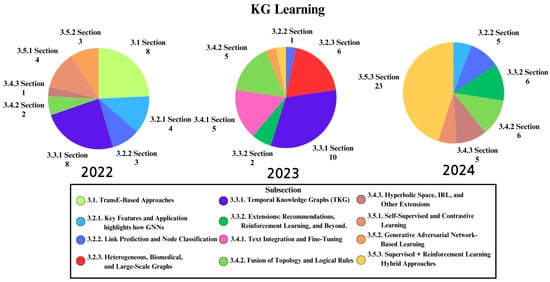

Figure 3 illustrates the annual distribution of research papers on KG learning from 2022 to 2024, offering a closer look at how interest in specific subsections fluctuated over time. Overall, the learning domain exhibits patterns in which certain areas undergo substantial surges in 2023 or 2024, while others remain steady or even decline. Several potential reasons and driving factors are outlined below:

Figure 3.

The graph illustrates the distribution of research papers across different subsections in the knowledge graph (KGs) learning domain over three years (2022–2024). This includes re-cited papers, excluding reference [].

Notable surges in 2022–2024. Supervised plus Reinforcement Learning Hybrid Approach (Section 3.5.3): A dramatic increase in 2024 could stem from breakthroughs in reinforcement learning frameworks and the growing availability of large-scale data. Researchers have been motivated by the promise of achieving more robust and adaptive link prediction or reasoning tasks by combining supervised signals with reinforcement-based explorations.

Text Integration (Section 3.4.1) and Fusion of Topology with Logical Rules (Section 3.4.2): These areas display either steady growth or a marked resurgence. This uptake may be attributed to the release of more powerful natural language processing models (e.g., large language models) and an expanding set of well-structured corpora. As a result, integrating textual information with topological or logical features has become more feasible, thereby driving further research.

Consistent or Gradual Growth. Certain subfields (e.g., Heterogeneous Graphs or Temporal Knowledge Graphs) may show incremental increases in research output. This trend is often fueled by improvements in computational resources and an industry-wide shift toward real-time, dynamic KG applications—leading to a steady demand for incremental or temporal modeling approaches.

Fluctuations and Declines. Some subsections (e.g., Section 3.2.3 and Section 3.5.2) exhibit a concentration of research in a single year, followed by a sharp decrease. Such sporadic peaks may reflect shifting priorities—perhaps due to the saturation of certain methods, a pivot toward more promising alternatives, or newly emerging challenges that redirect attention. Additionally, competition from advanced approaches (like reinforcement learning hybrids or deep generative models) can overshadow previously popular techniques.

Overall, the observed trends highlight how KG learning research has evolved in response to novel techniques, growing computational capabilities, and increasing availability of domain-specific datasets. As new methodologies prove their value (e.g., reinforcement learning hybrids or improved NLP integration), they spur a surge of interest, whereas areas that face fewer unresolved challenges may experience relative decline.

3.1. TransE-Based Approaches

TransE [] learns embeddings for triples (h, r, t) by representing entities (h, t) and relations (r) as low-dimensional vectors that ideally satisfy using a margin-based ranking loss that pulls real triples closer while pushing negative (corrupted) triples apart. Its simplicity, rapid training on large datasets, and scalability have made it a popular foundational method.

h + r ≈ t

However, a single-vector operation often struggles to capture complex relational patterns. In particular, one-to-many or many-to-one relationships pose a significant challenge. For example, by constructing a KG from patent and technical report data, basic embeddings are obtained with TransE and then enhanced by training additional diffusion and absorption vectors to predict “how ideas flow between technological domains” in Technological Knowledge Flow models []. To further improve accuracy, the Multi-Filter Soft Shrinkage technique combines convolutional layers with TransE embeddings as initialization, suppressing noise while modeling higher-order interactions [].

Because TransE embeddings can degrade on sparse or incomplete graphs, iterative rule-guided reasoning methods employing reinforcement learning (RL) have been proposed to dynamically supplement “missing paths” and update embeddings []. The SSKGE framework integrates structural reinforcement and semantic guidance to reduce the training time and improve link prediction performance []. HolmE extends TransE by capturing compositional patterns in Riemannian space, providing a generalized embedding framework that encompasses models like TransE and RotatE [].

Another extension employs TransE as the embedding backbone with a two-level RL mechanism for multi-hop reasoning. In this hierarchical RL approach, a high-level agent decides which relation to explore while a low-level agent selects the appropriate node for that relation, effectively compressing the action space and updating the model end-to-end []. To handle continuously emerging entities and relations, embedding generators are trained to create vectors dynamically from side information (e.g., text or attributes), preserving the overall vector space structure for real-time or online KG completion [].

Finally, for temporal knowledge graphs (TKGs), techniques such as the Temporal-Structural Adaptation Network (TSA-Net) incorporate specialized embedding modules or recurrent mechanisms to capture complex temporal dependencies among temporal, relational, and entity representations [].

Collectively, these studies demonstrate that while TransE [] provides a simple and scalable foundation for knowledge graph embedding, its inherent limitations—such as difficulty in capturing complex relational patterns and sensitivity to data sparsity—have spurred various enhancements. For example, hierarchical and convolutional extensions [,] improve the modeling of knowledge flow and noise suppression, whereas iterative rule-guided reasoning frameworks using reinforcement learning [,] effectively supplement the missing paths in sparse graphs. Additionally, approaches for open-world and temporal graph completion [,] have been developed to dynamically adapt to emerging entities and time-dependent data.

3.2. Graph Neural Network (GNN)-Based Learning

GNNs learn node embeddings by iteratively aggregating and transforming information from nodes, edges, and their neighbors using message passing. When applied to KGs, GNNs can flexibly handle heterogeneous graphs with diverse nodes and relationship types.

3.2.1. Key Features and Application Highlights How GNNs

Graph Neural Networks (GNNs) learn embeddings for each node by iteratively aggregating and transforming the structure of the nodes, edges, and their neighbors using message-passing techniques. When applied to knowledge graphs, GNNs exhibit the following characteristics: First, heterogeneous graphs with diverse types of nodes and relationships can be flexibly handled using techniques like meta-paths or attention mechanisms. Second, while iterative structural learning enables information from neighboring nodes to propagate into deeper layers, allowing higher-order structure learning, caution is required to avoid over-smoothing. Multi-Grained Semantics-Aware Graph Neural Networks [] proposed a GNN that effectively learns the rich semantic information of knowledge graphs by considering multi-grained semantics. Furthermore, the Graph Relearn Network [] introduced methods to mitigate performance fluctuations in GNNs and improve prediction accuracy. Recent work has further expanded the application scope of GNNs. For instance, ref. [] leveraged spatiotemporal scene graph embeddings—where GNNs are combined with LSTM layers—to predict autonomous vehicle collisions by capturing dynamic inter-object relationships in complex traffic scenes. Similarly, ref. [] demonstrated that integrating Graph Convolutional Neural Networks with Label Propagation can significantly enhance node classification performance by adaptively learning edge weights based on node label information.

The GNN-based Imbalanced Node Classification Model (GNN-INCM) [] is designed to address class imbalance issues by applying loss balancing along with node sampling strategies to enhance performance in minority classes. Specifically, it selects nodes based on importance and utilizes the structural characteristics of graph networks to effectively learn from minority class data. This leads to a robust node classification performance, even in datasets with severe class imbalances. INS-GNN [], which integrates self-supervised learning into GNNs, ensures stable node classification performance on imbalanced datasets. By autonomously learning the characteristics of graph data, this model alleviates the problem of under-learning in minority class nodes, achieving practical performance improvements in various real-world networks. GNNs have emerged as a powerful paradigm for learning structured graph data by leveraging both node attributes and underlying graph topology to generate informative embeddings []. By aggregating information from neighbors, GNNs capture complex dependencies within graphs, making them highly effective for tasks like node classification and link prediction. The Graph Convolutional Network (GCN), an early GNN framework, introduced convolution-like operations tailored for graph-structured data [].

The Graph Attention Network (GAT) architecture refines this process by incorporating an attention mechanism to assign adaptive weights to neighbor information []. The same GAT architecture leverages a specialized relational aggregator that captures nuanced interactions associated with various entity and relationship types, making it well-suited for heterogeneous graphs [,]. The Dynamic Representation of Relations and global information (DRR-GAT) [] extends the existing GAT by applying dynamic relation-specific weights, effectively modeling complex graph structures. Iterative Graph Self-Distillation (IGSD) [] employs an iterative self-distillation technique to enhance the prediction stability of GNN models by refining the latent representation of nodes. At each iteration, it generates new embeddings to improve the model prediction accuracy, particularly in large graph datasets, reducing the model variance and delivering more precise results.

Beyond general graph analytics, GNNs serve as the cornerstone of knowledge graph (KG) learning, supporting various tasks such as entity classification, relationship prediction, and KG completion [,]. In this context, GNNs leverage node embeddings to exploit relational information, uncovering complex semantic patterns encoded in KGs. The integration of contrastive learning techniques within GNN frameworks is especially noteworthy, as it promotes the alignment of embeddings with meaningful semantic subspaces. This alignment aids in distinguishing relational patterns, ultimately enhancing the performance of KG completion and other downstream tasks []. Additionally, the flexibility of GNNs has spurred the emergence of advanced architectures, such as higher-order models that capture multi-hop dependencies and path-based models that incorporate longer relational contexts to further refine these relationships []. These diverse approaches underscore the adaptability of GNNs, highlighting their capability to tackle various learning scenarios and enhance the utility of knowledge graphs in real-world applications.

Compared to TransE-based methods, GNN-based approaches leverage iterative message passing to capture both local and higher-order relational structures. This makes them particularly effective for heterogeneous graphs, where diverse node types and complex interactions are prevalent [,,,,,,,,,,,,,,]. In particular, architectures such as the Graph Relearn Network [] and Graph Attention Network [,] have been shown to mitigate issues like over-smoothing and performance fluctuations, resulting in more robust performance in tasks such as node classification and link prediction.

3.2.2. Link Prediction and Node Classification

In Graph Neural Network (GNN)-based learning, link prediction and node classification are two key tasks that leverage the inherent structure of graphs. Link prediction infers missing or potential future connections by analyzing the relational patterns among nodes, while node classification assigns labels to nodes by considering both their individual features and the influence of their neighboring nodes. Together, these tasks empower various applications, such as recommendation systems, network analysis, and anomaly detection, by effectively capturing the underlying connectivity within the graph.

By representing objects such as vehicles, pedestrians, and road facilities as a graph and combining it with a temporal LSTM, SG2VEC [] can predict future collision events. Node embeddings are directly optimized for the downstream task of “collision likelihood” and are lightweight for fast inference on actual AV (autonomous vehicle) edge hardware. MuCoMiD [] addresses biological knowledge graphs (genes, miRNAs, diseases, proteins, etc.) using GNN-based multi-task learning. It addresses challenges such as data scarcity and noise by enabling automatic feature extraction and integrating more than five different biological data sources. The GNN layers learn the interactions between nodes (biomolecules), demonstrating robust predictions even with limited labeled data.

The heterogeneous graph-based knowledge tracing method with spatiotemporal evolution (TSKT) [] is designed to learn from graph data containing heterogeneous nodes and relationships. This model captures complex interactions among various node and relationship types through meta-paths and meta-graphs, achieving excellent performance in modeling learners’ learning patterns. The Graph Structure Self-Contrasting (GSSC) [] approach enhances graph-based learning performance by integrating graph structures into a multilayer perceptron (MLP). By incorporating graph structures into the input layer of the MLP, this model effectively learns the nonlinear interactions between nodes, significantly improving the node classification performance.

The Graph-Aware Tensor Factorization Convolutional Network (GATFCN) [] combines tensor factorization with graph convolution (GCN). By integrating graph structural information into the tensor factorization process, this model learns more precise interactions between nodes and relationships, achieving outstanding performance in tasks like link prediction. Ref. [] combined a GNN with self-supervised learning to detect adverse effects in drug−drug interactions. This model learns complex relationships from large-scale drug datasets and captures patterns of drug−drug similarities, interactions, and side effects. It predicts potential side effects based on real clinical data, aiding in drug safety evaluation and clinical trial design. GraphX-Net [], a GNN model designed for cancer survival prediction, represents patient data as nodes and similarities as edges to construct the graph structure. This model evaluates the contribution of each sample (patient) within the graph and accurately predicts the probability of survival in patients with cancer. By leveraging geometric features and graph structural information, advanced cancer survival predictions are supported, significantly contributing to personalized treatment design and survival analysis.

3.2.3. Heterogeneous, Biomedical, and Large-Scale Graphs

In heterogeneous information networks (HINs) containing different types of nodes and relationships (e.g., person-organization, paper-author, drug-disease), GNNs leverage meta-paths and meta-graphs to learn diverse proximities []. For example, HIGCN (Heterogeneous graph convolutional network with local influence) calculates the local importance of each node to extract multi-faceted semantics within heterogeneous graphs. GNNs are actively utilized to predict the survival rates of patients with cancer, drug−drug interaction (DDI) predictions, and hospital knowledge graph expansions []. Advanced variants like Graph Neural Topic Models and Bayesian GNNs, have also emerged to address challenges in medical data, such as uncertainty, heterogeneity, and scalability. For instance, the Geometric GNN [], used for cancer survival prediction based on omics data, calculates node embeddings by considering the geometric features of the data (e.g., curvature and Ricci curvature). A comprehensive survey of graph embedding techniques tailored for biomedical data [] provides an overview of graph-specific embedding methods for biomedical applications.

For large-scale knowledge graphs (KGs) containing hundreds of millions to billions of nodes, the cost of message passing becomes a significant bottleneck, leading to research on distributed implementations or model parallelization []. For example, the distributed non-negative RESCAL algorithm integrates GNN-like operations to maximize the efficiency. Moreover, automatic model selection in large-scale environments is a critical issue. The distributed non-negative RESCAL with automatic model selection for exascale data [] introduces a distributed RESCAL embedding method suitable for exascale datasets, enabling the efficient learning of large-scale knowledge graphs.

3.3. Dynamic and Task-Specific Learning

3.3.1. Temporal Knowledge Graphs (TKG)

Research is being conducted on GNNs that learn temporal knowledge graphs (TKGs) that change over time, enabling the inference of future facts from past data. When tracking structural changes over time, the GNN layers update the node representations by integrating the current timestamp graph with past (or neighboring) timestamps. For example, SFTe [] predicts future interactions by embedding the structure, factuality, and temporality individually.

To adapt quickly, even with few-shot training samples for new relations or entities, research is exploring GNNs with meta-learning to dynamically adjust parameters []. Models like MTRN (task-related network based on meta-learning) employ specialized components, such as neighbor-aware encoders and self-attention encoders, to sensitively respond to task transitions. Knowledgebra [] proposed an embedding method that optimizes the model performance by leveraging the algebraic structure of KGs to enhance global consistency. Dynamic relation learning for link prediction in knowledge hypergraphs [] improves the link prediction performance in hypergraphs through dynamic relation learning.

T-GAE [] utilizes temporal information in graphs to learn the interactions between nodes. It employs a graph attention mechanism to integrate spatiotemporal dependencies, which allows the model to capture dynamic changes in temporal graphs, predict future interactions from past data and handle complex temporal patterns efficiently. The Bayesian hierarchical graph neural network (BHGNN) [] incorporates uncertainty in graph data into the learning process to produce highly reliable results. It is particularly effective in tasks where reliability is crucial, such as industrial fault diagnosis, in which uncertainty feedback is used to simultaneously improve prediction accuracy and model confidence. This approach contributes to error detection and refinement in several scenarios.

CDRGN-SDE [] employs a cross-dimension network to model interactions across multiple dimensions when learning temporal data. The model captures changing graph structures along the temporal axis and learns complex dependencies in temporal graphs to make accurate predictions. This enables effective learning, even in large-scale temporal graphs. Ref. [] proposed a model that simultaneously learns multiple graph structures along the temporal axis and infers relationships and patterns in TKGs. This model efficiently captures time-dependent patterns and reflects interactions across the past, present, and future.

Dynamic learning approaches in temporal knowledge graphs not only adapt to evolving data but also help mitigate issues such as hallucinated relationships. For instance, models like T-GAE adjust node representations over time to reduce the occurrence of spurious or unsupported links. For example, models like T-GAE [] incorporate temporal-structural adaptations to adjust node representations over time, reducing the occurrence of spurious or unsupported relations. Additionally, frameworks that integrate reinforcement learning [] provide mechanisms to continuously refine the graph structure, ensuring that errors due to hallucination are minimized in real-world applications.

3.3.2. Extensions: Recommendations, Reinforcement Learning, and Beyond

When user-item, item-attribute, or item-item interactions are represented as a graph and GNNs are used to learn node embeddings, the recommendation accuracy improves significantly []. EMKR employs multi-task learning (recommendation + KGE) to enhance the performance of both tasks. Reinforcement Negative Sampling (KGRec-RNS) uses reinforcement learning (RL) to identify “meaningful” negative samples [], which provide far more informative negatives than random sampling, improving training efficiency and interpretability.

For fully inductive learning in evolving KGs, one method has been proposed to classify or predict links using GNNs on sampled subgraphs rather than the entire graph []. Techniques such as personalized PageRank (PPR) are employed to extract neighboring nodes, which helps mitigate over-smoothing and enables flexible inductive reasoning. Ref. [] proposed a sequential recommendation system based on knowledge graphs and transformers, and ref. [] introduced an enhanced multi-task learning framework that integrates recommendation and KG embedding tasks. Additionally, some approaches combine first-order neuro-symbolic and logic rule embeddings to improve the explainability of recommendation tasks []. For example, logical rules such as “If X is related to Y, and Y is similar to Z, then X is related to Z” can be used in conjunction with neural embeddings. Moreover, BiG-Fed [] further enhances the recommendation performance by combining hierarchical optimization with federated learning.

Ref. [] introduced a method for extracting local subgraphs from large-scale graphs to reduce the training time and improve prediction accuracy. This approach performs link prediction based on subgraphs, achieving high efficiency and performance without requiring the entire graph to be trained, making it particularly useful for large-scale datasets like recommendation systems. Ref. [] proposed a model for knowledge graph-based question generation using subgraphs. By focusing on specific nodes and relationships, the model generates questions that reflect the structural information from the graph, enabling more precise responses in question-answering systems.

Global-Local Anchor Representation (GLAR) [] is an inductive link prediction model centered on subgraphs, capable of effectively predicting links in dynamic environments where new nodes and relationships are continuously added. This model leverages the properties of local subgraphs to enable scalability and achieves high performance with an efficient computation.

In summary, dynamic and task-specific learning models offer significant advantages over static embedding methods by adapting to new data using meta-learning and dynamic relation learning. This adaptability not only improves the overall accuracy but also enhances the robustness of the knowledge graphs by reducing errors, such as hallucinated relationships.

3.4. Transformer-Based Learning

Transformers have demonstrated exceptional performance in natural language processing, leading to a significant increase in research combining Transformers with knowledge graphs (KGs). For KGs that extensively utilize textual information from documents, the self-attention mechanism allows modeling of long-range dependencies and subtle contextual relationships between entities. This capability has positioned Transformers as an increasingly influential approach to KG learning [].

3.4.1. Text Integration and Finetuning

Research such as MHAVGAE [] combines weakly supervised learning with a Multi-head Attention Variational Graph Auto-Encoder, integrating Transformer-style multi-head attention into a VAE to automatically learn prerequisite relationships in large-scale conceptual graphs. Metrics like Resource-Prerequisite Reference Distance (RPRD) are used to augment incomplete labels, significantly reducing the need for manual labeling.

Pretrained text-based models (e.g., BERT) have inspired dual attention structures in graph layers, assigning different attention channels based on the importance of the relations []. For instance, the Dual-AEN model independently updates entities and relations while facilitating their interactions.

Methods like SATrans [] inject structural information from tables and graphs directly into the attention mask of a Transformer encoder, mitigating structural loss caused by “flattening” data into plain text. The lower layers of self-attention focus on local information, while the upper layers emphasize global information, achieving high accuracy in tasks that reflect local-global structures, such as validation and question answering. Fine-tuning these models on domain-specific KG data enables the capture of nuanced semantic features that facilitate tasks like relation extraction and entity linking [].

Ref. [] proposed a technique to expand knowledge graphs (KGs) using pretrained language models (e.g., BERT, GPT). This approach extracts entities and relationships from text data and integrates them into the KG, thereby enriching its representation. By leveraging the strong language understanding capabilities of pretrained models, this method adds new information that existing KGs may lack and enhances the semantics of the existing relationships. The synergy between NLP and KG in this approach improves performance across various applications, including question answering and recommendation systems.

Transformer architectures (e.g., BERT) have become the dominant paradigm in knowledge graph construction, particularly due to their ability to capture long-range dependencies. However, traditional recurrent neural networks (RNNs), such as LSTM or GRU models, can still offer advantages in terms of computational efficiency for shorter sequences. Table 1 summarizes the key differences between these methods in terms of accuracy, efficiency, and practical applicability.

Table 1.

Comparison of RNN-based methods vs. transformer-based methods.

3.4.2. Fusion of Topology and Logical Rules

The Fuse Topology contexts and Logical rules in Language Model (FTL-LM) [] enhances knowledge graph (KG) learning by combining graph topology information (structural relationships between nodes) with logical rules. This model imposes constraints on relationships within the graph through logical rules while learning the structural interactions of nodes and edges via topology information. For instance, it improves the reliability of relation prediction using rules like “If X is related to Y, and Y is related to Z, then X is also related to Z”. This approach excels in domains where logical consistency is critical, such as healthcare and the law.

The Medical Bidirectional Encoder Representations from Transformers (MCBERT) [] system learns the relationships among diseases, drugs, and symptoms using domain-specific KGs. By modeling complex relationships in medical data using KGs, MCBERT facilitates efficient analysis and prediction. It is particularly useful for tasks such as drug-disease interactions, prescription suitability evaluations, and patient profiling, supporting medical professionals in decision-making.

The Adaptive Hierarchical Transformer with Memory (AHTM) [] combines a hierarchical Transformer architecture with model compression techniques to effectively generate KG embeddings for large-scale medical datasets. This method accounts for the high-dimensional nature of medical data and learns key patterns and relationships while reducing memory usage and computational costs. For example, it processes extensive medical datasets, including patient records, pharmaceutical databases, and genomic information to produce embeddings that maintain predictive accuracy while enhancing computational efficiency.

Ref. [] explored the potential of integrating large language models (LLMs) with graph data to expand and utilize KGs. This survey proposes methods for combining LLMs’ language understanding and generation capabilities with graph-based learning to effectively learn new entities and relationships. By merging the structural information of graph data with the rich textual representations of LLMs, this approach achieves strong performance in various tasks, such as KG-based question answering, summarization, and relation prediction.

The Unsupervised Semantics and Syntax into heterogeneous Graphs (USS-Graph) [] adopts unsupervised learning techniques to efficiently learn from graph data. This model simultaneously captures the structural features and syntactic semantics of a graph and models node and edge interactions with precision. By automatically learning significant patterns and relationships in the graph without explicit labels, it demonstrates high scalability, even with large datasets. This approach is applicable to graph-based text classification, relation extraction, and other similar tasks.

3.4.3. Hyperbolic Space, IRL, and Other Extensions

Traditional Transformers often assume Euclidean space; however, many studies suggest that hyperbolic space (curvature < 0) is better suited for hierarchical KGs (e.g., tree-structured or layered graphs). For instance, HyGGE [] performs graph attention in a hyperbolic space to capture complex neighbor structures and relationship patterns more effectively, while DeER [] adopts a trainable curvature mechanism that automatically adjusts geometric properties to fit the data. Similarly, RpHGNN [] refines embeddings in heterogeneous graphs via an iterative “propagation-update” cycle, thus reducing information loss and preserving hidden relationships across multi-type nodes. Each of these methods demonstrates how hyperbolic embeddings can offer more nuanced representations than standard Euclidean approaches, particularly for KGs with strongly hierarchical features.

Beyond the choice of embedding space, AInvR [] integrates IRL (Inverse Reinforcement Learning) with a Transformer-based inference module to automatically learn reward functions—thereby tackling the sparse reward issues frequently seen in multi-hop reasoning tasks. This IRL-based approach can also be combined with meta-learning strategies (discussed in Section 3.5.4) to further address data sparsity or dynamically adapt to new reasoning paths. Meanwhile, ref. [] models user-item interactions for sequential recommendation tasks, highlighting how Transformers handle temporal or sequential dependency in KGs, and ref. [] processes object-region relationships within video data to enhance interpretability in Video QA. Furthermore, HG-SCM [] adopts a structural causal model perspective to identify events and their causes in a human-like manner, emphasizing causal inference from graph data. Lastly, ref. [] proposed a graph-augmented MLP-based parallel learning model that employs quantization and parallelized processes—allowing real-time training and inference for massive graph datasets.

3.5. Additional Learning Methods

3.5.1. Self-Supervised and Contrastive Learning

Techniques for self-supervised learning of embeddings by constructing pairs of nodes or subgraphs within graphs are emerging []. Implicit GCL (iGCL) constructs augmentations in the latent space, avoiding excessive random edge deletion/addition to balance structural preservation and training stability. Fine-Grained GCL (FSGCL) [] enhances representation learning by contrasting meanings across graph motifs and meta-paths. Dynamic attention networks adaptively focus on different parts of the graph based on task requirements, helping to better distinguish between entities and relationships []. This approach emphasizes context-specific features, enabling models to better understand the significance of entities and their relationships.

TCKGE [] combines contrastive learning with Transformers to enhance embedding performance, while Clustering Enhanced Multiplex Graph Contrastive Representation Learning [] improves clustering performance across multiple graphs using contrastive learning. Graph Contrastive Learning with Implicit Augmentations [] proposes contrastive learning leveraging implicit augmentations, and [] improves knowledge graph completion performance by separating relational GNNs through contrastive learning. Graph Contrastive Learning with Personalized Augmentation [] introduced contrastive learning with personalized augmentations, and LLM-TIKG [] proposed the generation of threat intelligence knowledge graphs based on large language models using contrastive learning. In addition, one method [] shows that self-attention mechanisms can generate low-dimensional knowledge graph embeddings by dynamically adjusting inter-node interactions, thereby enhancing the link prediction performance. Ref. [] introduced a multi-level graph knowledge contrastive learning framework that effectively integrates both local and global graph information for superior representation learning. Ref. [] proposed a method that leverages self-attention mechanisms to generate low-dimensional embeddings, thereby dynamically adjusting inter-node interactions and significantly enhancing link prediction performance.

3.5.2. Generative Adversarial Network (GAN)-Based Learning

In KGE models, generating negative triples by random replacement is often too easy, which allows the model to quickly distinguish them. To address this, GANs are used to generate plausible negative triples, while a discriminator simultaneously classifies real/fake triples and learns embeddings []. After training with Generative Adversarial Graph Embedding, the generator effectively restores the distribution of the training data, and the discriminator acquires expressive capabilities for triple classification or link prediction []. Moreover, one study [] proposed a GAN-based framework that effectively models complex relational patterns—such as one-to-many and many-to-one—thus overcoming the limitations of traditional single-vector representations.

3.5.3. Integrating Supervised and Reinforcement Learning

Traditional supervised learning excels at learning from labeled triples and node annotations, ensuring stable and reliable embeddings. However, supervised methods alone may miss latent or less frequent relationships, especially in sparse regions. Reinforcement learning (RL) addresses this limitation by dynamically exploring the graph to discover additional link candidates and multi-hop paths. By integrating both approaches—as exemplified in RD-MPNN []—the model benefits from the strengths of each method. Specifically, supervised learning provides a solid foundation by reliably learning known relationships, while RL complements this by continuously exploring and validating new connections in underrepresented areas. This hybrid strategy leads to higher-quality embeddings that are both robust and comprehensive.

In recommendation systems, for example, KGRec-RNS [] leverages RL to model user activity as a Markov Decision Process. This approach identifies “meaningful” negative samples that are more informative than random negatives, thereby enhancing the learning efficiency and interpretability. Additionally, in multi-hop reasoning tasks, using Inverse Reinforcement Learning (IRL) [] to learn reward parameters from real data allows the model to better prioritize correct paths and mitigate over-rewarding of incorrect routes. The combination of supervised learning and RL thus achieves a balanced trade-off between stability and dynamic exploration, resulting in improved overall performance.

3.5.4. Hyperbolic and Geometric Learning, and Meta-Learning

Building on the hyperbolic concepts introduced in Section 3.4.3, more advanced hyperbolic and non-Euclidean geometric learning methods further exploit the properties of curved spaces to model hierarchical and complex relationships in KGs. For example, one approach [] leverages Poincaré geometry to enhance link prediction by capturing intricate interactions between entities and relations, while another [] proposes a deep hyperbolic convolutional model that expands the expressive power of KG embeddings. CoPEs (Composition-based Poincaré embeddings) [] similarly use hyperbolic geometry to preserve distance relationships in large-scale or deeply hierarchical graphs, improving generalization and interpretability []—a critical advantage when dealing with real-world KGs that often contain multi-level hierarchies.

Meanwhile, meta-learning techniques address the challenges of data sparsity and frequent domain shifts by focusing on both the global graph structure and local few-shot scenarios []. Approaches such as MAML [] and Prototypical Networks [] enable learning from minimal examples [], offering robust KG completion in environments with limited or intermittently available data. Building on these fundamentals, ref. [] introduced the selective transfer of KG embeddings for few-shot relation prediction, while ref. [] applied multi-granularity meta-learning to tackle long-tail classification—a scenario in which many entities or relations appear infrequently. Furthermore, ref. [] integrated dynamic prompt learning with meta-learning for multi-label disease diagnosis, swiftly adapting to newly introduced diseases and label structures by modeling disease-symptom relationships within a KG. This approach ensures high diagnostic accuracy in data-sparse conditions and is extendable to personalized diagnostic systems, underscoring the potential synergy between hyperbolic embedding methods and meta-learning for real-world medical applications.

3.6. Final Summary

KG learning encompasses a broad range of methodologies that enable effective link prediction, relational inference, and structured data analysis. Foundational approaches, such as TransE-based embeddings [,,,,,,,,], efficiently represent entities and relations, while GNN-based methods [,,,,,,,,,,,,,,,,,,,,,,,] capture complex, heterogeneous interactions—supporting tasks like node classification and link prediction. Dynamic and task-specific strategies leverage temporal modeling and meta-learning to adapt to evolving graph structures and further enhance recommendation systems and reinforcement learning [,,,,,,,,,,,,,,,,,]. Transformer-based approaches—notably those incorporating pretrained text models []—integrate textual and structural information via self-attention [,,,,,] and improve relational consistency by fusing topology with logical rules [,,,,]. Finally, additional learning methods—including self-supervised/contrastive learning, GAN-based negative sampling, and supervised plus reinforcement learning hybrids—address challenges such as few-shot and long-tail learning [,,,,,,,,,,,,,,,,,,,,,,,,,]. Together, these diverse strategies form a comprehensive framework that drives significant advancements in KG learning in various domains.

4. KGs Evaluation

Intrinsic Evaluation (Section 4.1), Extrinsic Evaluation (Section 4.2), Qualitative Evaluation (Section 4.3), and Dataset and Domain-Specific Evaluation (Section 4.4). Overall, the number of publications peaks in 2023 before declining in 2024, but each sub-section follows its own trajectory.

Steady or Growing Areas Intrinsic Evaluation (Section 4.1) gradually increases and stabilizes over time. This steady rise may be attributed to the ongoing need for robust internal metrics—such as accuracy, consistency, and completeness—that allow researchers to benchmark KG models in controlled settings.

Peaks and Surges in Specific Years Extrinsic Evaluation (Section 4.2) and Dataset and Domain-Specific Evaluation (Section 4.4) show a marked surge in 2023, possibly reflecting a heightened focus on real-world applications and specialized datasets during that period. As industries and academic communities sought more tangible results and domain-aligned benchmarks, publications in these areas spiked to address the practical constraints.

Fluctuations and Declines Qualitative Evaluation (Section 4.3) reaches its highest point in 2023, then experiences a decline in 2024. This decline may indicate a shift in research priorities toward more standardized or quantifiable metrics or the saturation of existing qualitative methods. Over time, as certain qualitative approaches mature, interest may wane in favor of new, data-intensive evaluation techniques.

Overall, these trends underscore how the KG Evaluation landscape evolves in tandem with methodological advances and the practical demands of specific domains. While some approaches—like intrinsic metrics—remain consistently relevant, others experience spikes or dips as researchers adapt to changing expectations for rigorous, real-world performance and domain-driven requirements.

4.1. Intrinsic Evaluation

4.1.1. What Is an Intrinsic Evaluation?

Intrinsic evaluation offers a suite of quantitative metrics that assess the quality and performance of the outputs generated by a knowledge graph model, and each metric is particularly well-suited to different tasks and application scenarios. For example, classification metrics such as Precision, Recall, and F1-Score are advantageous when evaluating discrete prediction tasks—like determining whether a predicted RDF triple correctly represents the relationship between entities. In contexts where false positives are especially detrimental, a high Precision is critical, while Recall becomes paramount when it is crucial to capture all relevant relations. The F1-Score then serves as a balanced indicator when both types of errors are equally consequential.

In contrast, rank-based metrics, such as Mean Reciprocal Rank (MRR) and Hits@K, are more appropriate for tasks involving link prediction or information retrieval, where the model generates a ranked list of candidate relations. MRR is beneficial because it captures the average rank of the first correct answer, thereby favoring models that bring correct candidates to the top, while Hits@K focuses on the proportion of cases in which the correct answer appears within the top K predictions—making these metrics particularly useful in systems that can operate effectively on a shortlist of candidates.

For continuous numerical prediction tasks, regression metrics like Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE) are used. RMSE is especially sensitive to large errors, which is advantageous in applications where significant deviations can have a profound impact, whereas MAE provides a straightforward average error measure that is easier to interpret. MAPE, which expresses errors as percentages relative to true values, is particularly useful in scenarios in which the relative scale of error is more informative than the absolute difference.

Additionally, when assessing the quality of the learned embeddings, methods such as the Pearson correlation coefficient can quantify the linear relationship between embedding similarities and ground-truth semantic relationships. In some cases, an F1-Score is also computed for clustering or classification tasks performed on embedding spaces, providing further insight into the expressive power of the representations.

Finally, evaluating algorithm optimization and efficiency through measures like average effectiveness, standard deviation, execution time, and memory usage is essential when scaling models to large datasets or deploying them in real-time applications. These metrics not only reflect the model’s internal optimization and stability across various runs but also determine its practical viability in resource-constrained environments.

By carefully selecting and interpreting these intrinsic evaluation metrics in accordance with the specific requirements and constraints of the knowledge graph application, researchers can gain a comprehensive understanding of the model performance and make informed decisions regarding further optimization and deployment strategies. Through Table 2, we can see how the evaluation metrics are assessed.

Table 2.

Comprehensive overview of intrinsic evaluation metrics and their mathematical definitions.

4.1.2. Intrinsic Evaluation: Metrics, Methods, and Case Studies

In a study based on the public standard for public transportation data, researchers used the GTFS Madrid benchmark to precisely measure how well a CSV-based RML mapping tool produced results that matched public transportation data and to evaluate the impact of data types (e.g., differences in handling xsd:long versus xsd:integer) on mapping errors and attribute matching success rates []. Additionally, a study was conducted that rigorously evaluated the performance differences among link prediction techniques using various knowledge graph datasets under the same preprocessing conditions and demonstrated the statistical significance between methods using the Wilcoxon test and the Kolmogorov-Smirnov test []. In the Chinese domain, intrinsic performance was systematically verified by quantitatively evaluating the embedding representation and the effect of negative samples in an entity linking model using the FB15K237, WN18RR, and SCWS datasets, measured using the Pearson correlation coefficient and F1-Score [].