Trust in the Automatic Train Supervision System and Its Effects on Subway Dispatchers’ Operational Behavior

Abstract

1. Introduction

2. Theoretical Background, Related Work, and Hypotheses

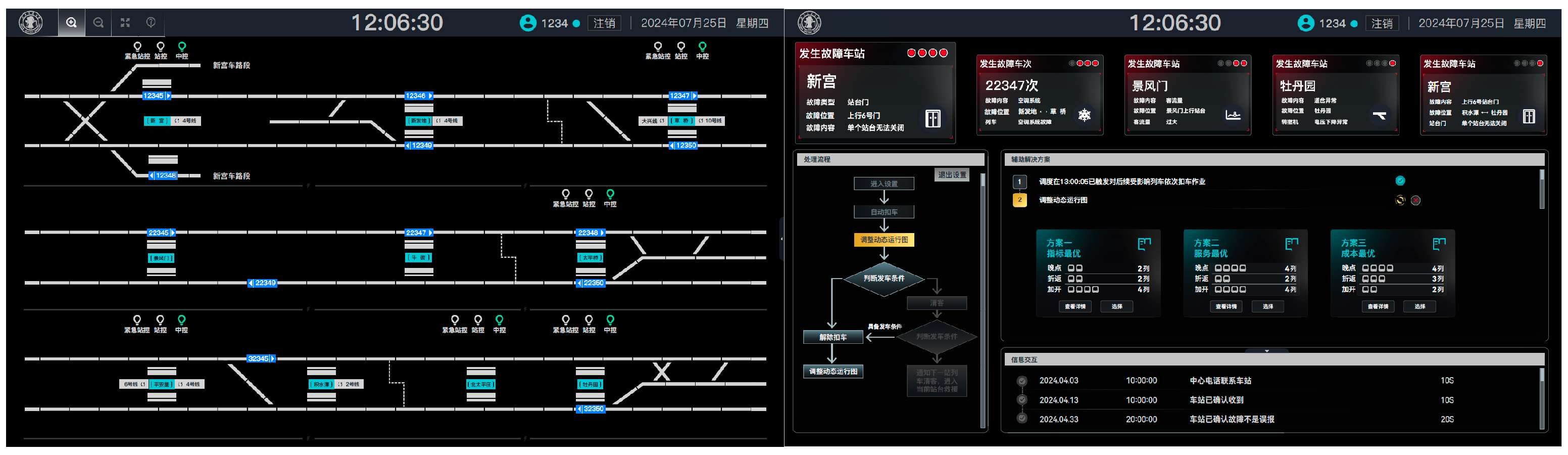

2.1. Foundations of Automation Trust in ATS Systems

2.2. Trust-Driven Reliance Behavior in Operational Contexts

3. Research Methodology and Empirical Analysis

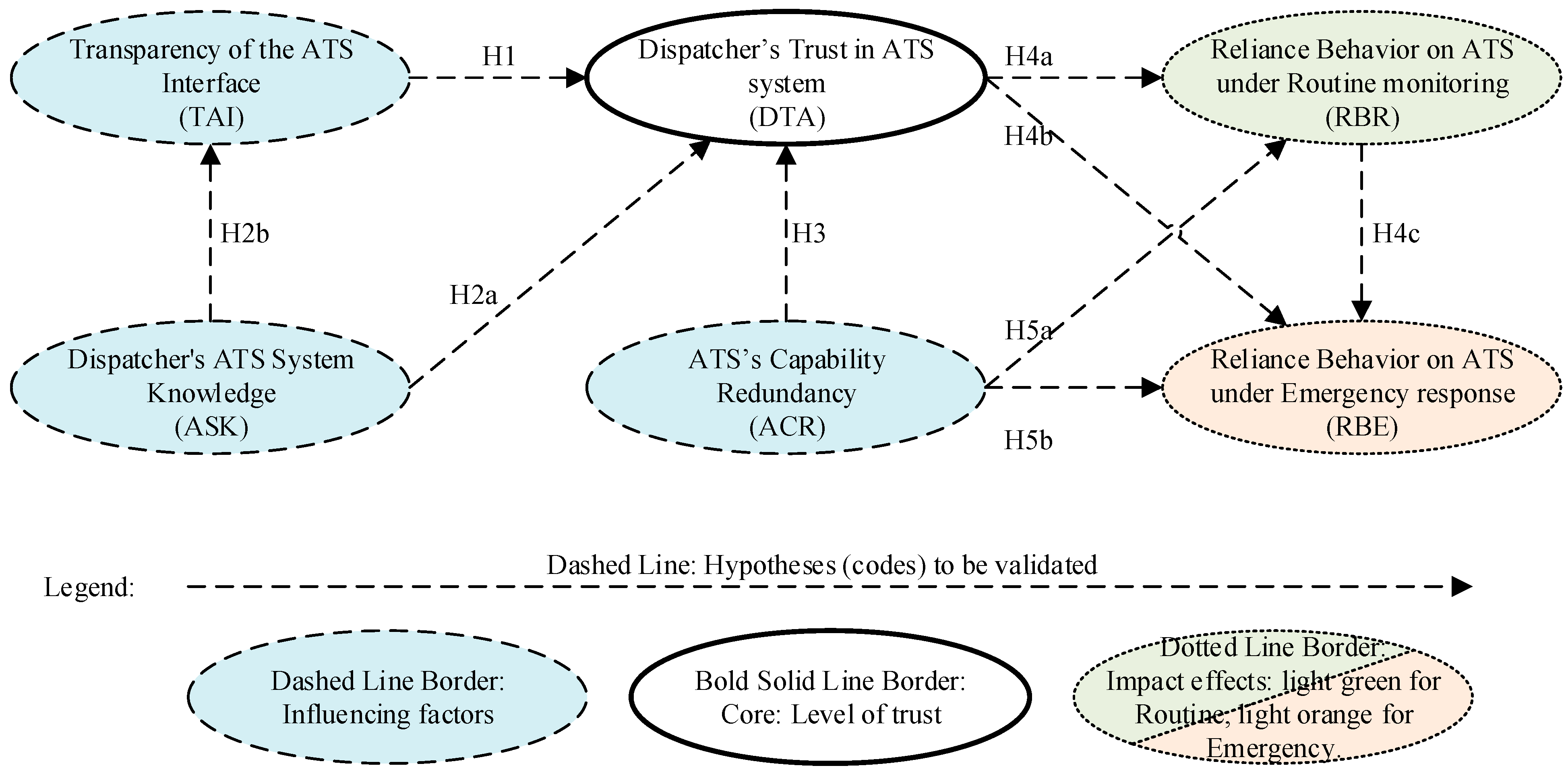

3.1. Summary of Core Constructs and Hypotheses

3.2. Research Design: Pre-Tests and Final Questionnaire Development

3.3. Data Collection and Sample Screening

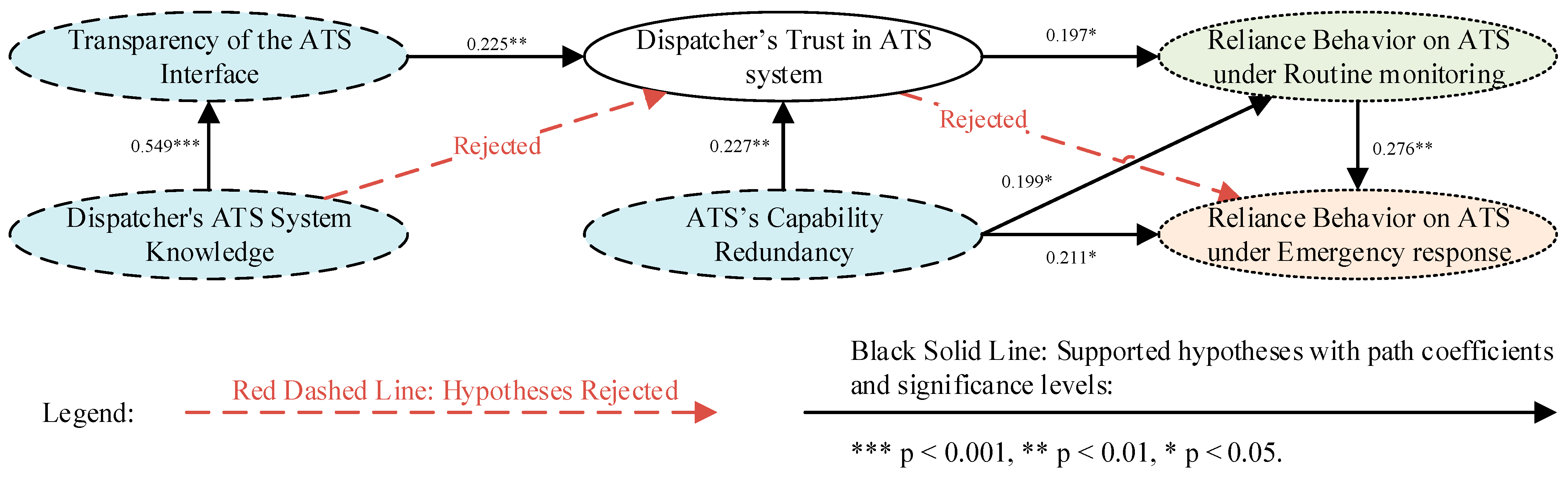

3.4. Structural Equation Modeling and Hypothesis Validation

4. Discussion

4.1. Key Factors Shaping Dispatchers’ Trust in ATS Systems

4.2. Impact of Trust on Dispatchers’ Behavioral Responses

4.3. Task Complexity in Emergency vs. Routine Monitoring Situations: A Comparative Discussion

4.4. Future Directions and Practical Implications

4.5. Limitations and Generalizability

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Automatic Train Supervision System Automation Trust Questionnaire

Appendix B. Overview of Questionnaire Setup

| No. | Research Dimension (Automation Trust in ATS) | No. | Research Dimension (Task Complexity) |
|---|---|---|---|
| 1 | ASK.Q2 | 21 | Emergency TC-1-Size |
| 2 | TAI.Q1 (Scale Reversed) | 22 | Emergency TC-2-Variety |
| 3 | DTA.Q2 | 23 | Emergency TC-3-Ambiguity |
| 4 | RBR.Q2 (Scale Reversed) | 24 | Emergency TC-4-Relationship |
| 5 | ACR.Q2 | 25 | Emergency TC-5-Variability |
| 6 | RBE.Q2 | 26 | Emergency TC-6-Unreliability |
| 7 | RBR.Q1 | 27 | Emergency TC-7-Novelty |
| 8 | TAI.Q3 | 28 | Emergency TC-8-Incongruity |
| 9 | RBE.Q3 (Scale Reversed) | 29 | Emergency TC-9-Action Complexity |
| 10 | TAI.Q2 | 30 | Emergency TC-10-Temporal Demand |
| 11 | ACR.Q3 (Scale Reversed) | 31 | Routine Monitoring TC-1-Size |
| 12 | ASK.Q1 | 32 | Routine Monitoring TC-2-Variety |
| 13 | DTA.Q3 (Scale Reversed) | 33 | Routine Monitoring TC-3-Ambiguity |
| 14 | RBR.Q3 | 34 | Routine Monitoring TC-4-Relationship |
| 15 | RBE.Q1 (Scale Reversed) | 35 | Routine Monitoring TC-5-Variability |
| 16 | ASK.Q3 (Scale Reversed) | 36 | Routine Monitoring TC-6-Unreliability |
| 17 | DTA.Q1 (Scale Reversed) | 37 | Routine Monitoring TC-7-Novelty |
| 18 | ACR.Q1 (Scale Reversed) | 38 | Routine Monitoring TC-8-Incongruity |
| 19 | Open-Ended Questions on Enhancing Trust in Automation | 39 | Routine Monitoring TC-9-Action Complexity |
| 20 | Open-Ended Questions on Reducing Trust in Automation | 40 | Routine Monitoring TC-10-Temporal Demand |
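
Items marked "Scale Reversed" are worded in the opposite direction to their construct and are normally recoded before item scores are aggregated. The Python sketch below illustrates that recoding under an assumed 1–7 response range (the scale length is an assumption, not stated in this section); the item codes come from the table above, while the helper names and the sample respondent are hypothetical.

```python
# Minimal sketch: recode reverse-scored Likert items before aggregation.
# ASSUMPTION: responses range from 1 to 7; change the bounds if the
# questionnaire used a different scale.
SCALE_MIN, SCALE_MAX = 1, 7

# Items flagged "Scale Reversed" in Appendix B.
REVERSED_ITEMS = {
    "TAI.Q1", "RBR.Q2", "RBE.Q3", "ACR.Q3", "DTA.Q3",
    "RBE.Q1", "ASK.Q3", "DTA.Q1", "ACR.Q1",
}


def reverse_score(value: int) -> int:
    """Mirror a response around the scale midpoint (1 <-> 7, 2 <-> 6, ...)."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"response {value} outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - value


def recode(responses: dict[str, int]) -> dict[str, int]:
    """Return a copy of one respondent's answers with reversed items recoded."""
    return {
        item: reverse_score(v) if item in REVERSED_ITEMS else v
        for item, v in responses.items()
    }


# Hypothetical respondent, not data from the study.
print(recode({"DTA.Q1": 2, "DTA.Q2": 6, "DTA.Q3": 3}))
```

Here DTA.Q1 and DTA.Q3 are mirrored (2 becomes 6, 3 becomes 5) while DTA.Q2 is left unchanged.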

References

1. Zeng, J.; Li, Z. Trust towards autonomous driving, worthwhile travel time, and new mobility business opportunities. Appl. Econ. 2024, 1–15.
2. Vasile, L.; Seitz, B.; Staab, V.; Liebherr, M.; Däsch, C.; Schramm, D. Influences of personal driving styles and experienced system characteristics on driving style preferences in automated driving. Appl. Sci. 2023, 13, 8855.
3. Kim, H. Trustworthiness of unmanned automated subway services and its effects on passengers’ anxiety and fear. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 158–175.
4. Tilbury, J.; Flowerday, S. Humans and Automation: Augmenting Security Operation Centers. J. Cybersecur. Priv. 2024, 4, 388–409.
5. Ferraris, D.; Fernandez-Gago, C.; Lopez, J. A model-driven approach to ensure trust in the IoT. Hum.-Centric Comput. Inf. Sci. 2020, 10, 50.
6. Parasuraman, R.; Mouloua, M.; Molloy, R. Effects of adaptive task allocation on monitoring of automated systems. Hum. Factors 1996, 38, 665–679.
7. Sauer, J.; Chavaillaz, A. The use of adaptable automation: Effects of extended skill lay-off and changes in system reliability. Appl. Ergon. 2017, 58, 471–481.
8. Bowden, V.K.; Griffiths, N.; Strickland, L.; Loft, S. Detecting a single automation failure: The impact of expected (but not experienced) automation reliability. Hum. Factors 2023, 65, 533–545.
9. Feng, J.; Shang, J.; Ibrahim, A.N.H.; Borhan, M.N.B.; Tao, Y. Analysis of Safe Operation Behavior for Dispatching Fully Automated Operation Urban Rail Transit Lines Based on FTA. In Proceedings of the International Conference on Artificial Intelligence and Logistics Engineering, Guiyang, China, 16–17 April 2024; pp. 142–151.
10. Kippnich, M.; Kowalzik, B.; Cermak, R.; Kippnich, U.; Kranke, P.; Wurmb, T. Katastrophen- und Zivilschutz in Deutschland. AINS-Anästhesiologie Intensivmed. Notfallmedizin Schmerzther. 2017, 52, 606–617.
11. Ferraro, J.C.; Mouloua, M. Effects of automation reliability on error detection and attention to auditory stimuli in a multi-tasking environment. Appl. Ergon. 2021, 91, 103303.
12. Fahim, M.A.A.; Khan, M.M.H.; Jensen, T.; Albayram, Y. Human vs. Automation: Which One Will You Trust More If You Are About to Lose Money? Int. J. Hum.–Comput. Interact. 2022, 39, 2420–2435.
13. Dimitrova, E.; Tomov, S. Automatic Train Operation for Mainline. In Proceedings of the 2021 13th Electrical Engineering Faculty Conference (BulEF), Varna, Bulgaria, 8–11 September 2021; pp. 1–4.
14. Singh, P.; Dulebenets, M.A.; Pasha, J.; Gonzalez, E.D.S.; Lau, Y.-Y.; Kampmann, R. Deployment of autonomous trains in rail transportation: Current trends and existing challenges. IEEE Access 2021, 9, 91427–91461.
15. Ajenaghughrure, I.B.; Sousa, S.D.C.; Lamas, D. Measuring trust with psychophysiological signals: A systematic mapping study of approaches used. Multimodal Technol. Interact. 2020, 4, 63.
16. Calhoun, C.; Bobko, P.; Gallimore, J.; Lyons, J. Linking precursors of interpersonal trust to human-automation trust: An expanded typology and exploratory experiment. J. Trust Res. 2019, 9, 28–46.
17. Schaefer, K. The Perception and Measurement of Human-Robot Trust; University of Central Florida: Orlando, FL, USA, 2013.
18. Pushparaj, K.; Ky, G.; Ayeni, A.J.; Alam, S.; Duong, V.N. A quantum-inspired model for human-automation trust in air traffic controllers derived from functional Magnetic Resonance Imaging and correlated with behavioural indicators. J. Air Transp. Manag. 2021, 97, 102143.
19. Malekshahi Rad, M.; Rahmani, A.M.; Sahafi, A.; Nasih Qader, N. Social Internet of Things: Vision, challenges, and trends. Hum.-Centric Comput. Inf. Sci. 2020, 10, 52.
20. Rheu, M.; Shin, J.Y.; Peng, W.; Huh-Yoo, J. Systematic review: Trust-building factors and implications for conversational agent design. Int. J. Hum.–Comput. Interact. 2021, 37, 81–96.
21. Kohn, S.C.; De Visser, E.J.; Wiese, E.; Lee, Y.-C.; Shaw, T.H. Measurement of trust in automation: A narrative review and reference guide. Front. Psychol. 2021, 12, 604977.
22. Liehner, G.L.; Brauner, P.; Schaar, A.; Ziefle, M. Delegation of Moral Tasks to Automated Agents—The Impact of Risk and Context on Trusting a Machine to Perform a Task. IEEE Trans. Technol. Soc. 2022, 3, 46–57.
23. Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.
24. French, B.; Duenser, A.; Heathcote, A. Trust in Automation: A Literature Review; IEEE: New York, NY, USA, 2018.
25. Selkowitz, A.R.; Lakhmani, S.G.; Chen, J.Y.C. Using agent transparency to support situation awareness of the Autonomous Squad Member. Cogn. Syst. Res. 2017, 46, 13–25.
26. Wang, K.; Hou, W.; Hong, L.; Guo, J. Smart Transparency: A User-Centered Approach to Improving Human–Machine Interaction in High-Risk Supervisory Control Tasks. Electronics 2025, 14, 420.
27. Eloy, L.; Doherty, E.J.; Spencer, C.A.; Bobko, P.; Hirshfield, L. Using fNIRS to identify transparency- and reliability-sensitive markers of trust across multiple timescales in collaborative human-human-agent triads. Front. Neuroergonomics 2022, 3, 838625.
28. Li, J.; Liu, J.; Wang, X.; Liu, L. The Impact of Transparency on Driver Trust and Reliance in Highly Automated Driving: Presenting Appropriate Transparency in Automotive HMI. Appl. Sci. 2024, 14, 3203.
29. Bhaskara, A.; Duong, L.; Brooks, J.; Li, R.; McInerney, R.; Skinner, M.; Pongracic, H.; Loft, S. Effect of automation transparency in the management of multiple unmanned vehicles. Appl. Ergon. 2021, 90, 103243.
30. Motamedi, S.; Wang, P.; Zhang, T.; Chan, C.-Y. Acceptance of Full Driving Automation: Personally Owned and Shared-Use Concepts. Hum. Factors 2020, 62, 288–309.
31. Brauner, P.; Philipsen, R.; Valdez, A.C.; Ziefle, M. What happens when decision support systems fail?—The importance of usability on performance in erroneous systems. Behav. Inf. Technol. 2019, 38, 1225–1242.
32. Cheng, X.; Guo, F.; Chen, J.; Li, K.; Zhang, Y.; Gao, P. Exploring the Trust Influencing Mechanism of Robo-Advisor Service: A Mixed Method Approach. Sustainability 2019, 11, 4917.
33. Parasuraman, R.; Riley, V. Humans and Automation: Use, misuse, disuse, abuse. Hum. Factors 1997, 39, 230–253.
34. Khastgir, S.; Birrell, S.; Dhadyalla, G.; Jennings, P. Calibrating trust through knowledge: Introducing the concept of informed safety for automation in vehicles. Transp. Res. Part C Emerg. Technol. 2018, 96, 290–303.
35. Brdnik, S.; Podgorelec, V.; Šumak, B. Assessing perceived trust and satisfaction with multiple explanation techniques in XAI-enhanced learning analytics. Electronics 2023, 12, 2594.
36. Zhang, X.; Song, Z.; Huang, Q.; Pan, Z.; Li, W.; Gong, R.; Zhao, B. Shared eHMI: Bridging Human–Machine Understanding in Autonomous Wheelchair Navigation. Appl. Sci. 2024, 14, 463.
37. Niu, J.W.; Geng, H.; Zhang, Y.J.; Du, X.P. Relationship between automation trust and operator performance for the novice and expert in spacecraft rendezvous and docking (RVD). Appl. Ergon. 2018, 71, 1–8.
38. Chen, L.; Zhang, L.; Zheng, W. Research on Quantitative Evaluation of Emergency Handling Workload of Railway Dispatcher. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; pp. 61–66.
39. Balfe, N.; Sharples, S.; Wilson, J.R. Understanding Is Key: An Analysis of Factors Pertaining to Trust in a Real-World Automation System. Hum. Factors 2018, 60, 477–495.
40. Li, Z.; Li, X.; Jiang, B. How people perceive the safety of self-driving buses: A quantitative analysis model of perceived safety. Transp. Res. Rec. 2023, 2677, 1356–1366.
41. Knocton, S.; Hunter, A.; Connors, W.; Dithurbide, L.; Neyedli, H.F. The Effect of Informing Participants of the Response Bias of an Automated Target Recognition System on Trust and Reliance Behavior. Hum. Factors 2021, 65, 189–199.
42. Long, S.K.; Lee, J.; Yamani, Y.; Unverricht, J.; Itoh, M. Does automation trust evolve from a leap of faith? An analysis using a reprogrammed pasteurizer simulation task. Appl. Ergon. 2022, 100, 103674.
43. Hutchinson, J.; Strickland, L.; Farrell, S.; Loft, S. The Perception of Automation Reliability and Acceptance of Automated Advice. Hum. Factors 2022, 65, 1596–1612.
44. Stowers, K.; Oglesby, J.; Sonesh, S.; Leyva, K.; Iwig, C.; Salas, E. A framework to guide the assessment of human–machine systems. Hum. Factors 2017, 59, 172–188.
45. Klingbeil, A.; Grützner, C.; Schreck, P. Trust and reliance on AI—An experimental study on the extent and costs of overreliance on AI. Comput. Hum. Behav. 2024, 160, 108352.
46. Sanchez, J.; Rogers, W.A.; Fisk, A.D.; Rovira, E. Understanding reliance on automation: Effects of error type, error distribution, age and experience. Theor. Issues Ergon. Sci. 2014, 15, 134–160.
47. Chen, Y.; Zahedi, F.M.; Abbasi, A.; Dobolyi, D. Trust calibration of automated security IT artifacts: A multi-domain study of phishing-website detection tools. Inf. Manag. 2021, 58, 103394.
48. Niu, K.; Liu, W.; Zhang, J.; Liang, M.; Li, H.; Zhang, Y.; Du, Y. A Task Complexity Analysis Method to Study the Emergency Situation under Automated Metro System. Int. J. Environ. Res. Public Health 2023, 20, 2314.
49. Jin, M.; Lu, G.; Chen, F.; Shi, X.; Tan, H.; Zhai, J. Modeling takeover behavior in level 3 automated driving via a structural equation model: Considering the mediating role of trust. Accid. Anal. Prev. 2021, 157, 106156.
50. Fenn, Z. Lassoing the Loop: An Examination of Factors Influencing Trust in Automation. Master’s Thesis, University of Basel, Basel, Switzerland, 2020.
51. Gulati, S.; Sousa, S.; Lamas, D. Design, development and evaluation of a human-computer trust scale. Behav. Inf. Technol. 2019, 38, 1004–1015.
52. Chancey, E.T. The Effects of Alarm System Errors on Dependence: Moderated Mediation of Trust with and Without Risk. Ph.D. Thesis, Old Dominion University, Norfolk, VA, USA, 2016.
53. Mayer, R.C.; Davis, J.H. The effect of the performance appraisal system on trust for management: A field quasi-experiment. J. Appl. Psychol. 1999, 84, 123–136.
54. Madsen, M.; Gregor, S. Measuring human-computer trust. In Proceedings of the 11th Australasian Conference on Information Systems, Brisbane, Australia, 6–8 December 2000; pp. 6–8.
55. Singh, I.L.; Molloy, R.; Parasuraman, R. Automation-induced “complacency”: Development of the complacency-potential rating scale. Int. J. Aviat. Psychol. 1993, 3, 111–122.
56. Merritt, S.M.; Ako-Brew, A.; Bryant, W.J.; Staley, A.; McKenna, M.; Leone, A.; Shirase, L. Automation-Induced Complacency Potential: Development and Validation of a New Scale. Front. Psychol. 2019, 10, 225–237.
57. Guo, Z.; Zou, J.; He, C.; Tan, X.; Chen, C.; Feng, G. The Importance of Cognitive and Mental Factors on Prediction of Job Performance in Chinese High-Speed Railway Dispatchers. J. Adv. Transp. 2020, 2020, 7153972.
58. Liu, P.; Li, Z. Task complexity: A review and conceptualization framework. Int. J. Ind. Ergon. 2012, 42, 553–568.
59. Comrey, A.L.; Lee, H.B. A First Course in Factor Analysis; Psychology Press: Hove, UK, 2013.
60. Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis; Andover: Hampshire, UK, 2019.
61. Muthumani, A.; Diederichs, F.; Galle, M.; Schmid-Lorch, S.; Forsberg, C.; Widlroither, H.; Feierle, A.; Bengler, K. How visual cues on steering wheel improve users’ trust, experience, and acceptance in automated vehicles. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Orlando, FL, USA, 16–20 July 2020; pp. 186–192.
62. Lopez, J.; Watkins, H.; Pak, R. Enhancing Component-Specific Trust with Consumer Automated Systems through Humanness Design. Ergonomics 2022, 66, 291–302.
63. Lee, Y.; Ha, M.; Kwon, S.; Shim, Y.; Kim, J. Egoistic and altruistic motivation: How to induce users’ willingness to help for imperfect AI. Comput. Hum. Behav. 2019, 101, 180–196.
64. Lee, J.; Abe, G.; Sato, K.; Itoh, M. Developing human-machine trust: Impacts of prior instruction and automation failure on driver trust in partially automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2021, 81, 384–395.
65. Neuhuber, N.; Lechner, G.; Kalayci, T.E.; Stocker, A.; Kubicek, B. Age-related differences in the interaction with advanced driver assistance systems: A field study. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 19–24 July 2020; pp. 363–378.
66. Miller, D.; Sun, A.; Ju, W. Situation Awareness with Different Levels of Automation. In Proceedings of the 2014 IEEE International Conference on Systems, Man and Cybernetics, San Diego, CA, USA, 5–8 October 2014; IEEE: New York, NY, USA, 2014; pp. 688–693.
67. Karpinsky, N.D.; Chancey, E.T.; Palmer, D.B.; Yamani, Y. Automation trust and attention allocation in multitasking workspace. Appl. Ergon. 2018, 70, 194–201.
68. Pipkorn, L.; Victor, T.W.; Dozza, M.; Tivesten, E. Driver conflict response during supervised automation: Do hands on wheel matter? Transp. Res. Part F Traffic Psychol. Behav. 2021, 76, 14–25.
69. Nordhoff, S.; Stapel, J.; He, X.; Gentner, A.; Happee, R. Perceived safety and trust in SAE Level 2 partially automated cars: Results from an online questionnaire. PLoS ONE 2021, 16, e0260953.
70. Sato, T. Exploring the Effects of Task Priority on Attention Allocation and Trust Towards Imperfect Automation: A Flight Simulator Study. Master’s Thesis, Old Dominion University, Norfolk, VA, USA, 2020.

| Construct | Factor Code | Conceptual Definitions and Sources |
|---|---|---|
| Dispatcher’s Trust in the ATS System | DTA | How much the dispatcher subjectively believes in the ATS system [39,51]. |
| ATS’s Capability Redundancy | ACR | Redundancy in the ATS system’s capability to ensure safety that is perceptible to dispatchers [31,52,53]. |
| Dispatcher’s ATS System Knowledge | ASK | The dispatcher’s familiarity with the ATS system, associated hardware- and software-related information, and operational dispatching rules and regulations [3,54]. |
| Transparency of the ATS Interface | TAI | The degree to which the user interface allows the dispatcher to review past history records, understand the current system status, and assist in predicting future trends [30,31,32]. |
| Reliance Behavior on ATS under Routine Monitoring | RBR | The extent to which the dispatcher relies on and benefits from the ATS system during routine monitoring conditions [55,56]. |
| Reliance Behavior on ATS under Emergency Response | RBE | The extent to which the dispatcher relies on and benefits from the ATS system during emergency response conditions [55,56]. |

| Hypothesis Code | H1 | H2a | H2b | H3 | H4a | H4b | H4c | H5a | H5b |
|---|---|---|---|---|---|---|---|---|---|
| Predictor Code | TAI | ASK | ASK | ACR | DTA | DTA | RBR | ACR | ACR |
| Dependent Code | DTA | DTA | TAI | DTA | RBR | RBE | RBE | RBR | RBE |
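
Read column by column, the hypothesis table fixes the structural part of the model: each dependent construct is regressed on every predictor hypothesized for it. A minimal Python sketch, assuming lavaan-style regression syntax (`DV ~ IV1 + IV2`, as accepted by lavaan in R and semopy in Python), that groups the nine paths into structural equations; the software used in the original analysis is not stated in this section, and `structural_syntax` is a hypothetical helper.

```python
# Hypothesized paths from the table above: code -> (predictor, dependent).
HYPOTHESES = {
    "H1": ("TAI", "DTA"),
    "H2a": ("ASK", "DTA"),
    "H2b": ("ASK", "TAI"),
    "H3": ("ACR", "DTA"),
    "H4a": ("DTA", "RBR"),
    "H4b": ("DTA", "RBE"),
    "H4c": ("RBR", "RBE"),
    "H5a": ("ACR", "RBR"),
    "H5b": ("ACR", "RBE"),
}


def structural_syntax(paths: dict[str, tuple[str, str]]) -> str:
    """Group paths by dependent construct and emit one 'DV ~ IV1 + IV2' line each."""
    predictors: dict[str, list[str]] = {}
    for predictor, dependent in paths.values():
        # Dependents keep the order in which they first appear in the table.
        predictors.setdefault(dependent, []).append(predictor)
    return "\n".join(f"{dv} ~ {' + '.join(ivs)}" for dv, ivs in predictors.items())


print(structural_syntax(HYPOTHESES))
```

This prints `DTA ~ TAI + ASK + ACR`, `TAI ~ ASK`, `RBR ~ DTA + ACR` and `RBE ~ DTA + RBR + ACR`. Removing the `H2a` and `H4b` entries before the call leaves the seven paths that appear in the revised model.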

| Age (years) | n (%) | Years of Service | n (%) | Years of Experience in Dispatching | n (%) | Educational Background | n (%) |
|---|---|---|---|---|---|---|---|
| 24–33 | 99 (46.3%) | 1–10 | 84 (39.3%) | 1–9 | 150 (70.1%) | Bachelor’s Degree | 189 (88.3%) |
| 34–43 | 102 (47.7%) | 11–20 | 100 (46.7%) | 10–19 | 57 (26.6%) | Associate Degree | 17 (7.9%) |
| 44–53 | 11 (5.1%) | 21–30 | 27 (12.6%) | 20–29 | 4 (1.9%) | Graduate Degree | 8 (3.7%) |
| 54–63 | 2 (0.9%) | 31–40 | 3 (1.4%) | 30–39 | 3 (1.4%) | | |
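
Each column of the demographic table sums to 214 respondents. The short Python sketch below recomputes the reported percentages from the counts as a consistency check; the dictionary layout and the `shares` helper are illustrative, not part of the original analysis.

```python
# Category counts transcribed from the demographic table.
SAMPLE = {
    "Age": {"24-33": 99, "34-43": 102, "44-53": 11, "54-63": 2},
    "Years of service": {"1-10": 84, "11-20": 100, "21-30": 27, "31-40": 3},
    "Years of dispatching experience": {"1-9": 150, "10-19": 57, "20-29": 4, "30-39": 3},
    "Educational background": {"Bachelor": 189, "Associate": 17, "Graduate": 8},
}


def shares(counts: dict[str, int]) -> tuple[int, dict[str, float]]:
    """Return the column total and each category's share in percent (1 decimal)."""
    total = sum(counts.values())
    return total, {k: round(100 * v / total, 1) for k, v in counts.items()}


for variable, counts in SAMPLE.items():
    total, pct = shares(counts)
    print(f"{variable} (n = {total}): {pct}")
```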

| Item | Mean | SD | Factor Loading |
|---|---|---|---|
| ACR Q1 (excluded due to low factor loading) | 3.701 | 1.758 | 0.353 |
| ACR Q2 | 4.206 | 1.530 | 0.870 |
| ACR Q3 | 3.775 | 1.890 | 0.853 |
| ASK Q1 | 4.713 | 1.334 | 0.769 |
| ASK Q2 | 5.112 | 1.534 | 0.863 |
| ASK Q3 | 5.037 | 1.378 | 0.890 |
| TAI Q1 | 3.938 | 1.482 | 0.785 |
| TAI Q2 | 4.169 | 1.727 | 0.932 |
| TAI Q3 | 3.681 | 1.531 | 0.851 |
| DTA Q1 | 3.619 | 1.693 | 0.844 |
| DTA Q2 | 3.737 | 1.523 | 0.897 |
| DTA Q3 | 3.337 | 1.533 | 0.820 |
| RBR Q1 | 4.875 | 1.444 | 0.868 |
| RBR Q2 | 5.088 | 1.490 | 0.946 |
| RBR Q3 | 4.938 | 1.512 | 0.842 |
| RBE Q1 | 5.675 | 1.315 | 0.932 |
| RBE Q2 | 5.094 | 1.268 | 0.805 |
| RBE Q3 | 5.506 | 1.274 | 0.834 |

| Factor | F1 | F2 | F3 | F4 | F5 | F6 |
|---|---|---|---|---|---|---|
| Explained Variance (%) | 14.14 | 13.62 | 13.32 | 13.26 | 13.17 | 9.42 |
| Cumulative Explained Variance (%) | 14.14 | 27.76 | 41.08 | 54.34 | 67.51 | 76.93 |
| Cronbach’s α | 0.918 | 0.910 | 0.893 | 0.898 | 0.891 | 0.860 |
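
The cumulative explained variance is the running sum of the per-factor values, and Cronbach’s α for a factor with k items is α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total the variance of the summed scale score. A minimal Python sketch of both, plus a loading screen of the kind that excluded ACR Q1; the 0.5 cutoff is an assumed rule of thumb, since the threshold actually applied is not given here, and the helper names are hypothetical.

```python
from itertools import accumulate
from statistics import variance

# Per-factor explained variance (%) from the summary table above.
EXPLAINED = [14.14, 13.62, 13.32, 13.26, 13.17, 9.42]
cumulative = [round(c, 2) for c in accumulate(EXPLAINED)]
print(cumulative)  # [14.14, 27.76, 41.08, 54.34, 67.51, 76.93]

# ASSUMPTION: 0.5 cutoff for retaining an item; the study's threshold is not stated.
LOADING_CUTOFF = 0.5
ACR_LOADINGS = {"ACR Q1": 0.353, "ACR Q2": 0.870, "ACR Q3": 0.853}
dropped = [item for item, lam in ACR_LOADINGS.items() if lam < LOADING_CUTOFF]
print(dropped)  # ['ACR Q1']


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[j] holds every respondent's score on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # scale score per respondent
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))
```

Applied to raw item scores (not reproduced in this article), `cronbach_alpha` yields values such as those in the last row; items that move in perfect lockstep give α = 1.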

| Latent | Observed | Estimate | SE | 95% CI Lower | 95% CI Upper | β | z | p | AVE |
|---|---|---|---|---|---|---|---|---|---|
| ACR | ACR.Q2 | 1 | 0 | 1 | 1 | 0.950 | | | 0.753 |
| | ACR.Q3 | 1.052 | 0.122 | 0.813 | 1.292 | 0.809 | 8.612 | <0.001 | |
| ASK | ASK.Q1 | 1 | 0 | 1 | 1 | 0.798 | | | 0.735 |
| | ASK.Q2 | 1.209 | 0.102 | 1.009 | 1.408 | 0.838 | 11.891 | <0.001 | |
| | ASK.Q3 | 1.207 | 0.093 | 1.025 | 1.389 | 0.932 | 13.015 | <0.001 | |
| TAI | TAI.Q1 | 1 | 0 | 1 | 1 | 0.869 | | | 0.779 |
| | TAI.Q2 | 1.204 | 0.081 | 1.046 | 1.362 | 0.898 | 14.938 | <0.001 | |
| | TAI.Q3 | 1.043 | 0.072 | 0.902 | 1.184 | 0.877 | 14.482 | <0.001 | |
| DTA | DTA.Q1 | 1 | 0 | 1 | 1 | 0.858 | | | 0.732 |
| | DTA.Q2 | 0.927 | 0.070 | 0.789 | 1.064 | 0.884 | 13.229 | <0.001 | |
| | DTA.Q3 | 0.870 | 0.071 | 0.732 | 1.009 | 0.824 | 12.336 | <0.001 | |
| RBR | RBR.Q1 | 1 | 0 | 1 | 1 | 0.859 | | | 0.79 |
| | RBR.Q2 | 1.125 | 0.071 | 0.985 | 1.265 | 0.938 | 15.769 | <0.001 | |
| | RBR.Q3 | 1.056 | 0.074 | 0.911 | 1.200 | 0.866 | 14.339 | <0.001 | |
| RBE | RBE.Q1 | 1 | 0 | 1 | 1 | 0.913 | | | 0.751 |
| | RBE.Q2 | 0.868 | 0.065 | 0.740 | 0.996 | 0.822 | 13.272 | <0.001 | |
| | RBE.Q3 | 0.911 | 0.065 | 0.784 | 1.037 | 0.859 | 14.107 | <0.001 | |
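
Average variance extracted (AVE) is conventionally the mean of the squared standardized loadings of a construct’s indicators, and values above 0.5 are commonly read as evidence of convergent validity. The Python sketch below applies that formula to the β column above. With these rounded loadings it gives 0.732 for DTA and 0.789 for RBR, in line with the table, whereas ACR comes out near 0.778 against the tabulated 0.753; such gaps may reflect rounding or a different computation in the original analysis.

```python
# Standardized loadings (beta column) transcribed from the CFA table above.
STD_LOADINGS = {
    "ACR": [0.950, 0.809],
    "ASK": [0.798, 0.838, 0.932],
    "TAI": [0.869, 0.898, 0.877],
    "DTA": [0.858, 0.884, 0.824],
    "RBR": [0.859, 0.938, 0.866],
    "RBE": [0.913, 0.822, 0.859],
}


def ave(loadings: list[float]) -> float:
    """Average variance extracted: the mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


for construct, loadings in STD_LOADINGS.items():
    value = ave(loadings)
    status = "above" if value > 0.5 else "below"
    print(f"{construct}: AVE = {value:.3f} ({status} the 0.5 convention)")
```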

| Dep | Pred | Estimate | SE | 95% CI Lower | 95% CI Upper | β | z | p | Hypothesis Code | Validation Status |
|---|---|---|---|---|---|---|---|---|---|---|
| DTA | TAI | 0.204 | 0.115 | −0.021 | 0.429 | 0.182 | 1.778 | 0.075 | H1 | |
| DTA | ASK | 0.122 | 0.155 | −0.181 | 0.425 | 0.090 | 0.788 | 0.431 | H2a | Rejected |
| TAI | ASK | 0.662 | 0.102 | 0.461 | 0.862 | 0.548 | 6.463 | <0.001 | H2b | |
| DTA | ACR | 0.206 | 0.098 | 0.015 | 0.398 | 0.208 | 2.113 | 0.035 | H3 | |
| RBR | DTA | 0.172 | 0.076 | 0.024 | 0.321 | 0.201 | 2.269 | 0.023 | H4a | |
| RBE | DTA | −0.040 | 0.074 | −0.185 | 0.104 | −0.049 | −0.549 | 0.583 | H4b | Rejected |
| RBE | RBR | 0.277 | 0.084 | 0.112 | 0.442 | 0.286 | 3.290 | 0.001 | H4c | |
| RBR | ACR | 0.168 | 0.076 | 0.020 | 0.316 | 0.197 | 2.228 | 0.026 | H5a | |
| RBE | ACR | 0.187 | 0.074 | 0.042 | 0.332 | 0.227 | 2.522 | 0.012 | H5b | |
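
Under the normal approximation behind these columns, z = Estimate / SE, the two-sided p-value is 2(1 − Φ(|z|)), and the 95% interval is Estimate ± 1.96 × SE. The Python sketch below recomputes these quantities from the rounded initial-model values (small differences from the printed z and p come from that rounding) and flags paths whose interval contains zero. Besides the rejected H2a and H4b, it flags H1 (p ≈ 0.075), which reaches p = 0.010 in the revised model. The `wald` helper is hypothetical.

```python
from math import erfc, sqrt

Z_975 = 1.959964  # two-sided 95% critical value of the standard normal


def wald(estimate: float, se: float) -> tuple[float, float, float, float]:
    """Return z, the two-sided p-value, and the 95% CI bounds for one path."""
    z = estimate / se
    p = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p, estimate - Z_975 * se, estimate + Z_975 * se


# Initial-model paths: hypothesis code -> (unstandardized estimate, SE).
INITIAL = {
    "H1": (0.204, 0.115),
    "H2a": (0.122, 0.155),
    "H2b": (0.662, 0.102),
    "H3": (0.206, 0.098),
    "H4a": (0.172, 0.076),
    "H4b": (-0.040, 0.074),
    "H4c": (0.277, 0.084),
    "H5a": (0.168, 0.076),
    "H5b": (0.187, 0.074),
}

for code, (estimate, se) in INITIAL.items():
    z, p, lower, upper = wald(estimate, se)
    flag = "CI includes 0" if lower <= 0 <= upper else ""
    print(f"{code}: z = {z:6.2f}, p = {p:.3f}, 95% CI [{lower:.3f}, {upper:.3f}] {flag}")
```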

| Dep | Pred | Estimate | SE | 95% CI Lower | 95% CI Upper | β | z | p | Hypothesis Code | Validation Status |
|---|---|---|---|---|---|---|---|---|---|---|
| DTA | TAI | 0.251 | 0.097 | 0.060 | 0.442 | 0.225 | 2.575 | 0.010 | H1 | Supported |
| TAI | ASK | 0.665 | 0.103 | 0.464 | 0.866 | 0.549 | 6.482 | <0.001 | H2b | Supported |
| DTA | ACR | 0.224 | 0.088 | 0.053 | 0.396 | 0.227 | 2.560 | 0.010 | H3 | Supported |
| RBR | DTA | 0.170 | 0.076 | 0.021 | 0.319 | 0.197 | 2.238 | 0.025 | H4a | Supported |
| RBE | RBR | 0.267 | 0.082 | 0.106 | 0.429 | 0.276 | 3.245 | 0.001 | H4c | Supported |
| RBR | ACR | 0.169 | 0.075 | 0.022 | 0.317 | 0.199 | 2.253 | 0.024 | H5a | Supported |
| RBE | ACR | 0.174 | 0.071 | 0.035 | 0.314 | 0.211 | 2.451 | 0.014 | H5b | Supported |

| Task Complexity Factor | N | Emergency Mean | Emergency Std. Error Mean | Emergency SD | Normal Mean | Normal Std. Error Mean | Normal SD | Repeated Measures ANOVA (Non-Parametric) Statistic | p | Mean Difference (Emergency − Normal) |
|---|---|---|---|---|---|---|---|---|---|---|
| TC-1-Size | 160 | 5.319 | 0.112 | 1.416 | 4.856 | 0.127 | 1.609 | 11.636 | <0.001 | 0.463 |
| TC-2-Variety | 160 | 5.650 | 0.099 | 1.255 | 5.069 | 0.114 | 1.446 | 26.042 | <0.001 | 0.581 |
| TC-3-Ambiguity | 160 | 2.594 | 0.118 | 1.493 | 2.650 | 0.118 | 1.489 | 0.821 | 0.365 | −0.056 |
| TC-4-Relationship | 160 | 5.369 | 0.104 | 1.316 | 4.669 | 0.131 | 1.651 | 15.042 | <0.001 | 0.700 |
| TC-5-Variability | 160 | 5.963 | 0.076 | 0.964 | 4.200 | 0.148 | 1.866 | 70.738 | <0.001 | 1.763 |
| TC-6-Unreliability | 160 | 3.931 | 0.138 | 1.742 | 3.288 | 0.137 | 1.732 | 15.696 | <0.001 | 0.644 |
| TC-7-Novelty | 160 | 3.856 | 0.131 | 1.663 | 3.331 | 0.134 | 1.692 | 19.463 | <0.001 | 0.525 |
| TC-8-Incongruity | 160 | 4.819 | 0.119 | 1.500 | 4.231 | 0.139 | 1.753 | 7.353 | 0.007 | 0.588 |
| TC-9-Action Complexity | 160 | 4.894 | 0.111 | 1.399 | 3.881 | 0.139 | 1.757 | 37.586 | <0.001 | 1.013 |
| TC-10-Temporal Demand | 160 | 5.756 | 0.098 | 1.238 | 4.094 | 0.145 | 1.832 | 67.930 | <0.001 | 1.663 |
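
The p-values in this table are consistent with a χ² statistic on one degree of freedom (for example, 0.821 gives p ≈ 0.365 and 7.353 gives p ≈ 0.007), which is what a Friedman test yields for two repeated conditions. Assuming the non-parametric repeated-measures ANOVA is a Friedman test with the standard tie correction, a Python sketch follows; SciPy’s `friedmanchisquare` requires at least three conditions, so the statistic is computed directly. The function names and the five-respondent example are hypothetical.

```python
from collections import Counter
from math import erfc, sqrt


def friedman_chi2(blocks: list[list[float]]) -> float:
    """Friedman chi-square statistic with the usual correction for ties.

    blocks[i] holds respondent i's ratings under each of the k conditions.
    """
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in blocks:
        ordered = sorted(row)
        for j, value in enumerate(row):
            # Tied ratings share the mean of the ranks they occupy.
            lowest_rank = ordered.index(value) + 1
            rank_sums[j] += lowest_rank + (ordered.count(value) - 1) / 2
        # Sum of (t^3 - t) over groups of tied ratings within this respondent.
        tie_term += sum(t ** 3 - t for t in Counter(row).values())
    expected = n * (k + 1) / 2
    numerator = 12 * sum((r - expected) ** 2 for r in rank_sums)
    denominator = n * k * (k + 1) - tie_term / (k - 1)
    if denominator == 0:
        raise ValueError("every respondent gave identical ratings across conditions")
    return numerator / denominator


def chi2_sf_df1(stat: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return erfc(sqrt(stat / 2))


# Hypothetical ratings for five respondents (emergency, normal).
emergency = [5, 6, 7, 4, 3]
normal = [4, 4, 7, 5, 1]
stat = friedman_chi2([list(pair) for pair in zip(emergency, normal)])
print(stat, round(chi2_sf_df1(stat), 3))
```

For two conditions the statistic reduces to (a − b)² / (a + b), where a and b count respondents who rated the emergency condition higher and lower, respectively, and tied respondents drop out. In the toy example a = 3 and b = 1, giving 1.0.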

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, J.; Fang, W.; Bao, H. Trust in the Automatic Train Supervision System and Its Effects on Subway Dispatchers’ Operational Behavior. Appl. Sci. 2025, 15, 3839. https://doi.org/10.3390/app15073839

Wang J, Fang W, Bao H. Trust in the Automatic Train Supervision System and Its Effects on Subway Dispatchers’ Operational Behavior. Applied Sciences. 2025; 15(7):3839. https://doi.org/10.3390/app15073839

Chicago/Turabian Style: Wang, Jianxin, Weining Fang, and Haifeng Bao. 2025. "Trust in the Automatic Train Supervision System and Its Effects on Subway Dispatchers’ Operational Behavior" Applied Sciences 15, no. 7: 3839. https://doi.org/10.3390/app15073839

APA Style: Wang, J., Fang, W., & Bao, H. (2025). Trust in the Automatic Train Supervision System and Its Effects on Subway Dispatchers’ Operational Behavior. Applied Sciences, 15(7), 3839. https://doi.org/10.3390/app15073839