Innovative Approaches in Sensory Food Science: From Digital Tools to Virtual Reality

Abstract

1. Introduction

2. Integrating Virtual Reality and Augmented Reality into Sensory Food Research

- Educational and Scientific Value: VR enhances learning by creating engaging, interactive experiences.

- Cost Reduction: Virtual environments minimize the need for physical laboratory setups, reducing research costs.

- Expanded Research Opportunities: Researchers can conduct sensory studies in controlled virtual settings, improving data collection and analysis [28].

3. The Role of Artificial Intelligence in Sensory Analysis and Food Innovation

4. Electronic Nose, Electronic Tongue, and Electronic Eye in Sensory Food Sciences

4.1. Electronic Nose

4.2. Electronic Tongue

4.3. Electronic Eye

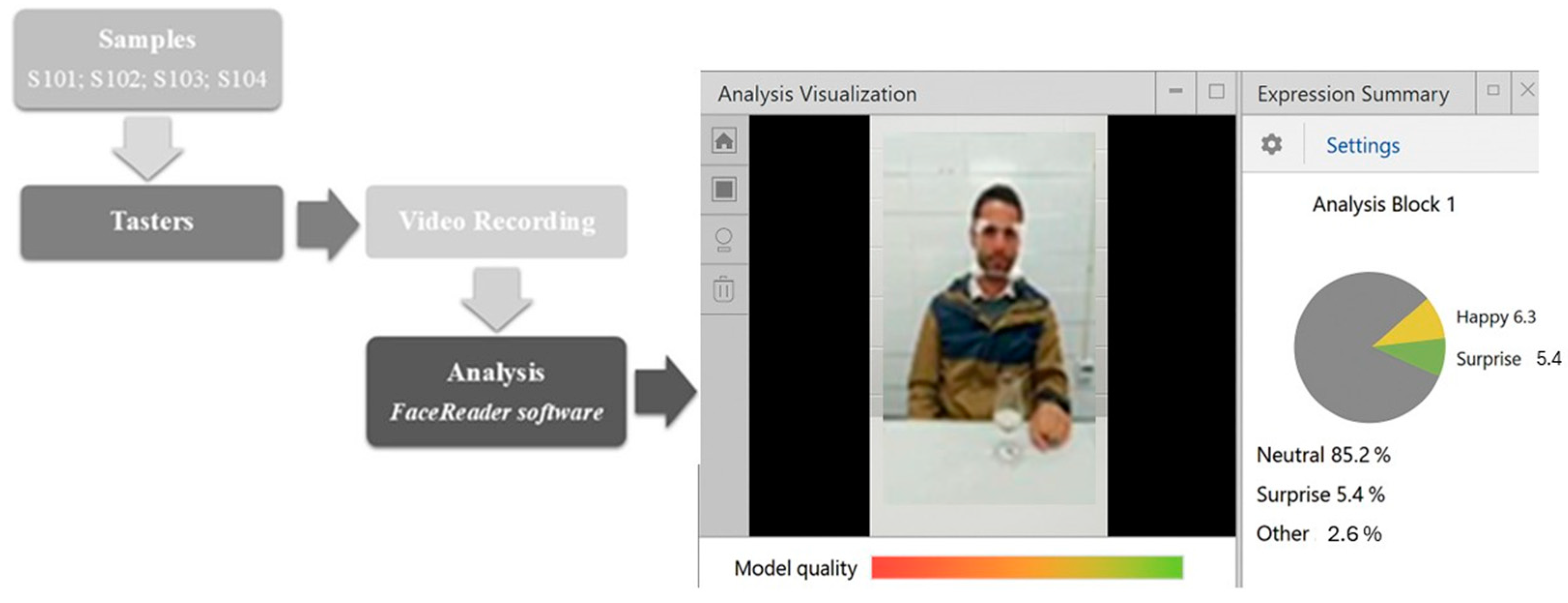

5. The Use of Eye Tracking and FaceReader for Food Products

6. Holistic Research Based on Sensory and Consumer Studies

6.1. Holistic Approaches and Food Marketing

6.2. Holistic Frameworks and Methodologies for Wine and Food Sensory Evaluation

7. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Dumitru, L.M.; Irimia-Vladu, M.; Sariciftci, N.S. Biocompatible Integration of Electronics into Food Sensors. In Comprehensive Analytical Chemistry; Elsevier: Amsterdam, The Netherlands, 2016; Volume 74, pp. 247–271. [Google Scholar] [CrossRef]

- Méndez Pérez, R.; Cheein, F.A.; Rosell-Polo, J.R. Flexible system of multiple RGB-D sensors for measuring and classifying fruits in agri-food Industry. Comput. Electron. Agric. 2017, 139, 231–242. [Google Scholar] [CrossRef]

- Fuentes, S.; Viejo, C.; Torrico, D.; Dunshea, F. Digital Integration and Automated Assessment of Eye-Tracking and Emotional Response Data Using the BioSensory App to Maximize Packaging Label Analysis. Sensors 2021, 21, 7641. [Google Scholar] [CrossRef] [PubMed]

- Lichters, M.; Möslein, R.; Sarstedt, M.; Scharf, A. Segmenting consumers based on sensory acceptance tests in sensory labs, immersive environments, and natural consumption settings. Food Qual. Prefer. 2021, 89, 104138. [Google Scholar] [CrossRef]

- Torrico, D.D.; Mehta, A.; Borssato, A.B. New methods to assess sensory responses: A brief review of innovative techniques in sensory evaluation. Curr. Opin. Food Sci. 2023, 49, 100978. [Google Scholar] [CrossRef]

- Wang, J.; Wang, J.; Qiao, L.; Zhang, N.; Sun, B.; Li, H.; Sun, J.; Chen, H. From Traditional to Intelligent, A Review of Application and Progress of Sensory Analysis in Alcoholic Beverage Industry. Food Chem. X 2024, 23, 101542. [Google Scholar] [CrossRef]

- Gere, A.; Zulkarnain, A.H.; Szakál, D.; Fehér, O.; Kókai, Z. Virtual reality applications in food science. Current knowledge and prospects. Prog. Agric. Eng. Sci. 2021, 17, 3–14. [Google Scholar] [CrossRef]

- Ares, G. Special issue on “Virtual reality and food: Applications in sensory and consumer science”. Food Res. Int. 2019, 117, 1. [Google Scholar] [CrossRef]

- Xu, C.; Demir-Kaymaz, Y.; Hartmann, C.; Menozzi, M.; Siegrist, M. The comparability of consumers’ behavior in virtual reality and real life: A validation study of virtual reality based on a ranking task. Food Qual. Prefer. 2021, 87, 104071. [Google Scholar] [CrossRef]

- Gonzalez Viejo, C.; Fuentes, S.; Torrico, D.D.; Dunshea, F.R. Non-Contact Heart Rate and Blood Pressure Estimations from Video Analysis and Machine Learning Modelling Applied to Food Sensory Responses: A Case Study for Chocolate. Sensors 2018, 18, 1802. [Google Scholar] [CrossRef]

- Torrico, D.D.; Fuentes, S.; Viejo, C.G.; Ashman, H.; Gunaratne, N.M.; Gunaratne, T.M.; Dunshea, F.R. Images and chocolate stimuli affect physiological and affective responses of consumers: A cross-cultural study. Food Qual. Prefer. 2018, 65, 60–71. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Zulkarnain, A.H.B.; Gere, A. Virtual reality sensory analysis approaches for sustainable food production. Appl. Food Res. 2025, 5, 100780. [Google Scholar] [CrossRef]

- Stelick, A.; Penano, A.G.; Riak, A.C.; Dando, R. Dynamic context sensory testing–A proof of concept study bringing virtual reality to the sensory booth. J. Food Sci. 2018, 83, 2047–2051. [Google Scholar] [CrossRef]

- Low, J.Y.Q.; Lin, V.H.F.; Jun Yeon, L.; Hort, J. Considering the application of a mixed reality context and consumer segmentation when evaluating emotional response to tea break snacks. Food Qual. Prefer. 2021, 88, 104113. [Google Scholar] [CrossRef]

- Kong, Y.; Sharma, C.; Kanala, M.; Thakur, M.; Li, L.; Xu, D.; Harrison, R.; Torrico, D. Virtual reality and immersive environments on sensory perception of chocolate products: A preliminary study. Foods 2020, 9, 515. [Google Scholar] [CrossRef] [PubMed]

- Torrico, D.D.; Han, Y.; Sharma, C.; Fuentes, S.; Gonzalez Viejo, C.; Dunshea, F.R. Effects of context and virtual reality environments on the wine tasting experience, acceptability, and emotional responses of consumers. Foods 2020, 9, 191. [Google Scholar] [CrossRef]

- Huang, F.; Huang, J.; Wan, X. Influence of virtual color on taste: Multisensory integration between virtual and real worlds. Comput. Hum. Behav. 2019, 95, 168–174. [Google Scholar] [CrossRef]

- Ammann, J.; Stucki, M.; Siegrist, M. True colours: Advantages and challenges of virtual reality in a sensory science experiment on the influence of colour on flavour identification. Food Qual. Prefer. 2020, 86, 103998. [Google Scholar] [CrossRef]

- Liu, R.; Hannum, M.; Simons, C.T. Using immersive technologies to explore the effects of congruent and incongruent contextual cues on context recall, product evaluation time, and preference and liking during consumer hedonic testing. Food Res. Int. 2019, 117, 19–29. [Google Scholar] [CrossRef]

- de Wijk, D.; Polet, C.; Holthuysen, E.; van der Laan, J. Virtual Reality: Applications in Sensory and Consumer Science. Foods 2021, 10, 1154. [Google Scholar] [CrossRef]

- Chylinski, M.; Heller, J.; Hilken, T.; Keeling, D.I.; Mahr, D.; de Ruyter, K. Augmented reality marketing: A technology-enabled approach to situated customer experience. Australas. Mark. J. 2020, 28, 374–384. [Google Scholar] [CrossRef]

- Crofton, E.; Botinestean, C. Using virtual reality as a context-enhancing technology in sensory science. Digit. Sens. Sci. 2023, 213–228. [Google Scholar] [CrossRef]

- Crofton, E.C.; Botinestean, C.; Fenelon, M.; Gallagher, E. Potential Applications for Virtual and Augmented Reality technologies in Sensory Science. Innov. Food Sci. Emerg. Technol. 2019, 56, 102178. [Google Scholar] [CrossRef]

- Taufik, D.; Marvin, C.K.; Onwezen, M.C. Changing consumer behaviour in virtual reality: A systematic literature review. Comput. Hum. Behav. Rep. 2021, 3, 100093. [Google Scholar] [CrossRef]

- Zulkarnain, A.H.B.; Moskowitz, H.R.; Kókai, Z.; Gere, A. Enhancing consumer sensory science approach through augmented virtuality. Curr. Res. Food Sci. 2024, 9, 100834. [Google Scholar] [CrossRef]

- Taboada, I.; Daneshpajouh, A.; Toledo, N.; de Vass, T. Artificial Intelligence Enabled Project Management: A Systematic Literature Review. Appl. Sci. 2023, 13, 5014. [Google Scholar] [CrossRef]

- Akbari, M.; Vilela, A.; Barroso, J.; Rocha, T. Modeling an Integrated Oenology Laboratory in Blender; v3_AV; University of Trás-os-Montes e Alto Douro: Vila Real, Portugal, 2023. [Google Scholar]

- Zatsu, V.; Shine, A.E.; Tharakan, J.M.; Peter, D.; Ranganathan, T.V.; Alotaibi, S.S.; Mugabi, R.; Muhsinah, A.B.; Waseem, M.; Nayik, G.A. Revolutionizing the food industry: The transformative power of artificial intelligence-a review. Food Chem. X 2024, 24, 101867. [Google Scholar] [CrossRef]

- Zou, W.; Pan, F.; Yi, J.; Peng, W.; Tian, W.; Zhou, L. Targeted prediction of sensory preference for fermented pomegranate juice based on machine learning. LWT 2024, 201, 116260. [Google Scholar] [CrossRef]

- Carvalho, N.; Minim, V.P.R.; Silva, R.C.S.N.; Lucia, S.M.D.; Minim, L. Artificial Neural Networks (ANN): Prediction of sensory measurements from instrumental data. Food Sci. Technol. 2023, 33, 722–729. [Google Scholar] [CrossRef]

- Yang, H.; Wang, Y.; Zhao, J.; Li, P.; Li, L.; Wang, F. A machine learning method for juice human sensory hedonic prediction using electronic sensory features. Curr. Res. Food Sci. 2023, 7, 100576. [Google Scholar] [CrossRef]

- Shibata, A.; Ikegami, A.; Nakauma, M.; Higashimori, M. Convolutional Neural Network based Estimation of Gel-like Food Texture by a Robotic Sensing System. Robotics 2017, 6, 37. [Google Scholar] [CrossRef]

- Pietro Cavallo, D.; Cefola, M.; Pace, B.; Logrieco, A.F.; Attolico, G. Non-destructive automatic quality evaluation of fresh-cut iceberg lettuce through packaging material. J. Food Eng. 2018, 223, 46–52. [Google Scholar] [CrossRef]

- Natsume, H.; Okamoto, S. Prediction of Temporal Liking from Temporal Dominance of Sensations by Using Reservoir Computing and Its Sensitivity Analysis. Foods 2024, 13, 3755. [Google Scholar] [CrossRef] [PubMed]

- Bi, K.; Qiu, T.; Huang, Y. A deep learning method for yogurt preferences prediction using sensory attributes. Processes 2020, 8, 518. [Google Scholar] [CrossRef]

- Bi, K.; Zhang, S.; Zhang, C. Consumer-oriented sensory optimization of yogurt: An artificial intelligence approach. Food Control 2022, 138, 108995. [Google Scholar] [CrossRef]

- Mahesh, B. Machine learning algorithms—A review. Int. J. Sci. Res. 2020, 9, 381–386. [Google Scholar] [CrossRef]

- Jiménez-Carvelo, A.M.; González-Casado, A.; Bagur-González, M.G.; Cuadros-Rodríguez, L. Alternative data mining/machine learning methods for the analytical evaluation of food quality and authenticity—A review. Food Res. Int. 2019, 122, 25–39. [Google Scholar] [CrossRef]

- Dębska, B.; Guzowska-Świder, B. Decision trees in selection of featured determined food quality. Anal. Chim. Acta 2011, 705, 261–271. [Google Scholar] [CrossRef]

- Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283. [Google Scholar] [CrossRef]

- Chen, H.; Wang, X.; Zhang, L. Neural Networks for Sensory Analysis: Applications in Food Chemistry. Sensors 2020, 20, 485. [Google Scholar] [CrossRef]

- Nunes, C.A.; Ribeiro, M.N.; de Carvalho, T.C.; Ferreira, D.D.; de Oliveira, L.L.; Pinheiro, A.C. Artificial intelligence in sensory and consumer studies of food products. Curr. Opin. Food Sci. 2023, 50, 101002. [Google Scholar] [CrossRef]

- Dhal, S.B.; Kar, D. Leveraging artificial intelligence and advanced food processing techniques for enhanced food safety, quality, and security: A comprehensive review. Discov. Appl. Sci. 2025, 7, 75. [Google Scholar] [CrossRef]

- Tawakuli, A.; Engel, T. Make your data fair: A survey of data preprocessing techniques that address biases in data towards fair AI. J. Eng. Res. 2024, in press. [CrossRef]

- Hooker, S. Moving beyond “algorithmic bias is a data problem”. Patterns 2021, 2, 100241. [Google Scholar] [CrossRef]

- Chen, P.; Wu, L.; Wang, L. AI Fairness in Data Management and Analytics: A Review on Challenges, Methodologies and Applications. Appl. Sci. 2023, 13, 10258. [Google Scholar] [CrossRef]

- Tseng, Y.J.; Chuang, P.J.; Appell, M. When machine learning and deep learning come to the big data in food chemistry. ACS Omega 2023, 8, 15854–15864. [Google Scholar] [CrossRef] [PubMed]

- Ji, H.; Pu, D.; Yan, W.; Zhang, Q.; Zuo, M.; Yuyu, Z. Recent advances and application of machine learning in food flavor prediction and regulation. Trends Food Sci. Technol. 2023, 138, 738–751. [Google Scholar] [CrossRef]

- Kou, X.; Shi, P.; Gao, C.; Ma, P.; Xing, H.; Ke, Q.; Zhang, D. Data-driven elucidation of flavor chemistry. J. Agric. Food Chem. 2023, 71, 6789–6802. [Google Scholar] [CrossRef]

- Colantonio, V.; Ferrão, L.F.V.; Tieman, D.M.; Bliznyuk, N.; Sims, C.; Klee, H.J.; Resende, M.F., Jr. Metabolomic selection for enhanced fruit flavor. Proc. Natl. Acad. Sci. USA 2022, 119, e2115865119. [Google Scholar] [CrossRef]

- Hastie, M.; Torrico, D.; Li, Z.; Ha, M.; Warner, R. Consumer characterization of wet- and dry-aged mutton flavor profile using check-all-that-apply. Foods 2022, 11, 3167. [Google Scholar] [CrossRef]

- Goyache, F.; Bahamonde, A.; Alonso, J.; López, S.; Del Coz, J.J.; Quevedo, J.R.; Luaces, O.; Alvarez, I.; Royo, L.J.; Diez, J.; et al. The usefulness of artificial intelligence techniques to assess subjective quality of products in the food industry. Trends Food Sci. Technol. 2001, 12, 370–381. [Google Scholar] [CrossRef]

- Lakeh, M.A. Reporting the Work Plan Activities; Final Report; University of Trás-os-Montes e Alto Douro: Vila Real, Portugal, 2023. [Google Scholar]

- Orlandi, G.; Calvini, R.; Foca, G.; Pigani, L.; Vasile Simone, G.; Ulrici, A. Data fusion of electronic eye and electronic tongue signals to monitor grape ripening. Talanta 2019, 195, 181–189. [Google Scholar] [CrossRef] [PubMed]

- Tan, J.; Xu, J. Applications of electronic nose (e-nose) and electronic tongue (e-tongue) in food quality-related properties determination: A review. Artif. Intell. Agric. 2020, 4, 104–115. [Google Scholar] [CrossRef]

- Shooshtari, M.; Salehi, A. An electronic nose based on carbon nanotube-titanium dioxide hybrid nanostructures for detection and discrimination of volatile organic compounds. Sens. Actuators B Chem. 2022, 357, 131418. [Google Scholar] [CrossRef]

- Ciosek, P.; Wróblewski, W. Sensor arrays for liquid sensing—Electronic tongue systems. Analyst 2007, 132, 963–978. [Google Scholar] [CrossRef]

- Del Valle, M. Sensor Arrays and Electronic Tongue Systems. Int. J. Electrochem. 2012, 2012, 986025. [Google Scholar] [CrossRef]

- Podrażka, M.; Bączyńska, E.; Kundys, M.; Jeleń, P.S.; Witkowska Nery, E. Electronic Tongue—A Tool for All Tastes? Biosensors 2018, 8, 3. [Google Scholar] [CrossRef]

- Kuswandi, B.; Siddiqui, M.W. Sensor-Based Quality Assessment Systems for Fruits and Vegetables; Apple Academic Press: Cambridge, MA, USA, 2020. [Google Scholar]

- Schlossareck, C.; Ross, C.F. Electronic tongue and consumer sensory evaluation of spicy paneer cheese. J. Food Sci. 2019, 84, 1563–1569. [Google Scholar] [CrossRef]

- Gonzalez Viejo, C.; Fuentes, S.; Godbole, A.; Widdicombe, B.; Unnithan, R.R. Development of a low-cost E-nose to assess aroma profiles: An artificial intelligence application to assess beer quality. Sens. Actuators B Chem. 2020, 308, 127688. [Google Scholar] [CrossRef]

- Jiarpinijnun, A.; Osako, K.; Siripatrawan, U. Visualization of Volatomic Profiles for Early Detection of Fungal Infection on Storage Jasmine Brown Rice Using Electronic Nose Coupled with Chemometrics. Meas. J. Int. Meas. Confed. 2020, 157, 107561. [Google Scholar] [CrossRef]

- Shi, H.; Zhang, M.; Adhikari, B. Advances of electronic nose and its application in fresh foods: A review. Crit. Rev. Food Sci. Nutr. 2018, 58, 2700–2710. [Google Scholar] [CrossRef]

- Aouadi, B.; Zaukuu, J.-L.Z.; Vitális, F.; Bodor, Z.; Fehér, O.; Gillay, Z.; Bazar, G.; Kovacs, Z. Historical Evolution and Food Control Achievements of Near Infrared Spectroscopy, Electronic Nose, and Electronic Tongue—Critical Overview. Sensors 2020, 20, 5479. [Google Scholar] [CrossRef] [PubMed]

- Cipriano, D.; Capelli, L. Evolution of electronic noses from research objects to engineered environmental odour monitoring systems: A review of standardization approaches. Biosensors 2019, 9, 75. [Google Scholar] [CrossRef]

- Wei, Z.; Xiao, X.; Wang, J. Identification of the rice wines with different marked ages by electronic nose coupled with smartphone and cloud storage platform. Sensors 2017, 17, 2500. [Google Scholar] [CrossRef] [PubMed]

- Deshmukh, S.; Bandyopadhyay, R.; Bhattacharyya, N.; Pandey, R.A.; Jana, A. Application of electronic nose for industrial odors and gaseous emissions measurement and monitoring—An overview. Talanta 2015, 144, 329–340. [Google Scholar] [CrossRef] [PubMed]

- Štefániková, J.; Martišová, P.; Árvay, J.; Jankura, E.; Kačániová, M.; Gálová, J.; Vietoris, V. Comparison of electronic systems with sensory analysis for the quality evaluation of parenica cheese. Czech J. Food Sci. 2020, 38, 273–279. [Google Scholar] [CrossRef]

- Romani, S.; Rodriguez-Estrada, M. Bakery Products and Electronic Nose. In Electronic Noses and Tongues in Food Science; Elsevier Inc.: Amsterdam, The Netherlands, 2016; pp. 39–47. [Google Scholar] [CrossRef]

- Rusinek, R.; Gancarz, M.; Nawrocka, A. Application of an electronic nose with novel method for generation of smellprints for testing the suitability for consumption of wheat bread during 4-day storage. LWT 2020, 117, 108665. [Google Scholar] [CrossRef]

- Gonzalez Viejo, C.; Fuentes, S. Low-cost methods to assess beer quality using artificial intelligence involving robotics, an electronic nose, and machine learning. Fermentation 2020, 6, 104. [Google Scholar] [CrossRef]

- Fuentes, S.; Summerson, V.; Gonzalez Viejo, C.; Tongson, E.; Lipovetzky, N.; Wilkinson, K.L.; Szeto, C.; Unnithan, R.R. Assessment of smoke contamination in grapevine berries and taint in wines due to bushfires using a low-cost E-nose and an artificial intelligence approach. Sensors 2020, 20, 5108. [Google Scholar] [CrossRef]

- Nomura, M.; Osada, E.; Tokita, T.; Iwamoto, T.; Manome, Y. Measurement and differentiation of banana juice scent using an electronic nose FF-2A. PeerJ 2021, 9, e10638. [Google Scholar] [CrossRef]

- Farahmand, E.; Razavi, S.H.; Mohtasebi, S.S. Investigating effective variables to produce desirable aroma in sourdough using e-nose and sensory panel. J. Food Process. Preserv. 2021, 45, e15157. [Google Scholar] [CrossRef]

- Hübert, T.; Tiebe, C.; Banach, U. Electronic Noses for the Quality Control of Spices. In Electronic Noses and Tongues in Food Science; Elsevier Inc.: Amsterdam, The Netherlands, 2016; pp. 115–124. [Google Scholar] [CrossRef]

- Radi, M.; Rivai, M.; Purnomo, M. Study on electronic-nose-based quality monitoring system for coffee under roasting. J. Circuits Syst. Comput. 2016, 25, 1650116. [Google Scholar] [CrossRef]

- Di Rosa, A.R.; Leone, F.; Cheli, F.; Chiofalo, V. Fusion of electronic nose, electronic tongue and computer vision for animal source food authentication and quality assessment—A review. J. Food Eng. 2017, 210, 62–75. [Google Scholar] [CrossRef]

- Geană, E.I.; Ciucure, C.T.; Apetrei, C. Electrochemical sensors coupled with multivariate statistical analysis as screening tools for wine authentication issues: A review. Chemosensors 2020, 8, 59. [Google Scholar] [CrossRef]

- Calvini, R.; Pigani, L. Toward the Development of Combined Artificial Sensing Systems for Food Quality Evaluation: A Review on the Application of Data Fusion of Electronic Noses, Electronic Tongues and Electronic Eyes. Sensors 2022, 22, 577. [Google Scholar] [CrossRef]

- Wang, W.; Liu, Y. Electronic tongue for food sensory evaluation. In Evaluation Technologies for Food Quality; Elsevier Inc.: Amsterdam, The Netherlands, 2019; pp. 23–36. [Google Scholar]

- Nam, S.H.; Lee, J.; Kim, E.; Koo, J.W.; Shin, Y.; Hwang, T.M. Electronic tongue for the simple and rapid determination of taste and odor compounds in water. Chemosphere 2023, 338, 139511. [Google Scholar] [CrossRef] [PubMed]

- Vagin, M.Y.; Eriksson, M.; Winquist, F. Drinking Water Analysis Using Electronic Tongues. In Electronic Noses and Tongues in Food Science; Elsevier Inc.: Amsterdam, The Netherlands, 2016; pp. 255–264. [Google Scholar]

- Del Valle, M. Bioelectronic tongues employing electrochemical biosensors. Bioanal. Rev. 2017, 6, 143–202. [Google Scholar]

- Winquist, F.; Holmin, S.; Krantz-Rülcker, C.; Wide, P.; Lundström, I. A hybrid electronic tongue. Anal. Chim. Acta 2000, 406, 147–157. [Google Scholar] [CrossRef]

- Zeravik, J.; Hlavacek, A.; Lacina, K.; Skladal, P. State of the art in the field of electronic and bioelectronic tongues—Towards the analysis of wines. Electroanalysis 2009, 21, 2503–2520. [Google Scholar] [CrossRef]

- Nuñez, L.; Cetó, X.; Pividori, M.I.; Zanoni, M.V.B.; del Valle, M. Development and application of an electronic tongue for detection and monitoring of nitrate, nitrite and ammonium levels in waters. Microchem. J. 2013, 110, 273–279. [Google Scholar] [CrossRef]

- Kutyła-Olesiuk, A.; Zaborowski, M.; Prokaryn, P.; Ciosek, P. Monitoring of beer fermentation based on hybrid electronic tongue. Bioelectrochemistry 2012, 87, 104–113. [Google Scholar] [CrossRef]

- Gutiérrez, M.; Llobera, A.; Vila-Planas, J.; Capdevila, F.; Demming, S.; Büttgenbach, S.; Mínguez, S.; Jiménez-Jorquera, C. Hybrid electronic tongue based on optical and electrochemical microsensors for quality control of wine. Analyst 2010, 135, 1718–1725. [Google Scholar] [CrossRef] [PubMed]

- Kutyła-Olesiuk, A.; Wesoly, M.; Wróblewski, W. Hybrid Electronic Tongue as a Tool for the Monitoring of Wine Fermentation and Storage Process. Electroanalysis 2018, 30, 1983–1989. [Google Scholar] [CrossRef]

- Breijo, E.G.; Pinatti, C.O.; Peris, R.M.; Fillol, M.A.; Martínez-Máñez, R.; Camino, J.S. TNT detection using a voltammetric electronic tongue based on neural networks. Sens. Actuators A Phys. 2013, 192, 1–8. [Google Scholar] [CrossRef]

- Vlasov, Y.; Legin, A. Non-selective chemical sensors in analytical chemistry: From “electronic nose” to “electronic tongue”. Fresenius J. Anal. Chem. 1998, 361, 255–260. [Google Scholar] [CrossRef]

- Tian, X.; Wang, J.; Zhang, X. Discrimination of preserved licorice apricot using electronic tongue. Math. Comput. Model. 2013, 58, 737–745. [Google Scholar] [CrossRef]

- Dong, W.; Zhao, J.; Hu, R.; Dong, Y.; Tan, L. Differentiation of Chinese robusta coffees according to species, using a combined electronic nose and tongue, with the aid of chemometrics. Food Chem. 2017, 229, 743–751. [Google Scholar] [CrossRef]

- He, W.; Hu, X.; Zhao, L.; Liao, X.; Zhang, Y.; Zhang, M.; Wu, J. Evaluation of Chinese tea by the electronic tongue: Correlation with sensory properties and classification according to geographical origin and grade level. Food Res. Int. 2009, 42, 1462–1467. [Google Scholar] [CrossRef]

- Xu, S.; Li, J.; Baldwin, E.A.; Plotto, A.; Rosskopf, E.; Hong, J.C.; Bai, J. Electronic tongue discrimination of four tomato cultivars harvested at six maturities and exposed to blanching and refrigeration treatments. Postharvest Biol. Technol. 2018, 136, 42–49. [Google Scholar] [CrossRef]

- Beullens, K.; Mészáros, P.; Vermeir, S.; Kirsanov, D.; Legin, A.; Buysens, S.; Cap, N.; Nicolaï, B.M.; Lammertyn, J. Analysis of tomato taste using two types of electronic tongues. Sens. Actuators B Chem. 2008, 131, 10–17. [Google Scholar] [CrossRef]

- Jung, H.Y.; Kwak, H.S.; Kim, M.J.; Kim, Y.; Kim, K.-O.; Kim, S.S. Comparison of a descriptive analysis and instrumental measurements (electronic nose and electronic tongue) for the sensory profiling of Korean fermented soybean paste (doenjang). J. Sens. Stud. 2017, 32, e12282. [Google Scholar] [CrossRef]

- Yan, S.; Ping, C.; Weijun, C.; Haiming, C. Monitoring the Quality Change of Fresh Coconut Milk Using an Electronic Tongue. J. Food Process. Preserv. 2016, 41, e13110. [Google Scholar] [CrossRef]

- Jambrak, A.R.; Šimunek, M.; Petrović, M.; Bedić, H.; Herceg, Z.; Juretić, H. Aromatic profile and sensory characterisation of ultrasound treated cranberry juice and nectar. Ultrason. Sonochem. 2017, 38, 783–793. [Google Scholar] [CrossRef] [PubMed]

- Paup, V.D.; Barnett, S.M.; Diako, C.; Ross, C.F. Detection of spicy compounds using the electronic tongue. J. Food Sci. 2019, 84, 2619–2627. [Google Scholar] [CrossRef]

- Nery, E.W.; Kubota, L.T. Integrated, paper-based potentiometric electronic tongue for the analysis of beer and wine. Anal. Chim. Acta 2016, 918, 60–68. [Google Scholar] [CrossRef]

- Daikuzono, C.M.; Delaney, C.; Morrin, A.; Diamond, D.; Florea, L.; Oliveira, O.N. Paper based electronic tongue–a low-cost solution for the distinction of sugar type and apple juice brand. Analyst 2019, 144, 2827–2832. [Google Scholar] [CrossRef]

- Rodríguez-Méndez, M.L. Electronic Noses and Tongues in Food Industry; Academic Press: London, UK, 2016. [Google Scholar]

- Litvinenko, S.V.; Bielobrov, D.; Lysenko, V.; Nychyporuk, T.; Skryshevsky, V.A. Might silicon surface be used for electronic tongue application? ACS Appl. Mater. Interfaces 2015, 6, 18440–18444. [Google Scholar] [CrossRef]

- McCaig, T.N. Extending the use of visible/near-infrared reflectance spectrophotometers to measure colour of food and agricultural products. Food Res. Int. 2002, 35, 731–736. [Google Scholar] [CrossRef]

- Wu, D.; Sun, D.W. Colour measurements by computer vision for food quality control—A review. Trends Food Sci. Technol. 2013, 29, 5–20. [Google Scholar] [CrossRef]

- Cui, Y.X.; Liu, R.X.; Lin, Z.Z.; Chen, P.J.; Wang, L.L.; Wang, Y.L.; Chen, S.Q. Quality evaluation based on color grading: Quality discrimination of the Chinese medicine Corni Fructus by an E-eye. Sci. Rep. 2019, 9, 17006. [Google Scholar]

- Xu, C. Electronic eye for food sensory evaluation. In Evaluation Technologies for Food Quality; Elsevier Inc.: Amsterdam, The Netherlands, 2019; pp. 37–59. [Google Scholar]

- Ordoñez-Araque, R.; Rodríguez-Villacres, J.; Urresto-Villegas, J. Electronic nose, tongue and eye: Their usefulness for the food industry. Vitae 2022, 27, e1. [Google Scholar] [CrossRef]

- Ismael, D.; Ploeger, A. Development of a sensory method to detect food-elicited emotions using emotion-color association and eye-tracking. Foods 2019, 8, 217. [Google Scholar] [CrossRef] [PubMed]

- Yang, Y.; Zhao, C.; Tian, G. Characterization of physical properties and electronic sensory analyses of citrus oil-based nanoemulsions. Food Res. Int. 2018, 109, 149–158. [Google Scholar] [CrossRef] [PubMed]

- Fernández-Vázquez, R.; Stinco, C.M.; Melendez-Martínez, A.J.; Heredia, F.J.; Vicario, I.M. Visual and instrumental evaluation of orange juice color: A consumers’ preference study. J. Sens. Stud. 2011, 26, 436–444. [Google Scholar] [CrossRef]

- Martin, M.L.G.-M.; Wei, J.; Luo, R.; Hutchings, J.; Heredia, F.J. Measuring colour appearance of red wines. Food Qual. Prefer. 2007, 18, 862–871. [Google Scholar] [CrossRef]

- Sahameh, S.; Saeid, M.; Mahdi, M.-C.; Nasrollah, G.-V.; Mohsen, B. Potential application of machine vision to honey characterization. Trends Food Sci. Technol. 2013, 30, 174–177. [Google Scholar]

- Sreeraj, M.; Joy, J.; Kuriakose, A.; Sujith, M.R.; Vishnu, P.K.; Haritha, U. CLadron*: AI assisted device for identifying artificially ripened climacteric fruits. Procedia Comput. Sci. 2020, 171, 635–643. [Google Scholar] [CrossRef]

- Orlandi, G.; Calvini, R.; Pigani, L. Electronic eye for the prediction of parameters related to grape ripening. Talanta 2018, 186, 381–388. [Google Scholar] [CrossRef]

- Sun, X.; Young, J.; Liu, J.H.; Chen, Q.; Newman, D. Predicting pork color scores using computer vision and support vector machine technology. Meat Muscle Biol. 2018, 2, 296–302. [Google Scholar] [CrossRef]

- Marques, C.; Correia, E.; Dinis, L.-T.; Vilela, A. An Overview of Sensory Characterization Techniques: From Classical Descriptive Analysis to the Emergence of Novel Profiling Methods. Foods 2022, 11, 255. [Google Scholar] [CrossRef]

- Motoki, K.; Saito, T.; Onuma, T. Eye-tracking research on sensory and consumer science: A review, pitfalls and future directions. Food Res. Int. 2021, 145, 110389. [Google Scholar] [CrossRef] [PubMed]

- Gheorghe, C.M.; Purcărea, V.L.; Gheorghe, I.R. Using eye-tracking technology in Neuromarketing. Rom. J. Ophthalmol. 2023, 67, 2–6. [Google Scholar] [CrossRef]

- Graham, D.J.; Jeffery, R.W. Location, location, location: Eye-tracking evidence that consumers preferentially view prominently positioned nutrition information. J. Am. Diet. Assoc. 2011, 111, 1704–1711. [Google Scholar] [CrossRef] [PubMed]

- Ruppenthal, T. Eye-Tracking Studies on Sustainable Food Consumption: A Systematic Literature Review. Sustainability 2023, 15, 16434. [Google Scholar] [CrossRef]

- Peng, M.; Browne, H.; Cahayadi, J.; Cakmak, Y. Predicting food choices based on eye-tracking data: Comparisons between real-life and virtual tasks. Appetite 2021, 166, 105477. [Google Scholar] [CrossRef]

- Husić-Mehmedović, M.; Omeragić, I.; Batagelj, Z.; Kolar, T. Seeing is not necessarily liking: Advancing research on package design with eye-tracking. J. Bus. Res. 2017, 80, 145–154. [Google Scholar] [CrossRef]

- Gvoka, T.; Vladić, G.; Bošnjaković, G.; Pál, M.; Maričić, K. Identification of Gaze Patterns in the Observation of Font-Weight and Illustration Size on the Packaging Using Eye-Tracking Analysis. Int. Symp. Graph. Eng. Des. 2024, 57–67. [Google Scholar] [CrossRef]

- Gunaratne, N.M.; Fuentes, S.; Gunaratne, T.M.; Torrico, D.D.; Ashman, H.; Francis, C.; Gonzalez Viejo, C.; Dunshea, F.R. Consumer Acceptability, Eye Fixation, and Physiological Responses: A Study of Novel and Familiar Chocolate Packaging Designs Using Eye-Tracking Devices. Foods 2019, 8, 253. [Google Scholar] [CrossRef]

- Marques, C.; Vilela, A. FaceReader Insights into the Emotional Response of Douro Wines. Appl. Sci. 2024, 14, 10053. [Google Scholar] [CrossRef]

- Pichierri, M.; Peluso, A.M.; Pino, G.; Guido, G. Health claims’ text clarity, perceived healthiness of extra-virgin olive oil, and arousal: An experiment using FaceReader. Trends Food Sci. Technol. 2021, 116, 1186–1194. [Google Scholar] [CrossRef]

- Katsikari, A.; Pedersen, M.E.; Berget, I.; Varela, P. Use of face reading to measure oral processing behaviour and its relation to product perception. Food Qual. Prefer. 2024, 119, 105209. [Google Scholar] [CrossRef]

- Marques, C.; Dinis, L.T.; Modesti, M.; Bellincontro, A.; Correia, E.; Vilela, A. Exploring the influence of terroir on Douro white and red wines characteristics: A study of human perception and electronic analysis. Eur. Food Res. Technol. 2024, 250, 3011–3027. [Google Scholar] [CrossRef]

- Berčík, J.; Mravcová, A.; Nadal, E.S.; Lluch, D.B.L.; Farkaš, A. FaceReader as a neuromarketing tool to compare the olfactory preferences of customers in selected markets. Span. J. Mark.-ESIC 2024, ahead-of-print. [Google Scholar] [CrossRef]

- Landmann, E. I can see how you feel—Methodological considerations and handling of Noldus’s FaceReader software for emotion measurement. Technol. Forecast. Soc. Chang. 2023, 197, 122889. [Google Scholar] [CrossRef]

- Fontana, L.; Albayay, J.; Zurlo, L.; Ciliberto, V.; Zampini, M. Olfactory modulation of visual attention and preference towards congruent food products: An eye tracking study. Food Qual. Prefer. 2025, 124, 105373. [Google Scholar] [CrossRef]

- Gonzalez-Sanchez, J.; Baydogan, M.; Chavez-Echeagaray, M.E.; Atkinson, R.K.; Burleson, W. Chapter 11—Affect Measurement: A Roadmap Through Approaches, Technologies, and Data Analysis. In Emotions and Affect in Human Factors and Human-Computer Interaction; Jeon, M., Ed.; Academic Press: Cambridge, MA, USA, 2017; pp. 255–288. [Google Scholar] [CrossRef]

- Liu, X.; Cui, Y. Eye tracking technology for examining cognitive processes in education: A systematic review. Comput. Educ. 2025, 229, 105263. [Google Scholar] [CrossRef]

- Andrewes, P.; Bullock, S.; Turnbull, R.; Coolbear, T. Chemical instrumental analysis versus human evaluation to measure sensory properties of dairy products: What is fit for purpose? Int. Dairy J. 2021, 121, 105098. [Google Scholar] [CrossRef]

- Chen, J. It is important to differentiate sensory property from the material property. Trends Food Sci. Technol. 2020, 96, 268–270. [Google Scholar] [CrossRef]

- Abbott, J. Quality measurement of fruits and vegetables. Postharvest Biol. Technol. 1999, 15, 207–225. [Google Scholar] [CrossRef]

- Nishinari, K.; Fang, Y. Perception and measurement of food texture: Solid foods. J. Texture Stud. 2018, 49, 160–201. [Google Scholar] [CrossRef]

- Harker, F.; Maindonald, J.; Murray, S.; Gunson, F.; Hallett, I.; Walker, S. Sensory interpretation of instrumental measurements 1: Texture of apple fruit. Postharvest Biol. Technol. 2002, 24, 225–239. [Google Scholar] [CrossRef]

- Tao, K.; Yu, W.; Prakash, S.; Gilbert, R. Investigating cooked rice textural properties by instrumental measurements. Food Sci. Hum. Wellness. 2020, 9, 130–135. [Google Scholar] [CrossRef]

- Ross, C. Sensory science at the human–machine interface. Trend Food Sci. Technol. 2009, 20, 63–72. [Google Scholar] [CrossRef]

- Salam, K.N.; Singkeruang, A.W.T.F.; Husni, M.F.; Baharuddin, B.; Ar, D.P. Gen-Z Marketing Strategies: Understanding Consumer Preferences and Building Sustainable Relationships. Gold. Ratio Mapp. Idea Lit. Format 2024, 4, 53–77. [Google Scholar] [CrossRef]

- Kalariya, K.; Chauhan, R.; Soni, P.; Patel, M.; Patel, H. Unraveling Millennial Online Shopping Preferences: A Comprehensive Analysis of Factors Influencing Consumer Behaviour in the Digital Marketplace. J. Bus. Halal Ind. 2024, 1, 1–12. [Google Scholar] [CrossRef]

- Haris, A. The Role of Marketing Research in Understanding Consumer Behavior and Preferences. Adv. Bus. Ind. Mark. Res. 2024, 2, 59–71. [Google Scholar] [CrossRef]

- Rusdian, S.; Sugiat, J.; Tojiri, Y. Understanding Consumer Behavior in Marketing Management: A Descriptive Study and Review of Literature. Gold. Ratio Mark. Appl. Psychol. Bus. 2024, 4, 76–87. [Google Scholar] [CrossRef]

- Utami, C.V.; Karunia, L.; Marwan, J. The Role of Marketing Strategy on Pricing and its Impact on Purchasing Interest. Moestopo Int. Rev. Soc. Humanit. Sci. 2024, 4, 206–217. [Google Scholar] [CrossRef]

- Theodorakopoulos, L.; Theodoropoulou, A. Leveraging Big Data Analytics for Understanding Consumer Behavior in Digital Marketing: A Systematic Review. Hum. Behav. Emerg. 2024, 2024, 3641502. [Google Scholar] [CrossRef]

- Kim, H.; Park, Y.; Bradlow, E.; Ding, M. PIE: A Holistic Preference Concept and Measurement Model. J. Mark. Res. 2013, 51, 335–351. [Google Scholar] [CrossRef]

- Dalmoro, M.; Isabella, G.; De Almeida, S.; Fleck, J. Developing a holistic understanding of consumers’ experiences. Eur. J. Mark. 2019, 53, 2054–2079. [Google Scholar] [CrossRef]

- Puengwattanapong, P.; Leelasantitham, A. A Holistic Perspective Model of Plenary Online Consumer Behaviors for Sustainable Guidelines of the Electronic Business Platforms. Sustainability 2022, 14, 6131. [Google Scholar] [CrossRef]

- Combris, P.; Bazoche, P.; Giraud-Héraud, E.; Issanchou, S. Food choices: What do we learn from combining sensory and economic experiments? Food Qual. Prefer. 2009, 20, 550–557. [Google Scholar] [CrossRef]

- Dijksterhuis, G. New product failure: Five potential sources discussed. Trends Food Sci. Technol. 2016, 50, 243–248. [Google Scholar] [CrossRef]

- Deng, X.; Srinivasan, R. When do transparent packages increase (or decrease) food consumption? J. Mark. 2013, 77, 104–117. [Google Scholar] [CrossRef]

- Mai, R.; Symmank, C.; Seeberg-Elverfeldt, B. Light and pale colors in food packaging: When does this package cue signal superior healthiness or inferior tastiness? J. Retail. 2016, 92, 426–444. [Google Scholar] [CrossRef]

- Hoegg, J.; Alba, J.W. Taste perception: More than meets the tongue. J. Consum. Res. 2007, 33, 490–498. [Google Scholar] [CrossRef]

- Naylor, R.W.; Droms, C.M.; Haws, K.L. Eating with a purpose: Consumer response to functional food health claims in conflicting versus complementary information environments. J. Public Policy Mark. 2009, 28, 221–233. [Google Scholar] [CrossRef]

- Symmank, C. Extrinsic and intrinsic food product attributes in consumer and sensory research: Literature review and quantification of the findings. Manag. Rev. Q. 2018, 69, 39–74. [Google Scholar] [CrossRef]

- Giboreau, A. Sensory and consumer research in culinary approaches to food. Curr. Opin. Food Sci. 2017, 15, 87–92. [Google Scholar] [CrossRef]

- Deb, P.; Maity, P. Unveiling the Senses: A Bibliometrics Analysis on the Role of Sensory Marketing in impacting Consumer Behaviour. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–16. [Google Scholar] [CrossRef]

- Spence, C. Managing sensory expectations concerning products and brands: Capitalizing on the potential of sound and shape symbolism. J. Consum. Psychol. 2012, 22, 37–54. [Google Scholar] [CrossRef]

- Jürkenbeck, K.; Spiller, A. Importance of sensory quality signals in consumers’ food choice. Food Qual. Pref. 2021, 90, 104155. [Google Scholar] [CrossRef]

- Trijp, H.; Schifferstein, H. Sensory Analysis in Marketing Practice: Comparison and Integration. J. Sens. Stud. 1995, 10, 127–147. [Google Scholar] [CrossRef]

- Iannario, M.; Manisera, M.; Piccolo, D.; Zuccolotto, P. Sensory analysis in the food industry as a tool for marketing decisions. Adv. Data Anal. Classif. 2012, 6, 303–321. [Google Scholar] [CrossRef]

- Alongi, M.; Anese, M. Re-thinking functional food development through a holistic approach. J. Funct. Foods. 2021, 81, 104466. [Google Scholar] [CrossRef]

- Ghalachyan, A.; Karpova, E.; Frattali, A. Developing a holistic sensory evaluation three-part method for textiles and apparel: A practical application for novel materials and products. Res. J. Text. 2023, 28, 948–964. [Google Scholar] [CrossRef]

- Magalios, P.; Kosmas, P.; Tsakiris, A.; Theocharous, A. Sensory evaluation of wine through correspondence analysis: A theoretical and empirical rationale. J. Wine Res. 2019, 30, 62–77. [Google Scholar] [CrossRef]

- Liu, J.; Grønbeck, M.; Monaco, R.; Giacalone, D.; Bredie, W. Performance of Flash Profile and Napping with and without training for describing small sensory differences in a model wine. Food Qual. Prefer. 2016, 48, 41–49. [Google Scholar] [CrossRef]

- Barton, A.; Hayward, L.; Richardson, C.; McSweeney, M. Use of different panelists (experienced, trained, consumers, and experts) and the projective mapping task to evaluate white wine. Food Qual. Pref. 2020, 83, 103900. [Google Scholar] [CrossRef]

- Rodriguez-Mendez, M.; De Saja, J.; González-Antón, R.; García-Hernández, C.; Medina-Plaza, C.; García-Cabezón, C.; Martín-Pedrosa, F. Electronic Noses and Tongues in Wine Industry. Front. Bioeng. Biotechnol. 2016, 4, 81. [Google Scholar] [CrossRef] [PubMed]

- Malfeito-Ferreira, M. Fine wine flavour perception and appreciation: Blending neuronal processes, tasting methods and expertise. Trends Food Sci. Technol. 2021, 115, 332–346. [Google Scholar] [CrossRef]

- Etaio, I.; Albisu, M.; Ojeda, M.; Gil, P.; Salmerón, J.; Elortondo, F. Sensory quality control for food certification: A case study on wine. Method development. Food Control 2010, 21, 533–541. [Google Scholar] [CrossRef]

- Etaio, I.; Albisu, M.; Ojeda, M.; Gil, P.; Salmerón, J.; Elortondo, F. Sensory quality control for food certification: A case study on wine. Panel training and qualification, method validation and monitoring. Food Control 2010, 21, 542–548. [Google Scholar] [CrossRef]

- Carmer, A.; Kleypas, J.; Orlowski, M. Wine sensory experience in hospitality education: A systematic review. Br. Food J. 2024, 126, 1365–1386. [Google Scholar] [CrossRef]

| Technology | Advantages | Challenges | Reference |
|---|---|---|---|
| Eye Tracking | Provides precise insights into visual attention and gaze patterns | Costly, specialized equipment and software | [121,135,136,137] |
| | Generates objective, unconscious data, reducing bias | Requires precise calibration for accuracy | |
| | Produces detailed outputs such as heatmaps | Intrusive devices (e.g., glasses) may affect natural behavior | |
| | Applicable in various fields, including marketing and neuroscience | Generates complex datasets requiring specialized analytical skills | |
| FaceReader | Automatically detects facial expressions and emotions in real time | Accuracy is affected by lighting, camera quality, and participant movement | [6,129,133,134] |
| | Non-intrusive and user-friendly, requiring no wearable devices | May oversimplify or misinterpret mixed or subtle emotions | |
| | Applicable across disciplines like psychology, marketing, and usability testing | Cultural and individual variations in expressions can impact the reliability of results | |
| | Reduces the need for manual emotion coding, saving time and effort | High initial investment in software and support equipment | |

| Concept | Description | Application in Food Marketing | Reference |
|---|---|---|---|
| Sensory Marketing | Integrates human senses (sight, sound, touch, taste, and smell) into marketing to evoke emotions and influence consumer behavior. | | [162] |
| Crossmodal Correspondences | Utilizes sound and shape symbolism to align sensory expectations with product attributes. | | [163] |
| Sensory Quality Signals | Employs extrinsic sensory cues like sensory descriptions and labels to influence consumer choices. | | [164] |
| Integration of Sensory Analysis | Combines sensory analysis with marketing to improve product development and market strategies. | | [165,166] |
| Holistic Food Development | Proposes a comprehensive approach to functional food development, integrating technological and nutritional perspectives. | | [167] |
| Culinary and Sensory Research | Encourages collaboration between food scientists and culinary experts to enhance food offerings and consumer experiences. | | [160] |

| Method | Description | Key Insights | Reference |
|---|---|---|---|
| Napping | A rapid sensory method based on holistic assessment, in which samples are arranged on a sheet according to perceived similarities and differences. | Napping effectively highlights qualitative sample differences and can be improved by panel training on the method or by product familiarity. | [170] |
| Electronic Noses and Tongues | Inspired by mammalian sensory recognition, these devices use sensor arrays and pattern recognition software to create fingerprints of samples. | They are widely used in the wine industry for quality control, aging control, and fraud detection, offering a holistic approach to sensory evaluation. | [172] |
| Projective Mapping | A method in which panelists place samples in a two-dimensional space based on perceived similarities; it is often used with Ultra-Flash Profiling to provide detailed descriptions. | Experienced panelists produce results highly similar to those of trained panelists, indicating that familiarity with the method influences evaluations. | [170,171] |
| Holistic Wine Assessment | It focuses on olfaction’s synthetic, emotional, and mental imagery features and considers cross-modal influences on flavor perception. | This approach argues for recognizing synthetic properties like complexity and harmony and suggests that cognitive factors and preferences can bias expert judgments. | [173] |
| Sensory Quality Control | It involves developing specific methods for sensory quality control, including assessor selection, training, and method validation, which are often accredited by official bodies. | This method increases reliability and is crucial for products with quality distinctiveness labels, such as wines with a Protected Designation of Origin. | [174,175] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cosme, F.; Rocha, T.; Marques, C.; Barroso, J.; Vilela, A. Innovative Approaches in Sensory Food Science: From Digital Tools to Virtual Reality. Appl. Sci. 2025, 15, 4538. https://doi.org/10.3390/app15084538
