Retinal Vessels Segmentation Techniques and Algorithms: A Survey

Abstract

1. Introduction

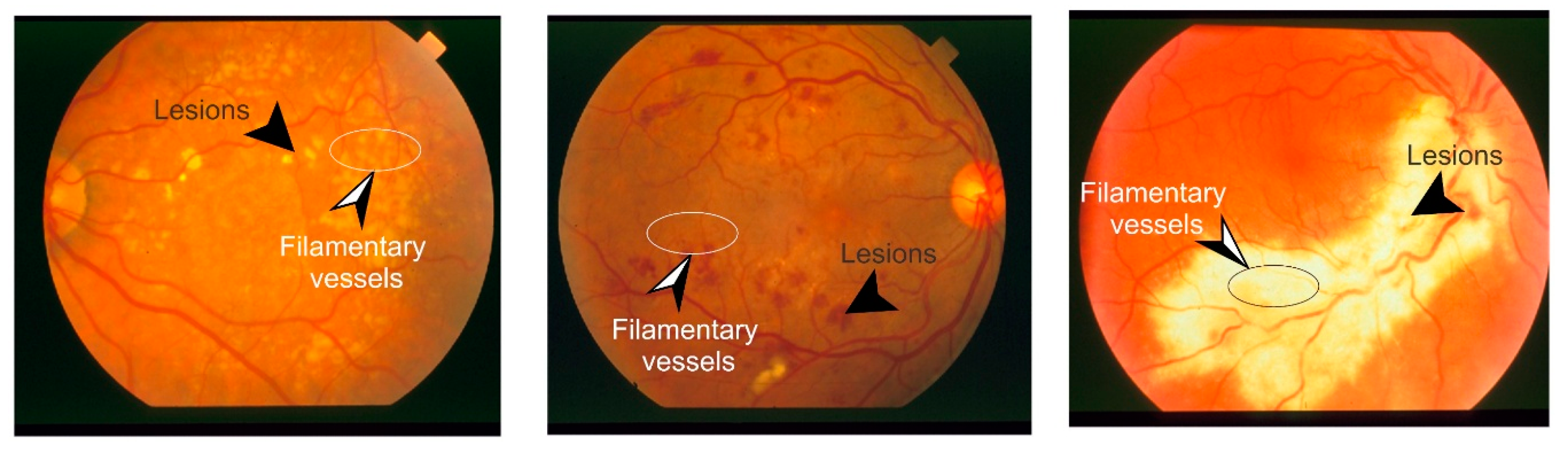

2. Retinal Fundus Imaging

- Full-color Imaging

- Monochromatic (Filtered) Imaging

- Fluorescence Angiogram

3. Retinal Image Processing

4. Retinal Vessels Segmentation Techniques

4.1. Kernel-Based Techniques

4.2. Vessel Tracking/Tracing Techniques

4.3. Mathematical Morphology-Based Techniques

4.4. Multi-Scale Techniques

4.5. Model-Based Techniques

4.5.1. Parametric Deformable Models

4.5.2. Geometric Deformable Models

4.6. Adaptive Local Thresholding Techniques

4.7. Machine Learning Techniques

5. Discussion and Conclusions

Author Contributions

Conflicts of Interest

References

- Lesage, D.; Angelini, E.D.; Bloch, I.; Funka-Lea, G. A review of 3D vessel lumen segmentation techniques: Models, features and extraction schemes. Med. Image Anal. 2009, 13, 819–845.

- Kirbas, C.; Quek, F. A review of vessel extraction techniques and algorithms. ACM Comput. Surv. CSUR 2004, 36, 81–121.

- Kirbas, C.; Quek, F.K. Vessel extraction techniques and algorithms: A survey. In Proceedings of the Third IEEE Symposium on Bioinformatics and Bioengineering, Bethesda, MD, USA, 12 March 2003; pp. 238–245.

- Suri, J.S.; Liu, K.; Reden, L.; Laxminarayan, S. A review on MR vascular image processing: Skeleton versus nonskeleton approaches: Part II. IEEE Trans. Inf. Technol. Biomed. 2002, 6, 338–350.

- Fraz, M.M.; Remagnino, P.; Hoppe, A.; Uyyanonvara, B.; Rudnicka, A.R.; Owen, C.G.; Barman, S.A. Blood vessel segmentation methodologies in retinal images – A survey. Comput. Methods Programs Biomed. 2012, 108, 407–433.

- Srinidhi, C.L.; Aparna, P.; Rajan, J. Recent Advancements in Retinal Vessel Segmentation. J. Med. Syst. 2017, 41, 70.

- Dash, J.; Bhoi, N. A Survey on Blood Vessel Detection Methodologies in Retinal Images. In Proceedings of the 2015 International Conference on Computational Intelligence and Networks, Bhubaneshwar, India, 12–13 January 2015; pp. 166–171.

- Mansour, R. Evolutionary Computing Enriched Computer Aided Diagnosis System For Diabetic Retinopathy: A Survey. IEEE Rev. Biomed. Eng. 2017, 10, 334–349.

- Pohankar, N.P.; Wankhade, N.R. Different methods used for extraction of blood vessels from retinal images. In Proceedings of the 2016 World Conference on Futuristic Trends in Research and Innovation for Social Welfare (Startup Conclave), Coimbatore, India, 29 February–1 March 2016; pp. 1–4.

- Singh, N.; Kaur, L. A survey on blood vessel segmentation methods in retinal images. In Proceedings of the 2015 International Conference on Electronic Design, Computer Networks & Automated Verification (EDCAV), Shillong, India, 29–30 January 2015; pp. 23–28.

- Kolb, H. Simple Anatomy of the Retina, 2012. Available online: http://webvision.med.utah.edu/book/part-i-foundations/simple-anatomy-of-the-retina/ (accessed on 22 January 2018).

- Oloumi, F.; Rangayyan, R.M.; Eshghzadeh-Zanjani, P.; Ayres, F. Detection of blood vessels in fundus images of the retina using Gabor wavelets. In Proceedings of the 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 6451–6454. Available online: http://people.ucalgary.ca/~ranga/enel697/ (accessed on 12 September 2017).

- Saine, P.J.; Tyler, M.E. Ophthalmic Photography: Retinal Photography, Angiography, and Electronic Imaging; Butterworth-Heinemann: Boston, MA, USA, 2002; Volume 132.

- Kolb, H. Simple Anatomy of the Retina. In Webvision: The Organization of the Retina and Visual System; Kolb, H., Fernandez, E., Nelson, R., Eds.; University of Utah Health Sciences Center: Salt Lake City, UT, USA, 1995; Available online: http://europepmc.org/books/NBK11533;jsessionid=4C8BAD63F75EAD49C21BC65E2AE5F6F3 (accessed on 22 January 2018).

- Ophthalmic Photographers’ Society. Available online: www.opsweb.org (accessed on 22 January 2018).

- Ng, E.; Acharya, U.R.; Rangayyan, R.M.; Suri, J.S. Ophthalmological Imaging and Applications; CRC Press: Boca Raton, FL, USA, 2014.

- Patel, S.N.; Klufas, M.A.; Ryan, M.C.; Jonas, K.E.; Ostmo, S.; Martinez-Castellanos, M.A.; Berrocal, A.M.; Chiang, M.F.; Chan, R.V.P. Color Fundus Photography Versus Fluorescein Angiography in Identification of the Macular Center and Zone in Retinopathy of Prematurity. Am. J. Ophthalmol. 2015, 159, 950–957.

- Orlando, J.I.; Prokofyeva, E.; Blaschko, M.B. A discriminatively trained fully connected conditional random field model for blood vessel segmentation in fundus images. IEEE Trans. Biomed. Eng. 2017, 64, 16–27.

- Zhang, J.; Dashtbozorg, B.; Bekkers, E.; Pluim, J.P.; Duits, R.; ter Haar Romeny, B.M. Robust retinal vessel segmentation via locally adaptive derivative frames in orientation scores. IEEE Trans. Med. Imaging 2016, 35, 2631–2644.

- Abbasi-Sureshjani, S.; Zhang, J.; Duits, R.; ter Haar Romeny, B. Retrieving challenging vessel connections in retinal images by line co-occurrence statistics. Biol. Cybern. 2017, 111, 237–247.

- Abbasi-Sureshjani, S.; Favali, M.; Citti, G.; Sarti, A.; ter Haar Romeny, B.M. Curvature integration in a 5D kernel for extracting vessel connections in retinal images. IEEE Trans. Image Process. 2018, 27, 606–621.

- Gu, L.; Cheng, L. Learning to boost filamentary structure segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 639–647.

- De, J.; Cheng, L.; Zhang, X.; Lin, F.; Li, H.; Ong, K.H.; Yu, W.; Yu, Y.; Ahmed, S. A graph-theoretical approach for tracing filamentary structures in neuronal and retinal images. IEEE Trans. Med. Imaging 2016, 35, 257–272.

- Maninis, K.-K.; Pont-Tuset, J.; Arbeláez, P.; Van Gool, L. Deep retinal image understanding. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 140–148.

- Sironi, A.; Lepetit, V.; Fua, P. Projection onto the manifold of elongated structures for accurate extraction. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 316–324.

- Solouma, N.; Youssef, A.-B.M.; Badr, Y.; Kadah, Y.M. Real-time retinal tracking for laser treatment planning and administration. In Medical Imaging 2001: Image Processing; SPIE—The International Society of Optics and Photonics: Bellingham, WA, USA; pp. 1311–1321.

- Wang, Y.; Lee, S.C. A fast method for automated detection of blood vessels in retinal images. In Proceedings of the Conference Record of the Thirty-First Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 2–5 November 1997; pp. 1700–1704.

- Can, A.; Shen, H.; Turner, J.N.; Tanenbaum, H.L.; Roysam, B. Rapid automated tracing and feature extraction from retinal fundus images using direct exploratory algorithms. IEEE Trans. Inf. Technol. Biomed. 1999, 3, 125–138.

- Li, H.; Chutatape, O. Fundus image features extraction. In Proceedings of the 22nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 23–28 July 2000; pp. 3071–3073.

- Yang, Y.; Huang, S.; Rao, N. An automatic hybrid method for retinal blood vessel extraction. Int. J. Appl. Math. Comput. Sci. 2008, 18, 399–407.

- Zhao, Y.; Rada, L.; Chen, K.; Harding, S.P.; Zheng, Y. Automated vessel segmentation using infinite perimeter active contour model with hybrid region information with application to retinal images. IEEE Trans. Med. Imaging 2015, 34, 1797–1807.

- Yu, H.; Barriga, E.S.; Agurto, C.; Echegaray, S.; Pattichis, M.S.; Bauman, W.; Soliz, P. Fast localization and segmentation of optic disk in retinal images using directional matched filtering and level sets. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 644–657.

- Aibinu, A.M.; Iqbal, M.I.; Shafie, A.A.; Salami, M.J.E.; Nilsson, M. Vascular intersection detection in retina fundus images using a new hybrid approach. Comput. Biol. Med. 2010, 40, 81–89.

- Wu, C.-H.; Agam, G.; Stanchev, P. A hybrid filtering approach to retinal vessel segmentation. In Proceedings of the IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Arlington, VA, USA, 12–15 April 2007; pp. 604–607.

- Siddalingaswamy, P.; Prabhu, K.G. Automatic detection of multiple oriented blood vessels in retinal images. J. Biomed. Sci. Eng. 2010, 3.

- Wang, S.; Yin, Y.; Cao, G.; Wei, B.; Zheng, Y.; Yang, G. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing 2015, 149, 708–717.

- Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.-K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Uusitalo, H.; Kälviäinen, H.; Pietilä, J. The DIARETDB1 Diabetic Retinopathy Database and Evaluation Protocol. In Proceedings of the British Machine Vision Conference 2007, Coventry, UK, 10–13 September 2007; pp. 1–10.

- Walter, T.; Klein, J.-C.; Massin, P.; Erginay, A. A contribution of image processing to the diagnosis of diabetic retinopathy-detection of exudates in color fundus images of the human retina. IEEE Trans. Med. Imaging 2002, 21, 1236–1243.

- Hanley, J.A.; McNeil, B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982, 143, 29–36.

- Metz, C.E. Receiver operating characteristic analysis: A tool for the quantitative evaluation of observer performance and imaging systems. J. Am. Coll. Radiol. 2006, 3, 413–422.

- Staal, J.; Abràmoff, M.D.; Niemeijer, M.; Viergever, M.A.; Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

- Niemeijer, M.; Staal, J.; van Ginneken, B.; Loog, M.; Abramoff, M.D. Comparative study of retinal vessel segmentation methods on a new publicly available database. In Proceedings of the Medical Imaging 2004: Physics of Medical Imaging, San Diego, CA, USA, 15–17 February 2004; pp. 648–656.

- Hoover, A.; Kouznetsova, V.; Goldbaum, M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med. Imaging 2000, 19, 203–210.

- Bankhead, P.; Scholfield, C.N.; McGeown, J.G.; Curtis, T.M. Fast retinal vessel detection and measurement using wavelets and edge location refinement. PLoS ONE 2012, 7, e32435.

- MESSIDOR: Methods for Evaluating Segmentation and Indexing Techniques Dedicated to Retinal Ophthalmology, 2004. Available online: http://www.adcis.net/en/Download-Third-Party/Messidor.html (accessed on 22 January 2018).

- Decencière, E.; Zhang, X.; Cazuguel, G.; Laÿ, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A. Feedback on a publicly distributed image database: The Messidor database. Image Anal. Stereol. 2014, 33, 231–234.

- Odstrcilik, J.; Kolar, R.; Budai, A.; Hornegger, J.; Jan, J.; Gazarek, J.; Kubena, T.; Cernosek, P.; Svoboda, O.; Angelopoulou, E. Retinal vessel segmentation by improved matched filtering: Evaluation on a new high-resolution fundus image database. IET Image Process. 2013, 7, 373–383.

- Chaudhuri, S.; Chatterjee, S.; Katz, N.; Nelson, M.; Goldbaum, M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging 1989, 8, 263–269.

- Chanwimaluang, T.; Fan, G. An efficient algorithm for extraction of anatomical structures in retinal images. In Proceedings of the 2003 International Conference on Image Processing (Cat. No.03CH37429), Barcelona, Spain, 14–17 September 2003; Volume 1091, pp. I-1093–I-1096.

- Al-Rawi, M.; Qutaishat, M.; Arrar, M. An improved matched filter for blood vessel detection of digital retinal images. Comput. Biol. Med. 2007, 37, 262–267.

- Villalobos-Castaldi, F.M.; Felipe-Riverón, E.M.; Sánchez-Fernández, L.P. A fast, efficient and automated method to extract vessels from fundus images. J. Vis. 2010, 13, 263–270.

- Zhang, B.; Zhang, L.; Zhang, L.; Karray, F. Retinal vessel extraction by matched filter with first-order derivative of Gaussian. Comput. Biol. Med. 2010, 40, 438–445.

- Zhu, T.; Schaefer, G. Retinal vessel extraction using a piecewise Gaussian scaled model. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 5008–5011.

- Kaur, J.; Sinha, H. Automated detection of retinal blood vessels in diabetic retinopathy using Gabor filter. Int. J. Comput. Sci. Netw. Secur. 2012, 12, 109.

- Zolfagharnasab, H.; Naghsh-Nilchi, A.R. Cauchy Based Matched Filter for Retinal Vessels Detection. J. Med. Signals Sens. 2014, 4, 1–9.

- Singh, N.P.; Kumar, R.; Srivastava, R. Local entropy thresholding based fast retinal vessels segmentation by modifying matched filter. In Proceedings of the International Conference on Computing, Communication & Automation, Noida, India, 15–16 May 2015; pp. 1166–1170.

- Kumar, D.; Pramanik, A.; Kar, S.S.; Maity, S.P. Retinal blood vessel segmentation using matched filter and Laplacian of Gaussian. In Proceedings of the 2016 International Conference on Signal Processing and Communications (SPCOM), Bangalore, India, 12–15 June 2016; pp. 1–5.

- Singh, N.P.; Srivastava, R. Retinal blood vessels segmentation by using Gumbel probability distribution function based matched filter. Comput. Methods Programs Biomed. 2016, 129, 40–50.

- Chutatape, O.; Liu, Z.; Krishnan, S.M. Retinal blood vessel detection and tracking by matched Gaussian and Kalman filters. In Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vol.20 Biomedical Engineering towards the Year 2000 and Beyond (Cat. No.98CH36286), Hong Kong, China, 1 November 1998; Volume 3146, pp. 3144–3149.

- Sofka, M.; Stewart, C.V. Retinal Vessel Centerline Extraction Using Multiscale Matched Filters, Confidence and Edge Measures. IEEE Trans. Med. Imaging 2006, 25, 1531–1546.

- Adel, M.; Rasigni, M.; Gaidon, T.; Fossati, C.; Bourennane, S. Statistical-based linear vessel structure detection in medical images. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 649–652.

- Wu, C.H.; Agam, G.; Stanchev, P. A general framework for vessel segmentation in retinal images. In Proceedings of the 2007 International Symposium on Computational Intelligence in Robotics and Automation, Jacksonville, FL, USA, 20–23 June 2007; pp. 37–42.

- Yedidya, T.; Hartley, R. Tracking of Blood Vessels in Retinal Images Using Kalman Filter. In Proceedings of the 2008 Digital Image Computing: Techniques and Applications, Canberra, Australia, 1–3 December 2008; pp. 52–58.

- Yin, Y.; Adel, M.; Guillaume, M.; Bourennane, S. A probabilistic based method for tracking vessels in retinal images. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 4081–4084.

- Li, H.; Zhang, J.; Nie, Q.; Cheng, L. A retinal vessel tracking method based on Bayesian theory. In Proceedings of the 2013 8th IEEE Conference on Industrial Electronics and Applications (ICIEA), Melbourne, Australia, 19–21 June 2013; pp. 232–235.

- Budai, A.; Michelson, G.; Hornegger, J. Multiscale Blood Vessel Segmentation in Retinal Fundus Images. In Proceedings of the Bildverarbeitung für die Medizin, Aachen, Germany, 14–16 March 2010; pp. 261–265.

- Moghimirad, E.; Rezatofighi, S.H.; Soltanian-Zadeh, H. Multi-scale approach for retinal vessel segmentation using medialness function. In Proceedings of the 2010 IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Rotterdam, The Netherlands, 14–17 April 2010; pp. 29–32.

- Abdallah, M.B.; Malek, J.; Krissian, K.; Tourki, R. An automated vessel segmentation of retinal images using multiscale vesselness. In Proceedings of the Eighth International Multi-Conference on Systems, Signals & Devices, Sousse, Tunisia, 22–25 March 2011; pp. 1–6.

- Rattathanapad, S.; Mittrapiyanuruk, P.; Kaewtrakulpong, P.; Uyyanonvara, B.; Sinthanayothin, C. Vessel extraction in retinal images using multilevel line detection. In Proceedings of the 2012 IEEE-EMBS International Conference on Biomedical and Health Informatics, Hong Kong, China, 5–7 January 2012; pp. 345–349.

- Kundu, A.; Chatterjee, R.K. Retinal vessel segmentation using Morphological Angular Scale-Space. In Proceedings of the 2012 Third International Conference on Emerging Applications of Information Technology, Kolkata, India, 30 November–1 December 2012; pp. 316–319.

- Frucci, M.; Riccio, D.; Baja, G.S.D.; Serino, L. Using Contrast and Directional Information for Retinal Vessels Segmentation. In Proceedings of the 2014 Tenth International Conference on Signal-Image Technology and Internet-Based Systems, Marrakech, Morocco, 23–27 November 2014; pp. 592–597.

- Jiang, Z.; Yepez, J.; An, S.; Ko, S. Fast, accurate and robust retinal vessel segmentation system. Biocybern. Biomed. Eng. 2017, 37, 412–421.

- Dizdaro, B.; Ataer-Cansizoglu, E.; Kalpathy-Cramer, J.; Keck, K.; Chiang, M.F.; Erdogmus, D. Level sets for retinal vasculature segmentation using seeds from ridges and edges from phase maps. In Proceedings of the 2012 IEEE International Workshop on Machine Learning for Signal Processing, Santander, Spain, 23–26 September 2012; pp. 1–6.

- Jin, Z.; Zhaohui, T.; Weihua, G.; Jinping, L. Retinal vessel image segmentation based on correlational open active contours model. In Proceedings of the 2015 Chinese Automation Congress (CAC), Wuhan, China, 27–29 November 2015; pp. 993–998.

- Gongt, H.; Li, Y.; Liu, G.; Wu, W.; Chen, G. A level set method for retina image vessel segmentation based on the local cluster value via bias correction. In Proceedings of the 2015 8th International Congress on Image and Signal Processing (CISP), Shenyang, China, 14–16 October 2015; pp. 413–417.

- Jiang, X.; Mojon, D. Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 131–137.

- Akram, M.U.; Tariq, A.; Khan, S.A. Retinal image blood vessel segmentation. In Proceedings of the 2009 International Conference on Information and Communication Technologies, Doha, Qatar, 15–16 August 2009; pp. 181–192.

- Christodoulidis, A.; Hurtut, T.; Tahar, H.B.; Cheriet, F. A multi-scale tensor voting approach for small retinal vessel segmentation in high resolution fundus images. Comput. Med. Imaging Graph. 2016, 52, 28–43.

- Nekovei, R.; Ying, S. Back-propagation network and its configuration for blood vessel detection in angiograms. IEEE Trans. Neural Netw. 1995, 6, 64–72.

- Salem, S.A.; Salem, N.M.; Nandi, A.K. Segmentation of retinal blood vessels using a novel clustering algorithm. In Proceedings of the 2006 14th European Signal Processing Conference, Florence, Italy, 4–8 September 2006; pp. 1–5.

- Xie, S.; Nie, H. Retinal vascular image segmentation using genetic algorithm Plus FCM clustering. In Proceedings of the 2013 Third International Conference on Intelligent System Design and Engineering Applications (ISDEA), Hong Kong, China, 16–18 January 2013; pp. 1225–1228.

- Akhavan, R.; Faez, K. A Novel Retinal Blood Vessel Segmentation Algorithm using Fuzzy segmentation. Int. J. Electr. Comput. Eng. 2014, 4, 561.

- Emary, E.; Zawbaa, H.M.; Hassanien, A.E.; Schaefer, G.; Azar, A.T. Retinal vessel segmentation based on possibilistic fuzzy c-means clustering optimised with cuckoo search. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 1792–1796.

- Maji, D.; Santara, A.; Ghosh, S.; Sheet, D.; Mitra, P. Deep neural network and random forest hybrid architecture for learning to detect retinal vessels in fundus images. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3029–3032.

- Sharma, S.; Wasson, E.V. Retinal Blood Vessel Segmentation Using Fuzzy Logic. J. Netw. Commun. Emerg. Technol. 2015, 4.

- Roy, A.G.; Sheet, D. DASA: Domain Adaptation in Stacked Autoencoders using Systematic Dropout. arXiv, 2016; arXiv:1603.06060.

- Lahiri, A.; Roy, A.G.; Sheet, D.; Biswas, P.K. Deep neural ensemble for retinal vessel segmentation in fundus images towards achieving label-free angiography. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1340–1343.

- Maji, D.; Santara, A.; Mitra, P.; Sheet, D. Ensemble of deep convolutional neural networks for learning to detect retinal vessels in fundus images. arXiv, 2016; arXiv:1603.04833.

- Liskowski, P.; Krawiec, K. Segmenting Retinal Blood Vessels With Deep Neural Networks. IEEE Trans. Med. Imaging 2016, 35, 2369–2380.

- Fraz, M.M.; Remagnino, P.; Hoppe, A.; Uyyanonvara, B.; Rudnicka, A.R.; Owen, C.G.; Barman, S.A. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans. Biomed. Eng. 2012, 59, 2538–2548.

- Dasgupta, A.; Singh, S. A Fully Convolutional Neural Network based Structured Prediction Approach Towards the Retinal Vessel Segmentation. arXiv, 2016; arXiv:1611.02064.

- Huiqi, L.; Hsu, W.; Mong Li, L.; Tien Yin, W. Automatic grading of retinal vessel caliber. IEEE Trans. Biomed. Eng. 2005, 52, 1352–1355.

- Yu, H.; Barriga, S.; Agurto, C.; Nemeth, S.; Bauman, W.; Soliz, P. Automated retinal vessel type classification in color fundus images. Proc. SPIE 2013, 8670.

- Ma, Z.; Li, H. Retinal vessel profiling based on four piecewise Gaussian model. In Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015; pp. 1094–1097.

- Zhu, T. Fourier cross-sectional profile for vessel detection on retinal images. Comput. Med. Imaging Graph. 2010, 34, 203–212.

- Lenskiy, A.A.; Lee, J.S. Rugged terrain segmentation based on salient features. In Proceedings of the ICCAS 2010, Gyeonggi-do, Korea, 27–30 October 2010; pp. 1737–1740.

- Salem, N.M.; Nandi, A.K. Unsupervised Segmentation of Retinal Blood Vessels Using a Single Parameter Vesselness Measure. In Proceedings of the 2008 Sixth Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 528–534.

- Roerdink, J.B.; Meijster, A. The watershed transform: Definitions, algorithms and parallelization strategies. Fundam. Inform. 2000, 41, 187–228.

- Serra, J. Image Analysis and Mathematical Morphology, v. 1; Academic Press: Cambridge, MA, USA, 1982.

- Lindeberg, T. Scale-space theory: A basic tool for analyzing structures at different scales. J. Appl. Stat. 1994, 21, 225–270.

- Babaud, J.; Witkin, A.P.; Baudin, M.; Duda, R.O. Uniqueness of the Gaussian Kernel for Scale-Space Filtering. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 26–33.

- Lindeberg, T. Scale-Space Theory in Computer Vision; Springer Science & Business Media: New York, NY, USA, 2013; Volume 256.

- Burt, P.; Adelson, E. The Laplacian Pyramid as a Compact Image Code. IEEE Trans. Commun. 1983, 31, 532–540.

- Crowley, J.L.; Parker, A.C. A Representation for Shape Based on Peaks and Ridges in the Difference of Low-Pass Transform. IEEE Trans. Pattern Anal. Mach. Intell. 1984, PAMI-6, 156–170.

- Klinger, A. Patterns and search statistics. Optim. Methods Stat. 1971, 3, 303–337.

- Tankyevych, O.; Talbot, H.; Dokladal, P. Curvilinear morpho-Hessian filter. In Proceedings of the 2008 5th IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Paris, France, 14–17 May 2008; pp. 1011–1014.

- Frangi, A.F.; Niessen, W.J.; Vincken, K.L.; Viergever, M.A. Multiscale vessel enhancement filtering. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 1998; pp. 130–137.

- Albrecht, T.; Lüthi, M.; Vetter, T. Deformable models. Encycl. Biometr. 2015, 337–343.

- McInerney, T.; Terzopoulos, D. Deformable models in medical image analysis: A survey. Med. Image Anal. 1996, 1, 91–108.

- Caselles, V.; Kimmel, R.; Sapiro, G. Geodesic active contours. Int. J. Comput. Vis. 1997, 22, 61–79.

- Sethian, J.A. Analysis of Flame Propagation; LBL-14125; Lawrence Berkeley Lab.: Berkeley, CA, USA; p. 139.

- Sethian, J.A. Curvature and the evolution of fronts. Commun. Math. Phys. 1985, 101, 487–499.

- Foracchia, M.; Grisan, E.; Ruggeri, A. Luminosity and contrast normalization in retinal images. Med. Image Anal. 2005, 9, 179–190.

- Kingsbury, N. The dual-tree complex wavelet transform: A new efficient tool for image restoration and enhancement. In Proceedings of the 9th European Signal Processing Conference (EUSIPCO 1998), Rhodes, Greece, 8–11 September 1998; pp. 1–4.

- Nguyen, U.T.V.; Bhuiyan, A.; Park, L.A.F.; Ramamohanarao, K. An effective retinal blood vessel segmentation method using multi-scale line detection. Pattern Recognit. 2013, 46, 703–715.

- Van Antwerpen, G.; Verbeek, P.; Groen, F. Automatic counting of asbestos fibres. In Proceedings of the Signal Processing III: Theories and Applications: Proceedings of EUSIPCO-86, Third European Signal Processing Conference, The Hague, The Netherlands, 2–5 September 1986; pp. 891–896.

- Medioni, G.; Lee, M.-S.; Tang, C.-K. A Computational Framework for Segmentation and Grouping; Elsevier: Holland, The Netherlands, 2000.

- Medioni, G.; Kang, S.B. Emerging Topics in Computer Vision; Prentice Hall PTR: Upper Saddle River, NJ, USA, 2004.

- Arneodo, A.; Decoster, N.; Roux, S. A wavelet-based method for multifractal image analysis. I. Methodology and test applications on isotropic and anisotropic random rough surfaces. Eur. Phys. J. B Condens. Matter Complex Syst. 2000, 15, 567–600.

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics); Springer: New York, NY, USA, 2006.

- Liu, J.; Sun, J.; Wang, S. Pattern recognition: An overview. Int. J. Comput. Sci. Netw. Secur. 2006, 6, 57–61.

- Skolidis, G. Transfer Learning with Gaussian Processes. Ph.D. Thesis, The University of Edinburgh, Edinburgh, UK, 2012.

- Kochenderfer, M.J. Adaptive Modelling and Planning for Learning Intelligent Behaviour. Ph.D. Thesis, The University of Edinburgh, Edinburgh, UK, 2006.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Krishnapuram, R.; Keller, J.M. A possibilistic approach to clustering. IEEE Trans. Fuzzy Syst. 1993, 1, 98–110.

- Pal, N.R.; Pal, K.; Keller, J.M.; Bezdek, J.C. A possibilistic fuzzy c-means clustering algorithm. IEEE Trans. Fuzzy Syst. 2005, 13, 517–530.

| Method | Year | Image Processing Technique | Performance Metric | Validation Dataset | Technique Category |

|---|---|---|---|---|---|

| Chaudhuri et al. [48] | 1989 | Two-dimensional Gaussian matched filter | - | - | Kernel-based |

| Chanwimaluang and Fan [49] | 2003 | Gaussian matched filter + entropy adaptive thresholding | - | STARE | |

| Al-Rawi et al. [50] | 2007 | Gaussian matched filter with modified parameters | ROC 1 | DRIVE | |

| Villalobos-Castaldi et al. [51] | 2010 | Gaussian matched filter + entropy adaptive thresholding | Acc 2, Sp 3, Se 4 | DRIVE | |

| Zhang et al. [52] | 2010 | Two kernels: Gaussian + FDOG 5 | Acc, FPR 6 | DRIVE, STARE | |

| Zhu and Schaefer [53] | 2011 | Piece-wise Gaussian scaled model | - | - | |

| Kaur and Sinha [54] | 2012 | Filter Kernel: Gabor filter | ROC | DRIVE, STARE | |

| Odstrcilik et al. [47] | 2013 | Improved t-dimensional Gaussian matched filter. | Acc, Sp, Se | DRIVE, STARE | |

| Zolfagharnasab et al. [55] | 2014 | Filter kernel: Caushy Probability Density Function | Acc, FPR | DRIVE | |

| Singh et al. [56] | 2015 | Modified Gaussian matched filter + Entropy thresholding | Acc, Sp, Se | DRIVE | |

| Kumar et al. [57] | 2016 | Filter Kernel: Laplacian of Gaussian | Acc, Sp, Se | DRIVE, STARE | |

| Singh and Strivastava [58] | 2016 | Filter kernel: Gumbel Probability Density Function. | Acc, ROC | DRIVE, STARE | |

| Chutatape et al. [59] | 1998 | Vessel tracking by Kalman filter and matched Gaussian | - | - | Vessel tracking |

| Sofka and Stewar [60] | 2006 | Vessel tracking by matched filter responses + confidence | (1-Precision) | DRIVE, STARE | |

| measures + vessel boundaries measure. | versus Recall curve | ||||

| Adel et al. [61] | 2009 | Bayesian vessel tracking. | SMF 7 | Simulated | |

| Dataset + 20 | |||||

| Images at | |||||

| Marseille | |||||

| University | |||||

| Wu et al. [62] | 2007 | Vessel tracking by matched filters + Hessian matrix. | Se, FPR | DRIVE, STARE | |

| Yedidya and Hartley [63] | 2008 | Vessel tracking by Kalman filter. | TPR 8, FNR 9 | DRIVE | |

| Yin et al. [64] | 2010 | Statistical-based vessel tracing | TPR, FPR | DRIVE | |

| Li et al. [65] | 2013 | Vessel tracking by Bayesian theory. | - | - | |

| De et al. [23] | 2016 | Vessel tracking using mathematical graph theory. | GFPR 10 | DRIVE, STARE | |

| Budai et al. [66] | 2010 | Gaussian pyramid multi-scaling. | Acc, Sp, Se | DRIVE, STARE | Multi-scale |

| Moghimirad et al. [67] | 2010 | Multi-scale based on weighted medialness function. | ROC, Acc | DRIVE, STARE | |

| Abdallah et al. [68] | 2011 | Multi-scale based on Anisotropic diffusion. | ROC | STARE | |

| Rattathanapad et al. [69] | 2012 | Multi-scale based on line primitives. | FPR | DRIVE | |

| Kundu and Chatterjee [70] | 2012 | Morphological Angular Scale-space | MSE¹¹ | DRIVE | Morphological-Based |

| Frucci et al. [71] | 2014 | Watershed transform + Contrast and directional Maps. | Acc, Precision | DRIVE | |

| Jiang et al. [72] | 2017 | Global thresholding based on morphological operations. | Acc, Execution time | DRIVE, STARE | |

| Dizdaro et al. [73] | 2012 | Level set in terms of initialization and edge detection. | Acc, Sp, Se | DRIVE, Proposed Dataset | Deformable Model |

| Jin et al. [74] | 2015 | Snakes contours | Acc, Sp, Se | DRIVE | |

| Zhao et al. [31] | 2015 | Infinite perimeter active contour with hybrid region terms. | Acc, Sp, Se | DRIVE, STARE | |

| Gong et al. [75] | 2015 | Level set without using local region area. | Acc, Sp, Se | DRIVE | |

| Jiang and Mojon [76] | 2003 | Knowledge-guided local adaptive thresholding | TPR, FPR | STARE | Adaptive Local Thresholding |

| Filter response analysis | | | | | |

| Akram et al. [77] | 2009 | Statistical-based adaptive thresholding. | Acc, ROC | DRIVE | |

| Christodoulidis et al. [78] | 2016 | Local adaptive thresholding based on multi-scale tensor voting | Acc, Sp, Se | Erlangen Dataset | |

| Nekovei and Ying [79] | 1995 | Back propagation ANN¹² | Se | - | Machine Learning |

| Salem et al. [80] | 2006 | K-nearest neighbors (KNN) | Se, Sp | STARE | |

| Xie and Nie [81] | 2013 | Genetic Algorithm + Fuzzy c-means | - | DRIVE | |

| Akhavan and Faez [82] | 2014 | Vessel Tracking + Fuzzy c-means | Acc | DRIVE, STARE | |

| Emary et al. [83] | 2014 | Possibilistic version of fuzzy c-means optimized with Cuckoo search algorithm | Acc, Sp, Se | DRIVE, STARE | |

| Maji et al. [84] | 2015 | Hybrid framework of deep ANNs and Ensemble Learning. | Acc | DRIVE | |

| Gu and Cheng [22] | 2015 | Iterative Latent classification tree | Acc | DRIVE, STARE | |

| Sharma and Wasson [85] | 2015 | Fuzzy Logic | Acc | DRIVE | |

| Roy et al. [86] | 2016 | Denoised stacked auto-encoder ANN | ROC | DRIVE, STARE | |

| Lahiri et al. [87] | 2016 | Ensemble of two parallel levels of stacked denoised auto-encoder ANNs | Acc | DRIVE | |

| Maji et al. [88] | 2016 | Ensemble of 12 convolutional ANNs | Acc | DRIVE | |

| Maninis et al. [24] | 2016 | Deep Convolutional ANNs. | Area under Recall-Precision Curve | DRIVE, STARE | |

| Liskowski et al. [89] | 2016 | Deep ANNs | ROC, Acc | DRIVE, STARE, CHASE [90] | |

| Dasgupta and Singh [91] | 2016 | Convolutional ANNs | ROC, Se, Acc, Sp | DRIVE | |
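Most rows in the table report pixel-wise scores such as Acc, Se, Sp, FPR and FNR. As a minimal sketch of how these quantities relate to one another, assuming a binary predicted vessel mask and a binary manual annotation of the same shape, the standard confusion-matrix definitions can be written as follows. The function name `vessel_metrics` and the toy 4×4 masks are illustrative only and do not come from any of the surveyed papers; individual papers may also restrict the evaluation to the field of view, which this sketch does not do.

```python
import numpy as np

def vessel_metrics(pred, truth):
    """Pixel-wise scores for a binary vessel mask against a manual annotation.

    Assumes both masks have the same shape and that the annotation contains
    at least one vessel pixel and one background pixel (no zero division guard).
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = int(np.sum(pred & truth))    # vessel pixels correctly detected
    tn = int(np.sum(~pred & ~truth))  # background pixels correctly rejected
    fp = int(np.sum(pred & ~truth))   # background pixels marked as vessel
    fn = int(np.sum(~pred & truth))   # vessel pixels that were missed

    return {
        "Acc": (tp + tn) / (tp + tn + fp + fn),  # accuracy
        "Se": tp / (tp + fn),                    # sensitivity (equals TPR)
        "Sp": tn / (tn + fp),                    # specificity (equals 1 - FPR)
        "FPR": fp / (fp + tn),                   # false positive rate
        "FNR": fn / (fn + tp),                   # false negative rate
    }

# Toy example (hypothetical data): 4 annotated vessel pixels, 4 predicted.
truth = np.array([[1, 1, 0, 0],
                  [0, 1, 0, 0],
                  [0, 0, 0, 0],
                  [0, 0, 0, 1]])
pred = np.array([[1, 0, 0, 0],
                 [0, 1, 1, 0],
                 [0, 0, 0, 0],
                 [0, 0, 0, 1]])

metrics = vessel_metrics(pred, truth)
print(metrics)
```

Because background pixels greatly outnumber vessel pixels in a fundus image, Acc and Sp tend to be high even for weak segmentations, which is one reason many rows report Se alongside them.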

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Almotiri, J.; Elleithy, K.; Elleithy, A. Retinal Vessels Segmentation Techniques and Algorithms: A Survey. Appl. Sci. 2018, 8, 155. https://doi.org/10.3390/app8020155
