1. Introduction

Rapid development in micro-electro-mechanical systems (MEMS), embedded computing, and wireless communication technologies has provided us with low-power, low-cost, and multifunctional sensor nodes. These sensor nodes can be organized to form networks called wireless sensor networks (WSNs) [1]. Each sensor node can be equipped with visual and audio information collection modules such as microphones and video cameras. This has encouraged the development of wireless multimedia sensor networks (WMSNs) [2]. WMSNs consist of a large number of embedded devices that are equipped with low-power cameras. These camera nodes are able to retrieve multimedia content from the environment at variable rates and transmit the captured information through multi-hop communication to a base station [3,4]. WMSNs have generated much interest in recent years, and it is predicted that WMSNs will become useful in our daily life [5,6,7]. WMSNs have been widely applied to healthcare [5], video-based environment surveillance [6], biometric tracking [7], and habitat monitoring [8]. Extensive studies have been carried out in recent years on the physical layer [9], the media access control layer [10,11], the network layer [12], and the transport layer [13,14] in WMSNs.

Recently, there has been substantial research and extensive development in solving wireless multimedia sensor network challenges. Several issues must be addressed in WMSNs, such as high packet loss rate, mobility, shared channel, limited bandwidth, highly variable delays, and lack of fixed infrastructure [15,16]. However, the main issue of enabling real-time video streaming in multi-hop WMSNs of embedded devices is still open and largely unexplored. WMSNs produce a large amount of video data; therefore, the probability of congestion in WMSNs is higher than in low-speed wireless sensor networks. Congestion degrades the overall performance of the network and reduces reliability through packet loss. Therefore, wireless multimedia transmission with guaranteed packet delivery in WMSNs is of substantial significance due to the higher data rate requirements.

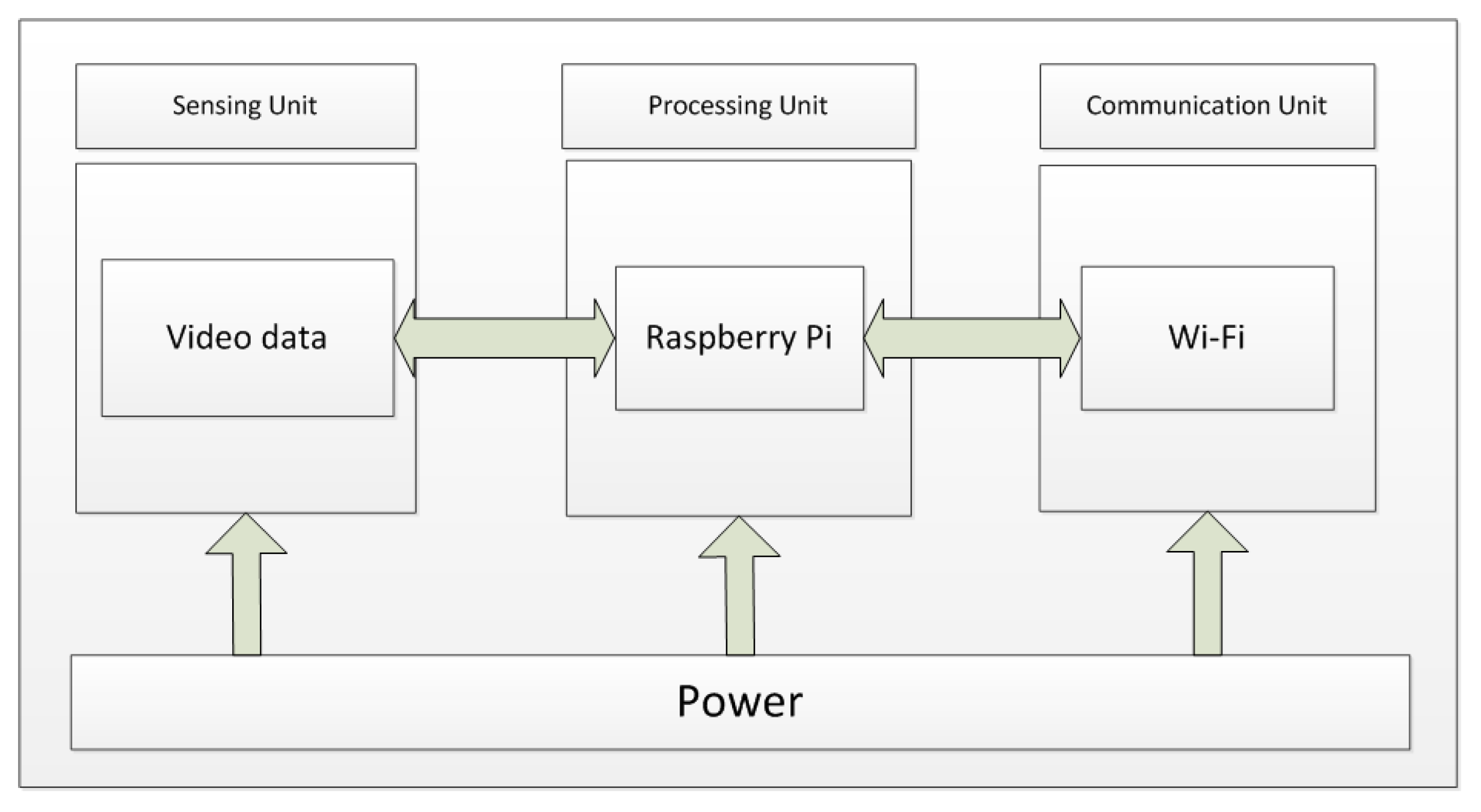

This paper focuses on the most challenging case, in which multimedia data is transferred from a source node to a sink node through multi-hop communication. We describe efforts that involve the system design and implementation of an embedded platform based on Raspberry Pi (RPi) sensor nodes. In this paper, we discuss the design and implementation of IVSP deployed for surveillance applications; our intention is to ensure the reliable delivery of video data from collections of sensors to a sink while avoiding congestion collapse. This video monitoring data may need to be played back later. Therefore, when transmission fails, data packets should not simply be discarded. To the best of our knowledge, video surveillance applications have not been addressed in this context before.

The major contributions of this article are summarized as follows: (1) We design a cost-effective and computationally intelligent wireless video sensor network platform over multi-hop WMSNs. Our platform consists of 7 WMSN nodes, and each node is built on a Raspberry Pi (RPi); (2) To measure congestion precisely, we introduce a combination of two different congestion indicators to differentiate congestion levels and handle them correspondingly; (3) We present a method in which each source node maintains its data sending rate and periodically updates it according to its parent's congestion level. Each node has a unique IP address and can establish a routing path from itself to the sink through multi-hop communication; (4) We propose different retransmission mechanisms for different packets. Lost packets can be stored temporarily and retransmitted when congestion eases; (5) The proposed platform is flexible, scalable, and suitable for wireless monitoring of buildings, open terrain, remote areas, etc.

The remainder of this paper is organized as follows. Section 2 reviews the related work. Section 3 describes the WMSN system. Section 4 briefly describes the design and implementation. Section 5 presents the experimental environment, including parameter selection, optimization, and performance evaluation. Section 6 concludes our work.

2. Related Work

In recent years, there have been studies on congestion control, reliable transmission, and congestion mitigation in WMSNs [17,18,19], but few of them account for the timing constraints of realistic hardware. Transmission control protocol (TCP) provides a well-known rate control scheme [20,21]. TCP applies an additive-increase, multiplicative-decrease (AIMD) algorithm. When the media rate approaches the bottleneck capacity, the encoding rate also exhibits sawtooth behavior. An algorithm that constantly generates sawtooth behavior is not suitable for multimedia communication [22]. This rate variation in TCP results in poor video quality [23]. Numerous equation-based rate control schemes have also been investigated [24]. TCP friendly rate control (TFRC) is one of them [25]. TFRC is an equation-based congestion control algorithm that uses the throughput equation of TCP Reno. However, TFRC needs feedback on a per-packet basis, which produces increases or decreases in the media rate (a sawtooth) over very short durations [26]. Moreover, in WMSNs, priority should be given to delay-sensitive data instead of delay-tolerant flows. For these reasons, in this paper, we do not consider TCP fairness for our scheme.
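To make the sawtooth behavior of AIMD concrete, the following minimal Python sketch traces the sending rate over a fixed-capacity bottleneck. It is only an illustration: the function name `aimd_trace`, the step sizes, and the rule that a loss is inferred whenever the rate exceeds the capacity are assumptions for this example, not TCP's actual state machine.

```python
def aimd_trace(capacity, rounds, increase=1.0, decrease=0.5, start=1.0):
    """Return the per-round sending rate under additive increase,
    multiplicative decrease (illustrative parameters)."""
    rate = start
    trace = []
    for _ in range(rounds):
        trace.append(rate)
        if rate > capacity:
            # Loss is assumed once the rate exceeds the bottleneck:
            # cut the rate multiplicatively.
            rate *= decrease
        else:
            # No loss: probe for more bandwidth additively.
            rate += increase
    return trace


trace = aimd_trace(capacity=10.0, rounds=30)
```

The trace climbs linearly until it overshoots the capacity and then drops to half, which is the rate oscillation that degrades video quality.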

Existing work in congestion control can be divided into two categories. First, we describe protocols with a centralized congestion control scheme. In centralized congestion control schemes, all the actions related to avoiding or controlling congestion are undertaken by the sink node. In a typical centralized congestion control scheme, the sink periodically collects data from the sensor nodes, detects the possibility of congestion, and accordingly sends messages to the involved sensors to overcome congestion. Rate-controlled reliable transport protocol (RCRT) is one example of a centralized congestion control scheme [27]. In RCRT, all functions including rate allocation, rate adaptation, and congestion detection are applied at the sink node. RCRT uses a negative acknowledgement (NACK) based technique to implement an end-to-end explicit loss recovery mechanism. However, RCRT converges very slowly when the network has extremely unstable RTTs. Quasi-static centralized rate allocation for sensor networks (QCRA) is a centralized rate allocation scheme that assigns a fair and efficient rate to each node given the link loss rate information, topology, and routing tree information [28]. This scheme determines the traffic levels in the vicinity of a node by computing a TDMA schedule among all neighbors of that node. The node with the highest traffic level determines the fair rate allocation. The authors also derive a rate adaptation parameter by observing the behavior of the network during an epoch. Event-to-sink reliable transport protocol (ESRT) distinguishes five operating regions of the network [29]. Rate allocation is centrally determined in ESRT. In ESRT, the sending rate is regulated in such a way that packets reach the sink node without generating congestion. Due to the disadvantages of centralized methods, transient congestion is difficult to manage in ESRT. Centralized congestion control routing protocol based on multi-metrics (CCRPM) [30] combines the residual energy of a node, buffer occupancy rate, wireless link quality, and the current number of sub-nodes of the candidate parent to reduce the probability of network congestion during network construction. In addition, when network congestion occurs, it decides centrally, based on traffic analysis, whether the sub-nodes of the congested node need to be switched.

Second, we describe protocols with a distributed congestion control mechanism. Congestion detection and avoidance (CODA) uses a congestion mitigation technique [31]. For persistent congestion, the source needs to regulate its data rate using feedback from the sink. For transient congestion, every sensor node observes buffer occupancy level and channel utilization to detect congestion. CODA proposes a closed-loop multi-source regulation technique and an open-loop hop-by-hop backpressure strategy. Fusion uses a prioritized MAC, hop-by-hop flow control, and rate limiting to ease congestion [32]. Fusion achieves better fairness and higher goodput under heavy loads than previous techniques. Unlike CODA, Fusion explicitly focuses on per-source fairness. Our previous work, enhanced congestion detection and avoidance (ECODA), uses a dual-buffer-based congestion detection method [33]. ECODA distinguishes route-through traffic from locally generated traffic for queue control. In ECODA, packets are dropped according to their priorities to control congestion. Both the congestion control and fairness (CCF) routing scheme [34] and the interference-aware fair rate control (IFRC) protocol [35] provide fairness. CCF presents two schemes to ensure fairness: one is epoch-based proportional selection, and the other is probabilistic selection. IFRC employs multi-level buffer thresholds. When buffer occupancy goes beyond a certain limit, IFRC reduces the sending rate and keeps buffer occupancy below that limit. Aghdam et al. [36] propose a congestion control protocol for WMSNs (WCCP). WCCP uses the Recipient Congestion Control Protocol (RCCP) at the intermediate nodes and the Source Congestion Avoidance Protocol (SCAP) at the source nodes. RCCP uses buffer length to detect congestion, while SCAP uses the group of pictures (GOP) to avoid congestion. In addition, WCCP protects only I-frame data packets and discards less important data packets under congestion. Sergiou et al. [37] propose a hierarchical tree alternative path scheme for WSNs (HTAP). Their work provides reliability and minimizes congestion by informing other nodes about congestion. When congestion is imminent, HTAP transmits the data securely by creating substitute routes from source to destination. The HTAP algorithm consists of four major parts: Flooding with Level Discovery Functionality, the Alternative Path Creation Algorithm, the Hierarchical Tree Algorithm, and the Handling of Powerless (Dead) Nodes. Siphon [38] provides congestion avoidance, congestion detection, and application fidelity by using virtual sinks (VSs) with multiple radios in the sensor network. VSs tunnel traffic events that show signs of high traffic load. Siphon employs a combination of end-to-end and hop-by-hop congestion control based on the location of congestion. Under congestion, Siphon adopts the end-to-end congestion control approach between the virtual sinks and the sink, and the hop-by-hop method between source nodes and the virtual sinks. Gholipour et al. [39] propose a dynamic and distributed hop-by-hop congestion control scheme that controls congestion by adjusting the data rate of nodes. They use a virtual gradient field to provide a trade-off between the shortest possible path and the congested path. Sergiou et al. [40] propose a dynamic alternative path selection scheme for wireless sensor networks (DAlPaS). DAlPaS uses channel interference, node energy, and buffer occupancy to detect congestion. It dynamically routes the traffic and selects the shortest path to avoid congestion. DAlPaS consists of two stages: a hard stage and a soft stage. In the hard stage, the network protects the receiving node from congestion by forcing the flows to change their paths. In the soft stage, each node receives data from only one stream to avoid congestion. Brahma et al. [41] present a distributed congestion control algorithm that adaptively assigns a fair and efficient transmission rate to all nodes. Each node monitors and controls its aggregate input and output traffic rate. A node decides to increase or decrease the bandwidth based on the difference between input and output traffic rates.

The main idea of the above-mentioned work is to classify packets by priority and to guarantee the transmission of high-priority packets through resource assignment. However, these schemes do not consider low-priority packets, which may sometimes be lost because no mechanism protects them.

4. Design Implementation

4.1. The Formation and Transmission of Video Packet

Our aim is to send video streams with high frame rate and good quality. For these reasons, IVSP uses the H.264 standard for video compression. The server application generates a video stream from either a live or a stored source and converts the video data into H.264 streams. According to the H.264 standard, there are I, P, and B frames. We do not use B frames in our experiments, because the encoding and decoding of these frames depend on the next frame. In our experiments, we use I frames and P frames. The priority of an I frame is always much higher than that of a P frame. The length of a packet should be adjusted so that it fulfills the requirements of transmission. If a packet carries a very small amount of data, every node needs to transmit more often. This increases the probability of congestion, energy waste, and collisions in the wireless channel. If a packet carries a large amount of data, which may consist of many frames, every packet has to wait until all its video frames are produced. This causes more delay. Therefore, the packet length must be chosen appropriately.

We assume that the maximum packet length is l. If the length of the video data goes beyond this threshold, the data is divided into several packets. A packet is an I frame packet if it contains I frame data; otherwise, it is a P frame packet. Wireless transmission is unreliable, so packet loss is to be expected. The loss of an I frame packet results in the loss of all dependent P frame packets, because they cannot be decoded properly and become useless. If P frame packets are lost, video decoding carries on with some disturbances in the video data. If I frame packets are retransmitted very quickly, the consequences of lost packets will not be prominent. Therefore, retransmission of I frame packets is very important for good-quality video data.
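The packet formation rule can be sketched as follows. This is a minimal Python illustration, not the authors' C implementation: the `Packet` fields, the function name `packetize`, and the choice of one frame per packet unless the frame exceeds the maximum length are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int          # sequence number (hypothetical field)
    frame_type: str   # "I" or "P"
    payload: bytes


def packetize(frames, max_len):
    """Split each (frame_type, data) pair into packets of at most max_len bytes.

    A packet inherits the type of the frame whose data it carries.
    """
    packets = []
    seq = 0
    for frame_type, data in frames:
        for offset in range(0, len(data), max_len):
            packets.append(Packet(seq, frame_type, data[offset:offset + max_len]))
            seq += 1
    return packets


# Example: a 2500-byte I frame and a 400-byte P frame with l = 1000 bytes.
packets = packetize([("I", b"x" * 2500), ("P", b"y" * 400)], max_len=1000)
```

In this example the I frame is carried by three I frame packets, so losing any one of them affects the decoding of the P frame that follows.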

4.2. Queue Scheduler

As discussed in Section 4.1, different kinds of packets have different retransmission requirements. According to their degree of significance, packets can be divided into four priorities from high to low: I frame packets have the highest priority, P frame packets have high priority, lost I frame packets that need retransmission have low priority, and lost P frame packets that need retransmission have the lowest priority.

Figure 4 shows the packet storage and transmission process at a sensor node. When a node generates or receives a data packet, the packet is moved to the I frame packet queue or the P frame packet queue depending on its type. These two queues temporarily store unconfirmed packets, which wait there for retransmission if needed. I frame packets are of significant importance in supporting real-time traffic. Therefore, we give the highest priority to I frame packets, because our main aim is to protect them. If an I frame packet is lost, it goes into the lost I frame queue, and if a P frame packet is lost, it goes into the lost P frame queue. In our experiments, a weighted round-robin scheduler is used to handle traffic classes with different priorities efficiently [42]. In the weighted round-robin scheduler, each queue is allocated a weight, that is, the number of slots it is assigned during each round. A traffic source with a higher weight receives more network bandwidth than a traffic source with a lower weight.
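A weighted round-robin pass over the four queues can be sketched as below. This is a generic Python illustration of the scheduling discipline, not the authors' implementation; the function name, the packet labels, and the weights (2, 2, 1, 1) are assumptions chosen only to make the interleaving visible.

```python
from collections import deque


def weighted_round_robin(queues, weights):
    """Yield packets, giving queue i up to weights[i] slots in each round."""
    while any(queues):  # a non-empty deque is truthy
        for queue, weight in zip(queues, weights):
            for _ in range(weight):
                if not queue:
                    break  # unused slots pass to the next queue
                yield queue.popleft()


# Queues in priority order: I frame, P frame, lost I frame, lost P frame.
queues = [
    deque(["I1", "I2", "I3"]),
    deque(["P1", "P2"]),
    deque(["LI1"]),
    deque(["LP1", "LP2"]),
]
order = list(weighted_round_robin(queues, weights=[2, 2, 1, 1]))
# order == ["I1", "I2", "P1", "P2", "LI1", "LP1", "I3", "LP2"]
```

Higher weights give the I and P frame queues more slots per round, while the lost-packet queues still make progress instead of being starved.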

4.3. Congestion Detection

To measure congestion in wireless sensor networks, we present a combination of two different congestion indicators to accurately detect congestion at each sensor node. The first indicator is buffer occupancy T, and the second indicator is buffer occupancy change rate δ. Buffer occupancy is a very important indicator of congestion. It is adopted to evaluate the amount of buffer occupied by packets at a node along the end-to-end transmission path. The advantage of buffer occupancy is that congestion at each node can be directly and quickly detected. Buffer occupancy T falls into one of three states: normal state, slow state, and urgent state, as shown in Figure 5. To distinguish between the buffer states, we use two thresholds, T1 and T2, as shown in Figure 5. Buffer occupancy change rate δ is used to detect network congestion and is computed from the buffer occupancy in the current round and in the last round. All priority queues have the same buffer size. The buffer occupancy change rate reflects the tendency of buffer occupancy. For a given buffer occupancy, the larger the value of δ, the higher the probability of queue overflow. Similarly, a negative δ shows that congestion has been alleviated and buffer occupancy is reduced. According to these two congestion indicators, a sensor node has a total of three states.

4.3.1. Normal State

Initially, the buffer of a sensor node is empty. When a packet arrives, the sensor node immediately switches to the normal state. The buffer occupancy during this state is within [0, T1], and the buffer occupancy change rate is less than ρ, where ρ is a predefined limit of δ. All packets are delivered successfully; therefore, queue utilization is low.

4.3.2. Slow State

The sensor node is in the slow state when its buffer occupancy is within [T1, T2]. During this state, the traffic around the node is close to congestion. A large amount of data is injected into the buffer, so the buffer occupancy increases rapidly until it exceeds T1. The node then transfers to the slow state, which indicates that it may become congested soon, and activates the rate adjustment algorithm, which will be described later.

4.3.3. Urgent State

In this state, congestion happens at the sensor node, and packets have a probability of being lost. The buffer occupancy fluctuates above T2, regardless of the value of δ. In this state, the children of the sensor node should slow down their sending rates to transmit a smaller amount of data to the sensor node. Usually only high-priority packets are buffered, because the parent's queue utilization is too high.
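The three states can be summarized in a small Python sketch. It rests on several assumptions that the text does not fix: the change rate is taken as the difference between current and last occupancy normalized by the buffer size, an occupancy exactly at a threshold is assigned to the higher state, and a node below T1 whose change rate reaches ρ is treated as slow. The function names and the numeric thresholds are hypothetical.

```python
NORMAL, SLOW, URGENT = "normal", "slow", "urgent"


def change_rate(current, last, buffer_size):
    """Buffer occupancy change rate between two rounds.

    Assumed form: occupancy difference normalized by the buffer size.
    """
    return (current - last) / buffer_size


def classify(occupancy, delta, t1, t2, rho):
    """Map the two congestion indicators to one of the three node states."""
    if occupancy >= t2:
        return URGENT  # regardless of the change rate
    if occupancy >= t1 or delta >= rho:
        # Assumption: a fast-growing buffer below T1 is also treated as slow.
        return SLOW
    return NORMAL


# Hypothetical thresholds for a 100-packet buffer.
state = classify(occupancy=40, delta=change_rate(40, 30, 100), t1=30, t2=60, rho=0.2)
```

A negative change rate in the urgent state still yields the urgent classification here, which mirrors the statement that the urgent state holds regardless of δ.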

4.4. Distributed Source Sending Rate Control

Rate control improves the quality of the videos sent through the network. Source nodes should control their rates to reduce packet loss due to buffer overflow. To avoid buffer overflow, sensor nodes should reduce their sending rate if buffer occupancy rises to a level that the network cannot sustain. We present a method in which each source node maintains its data sending rate and periodically updates it according to its neighbor's congestion level. Each node has a unique IP address and can establish a routing path from itself to the sink through multi-hop communication.

In the beginning, the sink node broadcasts the assigned maximum transmission rate to its child through the feedback mechanism. Then, the sink's child calculates the maximum transmission rate for its own child and broadcasts it in turn. After a while, every node has a maximum transmission rate. The goal of this feedback mechanism is to maximize the quality of the played streams. The feedback process analyzes the buffer occupancy at each node and sends feedback information to the sender node. The sender node receives the feedback information, analyzes it, and makes the required adjustments to its transmitted streams. The idea is to modify the quantity of transmitted data according to the feedback information: when the network appears to be heavily loaded, the quantity of transmitted data is reduced; when the network appears to be lightly loaded, the quantity of transmitted data can be increased again. In this way, the source sending rate can be adjusted more efficiently and accurately.

We use β to denote the falling speed of the sending rate each time a state turns to its next slower one. The sending rate at each node is then expressed in terms of β, the minimum sending rate of the node, and the normal sending rate of the state. The normal sending rate, the minimum sending rate, and β can be obtained from the experiments.
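One way such a rate update could look is sketched below in Python. The exact expression used by the scheme is not reproduced here, so this sketch assumes a multiplicative form: the rate is scaled by β once for each step from the normal state toward the urgent state and is never allowed to fall below the minimum sending rate. The function name, the state-to-level mapping, and the numeric values are hypothetical.

```python
# Number of rate-reduction steps applied in each state (assumed mapping).
STATE_LEVEL = {"normal": 0, "slow": 1, "urgent": 2}


def sending_rate(state, r_normal, r_min, beta):
    """Assumed multiplicative rate reduction, floored at the minimum rate."""
    level = STATE_LEVEL[state]
    return max(r_min, r_normal * beta ** level)


# Hypothetical values: 2.0 Mbps normal rate, 0.1 Mbps floor, beta = 0.5.
rates = [sending_rate(s, r_normal=2.0, r_min=0.1, beta=0.5)
         for s in ("normal", "slow", "urgent")]
# rates == [2.0, 1.0, 0.5]
```

Under this assumed form, a smaller β reduces the rate more aggressively, so less data enters the congested buffer.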

4.5. Traffic Control Protocol

Figure 6 shows the retransmission mechanism of IVSP. Traffic control differs between I frame and P frame packet transmission. If the parent node receives a packet, it sends an acknowledgement (ACK) to its child node, and the transmission of that packet is complete. If the parent node does not receive a packet, it returns the sequence number of the lost packet to the child node, and the child node retransmits the lost packet. The child node must be sure to receive these return packets. Each packet needs to be confirmed by both the child node and the parent node in order to protect against packet loss efficiently. If the child node does not receive any confirmation packet, it sends a timeout message to its parent, and the parent node sends the return packet again. If the parent node successfully receives the packet, the packet is immediately removed from the child node's queue. If the parent still cannot receive the requested packets after requesting retransmission several times, it assumes that the packets are lost at its child and does not request them again. The lost packets are transferred to the lost I frame queue or the lost P frame queue, and these packets are retransmitted during the normal state.
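The hand-off from repeated retransmission attempts to the lost-packet queues can be sketched as follows. This Python fragment is a simplified illustration, not the protocol itself: the link is simulated by a callable, timeouts and return packets are folded into a single success-or-failure outcome per attempt, and the names `send_with_retries` and `link_ok` as well as the attempt limit are assumptions.

```python
from collections import deque


def send_with_retries(packet, link_ok, max_attempts, lost_i, lost_p):
    """Try to deliver packet = (seq, frame_type) up to max_attempts times.

    link_ok(attempt) simulates whether the parent acknowledged that attempt.
    Returns the attempt number that succeeded, or None if the packet was
    moved to the matching lost-packet queue.
    """
    for attempt in range(1, max_attempts + 1):
        if link_ok(attempt):
            return attempt  # ACK received: packet leaves the child queue
    # No ACK after all attempts: keep the packet for retransmission later.
    (lost_i if packet[1] == "I" else lost_p).append(packet)
    return None


lost_i, lost_p = deque(), deque()
first = send_with_retries((1, "I"), lambda a: a == 2, 3, lost_i, lost_p)
second = send_with_retries((2, "I"), lambda a: False, 3, lost_i, lost_p)
third = send_with_retries((3, "P"), lambda a: False, 3, lost_i, lost_p)
```

Packets that end up in `lost_i` or `lost_p` are not discarded; they wait there until the node returns to the normal state.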

The lost I frame queue and the lost P frame queue differ in retransmission time and packet priority. The retransmission of a lost I frame packet has low priority, and the retransmission of a lost P frame packet has the lowest priority. The retransmission of these packets should be arranged when the link is not busy, to avoid congestion. When the sink node receives out-of-order packets, it reorders them according to their sequence numbers. If the parent node has lost a few packets, it sends their sequence numbers to the child. If these packets are found, they are retransmitted when congestion is alleviated. If the parent node cannot receive the lost packets after a few attempts, it does not request them again.
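Reordering by sequence number and identifying which packets to request again can be illustrated with a short Python sketch. It assumes contiguous integer sequence numbers and represents each packet as a `(seq, frame_type)` tuple; both choices, and the function name, are made only for this example.

```python
def reorder_and_find_gaps(received):
    """Sort received (seq, frame_type) packets and list missing sequence numbers."""
    ordered = sorted(received, key=lambda packet: packet[0])
    if not ordered:
        return [], []
    seen = {seq for seq, _ in ordered}
    first, last = ordered[0][0], ordered[-1][0]
    missing = [seq for seq in range(first, last + 1) if seq not in seen]
    return ordered, missing


ordered, missing = reorder_and_find_gaps([(3, "P"), (1, "I"), (5, "P"), (2, "P")])
# ordered == [(1, "I"), (2, "P"), (3, "P"), (5, "P")], missing == [4]
```

The `missing` list corresponds to the sequence numbers a receiving node would send back to request retransmission.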

Retransmission probability of I frame and P frame packets is an important parameter in IVSP. If retransmission probability is very small, there is not enough of a chance to retransmit the lost packets. Therefore, packet loss rate will be much higher. If I frame packet is lost in transmission, all P frame packets after it cannot be decoded correctly and will be useless. Therefore, we give more retransmission probability to lost I frame packets and less retransmission probability to P frame packets. However, too many retransmissions can increase delay, which is not desirable in wireless multimedia sensor networks. Therefore, the appropriate retransmission probability should be carefully chosen to meet the retransmission requirements so that the impact of retransmissions on normal network traffic can be minimized.

5. Experimental Results

We have deployed our platform at the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, for development and testing purposes. The algorithms are implemented in C. We must emphasize that every experiment reported in this paper is based on an actual implementation running on a real test-bed. The experimental setup consists of 7 Raspberry Pi sensor nodes. All multimedia data can be transferred from a source node to the sink node through multi-hop communication. On each Raspberry Pi, we stored a pre-recorded video stream that was recorded in a cubicle environment.

Figure 7 shows the network topology analyzed in this paper. All the nodes are statically deployed in the deployment area. Node 7 is connected to a PC, on which the data collected from each node are visualized. Since the distance between nodes is short, we assign each node an IP address and establish the network using these IP addresses. For example, we set the destination address of node 1 to the IP address of node 2, the destination address of node 2 to the IP address of node 3, and so on. In the experiments, the following metrics are compared: buffer occupancy, I-frame packet loss, and P-frame packet loss. The parameters used in our experiments are shown in Table 1. The following three schemes are implemented:

- (1) IVSP: the scheme proposed in this paper.

- (2) ECODA [33]: enhanced congestion detection and avoidance for multiple classes of traffic in sensor networks.

- (3) No congestion control: this is the baseline, in which no congestion control scheme is used. In this scheme, packets are dropped by congested sensors, and no further action is taken. We call this scheme NoIVSP.

Figure 8 presents the status of the buffer occupancy at different data rates for NoIVSP. Figure 8a shows that only node 7 gets congested at a data rate of 0.1 Mbps, while the remaining nodes do not experience any congestion. Increasing the data rate to 0.5 Mbps involves two other nodes in congestion, as can be seen in Figure 8b; however, nodes 1 to 4 still do not experience any congestion. Similarly, Figure 8c shows that node 4 gets congested as the data rate increases further. Results in Figure 8d indicate that at a data rate of 2 Mbps, congestion happens at every node except node 1. Node 1 does not suffer any congestion because it only generates data and does not relay any traffic. Therefore, the probability of congestion at node 1 is minimal.

Figure 9a,b shows the improvement achieved with IVSP. In Figure 9a,b, the y-axis is buffer occupancy, and the x-axis is time in minutes. We can observe that IVSP efficiently adapts the buffer occupancy of sensor nodes to the network condition. Therefore, IVSP achieves a superior congestion-free rate. The buffer occupancy overshoots first and then falls. The reason is that the network was not congested when the sensor nodes started sending packets at the beginning. Figure 8 shows that in the case of NoIVSP, the buffer begins to overflow immediately, because it has no congestion control mechanism. In contrast, whenever IVSP determines that the network is congested, it applies the rate decrease step we have described, computes a new rate allocation, and sends the new rate to its child node.

Figure 9a,b also shows the effect of different values of β. When β is small, there is less incoming data, so the probability of congestion is reduced, and buffer occupancy moves between the normal, slow, and urgent states. When β is large, the amount of incoming data becomes very high, so the probability of congestion is higher, and buffer occupancy continuously fluctuates between the slow and urgent states. Therefore, β should be chosen carefully. In Figure 9a, for small β, we can observe that buffer occupancy comes to the normal state and then goes back to the urgent state. In Figure 9b, for large β, buffer occupancy does not go to the normal state at all and continuously fluctuates between the urgent and slow states.

Figure 10 shows the packet delivery ratio of I frame packets with respect to time. The x-axis shows the time, and the y-axis represents the packet delivery ratio. The objective of our protocol is to protect the packets with high priority; therefore, IVSP has more mechanisms to protect I frame packets. If an I frame packet is lost in transmission, all P frame packets after it cannot be decoded correctly and become useless. Since there is no retransmission mechanism for lost packets in ECODA and NoIVSP, the packet delivery ratio of I frame packets in NoIVSP and ECODA is much lower than in IVSP. ECODA drops some packets with high dynamic priority under severe congestion, whereas IVSP saves high-priority packets under congestion and retransmits them when a free channel is available. Figure 10 shows that I frame packets have packet delivery ratios of 98.7%, 93.5%, and 69.2% for IVSP, ECODA, and NoIVSP, respectively, when time approaches 2 min. Lower I frame packet loss in IVSP means that the video will not be interrupted and that video quality in IVSP will be much superior to that in NoIVSP and ECODA.

Figure 11 shows the packet delivery ratio of P frame packets with respect to time. As with I frame packets, the retransmission of lost P frame packets in IVSP should be arranged when the link is not busy, to avoid congestion. ECODA drops P frame packets under congestion to save I frame packets. Therefore, P frame packet loss in ECODA and NoIVSP is much higher than in IVSP. Figure 11 shows that P frame packets have packet delivery ratios of 95.8%, 87.5%, and 65.3% for IVSP, ECODA, and NoIVSP, respectively, when time approaches 2 min. Again, NoIVSP has the highest number of lost P frames, and IVSP shows a lower number of lost P frames than both NoIVSP and ECODA.