Analysis of Lookout Activity in a Simulated Environment to Investigate Maritime Accidents Caused by Human Error

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

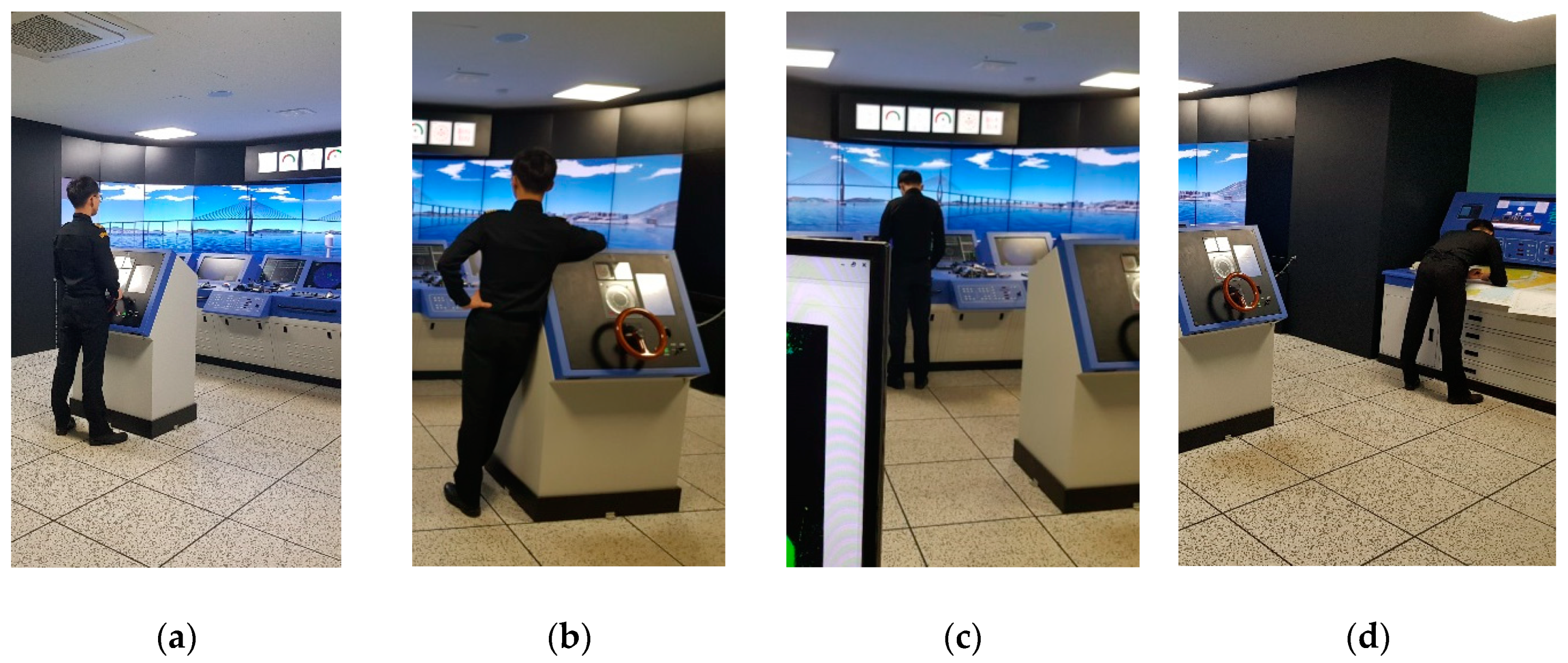

2.1.1. Experiment Configuration

2.1.2. Experiment Protocol

2.2. Data Analysis

2.2.1. Feature Extraction

2.2.2. Classification Model Development

2.2.3. Validation

3. Results

3.1. Lookout Classifications

3.2. Categorical Lookout Classification Sensitivity

3.3. Non-Scenario Validation

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


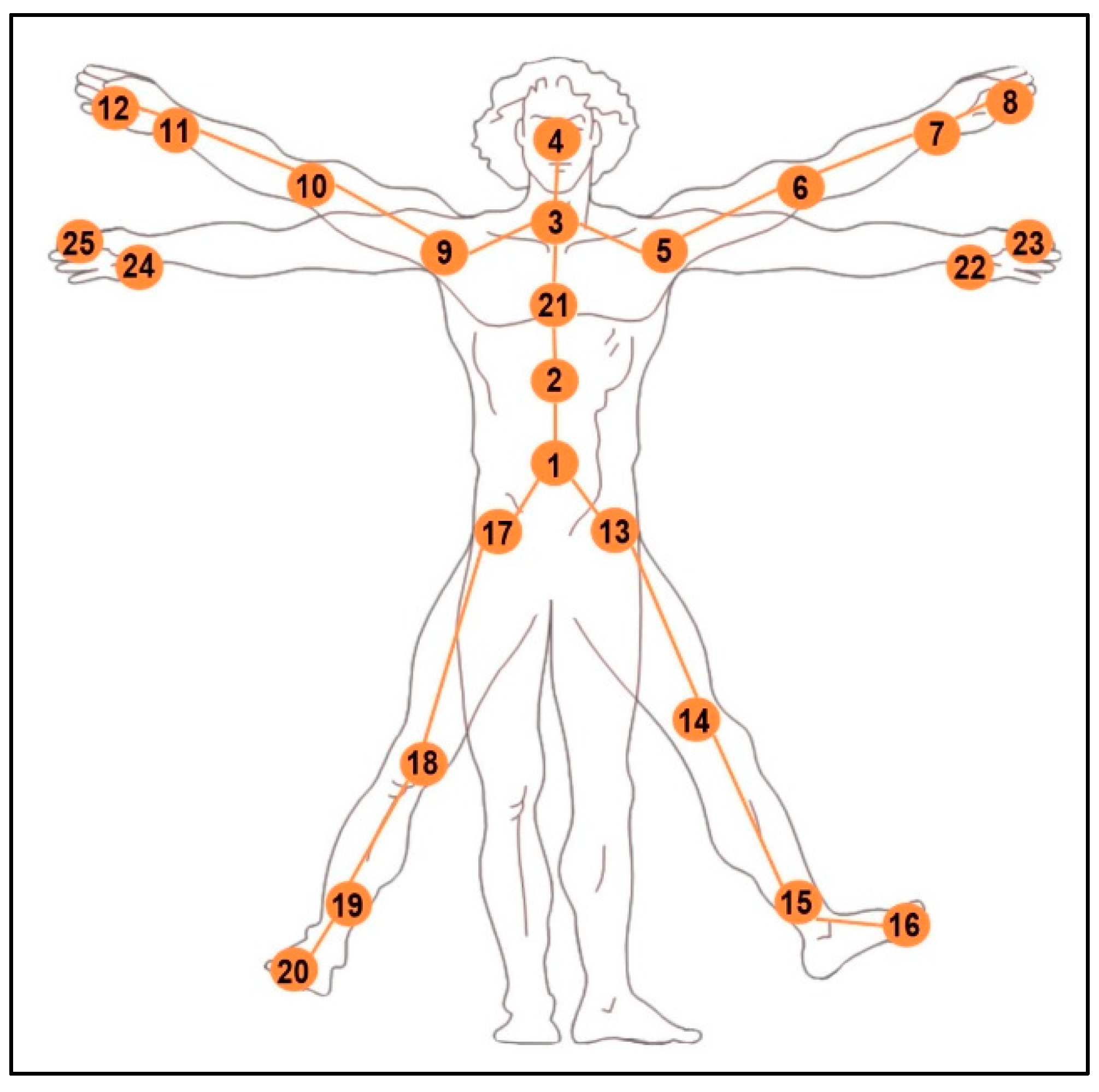

| Category | Location | Feature Number |
|---|---|---|
| Trunk | Base of spine | 1 |
| Trunk | Mid-spine | 2 |
| Trunk | Neck | 3 |
| Trunk | Head | 4 |
| Trunk | Left hip | 13 |
| Trunk | Right hip | 17 |
| Trunk | Shoulder-height spine | 21 |
| Upper limbs | Left shoulder | 5 |
| Upper limbs | Left elbow | 6 |
| Upper limbs | Left wrist | 7 |
| Upper limbs | Left hand | 8 |
| Upper limbs | Right shoulder | 9 |
| Upper limbs | Right elbow | 10 |
| Upper limbs | Right wrist | 11 |
| Upper limbs | Right hand | 12 |
| Upper limbs | Left hand tip | 22 |
| Upper limbs | Left thumb | 23 |
| Upper limbs | Right hand tip | 24 |
| Upper limbs | Right thumb | 25 |
| Lower limbs | Left knee | 14 |
| Lower limbs | Left ankle | 15 |
| Lower limbs | Left foot | 16 |
| Lower limbs | Right knee | 18 |
| Lower limbs | Right ankle | 19 |
| Lower limbs | Right foot | 20 |

| Characteristics | Mean (SD) |
|---|---|
| N | 24 |
| Female/male | 4/20 |
| Age (years) | 22.1 (1.6) |
| Height (cm) | 172.2 (6.9) |
| Weight (kg) | 69.6 (11.7) |

| Lookout Type | Activity | Duration (s) | Description |
|---|---|---|---|
| Lookout | Standing | 50 | Standing still for lookout |
| Lookout | Leaning | 50 | Reluctant posture for lookout |
| Lookout | Binocular | 50 | Active lookout |
| Lookout | Radar | 50 | Controlling nav. equipment |
| Lookout | Walking | 50 | Lookout while walking |
| Non-lookout | Writing | 50 | Recording navigation info |
| Non-lookout | Sitting | 50 | Resting and reluctant posture |
| Total duration | | 350 | Including breaks ¹ |

| Feature | Description | Abbreviation Example |
|---|---|---|
| Joint motion magnitude (JMM) | Vector magnitude of each joint, obtained as the root mean square of the x-, y-, and z-axis values | JMM-5 = JMM head |
| Joint motion variation (JMV) | Standard deviation of each joint along the x-, y-, and z-axes | JMV-10 = JMV right elbow |
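The two feature definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the windowing of frames, the use of the Euclidean norm averaged over the window for "magnitude", and the use of the sample standard deviation for "variation" are all assumptions, since the table gives only one-line descriptions.

```python
import math
import statistics


def joint_motion_magnitude(samples):
    """Mean vector magnitude of one joint over a window of frames.

    `samples` is a list of (x, y, z) tuples for a single joint.
    Assumption: magnitude is the Euclidean norm per frame, averaged
    over the window; the source table does not fix these details.
    """
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return statistics.fmean(magnitudes)


def joint_motion_variation(samples):
    """Per-axis standard deviation (x, y, z) of one joint over a window.

    Assumption: sample standard deviation (n - 1 denominator), which
    needs at least two frames.
    """
    xs, ys, zs = zip(*samples)
    return tuple(statistics.stdev(axis) for axis in (xs, ys, zs))


def feature_name(prefix, joint_number):
    """Build an abbreviation in the table's style, e.g. 'JMV-10'."""
    return f"{prefix}-{joint_number}"


# Hypothetical two-frame window for one joint (values are made up).
window = [(3.0, 4.0, 0.0), (0.0, 0.0, 5.0)]
jmm = joint_motion_magnitude(window)
jmv = joint_motion_variation(window)
label = feature_name("JMV", 10)
```

With 25 joints, this layout would yield one magnitude and three per-axis deviations per joint per window; whether the study combined them in exactly that way is not stated in the table.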

| True Activity (rows) / Predicted Activity, % (columns) | Standing | Leaning | Binocular | Radar | Walking | Writing | Sitting |
|---|---|---|---|---|---|---|---|
| Standing | 90 | 4 | - | - | - | - | 6 |
| Leaning | 4 | 95 | - | - | - | - | 1 |
| Binocular | - | 5 | 94 | - | - | - | <1 |
| Radar | - | - | 1 | 93 | 3 | - | 3 |
| Walking | - | - | - | 2 | 97 | <1 | - |
| Writing | - | - | - | <1 | - | 99 | - |
| Sitting | 3 | - | 1 | - | - | - | 96 |
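As a rough reading aid for the confusion matrix above, the sketch below averages its diagonal entries (the percentage of each true activity that was predicted correctly), overall and per lookout type. The grouping into lookout and non-lookout activities follows the activity table earlier in the document; the variable and function names are illustrative assumptions. Note that this is a mean of per-activity rates, not the categorical lookout sensitivity itself, which would also count confusions between two lookout activities as correct.

```python
# Diagonal of the confusion matrix: correctly predicted share (%) per true activity.
CORRECT_RATE = {
    "Standing": 90,
    "Leaning": 95,
    "Binocular": 94,
    "Radar": 93,
    "Walking": 97,
    "Writing": 99,
    "Sitting": 96,
}

# Grouping taken from the lookout-type table.
LOOKOUT = ["Standing", "Leaning", "Binocular", "Radar", "Walking"]
NON_LOOKOUT = ["Writing", "Sitting"]


def mean_rate(activities):
    """Unweighted mean of the per-activity correct-prediction rates."""
    return sum(CORRECT_RATE[name] for name in activities) / len(activities)


overall = mean_rate(list(CORRECT_RATE))   # all seven activities
lookout = mean_rate(LOOKOUT)              # five lookout activities
non_lookout = mean_rate(NON_LOOKOUT)      # two non-lookout activities

print(f"overall: {overall:.1f}%  lookout: {lookout:.1f}%  non-lookout: {non_lookout:.1f}%")
```

The unweighted mean is appropriate here only because every activity was recorded for the same duration (50 s); with unequal durations the rates would need weighting.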

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Youn, I.-H.; Park, D.-J.; Yim, J.-B. Analysis of Lookout Activity in a Simulated Environment to Investigate Maritime Accidents Caused by Human Error. Appl. Sci. 2019, 9, 4. https://doi.org/10.3390/app9010004
