Infrared Stripe Correction Algorithm Based on Wavelet Analysis and Gradient Equalization

Abstract

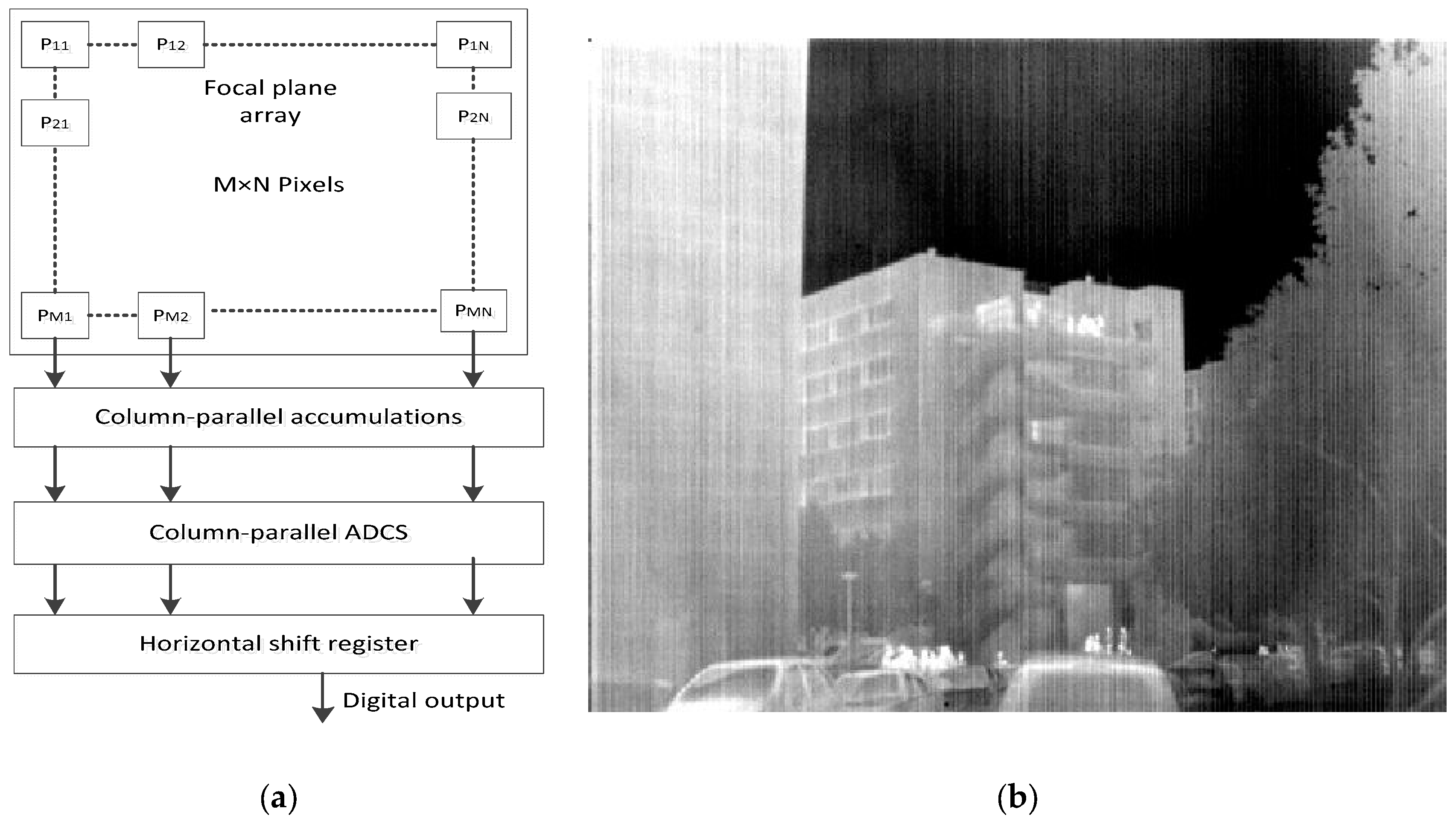

1. Introduction

2. Related Work

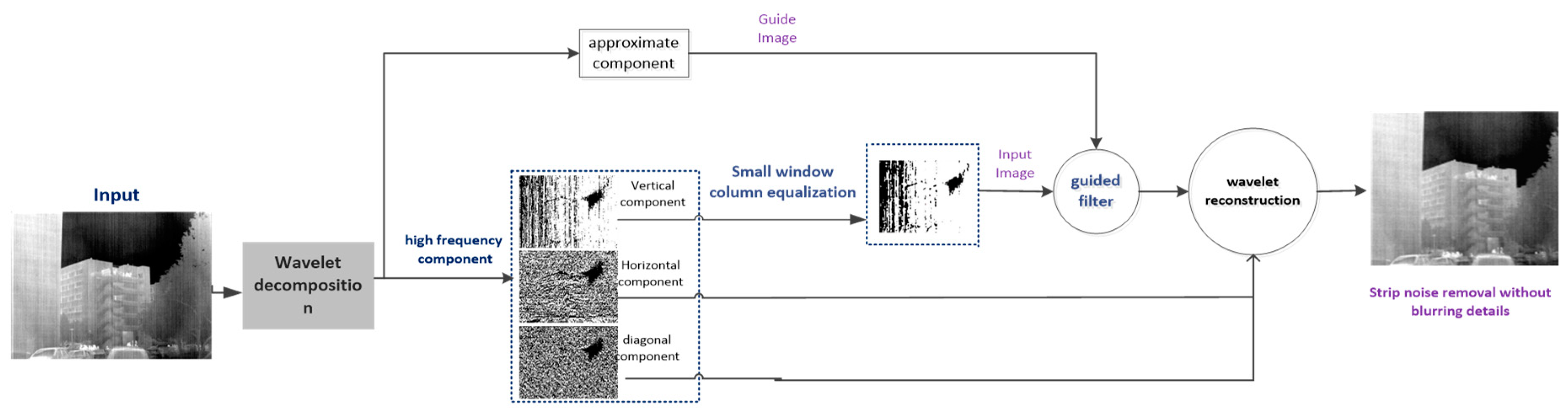

3. New Stripe Correction Algorithm Based on Wavelet Analysis and Gradient Equalization

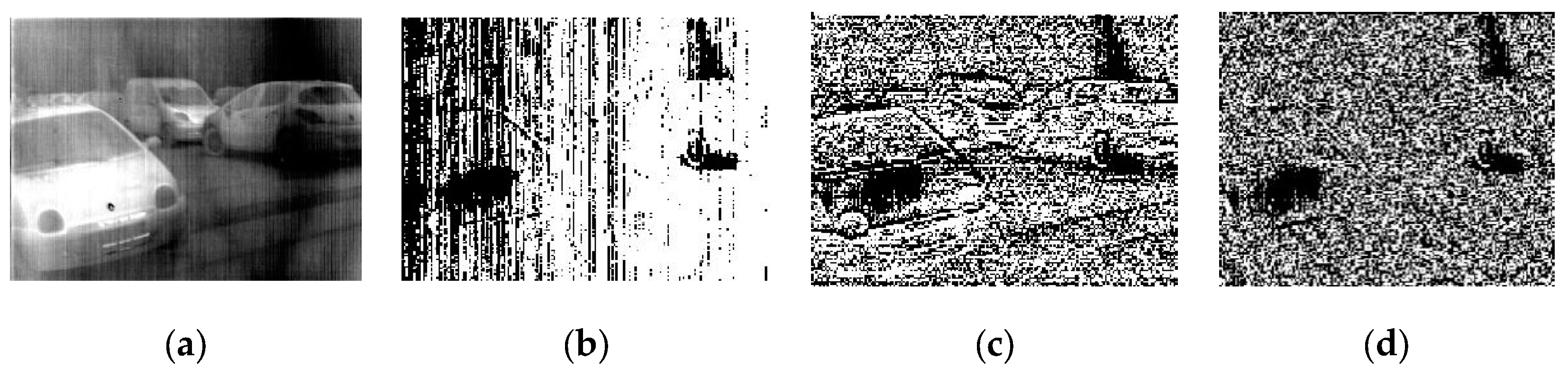

3.1. Wavelet-Based Image Decomposition

3.2. Small Window Column Equalization

3.3. Guided Filtering to Remove Vertical Component Noise

4. Implementation Details

4.1. Detail Description

4.2. Procedure

Algorithm 1: The proposed method for single infrared image stripe non-uniformity correction

Input: The raw infrared image U.

1. Wavelet decomposition of the original image
   - Parameter: use the db1 wavelet basis.
   - Initialization: decompose the raw image U into the approximation component A1, the vertical component V1, the horizontal component H1, and the diagonal component D1.
2. Column gradient equalization
   - Parameters: the column equalization window value is 1; the column gradient equalization window size is N.
   - Column equalization: generate a one-dimensional vector using the Gaussian kernel function H, with variance 5. The cumulative histogram of V1 is M1.
   - for aj = 1 : 2N+1, correlate M1 with H to get the output V1’; end for
3. Spatial filtering with the guided filter
   - Parameters: regularization parameter = 0.22; filter window h = 0.3H, where H here denotes the height of the image.
   - Filtering: V1’ is the input image of the guided filter and the approximation component A1 is the guide image. The output is the filtered vertical component.

Output: The final corrected result I = A1 + (filtered vertical component) + H1 + D1.
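Steps 1 and 3 of Algorithm 1 can be illustrated with a short NumPy sketch. This is not the authors' implementation; it rests on several assumptions that the algorithm box does not settle: the image has even height and width and is scaled so that a regularization value of 0.22 is meaningful, the guided filter uses a square box window whose side is about 0.3 times the height of the decomposed component, and the result is rebuilt with the inverse Haar transform rather than a literal sum. Step 2 (column gradient equalization) is left out because the pseudocode does not specify it fully. All function names (`haar_dwt2`, `haar_idwt2`, `box_mean`, `guided_filter`, `destripe`) are hypothetical.

```python
import numpy as np


def haar_dwt2(x):
    """Single-level 2D Haar (db1) transform; x must have even height and width."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    A = (a + b + c + d) / 2.0  # approximation component
    V = (a - b + c - d) / 2.0  # differences across columns: vertical stripes and edges
    H = (a + b - c - d) / 2.0  # differences across rows: horizontal edges
    D = (a - b - c + d) / 2.0  # diagonal detail
    return A, V, H, D


def haar_idwt2(A, V, H, D):
    """Inverse of haar_dwt2."""
    x = np.empty((2 * A.shape[0], 2 * A.shape[1]), dtype=float)
    x[0::2, 0::2] = (A + V + H + D) / 2.0
    x[0::2, 1::2] = (A - V + H - D) / 2.0
    x[1::2, 0::2] = (A + V - H - D) / 2.0
    x[1::2, 1::2] = (A - V - H + D) / 2.0
    return x


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, using edge padding and a summed-area table."""
    k = 2 * r + 1
    p = np.pad(x, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)


def guided_filter(guide, src, r, eps):
    """Guided image filter (He et al.): locally linear model src ~ a * guide + b."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def destripe(u, eps=0.22, window_frac=0.3):
    """Steps 1 and 3 only; the column gradient equalization of step 2 is omitted."""
    A1, V1, H1, D1 = haar_dwt2(np.asarray(u, dtype=float))
    # Assumed interpretation of "h = 0.3H": square window, side ~ 0.3 * component height.
    r = max(1, int(window_frac * A1.shape[0]) // 2)
    V1_filtered = guided_filter(A1, V1, r, eps)  # A1 is the guide image
    return haar_idwt2(A1, V1_filtered, H1, D1)
```

Calling `destripe(noisy)` on a 2D array returns an array of the same shape. Because this sketch uses a single decomposition level, stripe energy that falls into the approximation component A1 passes through unchanged, so only part of the column noise is suppressed.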

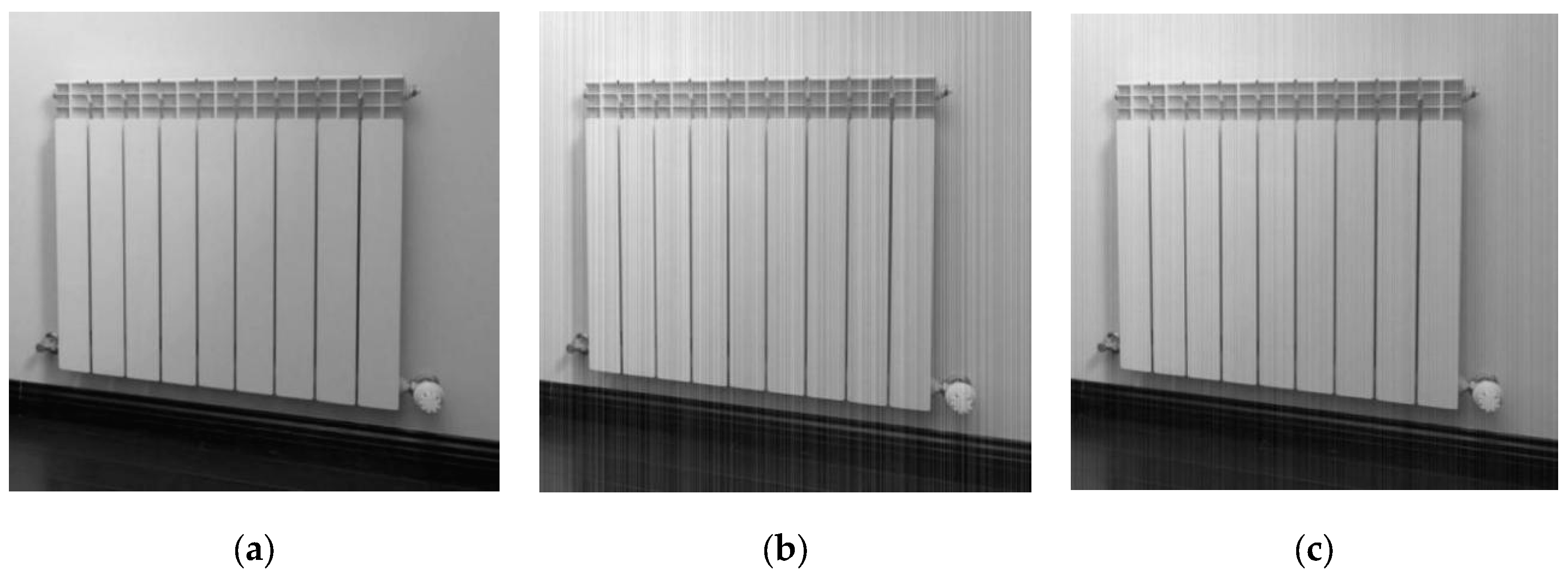

5. Experiment and Analysis

5.1. Data Set

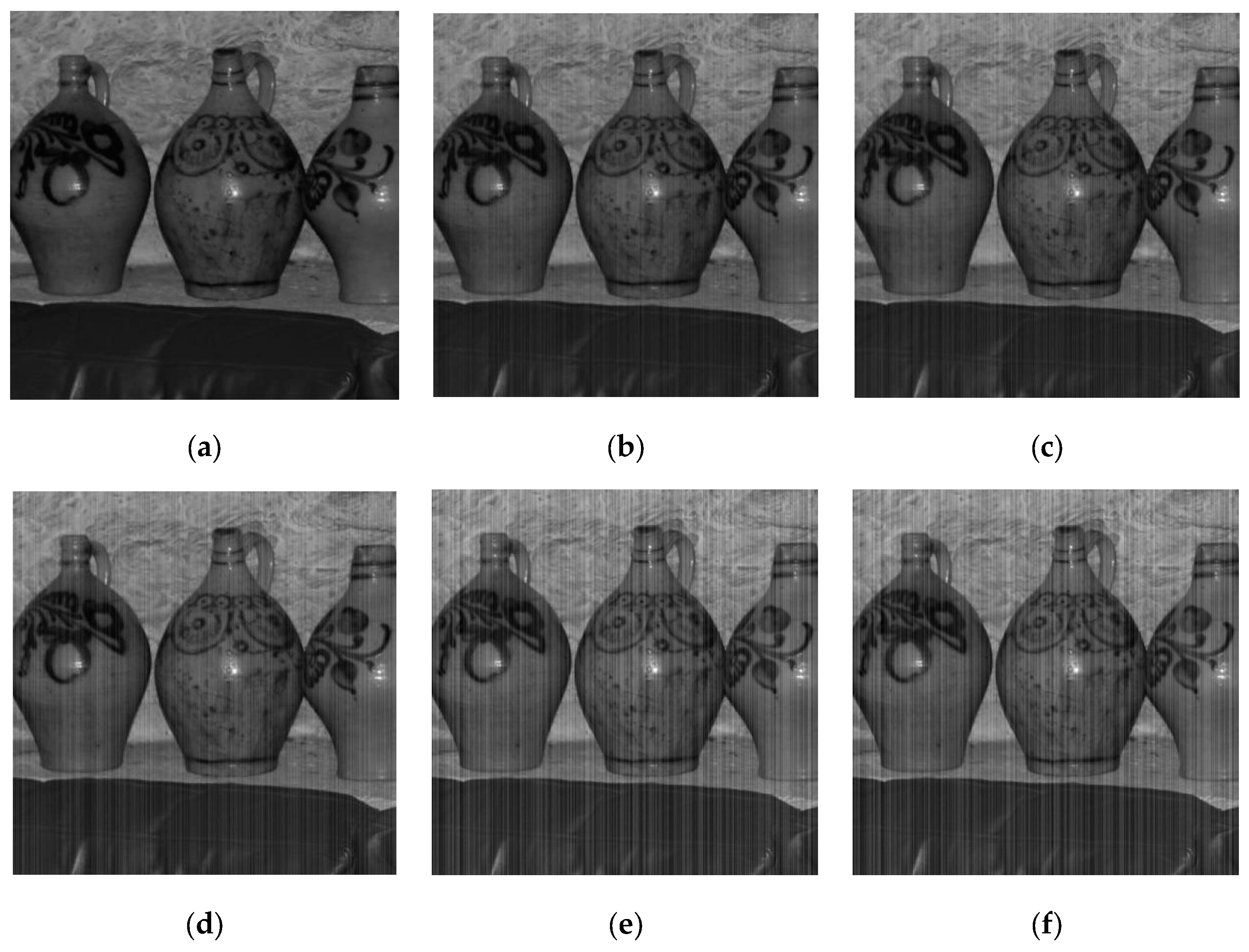

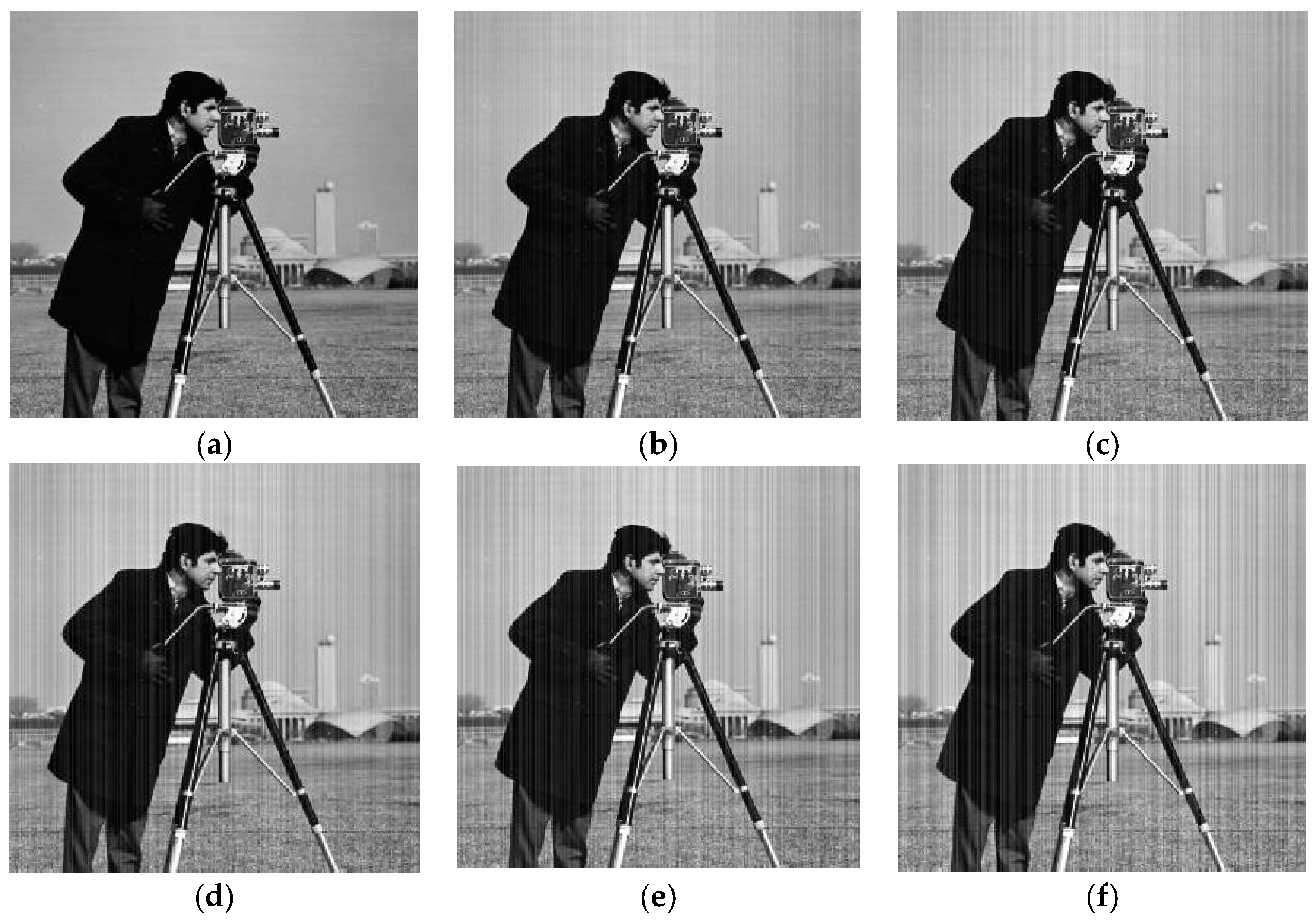

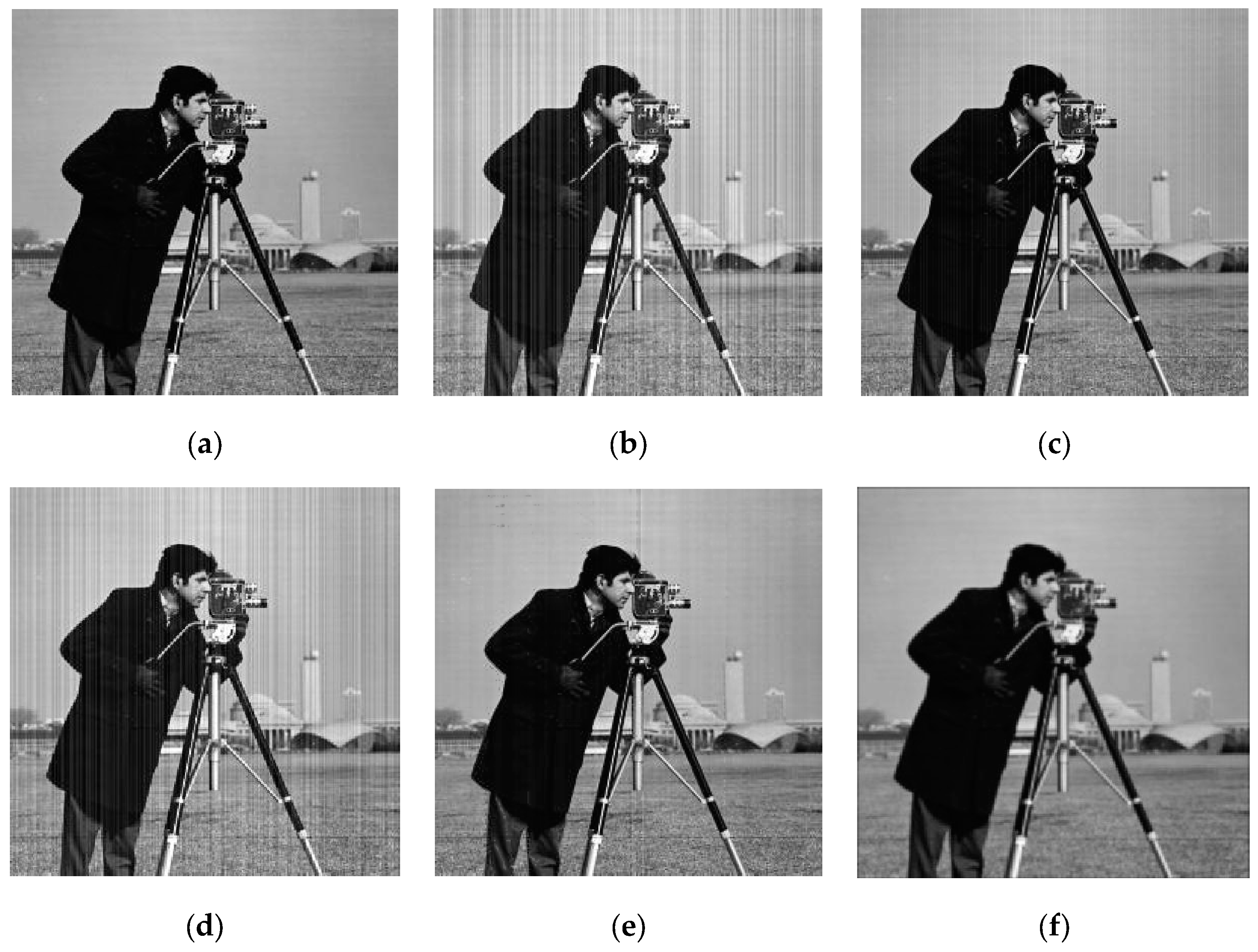

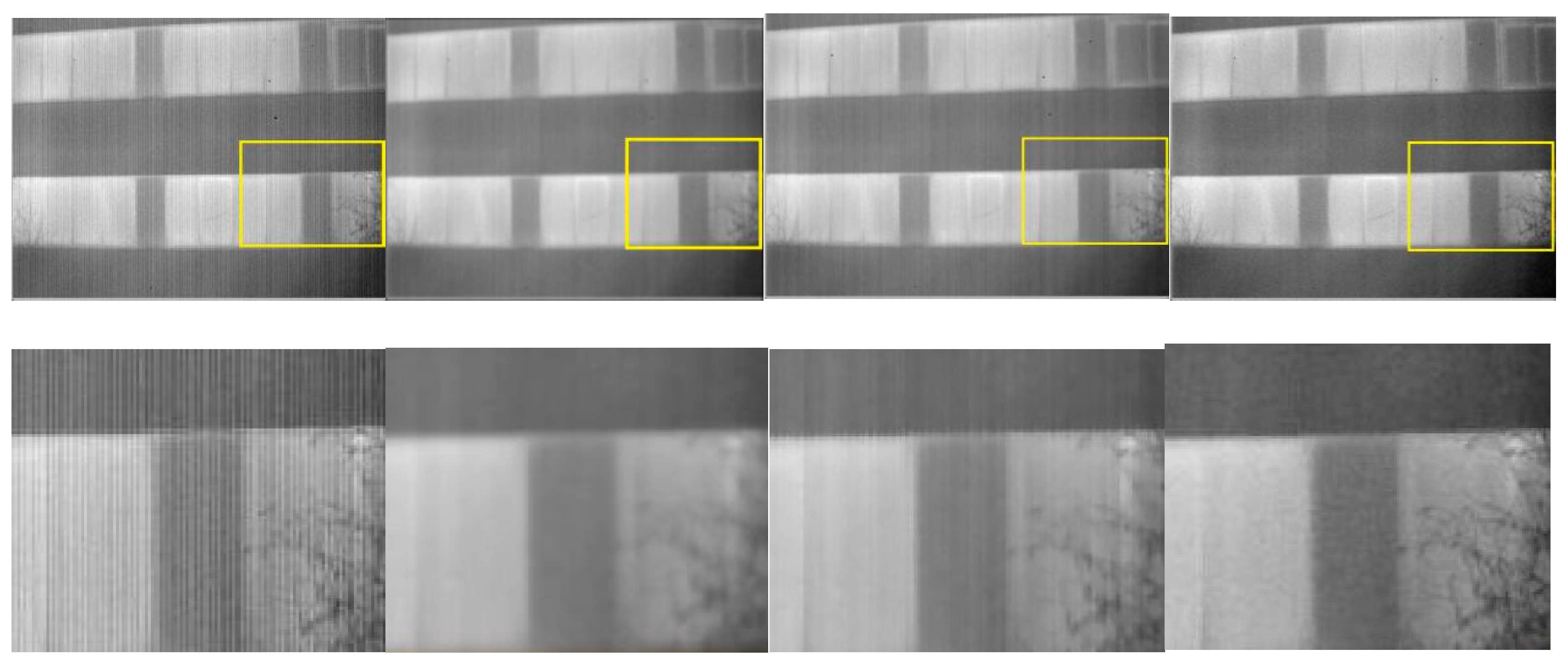

5.2. Simulated Noise Image Test

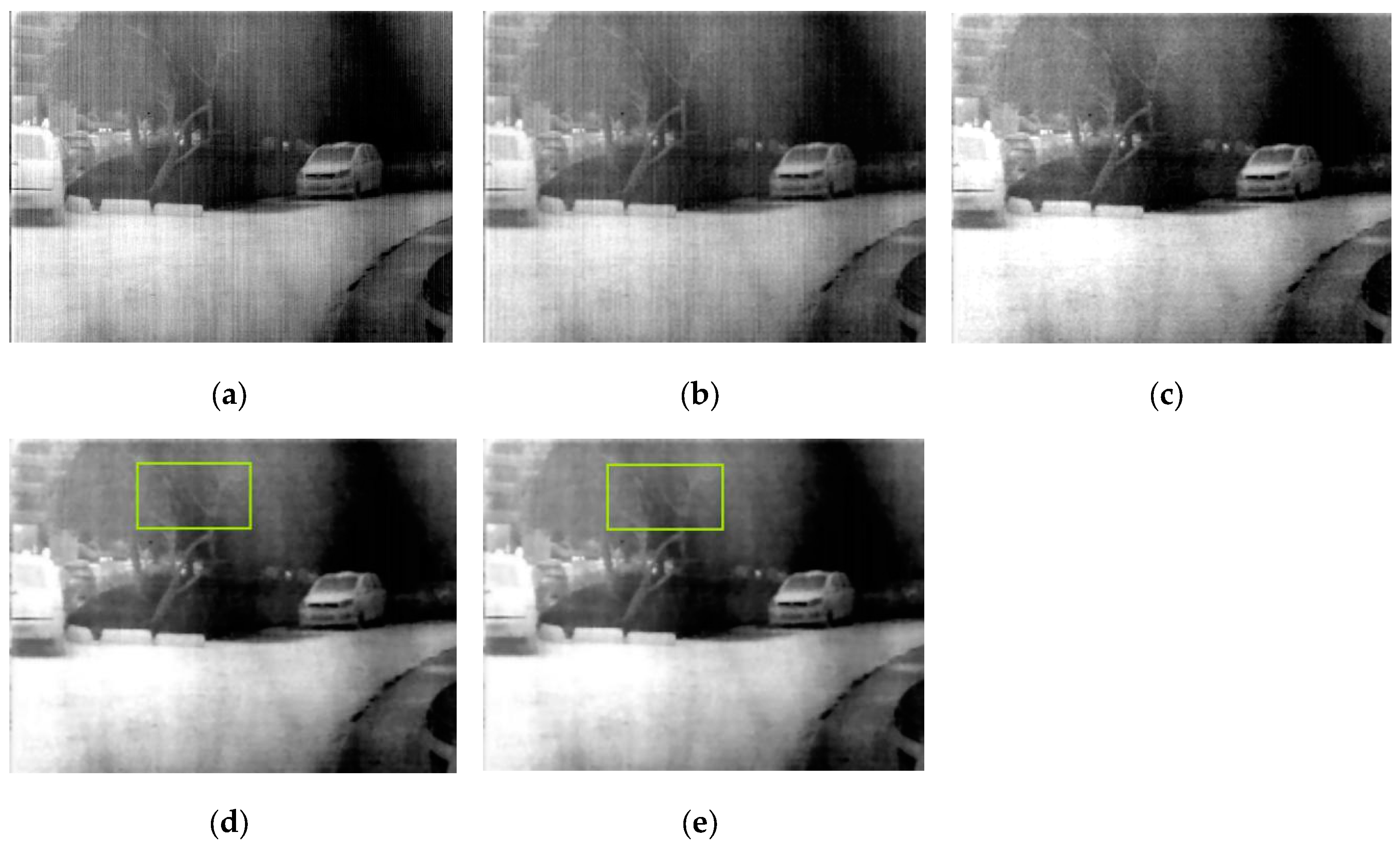

5.3. Infrared Image Test Evaluation

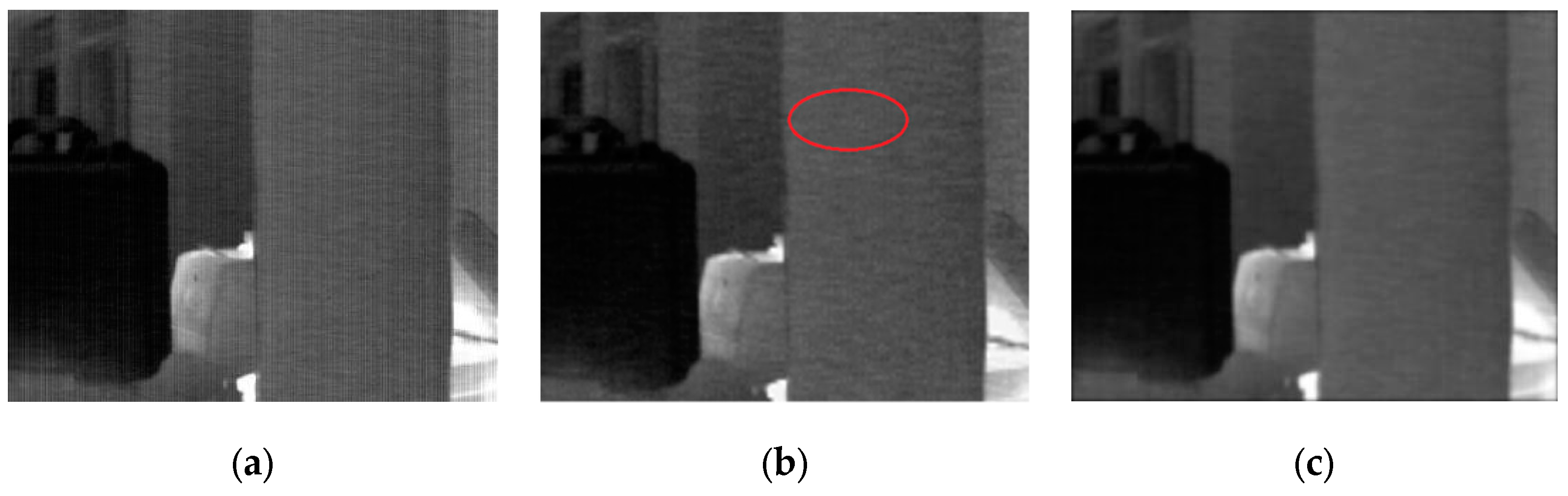

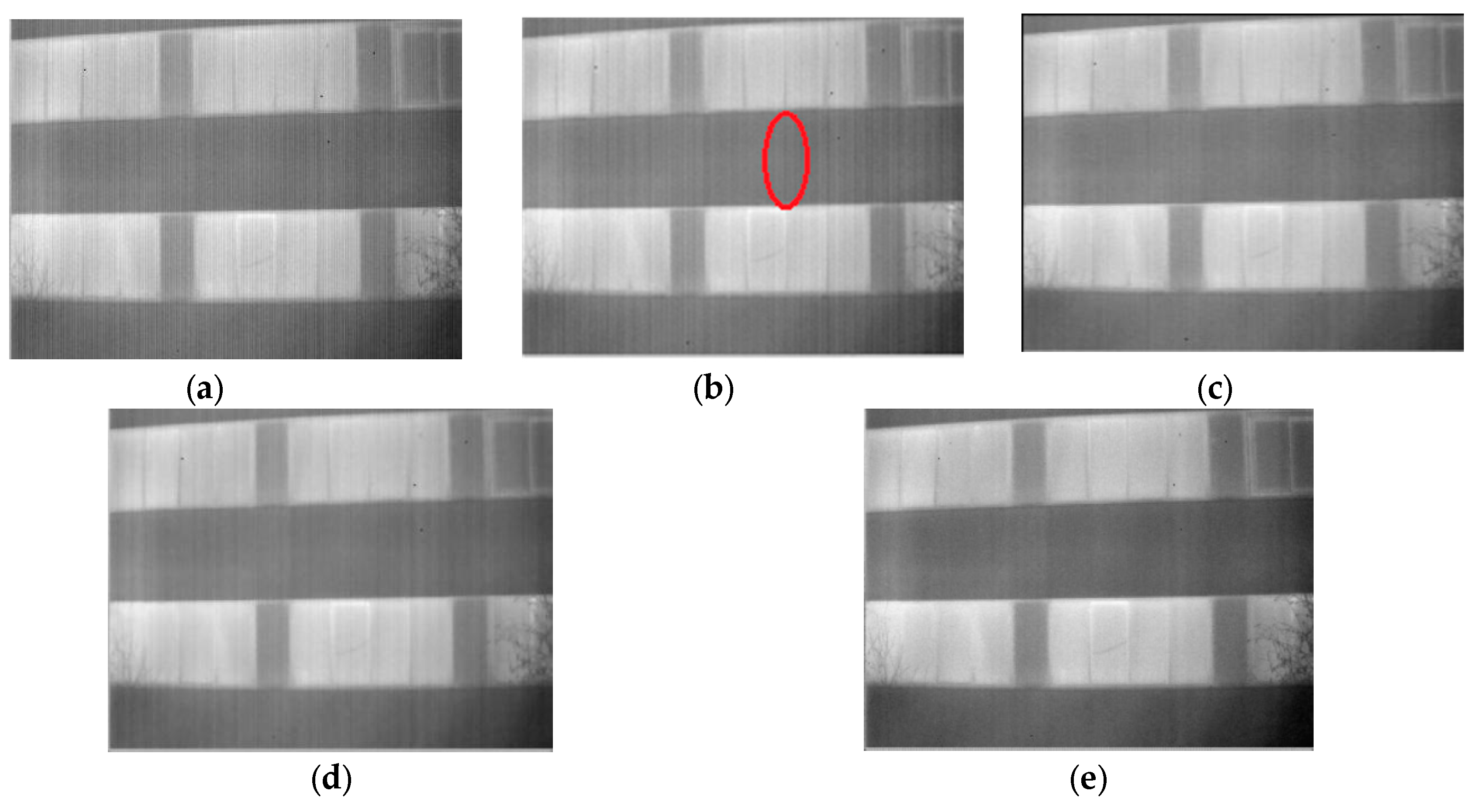

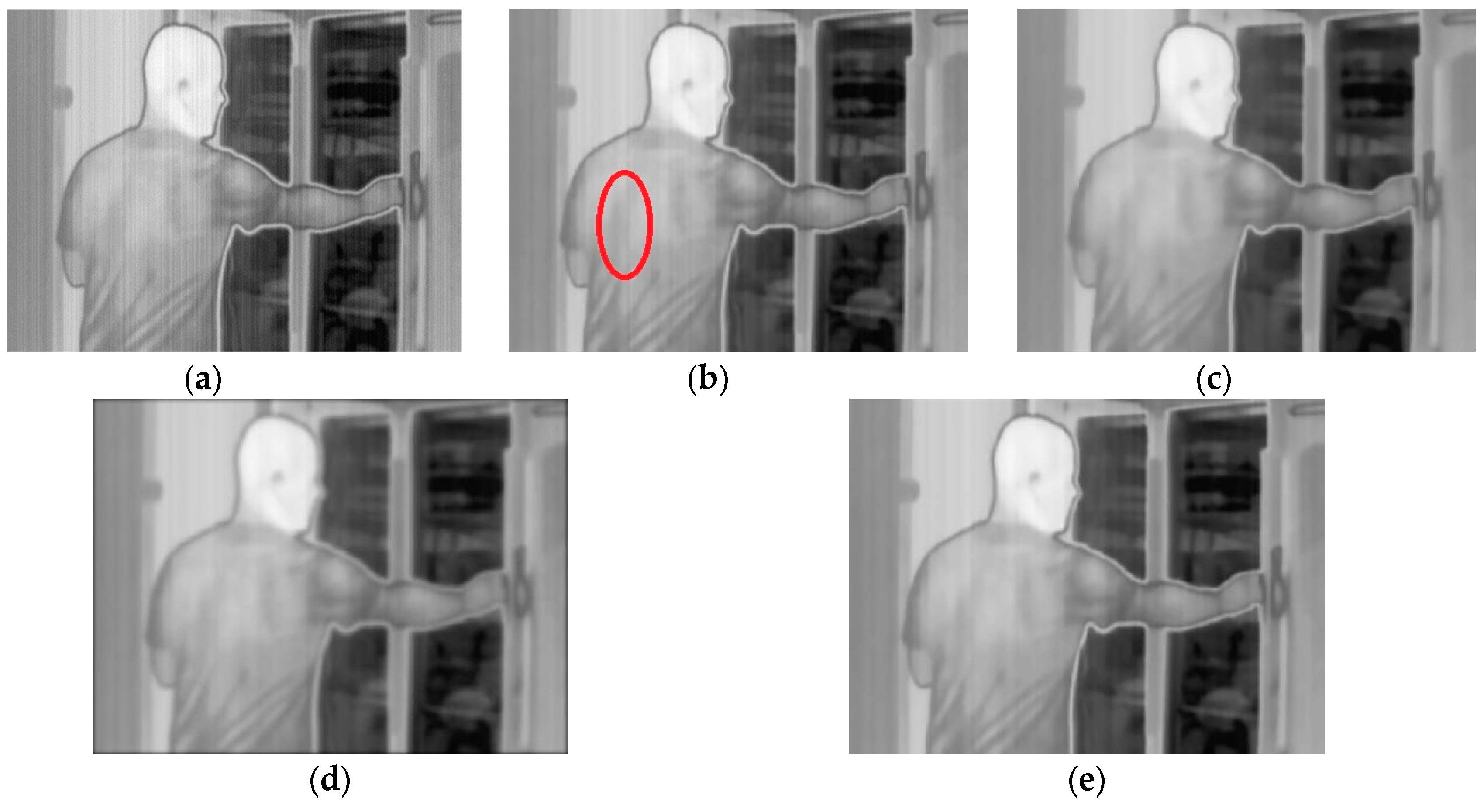

5.3.1. Common Filtering Algorithm Evaluation

5.3.2. Stripe Correction Algorithm Comparison Evaluation

5.4. Time Consumption

5.5. Limitations of the Proposed Method

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FPN | Fixed Pattern Noise |
| FPA | Focal Plane Array |
| NUC | Non-Uniformity Correction |
| CNN | Convolutional Neural Network |
| MHE | Midway Histogram Equalization |
| TV | Total Variation |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity |
| AVGE | Average Vertical Gradient Error |


| Test Data | Source | Size | Sensor | Description |
|---|---|---|---|---|
| Simulated images | | | | |
| Ceramic, Cameraman | n/a | 512 × 512 | n/a | Widely used gray images, with different levels of stripe noise added. |
| Raw IR images | | | | |
| Suitcase | Tendero’s dataset | 320 × 220 | Thales Minie-D camera | Simple scene with obvious edge information, small details, and slight stripe noise. |
| Leaves | Tendero’s dataset | 640 × 440 | Thales Minie-D camera | Simple scene with small details and obvious stripe nonuniformity. |
| People | Tendero’s dataset | 640 × 480 | Thales Minie-D camera | Rich scene information and slight stripe nonuniformity. |

| Noise level | Ceramic: Noise | Ceramic: TV | Ceramic: CNN | Ceramic: MHE | Ceramic: Ours | Cameraman: Noise | Cameraman: TV | Cameraman: CNN | Cameraman: MHE | Cameraman: Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.02 | 30.15 | 28.24 | 34.31 | 24.36 | 36.16 | 29.85 | 31.24 | 34.26 | 30.21 | 36.48 |
| 0.04 | 27.06 | 29.63 | 30.52 | 26.81 | 32.69 | 26.52 | 29.72 | 32.84 | 29.42 | 35.84 |
| 0.10 | 24.57 | 28.39 | 26.75 | 25.65 | 29.76 | 24.13 | 31.25 | 32.59 | 30.34 | 35.47 |
| 0.15 | 18.34 | 22.67 | 25.34 | 21.36 | 28.64 | 17.25 | 24.56 | 30.48 | 23.19 | 33.27 |
| 0.20 | 12.19 | 19.34 | 24.89 | 16.68 | 28.94 | 10.57 | 20.31 | 24.65 | 16.34 | 28.76 |

| Noise level | Ceramic: Noise | Ceramic: TV | Ceramic: CNN | Ceramic: MHE | Ceramic: Ours | Cameraman: Noise | Cameraman: TV | Cameraman: CNN | Cameraman: MHE | Cameraman: Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.02 | 0.876 | 0.921 | 0.905 | 0.916 | 0.976 | 0.957 | 0.974 | 0.962 | 0.982 | 0.993 |
| 0.04 | 0.728 | 0.845 | 0.801 | 0.826 | 0.945 | 0.872 | 0.970 | 0.954 | 0.968 | 0.991 |
| 0.10 | 0.543 | 0.878 | 0.579 | 0.835 | 0.927 | 0.684 | 0.962 | 0.859 | 0.958 | 0.986 |
| 0.15 | 0.247 | 0.756 | 0.325 | 0.769 | 0.921 | 0.426 | 0.957 | 0.769 | 0.952 | 0.982 |
| 0.20 | 0.134 | 0.723 | 0.187 | 0.743 | 0.908 | 0.243 | 0.952 | 0.654 | 0.942 | 0.979 |

| Sequence/Method | Suitcase | Leaves | People |
|---|---|---|---|
| TV | 28.5 | 30.8 | 24.6 |
| MHE | 27.9 | 28.7 | 22.8 |
| CNN | 27.2 | 29.1 | 23.4 |
| Proposed | 25.4 | 19.7 | 20.2 |

| Noise level | Ceramic: Noise | Ceramic: TV | Ceramic: MHE | Ceramic: CNN | Ceramic: Ours | Cameraman: Noise | Cameraman: TV | Cameraman: MHE | Cameraman: CNN | Cameraman: Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.02 | 12.34 | 10.39 | 8.46 | 8.32 | 7.42 | 14.10 | 12.30 | 11.06 | 10.79 | 6.42 |
| 0.04 | 15.62 | 12.94 | 9.47 | 9.20 | 8.07 | 16.37 | 13.60 | 12.45 | 12.21 | 8.94 |
| 0.10 | 18.27 | 14.18 | 11.46 | 11.07 | 9.72 | 18.79 | 16.28 | 14.91 | 14.27 | 10.67 |
| 0.15 | 20.34 | 15.81 | 13.87 | 13.14 | 10.63 | 21.42 | 18.70 | 15.74 | 14.95 | 11.34 |
| 0.20 | 25.81 | 19.76 | 15.27 | 14.35 | 11.20 | 27.41 | 21.40 | 18.69 | 18.07 | 15.13 |

| Sequence/Method | Suitcase | Leaves | People |
|---|---|---|---|
| TV | 0.053 | 0.036 | 0.028 |
| MHE | 0.287 | 0.424 | 0.124 |
| CNN | 0.183 | 0.228 | 0.019 |
| Proposed | 0.168 | 0.016 | 0.012 |

| Noise level | Ceramic: TV | Ceramic: MHE | Ceramic: CNN | Ceramic: Ours | Cameraman: TV | Cameraman: MHE | Cameraman: CNN | Cameraman: Ours |
|---|---|---|---|---|---|---|---|---|
| 0.02 | 0.075 | 0.248 | 0.125 | 0.102 | 0.064 | 0.186 | 0.089 | 0.062 |
| 0.04 | 0.180 | 0.314 | 0.176 | 0.124 | 0.125 | 0.243 | 0.108 | 0.120 |
| 0.10 | 0.203 | 0.386 | 0.217 | 0.196 | 0.197 | 0.286 | 0.156 | 0.128 |
| 0.15 | 0.296 | 0.413 | 0.271 | 0.206 | 0.254 | 0.346 | 0.204 | 0.192 |
| 0.20 | 0.387 | 0.459 | 0.352 | 0.305 | 0.309 | 0.495 | 0.287 | 0.215 |

| Sequence/Method | Resolution | TV | CNN | MHE | Ours |
|---|---|---|---|---|---|
| Ceramic/Cameraman | 512 × 512 | 0.045 | 1.462 | 0.031 | 0.247 |
| Suitcase | 320 × 220 | 0.029 | 1.028 | 0.021 | 0.012 |
| Leaves | 640 × 440 | 0.062 | 1.634 | 0.051 | 0.039 |
| People | 640 × 480 | 0.065 | 1.642 | 0.059 | 0.042 |

| Metric | Noise | Corrected |
|---|---|---|
| PSNR | 27.56 | 30.21 |
| SSIM | 0.872 | 0.963 |
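For readers unfamiliar with the PSNR metric in this table, the conventional definition is 10·log10(peak² / MSE) against a reference image. The sketch below is a minimal illustration of that standard formula, not the authors' evaluation code. The function name `psnr` and the default peak value of 255 (8-bit images) are assumptions, since the peak value used for these results is not stated here.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit data (an assumption)."""
    ref = np.asarray(reference, dtype=float)
    tst = np.asarray(test, dtype=float)
    mse = np.mean((ref - tst) ** 2)  # mean squared error against the reference
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

A higher PSNR means the test image is closer to the reference, so a corrected image should score above its noisy input when both are compared with the same clean reference.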

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, E.; Jiang, P.; Hou, X.; Zhu, Y.; Peng, L. Infrared Stripe Correction Algorithm Based on Wavelet Analysis and Gradient Equalization. Appl. Sci. 2019, 9, 1993. https://doi.org/10.3390/app9101993