Artificial Intelligence in Intelligent Tutoring Robots: A Systematic Review and Design Guidelines

Abstract

1. Introduction

- First, we analyze the teaching-learning process between human teachers and students and discuss the competences that teachers need in teaching activities, which motivates the exploration of building intelligent tutoring robots (ITRs). We describe a relationship model that involves four factors: teacher, student, curriculum, and social milieu.

- Second, we propose a framework, based on the relationship model, for analyzing and designing ITRs with AI techniques; it integrates the different dimensions of teaching activities into a single agent framework. Specifically, we use the perception-planning-action framework to bridge the gap between the analysis of the teaching-learning relationship in the education domain and ITR design in the AI and robotics domain.

- Third, since AI techniques have developed rapidly and been applied widely in recent years, we provide guidance on applying state-of-the-art AI techniques in intelligent tutoring robots, which may offer insights for research on ITRs.

- Lastly, we use a case study to illustrate how the proposed perception-planning-action framework may be used to analyze and design practical ITRs, and to show how an existing ITR may be further improved.

The rest of this paper is organized as follows. Section 2 analyzes and reviews the relationship model of the teaching-learning process. Based on this model, Section 3 proposes the perception-planning-action framework of the ITR, along with reviews and design guidelines for the recent AI techniques that can be used in such systems. Section 4 puts the framework and the techniques together in a case study. The final section provides insights on future research on ITRs with AI techniques.

2. Relationship Model of the Teaching-Learning Process

2.1. Teacher and Student

- Pedagogy: Pedagogy, a general body of knowledge about learning, instructing, and learners [15], is an essential part of a teacher’s professional knowledge during the educating process [17,18,19]. It includes practical aspects of teaching, curricular issues, and the theoretical fundamentals of how and why learning occurs [16].

- Student diversity awareness: Teachers need to understand students and take into account their characteristics, such as race, religion, physical characteristics, personal life choices (clothing, food, music, lifestyle), cultural factors (clothing, food, music, rituals), and body image, as well as cognitive diversity, such as personality or learning differences [14,16,19]. Students also differ emotionally, mentally, and physically, so it is a teacher’s responsibility to understand these differences and promote tolerance, curiosity, and equity among their students [14].

- Responding to students: Teachers need to be aware of students’ emotions and responses during teaching so that they can react accordingly. Experienced teachers can distinguish at a glance between active students (taking notes or preparing to make comments) and passive students (too tired or bored to participate) [3]. Teachers are also required to respond to the current culture and community in the teaching process and to make efforts to promote learning for all students, in particular those with lower scores on accountability tests [16]. In addition, teachers should understand learners’ thinking, that is, the factors that make a particular topic easy or difficult to learn and the best way to teach the content [16]. Students of different ages and backgrounds bring their own concepts and preconceptions to the most regularly taught topics and lessons [16]. If those preconceptions hinder the learning of new knowledge, teachers need to master strategies that can reorganize the learners’ understanding [16].

- Multiple communication methods: Without communication, teaching cannot take place. Communication is essential since teachers use it to deliver lessons, convey concepts, understand students’ knowledge, and motivate students. Using communication strategies and a wide range of methods such as analyzing written work, providing explanations and drawing graphics [3], human teachers can effectively develop knowledge of students and transmit information.

- Building relationships: A human teacher is expected to communicate with students and others and to build relationships with them in order to create a community of learners [20]. This flow of information between teacher and student is key to the educational process. If the teacher fails to find out how the information given to students has been received and understood, and is not influenced by that feedback, the educational process has not been completed successfully [15].

2.2. Teacher and Social Milieu

2.3. Teacher and Curriculum

3. Artificial Intelligence Techniques for Designing Intelligent Tutor Robots

3.1. Multi-Modal Perception of Students

3.1.1. Multi-Modal Data Fusion

- Pixel-level data fusion: Reference [25] reviews contributions on pixel-level data fusion for multiple visual sensors, with applications to remote sensing, medical diagnosis, surveillance, and photography. Although pixel-level data fusion has seldom been applied to intelligent educational applications, the fusion methods and the design of quality measures may be transferred to the design of ITRs. For example, matting methods may be used to fuse multiple images of moving objects captured in dynamic scenarios by a moving visual sensor mounted on the ITR. Recently popularized depth sensors such as Kinect may capture both posture and video data of the learners by fusing multi-channel 2D visual images and depth maps, which provides more information for estimating learners’ status than 2D visual data alone [26,27].

- Human action recognition: Reference [28] surveys data fusion for human action recognition and compares the pros and cons of combining inertial sensory data with traditional visual sensors. Human action recognition has a wide range of applications, such as video analytics, robotics, and human-computer interaction, so it may be directly adopted in intelligent tutor design. For instance, in a complex tutoring scenario with heavy occlusion and moving objects, inertial sensory data may complement visual sensory data that has a limited field of view, with classifiers such as support vector machines and hidden Markov models. As an example of human action recognition in a second language ITR, Reference [29] uses hidden Markov models and k-means algorithms to model and annotate Kinect sensory data, which are then used to train action-recognition classifiers.

- Affective computing: Reference [30] discusses in detail the recent contributions in affective computing, where methods are evolving from uni-modal analysis to multi-modal fusion. Affective computing is an interdisciplinary research field devoted to endowing machines with the cognitive capabilities to recognize, interpret, and express emotions and sentiments. Hence, it may be applied directly in ITRs to interpret students’ emotions and sentiments, which helps the analysis of learning style and knowledge level. In addition to audio-visual sensory data, the ITR may use a series of physiological variables, e.g., from heart monitoring and eye tracking, to gather information about the emotion as well as the level of engagement and attention of the students. Multiple kernel learning and deep convolutional neural network methods may be adopted for detecting students’ sentiment. However, state-of-the-art work in ITRs relies on uni-modal, mostly visual, data to derive affective information. A popular commercial tool for affective computing in ITRs is the Affdex Software Development Kit (SDK), which is adopted in References [31,32] to derive affective information automatically from video records of children’s interactions with an ITR.
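As a rough illustration of the decision-level end of the fusion spectrum discussed above, the following minimal sketch combines per-modality class probabilities with a weighted log-linear rule. It is not taken from any of the reviewed systems: the function names, the action labels, the probabilities, and the reliability weights are all hypothetical, and a real ITR would obtain the per-modality probabilities from trained classifiers.

```python
import math


def normalize(scores):
    """Scale non-negative scores so that they sum to 1."""
    total = sum(scores.values())
    return {label: s / total for label, s in scores.items()}


def late_fusion(modality_probs, weights):
    """Weighted log-linear fusion of per-modality class probabilities.

    modality_probs: {modality: {label: probability}}
    weights: {modality: non-negative reliability weight}
    """
    labels = list(next(iter(modality_probs.values())).keys())
    fused = {}
    for label in labels:
        # Sum weighted log-probabilities; the floor avoids log(0).
        log_score = sum(
            weights[m] * math.log(max(probs[label], 1e-9))
            for m, probs in modality_probs.items()
        )
        fused[label] = math.exp(log_score)
    return normalize(fused)


# Hypothetical classifier outputs for one time window.
visual = {"writing": 0.5, "raising_hand": 0.4, "idle": 0.1}
inertial = {"writing": 0.2, "raising_hand": 0.7, "idle": 0.1}

# Assumption: the camera view is partly occluded, so the inertial
# channel is trusted slightly more than the visual channel.
fused = late_fusion(
    {"visual": visual, "inertial": inertial},
    {"visual": 0.4, "inertial": 0.6},
)
best = max(fused, key=fused.get)
print(best, round(fused[best], 3))
```

Lowering the weight of an occluded or noisy channel is one simple way for a second modality to compensate for a limited field of view; feature-level or pixel-level fusion would instead combine the raw signals before classification.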

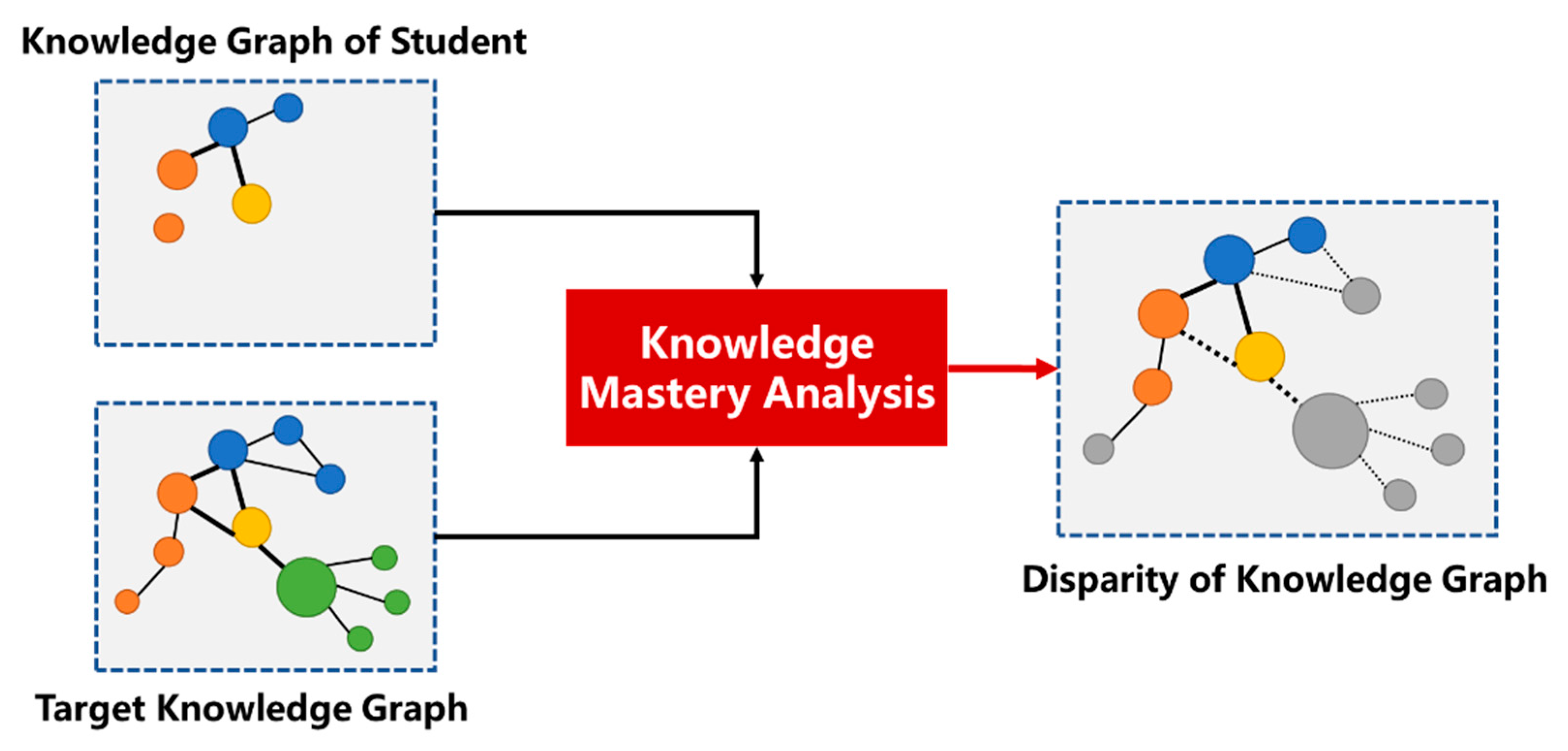

3.1.2. Learning Style and Knowledge Mastery Analysis

3.2. Planning of Teaching Contents and Strategies

3.2.1. Student Model and Teaching Outcome Prediction

3.2.2. Teaching Decision Making

3.3. Action through Multi-Modal Communications

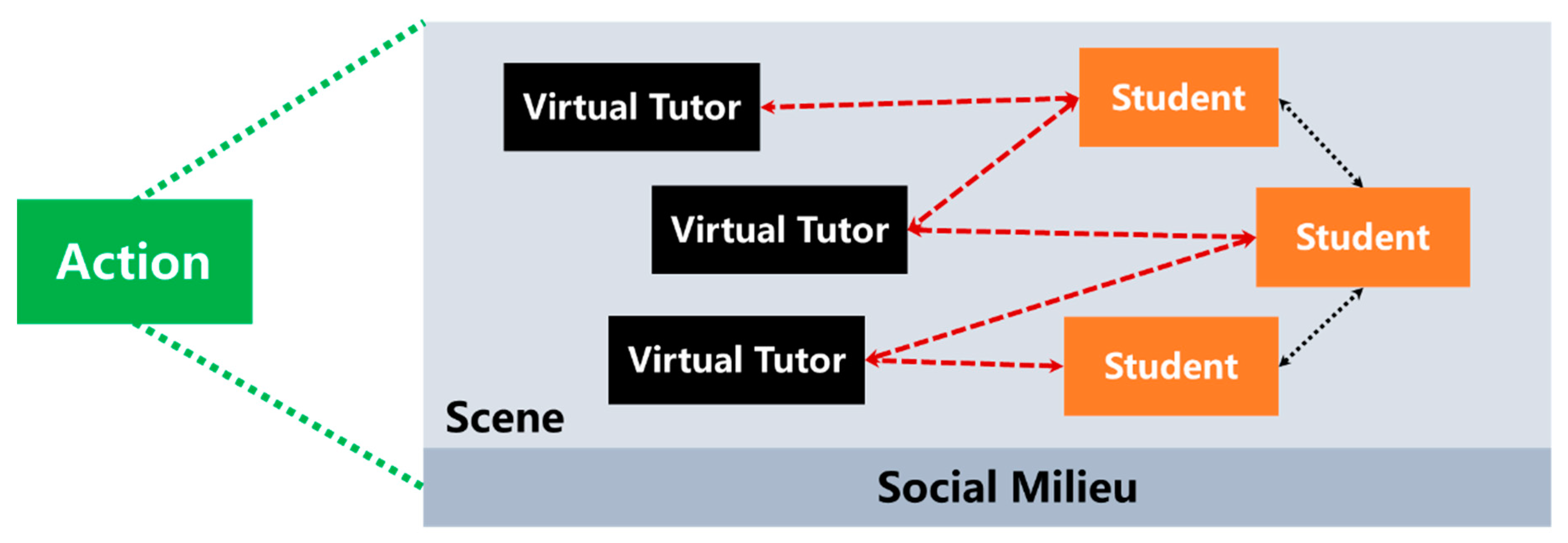

3.3.1. Scene Construction

3.3.2. Multi-Modal Communication Channel

4. Intelligent Tutor Robot Design: A Case Study

4.1. Design Analysis of the Tega System

- Multi-modal perception: The Tega system is equipped with a visual sensor and an acoustic sensor to capture the video and voice streams of the child, along with a tactile sensor to capture the interactions between the child and the virtual game environment over the tablet screen. A real-time facial expression detection and analysis algorithm was implemented to extract the child’s emotions, e.g., smile, brow-furrow, brow-raise, and lip-depress, and to analyze the child’s valence and engagement.

- Planning of teaching contents and strategies: Reinforcement learning (RL) is adopted to learn a personalized affective policy for each child. The input to the RL agent is the child’s valence and engagement status, together with the child’s task action within the virtual game, all acquired from the multi-modal perception module. The critic measures the reward as a weighted sum of the child’s affective performance and task performance. The RL agent is trained online and adapts its choice of verbal and non-verbal actions, which are delivered through the virtual game and the physical robot. The student’s characteristics are thus modelled implicitly in the RL policy.

- Action through multi-modal communications: The scene construction is implemented by a tablet, where a virtual traveling game is synthesized to allow the child to interact and practice with a virtual animated character. The physical robot may perform head up/down, waist-tilt left/right, waist-lean forward/back, full body up/down, and full body left/right, expressing non-verbal gestures to attract and guide the attention of the child, along with the verbal natural language utterances.
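The planning step described in the list above can be sketched as a reward that is a weighted sum of affective and task performance, fed into an online value update. The sketch below is only an illustration under stated assumptions: it uses tabular Q-learning as a stand-in for whatever RL algorithm the Tega system actually uses, it assumes valence, engagement, and task score are already normalized to [0, 1], and the action names, state labels, and default weights are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical set of robot actions (verbal and non-verbal).
ACTIONS = ["encourage", "hint", "celebrate", "wait"]


def reward(valence, engagement, task_score, w_affect=0.5, w_task=0.5):
    """Weighted sum of affective and task performance (inputs in [0, 1])."""
    affect = 0.5 * (valence + engagement)
    return w_affect * affect + w_task * task_score


class AffectivePolicy:
    """Tabular Q-learning over discretized (valence, engagement, task) states."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        """Epsilon-greedy action selection."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state, action, r, next_state):
        """One-step Q-learning update after observing the child's reaction."""
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        target = r + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


# One hypothetical interaction step.
policy = AffectivePolicy()
state = ("low_valence", "low_engagement", "wrong_answer")
next_state = ("high_valence", "high_engagement", "correct_answer")
r = reward(valence=0.8, engagement=0.9, task_score=1.0)
policy.update(state, "hint", r, next_state)
```

Because a separate table is kept per child, the learned values implicitly encode that child's characteristics, which is consistent with the implicit student model noted in the planning item.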

4.2. Potential Enhancement of the Tega System

- Multi-modal perception: The current implementation of the Tega system uses a single visual sensor to capture and analyze the child’s facial expression. It may be extended so that multiple visual sensors, on the physical robot and on the tablet, capture the child’s video streams from multiple perspectives. In this case, pixel-level data fusion may be adopted to synthesize the child’s activities in the 3D environment, where both action recognition and facial expression detection may be used to improve the analysis of the child’s valence and engagement.

- Planning of teaching contents and strategies: The current implementation of reinforcement learning (RL) in the Tega system uses case-by-case training and a fixed weighted reward. Two methods may be adopted to improve the learning and decision-making performance. First, the Tega system may gather information learned from multiple children to generate transferable student models, which may be deployed in a new Tega system to reduce training time. Second, a long-term metric of the child’s engagement may be designed and included in the critic design, which helps to adapt the weights in the reward function and to balance short-term and long-term learning objectives.

- Action through multi-modal communications: The current implementation of the Tega system splits actions between virtual actions in the 2D tablet game and physical actions by the robot, where the 3D actions are limited by the degrees of freedom of the physical robot. Augmented or virtual reality techniques may be adopted to synthesize a virtual robot with more vivid actions and affective expressions in the interactive game.
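The second planning enhancement in the list above, adapting the reward weights with a long-term engagement metric, could take many forms. One minimal sketch, assuming engagement is normalized to [0, 1], tracks an exponential moving average of engagement and shifts weight toward the affective term when that average drops. The class name, the smoothing factor, and the weight bounds are hypothetical choices for illustration, not part of the Tega system.

```python
class AdaptiveRewardWeights:
    """Shift reward weight toward affect when long-term engagement drops."""

    def __init__(self, smoothing=0.05, w_affect_min=0.2, w_affect_max=0.8):
        self.smoothing = smoothing
        self.w_affect_min = w_affect_min
        self.w_affect_max = w_affect_max
        self.long_term_engagement = None  # no observation yet

    def observe(self, engagement):
        """Update an exponential moving average of engagement in [0, 1]."""
        if self.long_term_engagement is None:
            self.long_term_engagement = engagement
        else:
            self.long_term_engagement += self.smoothing * (
                engagement - self.long_term_engagement
            )
        return self.long_term_engagement

    def weights(self):
        """Return (w_affect, w_task); the two weights always sum to 1."""
        if self.long_term_engagement is None:
            e = 1.0  # assume full engagement before any observation
        else:
            e = self.long_term_engagement
        span = self.w_affect_max - self.w_affect_min
        w_affect = self.w_affect_max - span * e
        return w_affect, 1.0 - w_affect


# Hypothetical session in which engagement gradually declines.
adaptive = AdaptiveRewardWeights()
for engagement in [0.9, 0.8, 0.4, 0.3, 0.2]:
    adaptive.observe(engagement)
w_affect, w_task = adaptive.weights()
```

With a small smoothing factor the average reacts slowly, so a single bored moment barely changes the weights while a sustained decline gradually prioritizes re-engaging the child over task progress.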

5. Discussion and Conclusions

- The first phase of an effective perception-planning-action loop of an ITR is to acquire sufficient information about the environment and the students, which calls for multi-modal perception over multiple communication channels. The ITR then leads the students through a variety of activities for external assessment and self-assessment to gather information, and applies statistical machine learning methods to analyze the students’ learning style and knowledge level.

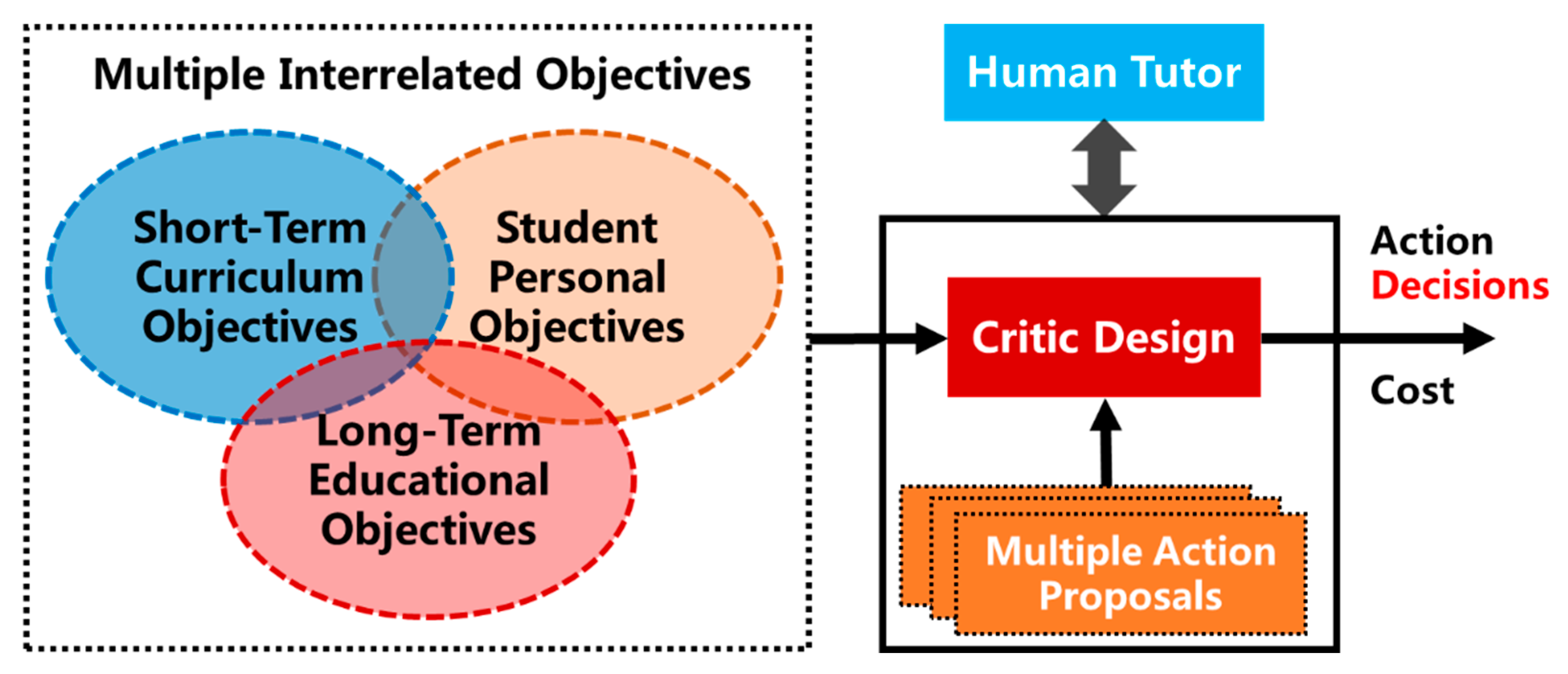

- According to the students’ learning style and knowledge level, the second phase builds a student model for each student or group of students, from which the ITR can predict the short-term and long-term outcomes of delivering certain teaching contents via specific teaching strategies. The ITR may then query the student models with multiple candidate plans of teaching contents and strategies and predict the outcome of each candidate plan. Human tutors and curriculum designers may also intervene in the decision-making process.

- After receiving the planning result, the third phase is carried out by the action module of the ITR, which resolves the action sequence specifying what, when, and how teaching contents are to be delivered to the students. Through scene construction and multi-modal communication channels, the action module needs to form an appropriate scenario for the students and to provide effective channels for transmitting the teaching contents and receiving feedback from the students.

- With the rapid progress of AI techniques, many open research areas may be defined for ITRs with the aid of the perception-planning-action model.

- Perception: Multi-modal data fusion has not been fully researched in the context of ITR design, even though the topic is well investigated and still actively debated in the fields of data mining and robotics. Additionally, little work has compared the effects of the different factors that indicate learning styles. Hence, how to select the appropriate set of variables to predict a student’s learning process remains an open question.

- Planning: In the context of student modelling, model tracing, constraint-based modelling, and machine learning methods each have their own application scenarios and limitations. Recent advances in explainable AI may therefore be incorporated in ITRs. In terms of the decision-making process, limited research has addressed problem formulations that take multiple objectives into account, such as jointly considering the long-term education objective, the short-term curriculum objective, and the student’s personal objective.

- Action: The action module turns out to be the most researched area with state-of-the-art AI techniques. Even so, the application of advanced AI techniques such as generative adversarial networks in ITRs is still at an early stage, and the design of physically embodied robots is far from mature.
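The planning phase summarized above, in which candidate plans are evaluated against a student model and a human may intervene, can be sketched as follows. This is a toy illustration only: the `StudentModel.predict` rule, the mastery values, the strategy names, and the objective weights are hypothetical placeholders for a learned student model and a properly formulated multi-objective problem.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    content: str   # what to teach
    strategy: str  # how to teach it


@dataclass
class StudentModel:
    mastery: dict            # topic -> estimated mastery in [0, 1]
    preferred_strategy: str  # e.g. inferred from learning-style analysis

    def predict(self, plan):
        """Return hypothetical (short_term_gain, long_term_gain) for a plan."""
        gap = 1.0 - self.mastery.get(plan.content, 0.0)
        fit = 1.0 if plan.strategy == self.preferred_strategy else 0.6
        short_term = gap * fit
        long_term = 0.5 * gap * fit + 0.5 * fit
        return short_term, long_term


def choose_plan(model, plans, w_short=0.5, w_long=0.5, veto=None):
    """Score each candidate plan; `veto` lets a human tutor exclude plans."""
    allowed = [p for p in plans if veto is None or not veto(p)]

    def score(plan):
        short_term, long_term = model.predict(plan)
        return w_short * short_term + w_long * long_term

    return max(allowed, key=score)


model = StudentModel({"fractions": 0.8, "decimals": 0.3}, "visual")
plans = [
    Plan("fractions", "visual"),
    Plan("decimals", "verbal"),
    Plan("decimals", "visual"),
]
best = choose_plan(model, plans)
# A tutor who rules out visual material leaves only the verbal plan.
best_without_visual = choose_plan(model, plans, veto=lambda p: p.strategy == "visual")
```

Keeping the prediction step separate from the scoring step makes it possible to swap in a different student model or a different trade-off between short-term and long-term objectives without touching the rest of the loop.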

Author Contributions

Funding

Conflicts of Interest

References

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- IFR, Statistical Department. World Robotics Survey; IFR: Frankfurt, Germany, 2008.

- Woolf, B.P. Building Intelligent Interactive Tutors: Student-Centered Strategies for Revolutionizing E-Learning; Morgan Kaufmann: Burlington, MA, USA, 2008.

- Truong, H.M. Integrating Learning Styles and Adaptive E-Learning System: Current Developments, Problems and Opportunities. Comput. Hum. Behav. 2016, 55, 1185–1193.

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social Robots for Education: A Review. Sci. Robot. 2018, 3, eaat5954.

- Lopez, T.; Chevaillier, P.; Gouranton, V.; Evrard, P.; Nouviale, F.; Barange, M.; Bouville, R.; Arnaldi, B. Collaborative virtual training with physical and communicative autonomous agents. Comput. Anim. Virtual Worlds 2014, 25, 485–493.

- Johnson, W.L.; Lester, J.C. Face-to-face interaction with pedagogical agents, twenty years later. Int. J. Artif. Intell. Educ. 2016, 26, 25–36.

- Papamitsiou, Z.; Economides, A.A. Learning analytics and educational data mining in practice: A systematic literature review of empirical evidence. J. Educ. Technol. Soc. 2014, 17, 49–64.

- Wilson, C.; Scott, B. Adaptive systems in education: A review and conceptual unification. Int. J. Inf. Learn. Technol. 2017, 34, 2–19.

- Mubin, O.; Stevens, C.J.; Shahid, S.; Al Mahmud, A.; Dong, J.J. A Review of the Applicability of Robots in Education. Technol. Educ. Learn. 2013, 1.

- Dewey, J. My Pedagogic Creed. Sch. J. 1897, 54, 77–80.

- Schwab, J.J. Science, Curriculum, and Liberal Education; University of Chicago Press: Chicago, IL, USA, 1978.

- Novak, J.D.; Gowin, D.B. Learning How to Learn; Cambridge University Press: Boston, MA, USA, 1984.

- Howard, T.C.; Aleman, G.R. Teacher capacity for diverse learners: What do teachers need to know? In Handbook of Research on Teacher Education: Enduring Questions in Changing Contexts, 3rd ed.; Cochran-Smith, M., Feiman-Nemser, S., McIntyre, D.J., Demers, K.E., Eds.; Routledge: Abingdon, UK, 2008; pp. 157–174.

- Krim, J.S. Critical Reflection and Teacher Capacity: The Secondary Science Pre-Service Teacher Population. Ph.D. Thesis, Montana State University, Bozeman, MT, USA, July 2009.

- Shulman, L.S. Knowledge and teaching: Foundations of the new reform. Harv. Educ. Rev. 1987, 57, 1–23.

- Collinson, V. Reaching Students: Teachers Ways of Knowing; Corwin Press, Inc.: Thousand Oaks, CA, USA, 1996.

- McDiarmid, G.W.; Clevenger-Bright, M. Rethinking teacher capacity. In Handbook of Research on Teacher Education: Enduring Questions in Changing Contexts, 3rd ed.; Cochran-Smith, M., Feiman-Nemser, S., McIntyre, D.J., Demers, K.E., Eds.; Routledge: Abingdon, UK, 2008; pp. 134–156.

- Turner-Bisset, R. Expert Teaching: Knowledge and Pedagogy to Lead the Profession; David Fulton Publishers: London, UK, 2001.

- Wenger, E. Artificial Intelligence and Tutoring Systems: Computational and Cognitive Approaches to the Communication of Knowledge; Morgan Kaufmann: Burlington, MA, USA, 2014.

- Grossman, P.L. The Making of a Teacher: Teacher Knowledge and Teacher Education; Teachers College Press: New York, NY, USA, 1990.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach; Pearson Education Limited: Kuala Lumpur, Malaysia, 2016.

- Ambrose, S.A.; Bridges, M.W.; DiPietro, M.; Lovett, M.C.; Norman, M.K. How Learning Works: Seven Research-Based Principles for Smart Teaching; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Hall, D.L.; Llinas, J. An introduction to multi sensor data fusion. Proc. IEEE 2002, 85, 6–23.

- Li, S.; Kang, X.; Fang, L.; Hu, J.; Yin, H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112.

- Ritschel, H. Socially-aware reinforcement learning for personalized human-robot interaction. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 1775–1777.

- Tsiami, A.; Koutras, P.; Efthymiou, N.; Filntisis, P.P.; Potamianos, G.; Maragos, P. Multi3: Multi-Sensory Perception System for Multi-Modal Child Interaction with Multiple Robots. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–26 May 2018; pp. 1–8.

- Chen, C.; Jafari, R.; Kehtarnavaz, N. A survey of depth and inertial sensor fusion for human action recognition. Multimed. Tools Appl. 2017, 76, 4405–4425.

- Kose, H.; Akalin, N.; Yorganci, R.; Ertugrul, B.S.; Kivrak, H.; Kavak, S.; Ozkul, A.; Gurpinar, C.; Uluer, P.; Ince, G. iSign: An architecture for humanoid assisted sign language tutoring. In Intelligent Assistive Robots; Springer: Cham, Switzerland, 2015; pp. 157–184.

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125.

- Spaulding, S.; Gordon, G.; Breazeal, C. Affect-aware student models for robot tutors. In Proceedings of the International Conference on Autonomous Agents & Multiagent Systems, Singapore, 9–13 May 2016; pp. 864–872.

- Park, H.W.; Gelsomini, M.; Lee, J.J.; Breazeal, C. Telling stories to robots: The effect of backchanneling on a child’s storytelling. In Proceedings of the 12th ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2016; pp. 100–108.

- Sharma, S.; Kiros, R.; Salakhutdinov, R. Action Recognition Using Visual Attention. 2015. Available online: https://arxiv.org/abs/1511.04119 (accessed on 12 December 2018).

- Lu, J.; Xiong, C.; Parikh, D.; Socher, R. Knowing when to look: Adaptive attention via a visual sentinel for image captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 6, p. 2.

- Felder, R.M.; Silverman, L.K. Learning and teaching styles in engineering education. Eng. Educ. 1988, 78, 674–681.

- Chou, C.Y.; Tseng, S.F.; Chih, W.C.; Chen, Z.H.; Chao, P.Y.; Lai, K.R.; Chan, C.L.; Yu, L.C.; Lin, Y.L. Open student models of core competencies at the curriculum level: Using learning analytics for student reflection. IEEE Trans. Emerg. Top. Comput. 2015, 5, 32–44.

- Epp, C.D.; Bull, S. Uncertainty Representation in Visualizations of Learning Analytics for Learners: Current Approaches and Opportunities. IEEE Trans. Learn. Technol. 2015, 8, 242–260.

- Amadieu, F.; van Gog, T.; Paas, F.; Tricot, A.; Mariné, C. Effects of prior knowledge and concept-map structure on disorientation, cognitive load, and learning. Learn. Instr. 2009, 19, 376–386.

- Ehrlinger, L.; Wöß, W. Towards a Definition of Knowledge Graphs. In Proceedings of the 12th International Conference on Semantic Systems (SEMANTiCS 2016), Leipzig, Germany, 12–15 September 2016.

- Battaglia, P.W.; Hamrick, J.B.; Bapst, V.; Sanchez-Gonzalez, A.; Zambaldi, V.; Malinowski, M.; Tacchetti, A.; Raposo, D.; Santoro, A.; Faulkner, R. Relational Inductive Biases, Deep Learning, and Graph Networks. arXiv 2018, arXiv:1806.01261.

- Zhang, D.; Yin, J.; Zhu, X.; Zhang, C. Network representation learning: A survey. IEEE Trans. Big Data 2018, arXiv:1801.05852.

- Zhang, B.; Chen, B.; Yang, J.; Yang, W.; Zhang, J. An Unified Intelligence-Communication Model for Multi-Agent System Part-I: Overview. arXiv 2018, arXiv:1811.09920.

- Li, Q.; Lau, R.W.; Popescu, E.; Rao, Y.; Leung, H.; Zhu, X. Social Media for Ubiquitous Learning and Adaptive Tutoring [Guest editors’ introduction]. IEEE MultiMed. 2016, 23, 18–24.

- Mitrovic, A. Fifteen years of constraint-based tutors: What we have achieved and where we are going. User Model. User-Adapt. Interact. 2012, 22, 39–72.

- Abyaa, A.; Idrissi, M.K.; Bennani, S. Learner modelling: Systematic review of the literature from the last 5 years. Educ. Technol. Res. Dev. 2019.

- Piech, C.; Bassen, J.; Huang, J.; Ganguli, S.; Sahami, M.; Guibas, L.J.; Sohl-Dickstein, J. Deep knowledge tracing. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; pp. 505–513.

- Badaracco, M.; Martínez, L. A fuzzy linguistic algorithm for adaptive test in Intelligent Tutoring System based on competences. Expert Syst. Appl. 2013, 40, 3073–3086.

- Uglev, V. Implementation of Decision-making Methods in Intelligent Automated Educational System Focused on Complete Individualization in Learning. AASRI Procedia 2014, 6, 66–72.

- Maclellan, C.J.; Harpstead, E.; Patel, R.; Koedinger, K.R. The Apprentice Learner architecture: Closing the loop between learning theory and educational data. In Proceedings of the 9th International Conference on Educational Data Mining, Raleigh, NC, USA, 29 June–2 July 2016; pp. 151–158.

- Cao, S.; Qin, Y.; Zhao, L.; Shen, M. Modeling the development of vehicle lateral control skills in a cognitive architecture. Transp. Res. Part F Traffic Psychol. Behav. 2015, 32, 1–10.

- Bremgartner, V.; de Magalhães Netto, J.F.; de Menezes, C.S. Adaptation resources in virtual learning environments under constructivist approach: A systematic review. In Proceedings of the 2015 IEEE Frontiers in Education Conference (FIE), Washington, DC, USA, 21–24 October 2015; pp. 1–8.

- Ketelhut, D.J.; Dede, C.; Clarke, J.; Nelson, B. Studying Situated Learning in a Multi-User Virtual Environment. In Assessment of Problem Solving Using Simulations; Baker, E., Dickieson, J., Wulfeck, W., O’Neil, H., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2007; pp. 37–58.

- Potkonjak, V.; Gardner, M.; Callaghan, V.; Mattila, P.; Guetl, C.; Petrović, V.M.; Jovanović, K. Virtual laboratories for education in science, technology, and engineering: A review. Comput. Educ. 2016, 95, 309–327.

- Vaughan, N.; Gabrys, B.; Dubey, V.N. An overview of self-adaptive technologies within virtual reality training. Comput. Sci. Rev. 2016, 22, 65–87.

- Bacca, J.; Baldiris, S.; Fabregat, R.; Graf, S. Augmented reality trends in education: A systematic review of research and applications. Educ. Technol. Soc. 2014, 17, 133–149.

- Radu, I. Augmented reality in education: A meta-review and cross-media analysis. Pers. Ubiquitous Comput. 2014, 18, 1533–1543.

- Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educ. Res. Rev. 2017, 20, 1–11.

- Harley, J.M.; Poitras, E.G.; Jarrell, A.; Duffy, M.C.; Lajoie, S.P. Comparing virtual and location-based augmented reality mobile learning: Emotions and learning outcomes. Educ. Technol. Res. Dev. 2016, 64, 359–388.

- Robb, A.; Kopper, R.; Ambani, R.; Qayyum, F.; Lind, D.; Su, L.M.; Lok, B. Leveraging virtual humans to effectively prepare learners for stressful interpersonal experiences. IEEE Trans. Vis. Comput. Graph. 2013, 19, 662–670.

- Conradi, E.; Kavia, S.; Burden, D.; Rice, A.; Woodham, L.; Beaumont, C.; Savin-Baden, M.; Poulton, T. Virtual patients in a virtual world: Training paramedic students for practice. Med. Teach. 2009, 31, 713–720.

- Wijewickrema, S.; Copson, B.; Ma, X.; Briggs, R.; Bailey, J.; Kennedy, G.; O’Leary, S. Development and Validation of a Virtual Reality Tutor to Teach Clinically Oriented Surgical Anatomy of the Ear. In Proceedings of the 31st International Symposium on Computer-Based Medical Systems, Karlstad, Sweden, 18–21 June 2018; pp. 12–17.

- Gavish, N.; Gutiérrez, T.; Webel, S.; Rodríguez, J.; Peveri, M.; Bockholt, U.; Tecchia, F. Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact. Learn. Environ. 2015, 23, 778–798.

- Hassani, K.; Nahvi, A.; Ahmadi, A. Design and implementation of an intelligent virtual environment for improving speaking and listening skills. Interact. Learn. Environ. 2016, 24, 252–271.

- Patterson, R.L.; Patterson, D.C.; Robertson, A.M. Seeing Numbers Differently: Mathematics in the Virtual World. In Emerging Tools and Applications of Virtual Reality in Education; IGI Global: Hershey, PA, USA, 2016; pp. 186–214.

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8789–8797.

- Nojavanasghari, B.; Huang, Y.; Khan, S. Interactive Generative Adversarial Networks for Facial Expression Generation in Dyadic Interactions. arXiv 2018, arXiv:1801.09092.

- Press, O.; Bar, A.; Bogin, B.; Berant, J.; Wolf, L. Language generation with recurrent generative adversarial networks without pre-training. arXiv 2017, arXiv:1706.01399.

- Shetty, R.; Rohrbach, M.; Hendricks, A.L.; Fritz, M.; Schiele, B. Speaking the same language: Matching machine to human captions by adversarial training. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4135–4144.

- Leyzberg, D.; Spaulding, S.; Toneva, M.; Scassellati, B. The physical presence of a robot tutor increases cognitive learning gains. In Proceedings of the Annual Meeting of the Cognitive Science Society, Sapporo, Japan, 1–4 August 2012; p. 34.

- Leyzberg, D.; Spaulding, S.; Scassellati, B. Personalizing robot tutors to individuals’ learning differences. In Proceedings of the 2014 ACM/IEEE international conference on Human-robot interaction, Bielefeld, Germany, 3–6 March 2014; pp. 423–430.

- Gordon, G.; Breazeal, C.; Engel, S. Can children catch curiosity from a social robot? In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 2–5 March 2015; pp. 91–98.

- Gordon, G.; Spaulding, S.; Westlund, J.K.; Lee, J.J.; Plummer, L.; Martinez, M.; Das, M.; Breazeal, C. Affective Personalization of a Social Robot Tutor for Children’s Second Language Skills. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 3951–3957.

- Blancas-Muñoz, M.; Vouloutsi, V.; Zucca, R.; Mura, A.; Verschure, P.F. Hints vs Distractions in Intelligent Tutoring Systems: Looking for the proper type of help. arXiv 2018, arXiv:1806.07806.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, J.; Zhang, B. Artificial Intelligence in Intelligent Tutoring Robots: A Systematic Review and Design Guidelines. Appl. Sci. 2019, 9, 2078. https://doi.org/10.3390/app9102078
