Disentangled Feature Learning for Noise-Invariant Speech Enhancement

Abstract

1. Introduction

- We modify the domain adversarial training (DAT) framework to solve the speech enhancement task in a supervised manner. The proposed model outperforms the baseline models in speech enhancement under both matched and mismatched noise conditions.

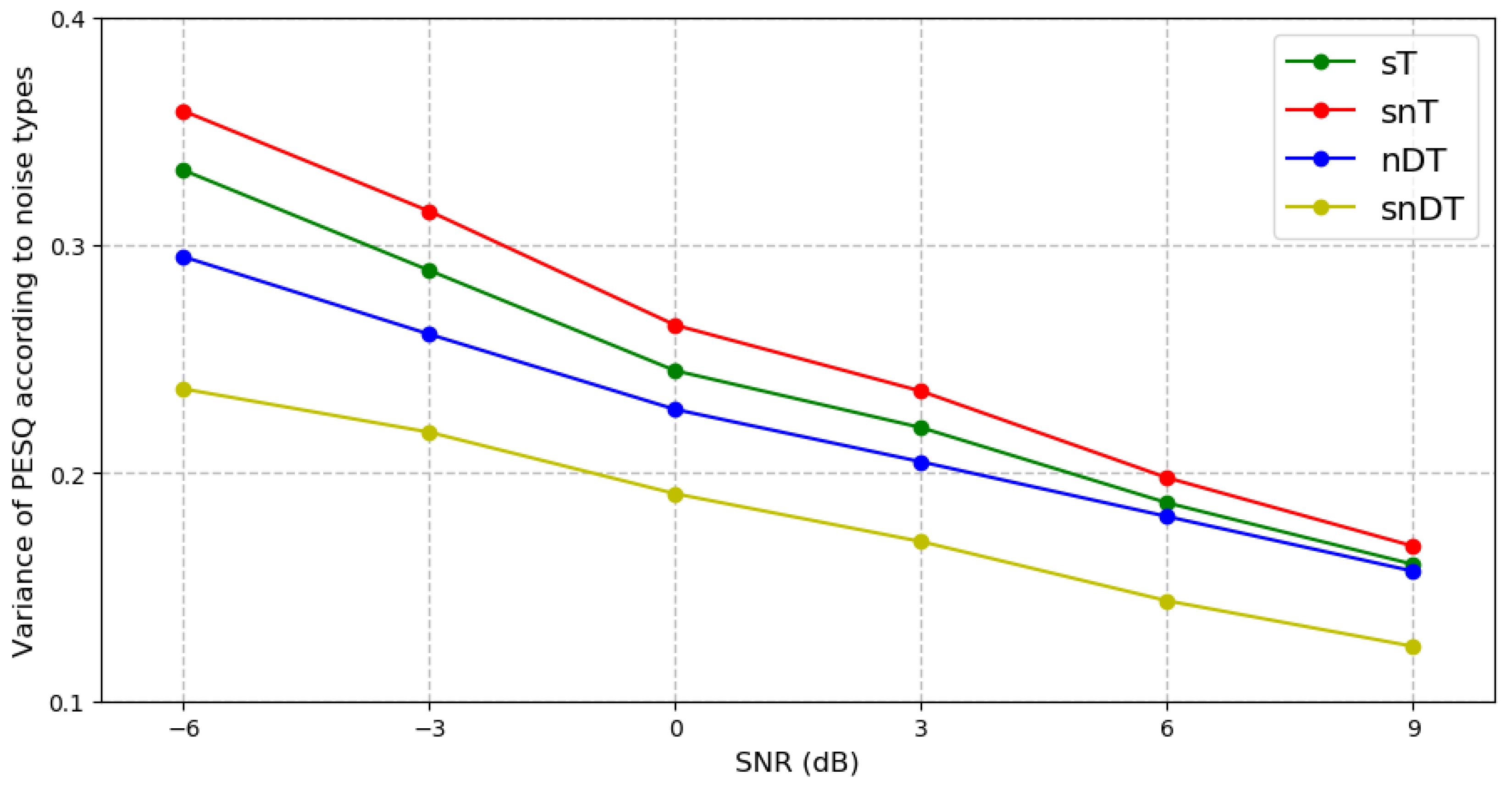

- By reducing the performance gap among different noise types, we show that our method is more robust to noise variability.

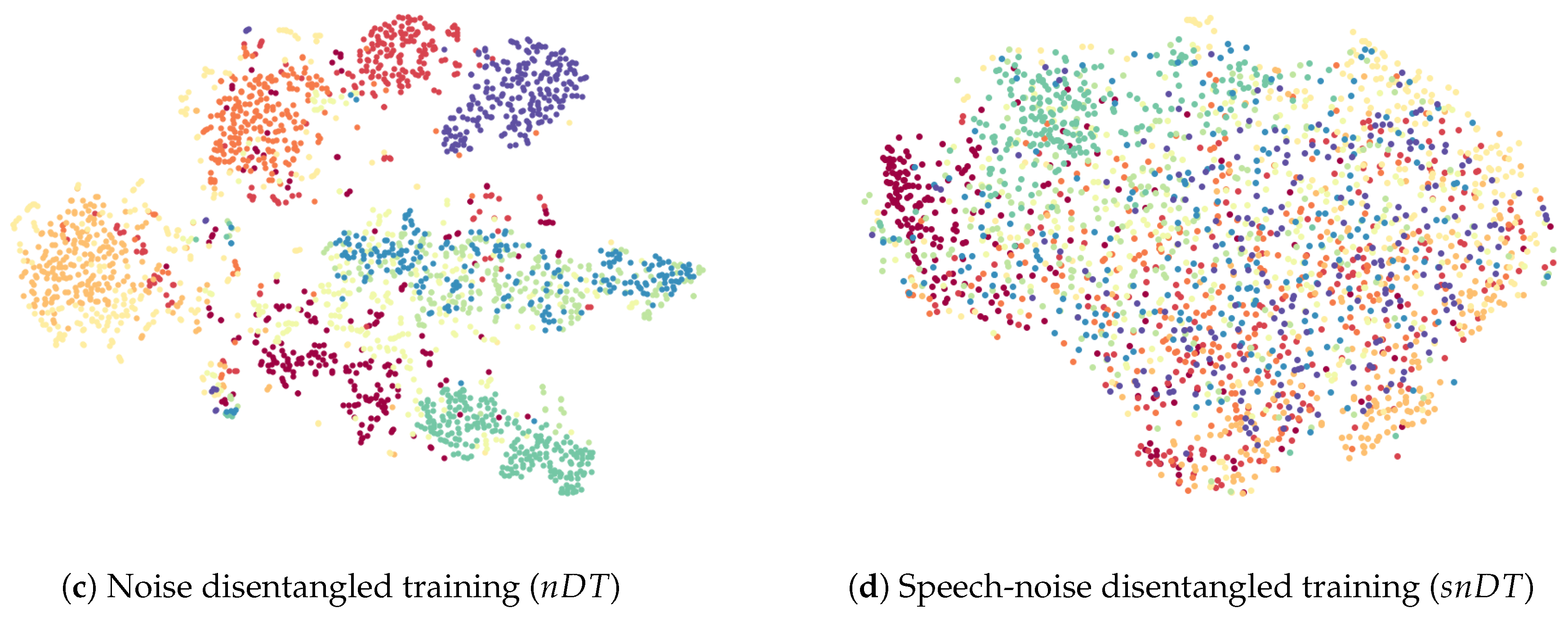

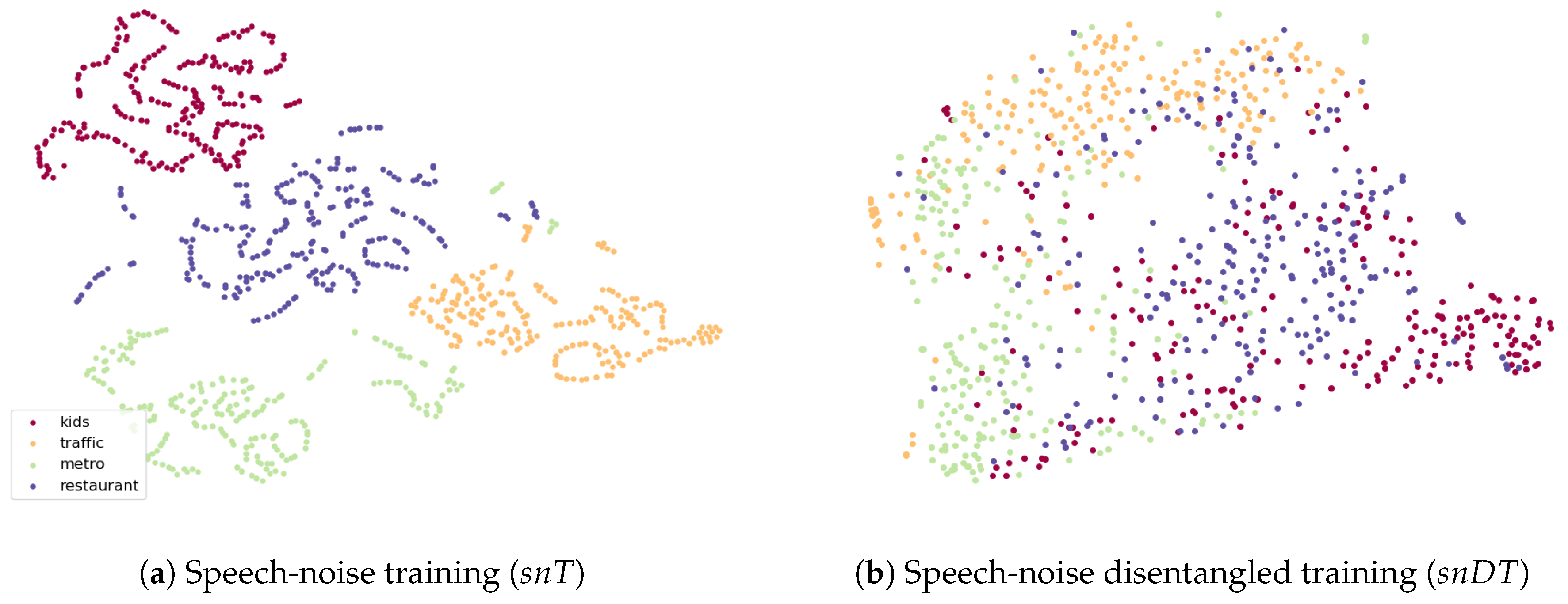

- By visualizing feature representations, we demonstrate that our model successfully disentangles speech and noise latent features.
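For orientation, the generic DAT objective that the first contribution builds on is usually written as a saddle-point problem. The symbols below are the conventional ones from the DAT literature and are assumptions here, not this paper's notation: $\theta_f$ are the feature-extractor parameters, $\theta_y$ the main-task parameters, $\theta_d$ the domain-classifier parameters, and $\lambda$ a trade-off weight. The modified, supervised objective proposed in this paper is not reproduced here.

```latex
\begin{aligned}
E(\theta_f, \theta_y, \theta_d) &= \mathcal{L}_y(\theta_f, \theta_y) - \lambda\, \mathcal{L}_d(\theta_f, \theta_d), \\
(\hat{\theta}_f, \hat{\theta}_y) &= \arg\min_{\theta_f,\, \theta_y} E(\theta_f, \theta_y, \hat{\theta}_d), \\
\hat{\theta}_d &= \arg\max_{\theta_d} E(\hat{\theta}_f, \hat{\theta}_y, \theta_d).
\end{aligned}
```

In this generic form the feature extractor minimizes the main-task loss while maximizing the domain-classification loss, which pushes the features toward domain invariance.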

2. Related Work

2.1. Masking-Based Speech Enhancement

2.2. Domain Adversarial Training

3. Proposed Method

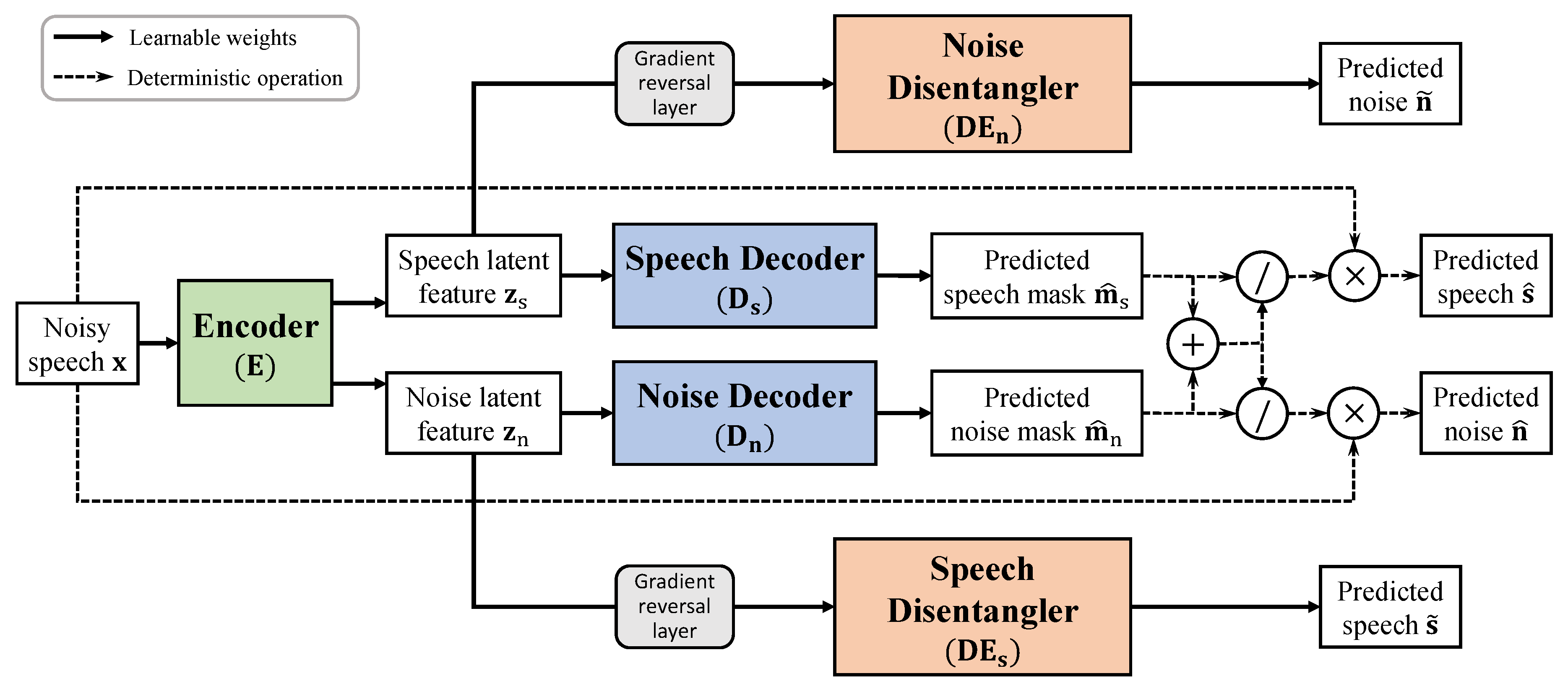

3.1. Neural Network Architecture

3.2. Training Objectives

3.3. Adversarial Training for Disentangled Features

4. Experiments and Results

4.1. Dataset

4.2. Feature Extraction

4.3. Network Setup

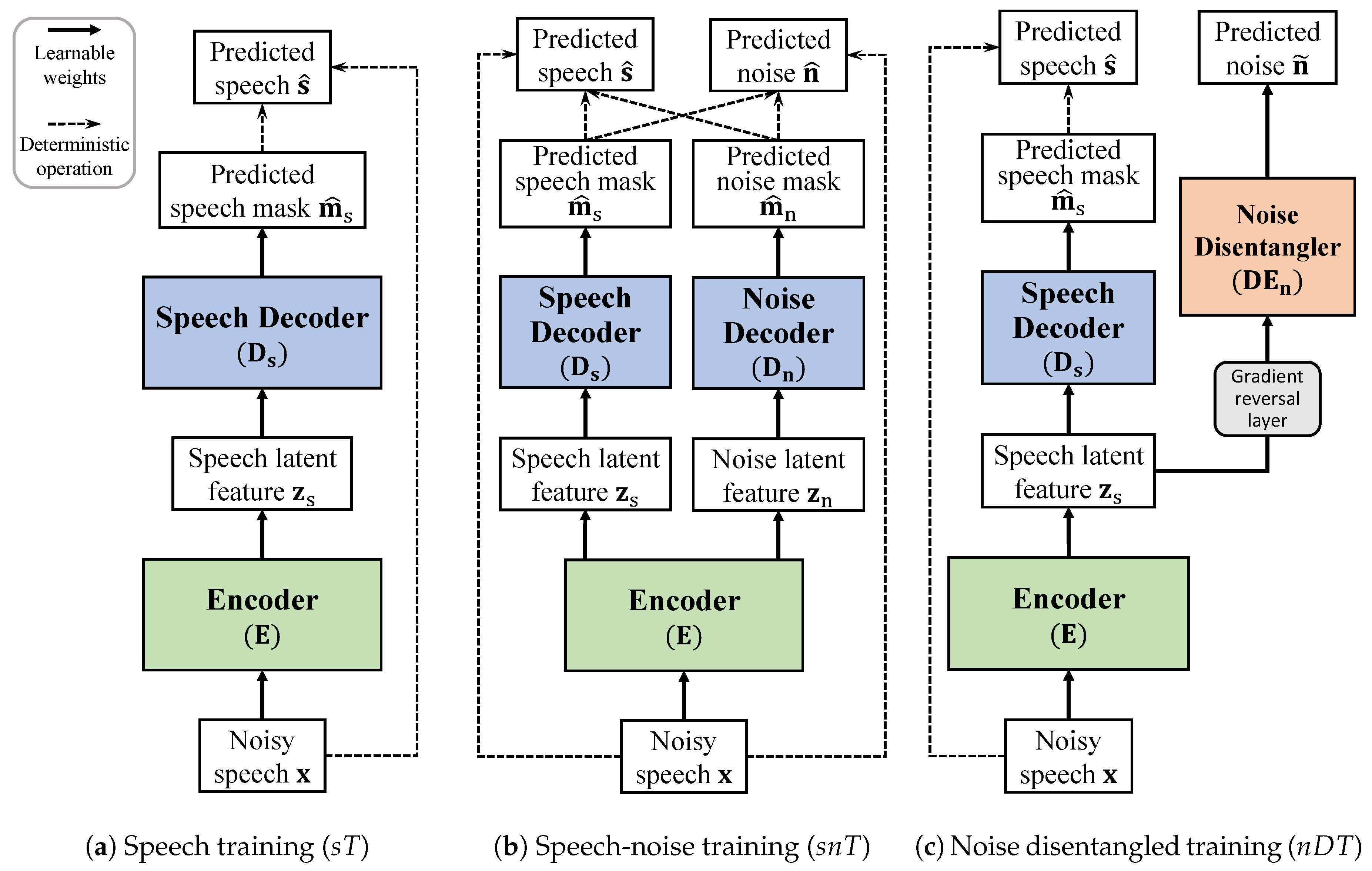

- The noise disentangled training model, as shown in Figure 3c, was trained so that the noise components were disentangled from the speech latent features without using noise latent features.
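A minimal sketch of the gradient reversal idea commonly used in adversarial training of this kind. It assumes, which the text above does not state, that noise components are removed from the speech latent features by reversing the gradient of a noise classifier before it reaches the encoder. `GradientReversal`, `encoder_gradient`, and `lam` are hypothetical names for illustration, and gradients are plain Python lists rather than framework tensors.

```python
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        # lam is an assumed trade-off weight, not a value taken from the paper
        self.lam = lam

    def forward(self, features):
        # Features pass through unchanged
        return list(features)

    def backward(self, grad_output):
        # The sign flip makes the encoder ascend the noise-classifier loss
        return [-self.lam * g for g in grad_output]


def encoder_gradient(grad_enhance, grad_noise_cls, grl):
    """Combine the enhancement gradient with the reversed noise-classifier gradient."""
    reversed_grad = grl.backward(grad_noise_cls)
    return [ge + gr for ge, gr in zip(grad_enhance, reversed_grad)]


grl = GradientReversal(lam=0.5)
latent = [0.2, -1.0, 0.7]
same = grl.forward(latent)  # forward pass leaves the latent features untouched
total = encoder_gradient([0.1, 0.2, 0.3], [1.0, -2.0, 0.0], grl)
print(same, total)
```

With this arrangement the noise classifier still learns to identify the noise type, while the encoder receives the opposite signal and is discouraged from keeping noise-type information in the speech latent features.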

4.4. Objective Measures

4.5. Performance Evaluation

4.6. Subjective Test Results

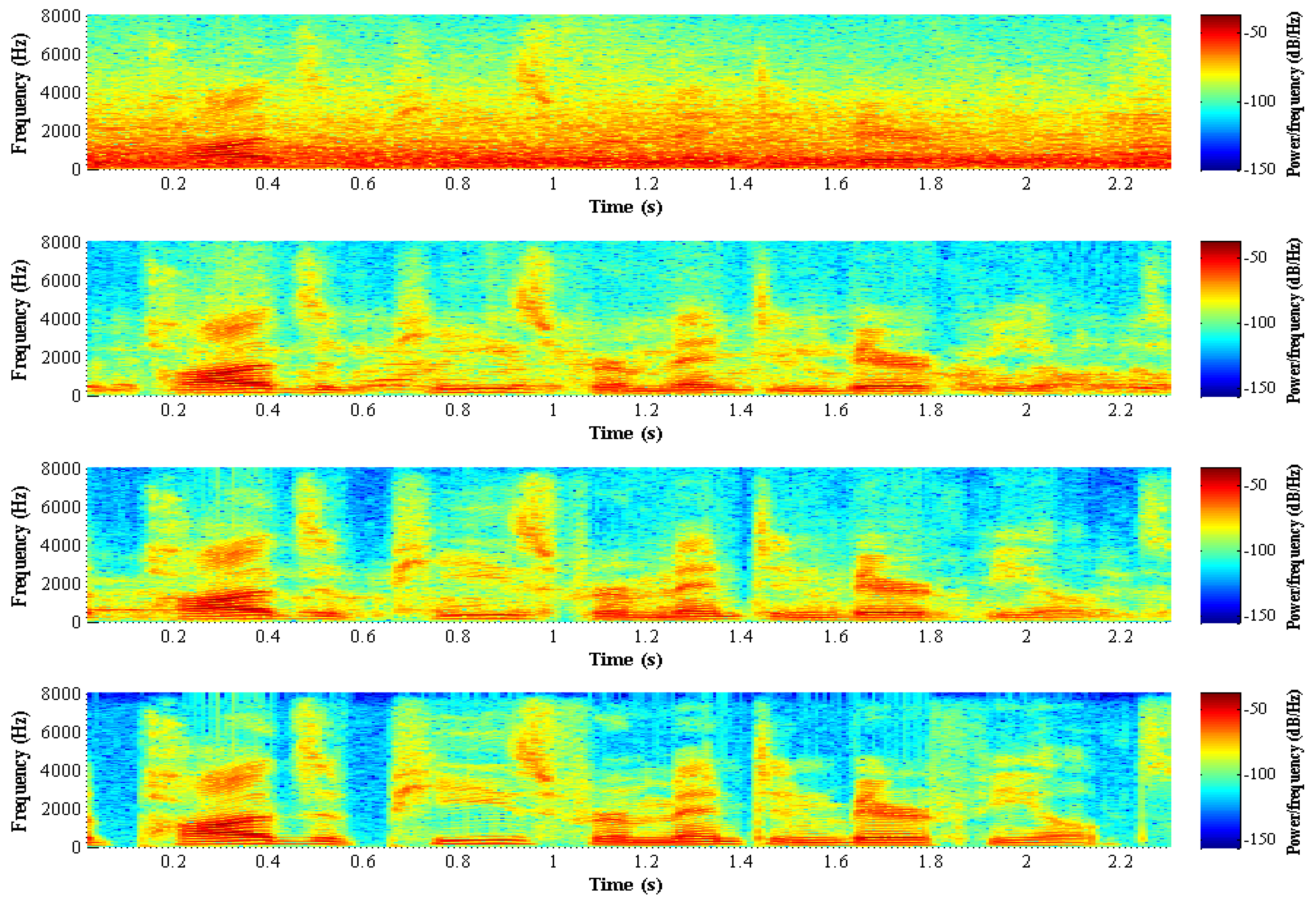

4.7. Analysis of Noise-Invariant Speech Enhancement

4.8. Disentangled Feature Representations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| (a) PESQ | | | | | | (b) segSNR | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SNR (dB) | | | | | | SNR (dB) | | | | | |
| −6 | 1.53 | 2.00 | 2.12 | 2.06 | 2.22 | −6 | −6.87 | 1.49 | 3.18 | 2.85 | 3.53 |
| −3 | 1.71 | 2.23 | 2.35 | 2.30 | 2.45 | −3 | −5.39 | 3.06 | 4.31 | 3.93 | 4.92 |
| 0 | 1.90 | 2.44 | 2.57 | 2.52 | 2.66 | 0 | −3.65 | 4.57 | 5.58 | 5.27 | 6.29 |
| 3 | 2.11 | 2.64 | 2.76 | 2.72 | 2.85 | 3 | −1.80 | 6.08 | 7.03 | 6.79 | 7.86 |
| 6 | 2.33 | 2.83 | 2.95 | 2.90 | 3.02 | 6 | 0.32 | 7.41 | 8.33 | 8.14 | 9.20 |
| 9 | 2.54 | 2.99 | 3.10 | 3.05 | 3.17 | 9 | 2.57 | 8.64 | 9.56 | 9.38 | 10.43 |
| Aver. | 2.02 | 2.52 | 2.64 | 2.59 | 2.73 | Aver. | −2.47 | 5.21 | 6.33 | 6.06 | 7.04 |

| (c) eSTOI | | | | | | (d) SDR | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SNR (dB) | | | | | | SNR (dB) | | | | | |
| −6 | 0.44 | 0.56 | 0.59 | 0.57 | 0.61 | −6 | −5.97 | 7.07 | 7.96 | 7.22 | 8.75 |
| −3 | 0.52 | 0.64 | 0.67 | 0.65 | 0.69 | −3 | −3.11 | 9.63 | 10.42 | 9.85 | 11.10 |
| 0 | 0.59 | 0.71 | 0.74 | 0.73 | 0.76 | 0 | −0.17 | 11.92 | 12.67 | 12.16 | 13.21 |
| 3 | 0.67 | 0.77 | 0.80 | 0.79 | 0.82 | 3 | 2.80 | 14.06 | 14.71 | 14.27 | 15.14 |
| 6 | 0.74 | 0.82 | 0.84 | 0.84 | 0.86 | 6 | 5.78 | 15.81 | 16.42 | 16.03 | 16.81 |
| 9 | 0.80 | 0.86 | 0.88 | 0.87 | 0.89 | 9 | 8.78 | 17.34 | 17.94 | 17.56 | 18.24 |
| Aver. | 0.63 | 0.73 | 0.75 | 0.74 | 0.77 | Aver. | 1.35 | 12.64 | 13.35 | 12.85 | 13.88 |
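The average ("Aver.") rows in the table above appear to be arithmetic means over the six SNR levels. The sketch below checks this for the PESQ panel only, using the values exactly as printed. Columns are referred to by position because their labels are not shown, and `column_means` is a hypothetical helper name.

```python
# PESQ values copied from panel (a) of the table above: one list per SNR row
# (-6, -3, 0, 3, 6, 9 dB), one entry per column in the order printed.
pesq_rows = [
    [1.53, 2.00, 2.12, 2.06, 2.22],
    [1.71, 2.23, 2.35, 2.30, 2.45],
    [1.90, 2.44, 2.57, 2.52, 2.66],
    [2.11, 2.64, 2.76, 2.72, 2.85],
    [2.33, 2.83, 2.95, 2.90, 3.02],
    [2.54, 2.99, 3.10, 3.05, 3.17],
]
reported_avg = [2.02, 2.52, 2.64, 2.59, 2.73]


def column_means(rows):
    """Arithmetic mean of each column across all rows."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


means = column_means(pesq_rows)
# Accept half a unit in the last printed digit, plus a little float slack
matches = [abs(m - r) <= 0.005 + 1e-9 for m, r in zip(means, reported_avg)]
print([round(m, 2) for m in means], matches)
```

The same check can be repeated for the other panels by swapping in their rows and reported averages.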

| (a) PESQ | | | | | | (b) segSNR | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SNR (dB) | | | | | | SNR (dB) | | | | | |
| −6 | 1.33 | 1.68 | 1.77 | 1.79 | 1.90 | −6 | −6.59 | −0.86 | 1.78 | 1.70 | 1.90 |
| −3 | 1.55 | 1.93 | 2.02 | 2.02 | 2.13 | −3 | −5.08 | 0.81 | 2.85 | 2.72 | 2.81 |
| 0 | 1.77 | 2.16 | 2.25 | 2.27 | 2.35 | 0 | −3.35 | 2.58 | 3.50 | 3.47 | 4.04 |
| 3 | 1.98 | 2.38 | 2.46 | 2.44 | 2.55 | 3 | −1.48 | 4.16 | 4.97 | 4.89 | 5.69 |
| 6 | 2.20 | 2.59 | 2.67 | 2.65 | 2.75 | 6 | 0.64 | 5.82 | 6.64 | 6.60 | 7.44 |
| 9 | 2.41 | 2.78 | 2.86 | 2.83 | 2.93 | 9 | 2.91 | 7.29 | 8.11 | 8.08 | 8.97 |
| Aver. | 1.88 | 2.25 | 2.34 | 2.33 | 2.43 | Aver. | −2.16 | 3.30 | 4.64 | 4.58 | 5.14 |

| (c) eSTOI | | | | | | (d) SDR | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| SNR (dB) | | | | | | SNR (dB) | | | | | |
| −6 | 0.39 | 0.46 | 0.48 | 0.48 | 0.51 | −6 | −6.00 | 1.96 | 2.44 | 2.20 | 2.59 |
| −3 | 0.47 | 0.55 | 0.58 | 0.57 | 0.60 | −3 | −3.11 | 4.89 | 5.37 | 5.21 | 5.57 |
| 0 | 0.55 | 0.63 | 0.66 | 0.66 | 0.68 | 0 | −0.17 | 7.89 | 8.37 | 8.26 | 8.61 |
| 3 | 0.63 | 0.71 | 0.74 | 0.73 | 0.75 | 3 | 2.79 | 10.50 | 10.92 | 10.78 | 11.17 |
| 6 | 0.71 | 0.77 | 0.80 | 0.80 | 0.81 | 6 | 5.78 | 13.01 | 13.41 | 13.24 | 13.66 |
| 9 | 0.78 | 0.82 | 0.84 | 0.84 | 0.86 | 9 | 8.78 | 15.11 | 15.52 | 15.37 | 15.82 |
| Aver. | 0.59 | 0.66 | 0.68 | 0.68 | 0.70 | Aver. | 1.34 | 8.89 | 9.34 | 9.18 | 9.57 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bae, S.H.; Choi, I.; Kim, N.S. Disentangled Feature Learning for Noise-Invariant Speech Enhancement. Appl. Sci. 2019, 9, 2289. https://doi.org/10.3390/app9112289
