Hyperspectral Image Inpainting Based on Robust Spectral Dictionary Learning

Abstract

1. Introduction

- Using the theory of dictionary learning and sparse representation, we model HSI inpainting as the reconstruction of sparse signals from incomplete observations. To improve the efficiency of dictionary learning, and unlike block-loading (batch) algorithms, we train the spectral dictionary progressively, processing a small set of training pixel spectral vectors at each iteration.

- To make the dictionary update more robust to noise and outliers, and thereby improve HSI inpainting, we use a loss that is more robust than the conventional squared ℓ2 error as the data-fitting term of the objective function during dictionary learning.
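
To illustrate why the choice of data-fitting term matters, the following minimal NumPy sketch compares an ordinary least-squares (squared ℓ2) fit with an ℓ1 fit solved by iteratively reweighted least squares (IRLS) when two bands of a synthetic spectrum are hit by gross outliers. The ℓ1 loss, the IRLS solver, and the function names `least_squares_fit` and `l1_fit_irls` are illustrative assumptions; this is not the paper's exact robust loss.

```python
import numpy as np


def least_squares_fit(D, y):
    """Ordinary least squares: minimize ||y - D a||_2^2."""
    a, *_ = np.linalg.lstsq(D, y, rcond=None)
    return a


def l1_fit_irls(D, y, n_iter=100, delta=1e-6):
    """Minimize ||y - D a||_1 by iteratively reweighted least squares (IRLS).

    Each pass solves a weighted least-squares problem with weights
    w_i = 1 / max(|r_i|, delta), so bands with large residuals (outliers)
    are progressively down-weighted.
    """
    a = least_squares_fit(D, y)  # start from the l2 solution
    for _ in range(n_iter):
        r = y - D @ a
        w = 1.0 / np.maximum(np.abs(r), delta)
        DtW = D.T * w  # D^T W, with W = diag(w)
        # Weighted normal equations: (D^T W D) a = D^T W y
        a = np.linalg.solve(DtW @ D, DtW @ y)
    return a


rng = np.random.default_rng(0)
n_bands, n_atoms = 50, 10
D = rng.standard_normal((n_bands, n_atoms))
a_true = rng.standard_normal(n_atoms)
y = D @ a_true
y[[5, 30]] += 50.0  # two bands corrupted by gross outliers

a_l2 = least_squares_fit(D, y)
a_l1 = l1_fit_irls(D, y)
print("l2 coefficient error:", np.linalg.norm(a_l2 - a_true))
print("l1 coefficient error:", np.linalg.norm(a_l1 - a_true))
```

With these settings the ℓ1 fit essentially recovers the true coefficients, while the least-squares fit is pulled toward the two corrupted bands.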

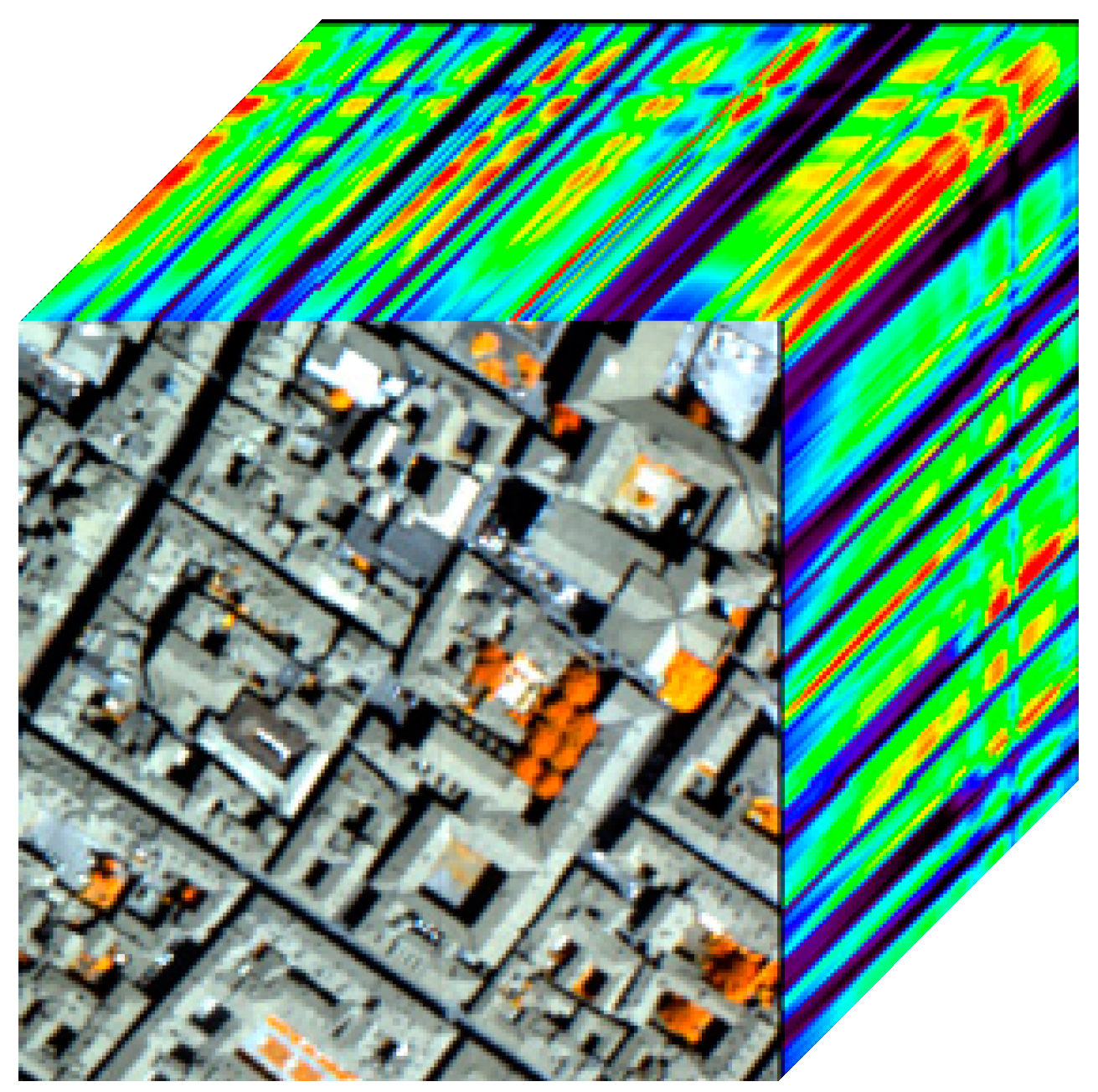

2. HSI Inpainting Model and Mechanism

2.1. HSI Inpainting Model

2.2. HSI Inpainting Mechanism

3. Robust Spectral Dictionary Learning and Sparse Representation

3.1. Robust Spectral Dictionary Learning

| Algorithm 1: Online robust spectral dictionary learning (ORSDL) |
|---|
| Input: completely observed pixel spectral vectors, number of iterations, regularization coefficient, number of pixel spectral vectors per iteration, initial spectral dictionary |
| Output: spectral dictionary |
| 1 for to do |
| 2 Randomly choose from |
| /* Solve the robust sparse coding problem */ |
| 3 for to do |
| 4 |
| 5 end for |
| /* Dictionary update */ |
| 6 repeat |
| 7 for to do |
| 8 |
| 9 |
| 10 solve linear system |
| 11 |
| 12 end for |
| 13 until convergence |
| 14 end for |
| 15 return |
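
The structure of Algorithm 1 (draw a random mini-batch, robustly sparse-code it, then iterate a dictionary update that solves one linear system per row) can be sketched as follows. This is a hypothetical NumPy implementation that assumes an ℓ1 data-fitting term and an ℓ1 sparsity penalty, both handled by IRLS, with per-band statistics accumulated across mini-batches in the spirit of online robust dictionary learning. The function names, default parameters, the unit-norm column projection, and the fixed number of inner passes standing in for "until convergence" are all assumptions, not the authors' settings.

```python
import numpy as np


def robust_sparse_code(D, x, lam, n_iter=30, delta=1e-6):
    """IRLS for min_a ||x - D a||_1 + lam * ||a||_1 (one pixel spectrum x)."""
    a = np.linalg.lstsq(D, x, rcond=None)[0]
    for _ in range(n_iter):
        w = 1.0 / np.maximum(np.abs(x - D @ a), delta)  # data-fit weights per band
        v = 1.0 / np.maximum(np.abs(a), delta)          # sparsity weights per atom
        DtW = D.T * w
        a = np.linalg.solve(DtW @ D + lam * np.diag(v), DtW @ x)
    return a


def orsdl_sketch(X, n_atoms, lam=0.1, n_iter=5, batch=64, inner=5,
                 delta=1e-6, seed=0):
    """Hypothetical online robust dictionary learning sketch.

    X: (bands, pixels) matrix of completely observed pixel spectra.
    Returns a (bands, n_atoms) dictionary whose columns have norm <= 1.
    """
    rng = np.random.default_rng(seed)
    n_bands, n_pix = X.shape
    # Initialize atoms with randomly chosen training spectra, scaled to unit norm.
    D = X[:, rng.choice(n_pix, n_atoms, replace=False)].astype(float)
    D /= np.maximum(np.linalg.norm(D, axis=0), 1e-12)
    M_hist = np.zeros((n_bands, n_atoms, n_atoms))  # per-band sum of w * a a^T
    c_hist = np.zeros((n_bands, n_atoms))           # per-band sum of w * x * a
    ridge = 1e-8 * np.eye(n_atoms)                  # keeps each system solvable
    for _ in range(n_iter):
        # Step 2: draw a random mini-batch of training spectra.
        Xb = X[:, rng.choice(n_pix, batch, replace=False)]
        # Steps 3-5: robust sparse coding of each spectrum in the batch.
        A = np.column_stack(
            [robust_sparse_code(D, Xb[:, i], lam, delta=delta) for i in range(batch)])
        # Steps 6-13: IRLS dictionary update. The l1 loss decouples over bands,
        # so each row of D solves its own small weighted linear system.
        for _ in range(inner):
            W = 1.0 / np.maximum(np.abs(Xb - D @ A), delta)   # (bands, batch)
            M = M_hist + np.einsum('bn,kn,ln->bkl', W, A, A)
            c = c_hist + np.einsum('bn,bn,kn->bk', W, Xb, A)
            D = np.linalg.solve(M + ridge, c[..., None])[..., 0]
            D /= np.maximum(np.linalg.norm(D, axis=0), 1.0)  # enforce ||d_k|| <= 1
        M_hist, c_hist = M, c  # commit this batch's statistics
    return D


# Synthetic demo: sparse combinations of 8 atoms plus sparse gross outliers.
rng = np.random.default_rng(1)
D_true = rng.standard_normal((30, 8))
codes = rng.standard_normal((8, 300)) * (rng.random((8, 300)) < 0.3)
X = D_true @ codes
X[rng.random(X.shape) < 0.02] += 10.0
D_hat = orsdl_sketch(X, n_atoms=8)
print(D_hat.shape)
```

Solving row by row is what makes the ℓ1 update tractable here: with a separable ℓ1 loss, each band has its own weights, so the rows cannot share a single Gram matrix as they would under a squared ℓ2 loss.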

3.2. Robust Sparse Coding

4. HSI Inpainting Algorithm Outline

| Algorithm 2: INORSDL |
|---|
| Input: (observed HSI data), (inpainting mask matrix) |
| Output: (inpainted HSI data) |
| 1 begin |
| 2 select the completely observed HSI pixel spectral vectors |
| 3 (robust spectral dictionary training, Algorithm 1) |
| 4 solve the robust sparse code matrix |
| 5 |
| 6 end |
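
The outline of Algorithm 2 can be sketched end to end as below. To keep the example self-contained, step 3 uses a truncated-SVD basis of the completely observed spectra as a stand-in for the ORSDL dictionary, step 4 codes each pixel with an assumed IRLS solver for an ℓ1 data term plus ℓ1 penalty restricted to its observed bands, and the final step is assumed to keep observed entries and fill missing ones from the reconstruction DA. The function names `robust_code` and `inpaint_hsi` and all parameter values are hypothetical.

```python
import numpy as np


def robust_code(D, x, lam=1e-4, n_iter=50, delta=1e-6):
    """IRLS for min_a ||x - D a||_1 + lam * ||a||_1."""
    a = np.linalg.lstsq(D, x, rcond=None)[0]
    for _ in range(n_iter):
        w = 1.0 / np.maximum(np.abs(x - D @ a), delta)
        v = 1.0 / np.maximum(np.abs(a), delta)
        DtW = D.T * w
        a = np.linalg.solve(DtW @ D + lam * np.diag(v), DtW @ x)
    return a


def inpaint_hsi(Y, mask, n_atoms):
    """Y: (rows, cols, bands) observed cube; mask: same shape, True where observed."""
    rows, cols, bands = Y.shape
    X = Y.reshape(-1, bands).T              # (bands, pixels)
    Mk = mask.reshape(-1, bands).T
    # Step 2: pixels whose spectra are completely observed.
    complete = Mk.all(axis=0)
    # Step 3 (stand-in, not ORSDL): truncated-SVD basis of the complete spectra.
    U, _, _ = np.linalg.svd(X[:, complete], full_matrices=False)
    D = U[:, :n_atoms]
    # Step 4: code each pixel using only its observed bands.
    A = np.zeros((n_atoms, X.shape[1]))
    for p in range(X.shape[1]):
        obs = Mk[:, p]
        A[:, p] = robust_code(D[obs], X[obs, p])
    # Assumed final step: keep observed entries, fill missing ones from D @ A.
    X_hat = np.where(Mk, X, D @ A)
    return X_hat.T.reshape(rows, cols, bands)


# Synthetic demo: a rank-3 cube in which 30 pixels each lose 5 random bands.
rng = np.random.default_rng(0)
rows, cols, bands = 10, 10, 20
clean = (rng.random((bands, 3)) @ rng.random((3, rows * cols))).T.reshape(rows, cols, bands)
mask = np.ones(clean.shape, dtype=bool)
for p in rng.choice(rows * cols, 30, replace=False):
    i, j = divmod(int(p), cols)
    mask[i, j, rng.choice(bands, 5, replace=False)] = False
Y = np.where(mask, clean, 0.0)
restored = inpaint_hsi(Y, mask, n_atoms=3)
print(np.abs(restored - clean).max())
```

Because the synthetic cube lies exactly in a 3-dimensional spectral subspace, the missing bands are recovered almost exactly; on real data the quality depends on how well the learned dictionary represents the scene.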

5. Experimental Results and Analysis

5.1. Simulated Data Experiment

5.2. Real Data Experiment

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Noise type | Index | Noisy Image | PDE | UBD | LLRSSTV | BPFA | INORSDL |
|---|---|---|---|---|---|---|---|
| Gaussian i.i.d. noise | MPSNR (dB) | 19.7997 | 20.0392 | 34.4112 | 30.8946 | 35.6697 | 36.7168 |
| | MSSIM | 0.4331 | 0.4435 | 0.9355 | 0.9120 | 0.9572 | 0.9687 |
| | Time (s) | - | 2 | 53 | 597 | 1126 | 903 |
| Gaussian non-i.i.d. noise | MPSNR (dB) | 28.1013 | 28.6221 | 37.5838 | 34.1243 | 32.8583 | 38.9195 |
| | MSSIM | 0.7057 | 0.7206 | 0.9686 | 0.9580 | 0.8240 | 0.9895 |
| | Time (s) | - | 2 | 60 | 574 | 5152 | 1621 |
| Laplacian noise | MPSNR (dB) | 33.1715 | 33.9045 | 37.9209 | 35.3268 | 38.2032 | 38.5285 |
| | MSSIM | 0.9292 | 0.9457 | 0.9873 | 0.9728 | 0.9892 | 0.9914 |
| | Time (s) | - | 2 | 51 | 586 | 1525 | 1124 |
| Poisson noise | MPSNR (dB) | 26.5466 | 26.9952 | 35.9059 | 33.8843 | 37.3994 | 40.6220 |
| | MSSIM | 0.7476 | 0.7609 | 0.9647 | 0.9599 | 0.9669 | 0.9870 |
| | Time (s) | - | 1 | 36 | 287 | 9779 | 1531 |
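
MPSNR in the table is the mean of the per-band PSNR values (MSSIM is analogous, the mean of per-band SSIM, and is omitted here for brevity). A minimal sketch of the MPSNR computation follows; using the maximum of the reference cube as the peak value is an assumption, since the normalization used in the experiments is not stated here.

```python
import numpy as np


def mpsnr(reference, estimate, peak=None):
    """Mean over bands of per-band PSNR (dB) for (rows, cols, bands) cubes.

    peak defaults to the maximum of the reference cube (an assumption).
    """
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    if peak is None:
        peak = reference.max()
    mse = np.mean((reference - estimate) ** 2, axis=(0, 1))  # one MSE per band
    psnr = 10.0 * np.log10(peak ** 2 / mse)
    return float(np.mean(psnr))


ref = np.ones((4, 4, 2))
est = ref.copy()
est[..., 0] -= 0.1    # band 0: MSE = 0.01, PSNR = 20 dB
est[..., 1] -= 0.01   # band 1: MSE = 0.0001, PSNR = 40 dB
print(mpsnr(ref, est))  # about 30 dB
```

Averaging PSNR per band, rather than computing one PSNR over the whole cube, weights every band equally regardless of its error magnitude.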

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Song, X.; Wu, L. Hyperspectral Image Inpainting Based on Robust Spectral Dictionary Learning. Appl. Sci. 2019, 9, 3062. https://doi.org/10.3390/app9153062
