Abstract

The optimal fixed-point quantum search (OFPQS) algorithm [Phys. Rev. Lett. 113, 210501 (2014)] achieves both the fixed-point property and a quadratic speedup over classical algorithms, and it gives a sufficient condition on the number of iterations that ensures the success probability is no less than a given lower bound (denoted by $1-\delta^2$). However, this condition is approximate rather than exact. In this paper, we derive the necessary and sufficient condition on the number of feasible iterations, from which the exact least number of iterations can be obtained. For example, when $\delta = 0.8$, almost 25% of the iterations can be saved. Moreover, if one tries to find a target item with certainty by directly setting $1-\delta^2 = 100\%$, the quadratic advantage of the OFPQS algorithm is lost; applying the OFPQS algorithm with $\delta > 0$ then requires multiple executions, which raises the natural problem of choosing the optimal parameter $\delta$. To this end, we analyze the extreme points and the minimum of the success probability and analytically derive the optimal $\delta$ that minimizes the query complexity. Our study can serve as a guideline for both theoretical and applied research on fixed-point quantum search algorithms.

1. Introduction

Grover search [1,2] provides a quadratic speedup over classical search algorithms and has been proven optimal [3,4,5,6]. However, it suffers from the soufflé problem [7]: the success probability declines if the algorithm iterates too many times. Therefore, the Grover algorithm applies only to cases where the optimal number of iterations [8] can be determined.

Based on quantum amplitude amplification [9,10,11,12] and phase-matching methods [13,14,15,16], a fixed-point quantum search algorithm [17] has been proposed, in which the final state converges to the target states and the success probability increases as the number of iterations grows. This algorithm applies to the case where a lower bound (denoted by $\lambda_0$) of the fraction of target items (denoted by $\lambda$) is known. However, the advantage of quadratic speedup is lost [18,19].

Fortunately, Yoder et al. developed the optimal fixed-point quantum search (OFPQS) algorithm [20], which achieves both the fixed-point property and optimal query complexity. Its success probability for any unknown $\lambda$ above the known lower bound is always no less than a given value (denoted by $1-\delta^2$) between 0 and 1, as long as the given condition on the number of iterations is satisfied. However, this condition is approximate and not tight, i.e., the required number of iterations might be further reduced.

In addition, the lower bound of the success probability of the OFPQS algorithm is kept below 100%, because setting $1-\delta^2 = 100\%$ turns the algorithm back into the original fixed-point algorithm [17] and thus loses the quadratic speedup [20]. However, in practical search problems, it is often desired to find a target item eventually, rather than just to succeed with probability above a lower bound. A natural strategy is therefore to run the OFPQS algorithm with $\delta > 0$ repeatedly until it succeeds. This raises a new problem: how to choose the optimal parameters so that a target item is found as soon as possible.

In this paper, we give the minimum feasible number of iterations, analyze the extreme points and the minimum of the success probability, and further derive the optimal parameter of the OFPQS algorithm that finds a target item with the minimum query complexity.

The paper is organized as follows. In Section 2, we briefly introduce the OFPQS algorithm. In Section 3, we derive the optimal number of iterations and analyze the properties of the success probability of the OFPQS algorithm. In Section 4, we give the selection method for the optimal parameter $\delta$. Section 5 discusses the effects of the optimal number of iterations and the optimal $\delta$, as well as the upper bound of the expected queries when the OFPQS algorithm is applied to find a target item with certainty. Finally, a brief conclusion is given in Section 6.

2. OFPQS Algorithm Revisited

Based on the multiphase matching method [21,22], the OFPQS algorithm [20] overcomes the soufflé problem of the original Grover algorithm [1] as well as the loss of quadratic speedup in the original fixed-point quantum search algorithm [17].

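The initial state of the OFPQS algorithm is prepared as $|s\rangle = A|0\rangle^{\otimes n}$, where $A$ can be an arbitrary unitary operator. Denote the equal superpositions of all target and nontarget states as $|T\rangle$ and $|R\rangle$, respectively, i.e.,

$$|T\rangle = \frac{1}{\sqrt{M}} \sum_{x:\, f(x)=1} |x\rangle, \qquad (1)$$

$$|R\rangle = \frac{1}{\sqrt{N-M}} \sum_{x:\, f(x)=0} |x\rangle, \qquad (2)$$

where $M$ is the number of target items in the database of size $N$, and $f(x)$ is a Boolean function identifying the target state(s), i.e., $f(x)=1$ if $|x\rangle$ is a target state and $f(x)=0$ otherwise. With $A = H^{\otimes n}$ and $H$ being the Hadamard transform, $|s\rangle$ can be written as

$$|s\rangle = \sqrt{\lambda}\,|T\rangle + \sqrt{1-\lambda}\,|R\rangle, \qquad (3)$$

where $\lambda = M/N$ represents the fraction of target items.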

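The sequence of operations performed on $|s\rangle$ is given by (see also Equation (2.6) of [21])

$$S_L = G(\alpha_l,\beta_l)\cdots G(\alpha_1,\beta_1), \qquad (4)$$

where $L = 2l+1$, so that $L-1 = 2l$ represents the query complexity of the sequence $S_L$, as each generalized Grover operation requires two Oracle queries [20], and

$$G(\alpha,\beta) = -S_s(\alpha)\,S_t(\beta), \qquad (5)$$

where $S_s(\alpha)$ and $S_t(\beta)$ are the selective phase shifts ($i=\sqrt{-1}$) (see also Equations (1) and (2) of [13]),

$$S_s(\alpha) = I - \left(1-e^{-i\alpha}\right)|s\rangle\langle s|, \qquad (6)$$

$$S_t(\beta) = I - \left(1-e^{i\beta}\right)|T\rangle\langle T|. \qquad (7)$$

Under the multiphase matching condition (see also Equation (11) of [20])

$$\alpha_j = -\beta_{l-j+1} = 2\cot^{-1}\!\left(\tan(2\pi j/L)\sqrt{1-\gamma^2}\right), \quad j = 1,\ldots,l, \quad \gamma^{-1} = T_{1/L}(1/\delta), \qquad (8)$$
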
the final state can be obtained as

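where $P_L$, with $L = 2l+1$, is the success probability of the algorithm (see also Equation (1) of [20]),

$$P_L = 1 - \delta^2\, T_L^2\!\left( T_{1/L}(1/\delta)\sqrt{1-\lambda} \right), \qquad (10)$$

and $T_L(x)$ is the $L$th Chebyshev polynomial of the first kind [23],

$$T_L(x) = \cos(L \arccos x), \qquad (11)$$

which equals $\cosh(L\,\operatorname{arccosh} x)$ for $x > 1$. To ensure that the success probability is no less than a given lower bound $1-\delta^2$ for any $\lambda \ge \lambda_0$, where $\lambda_0$ is the known lower bound of $\lambda$, an approximate condition on $L$ is given as

$$L \ge \frac{\ln(2/\delta)}{\sqrt{\lambda_0}}, \qquad (12)$$

which is sufficient but not necessary, as shown below.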
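As a rough numerical illustration of the success probability and the approximate condition above, the following Python sketch assumes the form given in [20], $P_L = 1-\delta^2 T_L^2\big(T_{1/L}(1/\delta)\sqrt{1-\lambda}\big)$ with $L = 2l+1$, and a natural logarithm in the approximate bound. The function names are hypothetical and chosen only for this example.

```python
import math


def chebyshev_t(order, x):
    """Chebyshev polynomial of the first kind T_order(x) for x >= 0.

    `order` may be fractional (e.g. 1/L), as used in the OFPQS formulas.
    """
    if x < 0:
        raise ValueError("this sketch only handles x >= 0")
    if x <= 1.0:
        return math.cos(order * math.acos(x))
    return math.cosh(order * math.acosh(x))


def success_probability(lam, delta, l):
    """Assumed form of P_L from [20], with L = 2l + 1."""
    L = 2 * l + 1
    inv_gamma = chebyshev_t(1.0 / L, 1.0 / delta)  # T_{1/L}(1/delta) >= 1
    arg = inv_gamma * math.sqrt(max(0.0, 1.0 - lam))
    return 1.0 - (delta * chebyshev_t(L, arg)) ** 2


def approximate_iterations(lam0, delta):
    """Smallest l with 2l + 1 >= ln(2/delta) / sqrt(lam0) (approximate condition)."""
    L_needed = math.log(2.0 / delta) / math.sqrt(lam0)
    return max(0, math.ceil((L_needed - 1.0) / 2.0))


if __name__ == "__main__":
    lam0, delta = 0.01, 0.5  # illustrative values, not taken from the paper
    l = approximate_iterations(lam0, delta)
    print("l =", l, "P_L(lam0) =", success_probability(lam0, delta, l))
```

With these illustrative inputs the sketch checks that the probability stays at or above $1-\delta^2$ over the whole range $\lambda \ge \lambda_0$; it does not show that the iteration count is the smallest one with that property.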

3. Performance Analysis of the OFPQS Algorithm

After deep theoretical analysis, we can obtain three new properties on the number of iterations and success probability of the OFPQS algorithm, which are as follows.

Property 1.

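Given a lower bound $\lambda_0$ of the fraction $\lambda$ of target items, the exact least number of iterations $l$ (denoted by $l_{\min}$) of the OFPQS algorithm that ensures the success probability $P_L \ge 1-\delta^2$ for any $\lambda \ge \lambda_0$, with $0 < \delta < 1$, can be given in the form

$$l_{\min} = \left\lceil \frac{\operatorname{arccosh}(1/\delta)}{2\,\operatorname{arccosh}\!\left(1/\sqrt{1-\lambda_0}\right)} - \frac{1}{2} \right\rceil. \qquad (13)$$

Proof.

Based on Equation (10), for arbitrary $\lambda \in (0,1)$ and $\delta \in (0,1)$, a necessary and sufficient condition for the success probability to satisfy $P_L \ge 1-\delta^2$ is

$$\left| T_L\!\left( T_{1/L}(1/\delta)\sqrt{1-\lambda} \right) \right| \le 1 \;\Longleftrightarrow\; T_{1/L}(1/\delta)\sqrt{1-\lambda} \le 1, \qquad (14)$$

due to the fact that

$$|T_L(x)| \le 1 \;\Longleftrightarrow\; |x| \le 1. \qquad (15)$$

Note that if $|x| \le 1$, then $T_L(x) = \cos(L \arccos x)$ and $|T_L(x)| \le 1$; if $|x| > 1$, then $|T_L(x)| = \cosh(L\,\operatorname{arccosh}|x|) > 1$. Since $T_{1/L}(1/\delta) = \cosh\!\left(\operatorname{arccosh}(1/\delta)/L\right)$ and $\cosh x$ monotonically increases for $x \ge 0$, Equation (14) can be further reduced to

$$L \ge \frac{\operatorname{arccosh}(1/\delta)}{\operatorname{arccosh}\!\left(1/\sqrt{1-\lambda}\right)}. \qquad (16)$$

Then, according to $L = 2l+1$, we have

$$l \ge \frac{\operatorname{arccosh}(1/\delta)}{2\,\operatorname{arccosh}\!\left(1/\sqrt{1-\lambda}\right)} - \frac{1}{2}. \qquad (17)$$

The right-hand side decreases as $\lambda$ grows, so the most demanding case is $\lambda = \lambda_0$. Therefore, to ensure that $P_L \ge 1-\delta^2$ for any $\lambda \ge \lambda_0$, the least number of iterations, expressed by Equation (13), can be finally obtained. □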
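The argument of the proof can be checked numerically with a short Python sketch. It assumes the success probability of [20], $P_L = 1-\delta^2 T_L^2\big(T_{1/L}(1/\delta)\sqrt{1-\lambda}\big)$, under which $P_L \ge 1-\delta^2$ is equivalent to $L \ge \operatorname{arccosh}(1/\delta)/\operatorname{arccosh}(1/\sqrt{1-\lambda})$. All function names and the sample values $\lambda_0 = 0.01$, $\delta = 0.8$ are illustrative assumptions.

```python
import math


def chebyshev_t(order, x):
    """T_order(x) for x >= 0; the cosh branch covers x > 1."""
    if x <= 1.0:
        return math.cos(order * math.acos(x))
    return math.cosh(order * math.acosh(x))


def success_probability(lam, delta, l):
    """Assumed form of P_L from [20], with L = 2l + 1."""
    L = 2 * l + 1
    inv_gamma = chebyshev_t(1.0 / L, 1.0 / delta)
    arg = inv_gamma * math.sqrt(max(0.0, 1.0 - lam))
    return 1.0 - (delta * chebyshev_t(L, arg)) ** 2


def exact_min_iterations(lam0, delta):
    """Least l such that P_L >= 1 - delta^2 for every lam >= lam0 (0 < lam0, delta < 1)."""
    L_needed = math.acosh(1.0 / delta) / math.acosh(1.0 / math.sqrt(1.0 - lam0))
    return max(0, math.ceil((L_needed - 1.0) / 2.0))


def approximate_iterations(lam0, delta):
    """Least l satisfying the approximate condition L >= ln(2/delta)/sqrt(lam0)."""
    L_needed = math.log(2.0 / delta) / math.sqrt(lam0)
    return max(0, math.ceil((L_needed - 1.0) / 2.0))


if __name__ == "__main__":
    lam0, delta = 0.01, 0.8
    l_exact = exact_min_iterations(lam0, delta)
    l_approx = approximate_iterations(lam0, delta)
    print("exact:", l_exact, "approximate:", l_approx)
    # One iteration fewer than the exact minimum violates the bound at lam0.
    print(success_probability(lam0, delta, l_exact - 1) < 1 - delta ** 2)
```

For these sample inputs the exact count is smaller than the one implied by the approximate condition, and dropping one further iteration pushes the success probability at $\lambda_0$ below $1-\delta^2$.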

Property 2.

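The extreme points of the success probability $P_L$ as a function of $\lambda$ can be given as follows (see the proof in Appendix A):

When $l \ge 1$, $P_L$ has $l$ local maximum points on $\lambda \in (0,1)$, at which $P_L = 1$,

$$\lambda_k^{\max} = 1 - \gamma^2 \cos^2\!\left(\frac{(2k-1)\pi}{2L}\right), \quad k = 1,\ldots,l, \qquad (18)$$

and $l$ local minimum points, at which $P_L = 1-\delta^2$,

$$\lambda_k^{\min} = 1 - \gamma^2 \cos^2\!\left(\frac{k\pi}{L}\right), \quad k = 1,\ldots,l, \qquad (19)$$

where $\gamma = 1/T_{1/L}(1/\delta)$ and $L = 2l+1$. When $l = 0$, there are no local extreme points.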
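The location of the extreme points can be explored numerically. The sketch below assumes the success probability of [20] and uses the standard facts that $T_L(\cos\theta) = \cos(L\theta)$ vanishes at $\theta = (2k-1)\pi/(2L)$ and reaches $\pm 1$ at $\theta = k\pi/L$. The closed forms in the code follow from these facts and are offered as an illustration, not as the paper's own expressions; all names are hypothetical.

```python
import math


def chebyshev_t(order, x):
    """T_order(x) for x >= 0; the cosh branch covers x > 1."""
    if x <= 1.0:
        return math.cos(order * math.acos(x))
    return math.cosh(order * math.acosh(x))


def success_probability(lam, delta, l):
    """Assumed form of P_L from [20], with L = 2l + 1."""
    L = 2 * l + 1
    inv_gamma = chebyshev_t(1.0 / L, 1.0 / delta)
    arg = inv_gamma * math.sqrt(max(0.0, 1.0 - lam))
    return 1.0 - (delta * chebyshev_t(L, arg)) ** 2


def extreme_points(delta, l):
    """Return (maxima, minima) of P_L over lambda in (0, 1), each sorted ascending."""
    L = 2 * l + 1
    gamma = 1.0 / chebyshev_t(1.0 / L, 1.0 / delta)
    # Maxima: zeros of T_L, where P_L = 1.
    maxima = [1.0 - (gamma * math.cos((2 * k - 1) * math.pi / (2 * L))) ** 2
              for k in range(1, l + 1)]
    # Minima: interior points with |T_L| = 1, where P_L = 1 - delta^2.
    minima = [1.0 - (gamma * math.cos(k * math.pi / L)) ** 2
              for k in range(1, l + 1)]
    return maxima, minima


if __name__ == "__main__":
    maxima, minima = extreme_points(delta=0.5, l=3)
    for lam in maxima:
        print("max at", lam, "P =", success_probability(lam, 0.5, 3))
    for lam in minima:
        print("min at", lam, "P =", success_probability(lam, 0.5, 3))
```

For $l = 0$ both lists are empty, which matches the statement that no local extreme points exist in that case.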

Property 3.

The success probability with has a minimum value (denoted by ) on the range for , which can be written as (for a proof, see Appendix B)

Note that the case can already be well disposed of by classical search.

4. Optimization of Parameters of the OFPQS Algorithm

In practical applications of the OFPQS algorithm, if we set $\delta = 0$, then Equation (12) or Equation (13) shows that the quadratic speedup over classical algorithms is lost, whereas if we set $\delta > 0$, the output of the OFPQS algorithm is not necessarily a target item. Inspired by [4], which achieves about a 12% reduction of the expected queries by stopping the Grover algorithm short of the optimal number of iterations and restarting in case of failure, a natural strategy for finding a target item as soon as possible is to set the lower bound of the success probability to $1-\delta^2 < 1$ and repeat the OFPQS algorithm until it succeeds. Under this strategy, in order to find the optimal parameter $\delta$, we estimate the expected number of Oracle queries of the OFPQS algorithm before a target item is found.

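First, a single execution of the OFPQS algorithm requires at least $2l_{\min}$ queries, since $l_{\min}$ iterations are required in the sequence of Equation (4). Second, judging whether the algorithm is successful from the measurement result also takes one query. Then, defining $L_{\min} = 2l_{\min}+1$, we can get the expected number of Oracle queries as

$$E = \sum_{j=1}^{\infty} L_{\min}\, p_j, \qquad (21)$$

where

$$p_j = \left(1-P_{L_{\min}}\right)^{j-1} \qquad (22)$$

is the probability of occurrence of the $j$-th execution of the OFPQS algorithm. From Equations (20)–(22), we can further obtain that

$$E = \frac{L_{\min}}{P_{L_{\min}}} \le \frac{L_{\min}}{P_{\min}} \equiv E_{\max}, \qquad (23)$$

where $E_{\max}$ represents the upper bound of $E$.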
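The repeat-until-success accounting above can be sketched in a few lines of Python. The sketch assumes that every execution costs $2l+1$ queries ($2l$ for the iterations plus one for checking the measured item) and succeeds independently with the same probability $p$; the function names are hypothetical.

```python
def expected_queries_sum(l, p, max_rounds=2000):
    """Truncated sum over executions: cost times the probability that execution j happens."""
    cost = 2 * l + 1  # 2l queries for the iterations plus one verification query
    total = 0.0
    for j in range(1, max_rounds + 1):
        prob_round_happens = (1.0 - p) ** (j - 1)  # all previous j-1 executions failed
        total += cost * prob_round_happens
    return total


def expected_queries_closed_form(l, p):
    """Geometric-series limit of the sum above."""
    return (2 * l + 1) / p


if __name__ == "__main__":
    l, p = 3, 0.36  # illustrative values only
    print(expected_queries_sum(l, p), expected_queries_closed_form(l, p))
```

Because the closed form decreases as $p$ grows, replacing $p$ by any lower bound on the success probability yields an upper bound on the expected number of queries.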

We can define the optimal $\delta$ as the one that makes the upper bound of the expected number of queries as small as possible. Detailed analysis shows that such an optimal $\delta$ (denoted by $\delta_{\text{opt}}$) exists and can be written analytically in the following form (for a proof, see Appendix C),

where

is defined by Equation (11),

is the unique solution of equation

and x satisfies

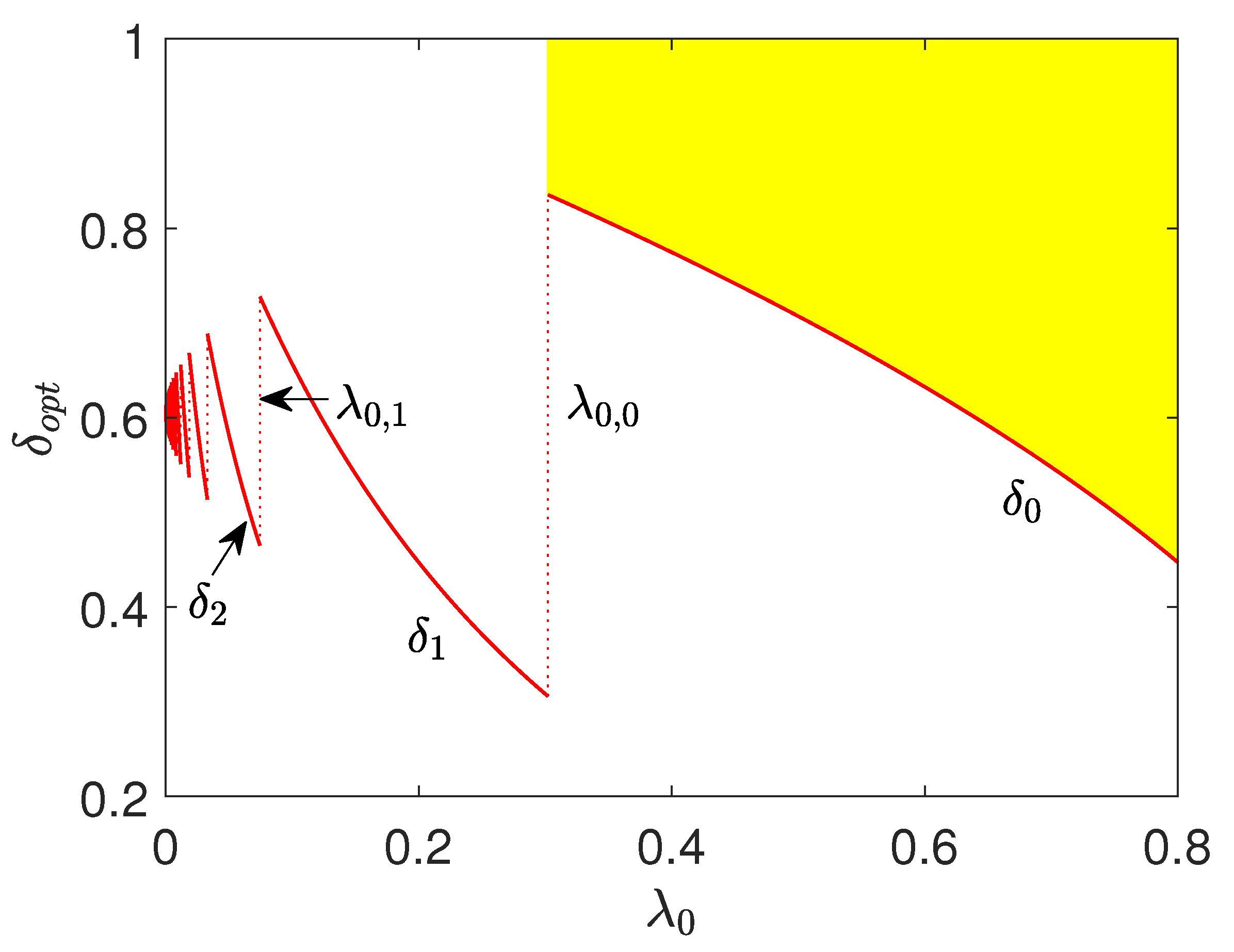

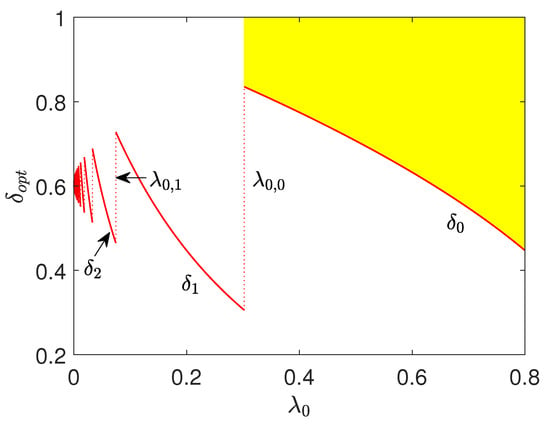

Note that, when () or , the corresponding has multiple values, as shown in Figure 1.

Figure 1.

(Color online.) The optimal parameter as a function of the lower bound of the fraction of target items. The red solid curves, red dotted vertical lines and the yellow (gray) area represent , (), and the value of for , respectively.

Note that, with the above optimal parameters, we can obtain the corresponding upper bound of the expected number of queries, i.e.,
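As a numerical cross-check of the idea behind the optimal parameter, one can scan $\delta$ on a grid and pick the value that minimizes an upper bound on the expected queries. The sketch below is a brute-force stand-in, not the analytical expression of Equation (24). It assumes the success probability of [20], a per-execution cost of $2l+1$ queries, and a grid estimate of the minimum success probability on $[\lambda_0, 1]$; all names and grid sizes are illustrative.

```python
import math


def chebyshev_t(order, x):
    """T_order(x) for x >= 0; the cosh branch covers x > 1."""
    if x <= 1.0:
        return math.cos(order * math.acos(x))
    return math.cosh(order * math.acosh(x))


def success_probability(lam, delta, l):
    """Assumed form of P_L from [20], with L = 2l + 1."""
    L = 2 * l + 1
    inv_gamma = chebyshev_t(1.0 / L, 1.0 / delta)
    arg = inv_gamma * math.sqrt(max(0.0, 1.0 - lam))
    return 1.0 - (delta * chebyshev_t(L, arg)) ** 2


def exact_min_iterations(lam0, delta):
    """Least l such that P_L >= 1 - delta^2 for every lam >= lam0."""
    L_needed = math.acosh(1.0 / delta) / math.acosh(1.0 / math.sqrt(1.0 - lam0))
    return max(0, math.ceil((L_needed - 1.0) / 2.0))


def query_bound(lam0, delta, grid=400):
    """(2l + 1) / P_min, with P_min estimated on a uniform grid over [lam0, 1]."""
    l = exact_min_iterations(lam0, delta)
    p_min = min(
        success_probability(lam0 + (1.0 - lam0) * i / grid, delta, l)
        for i in range(grid + 1)
    )
    return (2 * l + 1) / p_min


def scan_delta(lam0, steps=99):
    """Return the grid value of delta in (0, 1) with the smallest query bound."""
    candidates = [(i + 1) / (steps + 1) for i in range(steps)]
    return min(candidates, key=lambda d: query_bound(lam0, d))


if __name__ == "__main__":
    lam0 = 0.01
    best = scan_delta(lam0)
    print("delta ~", best, "bound ~", query_bound(lam0, best))
```

The grid resolution limits the accuracy of both the minimum success probability and the selected $\delta$, so the output should be read as a sanity check against the analytical result rather than a replacement for it.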

5. Discussion

In this section, we discuss the effects of the optimal number of iterations of Equation (13) and the optimal parameter $\delta_{\text{opt}}$ of Equation (24), as well as the complexity of the optimal upper bound of expected queries of Equation (29).

5.1. Effects of the Optimal Number of Iterations and $\delta_{\text{opt}}$

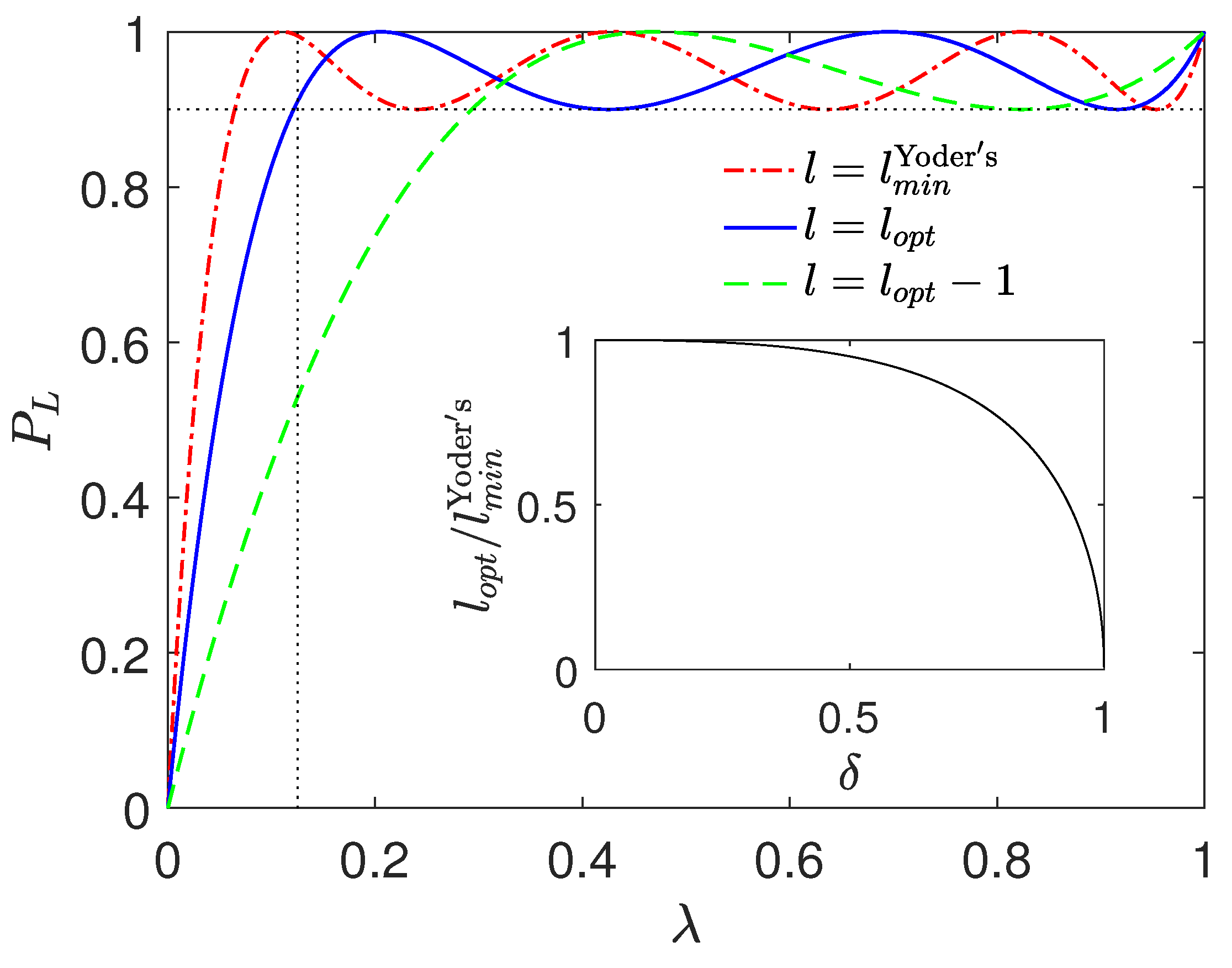

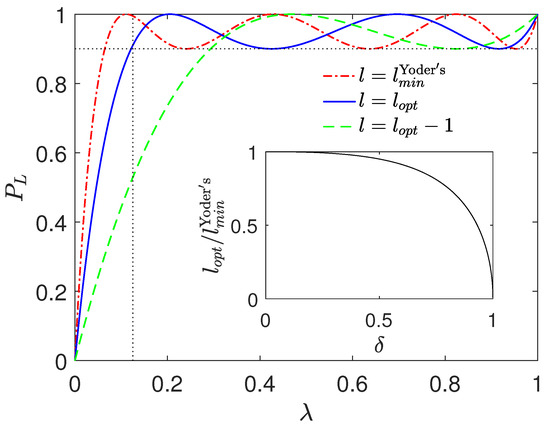

Different from our optimal number of iterations of Equation (13), to achieve a success probability no less than for any in the OFPQS algorithm [20], another number of iterations can be obtained from Equation (12), denoted by

As a comparison, Figure 2 shows some examples of the success probability for different numbers of iterations l with . We can see that both and can achieve the goal for , while cannot. Therefore, is exactly the least number of iterations required. When , , then, from Equations (13) and (30) it follows that

which is shown in the inset of Figure 2.

Figure 2.

(Color online.) The success probability versus the fraction of target items for different number of iterations l with , (dotted vertical line) and (dotted horizontal line). The red dashed-dotted, blue solid, and green dashed curves correspond to , and , respectively. Inset: We plot against with .

We can see that when , . For example, when , , almost a quarter of iterations can be saved.
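The size of the saving can be estimated with a short calculation. Assuming the exact condition takes the form $L \ge \operatorname{arccosh}(1/\delta)/\operatorname{arccosh}(1/\sqrt{1-\lambda_0})$ (which follows from the success probability of [20]) and the approximate one is $L \ge \ln(2/\delta)/\sqrt{\lambda_0}$, their ratio tends to $\operatorname{arccosh}(1/\delta)/\ln(2/\delta)$ as $\lambda_0 \to 0$. The Python sketch below evaluates this ratio; the function names and the sample value $\delta = 0.8$ are illustrative assumptions.

```python
import math


def length_ratio(lam0, delta):
    """Ratio of the exact to the approximate lower bound on L for a given lam0."""
    exact = math.acosh(1.0 / delta) / math.acosh(1.0 / math.sqrt(1.0 - lam0))
    approximate = math.log(2.0 / delta) / math.sqrt(lam0)
    return exact / approximate


def asymptotic_ratio(delta):
    """Limit of length_ratio as lam0 -> 0."""
    return math.acosh(1.0 / delta) / math.log(2.0 / delta)


if __name__ == "__main__":
    delta = 0.8
    print("lam0 = 1e-4:", length_ratio(1e-4, delta))
    print("lam0 -> 0  :", asymptotic_ratio(delta))
```

For $\delta = 0.8$ the limiting ratio is $\ln 2/\ln 2.5 \approx 0.756$, i.e., a saving of roughly a quarter, while for very small $\delta$ the ratio approaches 1 and the two conditions nearly coincide.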

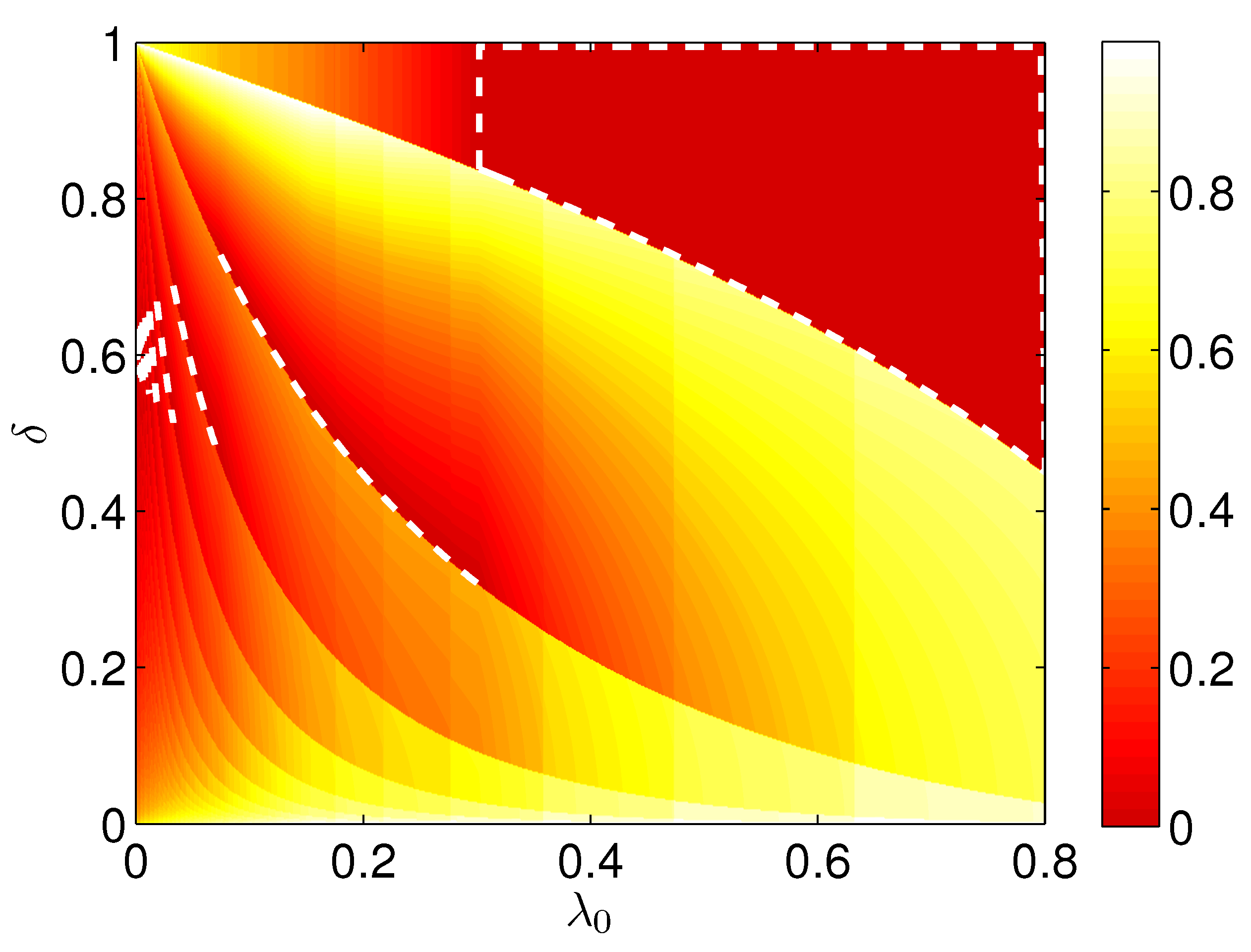

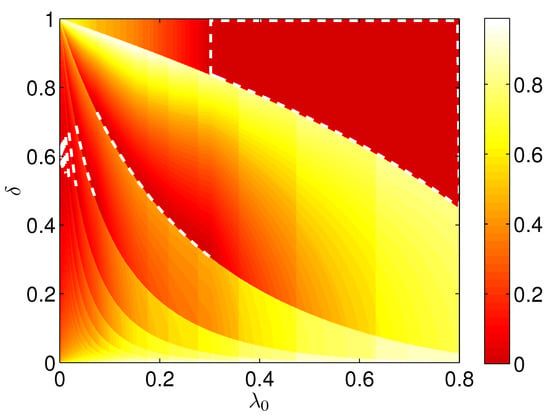

For any given lower bound of the fraction of target items, by theoretical analysis we have obtained the analytical expression of the optimal , i.e., defined by Equation (24). In order to compare the results of and with , we define to be the normalized relative value of with respect to , i.e.,

where

The dependence of on and is shown in Figure 3, where the darker the color, the smaller the value. For ease of comparison, is marked by the white dashed curves. We can see that the color in the area corresponding to is darkest, which indicates that the optimal parameter indeed enables the upper bound of expected queries to reach the minimum.

Figure 3.

(Color online.) The normalized relative value as a function of and . The white dashed curves correspond to the optimal parameter of Equation (24).

5.2. Complexity of

Based on Equations (13), (20), (23), (24), and (29), we can obtain the expression of as below:

where and are defined by Equations (25) and (26), respectively. To analyze the complexity of , first, for any known , we can determine an integer

such that , where is as defined by Equation (A26) and is the solution of Equation (28). From Equations (26) and (27) it follows that

then, we have . When , , , thus,

where

due to , and

due to . Combining Equations (37), (38), and (39), we can obtain

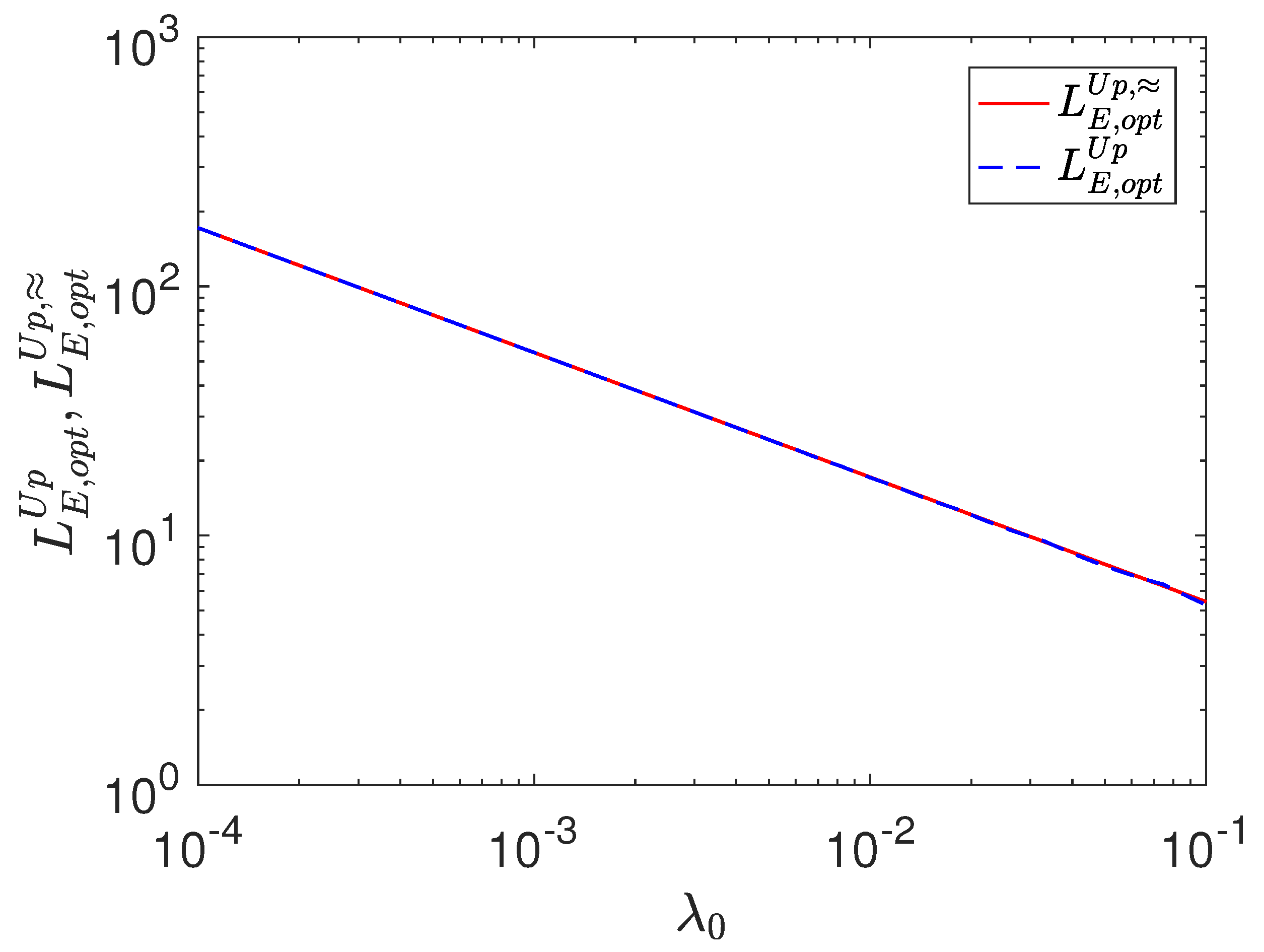

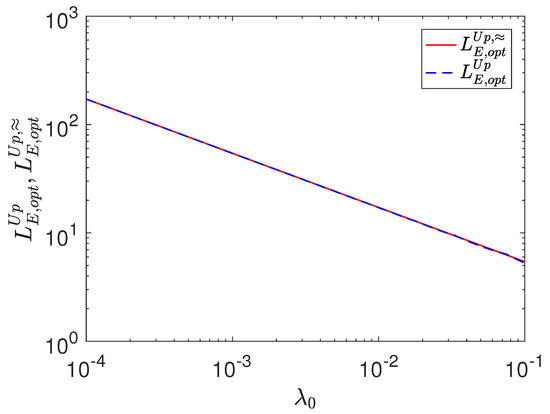

Therefore, we conclude that . The corresponding graphs of and as functions of are shown in Figure 4, which shows a good agreement. Note that, for an unknown and a given , there also exists an upper bound of the expected queries of the classical search, namely, . This means that the OFPQS algorithm with the optimal parameters achieves a quadratic speedup over classical algorithms.

Figure 4.

(Color online.) The optimal upper bound of expected queries and its approximation as functions of the lower bound of the fraction of target items. The blue dashed and red solid curves represent and , respectively.

6. Conclusions

In summary, we have analyzed the performance and optimized the parameters of the OFPQS algorithm. We derived the least number of iterations of the OFPQS algorithm that ensures the success probability is no less than $1-\delta^2$ for a given lower bound of the fraction of target items. Moreover, all extreme points as well as the minimum value of the success probability of the OFPQS algorithm were analyzed. In addition, we calculated the upper bound of the expected queries of repeatedly executing the OFPQS algorithm to find a target item, and analytically derived the optimal parameter $\delta_{\text{opt}}$ that minimizes this upper bound. Compared with the number of iterations given by [20], our optimal number of iterations is significantly smaller, e.g., when $\delta = 0.8$, almost a quarter of the iterations can be saved. Our study can provide a guideline for the research and application of the OFPQS algorithm.

Author Contributions

Conceptualization, T.B. and D.H.; Writing—original draft, T.B.; Writing—review and editing, D.H.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61801522.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviation is used in this manuscript:

| OFPQS | Optimal fixed-point quantum search |

Appendix A. Proof of the Extreme Properties of on

Then, for any and ,

Note that when . Furthermore, we can get

Note that, due to , and for and .

For the case , from Equations (10), (A2), and (A4), it follows that when , , where , thus, the local maximum points can be obtained as

Additionally, because and , has the same number of local maximum points and minimum points. Thus, when , , the corresponding local minimum points can be found, denoted by

For the case , there is no available k for Equation (A4), namely, has no extreme points.

Appendix B. Proof of the Minimum of on of Equation (20)

Based on Equation (13), for any , if the local minimum point (defined by Equation (19)) exists and , then the minimum of with can be obtained as , due to for any , and for any . Note that, . If, instead, does not exist or , then monotonically increases on the range , and thus the minimum success probability is . Based on these, we can give the proof of (defined by Equation (20)) for and as follows.

In the case of , from Equation (13) it follows that , thus, for , does not exist and

In the case of , from Equation (13) it follows that . Then, for a given and , exists and can be written as a step function with respect to , i.e., for ,

where and are defined by Equations (11) and (25), respectively. Note that, and

From Equation (A8), we can see that monotonically decreases on the range , then,

Moreover, increases as k grows. Therefore,

Note that

Then, when , , and thus we can obtain that .

Appendix C. Proof of the Optimal Parameter $\delta_{\text{opt}}$ of Equation (24)

Based on Equations (20) and (23), for a given , the upper bound of the expected number of queries as a function of can be obtained as follows:

where is defined by Equation (13). We can see that is a step function with , i.e.,

due to for , where , consistent with Equation (25). From Equation (A14) it follows that monotonically increases on range , then

In addition, , therefore,

Note that if () is minimum, then is optimal; while if is minimum, then arbitrary is optimal.

To determine , we define

Moreover, we can get the derivative of with respect to , i.e.,

where

Solving gives rise to a local minimum point, denoted by , which satisfies

where is the unique solution of Equation (28). Then, we can obtain ()

Note that for ,

where

Define

Then, through further analysis about , we can find the following two results: (1) On the range (), there is a solution for of equation , denoted by . (2) for , and for . Corresponding reasons are given as follows:

(1) Note that y increases as grows, and according to Equations (A22), (A26), and (A29), for , and for . When k is sufficiently large, i.e., , , then simple algebra shows that

which can also be numerically verified for finite k, for example . Therefore, based on the intermediate value theorem (see p. 271 of [24]), we confirm that there exists a solution of between and , denoted by . Correspondingly, from Equation (A29), the solution of for , denoted by , can be finally obtained as defined in Equation (26).

(2) Based on Equation (29), we can see that and have the same sign. Moreover, we can obtain the derivative of with respect to y, as below,

Due to monotonically increases for and when k is sufficiently large, i.e., , we have , and

Therefore, monotonically increases on the range , yielding that for and for . Then, the corresponding results about follow immediately, which can also be numerically proven to hold when k is small, for example, 12.

References

1. Grover, L.K. A fast quantum mechanical algorithm for database search. In Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing; ACM: Philadelphia, PA, USA, 1996; pp. 212–219.

2. Grover, L.K. Quantum computers can search arbitrarily large databases by a single query. Phys. Rev. Lett. 1997, 79, 4709.

3. Bennett, C.H.; Bernstein, E.; Brassard, G.; Vazirani, U. Strengths and weaknesses of quantum computing. SIAM J. Comput. 1997, 26, 1510.

4. Boyer, M.; Brassard, G.; Høyer, P.; Tapp, A. Tight bounds on quantum searching. Fortschr. Phys. 1998, 46, 493.

5. Zalka, C. Grover’s quantum searching algorithm is optimal. Phys. Rev. A 1999, 60, 2746.

6. Grover, L.K.; Radhakrishnan, J. Is partial quantum search of a database any easier? In Proceedings of the Seventeenth Annual ACM Symposium on Parallelism in Algorithms and Architectures; ACM: Las Vegas, NV, USA, 2005; pp. 186–194.

7. Brassard, G. Searching a quantum phone book. Science 1997, 275, 627.

8. Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information, 2nd ed.; Cambridge University Press: Cambridge, UK, 2010.

9. Brassard, G.; Høyer, P. An exact quantum polynomial-time algorithm for Simon’s problem. In Proceedings of the Fifth Israel Symposium on the Theory of Computing Systems; IEEE Computer Society: Washington, DC, USA, 1997; pp. 12–23.

10. Grover, L.K. Quantum computers can search rapidly by using almost any transformation. Phys. Rev. Lett. 1998, 80, 4329.

11. Brassard, G.; Høyer, P.; Tapp, A. Quantum counting. In International Colloquium on Automata, Languages, and Programming; Larsen, K.G., Skyum, S., Winskel, G., Eds.; Springer: Berlin, Germany, 1998; pp. 820–831.

12. Brassard, G.; Høyer, P.; Mosca, M.; Tapp, A. Quantum amplitude amplification and estimation. In Quantum Computation and Information; Lomonaco, S.J., Jr., Brandt, H.E., Eds.; AMS: Providence, RI, USA, 2002; pp. 53–74.

13. Long, G.L.; Li, Y.S.; Zhang, W.L.; Niu, L. Phase matching in quantum searching. Phys. Lett. A 1999, 262, 27.

14. Høyer, P. Arbitrary phases in quantum amplitude amplification. Phys. Rev. A 2000, 62, 052304.

15. Long, G.-L.; Li, X.; Sun, Y. Phase matching condition for quantum search with a generalized initial state. Phys. Lett. A 2002, 294, 143.

16. Li, P.; Li, S. Phase matching in Grover’s algorithm. Phys. Lett. A 2007, 366, 42.

17. Grover, L.K. Fixed-point quantum search. Phys. Rev. Lett. 2005, 95, 150501.

18. Chakraborty, S.; Radhakrishnan, J.; Raghunathan, N. Bounds for error reduction with few quantum queries. In Approximation, Randomization and Combinatorial Optimization. Algorithms and Techniques; Springer: Berlin, Germany, 2005; pp. 245–256.

19. Tulsi, T.; Grover, L.K.; Patel, A. A new algorithm for fixed point quantum search. Quantum Inf. Comput. 2006, 6, 483.

20. Yoder, T.J.; Low, G.H.; Chuang, I.L. Fixed-point quantum search with an optimal number of queries. Phys. Rev. Lett. 2014, 113, 210501.

21. Toyama, F.M.; van Dijk, W.; Nogami, Y.; Tabuchi, M.; Kimura, Y. Multiphase matching in the Grover algorithm. Phys. Rev. A 2008, 77, 042324.

22. Toyama, F.M.; Kasai, S.; van Dijk, W.; Nogami, Y. Matched-multiphase Grover algorithm for a small number of marked states. Phys. Rev. A 2009, 79, 014301.

23. Mason, J.; Handscomb, D. Chebyshev Polynomials; CRC Press: Boca Raton, FL, USA, 2002.

24. Zwillinger, D. CRC Standard Mathematical Tables and Formulae; CRC Press: Boca Raton, FL, USA, 2011.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).