Remote Monitoring of Vital Signs in Diverse Non-Clinical and Clinical Scenarios Using Computer Vision Systems: A Review

Abstract

1. Introduction

2. Video Camera Imaging Based Method

2.1. Basic Framework

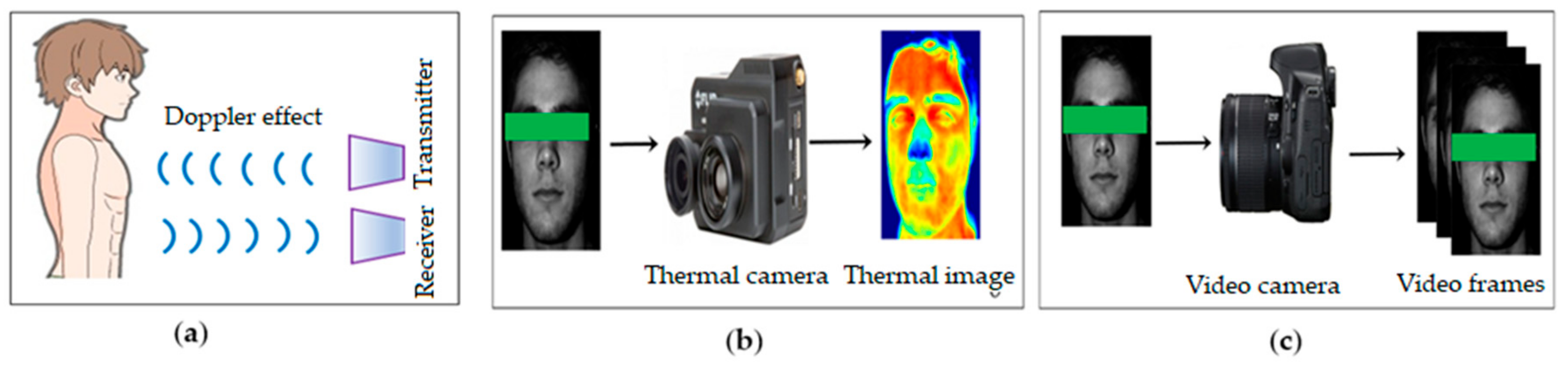

2.1.1. Data Acquisition

2.1.2. ROI Detection

2.1.3. Raw Signal Extraction

2.1.4. Noise Artefact Removal

2.1.5. Vital Sign Extraction
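
As a rough illustration of how the five stages listed in Section 2.1 connect, the following minimal Python sketch estimates HR from a stack of RGB frames. It is not a method from any reviewed study: the function names `estimate_heart_rate` and `synthetic_frames`, the fixed rectangular ROI, the choice of the green channel, the linear detrending and the 0.7–4.0 Hz pass band are all assumptions made for illustration. In practice the ROI would typically come from a face detector, and the noise artefact removal step would be considerably more elaborate.

```python
import numpy as np


def estimate_heart_rate(frames, fps, roi, band=(0.7, 4.0)):
    """Estimate heart rate (beats per minute) from RGB video frames.

    frames: array of shape (T, H, W, 3); roi: (top, bottom, left, right).
    All parameter names and defaults are illustrative assumptions.
    """
    frames = np.asarray(frames, dtype=float)
    top, bottom, left, right = roi

    # ROI detection is assumed done elsewhere; a fixed box stands in for it.
    patch = frames[:, top:bottom, left:right, 1]  # green channel only

    # Raw signal extraction: one spatially averaged value per frame.
    raw = patch.reshape(len(frames), -1).mean(axis=1)

    # Noise artefact removal (simplified): subtract a fitted linear trend.
    t = np.arange(len(raw))
    detrended = raw - np.polyval(np.polyfit(t, raw, 1), t)

    # Vital sign extraction: strongest spectral peak inside the pass band.
    windowed = detrended * np.hanning(len(detrended))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(windowed), d=1.0 / fps)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    peak_hz = freqs[in_band][np.argmax(power[in_band])]
    return 60.0 * peak_hz


def synthetic_frames(pulse_hz=1.2, fps=30.0, seconds=10, size=8, seed=0):
    """Hypothetical test data: flat frames with a faint green-channel pulse."""
    rng = np.random.default_rng(seed)
    n = int(fps * seconds)
    t = np.arange(n) / fps
    frames = 100.0 + rng.normal(0.0, 0.05, size=(n, size, size, 3))
    frames[..., 1] += 0.5 * np.sin(2 * np.pi * pulse_hz * t)[:, None, None]
    return frames


frames = synthetic_frames()
hr = estimate_heart_rate(frames, fps=30.0, roi=(0, 8, 0, 8))
print(f"Estimated HR: {hr:.1f} bpm")
```

With the synthetic 1.2 Hz pulse, the estimate should fall at or near 72 bpm. A 10 s window gives frequency bins 0.1 Hz apart, so this simple peak-picking approach cannot resolve HR more finely than about 6 bpm without a longer window or interpolation.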

2.2. Motion Based Methods

2.3. Colour-Based Method

3. Different Aspects of Colour-Based Method

3.1. Motion Artefacts

3.2. Illumination Variations

3.3. Both Illumination Variations and Motion Artefacts

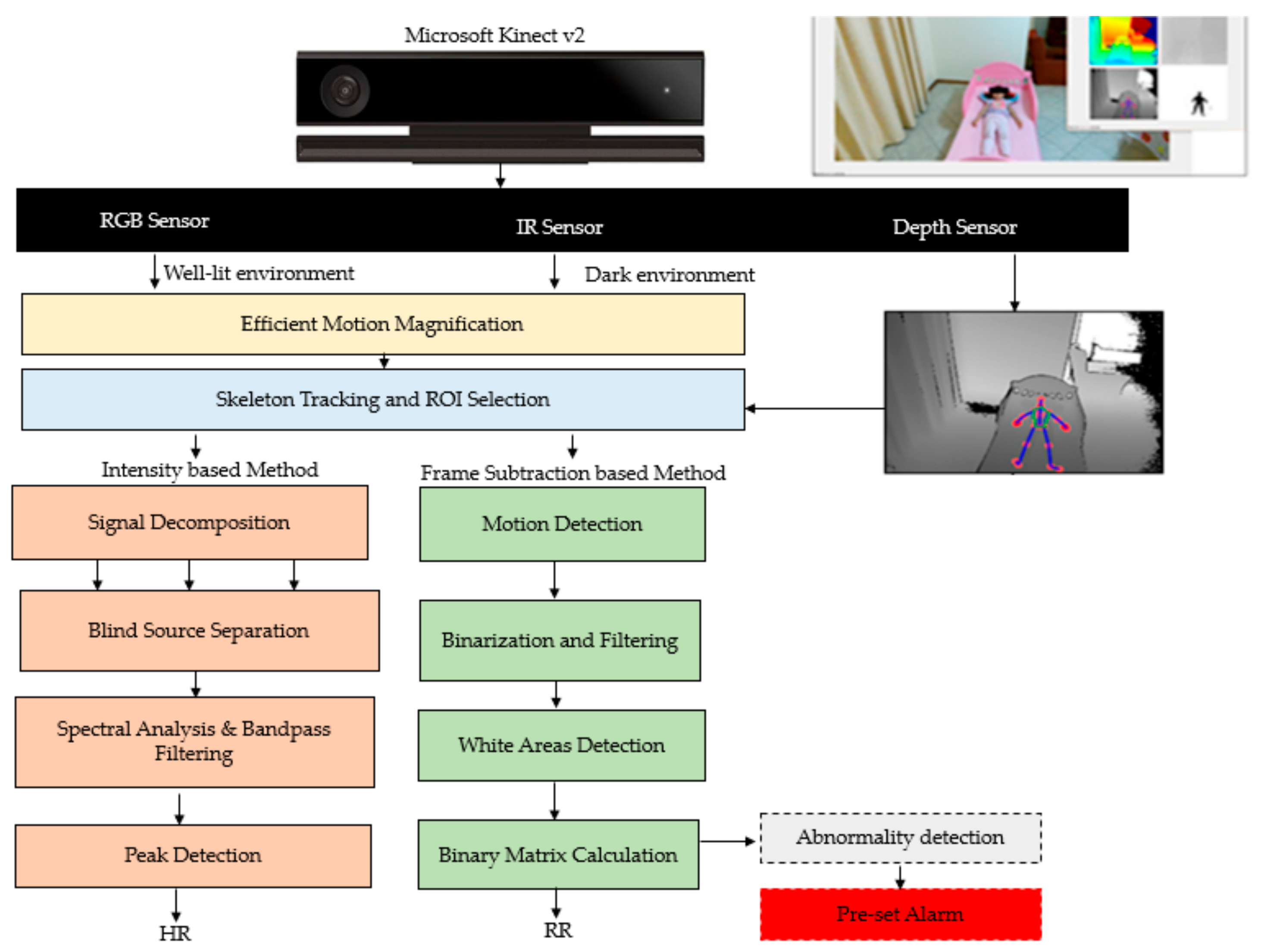

3.4. Alternate Sensors

3.5. Different Subjects

3.6. Different Vital Signs

3.7. Multiple ROIs

3.8. Long Distance and Multiple Persons Simultaneously

3.9. Others

4. Application

4.1. Clinical Application

4.1.1. Neonatal Monitoring

4.1.2. Assessing Patients with Chronic Pain

4.1.3. Critical Patient Monitoring

4.1.4. Arrhythmia Detection

4.1.5. Anesthesia Monitoring

4.1.6. Monitoring Dialysis Patients

4.1.7. Burn Care

4.2. Non-Clinical Application

4.2.1. Home Health Care

4.2.2. Fitness Monitoring

4.2.3. Sleep Monitoring

4.2.4. Polygraph

4.2.5. Living Skin Detection

4.2.6. Security

4.2.7. Emotion/Stress Monitoring

4.2.8. Driver Monitoring

4.2.9. War Zone or Natural Calamity

4.2.10. Animal Research

5. Research Gaps and Challenges and Future Direction

- Most current studies address either motion artefacts or illumination variations. In real-world scenarios, however, both degrade accuracy and usability at the same time, and only a few researchers have proposed solutions that handle both. Developing a method that is robust to motion artefacts and illumination variations together therefore remains an open research topic.

- Researchers still focus mainly on extracting HR and RR. Blood oxygen saturation, however, also plays an important role in assessing a person’s health. Blood glucose [151,152,153] is another important vital sign, which helps to monitor and maintain the welfare of patients with diabetes. More research is therefore needed on monitoring blood oxygen saturation and blood glucose.

- All of the studies except Poh et al. (3 subjects), Zhao et al. (2 subjects) and Al-Naji et al. (6 subjects) considered only a single subject at a time when monitoring vital signs. Monitoring multiple persons simultaneously is a major limitation of current studies that needs to be overcome in the future.

- The distance between the camera and the subject is another challenge. All of the studies except Al-Naji et al. (60 m) considered only very short distances when monitoring vital signs.

- The number of ROIs and the way they are selected remain important issues, as very few researchers have addressed them well enough for industrial or commercial use. Most studies selected ROIs manually, while some used automatic methods that choose from a very limited number of ROIs in limited scenarios. Advanced techniques are required to select multiple ROIs automatically.

- Researchers mainly recruited healthy young participants for their experiments, rather than patients, elderly people or premature babies. More research is needed with different subject groups, such as premature babies and elderly people.

- Most existing works used privately owned datasets. Only a few used publicly available databases such as MAHNOB-HCI (human-computer interaction) or DEAP (Database for Emotion Analysis using Physiological Signals). The lack of publicly available datasets recorded under realistic conditions is another challenge to address in the future.

- To validate proposed methods, researchers have mainly used a pulse oximeter as the ground truth; very few have used ECG. There are indications that no commercial instrument is truly accurate and that most are simply accepted as accurate [110].

- Future research could also include multi-camera fusion as well as new non-visible light sensors to tackle the visible light camera’s shortcomings.
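
The validation issue raised above, comparing camera-based estimates against a reference instrument that is itself imperfect, is commonly handled with agreement statistics rather than accuracy alone. The following Python sketch computes the mean absolute error, the root-mean-square error, and the Bland–Altman bias with 95% limits of agreement between paired readings. The function name `agreement_stats` and the sample readings are hypothetical and are not taken from any study cited here.

```python
import numpy as np


def agreement_stats(estimate, reference):
    """Agreement between paired readings from two measurement methods.

    estimate, reference: equal-length sequences (e.g. HR in bpm).
    Returns MAE, RMSE, Bland-Altman bias and 95% limits of agreement.
    """
    estimate = np.asarray(estimate, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if estimate.shape != reference.shape:
        raise ValueError("estimate and reference must have the same shape")

    diff = estimate - reference
    bias = diff.mean()          # mean difference between the two methods
    sd = diff.std(ddof=1)       # sample standard deviation of differences
    return {
        "mae": np.abs(diff).mean(),
        "rmse": np.sqrt((diff ** 2).mean()),
        "bias": bias,
        "loa": (bias - 1.96 * sd, bias + 1.96 * sd),
    }


# Hypothetical paired HR readings (bpm): camera estimate vs. pulse oximeter.
camera = [72, 75, 80, 66]
oximeter = [70, 76, 79, 68]
stats = agreement_stats(camera, oximeter)
print(stats)
```

Reporting the limits of agreement alongside the bias treats neither instrument as error-free, which fits the caution about reference devices noted in the list above.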

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Brüser, C.; Antink, C.H.; Wartzek, T.; Walter, M.; Leonhardt, S. Ambient and unobtrusive cardiorespiratory monitoring techniques. IEEE Rev. Biomed. Eng. 2015, 8, 30–43.

- Chen, X.; Cheng, J.; Song, R.; Liu, Y.; Ward, R.; Wang, Z.J. Video-Based Heart Rate Measurement: Recent Advances and Future Prospects. IEEE Trans. Instrum. Meas. 2018, 68, 3600–3615.

- Zhao, F.; Li, M.; Qian, Y.; Tsien, J.Z. Remote measurements of heart and respiration rates for telemedicine. PLoS ONE 2013, 8, e71384.

- Kranjec, J.; Beguš, S.; Geršak, G.; Drnovšek, J. Non-contact heart rate and heart rate variability measurements: A review. Biomed. Signal Process. Control 2014, 13, 102–112.

- Rouast, P.V.; Adam, M.T.P.; Chiong, R.; Cornforth, D.; Lux, E. Remote heart rate measurement using low-cost RGB face video: A technical literature review. Front. Comput. Sci. 2018, 12, 858–872.

- Phansalkar, S.; Edworthy, J.; Hellier, E.; Seger, D.L.; Schedlbauer, A.; Avery, A.J.; Bates, D.W. A review of human factors principles for the design and implementation of medication safety alerts in clinical information systems. J. Am. Med. Inform. Assoc. 2010, 17, 493–501.

- Sun, Y.; Thakor, N. Photoplethysmography revisited: From contact to noncontact, from point to imaging. IEEE Trans. Biomed. Eng. 2016, 63, 463–477.

- Charlton, P.H.; Bonnici, T.; Tarassenko, L.; Alastruey, J.; Clifton, D.; Beale, R.; Watkinson, P. Extraction of respiratory signals from the electrocardiogram and photoplethysmogram: Technical and physiological determinants. Physiol. Meas. 2017, 38, 669–690.

- Al-Naji, A.; Gibson, K.; Chahl, J. Remote sensing of physiological signs using a machine vision system. J. Med. Eng. Technol. 2017, 41, 396–405.

- Aarts, L.A.; Jeanne, V.; Cleary, J.P.; Lieber, C.; Nelson, J.S.; Oetomo, S.B.; Verkruysse, W. Non-contact heart rate monitoring utilizing camera photoplethysmography in the neonatal intensive care unit—A pilot study. Early Hum. Dev. 2013, 89, 943–948.

- Zaunseder, S.; Henning, A.; Wedekind, D.; Trumpp, A.; Malberg, H. Unobtrusive acquisition of cardiorespiratory signals. Somnologie 2017, 21, 93–100.

- Al-Naji, A.; Gibson, K.; Lee, S.-H.; Chahl, J. Monitoring of cardiorespiratory signal: Principles of remote measurements and review of methods. IEEE Access 2017, 5, 15776–15790.

- Scalise, L. Non contact heart monitoring. In Advances in Electrocardiograms-Methods and Analysis; IntechOpen: London, UK, 2012.

- Tarjan, P.P.; McFee, R. Electrodeless measurements of the effective resistivity of the human torso and head by magnetic induction. IEEE Trans. Biomed. Eng. 1968, 4, 266–278.

- Guardo, R.; Trudelle, S.; Adler, A.; Boulay, C.; Savard, P. Contactless recording of cardiac related thoracic conductivity changes. In Proceedings of the 1995 IEEE 17th International Conference of the Engineering in Medicine and Biology Society, Montreal, QC, Canada, 20–23 September 1995; pp. 1581–1582.

- Richer, A.; Adler, A. Eddy current based flexible sensor for contactless measurement of breathing. In Proceedings of the 2005 IEEE Instrumentation and Measurement Technology Conference Proceedings, Ottawa, ON, Canada, 16–19 May 2005; pp. 257–260.

- Steffen, M.; Aleksandrowicz, A.; Leonhardt, S. Mobile noncontact monitoring of heart and lung activity. IEEE Trans. Biomed. Circuits Syst. 2007, 1, 250–257.

- Vetter, P.; Leicht, L.; Leonhardt, S.; Teichmann, D. Integration of an electromagnetic coupled sensor into a driver seat for vital sign monitoring: Initial insight. In Proceedings of the 2017 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Vienna, Austria, 27–28 June 2017; pp. 185–190.

- Dalal, H.; Basu, A.; Abegaonkar, M.P. Remote sensing of vital sign of human body with radio frequency. CSI Trans. ICT 2017, 5, 161–166.

- Rabbani, M.S.; Ghafouri-Shiraz, H. Ultra-wide patch antenna array design at 60 GHz band for remote vital sign monitoring with Doppler radar principle. J. Infrared Millim. Terahertz Waves 2017, 38, 548–566.

- Scalise, L.; Marchionni, P.; Ercoli, I. Optical method for measurement of respiration rate. In Proceedings of the 2010 IEEE International Workshop on Medical Measurements and Applications, Ottawa, ON, Canada, 30 April–1 May 2010; pp. 19–22.

- Lohman, B.; Boric-Lubecke, O.; Lubecke, V.; Ong, P.; Sondhi, M. A digital signal processor for Doppler radar sensing of vital signs. IEEE Eng. Med. Biol. Mag. 2002, 21, 161–164.

- Mercuri, M.; Liu, Y.-H.; Lorato, I.; Torfs, T.; Bourdoux, A.; Van Hoof, C. Frequency-Tracking CW doppler radar solving small-angle approximation and null point issues in non-contact vital signs monitoring. IEEE Trans. Biomed. Circuits Syst. 2017, 11, 671–680.

- Nosrati, M.; Shahsavari, S.; Lee, S.; Wang, H.; Tavassolian, N. A Concurrent Dual-Beam Phased-Array Doppler Radar Using MIMO Beamforming Techniques for Short-Range Vital-Signs Monitoring. IEEE Trans. Antennas Propag. 2019, 67, 2390–2404.

- Marchionni, P.; Scalise, L.; Ercoli, I.; Tomasini, E.P. An optical measurement method for the simultaneous assessment of respiration and heart rates in preterm infants. Rev. Sci. Instrum. 2013, 84, 121705.

- Sirevaag, E.J.; Casaccia, S.; Richter, E.A.; O’Sullivan, J.A.; Scalise, L.; Rohrbaugh, J.W. Cardiorespiratory interactions: Noncontact assessment using laser Doppler vibrometry. Psychophysiology 2016, 53, 847–867.

- Holdsworth, D.W.; Norley, C.J.D.; Frayne, R.; Steinman, D.A.; Rutt, B.K. Characterization of common carotid artery blood-flow waveforms in normal human subjects. Physiol. Meas. 1999, 20, 219–240.

- Arlotto, P.; Grimaldi, M.; Naeck, R.; Ginoux, J.-M. An ultrasonic contactless sensor for breathing monitoring. Sensors 2014, 14, 15371–15386.

- Min, S.D.; Yoon, D.J.; Yoon, S.W.; Yun, Y.H.; Lee, M. A study on a non-contacting respiration signal monitoring system using Doppler ultrasound. Med. Biol. Eng. 2007, 45, 1113–1119.

- Yang, M.; Liu, Q.; Turner, T.; Wu, Y. Vital sign estimation from passive thermal video. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Bennett, S.; El Harake, T.N.; Goubran, R.; Knoefel, F. Adaptive Eulerian Video Processing of Thermal Video: An Experimental Analysis. IEEE Trans. Instrum. Meas. 2017, 66, 2516–2524.

- Cardoso, J.-F. Blind signal separation: Statistical principles. Proc. IEEE 1998, 86, 2009–2025.

- Elphick, H.E.; Alkali, A.H.; Kingshott, R.K.; Burke, D.; Saatchi, R. Exploratory study to evaluate respiratory rate using a thermal imaging camera. Respiration 2019, 97, 205–212.

- Luca, C.; Corciovă, C.; Andriţoi, D.; Ciorap, R. The Use of Thermal Imaging Techniques as a Method of Monitoring the New Born. In Proceedings of the 6th International Conference on Advancements of Medicine and Health Care through Technology, Cluj-Napoca, Romania, 17–20 October 2018; pp. 35–39.

- Abbas, A.K.; Heimann, K.; Jergus, K.; Orlikowsky, T.; Leonhardt, S. Neonatal non-contact respiratory monitoring based on real-time infrared thermography. Biomed. Eng. Online 2011, 10, 93.

- Blanik, N.; Abbas, A.K.; Venema, B.; Blazek, V.; Leonhardt, S. Hybrid optical imaging technology for long-term remote monitoring of skin perfusion and temperature behavior. J. Biomed. Opt. 2014, 19, 16012.

- Chauvin, R.; Hamel, M.; Brière, S.; Ferland, F.; Grondin, F.; Létourneau, D.; Tousignant, M.; Michaud, F. Contact-Free respiration rate monitoring using a pan–tilt thermal camera for stationary bike telerehabilitation sessions. IEEE Syst. J. 2014, 10, 1046–1055.

- Pereira, C.B.; Yu, X.; Czaplik, M.; Rossaint, R.; Blazek, V.; Leonhardt, S. Remote monitoring of breathing dynamics using infrared thermography. Biomed. Opt. Express 2015, 6, 4378–4394.

- Garbey, M.; Sun, N.; Merla, A.; Pavlidis, I. Contact-Free measurement of cardiac pulse based on the analysis of thermal imagery. IEEE Trans. Biomed. Eng. 2007, 54, 1418–1426.

- Pavlidis, I.; Dowdall, J.; Sun, N.; Puri, C.; Fei, J.; Garbey, M. Interacting with human physiology. Comput. Vis. Image Underst. 2007, 108, 150–170.

- Sun, N.; Garbey, M.; Merla, A.; Pavlidis, I. Imaging the cardiovascular pulse. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 416–421.

- Chekmenev, S.Y.; Farag, A.A.; Miller, W.M.; Essock, E.A.; Bhatnagar, A. Multiresolution approach for noncontact measurements of arterial pulse using thermal imaging. In Augmented Vision Perception in Infrared; Springer Science and Business Media LLC: Berlin, Germany, 2009; pp. 87–112.

- Abbas, A.K.; Heiman, K.; Orlikowsky, T.; Leonhardt, S. Non-Contact Respiratory Monitoring Based on Real-Time IR-Thermography. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering, Munich, Germany, 7–12 September 2009; pp. 1306–1309.

- Pulli, K.; Baksheev, A.; Kornyakov, K.; Eruhimov, V. Real-time computer vision with OpenCV. Commun. ACM 2012, 55, 61–69.

- McDuff, D.J.; Estepp, J.R.; Piasecki, A.M.; Blackford, E.B. A survey of remote optical photoplethysmographic imaging methods. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6398–6404.

- Sikdar, A.; Behera, S.K.; Dogra, D.P. Computer-Vision-Guided human pulse rate estimation: A Review. IEEE Rev. Biomed. Eng. 2016, 9, 91–105.

- Hassan, M.; Malik, A.; Fofi, D.; Saad, N.; Karasfi, B.; Ali, Y.; Mériaudeau, F. Heart rate estimation using facial video: A review. Biomed. Signal Process. Control 2017, 38, 346–360.

- Zaunseder, S.; Trumpp, A.; Wedekind, D.; Malberg, H. Cardiovascular assessment by imaging photoplethysmography—A review. Biomed. Tech. Eng. 2018, 63, 617–634.

- Nakajima, K.; Osa, A.; Miike, H. A method for measuring respiration and physical activity in bed by optical flow analysis. In Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’ (Cat. No. 97CH36136), Chicago, IL, USA, 30 October–2 November 1997; pp. 2054–2057.

- Frigola, M.; Amat, J.; Pagès, J. Vision based respiratory monitoring system. In Proceedings of the 10th Mediterranean Conference on Control and Automation (MED 2002), Lisbon, Portugal, 9–12 July 2002; pp. 9–13.

- Balakrishnan, G.; Durand, F.; Guttag, J. Detecting pulse from head motions in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3430–3437.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; pp. 1–6.

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; pp. 674–679.

- Shan, L.; Yu, M. Video-based heart rate measurement using head motion tracking and ICA. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; pp. 160–164.

- Irani, R.; Nasrollahi, K.; Moeslund, T.B. Improved pulse detection from head motions using DCT. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; pp. 118–124.

- Haque, M.A.; Irani, R.; Nasrollahi, K.; Moeslund, T.B. Heartbeat rate measurement from facial video. IEEE Intell. Syst. 2016, 31, 40–48.

- Lomaliza, J.-P.; Park, H. Detecting Pulse from Head Motions Using Smartphone Camera. In Proceedings of the International Conference on Advanced Engineering Theory and Applications, Busan, Korea, 8–10 December 2016; pp. 243–251.

- Lomaliza, J.-P.; Park, H. Improved Heart-Rate Measurement from Mobile Face Videos. Electronics 2019, 8, 663.

- He, X.; Goubran, R.A.; Liu, X.P. Wrist pulse measurement and analysis using Eulerian video magnification. In Proceedings of the 2016 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Las Vegas, NV, USA, 24–27 February 2016; pp. 41–44.

- Wu, H.-Y.; Rubinstein, M.; Shih, E.; Guttag, J.; Durand, F.; Freeman, W. Eulerian video magnification for revealing subtle changes in the world. ACM Trans. Graph. 2012, 31, 1–8.

- Al-Naji, A.; Chahl, J. Contactless cardiac activity detection based on head motion magnification. Int. J. Image Graph. 2017, 17, 1750001.

- Al-Naji, A.; Chahl, J. Remote respiratory monitoring system based on developing motion magnification technique. Biomed. Signal Process. Control 2016, 29, 1–10.

- Al-Naji, A.; Gibson, K.; Lee, S.-H.; Chahl, J. Real time apnoea monitoring of children using the microsoft kinect sensor: A pilot study. Sensors 2017, 17, 286.

- Al-Naji, A.; Chahl, J. Detection of cardiopulmonary activity and related abnormal events using microsoft kinect sensor. Sensors 2018, 18, 920.

- Al-Naji, A.; Lee, S.-H.; Chahl, J. An efficient motion magnification system for real-time applications. Mach. Vis. Appl. 2018, 29, 585–600.

- Hertzman, A.B. Observations on the finger volume pulse recorded photoelectrically. Am. J. Physiol. 1937, 119, 334–335.

- Wang, W.; den Brinker, A.C.; Stuijk, S.; de Haan, G. Algorithmic principles of remote PPG. IEEE Trans. Biomed. Eng. 2017, 64, 1479–1491.

- Verkruysse, W.; Svaasand, L.O.; Nelson, J.S. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

- Poh, M.-Z.; McDuff, D.J.; Picard, R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 2010, 18, 10762–10774.

- Poh, M.-Z.; McDuff, D.J.; Picard, R.W. Advancements in noncontact, multiparameter physiological measurements using a webcam. IEEE Trans. Biomed. Eng. 2011, 58, 7–11.

- Pursche, T.; Krajewski, J.; Moeller, R. Video-based heart rate measurement from human faces. In Proceedings of the 2012 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 13–16 January 2012; pp. 544–545.

- Lewandowska, M.; Rumiński, J.; Kocejko, T.; Nowak, J. Measuring pulse rate with a webcam—A non-contact method for evaluating cardiac activity. In Proceedings of the 2011 Federated Conference on Computer Science and Information Systems (FedCSIS), Szczecin, Poland, 18–21 September 2011; pp. 405–410.

- Hyvärinen, A.; Oja, E. Independent component analysis: Algorithms and applications. Neural Netw. 2000, 13, 411–430.

- Sun, Y.; Hu, S.; Azorin-Peris, V.; Greenwald, S.; Chambers, J.; Zhu, Y. Motion-compensated noncontact imaging photoplethysmography to monitor cardiorespiratory status during exercise. J. Biomed. Opt. 2011, 16, 077010.

- Feng, L.; Po, L.-M.; Xu, X.; Li, Y. Motion artifacts suppression for remote imaging photoplethysmography. In Proceedings of the 2014 19th International Conference on Digital Signal Processing, Hong Kong, China, 20–23 August 2014; pp. 18–23.

- Qi, H.; Guo, Z.; Chen, X.; Shen, Z.; Wang, Z.J. Video-based human heart rate measurement using joint blind source separation. Biomed. Signal Process. Control 2017, 31, 309–320.

- Bousefsaf, F.; Maaoui, C.; Pruski, A. Continuous wavelet filtering on webcam photoplethysmographic signals to remotely assess the instantaneous heart rate. Biomed. Signal Process. Control 2013, 8, 568–574.

- De Haan, G.; Jeanne, V. Robust pulse rate from chrominance-based rPPG. IEEE Trans. Biomed. Eng. 2013, 60, 2878–2886.

- De Haan, G.; Van Leest, A. Improved motion robustness of remote-PPG by using the blood volume pulse signature. Physiol. Meas. 2014, 35, 1913–1926.

- Feng, L.; Po, L.-M.; Xu, X.; Li, Y.; Ma, R. Motion-resistant remote imaging photoplethysmography based on the optical properties of skin. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 879–891.

- Wang, W.; Stuijk, S.; De Haan, G. A novel algorithm for remote photoplethysmography: Spatial subspace rotation. IEEE Trans. Biomed. Eng. 2016, 63, 1974–1984.

- Wang, W.; Brinker, A.C.D.; Stuijk, S.; De Haan, G. Robust heart rate from fitness videos. Physiol. Meas. 2017, 38, 1023–1044.

- Wu, B.-F.; Huang, P.-W.; Tsou, T.-Y.; Lin, T.-M.; Chung, M.-L. Camera-based heart rate measurement using continuous wavelet transform. In Proceedings of the 2017 International Conference on System Science and Engineering (ICSSE), Ho Chi Minh City, Vietnam, 21–23 July 2017; pp. 7–11.

- Wu, B.-F.; Huang, P.-W.; Lin, C.-H.; Chung, M.-L.; Tsou, T.-Y.; Wu, Y.-L. Motion resistant image-photoplethysmography based on spectral peak tracking algorithm. IEEE Access 2018, 6, 21621–21634.

- Xie, K.; Fu, C.-H.; Liang, H.; Hong, H.; Zhu, X. Non-contact Heart Rate Monitoring for Intensive Exercise Based on Singular Spectrum Analysis. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 228–233.

- McDuff, D.J.; Blackford, E.B.; Estepp, J.R.; Nishidate, I. A Fast Non-Contact Imaging Photoplethysmography Method Using a Tissue-Like Model. In Optical Diagnostics and Sensing XVIII: Toward Point-of-Care Diagnostics; International Society for Optics and Photonics: San Francisco, CA, USA, 2018; p. 105010Q-1-9.

- Fallet, S.; Schoenenberger, Y.; Martin, L.; Braun, F.; Moser, V.; Vesin, J.-M. Imaging photoplethysmography: A real-time signal quality index. In Proceedings of the 2017 Computing in Cardiology (CinC), Rennes, France, 24–27 September 2017; pp. 1–4.

- Moço, A.V.; Stuijk, S.; De Haan, G. Motion robust PPG-imaging through color channel mapping. Biomed. Opt. Express 2016, 7, 1737–1754.

- Chen, D.-Y.; Wang, J.-J.; Lin, K.-Y.; Chang, H.-H.; Wu, H.-K.; Chen, Y.-S.; Lee, S.-Y. Image sensor-based heart rate evaluation from face reflectance using Hilbert–Huang transform. IEEE Sens. J. 2015, 15, 618–627.

- Lin, K.-Y.; Chen, D.-Y.; Tsai, W.-J. Face-based heart rate signal decomposition and evaluation using multiple linear regression. IEEE Sens. J. 2016, 16, 1351–1360.

- Lee, D.; Kim, J.; Kwon, S.; Park, K. Heart rate estimation from facial photoplethysmography during dynamic illuminance changes. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2758–2761.

- Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.A.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807–831.

- Cheng, J.; Chen, X.; Xu, L.; Wang, Z.J. Illumination variation-resistant video-based heart rate measurement using joint blind source separation and ensemble empirical mode decomposition. IEEE J. Biomed. Health Inform. 2017, 21, 1422–1433.

- Xu, L.; Cheng, J.; Chen, X. Illumination variation interference suppression in remote PPG using PLS and MEMD. Electron. Lett. 2017, 53, 216–218.

- Li, X.; Chen, J.; Zhao, G.; Pietikainen, M. Remote heart rate measurement from face videos under realistic situations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 4264–4271.

- Kumar, M.; Veeraraghavan, A.; Sabharwal, A. DistancePPG: Robust non-contact vital signs monitoring using a camera. Biomed. Opt. Express 2015, 6, 1565–1588.

- Al-Naji, A.; Perera, A.G.; Chahl, J. Remote monitoring of cardiorespiratory signals from a hovering unmanned aerial vehicle. Biomed. Eng. Online 2017, 16, 101.

- Al-Naji, A.; Lee, S.-H.; Chahl, J. Quality index evaluation of videos based on fuzzy interface system. IET Image Process. 2017, 11, 292–300.

- Chen, J.-H.; Tang, I.-L.; Chang, C.-H. Enhancing the detection rate of inclined faces. In Proceedings of the 2015 IEEE Trustcom/BigDataSE/ISPA, Helsinki, Finland, 20–22 August 2015; pp. 143–146.

- Borga, M.; Knutsson, H. A Canonical Correlation Approach to Blind Source Separation; Report LiU-IMT-EX-0062; Department of Biomedical Engineering, Linköping University: Linköping, Sweden, 2001.

- Kwon, S.; Kim, H.; Park, K.S. Validation of heart rate extraction using video imaging on a built-in camera system of a smartphone. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 2174–2177.

- Al-Naji, A.; Perera, A.G.; Chahl, J. Remote Measurement of Cardiopulmonary Signal Using an Unmanned Aerial Vehicle; IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2018; p. 012001.

- McDuff, D.; Gontarek, S.; Picard, R.W. Improvements in remote cardiopulmonary measurement using a five band digital camera. IEEE Trans. Biomed. Eng. 2014, 61, 2593–2601.

- Sun, Y.; Azorin-Peris, V.; Kalawsky, R.; Hu, S.; Papin, C.; Greenwald, S.E. Use of ambient light in remote photoplethysmographic systems: Comparison between a high-performance camera and a low-cost webcam. J. Biomed. Opt. 2012, 17, 37005.

- Bernacchia, N.; Scalise, L.; Casacanditella, L.; Ercoli, I.; Marchionni, P.; Tomasini, E.P. Non contact measurement of heart and respiration rates based on Kinect™. In Proceedings of the 2014 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Lisboa, Portugal, 11–12 June 2014; pp. 1–5.

- Smilkstein, T.; Buenrostro, M.; Kenyon, A.; Lienemann, M.; Larson, G. Heart rate monitoring using Kinect and color amplification. In Proceedings of the 2014 IEEE Healthcare Innovation Conference (HIC), Seattle, WA, USA, 8–10 October 2014; pp. 60–62.

- Gambi, E.; Agostinelli, A.; Belli, A.; Burattini, L.; Cippitelli, E.; Fioretti, S.; Pierleoni, P.; Ricciuti, M.; Sbrollini, A.; Spinsante, S. Heart rate detection using microsoft kinect: Validation and comparison to wearable devices. Sensors 2017, 17, 1776.

- Scalise, L.; Bernacchia, N.; Ercoli, I.; Marchionni, P. Heart rate measurement in neonatal patients using a webcamera. In Proceedings of the 2012 IEEE International Symposium on Medical Measurements and Applications Proceedings, Budapest, Hungary, 18–19 May 2012; pp. 1–4.

- Cobos-Torres, J.-C.; Abderrahim, M.; Martínez-Orgado, J. Non-Contact, Simple Neonatal Monitoring by Photoplethysmography. Sensors 2018, 18, 4362.

- Gibson, K.; Al-Naji, A.; Fleet, J.; Steen, M.; Esterman, A.; Chahl, J.; Huynh, J.; Morris, S. Non-contact heart and respiratory rate monitoring of preterm infants based on a computer vision system: A method comparison study. Pediatr. Res. 2019, 1–4.

- Bal, U. Non-contact estimation of heart rate and oxygen saturation using ambient light. Biomed. Opt. Express 2015, 6, 86–97.

- Fouad, R.M.; Omer, O.A.; Aly, M.H. Optimizing Remote Photoplethysmography Using Adaptive Skin Segmentation for Real-Time Heart Rate Monitoring. IEEE Access 2019, 7, 76513–76528.

- Datcu, D.; Cidota, M.; Lukosch, S.; Rothkrantz, L. Noncontact automatic heart rate analysis in visible spectrum by specific face regions. In Proceedings of the 14th International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 28–29 June 2013; pp. 120–127.

- Feng, L.; Po, L.-M.; Xu, X.; Li, Y.; Cheung, C.-H.; Cheung, K.-W.; Yuan, F. Dynamic ROI based on K-means for remote photoplethysmography. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 19–24 April 2015; pp. 1310–1314.

- Zhao, C.; Chen, W.; Lin, C.-L.; Wu, X. Physiological signal preserving video compression for remote photoplethysmography. IEEE Sens. J. 2019, 19, 4537–4548.

- Al-Naji, A.; Chahl, J.; Lee, S.-H. Cardiopulmonary Signal Acquisition from Different Regions Using Video Imaging Analysis. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 7, 117–131.

- Al-Naji, A.; Chahl, J. Remote Optical Cardiopulmonary Signal Extraction With Noise Artifact Removal, Multiple Subject Detection & Long-Distance. IEEE Access 2018, 6, 11573–11595.

- Hsu, Y.; Lin, Y.-L.; Hsu, W. Learning-based heart rate detection from remote photoplethysmography features. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 4433–4437.

- Chen, W.; McDuff, D. DeepPhys: Video-based physiological measurement using convolutional attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 349–365.

- Yu, Z.; Li, X.; Zhao, G. Remote Photoplethysmograph Signal Measurement from Facial Videos Using Spatio-Temporal Networks. arXiv 2019, arXiv:1905.02419.

- Wiede, C.; Richter, J.; Hirtz, G. Signal fusion based on intensity and motion variations for remote heart rate determination. In Proceedings of the 2016 IEEE International Conference on Imaging Systems and Techniques (IST), Chania, Greece, 4–6 October 2016; pp. 526–531.

- Klaessens, J.H.; van den Born, M.; van der Veen, A.; Sikkens-van de Kraats, J.; van den Dungen, F.A.; Verdaasdonk, R.M. Development of a baby friendly non-contact method for measuring vital signs: First results of clinical measurements in an open incubator at a neonatal intensive care unit. In Advanced Biomedical and Clinical Diagnostic Systems XII; International Society for Optics and Photonics: San Francisco, CA, USA, 2014; pp. 89351P-1–89351P-7.

- Villarroel, M.; Guazzi, A.; Jorge, J.; Davis, S.; Watkinson, P.; Green, G.; Shenvi, A.; McCormick, K.; Tarassenko, L. Continuous non-contact vital sign monitoring in neonatal intensive care unit. Healthc. Technol. Lett. 2014, 1, 87–91.

- Rubins, U.; Marcinkevics, Z.; Logina, I.; Grabovskis, A.; Kviesis-Kipge, E. Imaging Photoplethysmography for Assessment of Chronic Pain Patients. In Optical Diagnostics and Sensing XIX: Toward Point-of-Care Diagnostics; International Society for Optics and Photonics: San Francisco, CA, USA, 2019; pp. 1088508-1–1088508-8.

- Zaproudina, N.; Teplov, V.; Nippolainen, E.; Lipponen, J.A.; Kamshilin, A.A.; Närhi, M.; Karjalainen, P.A.; Giniatullin, R. Asynchronicity of facial blood perfusion in migraine. PLoS ONE 2013, 8, e80189.

- Rasche, S.; Trumpp, A.; Waldow, T.; Gaetjen, F.; Plötze, K.; Wedekind, D.; Schmidt, M.; Malberg, H.; Matschke, K.; Zaunseder, S. Camera-based photoplethysmography in critical care patients. Clin. Hemorheol. Microcirc. 2016, 64, 77–90.

- Amelard, R.; Clausi, D.A.; Wong, A. Spectral-spatial fusion model for robust blood pulse waveform extraction in photoplethysmographic imaging. Biomed. Opt. Express 2016, 7, 4874–4885.

- Rubīns, U.; Spīgulis, J.; Miščuks, A. Photoplethysmography imaging algorithm for continuous monitoring of regional anesthesia. In Proceedings of the 2016 14th ACM/IEEE Symposium on Embedded Systems For Real-time Multimedia (ESTIMedia), Pittsburgh, PA, USA, 6–7 October 2016; pp. 1–5.

- Trumpp, A.; Lohr, J.; Wedekind, D.; Schmidt, M.; Burghardt, M.; Heller, A.R.; Malberg, H.; Zaunseder, S. Camera-based photoplethysmography in an intraoperative setting. Biomed. Eng. Online 2018, 17, 33.

- Villarroel, M.; Jorge, J.; Pugh, C.; Tarassenko, L. Non-contact vital sign monitoring in the clinic. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 278–285.

- Thatcher, J.E.; Li, W.; Rodriguez-Vaqueiro, Y.; Squiers, J.J.; Mo, W.; Lu, Y.; Plant, K.D.; Sellke, E.; King, D.R.; Fan, W. Multispectral and photoplethysmography optical imaging techniques identify important tissue characteristics in an animal model of tangential burn excision. J. Burn. Care Res. 2016, 37, 38–52.

- Vogels, T.; van Gastel, M.; Wang, W.; de Haan, G. Fully-automatic camera-based pulse-oximetry during sleep. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1349–1357.

- Wang, W.; Stuijk, S.; De Haan, G. Unsupervised subject detection via remote PPG. IEEE Trans. Biomed. Eng. 2015, 62, 2629–2637.

- Wang, W.; Stuijk, S.; De Haan, G. Living-Skin classification via remote-PPG. IEEE Trans. Biomed. Eng. 2017, 64, 2781–2792.

- Lakshminarayana, N.N.; Narayan, N.; Napp, N.; Setlur, S.; Govindaraju, V. A discriminative spatio-temporal mapping of face for liveness detection. In Proceedings of the 2017 IEEE International Conference on Identity, Security and Behavior Analysis (ISBA), New Delhi, India, 22–24 February 2017; pp. 1–7.

- Nowara, E.M.; Sabharwal, A.; Veeraraghavan, A. PPGSecure: Biometric presentation attack detection using photopletysmograms. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 56–62.

- Seepers, R.M.; Wang, W.; de Haan, G.; Sourdis, I.; Strydis, C. Attacks on heartbeat-based security using remote photoplethysmography. IEEE J. Biomed. Health Inform. 2017, 22, 714–721.

- Maaoui, C.; Bousefsaf, F.; Pruski, A. Automatic human stress detection based on webcam photoplethysmographic signals. J. Mech. Med. Biol. 2016, 16, 1650039.

- Madan, C.R.; Harrison, T.; Mathewson, K.E. Noncontact measurement of emotional and physiological changes in heart rate from a webcam. Psychophysiology 2018, 55, e13005.

- McDuff, D.; Gontarek, S.; Picard, R. Remote measurement of cognitive stress via heart rate variability. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 2957–2960.

- Burzo, M.; McDuff, D.; Mihalcea, R.; Morency, L.-P.; Narvaez, A.; Pérez-Rosas, V. Towards sensing the influence of visual narratives on human affect. In Proceedings of the 14th ACM International Conference on Multimodal Interaction, Santa Monica, CA, USA, 22–26 October 2012; pp. 153–160.

- Monkaresi, H.; Bosch, N.; Calvo, R.A.; D’Mello, S.K. Automated detection of engagement using video-based estimation of facial expressions and heart rate. IEEE Trans. Affect. Comput. 2016, 8, 15–28.

- Rouast, P.V.; Adam, M.T.; Cornforth, D.J.; Lux, E.; Weinhardt, C. Using contactless heart rate measurements for real-time assessment of affective states. In Information Systems and Neuroscience; Springer: Berlin, Germany, 2017; pp. 157–163.

- Kuo, J.; Koppel, S.; Charlton, J.L.; Rudin-Brown, C.M. Evaluation of a video-based measure of driver heart rate. J. Saf. Res. 2015, 54, 55.e29–59.

- Zhang, Q.; Wu, Q.; Zhou, Y.; Wu, X.; Ou, Y.; Zhou, H. Webcam-based, non-contact, real-time measurement for the physiological parameters of drivers. Measurement 2017, 100, 311–321.

- Lee, K.; Han, D.K.; Ko, H. Video Analytic Based Health Monitoring for Driver in Moving Vehicle by Extracting Effective Heart Rate Inducing Features. J. Adv. Transp. 2018, 2018, 8513487.

- Zhang, Q.; Zhou, Y.; Song, S.; Liang, G.; Ni, H. Heart Rate Extraction Based on Near-Infrared Camera: Towards Driver State Monitoring. IEEE Access 2018, 6, 33076–33087.

- Pereira, C.B.; Kunczik, J.; Bleich, A.; Haeger, C.; Kiessling, F.; Thum, T.; Tolba, R.; Lindauer, U.; Treue, S.; Czaplik, M. Perspective review of optical imaging in welfare assessment in animal-based research. J. Biomed. Opt. 2019, 24, 070601.

- Blanik, N.; Pereira, C.; Czaplik, M.; Blazek, V.; Leonhardt, S. Remote Photopletysmographic Imaging of Dermal Perfusion in a porcine animal model. In Proceedings of the 15th International Conference on Biomedical Engineering, Singapore, 4–7 December 2013; pp. 92–95.

- Unakafov, A.M.; Möller, S.; Kagan, I.; Gail, A.; Treue, S.; Wolf, F. Using imaging photoplethysmography for heart rate estimation in non-human primates. PLoS ONE 2018, 13, e0202581.

- Dantu, V.; Vempati, J.; Srivilliputhur, S. Non-invasive blood glucose monitor based on spectroscopy using a smartphone. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 3695–3698.

- Devadhasan, J.P.; Oh, H.; Choi, C.S.; Kim, S. Whole blood glucose analysis based on smartphone camera module. J. Biomed. Opt. 2015, 20, 117001.

- Leijdekkers, P.; Gay, V.; Lawrence, E. Smart homecare system for health tele-monitoring. In Proceedings of the First International Conference on the Digital Society (ICDS’07), Guadeloupe, France, 2–6 January 2007; pp. 1–5.

| Ref. | Used Sensors with Parameter | Vital Signs | ROIs | Used Technique | Distance (m) | No. of Participants at a Time |
|---|---|---|---|---|---|---|
| Verkruysse et al. [68] | CCD 15 or 30 fps | HR and RR | Face and forehead | Single/multichannel analysis | 1–2 | 1 |
| Poh et al. [70] | Webcam resolution 640 × 480, 15 fps | HR, RR and HRV | Face | ICA | 0.5 | 1 |
| Pursche et al. [71] | Webcam resolution 640 × 480, 30 fps | HR | Forehead, nose and mouth | ICA | 0.5 | 1 |
| Lewandowska et al. [72] | Webcam resolution 640 × 480, 20 fps | HR | Face and forehead | PCA | 1 | 1 |

| Ref. | Used Sensors with Parameter | Vital Signs | ROIs | Used Technique | Distance (m) | No. of Participants at a Time | Results |
|---|---|---|---|---|---|---|---|
| Poh et al. [69] | Webcam resolution 640 × 480, 25 fps | HR | Face | ICA | 0.5 | 3 | PCC = 0.95, RMSE = 4.63 bpm |
| Yu et al. [74] | CMOS 720 × 576 face detector, 25 fps | HR and RR | Palm and face | SCICA | 0.35–0.4 | 1 | PCC = 0.9 |
| Feng et al. [75] | Webcam resolution 640 × 480, 30 fps | HR | Forehead | ICA | 0.75 | 1 | PCC = 0.99 |
| Qi et al. [76] | Digital camera resolution 720 × 576, 50 fps | HR | Face | JBSS | - | 1 | PCC = 0.74 |
| Bousefsaf et al. [77] | Webcam resolution 320 × 240, 30 fps | HR | Face | CWT | 1 | 1 | PCC = 1 |
| De Haan et al. [78] | CCD resolution 1024 × 752, 20 fps | HR | Face | CHROM | - | 1 | PCC = 1, RMSE = 0.5 |
| De Haan et al. [79] | CCD resolution 1024 × 752, 20 fps | HR | Face | PBV | - | 1 | PCC = 0.99, RMSE = 0.64 |
| Feng et al. [80] | Webcam resolution 640 × 480, 30 fps | HR | Cheeks | GRD | 0.75 | 1 | PCC = 0.97 |
| Wang et al. [81] | CCD resolution 768 × 576, 20 fps | HR | Face and forehead | 2SR | 1.5 | 1 | PCC = 0.94 |
| Wang et al. [67] | CCD resolution 768 × 576, 20 fps | HR | Face | POS | 1.5 | 1 | SNR (dB) = 5.16 |
| Wang et al. [82] | CCD resolution 768 × 576, 20 fps | HR | Face | Sub-band decomposition | 2 | 1 | SNR (dB) = 4.77 |
| Wu et al. [83] | Webcam | HR | Face | CWT | 0.5–1.5 | 1 | SNR (dB) = −3.01 |
| Wu et al. [84] | Webcam | HR | Cheeks | MRSPT | 0.6–1.6 | 1 | RMSE = 6.44 bpm |
| Xie et al. [85] | Video camera with 30 fps | HR | Face | SSA and SVD | - | 1 | PCC = 0.99, RMSE = 3.99 |
| McDuff et al. [86] | Colour camera resolution 658 × 492, 120 fps | HR and HRV | Face | Linear transformation | - | 1 | MAE = 4.17 bpm, SNR (dB) = 3.13 |
| Fallet et al. [87] | Video camera resolution 1.3 megapixels, 20 fps | HR | Forehead and face | SQI | - | 1 | MAE = 4.72 bpm |

| Ref. | Used Sensors with Parameter | Vital Signs | ROIs | Used Technique | Distance (m) | No. of Participants at a Time | Results |
|---|---|---|---|---|---|---|---|
| Chen et al. [89] | Digital camera 30 fps | HR | Brow area | EEMD | 0.07–0.09 | 1 | PCC = 0.91 |
| Lin et al. [90] | Digital camera 30 fps | HR | Brow area | EEMD + MLR | 0.10–0.25 | 1 | PCC = 0.96 |
| Lee et al. [91] | Digital camera resolution 1280 × 720 | HR | Cheek | MOCF | 1 | 1 | RMSE = 1.8 bpm |
| Tarassenko et al. [92] | Digital camera resolution 5 megapixels, 12 fps | HR, RR, SpO₂ | Forehead and cheek | AR modelling and pole cancellation | 1 | 1 | MAE = 3 bpm |
| Cheng et al. [93] | Webcam resolution 640 × 480, 30 fps | HR | Face | JBSS + EEMD | 0.50 | 1 | PCC = 0.91 |
| Xu et al. [94] | Webcam resolution 640 × 480, 30 fps | HR | Face | PLS + MEMD | 0.50 | 1 | PCC = 0.81 |

| Ref. | Used Sensors with Parameter | Vital Signs | ROIs | Used Technique | Distance (m) | No. of Participants at a Time | Results |
|---|---|---|---|---|---|---|---|
| Li et al. [95] | iSight camera of an iPad resolution 780 × 580, 61 fps | HR | Face | NLMS adaptive filtering | 0.35–0.50 | 1 | RMSE = 1.8 bpm |
| Kumar et al. [96] | Monochrome camera 30 fps | HR and HRV | Face | Weighted average | 0.5 | 1 | SNR (dB) = 6.5 |
| Al-Naji et al. [97] | Hovering UAV resolution 1920 × 1080, 60 fps | HR and RR | Face | CEEMDAN + CCA | 3 | 1 | PCC = 0.99, RMSE = 0.65 bpm |

| Ref. | Used Sensors with Parameter | Vital Signs | ROIs | Used Technique | Distance (m) | No. of Participants at a Time | Results |
|---|---|---|---|---|---|---|---|
| Kwon et al. [101] | Smartphone resolution 640 × 480, 30 fps | HR | Face | ICA | 0.3 | 1 | MAE = 1.47 bpm |
| Al-Naji et al. [102] | UAV resolution 3840 × 2160, 25 fps | HR and RR | Face | CEEMD + ICA | 3 | 1 | PCC = 0.99, RMSE = 0.7 bpm |
| McDuff et al. [103] | Digital camera with five bands resolution 960 × 720, 30 fps | HR, RR, and HRV | Face | ICA | 3 | 1 | PCC = 0.92 |
| Sun et al. [104] | CMOS resolution 1280 × 1024, Webcam | HR | Face | TFR | 0.2–0.35 | 1 | PCC = 0.85 |
| Bernacchia et al. [105] | Microsoft Kinect 30 fps | HR and RR | Neck, thorax and abdominal area | ICA | - | 1 | PCC = 0.91 |
| Smilkstein et al. [106] | Microsoft Kinect | HR | Face | EVM | - | 1 | - |
| Gambi et al. [107] | Microsoft Kinect resolution 1920 × 1080, 30 fps | HR | Forehead, cheeks, neck | EVM | - | 1 | RMSE = 2.2 bpm |
| Scalise et al. [108] | Webcam resolution 640 × 480, 30 fps | HR | Forehead | ICA | 0.2 | 1 | PCC = 0.94 |
| Arts et al. [10] | Digital camera 300 × 300 face detector, 15 fps | HR | Face and cheek | JFTD | 1 | 1 | - |
| Cobos-Torres et al. [109] | Digital camera resolution 1920 × 1080 or 1280 × 720 pixels, 24 or 30 fps | HR | Abdominal area | Stack FIFO | 0.5 | 1 | PCC = 0.94 |
| Gibson et al. [110] | Digital camera resolution 1920 × 1080, 30 fps | HR and RR | Face and chest | EVM | 1–2 | 1 | Mean bias = 4.5 bpm |
| Bal [111] | Webcam resolution 640 × 480, 30 fps | HR and SpO₂ | Face | DTCWT | 0.5 | 1 | PCC = 0.92, RMSE = 2.05 bpm |
| Tarassenko et al. [92] | Digital camera resolution 5 megapixels, 12 fps | HR, RR, SpO₂ | Forehead and cheek | AR modelling and pole cancellation | 1 | 1 | MAE = 3 bpm |
| Fouad et al. [112] | Webcam resolution 640 × 480, 30 fps | HR | Face and cheeks | ICA | 1–2 | 1 | RMSE = 2.7 bpm |
| Datcu et al. [113] | Video camera resolution 252 × 350, 15 fps | HR | 10 different parts of face | ICA | - | 1 | RMSE = 1.47 bpm |
| Feng et al. [114] | Webcam resolution 640 × 480, 30 fps | HR | Face and palm | Block division | 0.75 | 1 | PCC = 0.96 |
| Zhao et al. [115] | Webcam resolution 640 × 480, 30 fps | HR | Face, arm, hand | POSCC | 1.5 | 2 | SNR (dB) = 4.5 |
| Al-Naji et al. [116] | Digital camera resolution 1920 × 1080 or 1080 × 720, 60 or 30 fps | HR and RR | Face, palm, wrist, arm, neck, leg, forehead, head and chest | EEMD + ICA | 2 | 1 | PCC = 0.96, RMSE = 3.52 |
| Al-Naji et al. [117] | Digital camera resolution 1080 × 720, 60 fps, UAV 10 megapixel | HR and RR | Face and forehead | CEEMDAN + CCA | 60 | 6 | PCC = 0.99, RMSE = 0.89 bpm |
| Hsu et al. [118] | Video camera resolution 1920 × 1080, 29.97 fps | HR | Face | SVR | - | 1 | PCC = 0.88, RMSE = 5.48 bpm |
| Chen et al. [119] | Video camera resolution 658 × 492, 120 fps | HR and BR | Face | Convolutional attention network | - | 1 | MAE = 1.50 bpm |
| Yu et al. [120] | Video camera resolution 1920 × 2080, 60 fps | HR and HRV | Face | Spatio-temporal network | - | 1 | PCC = 0.99, RMSE = 1.8 bpm |
| Wiede et al. [121] | Industrial camera | HR | Forehead | ICA | 2 | 1 | RMSE = 4.83 bpm |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Khanam, F.-T.-Z.; Al-Naji, A.; Chahl, J. Remote Monitoring of Vital Signs in Diverse Non-Clinical and Clinical Scenarios Using Computer Vision Systems: A Review. Appl. Sci. 2019, 9, 4474. https://doi.org/10.3390/app9204474