An Overall Deformation Monitoring Method of Structure Based on Tracking Deformation Contour

Abstract

Featured Application

1. Introduction

2. Orthogonal Projection and Global Deformation Acquisition Method of Structures

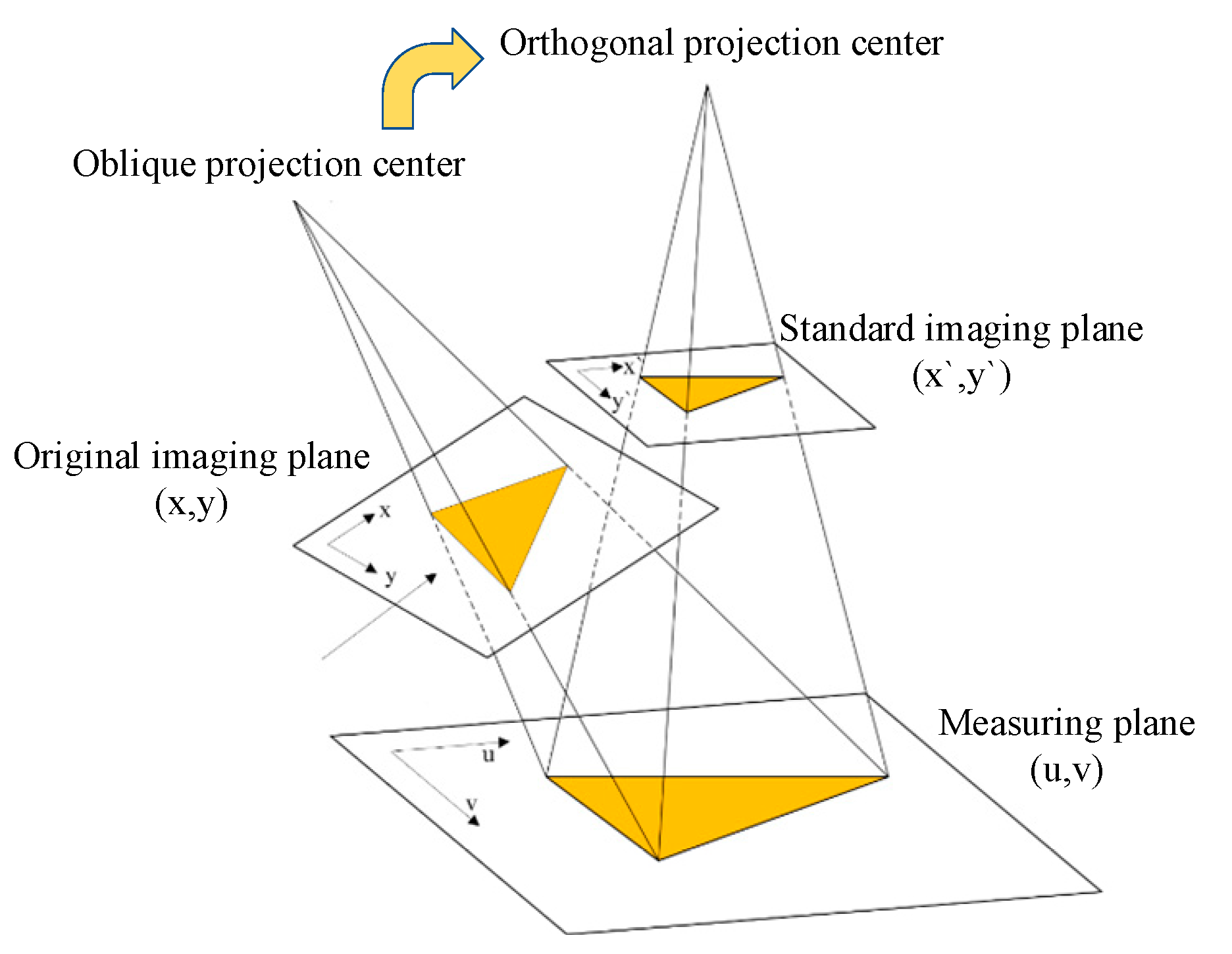

2.1. Perspective Transformation of Digital Image

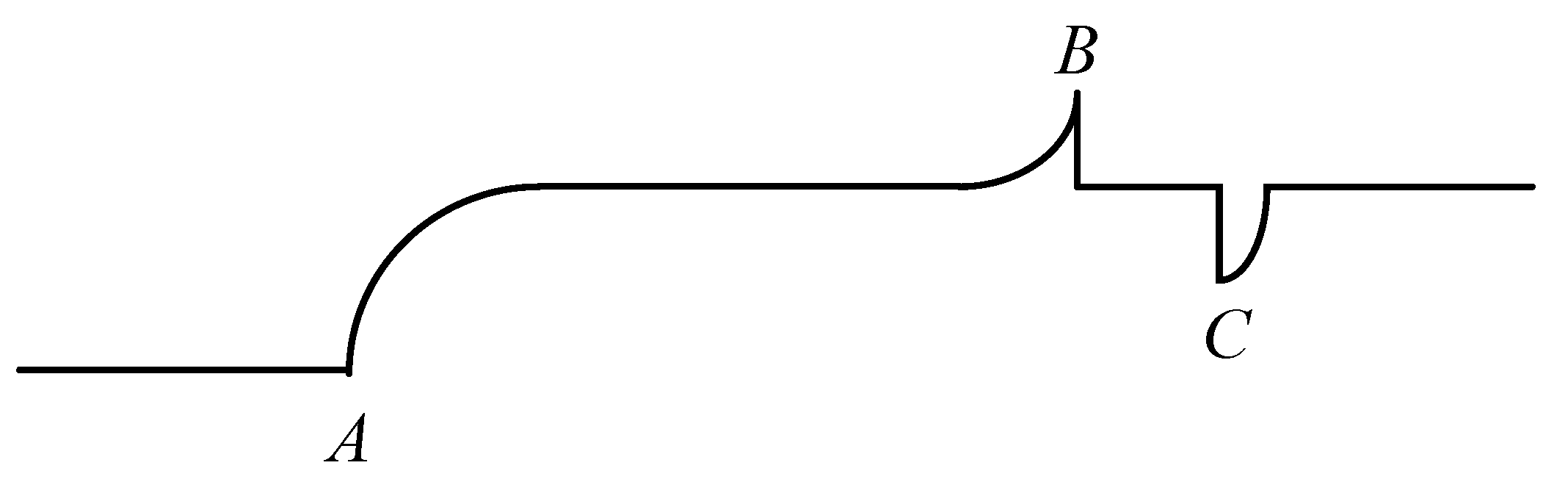

2.2. Edge Detection

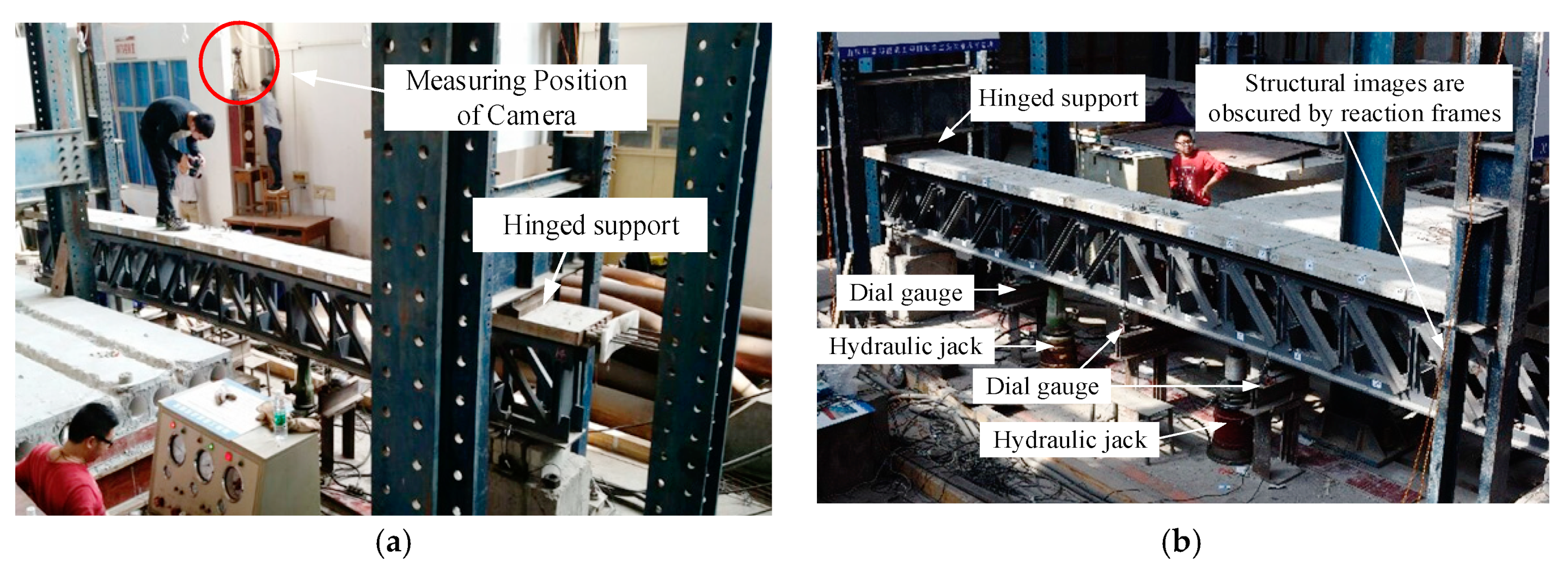

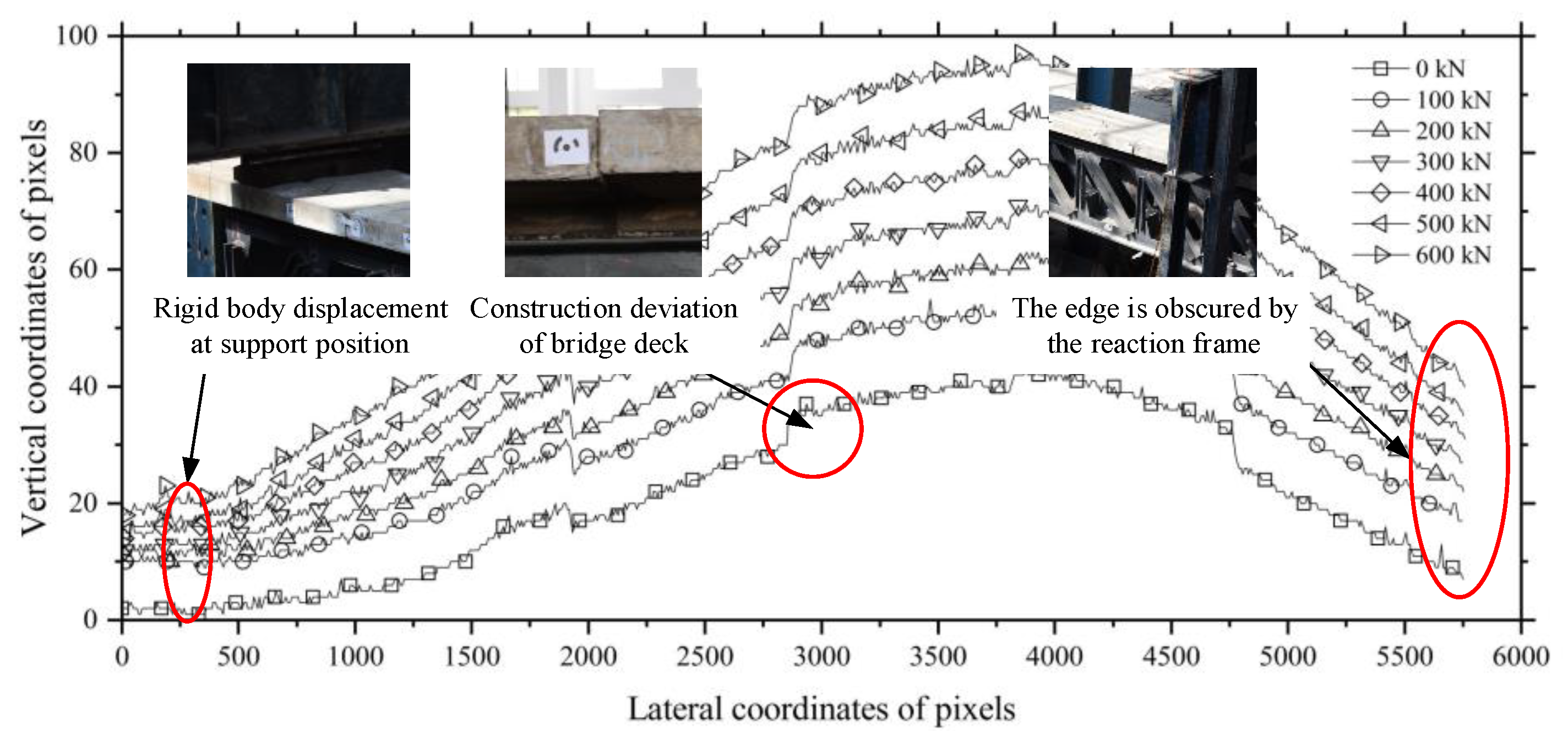

3. Static Test of the Beam

4. Result and Discussion

4.1. Picture Perspective Transform of Test Beam

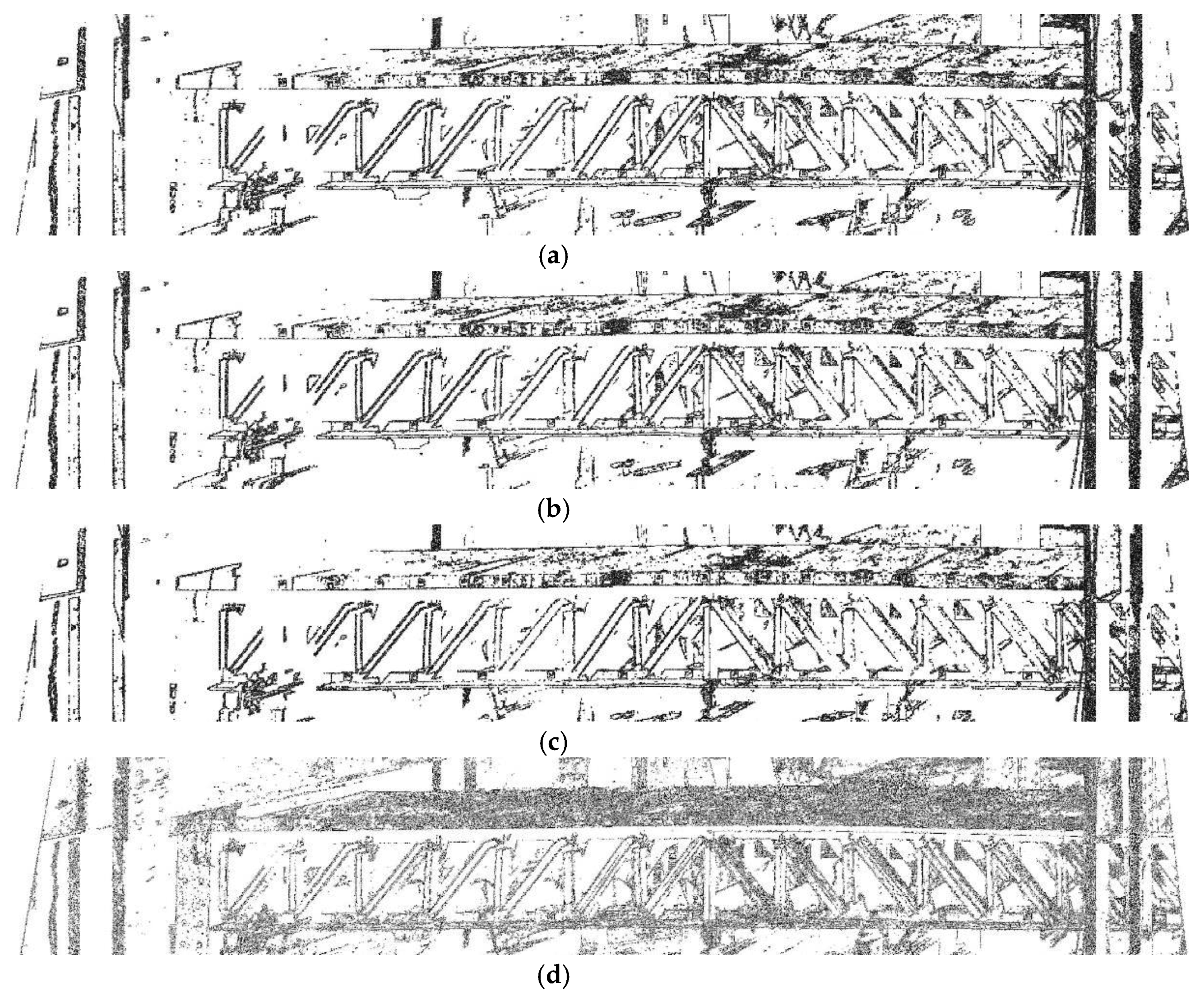

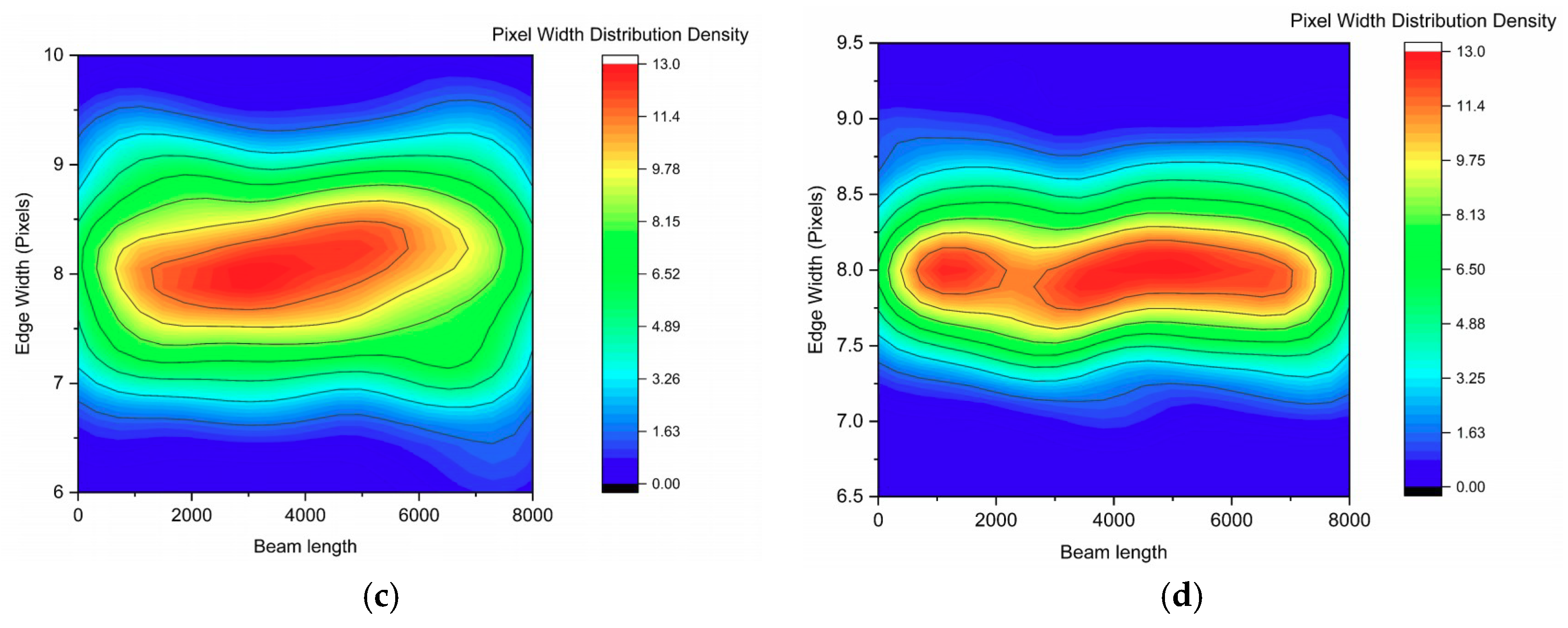

4.2. Edge Contour Extraction of Structures

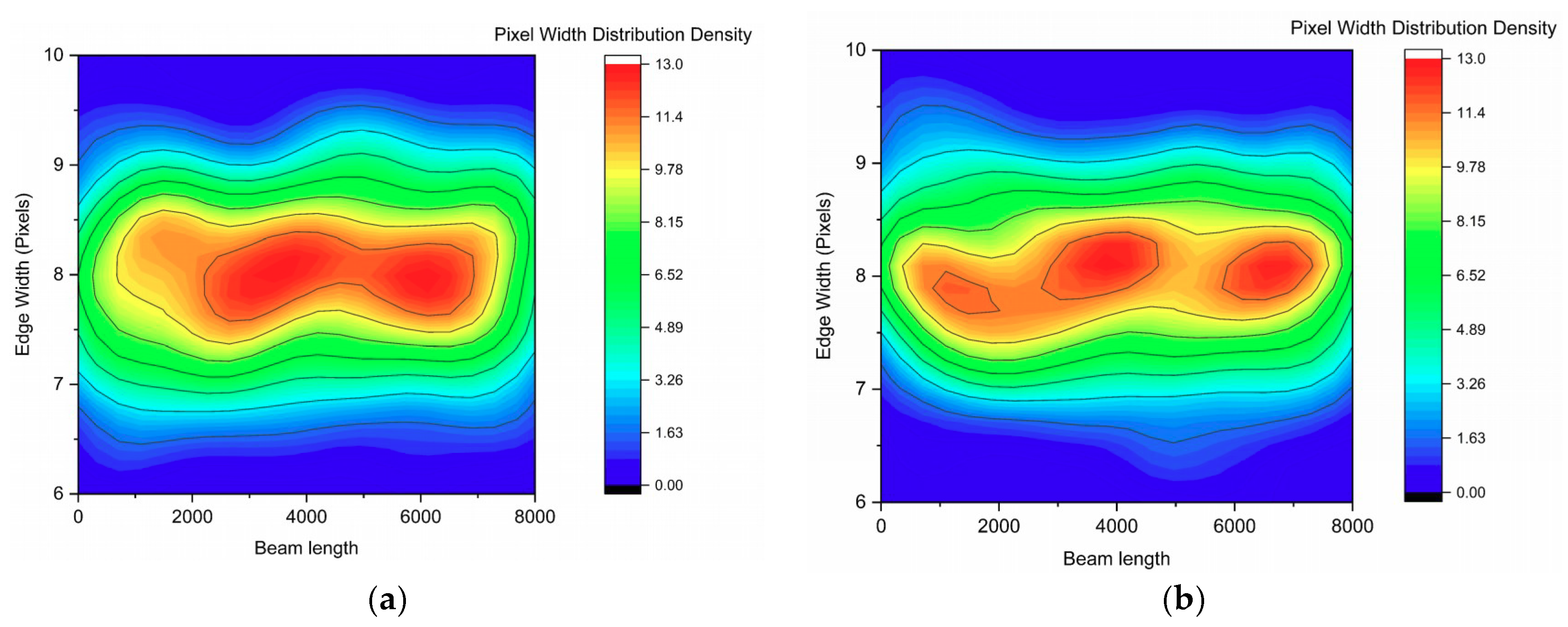

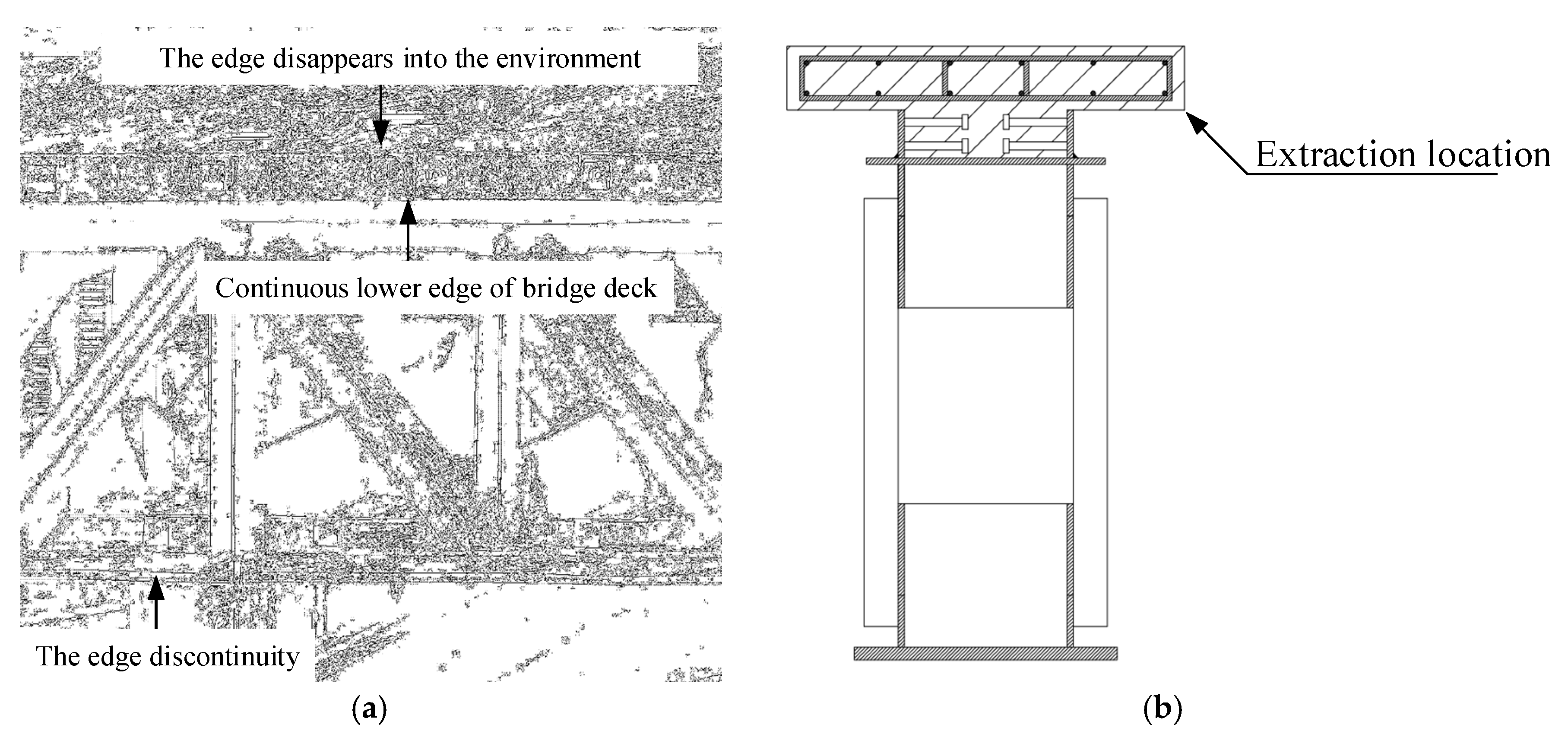

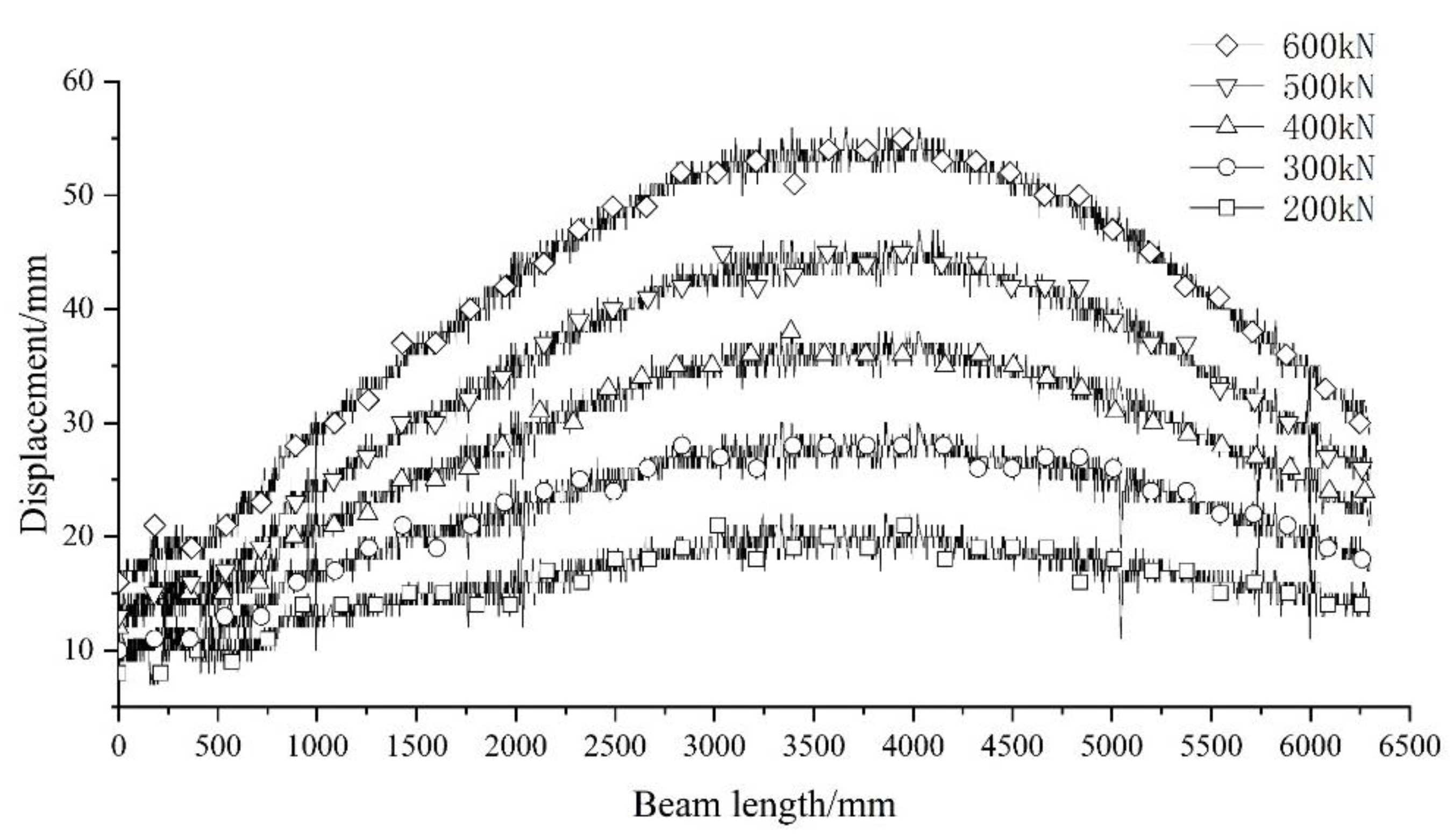

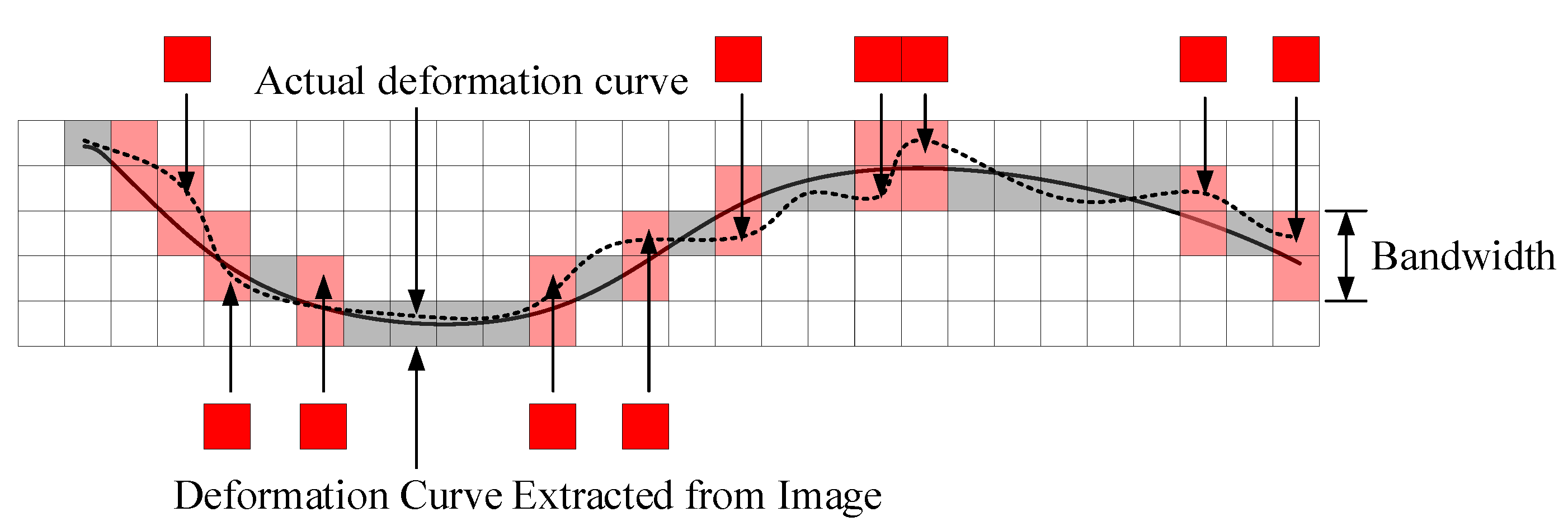

4.3. Deformation Curve Obtained by Overlapping Difference of Contour Line

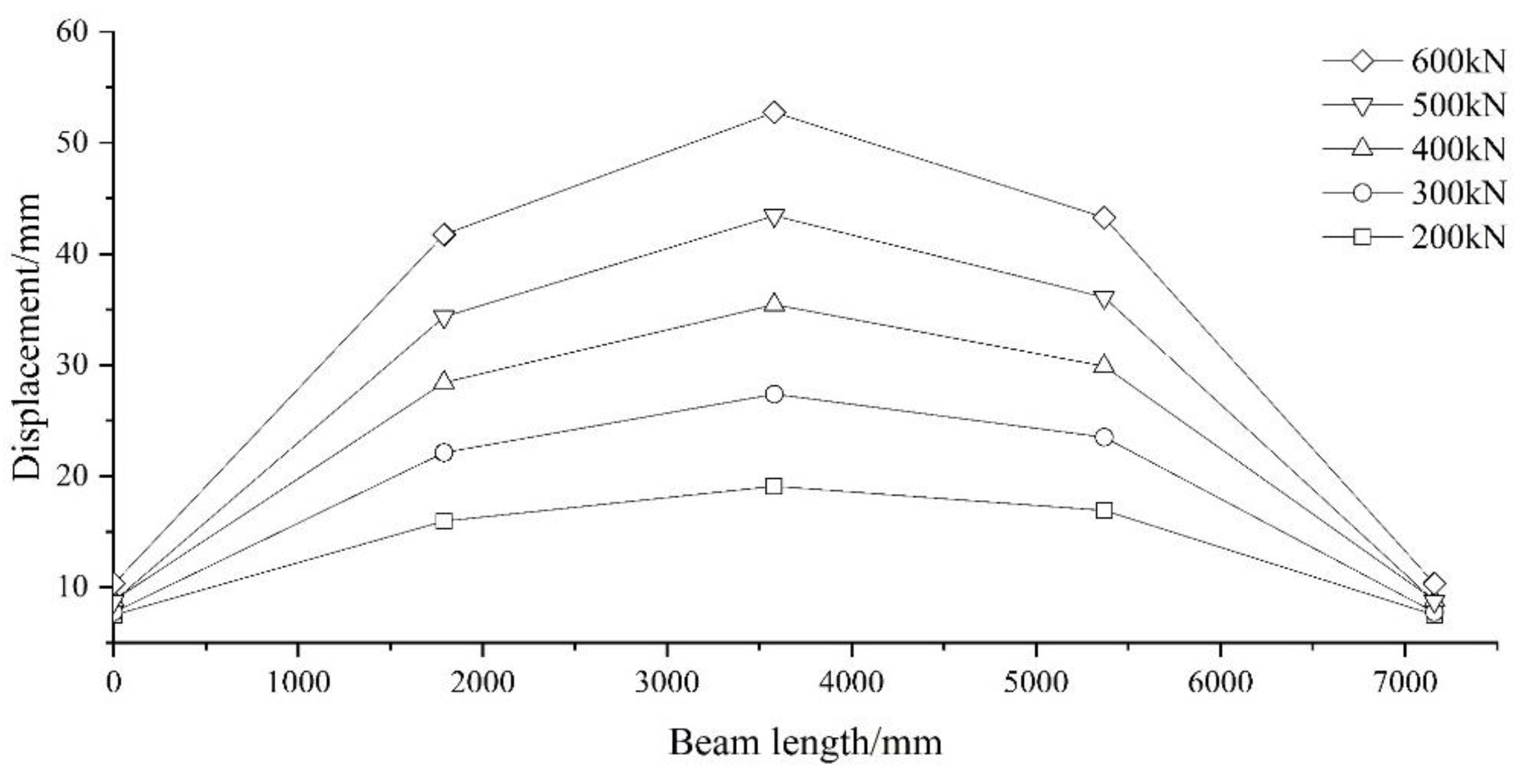

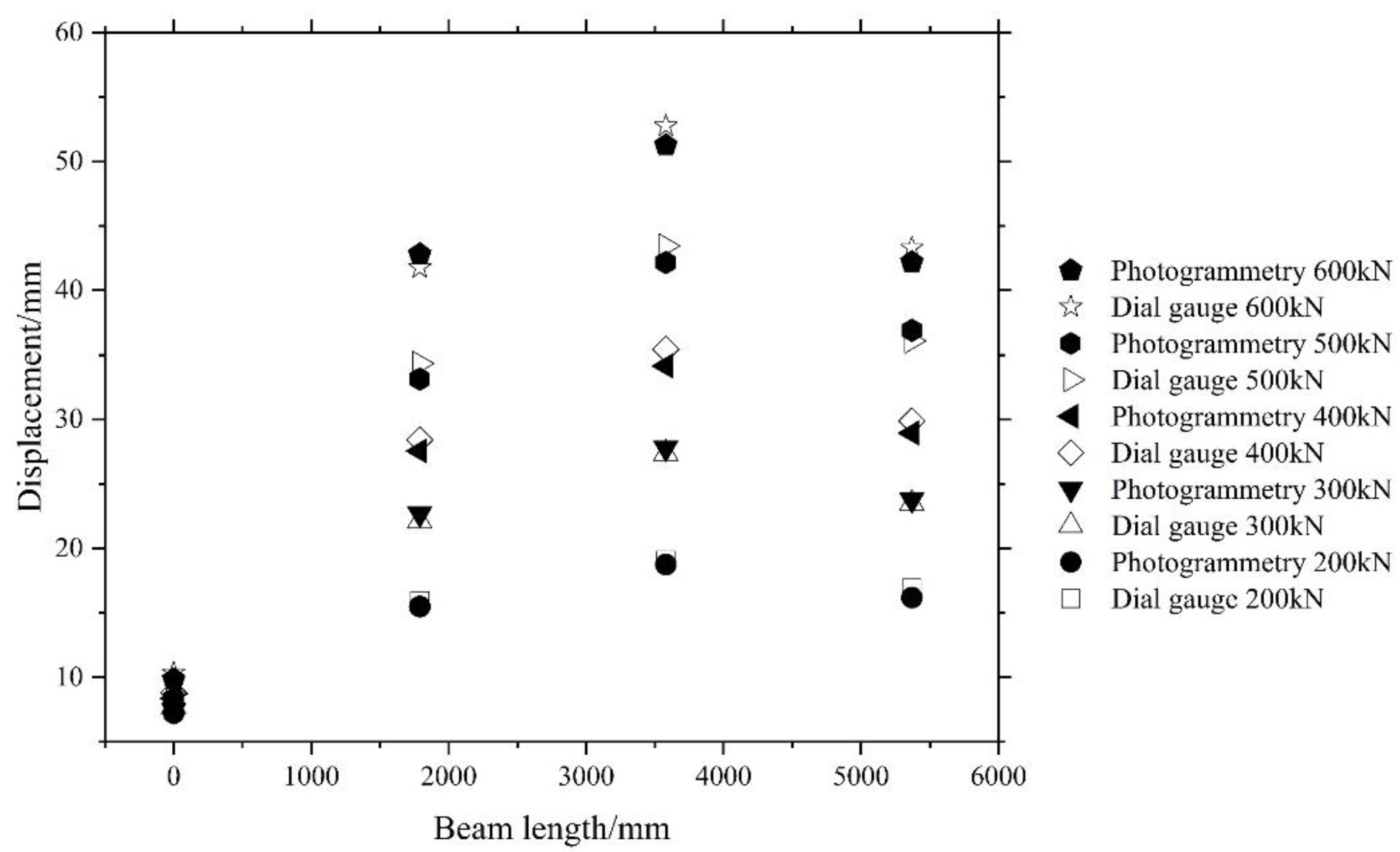

4.4. Error Analysis of Photogrammetry

5. Conclusions

- (1) Camera siting constraints usually make it difficult to capture orthogonal projection images of large engineering structures such as bridges. To address this, the perspective transformation method is applied to obtain the orthogonal projection of the structure from the originally inclined images. The experimental results show that the orthogonal projection image obtained by the proposed method correctly reflects the overall deformation of the structure.

- (2) To characterize the key features of structural deformation, an edge detection operator is used to extract the edge contour of the structure from the processed orthogonal images. The overall deflection curve of the structure is then obtained by locating and calibrating the edge pixels.

- (3) The edge line of the structure obtained from the pixel positions shows a notable zigzag effect. Further investigation suggests that this effect is mainly caused by the illumination environment. Because the image edge of the structure has a certain bandwidth, the final position of the edge pixels within that bandwidth is affected by the illumination, which results in fluctuation around the actual deflection curve. For this reason, a fitting method is used to minimize the fluctuation and obtain the linear approximation of the actual deflection curve. Compared with the data measured by the dial gauges, the error of the proposed method is less than 5%.

- (4) Because the proposed method is based on digital images, its accuracy depends on the quality of the available images, even when advanced image processing methods are used. A major limitation is that the overall deformation cannot be obtained directly when parts of the measured structure are obscured. In that situation, a postprocessing method such as fitting can be applied to approximate the data for the blocked parts.
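The rectification in conclusion (1) and the fitting in conclusion (3) can be illustrated with a minimal sketch. It is not the authors' implementation: the corner coordinates, the synthetic jagged contour, the quadratic fitting model and all function names are assumptions made for illustration. Only the 1.12 mm/px scale is taken from the calibration table of the paper. The sketch uses plain Python so that the linear algebra stays visible.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def homography_from_points(src, dst):
    """Row-major 3x3 perspective transform (h33 fixed to 1) mapping 4 src points to 4 dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]


def warp_point(h, x, y):
    """Apply the perspective transform to one image point."""
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


def polyfit(xs, ys, degree):
    """Least-squares polynomial coefficients (lowest order first) via the normal equations."""
    size = degree + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(size)] for i in range(size)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(size)]
    return solve_linear(a, b)


def polyval(coeffs, x):
    return sum(c * x ** k for k, c in enumerate(coeffs))


# Hypothetical corners of a planar region seen obliquely (src) and their
# positions in the rectified, orthogonal view (dst), both in pixels.
src = [(0, 0), (100, 10), (95, 60), (5, 50)]
dst = [(0, 0), (100, 0), (100, 50), (0, 50)]
H = homography_from_points(src, dst)

# Synthetic contour shift in pixels: a smooth curve plus a +/-1 px zigzag.
MM_PER_PX = 1.12  # average calibration value reported in the paper
cols = list(range(11))
true_px = [0.4 * c * (10 - c) for c in cols]
jagged_px = [t + (1 if c % 2 == 0 else -1) for c, t in zip(cols, true_px)]
deflection_mm = [p * MM_PER_PX for p in jagged_px]

# Assumed quadratic model; the paper does not state the fitting function here.
coeffs = polyfit(cols, deflection_mm, 2)
```

In this synthetic case the raw midspan value is off by one pixel (1.12 mm), while the fitted curve at the same column lies much closer to the smooth input. For real images a library routine for the transform and the fit would normally replace these hand-written helpers.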

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Number of Pixels | Sensor Size | Data Interface | Aspect Ratio | Photo-Sensor |
|---|---|---|---|---|
| 50.6 million | 36 × 24 mm | USB 3.0 | 3:2 | CMOS |

| Image Size/px | Pixel Size/µm | Lens Type | Focal Length | Lens Relative Aperture |
|---|---|---|---|---|
| 8688 × 5792 | 4.14 | EF 24–70 mm f/2.8 L | 50 mm | F2.8–F22 |

| Category | Parameter | Value |
|---|---|---|
| Outline Size | Principal Point Coordinate X0 | −0.0769 mm |
| | Principal Point Coordinate Y0 | 0.0045 mm |
| | Camera Principal Distance f | 50.9339 mm |
| Radial Distortion Coefficient | K1 | 1.9644 × 10⁻⁸ |
| | K2 | 5.6287 × 10⁻⁶ |
| Eccentric Distortion Coefficient | P1 | 1.4683 × 10⁻⁵ |
| | P2 | −3.8601 × 10⁻⁶ |
| Pixel Size | | 0.004096 mm |
| Image Size | | 5792 × 8668 |

| Vertical Member No. | Number of Pixels | Real Length of Member/mm | Calibration Value/(mm/px) | Average Value |
|---|---|---|---|---|
| 1 | 344 | 387 | 1.12 | 1.12 mm/px |
| 2 | 342 | 379 | 1.10 | |
| 3 | 340 | 380 | 1.11 | |
| 4 | 345 | 377 | 1.09 | |
| 5 | 339 | 376 | 1.10 | |
| 6 | 292 | 332 | 1.12 | |
| 7 | 346 | 375 | 1.08 | |
| 8 | 421 | 473 | 1.12 | |
| 9 | 330 | 376 | 1.14 | |
| 10 | 295 | 331 | 1.22 | |
| 11 | 335 | 377 | 1.12 | |
| 12 | 351 | 376 | 1.07 | |
| 13 | 342 | 381 | 1.11 | |
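The calibration value in the table above is the real member length divided by the number of pixels the member spans in the image. A minimal sketch of that arithmetic follows, using the pixel counts and lengths from the table. The function and variable names are illustrative assumptions. Recomputed ratios can differ from the tabulated ones in the last digit because of rounding, so the sketch states no expected value for the average.

```python
# (number of pixels, real length in mm) for vertical members 1 to 13,
# copied from the calibration table above.
members = [
    (344, 387), (342, 379), (340, 380), (345, 377), (339, 376),
    (292, 332), (346, 375), (421, 473), (330, 376), (295, 331),
    (335, 377), (351, 376), (342, 381),
]


def scale_factor(pixels, length_mm):
    """Physical length represented by one pixel, in mm/px."""
    if pixels <= 0:
        raise ValueError("pixel count must be positive")
    return length_mm / pixels


factors = [scale_factor(p, mm) for p, mm in members]
mean_factor = sum(factors) / len(factors)


def pixels_to_mm(pixel_shift, factor=mean_factor):
    """Convert an edge displacement measured in pixels to millimetres."""
    return pixel_shift * factor
```

Averaging over several members of known length reduces the influence of a single badly located member end on the scale. With a scale of roughly 1.1 mm/px, a one-pixel edge location error corresponds to roughly 1 mm of deflection.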

| Loading Condition/kN | Dial Gauge Position | Dial Gauge Measured Value R1/mm | Photogrammetric Value R2/mm | S = \|R2 − R1\|/mm | Error S/R1/% | RMSE/mm |
|---|---|---|---|---|---|---|
| 200 | Left support | 7.50 | 7.25 | 0.25 | 3.33 | 0.82 |
| | L/4 | 15.95 | 15.50 | 0.45 | 2.82 | |
| | 2L/4 | 19.09 | 18.75 | 0.34 | 1.78 | |
| | 3L/4 | 16.90 | 16.19 | 0.71 | 4.20 | |
| 300 | Left support | 7.74 | 7.45 | 0.29 | 3.75 | |
| | L/4 | 22.13 | 22.69 | 0.56 | 2.53 | |
| | 2L/4 | 27.37 | 27.80 | 0.43 | 1.57 | |
| | 3L/4 | 23.49 | 23.78 | 0.29 | 1.23 | |
| 400 | Left support | 8.82 | 8.47 | 0.35 | 3.97 | |
| | L/4 | 28.42 | 27.56 | 0.86 | 3.03 | |
| | 2L/4 | 35.45 | 34.15 | 1.30 | 3.67 | |
| | 3L/4 | 29.89 | 28.94 | 0.95 | 3.18 | |
| 500 | Left support | 8.72 | 8.36 | 0.36 | 4.13 | |
| | L/4 | 34.34 | 33.14 | 1.20 | 3.49 | |
| | 2L/4 | 43.46 | 42.18 | 1.28 | 2.95 | |
| | 3L/4 | 36.08 | 36.91 | 0.83 | 2.30 | |
| 600 | Left support | 10.33 | 9.84 | 0.49 | 4.74 | |
| | L/4 | 41.76 | 42.81 | 1.05 | 2.51 | |
| | 2L/4 | 52.75 | 51.26 | 1.49 | 2.82 | |
| | 3L/4 | 43.28 | 42.19 | 1.09 | 2.52 | |
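The error columns of the table above can be recomputed from the R1 and R2 readings. The sketch below does this with the tabulated values. The function names are illustrative, and it assumes that the RMSE is taken over the differences R2 − R1 of all 20 rows. Under that assumption the result is about 0.826 mm, which agrees with the tabulated 0.82.

```python
import math

# (R1 dial gauge, R2 photogrammetric) in mm, copied row by row from the table above.
readings = [
    (7.50, 7.25), (15.95, 15.50), (19.09, 18.75), (16.90, 16.19),   # 200 kN
    (7.74, 7.45), (22.13, 22.69), (27.37, 27.80), (23.49, 23.78),   # 300 kN
    (8.82, 8.47), (28.42, 27.56), (35.45, 34.15), (29.89, 28.94),   # 400 kN
    (8.72, 8.36), (34.34, 33.14), (43.46, 42.18), (36.08, 36.91),   # 500 kN
    (10.33, 9.84), (41.76, 42.81), (52.75, 51.26), (43.28, 42.19),  # 600 kN
]


def relative_error_percent(r1, r2):
    """Error S/R1 in percent, with S = |R2 - R1|."""
    return 100.0 * abs(r2 - r1) / r1


def rmse(pairs):
    """Root mean square of the differences R2 - R1 over all pairs, in mm."""
    return math.sqrt(sum((r2 - r1) ** 2 for r1, r2 in pairs) / len(pairs))


errors = [relative_error_percent(r1, r2) for r1, r2 in readings]
worst_error = max(errors)
overall_rmse = rmse(readings)
```

The largest relative error occurs at the left support under 600 kN, where the measured deflection is small, so a difference of about half a millimetre weighs more heavily than it does at midspan.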

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chu, X.; Zhou, Z.; Deng, G.; Duan, X.; Jiang, X. An Overall Deformation Monitoring Method of Structure Based on Tracking Deformation Contour. Appl. Sci. 2019, 9, 4532. https://doi.org/10.3390/app9214532
