Bag-of-Visual-Words for Cattle Identification from Muzzle Print Images

Abstract

1. Introduction

2. Related Work

3. BoVW-Based Cattle Identification

3.1. Feature Extraction

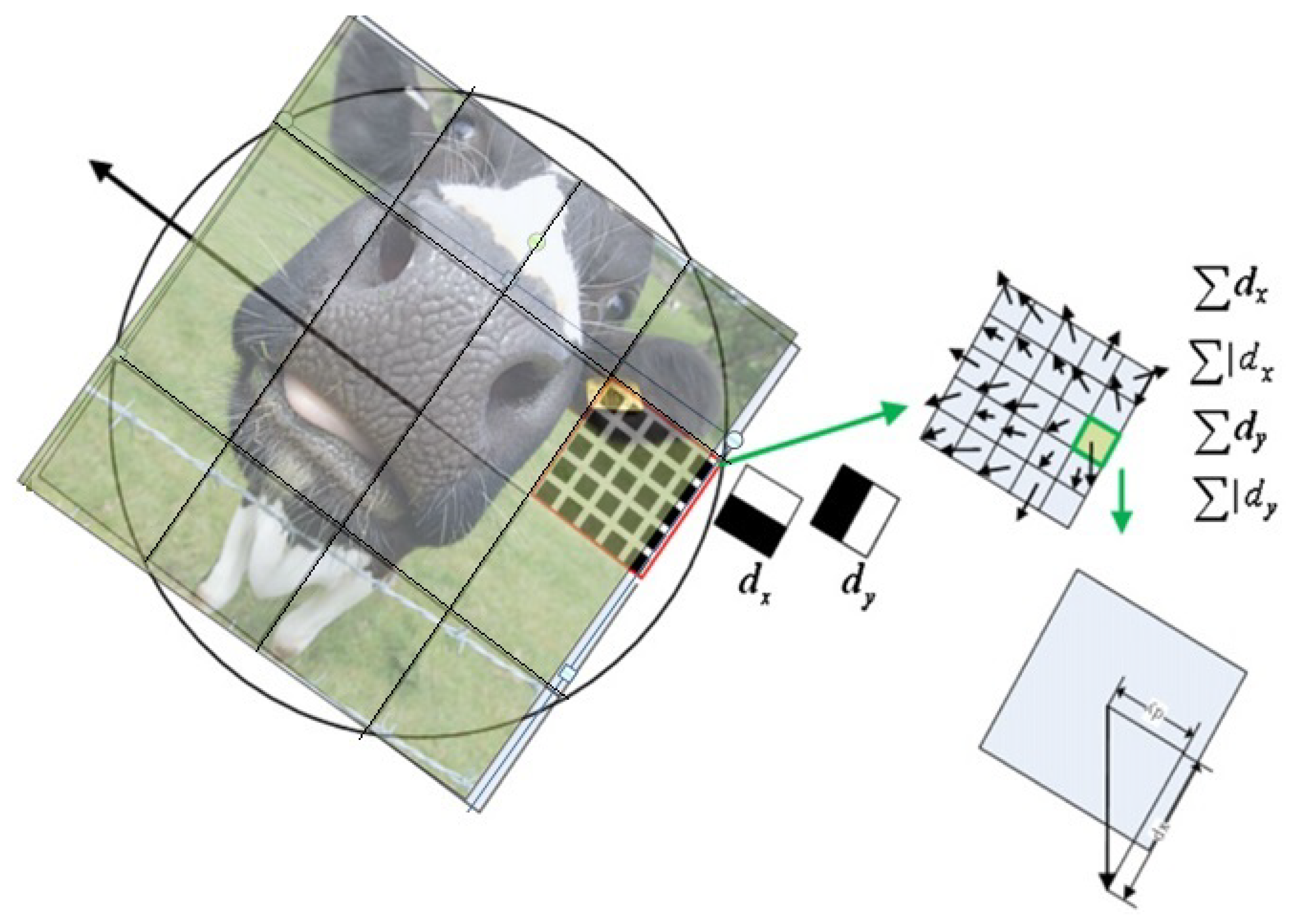

3.1.1. Speeded-Up Robust Features (SURF)
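SURF [24] approximates the Hessian with box filters evaluated on an integral image. Any rectangular sum then costs four lookups regardless of the filter size, which is what keeps detection fast across scales. A minimal sketch of that building block in Python with NumPy follows. The function names `integral_image` and `box_sum` are hypothetical, and the snippet covers only this component, not the full SURF detector or descriptor used in this work.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Zero-padded summed-area table: ii[r, c] equals img[:r, :c].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = img.astype(np.float64).cumsum(axis=0).cumsum(axis=1)
    return ii


def box_sum(ii: np.ndarray, r0: int, c0: int, r1: int, c1: int) -> float:
    """Sum of img[r0:r1, c0:c1] from four lookups, independent of the box size."""
    return float(ii[r1, c1] - ii[r0, c1] - ii[r1, c0] + ii[r0, c0])
```

For example, with `img = np.arange(16).reshape(4, 4)`, `box_sum(integral_image(img), 1, 1, 3, 3)` returns `30.0`, the sum of `img[1:3, 1:3]`. SURF's approximate second-derivative filters are weighted combinations of such box sums.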

3.1.2. Maximally Stable Extremal Regions (MSER)
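MSER [42] thresholds the image at every intensity level and keeps connected regions whose area changes least over a margin Δ, that is, local minima of `q(t) = |Q(t+Δ) \ Q(t-Δ)| / |Q(t)|`, where `Q(t)` is the region at threshold `t`. The Python sketch below evaluates this criterion for the dark region grown from a single seed pixel. It is a simplified illustration under that assumption; the names `component_area` and `stable_region_areas` and the default `delta=5` are hypothetical. Practical implementations, such as the linear-time algorithm of Nistér and Stewénius [25], process all regions at once, also handle bright regions (via the inverted image), and filter candidates by minimum and maximum area.

```python
from collections import deque

import numpy as np


def component_area(img: np.ndarray, seed: tuple[int, int], t: int) -> int:
    """Area of the 4-connected component of {img <= t} containing `seed` (0 if absent)."""
    h, w = img.shape
    r0, c0 = seed
    if img[r0, c0] > t:
        return 0
    seen = np.zeros_like(img, dtype=bool)
    seen[r0, c0] = True
    queue = deque([seed])
    area = 0
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        area += 1
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not seen[nr, nc] and img[nr, nc] <= t:
                seen[nr, nc] = True
                queue.append((nr, nc))
    return area


def stable_region_areas(img: np.ndarray, seed: tuple[int, int], delta: int = 5) -> list[int]:
    """Areas of the seed's dark regions at local minima of the MSER stability score q(t)."""
    areas = [component_area(img, seed, t) for t in range(256)]  # assumes 8-bit intensities
    # Regions are nested as t grows, so |Q(t+delta) \ Q(t-delta)| = A(t+delta) - A(t-delta).
    q = {
        t: (areas[t + delta] - areas[t - delta]) / areas[t]
        for t in range(delta, 256 - delta)
        if areas[t - delta] > 0  # the region must already exist at t - delta
    }
    stable = set()
    for t, qt in q.items():
        # Non-strict local minimum, so flat plateaus of q (perfectly stable regions) count.
        if t - 1 in q and t + 1 in q and qt <= q[t - 1] and qt <= q[t + 1]:
            stable.add(areas[t])
    return sorted(stable)
```

On a 10 × 10 image with background 200, a 4 × 4 square of value 50, and a 2 × 2 core of value 10, seeding inside the core yields the nested stable areas `[4, 16, 100]`. The last one is the whole image, which a maximum-area filter would discard.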

3.2. Bag-of-Visual-Words Representation
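A BoVW representation is typically built in two steps. First, local descriptors pooled from the training images are clustered into k visual words. Second, each image is described by the normalized histogram of its descriptors' nearest words. The sketch below assumes plain k-means (Lloyd's algorithm) with squared Euclidean distance and L1 normalization. The vocabulary size, iteration count, random initialization, and function names are illustrative assumptions, not the settings used in the experiments.

```python
import numpy as np


def assign_words(descriptors: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Index of the nearest visual word (squared Euclidean distance) for each descriptor."""
    d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def build_vocabulary(descriptors: np.ndarray, k: int, iters: int = 20, seed: int = 0) -> np.ndarray:
    """Lloyd's k-means over descriptors pooled from all training images."""
    rng = np.random.default_rng(seed)
    init = rng.choice(len(descriptors), size=k, replace=False)
    centers = descriptors[init].astype(np.float64)
    for _ in range(iters):
        labels = assign_words(descriptors, centers)
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):  # keep the previous center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


def bovw_histogram(descriptors: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """L1-normalized histogram of visual-word occurrences for one image."""
    counts = np.bincount(assign_words(descriptors, centers), minlength=len(centers))
    return counts / max(counts.sum(), 1)
```

The pairwise-distance step materializes an n × k × d array, which is acceptable for a sketch. For thousands of 64-dimensional SURF descriptors per image, a chunked or library k-means implementation would be the more practical choice.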

3.3. Classification Stage
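The classifier is not detailed here. As an assumed stand-in, the sketch below uses a 1-nearest-neighbor rule that compares BoVW histograms by histogram intersection, together with the accuracy percentage of the kind reported in the result tables. A multiclass SVM trained on the histograms would be a common alternative. All function names and labels are hypothetical.

```python
import numpy as np


def histogram_intersection(a: np.ndarray, b: np.ndarray) -> float:
    """Similarity of two L1-normalized BoVW histograms (1.0 means identical)."""
    return float(np.minimum(a, b).sum())


def predict(train_hists: list, train_labels: list, query_hist: np.ndarray):
    """Hypothetical 1-NN rule: label of the most similar training histogram."""
    scores = [histogram_intersection(query_hist, h) for h in train_hists]
    return train_labels[int(np.argmax(scores))]


def accuracy(y_true: list, y_pred: list) -> float:
    """Percentage of test images assigned to the correct animal."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)
```

For instance, with training histograms `[0.8, 0.2, 0.0]` for `"cow_A"` and `[0.1, 0.1, 0.8]` for `"cow_B"`, the query `[0.7, 0.3, 0.0]` scores 0.9 against `cow_A` and 0.2 against `cow_B`, so it is labeled `"cow_A"`.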

4. Experimental Results

4.1. Bag-of-Visual-Words with SURF Features

4.2. Bag-of-Visual-Words with MSER Features

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Awad, A.I. From classical methods to animal biometrics: A review on cattle identification and tracking. Comput. Electron. Agric. 2016, 123, 423–435.

2. Chen, C.S.; Chen, W.C. Research and development of automatic monitoring system for livestock farms. Appl. Sci. 2019, 9, 1132.

3. Kumar, S.; Singh, S.K. Monitoring of pet animal in smart cities using animal biometrics. Future Gener. Comput. Syst. 2018, 83, 553–563.

4. Kumar, S.; Singh, S.K. Visual animal biometrics: Survey. IET Biomed. 2016, 6, 139–156.

5. Huhtala, A.; Suhonen, K.; Mäkelä, P.; Hakojärvi, M.; Ahokas, J. Evaluation of instrumentation for cow positioning and tracking indoors. Biosyst. Eng. 2007, 96, 399–405.

6. Bowling, M.B.; Pendell, D.L.; Morris, D.L.; Yoon, Y.; Katoh, K.; Belk, K.E.; Smith, G.C. Review: Identification and traceability of cattle in selected countries outside of North America. Prof. Anim. Sci. 2008, 24, 287–294.

7. Li, W.; Ji, Z.; Wang, L.; Sun, C.; Yang, X. Automatic individual identification of Holstein dairy cows using tailhead images. Comput. Electron. Agric. 2017, 142, 622–631.

8. Sofos, J.N. Challenges to meat safety in the 21st century. Meat Sci. 2008, 78, 3–13.

9. Dalvit, C.; De Marchi, M.; Cassandro, M. Genetic traceability of livestock products: A review. Meat Sci. 2007, 77, 437–449.

10. Kumar, S.; Singh, S.K. Cattle recognition: A new frontier in visual animal biometrics research. Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2019, 1–20.

11. Jain, A.K.; Ross, A.A.; Nandakumar, K. Introduction to Biometrics; Springer: New York, NY, USA, 2011.

12. Awad, A.I.; Hassanien, A.E. Impact of Some Biometric Modalities on Forensic Science. In Computational Intelligence in Digital Forensics: Forensic Investigation and Applications; Kamilah Muda, A., Choo, Y.H., Abraham, A., Srihari, S.N., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 47–62.

13. Barry, B.; Gonzales Barron, U.; Butler, F.; McDonnell, K.; Ward, S. Using muzzle pattern recognition as a biometric approach for cattle identification. Trans. Am. Soc. Agric. Biol. Eng. 2007, 50, 1073–1080.

14. Lu, Y.; He, X.; Wen, Y.; Wang, P.S. A new cow identification system based on iris analysis and recognition. Int. J. Biomed. 2014, 6, 18–32.

15. Barry, B.; Corkery, G.; Barron, U.G.; Mc Donnell, K.; Butler, F.; Ward, S. A longitudinal study of the effect of time on the matching performance of a retinal recognition system for lambs. Comput. Electron. Agric. 2008, 64, 202–211.

16. Barron, U.G.; Corkery, G.; Barry, B.; Butler, F.; McDonnell, K.; Ward, S. Assessment of retinal recognition technology as a biometric method for sheep identification. Comput. Electron. Agric. 2008, 60, 156–166.

17. Kumar, S.; Tiwari, S.; Singh, S.K. Face recognition of cattle: Can it be done? Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2016, 86, 137–148.

18. Jiménez-Gamero, I.; Dorado, G.; Muñoz-Serrano, A.; Analla, M.; Alonso-Moraga, A. DNA microsatellites to ascertain pedigree-recorded information in a selecting nucleus of Murciano-Granadina dairy goats. Small Rumin. Res. 2006, 65, 266–273.

19. Minagawa, H.; Fujimura, T.; Ichiyanagi, M.; Tanaka, K. Identification of beef cattle by analyzing images of their muzzle patterns lifted on paper. Publ. Jpn. Soc. Agric. Inf. 2002, 8, 596–600.

20. Baranov, A.; Graml, R.; Pirchner, F.; Schmid, D. Breed differences and intra-breed genetic variability of dermatoglyphic pattern of cattle. J. Anim. Breed. Genet. 1993, 110, 385–392.

21. Awad, A.I.; Baba, K. Fingerprint singularity detection: A comparative study. In Software Engineering and Computer Systems; Mohamad Zain, J., Wan Mohd, W.M., El-Qawasmeh, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 179, pp. 122–132.

22. Awad, A.I.; Zawbaa, H.M.; Mahmoud, H.A.; Nabi, E.H.H.A.; Fayed, R.H.; Hassanien, A.E. A robust cattle identification scheme using muzzle print images. In Proceedings of the Federated Conference on Computer Science and Information Systems, Kraków, Poland, 8–11 September 2013; pp. 529–534.

23. Noviyanto, A.; Arymurthy, A.M. Automatic cattle identification based on muzzle photo using speed-up robust features approach. In Proceedings of the 3rd European Conference of Computer Science, Paris, France, 2–4 December 2012; WSEAS Press: Athens, Greece, 2012; pp. 110–114.

24. Bay, H.; Ess, A.; Tuytelaars, T.; Gool, L.V. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

25. Nistér, D.; Stewénius, H. Linear time maximally stable extremal regions. In Proceedings of the 10th European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 183–196.

26. Sinha, S.; Agarwal, M.; Singh, R.; Vatsa, M. Animal Biometrics: Techniques and Applications; Springer: Singapore, 2019.

27. Mahmoud, H.A.; El Hadad, H.M.R. Automatic cattle muzzle print classification system using multiclass support vector machine. Int. J. Image Min. 2015, 1, 126–140.

28. Noviyanto, A.; Arymurthy, A.M. Beef cattle identification based on muzzle pattern using a matching refinement technique in the SIFT method. Comput. Electron. Agric. 2013, 99, 77–84.

29. Gaber, T.; Tharwat, A.; Hassanien, A.E.; Snasel, V. Biometric cattle identification approach based on Weber’s local descriptor and AdaBoost classifier. Comput. Electron. Agric. 2016, 122, 55–66.

30. Rivas, A.; Chamoso, P.; González-Briones, A.; Corchado, J. Detection of cattle using drones and convolutional neural networks. Sensors 2018, 18, 2048.

31. Han, J.; Zhang, D.; Cheng, G.; Liu, N.; Xu, D. Advanced deep-learning techniques for salient and category-specific object detection: A survey. IEEE Signal Process. Mag. 2018, 35, 84–100.

32. Ning, Y.; He, S.; Wu, Z.; Xing, C.; Zhang, L.J. A review of deep learning based speech synthesis. Appl. Sci. 2019, 9, 4050.

33. Ju, M.; Luo, H.; Wang, Z.; Hui, B.; Chang, Z. The application of improved YOLO V3 in multi-scale target detection. Appl. Sci. 2019, 9, 3775.

34. Shen, W.; Hu, H.; Dai, B.; Wei, X.; Sun, J.; Jiang, L.; Sun, Y. Individual identification of dairy cows based on convolutional neural networks. Multimed. Tools Appl. 2019, 1–14.

35. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

36. Andrew, W.; Greatwood, C.; Burghardt, T. Visual localisation and individual identification of holstein friesian cattle via deep learning. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2850–2859.

37. Kumar, S.; Pandey, A.; Satwik, K.S.R.; Kumar, S.; Singh, S.K.; Singh, A.K.; Mohan, A. Deep learning framework for recognition of cattle using muzzle point image pattern. Measurement 2018, 116, 1–17.

38. Phyo, C.N.; Zin, T.T.; Hama, H.; Kobayashi, I. A hybrid rolling skew histogram-neural network approach to dairy cow identification system. In Proceedings of the International Conference on Image and Vision Computing New Zealand (IVCNZ), Auckland, New Zealand, 19–21 November 2018; pp. 1–5.

39. Zhangyong, L.; Shen, S.; Ge, C.; Li, X. Cow individual identification based on convolutional neural network. In Proceedings of the International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 21–23 December 2018; p. 45.

40. Qiao, Y.; Truman, M.; Sukkarieh, S. Cattle segmentation and contour extraction based on Mask R-CNN for precision livestock farming. Comput. Electron. Agric. 2019, 165, 104958.

41. Pang, Y.; Li, W.; Yuan, Y.; Pan, J. Fully affine invariant SURF for image matching. Neurocomputing 2012, 85, 6–10.

42. Matas, J.; Chum, O.; Urban, M.; Pajdla, T. Robust wide-baseline stereo from maximally stable extremal regions. Image Vis. Comput. 2004, 22, 761–767.

43. Donoser, M.; Bischof, H. Efficient maximally stable extremal region (MSER) tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 1, pp. 553–560.

44. Hassaballah, M.; Abdelmgeid, A.A.; Alshazly, H.A. Image features detection, description and matching. In Image Feature Detectors and Descriptors: Foundations and Applications; Awad, A.I., Hassaballah, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 11–45.

45. Jingyan, W.; Yongping, L.; Ying, Z.; Chao, W.; Honglan, X.; Guoling, C.; Xin, G. Bag-of-features based medical image retrieval via multiple assignment and visual words weighting. IEEE Trans. Med. Imaging 2011, 30, 1996–2011.

46. Perronnin, F. Universal and adapted vocabularies for generic visual categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1243–1256.

47. Sheng, X.; Tao, F.; Deren, L.; Shiwei, W. Object classification of aerial images with bag-of-visual words. IEEE Geosci. Remote Sens. Lett. 2010, 7, 366–370.

48. Peng, X.; Wang, L.; Wang, X.; Qiao, Y. Bag of visual words and fusion methods for action recognition: Comprehensive study and good practice. Comput. Vis. Image Underst. 2016, 150, 109–125.

49. Richard, A.; Gall, J. A bag-of-words equivalent recurrent neural network for action recognition. Comput. Vis. Image Underst. 2016, 151, 79–91.

50. Lahrache, S.; El Ouazzani, R.; El Qadi, A. Bag-of-features for image memorability evaluation. IET Comput. Vis. 2016, 10, 577–584.

51. Dziuk, P. Positive, accurate animal identification. Anim. Reprod. Sci. 2003, 79, 319–323.

52. Trevarthen, A. The national livestock identification system: The importance of traceability in E-Business. J. Theor. Appl. Electron. Commer. Res. 2007, 2, 49–62.

BoVW with SURF features: configuration parameters (number of images, average number of features) and BoVW performance metrics (accuracy, time) for each training ratio.

| Training (%) | No. of Images | No. of Features (Average) | Accuracy (%) | Time (s) |
|---|---|---|---|---|
| 30 | 30 | 7600 | 75 | 27.7 |
| 45 | 45 | 7600 | 83 | 32.6 |
| 60 | 60 | 7600 | 91 | 42.5 |
| 75 | 75 | 7600 | 93 | 89.5 |

BoVW with MSER features: configuration parameters (number of images, average number of features) and BoVW performance metrics (accuracy, time) for each training ratio.

| Training (%) | No. of Images | No. of Features (Average) | Accuracy (%) | Time (s) |
|---|---|---|---|---|
| 30 | 30 | 208 | 52 | 15.0 |
| 45 | 45 | 197 | 60 | 19.7 |
| 60 | 60 | 191 | 67 | 22.1 |
| 75 | 75 | 192 | 67 | 28.0 |

Comparison with previous muzzle-print identification approaches.

| Approach | No. of Images | No. of Cattle | Total Accuracy (%) |
|---|---|---|---|
| Minagawa et al. [19] | 86 | 30 | 66.6 |
| Awad et al. [22] | 105 | 15 | 93.3 |
| Noviyanto & Arymurthy [23] | 120 | 8 | 90.0 |
| Proposed BoVW (SURF) | 105 | 15 | 93.0 |
| Proposed BoVW (MSER) | 150 | 15 | 67.0 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

How to Cite

Awad, A.I.; Hassaballah, M. Bag-of-Visual-Words for Cattle Identification from Muzzle Print Images. Appl. Sci. 2019, 9, 4914. https://doi.org/10.3390/app9224914

Awad AI, Hassaballah M. Bag-of-Visual-Words for Cattle Identification from Muzzle Print Images. Applied Sciences. 2019; 9(22):4914. https://doi.org/10.3390/app9224914

Chicago/Turabian Style: Awad, Ali Ismail, and M. Hassaballah. 2019. "Bag-of-Visual-Words for Cattle Identification from Muzzle Print Images" Applied Sciences 9, no. 22: 4914. https://doi.org/10.3390/app9224914