1. Introduction

With the rapid development of robots nowadays, service robots are becoming increasingly popular in ageing societies. It is necessary that such robots be capable of gathering information about their external environment. However, most robots are only able to consider physical information, such as images captured from cameras or depth information provided by a laser rangefinder. Given the wide variety of objects encountered in the environment, it is impossible to collect all possible appearances of objects to train robots to recognize them, regardless of whether the data are in the form of laser points or images. Therefore, it is necessary to develop a solution for this problem.

To extract additional information for the environment model, there are two principal approaches: the semantic map and the cognitive map. In a semantic map, semantic labels are recorded for the objects in the environment so that the map can provide a semantic level of understanding of the environment. Nüchter and Hertzberg [1] defined this as “a map that contains, in addition to spatial information about the environment, assignments of mapped features to entities of known classes. Further knowledge about these entities, independent of the map contents, is available for reasoning in some knowledge base with an associated reasoning engine”. The semantic map focuses on tagging an object or area so that robots can understand the meaning of a statement and perform the appropriate action [2,3,4]. A cognitive map is a mental model that explains how a person records information from an environment. The cognitive map is constructed from abstract information, such as landmarks, paths, and nodes [5].

Humans learn about an object and its function by imitating the interactions of other people with the object. Just as animals can learn new behavior by observing other animals [6], people can benefit from imitating other people, and so can robots [7,8,9]. By learning the behavior that occurs at a specific position, robots can understand the use of space. Therefore, recording human behavior at a specific position is the primary idea involved in creating a cognitive map.

In biology, a cognitive map is regarded as a cognitive representation of a specific location in space and is constructed from place cells, which are found in the hippocampus [10,11]. The activity of a place cell in the cognitive map is associated with a specific location in the environment, and the corresponding location is called the place field. Place cells provide the location in the environment in the same way as the global positioning system (GPS). Studies on rats show that when the animal is moved to another environment, the activity of the place cells is reset, a process called remapping. This explains the plasticity of the hippocampus and the rats’ ability to adapt. As brain research has advanced, grid cells have also been found in the entorhinal cortex [12]. The activity of a grid cell is associated not with a single location but with several locations in the environment. Grid cells provide an internal coordinate system for navigation. The cognitive map is formed of place cells and grid cells such that it memorizes and understands the use of space.

Much of the research that adapts the cognitive map to robot applications focuses on creating a mathematical model for navigation or building a map for navigation, because the cognitive map tries to characterize the mechanism of navigation and memory for the use of space [13,14]. Some works [15,16] associate the use of space with behavior. However, the behaviors considered in these works are limited to states of movement, such as busy walking, idle walking, wandering, and stopping [17].

Moreover, cognitive maps rarely record low-level human behaviors, such as standing, sitting, picking up an object, or waving a hand. In [18], the authors proposed a behavior-based map that is divided into many sub-regions. For each region, all human movement directions are described as transition probabilities. This method describes the relationship between human behaviors and the environment. Inspired by these articles, we devise a method to enable robots to understand an environment through human behavior rather than through physical information alone. This method records human behaviors in each cell of the map and describes the human behaviors that are suitable for each specific location. Moreover, the proposed method can enable a robot to understand an environment automatically according to the behaviors of humans in that environment. To these ends, we focus on developing a behavior cognitive map that can recognize and record human behaviors. Robots based on this map can be used in diverse applications, for example, as exhibition assistants and patrol robots. In general, the task of creating a cognitive map can be divided into two steps: recognition of human behaviors and recording of human behaviors.

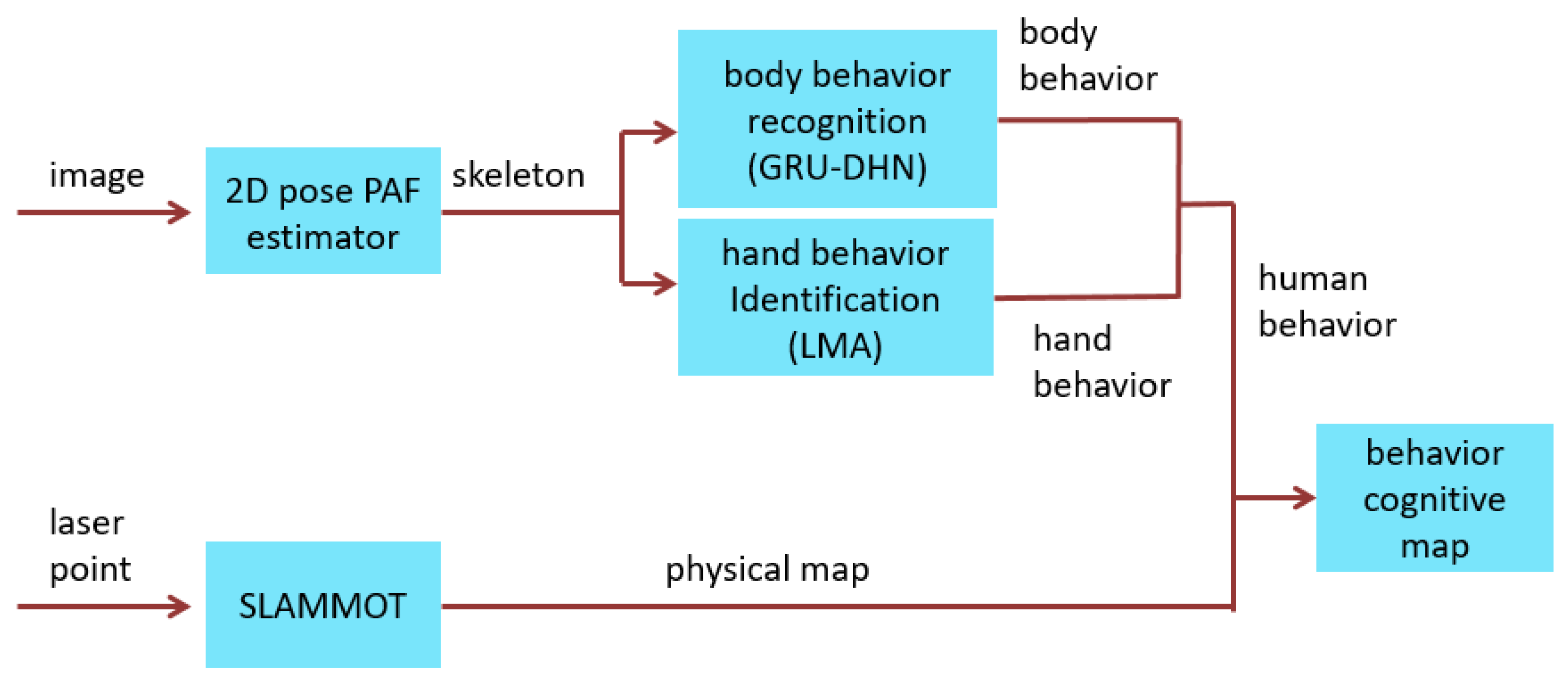

A system based on the proposed method can understand the use of space to enable robots to respond correctly to human behavior. The aforementioned behavior cognitive map that records the use of space by humans is designed based on two dimensions of human behavior models, namely the hand dimension and the body dimension. After fusing the behavioral features of humans, robots can identify whether a behavior is normal and provide an appropriate response. A block diagram of the structure of the proposed behavior cognitive map is shown in Figure 1. The system has several functional components: a two-dimensional (2D) pose estimator using part affinity fields (PAFs), body behavior recognition using a gated recurrent unit (GRU)-based dynamic highway network (DHN), behavior identification using Laban movement analysis (LMA), and the behavior cognitive map. The first functional component is the 2D pose estimator using PAFs [19], which employs a convolutional neural network (CNN) and can draw a human skeleton on images. The second functional component, the GRU-based DHN, is a combination of highway networks [20] and GRU neural networks [21]. The GRU-based DHN uses the skeleton positions from the 2D pose estimator to predict body behavior. The third functional component is behavior identification. LMA [22,23] is applied to the skeleton positions to extract the features associated with hand behaviors. In LMA, 22 features are defined, and a similarity function is defined to calculate, for each feature, the similarity to the same behavior. The similarity values are then integrated with the fuzzy integral algorithm [24]. Meanwhile, the positions of humans in images are estimated with simultaneous localization, mapping, and moving object tracking (SLAMMOT). Based on the estimated positions of humans, the normal behaviors recorded in the behavior cognitive map can be extracted and compared with observed behaviors.

The remaining parts of this paper are organized as follows: Section 2 presents two modules for human behavior recognition and introduces the 2D pose estimator using PAFs and the GRU-based DHN. With these two functional components, a behavior-recognition model is constructed. For behavior identification, Laban movement analysis (LMA) is applied to identify the features that represent specific hand behaviors. SLAMMOT is applied to construct the environment map. Section 3 introduces the behavior cognitive map. The interval recording method used in pedagogy is employed to record features into the behavior cognitive map. The similarity function and the fuzzy integral are applied to associate behaviors with specific positions. Identification of normal behaviors helps robots react expediently. In Section 4, a series of simulations and experiments is presented and discussed. Finally, the results of the study are summarized in Section 5 and an outline for future work is given.

2. Human Behavior Recognition

Before building the behavior cognitive map, the robot has to understand human behavior. The first step is to establish a mechanism for recognizing human behavior. The task of human behavior recognition is divided into two parts: human pose estimation and behavior-recognition models. In 2D pose estimation, human postures are described with a skeleton. The information presented by the skeleton is used to train the two behavior-recognition models, namely, the GRU-based DHN and the similarity calculations of the Laban features.

2.1. 2D Pose Estimation

Cao et al. [19] proposed the 2D pose estimator using PAFs to address human pose estimation. The PAFs (part affinity fields) are sets of 2D vector fields that describe the relation between human body joints. These vector fields can be used to extract the position and orientation of each limb. This method retains the advantages of the heatmap and addresses several of its disadvantages. Thus, it will be used to estimate 2D poses from images.

A two-branch multi-stage CNN was used to calculate the PAFs and the joint confidence maps for each limb. The transformation function of the first branch of the CNN, represented by $\rho$, is designed to predict the joint confidence maps. The set $S = (S_1, S_2, \ldots, S_J)$ contains $J$ confidence maps, where $S_j$ denotes the confidence map of body joint $j$. The transformation function of the other branch of the CNN, represented by $\varphi$, employs PAFs to express associations between joints. The set $L = (L_1, L_2, \ldots, L_C)$ contains $C$ vector fields, where $L_c$ denotes the 2D vector field for the relationship between a pair of body joints, also called a limb. In the first stage, the confidence maps and PAFs are represented as $S^1 = \rho^1(F)$ and $L^1 = \varphi^1(F)$, where $F$ is the output of a CNN initialized by the first 10 layers of VGG-19 [25]. Therefore, the 2D pose estimator using PAFs can be expressed as Equations (1) and (2):

$$S^t = \rho^t\left(F, S^{t-1}, L^{t-1}\right), \quad \forall t \ge 2 \tag{1}$$

$$L^t = \varphi^t\left(F, S^{t-1}, L^{t-1}\right), \quad \forall t \ge 2 \tag{2}$$

where the superscript $t$ denotes the variables at stage $t$, and $\rho^t$ and $\varphi^t$ are the transformation functions of the CNN at stage $t$. Finally, $S$ and $L$ follow the definitions given in the paragraph above.

To train the subnetworks ρ, it is necessary to generate the ground truth confidence map. The ground truth of confidence map

satisfies the following equation:

where

denotes any position on the confidence map;

the ground truth position of the body joint

j for person

k; and σ the spread of joint

j, which is set by the designer.
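As a minimal sketch of how such a ground-truth confidence map could be generated, the following pure-Python example assumes the Gaussian-shaped peak used by Cao et al. [19], with the squared distance divided by the square of the spread, and merges the peaks of several people with a maximum. The function name `confidence_map`, the grid size, and the joint positions are hypothetical and chosen only for illustration.

```python
import math

def confidence_map(width, height, joints, sigma):
    """Ground-truth confidence map for one joint type.

    joints: list of (x, y) ground-truth positions, one per visible person.
    Each person contributes a Gaussian-shaped peak; peaks are merged with
    max so that nearby people keep distinct maxima.
    """
    grid = [[0.0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            for jx, jy in joints:
                d2 = (col - jx) ** 2 + (row - jy) ** 2
                peak = math.exp(-d2 / sigma ** 2)
                grid[row][col] = max(grid[row][col], peak)
    return grid

# Two people whose joint of this type lies at (2, 3) and (6, 1).
cmap = confidence_map(width=8, height=6, joints=[(2, 3), (6, 1)], sigma=1.5)
```

The map equals 1 exactly at each annotated joint and decays with distance at a rate controlled by `sigma`, which plays the role of the designer-chosen spread.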

Instead of solving an integer linear programming problem, this method assembles body joints via 2D vector fields, called the PAFs. The PAFs are calculated by using CNNs, and they can consider large-scale features in an adjacent region. The PAF is a 2D vector field of each limb, and it preserves the position and orientation information. We formulate the ground truth of the PAF

for training

as (4):

where

denotes any position on the PAF,

the ground truth positions of body joints

j1 and

j2 for person

k, and limb

c the connection between

and

. To verify whether position

x is on limb

c, we define Equations (5) and (6). If position

x satisfies Equations (5) and (6), then it is on limb

c:

where

x,

and

follow the definition in Equation (4),

is a vector perpendicular to

, and

σl is a constant that controls the width of the vectors for each limb. Consequently, Equation (5) constrains the vectors that only exist between two joints, and Equation (6) constrains the vectors that exist at a specific distance from each other.

After training, the CNN

can estimate a PAF that shows the possibility of association between two joints. Equations (7) and (8) are advanced to evaluate this probability:

where

and

denote the estimated positions of joints

j1 and

j2, respectively, and

x(

u) the interpolation of two joints. Using Equation (7), we can determine with certainty the relationship between two joints. After computing the relation of all joints, the human skeleton can be obtained. The axes of each joint, described as

X, are collected as the inputs for two behavior-recognition models to identify the human behavior.
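The limb test and the association score described above can be sketched as follows. This is an illustrative example under stated assumptions, not the authors' implementation: it follows the formulation of Cao et al. [19], in which a point lies on a limb when its projection onto the limb axis falls between the two joints and its perpendicular distance is at most the limb width, and the association score is the line integral of the field along the segment between two candidate joints, approximated here by uniform sampling. The names `paf_vector`, `association_score`, and `samples` are hypothetical.

```python
import math

def paf_vector(p, j1, j2, sigma_l):
    """Ground-truth PAF value at point p for the limb from j1 to j2."""
    dx, dy = j2[0] - j1[0], j2[1] - j1[1]
    length = math.hypot(dx, dy)
    vx, vy = dx / length, dy / length      # unit vector along the limb
    rx, ry = p[0] - j1[0], p[1] - j1[1]
    along = vx * rx + vy * ry              # projection onto the limb axis
    across = abs(-vy * rx + vx * ry)       # distance from the limb axis
    on_limb = 0.0 <= along <= length and across <= sigma_l
    return (vx, vy) if on_limb else (0.0, 0.0)

def association_score(field, d1, d2, samples=10):
    """Approximate the line integral of field along the segment d1 -> d2."""
    dx, dy = d2[0] - d1[0], d2[1] - d1[1]
    length = math.hypot(dx, dy)
    ux, uy = dx / length, dy / length
    total = 0.0
    for i in range(samples):
        u = i / (samples - 1)
        point = ((1 - u) * d1[0] + u * d2[0], (1 - u) * d1[1] + u * d2[1])
        fx, fy = field(point)
        total += fx * ux + fy * uy         # alignment of field and segment
    return total / samples

shoulder, elbow, other = (0.0, 0.0), (10.0, 0.0), (0.0, 10.0)
field = lambda p: paf_vector(p, shoulder, elbow, sigma_l=1.0)
aligned = association_score(field, shoulder, elbow)   # candidates on the limb
crossed = association_score(field, shoulder, other)   # candidates off the limb
```

A candidate pair that follows the limb scores close to 1, whereas a pair whose connecting segment is perpendicular to the field scores 0, which is what allows joints to be assembled into a skeleton.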

2.2. Body Behavior Recognition Model

The 2D pose estimator using PAFs is useful for recognizing human behavior. Because human behavior evolves over time, the GRU (gated recurrent unit) is necessary for learning temporal characteristics. Therefore, a new type of neural network called the GRU-based DHN is proposed.

Srivastava et al. [20] proposed highway networks based on the idea of bypassing a few upper layers and transmitting information to the deeper layers. In highway networks, a transform $T(W_T X + b_T)$ is additionally defined to control the weights of the combination of the input $X$ and the output of the transform $H(W_H X + b_H)$. Here, $X$ holds the coordinates of each joint of the human skeleton. The detailed function (Equation (9)) is illustrated in Figure 2:

$$y = H(W_H X + b_H) \odot T(W_T X + b_T) + X \odot \left(\mathbf{1} - T(W_T X + b_T)\right) \tag{9}$$

where $X$ and $y$ denote the input and output of a layer, respectively; $W_T$ and $b_T$ the weights and bias in the transform $T(W_T X + b_T)$; $H$ an activation function, which is a rectified linear unit here; and $T$ an activation function, which is a sigmoid function here. The dot operator $\odot$ denotes element-wise multiplication, and $\mathbf{1}$ denotes a column vector with elements of 1.

In addition, Equation (9) shows that $y$ is a linear combination of the input $X$ and the output of the transform $H(W_H X + b_H)$, in which the output of the function $T$ ranges between 0 and 1. The output of $T(W_T X + b_T)$ therefore acts as the weights of this combination, and backpropagation tunes these weights to minimize the loss function. In other words, the weights are tuned through backpropagation according to the utility of the input and of the output of the transform $H(W_H X + b_H)$.
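The gating behavior of a single highway layer can be illustrated with a small pure-Python sketch. It assumes the standard highway formulation of Srivastava et al. [20] with a rectified linear unit for H and a sigmoid for T, as stated above. The helper names `affine` and `highway_layer` and all numeric values are hypothetical.

```python
import math

def affine(W, x, b):
    """Compute W x + b for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def highway_layer(x, W_H, b_H, W_T, b_T):
    h = [max(0.0, v) for v in affine(W_H, x, b_H)]                  # H: ReLU
    t = [1.0 / (1.0 + math.exp(-v)) for v in affine(W_T, x, b_T)]   # T: sigmoid
    # Element-wise blend: the gate t weights the transform, 1 - t the input.
    return [hi * ti + xi * (1.0 - ti) for hi, ti, xi in zip(h, t, x)]

x = [0.5, -1.0]
W_H = [[1.0, 0.0], [0.0, 2.0]]
b_H = [0.1, 0.1]
W_T = [[0.0, 0.0], [0.0, 0.0]]

carry = highway_layer(x, W_H, b_H, W_T, b_T=[-30.0, -30.0])     # gate closed
transform = highway_layer(x, W_H, b_H, W_T, b_T=[30.0, 30.0])   # gate open
```

With a strongly negative gate bias the layer passes its input through almost unchanged, and with a strongly positive bias it returns the transformed value, which is the sense in which the gate output expresses the relative utility of the two paths.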

Based on the idea of the output of $T(W_T X + b_T)$, the utility of the input $X$ and the output of the transform $H(W_H X + b_H)$ can be compared. The proposed dynamic highway network (DHN) is expressed as Equations (10) and (11), and it is shown in Figure 3:

where

denote the input, output, and middle input of the entire layer, respectively.

denote the weights and the bias in the first and second layers, respectively, while

H1,

H2,

T1,

T2 are the activation functions. Here,

X and y are called pass units, and

d indicates growing units.

In DHNs, the function of the first layer is expressed as Equation (10). In this layer, compares the utility of ) with 0. Based on the values of , one can determine whether the number of neurons in the growing units is adequate. Consequently, the dimensionality of the growing units, n, can be raised until the values of are adequately small or the maximum permissible number of growing units is achieved. As a result, n can change dynamically according to the utility of ).

A GRU was proposed in [21]. The GRU is a node structure used in recurrent neural networks to learn temporal features. The GRU introduces reset gates and update gates. Update gates help avoid exploding or vanishing gradients caused by the recurrent use of the same weights. The GRU is used instead of long short-term memory (LSTM) because the GRU model contains fewer parameters, which means the model can be trained faster and with lower memory usage. Information on human behavior is dependent on time. Therefore, extending the DHNs with the GRU model improves the behavior-recognition performance. The structure of the proposed GRU-based DHNs is illustrated in Figure 4.

In DHNs, the neurons are used as the normal nodes. There is no recurrent ability in the nodes, so they are unable to cope with temporal characteristics. In GRU-based DHNs, the neurons in the two sublayers are replaced with the GRU model to extend the model’s ability to address human behavior. Moreover, we use gates and in this model, with the same purpose as the forget gate in the LSTM model. This sublayer structure is intermediate between the GRU and the LSTM models.

As a result, the new model combining the 2D pose estimator using PAFs and GRU-based DHNs is used to perform human skeleton estimation first, followed by behavior recognition, and it is shown in Figure 5. In other words, the 2D pose estimator using PAFs first determines the 2D positions of the body joints; then, a GRU-based DHN is constructed to estimate human behavior from the movements of the body joints with time.

2.3. Hand Behavior Identification Model

Human hand behavior is identified by using Laban movement analysis (LMA), which was proposed by Rudolf von Laban [22], to evaluate the similarity between the data and actual human action. This system combines psychology, anatomy, and kinesiology. It was developed for recording body language in dancing and has subsequently been widely used for behavior analysis. LMA not only records the positions of body joints but also describes the relationships among body representations, initial states, intentions, and emotions [26]. It divides human movement into the following elements: body, effort, shape, and space. These elements provide different aspects of information pertaining to human movement. In addition, they offer a few features to describe human movement, so that a dancer can adopt the correct pose for dancing based on the same foundation. Therefore, any dancer can recreate a masterpiece by using these basic elements, regardless of their cultural or language background.

The behavior of hands is represented by LMA. Laban movement analysis can be used to ascertain the principal information in a movement so that the movement can be identified easily based on the calculated LMA features. The 2D pose estimator using PAF estimates the skeletons of the people from a given image. With the skeleton information, the LMA features are extracted based on the description of the given LMA. These features are considered on the frontal plane because they only consist of 2D information and are normalized by shoulder width and height. Based on these features, the diverse behaviors of the hands can be recorded in the behavior cognitive map. The features used in each element are defined as follows:

Body: The body feature describes the relationships between each limb while moving. We use nine distance features to describe the relationships and connections of each body joint. Therefore, we employ the distances from the hands to the shoulders, from the elbows to the shoulders, from the right hand to the left hand, from the hands to the head, and from the hands to the hips to represent the body.

Effort: The effort features are used to understand the people’s inner intention, strength, timing, and control of movement. We use abrupt motions (jerky motion) of the hands and the hips as the features for the flow factor. We use acceleration of the hands as the feature for the weight factor. The time factors are extracted from the velocities of the hands. The above eight features are extracted as effort features.

Shape: The shape describes the relationship between the body and the environment. Because this research focuses on hand behavior, we select three shape features: the heights of both hands and the ratio of the distance from the hands to the center of the shoulders.

Space: Space relates the motion to the environment. It can be described as the space that the human’s limbs can occupy. The area of the body can explain the relationship between the body and the environment. As a result, we use two area features, which are the area covered by the hands to the head and the hands to the hip, to indicate the space element.

We extract a total of 22 features, including nine distance features, two velocity features, two acceleration features, four jerk features, two position features, one distance ratio feature, and two area features as the LMA features. These calculated features are recorded in the behavior cognitive map. Meanwhile, the behavior of the hand is predicted using the behavior-recognition model. The complete behavior-identification algorithm is illustrated in Figure 6.
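Two of the feature families listed above can be sketched in a few lines: a distance feature normalized by shoulder width, and velocity, acceleration, and jerk obtained by repeated finite differences of a joint coordinate. This is only an illustration under stated assumptions. The exact normalization and differencing scheme are not specified here, and the names `normalized_distance`, `finite_difference`, `kinematics`, and the sample coordinates are hypothetical.

```python
import math

def normalized_distance(a, b, shoulder_width):
    """Distance between two joints, scaled by the shoulder width."""
    return math.dist(a, b) / shoulder_width

def finite_difference(series, dt):
    """First-order difference quotient of a sampled signal."""
    return [(b - a) / dt for a, b in zip(series, series[1:])]

def kinematics(positions, dt):
    """Velocity, acceleration, and jerk of one joint coordinate."""
    velocity = finite_difference(positions, dt)
    acceleration = finite_difference(velocity, dt)
    jerk = finite_difference(acceleration, dt)
    return velocity, acceleration, jerk

left_shoulder, right_shoulder, right_hand = (0.0, 0.0), (4.0, 0.0), (4.0, 3.0)
width = math.dist(left_shoulder, right_shoulder)
hand_to_left_shoulder = normalized_distance(right_hand, left_shoulder, width)

hand_height = [0.0, 1.0, 4.0, 9.0, 16.0]   # one coordinate over five frames
velocity, acceleration, jerk = kinematics(hand_height, dt=1.0)
```

Normalizing by a body dimension makes the distance features comparable across people who stand at different distances from the camera, which is why the features are scaled before they are recorded.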

A series of features is recorded, and these features are used to construct the corresponding vectors of the features that describe each behavior. The vector that contains the series of data for a specific feature

f at time t is expressed as

Vf(t). The vector that contains the series of data for a specific feature

f and is recorded in the behavior cognitive map is expressed as

. Therefore, the similarity between two behaviors for feature

f can be calculated as the Euclidean distance between two vectors, as in Equations (12) and (13):

where the parameters of (13) are determined by trial and error.

The similarity value of the LMA features extracted from two similar hand behaviors is high. When the observed behavior resembles the recorded behavior, the Euclidean distance is close to 0 and the similarity value is close to 1. Otherwise, the similarity value is close to 0. After using Equation (13), 22 similarity values are obtained, one for each of the 22 features. To speed up the system and to cover as many gestures as possible, we use a fuzzy integral to fuse the 22 similarity values instead of using a model to identify the type of behavior, and the fused result is the final similarity value.
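The qualitative behavior described here, a distance of 0 mapping to a similarity of 1 and large distances mapping toward 0, can be illustrated with a small sketch. The exponential mapping and its scale parameter `alpha` are assumptions made purely for illustration and should not be read as the form of Equation (13) or its tuned parameters.

```python
import math

def feature_similarity(observed, recorded, alpha=1.0):
    """Map the Euclidean distance between two feature series to (0, 1].

    alpha is a hypothetical scale parameter; the exponential form is an
    assumed stand-in for the similarity function, not the paper's own.
    """
    distance = math.dist(observed, recorded)
    return math.exp(-distance / alpha)

recorded = [0.2, 0.4, 0.6]                       # series stored in the map
same = feature_similarity([0.2, 0.4, 0.6], recorded)
far = feature_similarity([5.0, 5.0, 5.0], recorded)
```

Any monotonically decreasing mapping with these two limits would fit the description, so the choice of function and scale remains a tuning decision.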

2.4. SLAMMOT (Simultaneous Localization, Mapping, and Moving Object Tracking)

The SLAMMOT [27,28] algorithm is widely used in mobile robotics so that robots can map their environment and also track moving objects. In this research, we use this method to track moving objects by treating the participants who actively interact with one another in the area as the target moving objects. The SLAMMOT problem can be approximated as a posterior estimation, as shown in Equation (14):

where

t is the time index,

gt is the state of the robot,

ot is the state of the moving object.

Zt is observation,

Ut is the control input.

and

denote measurements of stationary and moving objects, respectively.

M is the environment map. It is used as a reference to record the location at which a behavior identified by the body behavior model and the hand behavior model occurs. A detailed introduction to SLAMMOT can be found in [8]. In the next section, the process of building the behavior cognitive map is described.

3. Behavior Cognitive Map

After recognizing human behavior, the next step is to record the behavior in the cognitive map in order to distinguish the normality of human motion. In biology, a cognitive map is regarded as a cognitive representation of a specific location in space, which is composed of place cells and grid cells, such that it memorizes and understands the use of space.

A cognitive map has been recognized as a mechanism for navigation and memory related to the use of space. Many robot applications [13,14] have focused on creating a mathematical model or building a navigation map. In the present study, we further develop the cognitive map and add records of human behavior to it to improve the understanding of the use of space. The proposed cognitive map, called a behavior cognitive map, establishes a relationship between a behavior and the location associated with the behavior. A few studies have associated the use of space with behavior. However, the behaviors in those studies are limited to states of movement with unclear motivation, such as busy walking, idle walking, wandering, and stopping. In contrast, we introduce a variety of behavior types into the behavior cognitive map so that it can supply more information about the environment.

3.1. Recording Behavior

It is necessary to discuss the relevant human behaviors before creating the behavior cognitive map. In this study, we consider only those human behaviors that are associated with actions. The behaviors recorded in the behavior cognitive map should be daily or routine behaviors rather than unusual behaviors because the purpose of the behavior cognitive map is to learn the normal use of space. Thus, we collected datasets of daily behavior to determine representative daily behaviors.

Table 1 summarizes the contents of eight datasets: KTH [29], IXMAS [30], MuHAVi [31], ACT4 [32], UCLA [33], HuDaAct [34], ASTS [35], and AIC [36]. We employ these datasets to determine the types of behavior that can be classified as representative daily behaviors based on the frequency of their occurrence, as highlighted in Table 1.

The selected datasets contain only instances of low-level behaviors. High-level behaviors describe the interactions between a human and specific objects, i.e., behaviors that involve holding something and paying attention to it, such as a person using a smartphone or reading a book. Because the behavior cognitive map expresses the relationship between behavior and location and records the use of space, it should describe how people tend to perform a specific behavior in a given location. Thus, the object of a person’s attention is not important for the behavior cognitive map, and such high-level behavior should be ignored. Moreover, it is impractical to consider such objects because a great number of them can be found in real environments. Therefore, we selected the datasets covering low-level behaviors as opposed to high-level behaviors.

As shown in Table 1, we choose seven representative, frequently repeated daily behaviors, namely, standing, walking, running, sitting, punching, hand waving, and picking up. In this study, we regard people as pedestrians if they are walking or running. Although the behavior cognitive map connects behavior with location, the behavior of pedestrians is not related to their locations because they simply want to move toward their destination. For this reason, pedestrians are neglected in the behavior cognitive map. The remaining representative daily behaviors can be separated into two dimensions: the body and the hands. In the body dimension, standing, sitting, and picking up denote different body positions. In the hands dimension, punching and hand waving denote different hand positions.

From the hand behaviors in Table 1, it is evident that the behaviors of the hands are not expressed adequately by punching and hand waving due to the great diversity of hand behaviors. To expand the behavior-recording capacity, hand behaviors are described with LMA in the behavior cognitive map. Unlike hand behaviors, body behaviors can be represented accurately by standing, picking up, and sitting. Therefore, the behavior-recognition model classifies the body behaviors as standing, picking up, and sitting. After this classification, the position information pertaining to these behaviors is recorded in the behavior cognitive map.

3.2. Structure of Behavior Cognitive Map

The behavior cognitive map is divided into three maps (sitting, picking up, and standing) because it is impossible for these three states to occur simultaneously. When one of these behaviors occurs, the behavior of the body is predicted, and the features of the behavior of the hands are calculated in accordance with the definition of body behaviors above. These features are recorded in the map of the corresponding body behavior at the position of the body. These maps are illustrated in Figure 8.

The red dots indicate that features are recorded at those locations. Thus, the behavior cognitive map is defined, and it can record the relationships between behaviors and the locations at which they occur. Therefore, a behavior can be decomposed into the body dimension and the hand dimension. In the body dimension, three body behaviors (standing, sitting, picking up) represent the body behavior. In the hand dimension, LMA is employed due to the large number of possible hand behaviors. The model structure is shown in Figure 9.

In the behavior cognitive map, recording the frequency of behaviors is helpful for constructing a more ideal map. Based on the frequency of a behavior, a robot can determine whether that behavior is normal or not. Therefore, the behavior cognitive map can learn online and revise itself to adjust to dynamic environments.

Many methods have been proposed to record behavior, such as continuous recording, duration recording, time sampling, and interval recording. Of these methods, interval recording is suitable for our purpose and has been employed in the proposed behavior cognitive map. Interval recording records the occurrence of a given behavior in specified periods. The total observation time is divided into smaller intervals. Therefore, the total observation time should be decided first. According to the AVA dataset [37], an observation period of 1 s is adequate to recognize a behavior. Thus, we selected this observation period. The number of intervals is determined by the processing time of the program. In each interval, each feature is calculated and recorded.
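The layered structure and the interval recording described in this section can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `BehaviorCognitiveMap`, the grid-cell keys, the choice of five intervals, and the use of a per-interval mean as the recorded feature are all hypothetical.

```python
from collections import defaultdict

BODY_BEHAVIORS = ("standing", "sitting", "picking up")

class BehaviorCognitiveMap:
    def __init__(self):
        # One layer per body behavior; each layer maps a grid cell to records.
        self.layers = {name: defaultdict(list) for name in BODY_BEHAVIORS}

    def record(self, body_behavior, cell, interval_features):
        """Store the per-interval features observed at a grid cell."""
        self.layers[body_behavior][cell].append(interval_features)

    def frequency(self, body_behavior, cell):
        """Number of observations recorded for a behavior at a cell."""
        return len(self.layers[body_behavior].get(cell, []))

def split_into_intervals(samples, n_intervals):
    """Divide one observation period into equally sized intervals."""
    size = len(samples) // n_intervals
    return [samples[i * size:(i + 1) * size] for i in range(n_intervals)]

samples = list(range(30))          # one feature sampled over a 1 s period
intervals = split_into_intervals(samples, n_intervals=5)
features = [sum(chunk) / len(chunk) for chunk in intervals]

bcm = BehaviorCognitiveMap()
bcm.record("sitting", (3, 7), features)
```

Keeping a list of records per cell also gives the frequency of each behavior at that location for free, which is the quantity used to judge whether a behavior is normal there.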

3.3. Behavior Identification

To clarify the use of space, comparing the behavior in the behavior cognitive map and the behavior of a person at a given moment is an essential function of robots. A robot that can judge whether a behavior is normal can help a person to complete a task or prevent a person from completing a prohibited task.

In the behavior cognitive map, we have not selected specific behaviors as classifiers because it is impossible to classify all possible behaviors through the integration of all possible features and infer the results. Therefore, we have employed a similarity function to estimate similarities and integrated these similarities by using a fusion algorithm. From the final similarity, hand behaviors are identified as being either distinct or not distinct. To this end, we have applied a fusion algorithm called the fuzzy integral to compute the extent of the similarity between the hand behavior database and the online test data based on the proposed features. Sugeno introduced a non-additive “fuzzy” measure by replacing the additive property with the weaker monotonicity property [24], based on which the fuzzy integral was proposed. The fuzzy integral combines information from different sensors according to a non-linear function based on a fuzzy measure. Because of the concept of the fuzzy measure, the fuzzy integral has been widely applied. The principles of the fuzzy measure and fuzzy integral are introduced as Equation (15):

where r(ε) denotes the final similarity of all features; ε is the set of measured features, represented as ε = {ε1, ε2, …, ε22}; ∨ and ∧ denote the maximum operator and the minimum operator, respectively; and h(mi) is the measure result for model mi. There are 22 models, each of which uses a threshold to classify whether a feature is similar or not. The finite set

is defined as

and a subset

and

g is a

-fuzzy measure, the value of

g(

Ai) can be determined as:

and the

λ value can be calculated by Equation (18):

In this research, we use the F-score to define gi when performing the fuzzy integral operations. After computing the λ value, the final similarity can be obtained.
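The fusion step can be sketched with the standard Sugeno λ-measure and Sugeno integral. The example below is an illustration under stated assumptions rather than the authors' code: λ is taken as the nonzero root greater than -1 of the usual condition that the product of (1 + λ·g_i) equals 1 + λ, found here by bisection; the measure of each nested subset is built recursively after sorting the scores in descending order; and the result is the maximum over the minima of score and measure. The function names and the three-feature input are hypothetical (the paper fuses 22 features).

```python
def solve_lambda(densities, iterations=200):
    """Nonzero root lambda > -1 of prod(1 + lambda*g_i) = 1 + lambda."""
    if len(densities) < 2 or not all(0.0 < g < 1.0 for g in densities):
        raise ValueError("need at least two densities strictly inside (0, 1)")
    total = sum(densities)
    if abs(total - 1.0) < 1e-9:
        return 0.0                      # the measure is already additive

    def f(lam):
        product = 1.0
        for g in densities:
            product *= 1.0 + lam * g
        return product - (1.0 + lam)

    if total > 1.0:                     # root lies in (-1, 0); f(-1) > 0
        lo, hi, lo_positive = -1.0, 0.0, True
    else:                               # root lies in (0, inf); f < 0 near 0
        lo, hi, lo_positive = 0.0, 1.0, False
        while f(hi) < 0.0:
            hi *= 2.0
    for _ in range(iterations):         # bisection on the sign of f
        mid = (lo + hi) / 2.0
        if (f(mid) > 0.0) == lo_positive:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def sugeno_integral(scores, densities):
    """Fuse per-feature scores h(m_i) using densities g_i."""
    lam = solve_lambda(densities)
    ranked = sorted(zip(scores, densities), reverse=True)
    measure, best = 0.0, 0.0
    for score, g in ranked:
        measure = g + measure + lam * g * measure   # g(A_i) from g(A_{i-1})
        best = max(best, min(score, measure))
    return best

scores = [0.9, 0.6, 0.3]        # hypothetical per-feature similarity values
densities = [0.3, 0.4, 0.1]     # hypothetical F-score based densities
fused = sugeno_integral(scores, densities)
```

Because the measure of the full set is 1 by construction, a set of uniformly high scores fuses to a high value, while a single high score on a low-reliability feature is capped by that feature's density.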

An F-score set is created to express the reliability of each model for the positive class. In the F-score set, each F-score serves as a measure for evaluating a model. Two quantities are considered when calculating the F-score: precision p and recall r. The precision p expresses the proportion of correct positive estimates among all positive estimates. The recall r expresses the proportion of correct positive estimates among all actual positive samples. The detailed calculation is shown in Figure 10.

The F-score can be regarded as a weighted average of the precision and the recall for evaluating the performance of a model. The precision, recall, and F-score are related as follows:
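As a brief sketch of this relationship, the following example computes precision, recall, and the balanced F1 score from counts of true positives, false positives, and false negatives. Using the balanced F1 form, the harmonic mean of precision and recall, is an assumption about which F-score variant is intended, and the function name and counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and balanced F1 from confusion counts."""
    precision = tp / (tp + fp)      # correct positives among predicted positives
    recall = tp / (tp + fn)         # correct positives among actual positives
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
```

A feature model with a higher F-score would then receive a larger density in the fuzzy integral, so more reliable features contribute more to the final similarity.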

The behaviors of the body can be represented by standing, picking up, and sitting. In relative terms, the behaviors of the hands are too wide-ranged, which makes it difficult to select representative behaviors. Therefore, the behaviors of the hands are described with LMA. Laban movement analysis defines 22 features, and these features are adequate to represent the behaviors of the hands. As a result, these features are recorded in the behavior cognitive map at the corresponding positions. With these features, the similarity of each feature can be calculated between two behaviors. The fuzzy integral is then introduced to integrate all features. Thereafter, the final similarity between two behaviors can be calculated. Consequently, a robot can determine whether a behavior is normal and select the correct response to interact with a person.

5. Conclusions

In this paper we have proposed a behavior cognitive map that can help mobile service robots understand the use of space. Using the proposed behavior cognitive map, robots can recognize normal and abnormal behaviors at specific locations in the environment. To construct the behavior-recognition model, we combined the 2D pose estimator using PAF and GRU-based DHNs to create a behavior-recognition model that can recognize postures of the human body. We then proposed a behavior-identification algorithm to determine whether the behaviors of two hands are identical. Multi-feature fusion, which can dynamically balance the result based on objective predictions and the importance or reliability of the features, was realized through Sugeno fuzzy integral fusion. These behaviors were recorded on the behavior cognitive map directly based on the location of their occurrence. Therefore, the use of space was realized, and any abnormal behavior can be identified by the proposed system. Finally, the aforementioned behavior-recognition system and the behavior cognitive map were implemented on a mobile robot, and behavior-recognition and servicing experiments were conducted. The results of the experiments showed that the robot can easily identify abnormal behavior and provide appropriate responses in human–robot interaction tasks.

The behavior cognitive map is used to model human behaviors. This method complements existing cognitive maps, which do not consider low-level human behaviors. The model lets the robot know what kinds of human behaviors usually occur in a specific space. However, the method does not consider situations in which the surrounding environment changes. For instance, exhibits may be moved to other locations, and the flow of visitors may change. Such situations will invalidate the behavior cognitive map.

Future work will address three aspects. First, objects in the environment will be added to the behavior cognitive map. This may make the cognitive map more complete and provide the ability to identify abnormal behaviors in which humans interact with objects. Furthermore, it may help protect humans from dangerous or inappropriate situations. Second, adaptive functions will be added so that the behavior cognitive map can be adjusted as spatial relationships change. Finally, our approach will be extended to record high-level behaviors. Based on this extension, the robot will be able to recognize more complex human behaviors.