STN-Homography: Direct Estimation of Homography Parameters for Image Pairs

Abstract

1. Introduction

2. Dataset

3. STN-Homography

3.1. Architecture of STN-Homography

3.2. Training and Results

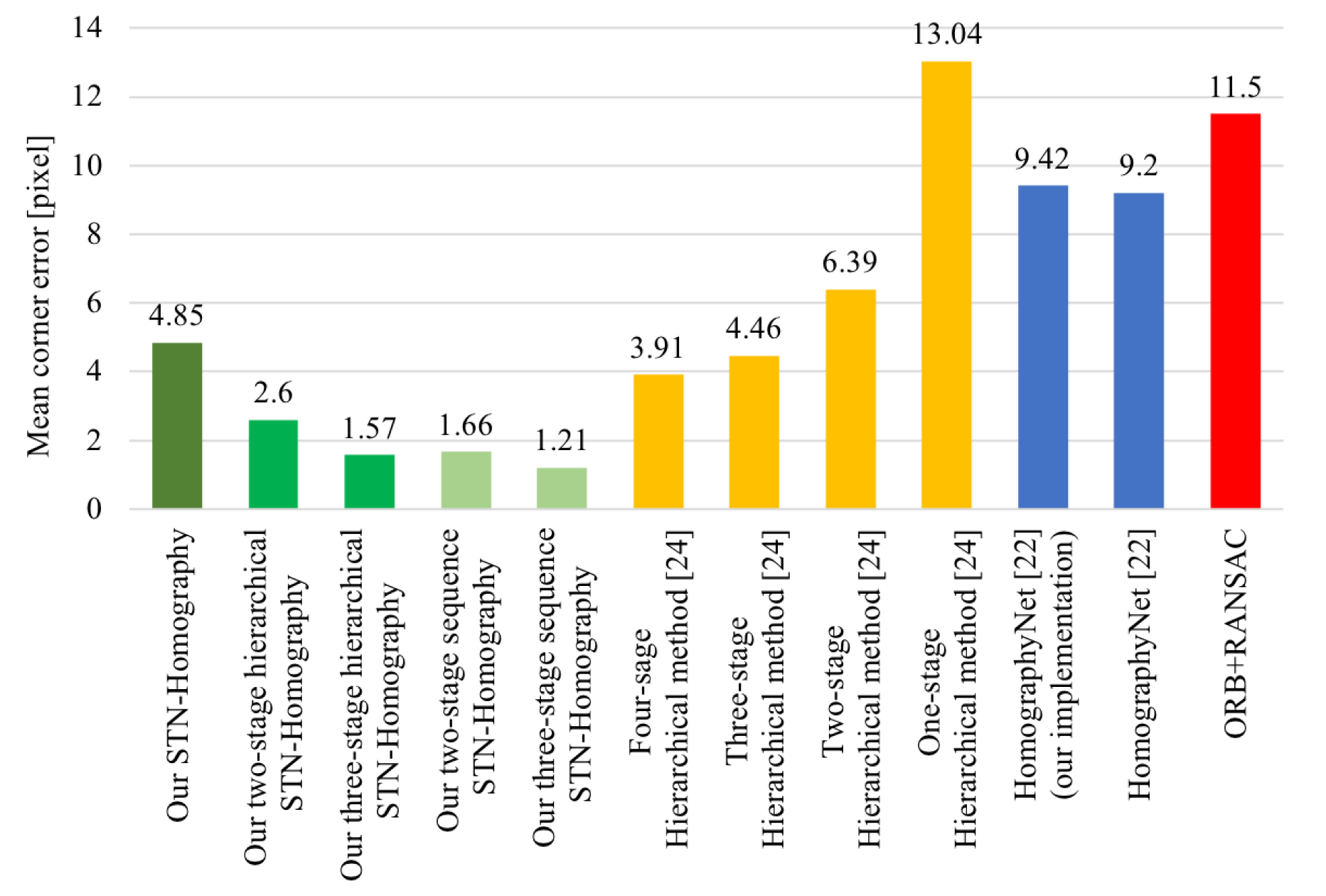

3.3. Comparison with Other Approaches

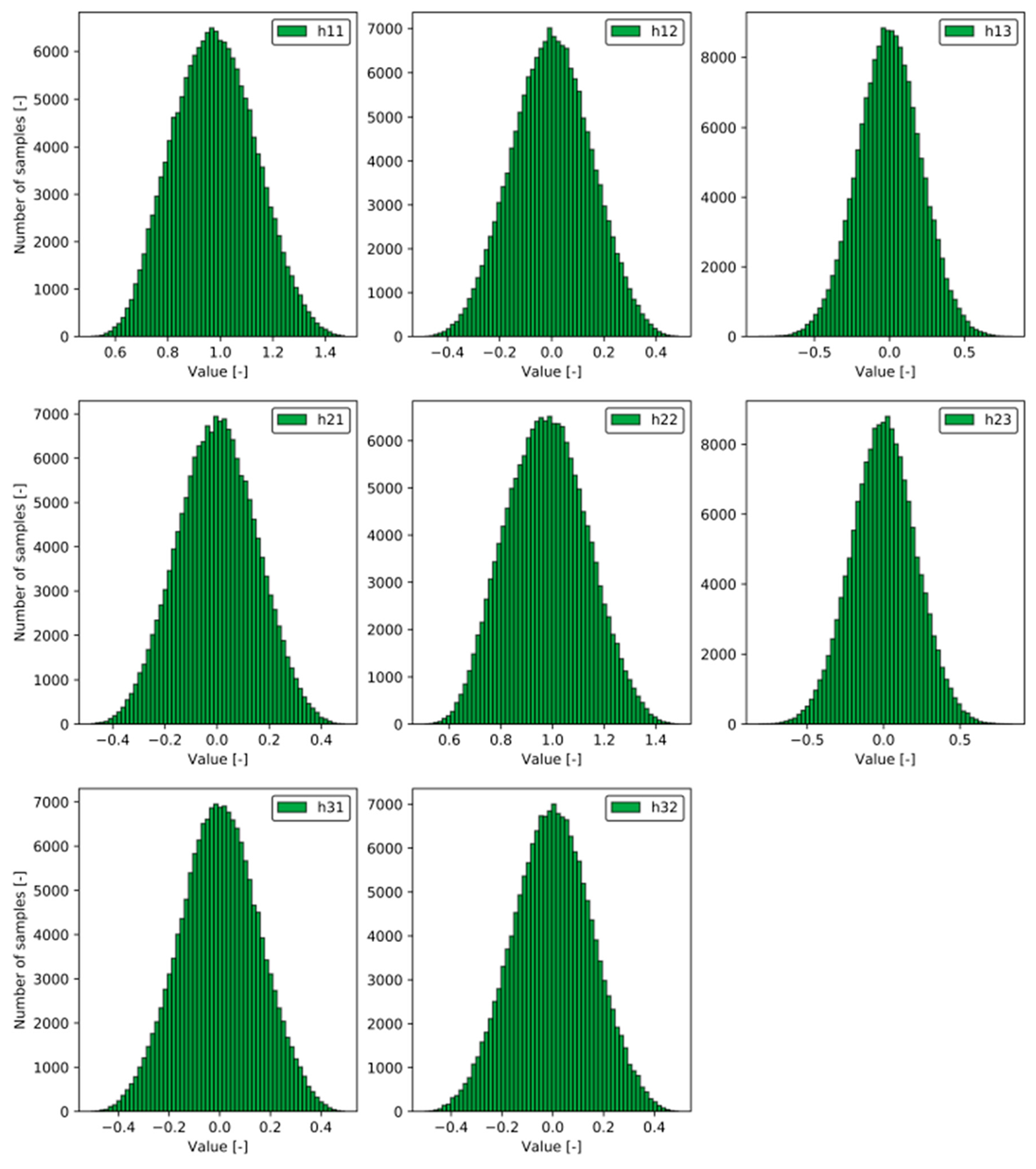

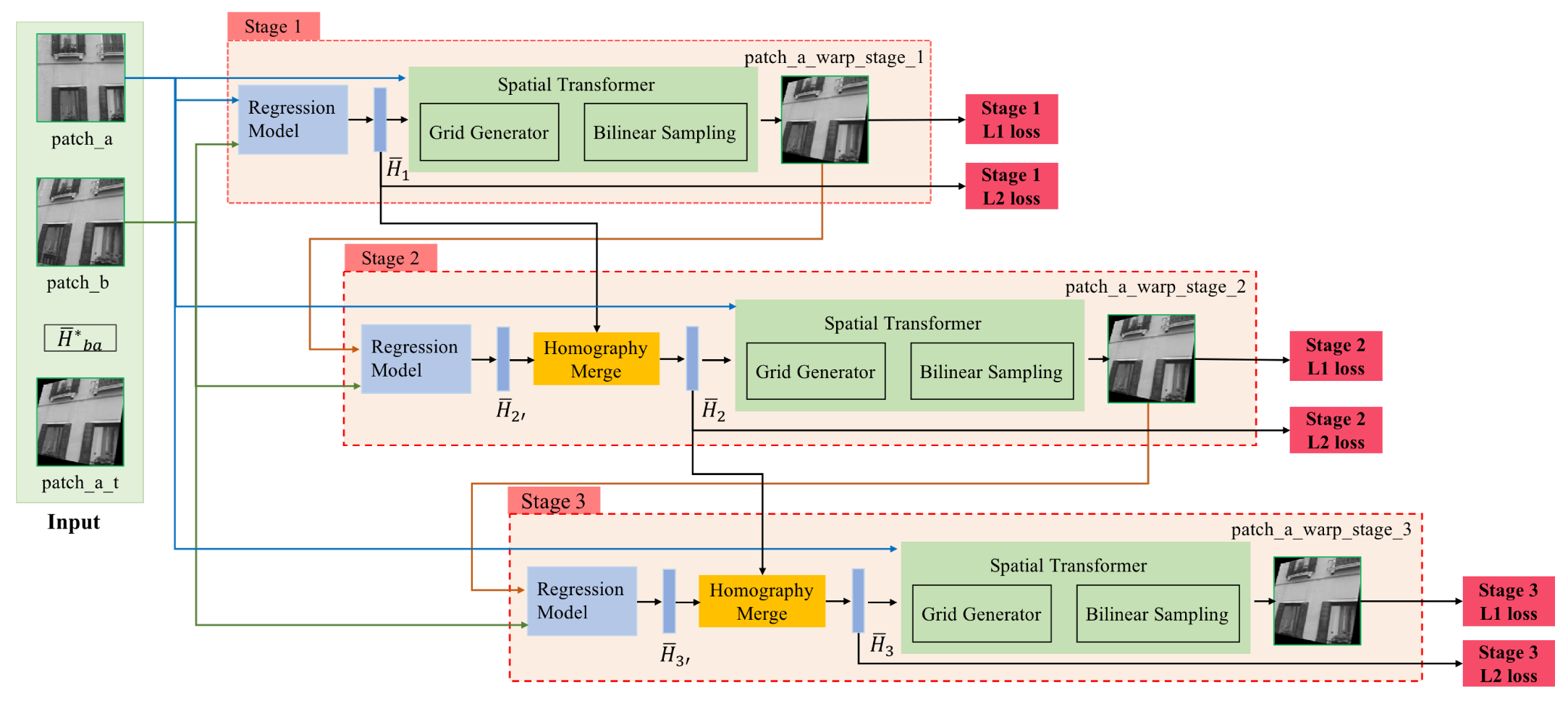

4. Hierarchical STN-Homography

4.1. Architecture of Hierarchical STN-Homography

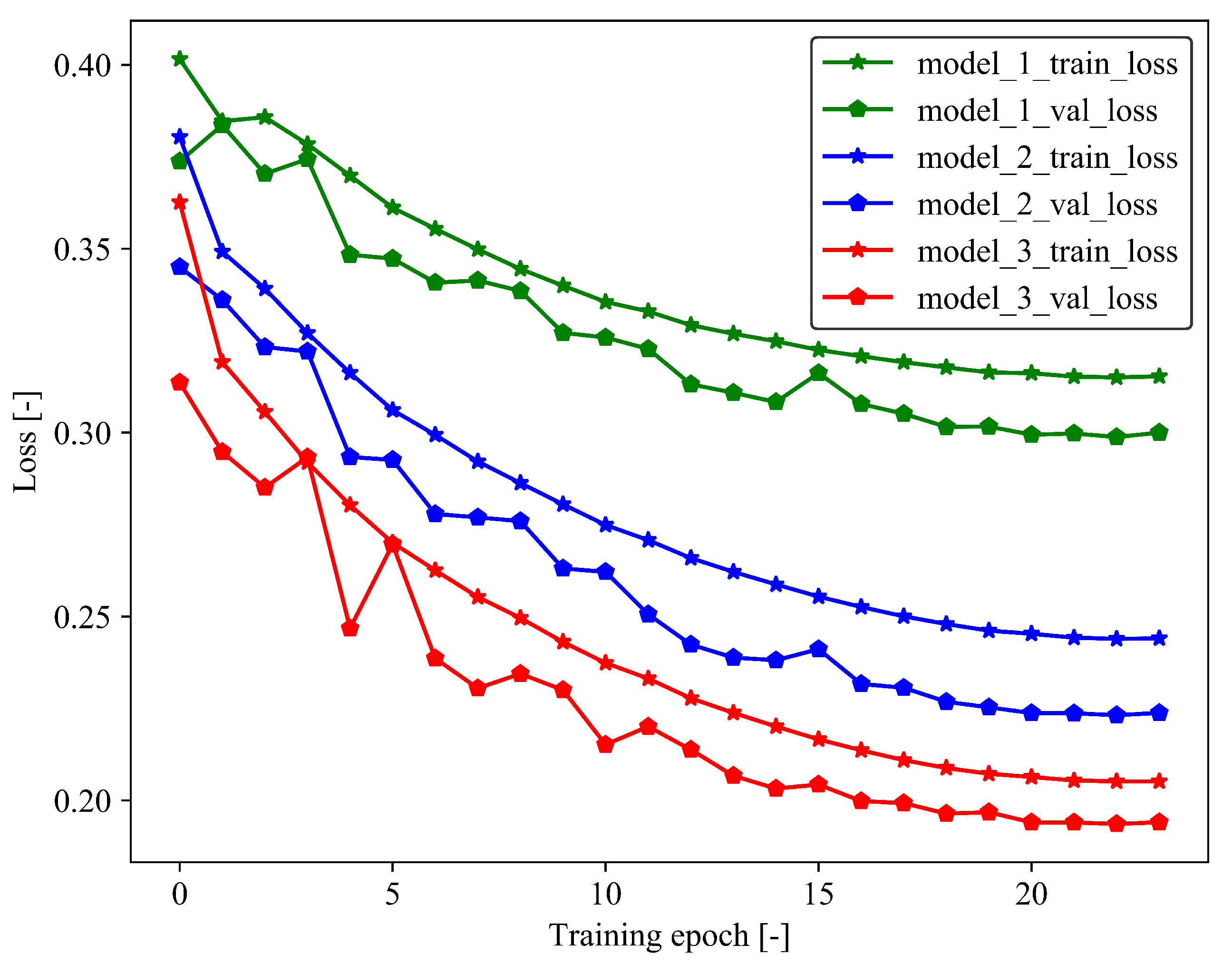

4.2. Training, Results and Comparison with Other Approaches

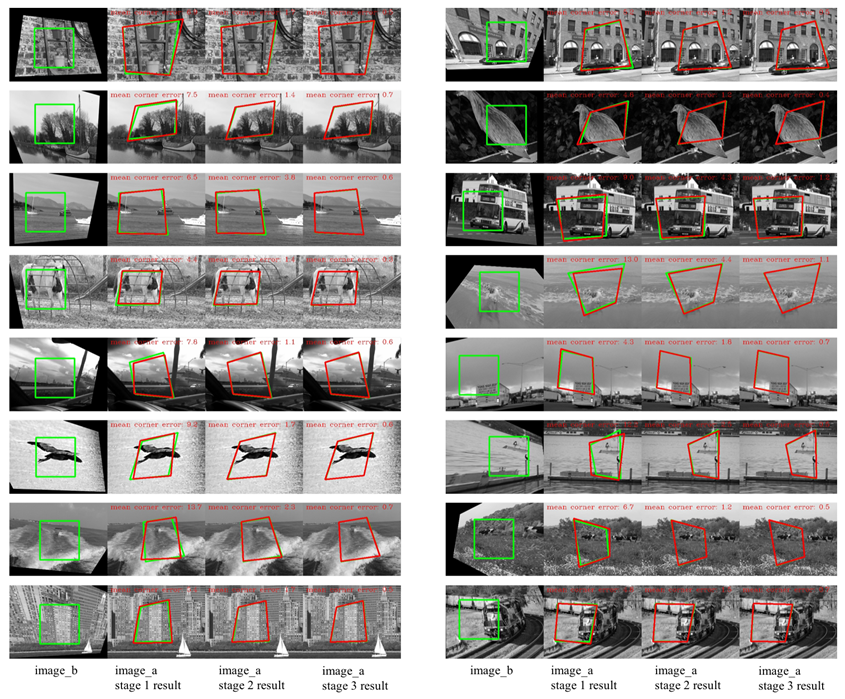

4.3. Time Consumption and Predicted Results

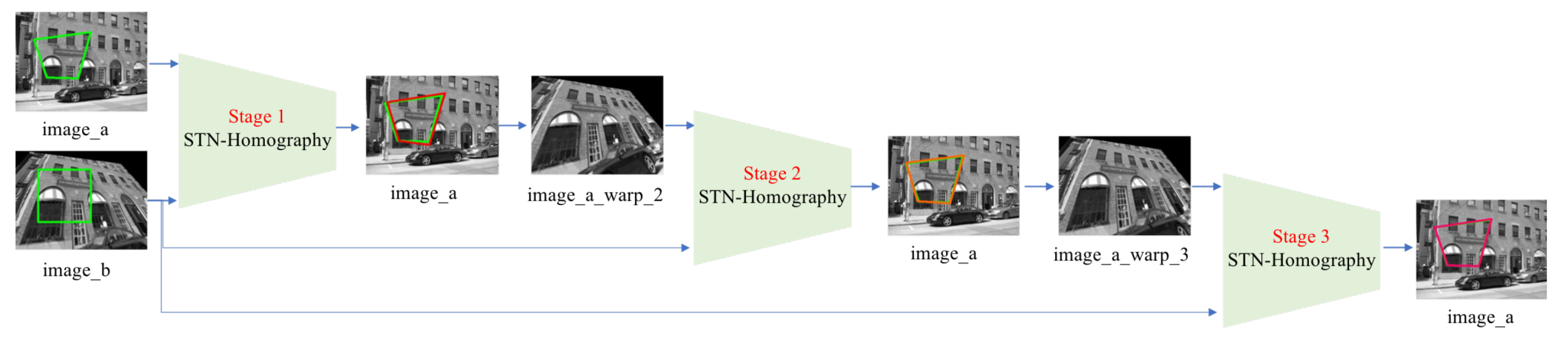

5. Sequence STN-Homography

5.1. Architecture of Sequence STN-Homography

5.2. Training, Results and Comparison with Other Approaches

5.3. Time Consumption and Predicted Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Model Name | L2 Loss Weight | L1 Loss Weight | Mean Corner Error [Pixel] |
|---|---|---|---|
| STN-Homography | 1.0 | 1.0 | 5.83 |
| STN-Homography | 1.0 | 10.0 | 21.86 |
| STN-Homography | 1.0 | 0.1 | 6.21 |
| STN-Homography | 10.0 | 1.0 | 4.85 |
| STN-Homography | 0.1 | 1.0 | 6.24 |
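
The table above reports results as a mean corner error in pixels, but this section does not define the metric. The following is a minimal sketch under the assumption that it follows the common definition: the average Euclidean distance between the four image corners warped by the ground-truth homography and the same corners warped by the predicted one. The function names, the 128 × 128 image size, and the example matrices are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import math

def warp_point(H, x, y):
    """Apply a 3x3 homography H (nested lists) to the point (x, y)."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]  # homogeneous scale factor
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v

def mean_corner_error(H_true, H_pred, width, height):
    """Average distance (in pixels) between the four image corners
    warped by H_true and by H_pred. Assumed metric definition."""
    corners = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    total = 0.0
    for x, y in corners:
        tx, ty = warp_point(H_true, x, y)
        px, py = warp_point(H_pred, x, y)
        total += math.hypot(tx - px, ty - py)
    return total / len(corners)

# Hypothetical example: the prediction is off by a pure translation of (3, 4) pixels.
H_true = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0],
          [0.0, 0.0, 1.0]]
H_pred = [[1.0, 0.0, 3.0],
          [0.0, 1.0, 4.0],
          [0.0, 0.0, 1.0]]

error = mean_corner_error(H_true, H_pred, width=128, height=128)
print(error)  # → 5.0
```

Because the error is measured on corner positions rather than on the raw matrix entries, it stays interpretable in pixels regardless of how the homography is scaled.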

| Model Name | Time Consumption on a GPU [ms] |
|---|---|
| One-stage hierarchical STN-Homography | 4.87 |
| Two-stage hierarchical STN-Homography | 11.46 |
| Three-stage hierarchical STN-Homography | 17.85 |

| Model Name | Time Consumption on a GPU [ms] |
|---|---|
| Two-stage Sequence STN-Homography | 9.55 |
| Three-stage Sequence STN-Homography | 13.85 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, Q.; Li, X. STN-Homography: Direct Estimation of Homography Parameters for Image Pairs. Appl. Sci. 2019, 9, 5187. https://doi.org/10.3390/app9235187
