A Comprehensive Feature Comparison Study of Open-Source Container Orchestration Frameworks

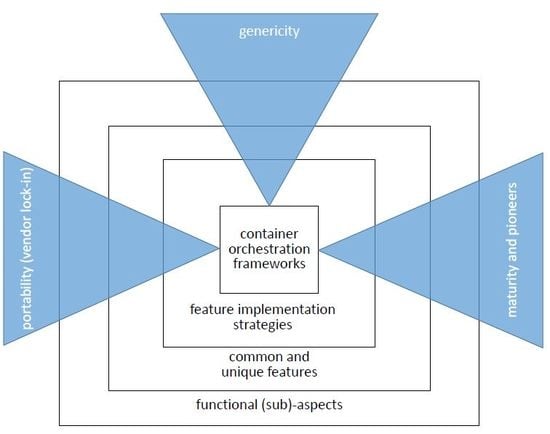

Abstract

Featured Application


1. Introduction

1.1. Research Questions

1.2. Contribution Statement

- A descriptive feature comparison overview of the three most prominent CO frameworks used in cloud-native application engineering: Docker Swarm, Kubernetes and Mesos.

- The study identifies 124 common and 54 unique features across all frameworks and groups them into nine functional aspects and 27 sub-aspects.

- The study compares these features qualitatively and quantitatively concerning genericity, maturity, and stability.

- Furthermore, this study investigates the pioneering nature of each framework by studying the historical evolution of the frameworks on GitHub.

This overview allows readers to:

- compare CO frameworks on a per-feature basis (thereby avoiding comparing apples with oranges),

- quickly grasp what constitutes the overall functionality that is commonly supported by the CO frameworks by inspecting the 9 functional aspects and 27 sub-aspects,

- understand the unique features of each CO framework,

- determine which functional aspects are most generic in terms of common features,

- identify those CO frameworks that support the most common and unique features, and

- determine those CO frameworks that support the most common and unique features for a specific functional (sub)-aspect.

The assessment of maturity and stability further allows readers to:

- identify and understand the impact of relevant standardization efforts,

- compare the maturity of CO frameworks with respect to a specific common feature,

- understand which features have a higher risk of being halted or deprecated, and

- determine which (sub)-aspects can be considered mature and well understood and therefore form the stable foundation of the technological domain. This in turn guides academic researchers and entrepreneurs toward investing their time and energy in innovative functional or non-functional aspects that are not yet well supported.

1.3. Structure of the Article

2. Related Work

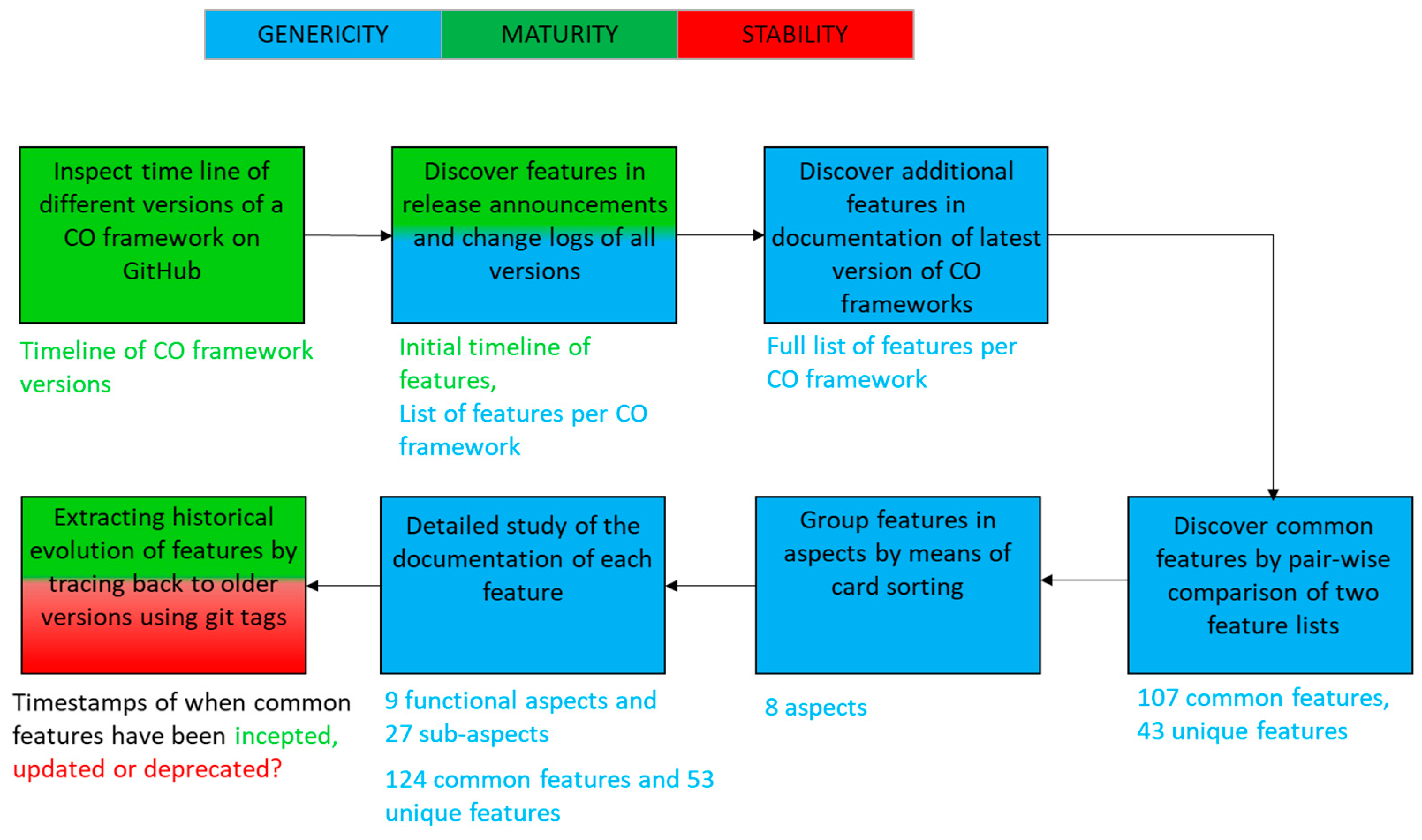

3. Research Method

3.1. Qualitative Assessment of Genericity

3.1.1. Identifying Features in Documentation of CO Frameworks

3.1.2. Discovering Common and Unique Features

3.1.3. Organizing Features in Functional Aspects and Sub-Aspects

3.2. Quantitative Analysis with Respect to Genericity

3.3. Study of Maturity

3.4. Assessment of Stability

3.5. Involvement and Feedback from Industry

3.6. Dealing with Continuous Evolution of CO Frameworks during the Research

4. Genericity: Qualitative Assessment of Common Features

1. Kubernetes [61] supports deploying and managing both service- and job-oriented workloads as sets of containers.

2. Docker Swarm stand-alone [62] manages a set of nodes as a single virtual host that serves the standard Docker Engine API. Any tool that already communicates with a Docker daemon can thus use this framework to transparently scale to multiple nodes. This framework is minimal but also the most flexible, because almost the entire API of the Docker daemon is available. As such, it is mostly relevant for platform developers who want to build a custom framework on top of Docker.

3. The newer Docker Swarm integrated mode [63] departs from the stand-alone model by re-positioning Docker as a complete container management platform that consists of several architectural components, one of which is Docker Swarm.

4. Apache Mesos [41,64] supports fine-grained allocation of the resources of a cluster of physical or virtual machines to multiple higher-level scheduler frameworks. Such higher-level scheduler frameworks include not only container orchestration frameworks but also more traditional, non-containerized job schedulers such as Hadoop.

5. Aurora [65], initially developed by Twitter, supports deploying long-running jobs and services. These workloads can optionally be started inside containers.

6. Marathon [66] supports deploying groups of applications together and managing their mutual dependencies. Applications can optionally be composed and managed as a set of containers.

7. DC/OS [67] is an easy-to-install distribution of Mesos and Marathon that extends both with additional features.

- Application managers develop, deploy, configure, control or monitor an application that runs in a container cluster. An application manager can be an application developer, application architect, release architect or site reliability engineer.

- Cluster administrators install, configure, control and monitor container clusters. A cluster administrator can be a framework administrator, a site reliability engineer, an application manager who manages a dedicated container cluster for his or her application, or a framework developer who customizes the CO framework implementation to fit the requirements of his or her project.

4.1. Cluster Architecture and Setup

- To deal with the differences between frameworks (e.g., some frameworks execute applications in containers, while other frameworks do not), Mesos uses the generic concept of Task for launching both containerized and non-containerized processes.

- Mesos has a two-level scheduler architecture, i.e., the Mesos master and multiple framework schedulers. The Mesos master offers free resources to a particular framework based on the Dominant Resource Fairness [76] algorithm. The selected framework can then accept or reject the offer, for example based on data locality constraints [41]. To accept an offer, the framework must explicitly reserve the offered resources [75]. Once a subset of resources is reserved by a framework, the scheduler of that framework can schedule tasks on these resources by sending the tasks to the Mesos master [77]. The Mesos master continues to offer the reserved resources to the framework that performed the reservation; this gives the framework the opportunity to respond by unreserving [78] the resources.

- Since task life cycle management is distributed across the Mesos master and the framework scheduler, task state needs to be kept synchronized. Mesos supports at-most-once [79], unreliable message delivery between the Mesos master and the frameworks. Therefore, when a framework's scheduler has asked the master to start a task but does not receive an update from the Mesos master, the framework scheduler needs to perform task reconciliation [80].
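The Dominant Resource Fairness policy [76] used by the Mesos master to decide which framework receives the next offer can be illustrated with a short simulation. The sketch below is a simplified progressive-filling loop, not the Mesos allocator: `Framework` and `drf_allocate` are hypothetical names, every framework is assumed to launch identical tasks, and every offer is assumed to be accepted.

```python
from dataclasses import dataclass, field


@dataclass
class Framework:
    """A hypothetical framework that launches identical tasks."""
    name: str
    demand: dict[str, float]  # resources needed per task
    allocated: dict[str, float] = field(default_factory=dict)
    tasks: int = 0


def dominant_share(fw: Framework, total: dict[str, float]) -> float:
    # The dominant share is the largest fraction of any single resource
    # type that the framework currently holds.
    return max(fw.allocated.get(r, 0.0) / total[r] for r in total)


def drf_allocate(frameworks: list[Framework], total: dict[str, float]) -> dict[str, float]:
    """Repeatedly grant one task's worth of resources to the framework with the
    lowest dominant share, until no framework's next task fits.

    Assumes every task needs a positive amount of at least one resource;
    otherwise the loop would never end.
    """
    used = {r: 0.0 for r in total}
    active = list(frameworks)
    while active:
        fw = min(active, key=lambda f: dominant_share(f, total))
        fits = all(used[r] + fw.demand.get(r, 0.0) <= total[r] for r in total)
        if not fits:
            active.remove(fw)  # this framework cannot receive another task
            continue
        for r in total:
            amount = fw.demand.get(r, 0.0)
            used[r] += amount
            fw.allocated[r] = fw.allocated.get(r, 0.0) + amount
        fw.tasks += 1
    return used
```

For example, with 9 CPUs and 18 GB of memory, a framework whose tasks need 1 CPU and 4 GB and a framework whose tasks need 3 CPUs and 1 GB end up with three and two tasks respectively, both at a dominant share of 2/3 (memory for the first, CPU for the second).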

- Methods that install the CO software itself as a set of Docker containers.

- Methods that use VM images with the CO software installed for local development.

- Methods that install the CO software from a traditional Linux package.

- Methods that use configuration management tools such as Puppet or Chef.

- Cloud-provider-owned tools and APIs.

- Cloud-provider-independent orchestration tools that come with specific deployment bundles for installing a container cluster on one or multiple public cloud providers.

- Container orchestration-as-a-Service platforms.

- Setup tools for Microsoft Windows or Windows Server.

4.2. CO framework Customization

4.3. Container Networking

- Whether the load balancer (LB) is automatically distributed on every node of the cluster or centrally installed on a few nodes by the cluster administrator. In the latter case, a request sent to a service port requires multi-hop network routing to reach an instance of the LB.

- Whether the LB supports Layer 4 (i.e., TCP/UDP) or Layer 7 (e.g., HTTP/HTTPS) load balancing. Layer 7 load balancing allows implementing application-specific load-balancing policies.

- Whether the Layer 4 LB implementation is based on the IPVS load-balancing module of the Linux kernel. The IPVS module is known as a high-performance load balancer implementation [113].

- Whether containers can run in bridged or in virtual network mode. In bridged mode, containers can only be accessed via a host port of the local node; the host port is mapped to the container port via a local virtual bridge. In virtual network mode, containers can directly serve remote network connections.
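The difference between Layer 4 and Layer 7 load balancing can be made concrete with a toy model. In the Python sketch below, the class names, backend addresses and path prefixes are hypothetical: the Layer 4 balancer only cycles through backends without looking at the payload, whereas the Layer 7 balancer inspects the HTTP request path and applies an application-specific routing policy. It does not model IPVS or the load balancer of any particular CO framework.

```python
import itertools
from typing import Iterator


class L4Balancer:
    """Round-robin over backends; it sees the connection, not its payload."""

    def __init__(self, backends: list[str]) -> None:
        self._cycle: Iterator[str] = itertools.cycle(backends)

    def pick(self, client_addr: str) -> str:
        # A Layer 4 balancer may use addresses and ports, but not HTTP content.
        return next(self._cycle)


class L7Balancer:
    """Routes HTTP requests by path prefix, then round-robins within a pool."""

    def __init__(self, routes: dict[str, list[str]], default: list[str]) -> None:
        # Longest prefix first, so that "/api/v2" would win over "/api".
        self._routes = sorted(
            ((prefix, L4Balancer(pool)) for prefix, pool in routes.items()),
            key=lambda item: len(item[0]),
            reverse=True,
        )
        self._default = L4Balancer(default)

    def pick(self, client_addr: str, path: str) -> str:
        for prefix, pool in self._routes:
            if path.startswith(prefix):
                return pool.pick(client_addr)
        return self._default.pick(client_addr)
```

The per-path pools are where an application-specific policy lives; a Layer 4 balancer has no access to the request path and therefore cannot express such a rule.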

- In Docker Swarm integrated mode [126], host ports are centrally managed at the service level, such that a request to create a new service with an already allocated host port is rejected a priori.

- In Kubernetes [127], the default scheduler policy (see Section 4.1) ensures that containers are automatically scheduled on nodes where the requested host port is still available.
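The two host port conflict management strategies differ mainly in when a conflict is detected: at service creation time or at scheduling time. The Python sketch below contrasts them under stated assumptions: `Node`, `ServiceLevelPortRegistry` and `schedule_with_port` are hypothetical names, and the first-fit node choice is a simplification (the Kubernetes scheduler additionally scores the feasible nodes).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    used_ports: set[int] = field(default_factory=set)


class ServiceLevelPortRegistry:
    """Swarm-style: host ports are reserved cluster-wide per service."""

    def __init__(self) -> None:
        self._owner: dict[int, str] = {}

    def create_service(self, name: str, host_port: int) -> None:
        owner = self._owner.get(host_port)
        if owner is not None:
            # Rejected up front, before any container is scheduled.
            raise ValueError(f"host port {host_port} already allocated to {owner}")
        self._owner[host_port] = name


def schedule_with_port(nodes: list[Node], host_port: int) -> Optional[Node]:
    """Kubernetes-style: skip nodes on which the host port is already taken."""
    for node in nodes:
        if host_port not in node.used_ports:
            node.used_ports.add(host_port)
            return node
    return None  # no feasible node; the container stays pending
```

In the first strategy a second service asking for the same port fails immediately; in the second, containers requesting the same port simply spread over different nodes until no node has the port free.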

4.4. Application Configuration and Deployment

- Support for the Docker volume plugin architecture. The Docker Engine plugin framework [171], which offers a unified interface between the container runtime and various volume plugins, is adopted by Mesos [172], Marathon [173] and DC/OS [174] in order to support external persistent volumes. In Mesos-based frameworks, Docker volume plugins must be integrated via a separate Mesos module, named dvdi [175], which requires writing plugin-specific glue code. As a result, only a limited number of Docker volume plugins are currently supported in Mesos.

- Support for the Container Storage Interface (CSI) specification. The Container Storage Interface specification [176] aims to provide a common interface for volume plugins, so that each volume plugin needs to be written only once and can be used in any container orchestration framework. The specification also supports run-time installation of volume plugins. Typically, CSI can be implemented in any CO framework as a normal volume plugin that is itself capable of interacting with multiple external CSI-based volume plugins. Currently, CSI has been adopted by Kubernetes [177], Mesos [178] and DC/OS [179].
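To illustrate why a common plugin interface lets a storage vendor write a plugin only once, the sketch below defines a tiny abstract interface whose two methods are loosely named after the CSI `CreateVolume` and `NodePublishVolume` calls. It is a hypothetical, in-process simplification: real CSI plugins are gRPC servers with many more calls and parameters, and `VolumePlugin`, `InMemoryPlugin` and `provision_for_container` are illustrative names only.

```python
from abc import ABC, abstractmethod


class VolumePlugin(ABC):
    """Hypothetical, heavily simplified interface loosely modeled on CSI."""

    @abstractmethod
    def create_volume(self, name: str, capacity_bytes: int) -> str: ...

    @abstractmethod
    def node_publish_volume(self, volume_id: str, target_path: str) -> None: ...


class InMemoryPlugin(VolumePlugin):
    """A fake storage backend, written once against the interface."""

    def __init__(self) -> None:
        self.volumes: dict[str, int] = {}  # volume id -> capacity in bytes
        self.mounts: dict[str, str] = {}   # target path -> volume id

    def create_volume(self, name: str, capacity_bytes: int) -> str:
        volume_id = f"vol-{name}"
        self.volumes[volume_id] = capacity_bytes
        return volume_id

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        if volume_id not in self.volumes:
            raise KeyError(volume_id)
        self.mounts[target_path] = volume_id


def provision_for_container(plugin: VolumePlugin, name: str, size: int, path: str) -> str:
    """Orchestrator-side glue that works with any plugin implementing the interface."""
    volume_id = plugin.create_volume(name, size)
    plugin.node_publish_volume(volume_id, path)
    return volume_id
```

Because the orchestrator only depends on `VolumePlugin`, swapping in another storage backend requires no orchestrator-specific glue code, which is the contrast with the dvdi approach described above.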

4.5. Resource Quota Management

- Apache Aurora allows attaching memory and disk quota to user groups [205] via the aurora_admin set_quota command.
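Enforcing such a quota essentially means comparing a group's current consumption plus a new request against the group's limits. The sketch below illustrates that check under assumptions: the function name, the resource keys and the rule that a job is rejected as soon as any single resource would exceed its quota are hypothetical, and this is not Aurora's scheduler code.

```python
def quota_violations(quota: dict[str, float],
                     consumed: dict[str, float],
                     request: dict[str, float]) -> list[str]:
    """Return the resources for which a new job would exceed the group's quota.

    An empty list means the job can be admitted.
    """
    return sorted(
        resource
        for resource, limit in quota.items()
        if consumed.get(resource, 0.0) + request.get(resource, 0.0) > limit
    )
```

For a group with a quota of 64 GB of memory and 500 GB of disk that already consumes 48 GB and 100 GB, a job requesting 8 GB of memory and 450 GB of disk would be rejected because of disk only.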

4.6. Container QoS Management

- Resource allocation models have been developed that support QoS differentiation between containers while also allowing for over-subscription of resources to improve server consolidation.

- CO frameworks offer various mechanisms to application managers for controlling scheduling decisions that influence the performance of the application. These decisions include the placement of inter-dependent containers and data, and prioritization of containers during resource contention.
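Kubernetes is one concrete example of QoS differentiation combined with over-subscription: each Pod is placed in one of three QoS classes based on the CPU and memory requests and limits of its containers, and Pods that are not Guaranteed may use idle capacity beyond their requests. The function below is a simplified sketch of that classification rule; `Container` and `qos_class` are hypothetical names, and resource quantities are compared as plain strings, so "500m" and "0.5" would wrongly be treated as different.

```python
from dataclasses import dataclass, field

# Only CPU and memory determine the QoS class in this simplified model.
RESOURCES = ("cpu", "memory")


@dataclass
class Container:
    requests: dict[str, str] = field(default_factory=dict)
    limits: dict[str, str] = field(default_factory=dict)


def qos_class(containers: list[Container]) -> str:
    """Classify a Pod as Guaranteed, Burstable or BestEffort (simplified)."""
    if not any(r in c.requests or r in c.limits
               for c in containers for r in RESOURCES):
        return "BestEffort"
    for c in containers:
        for r in RESOURCES:
            limit = c.limits.get(r)
            # A missing request defaults to the limit.
            request = c.requests.get(r, limit)
            if limit is None or request != limit:
                return "Burstable"
    return "Guaranteed"
```

Kubernetes also takes these classes into account, among other signals, when it has to reclaim resources from a node under pressure.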

4.7. Securing Clusters

4.8. Securing Containers

4.9. Application and Cluster Management

5. Genericity: Qualitative Assessment of Unique Features

5.1. Kubernetes

5.2. Mesos-Based Frameworks

- Mesos is better suited for managing datacenter-scale deployments, given the inherently better scalability of its two-level scheduler architecture with multiple distributed scheduler frameworks [41].

- Mesos offers a resource provider abstraction [372] for customizing Mesos worker agents to framework-specific needs.

- DC/OS offers integrated support for load-balancing Marathon-based services as well as load-balancing of workloads that are managed by other, non-container-based frameworks [373].

- Local volumes can be shared by tasks from different frameworks [374].

- Framework rate limiting [375] aims to ensure performance isolation between frameworks with respect to request rate quota towards the Mesos Master.

- With respect to container QoS management, Mesos offers improved network performance isolation between containers for both routing mesh networking [377] and virtual networks [378]. Unfortunately, few of these features are currently used by the CO frameworks Aurora and Marathon; only container port ranges in routing mesh networks can be isolated in Marathon.
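Framework rate limiting can be pictured as one rate limiter per framework in front of the master. The sketch below assumes a token-bucket limiter keyed by a framework principal; the token bucket is a common technique chosen here for illustration and is not necessarily how the Mesos master implements its limits, and `TokenBucket` and `MasterFrontend` are hypothetical names.

```python
class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: float, now: float = 0.0) -> None:
        self.rate = rate
        self.capacity = burst
        self.tokens = burst
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to the time elapsed since the previous call.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class MasterFrontend:
    """Hypothetical master front end with one bucket per framework principal."""

    def __init__(self, limits: dict[str, tuple[float, float]]) -> None:
        self.buckets = {
            principal: TokenBucket(rate, burst)
            for principal, (rate, burst) in limits.items()
        }

    def handle(self, principal: str, now: float) -> bool:
        bucket = self.buckets.get(principal)
        # In this sketch, frameworks without a configured limit are not throttled.
        return bucket is None or bucket.allow(now)
```

A chatty framework thus exhausts only its own bucket, so the request rate it can impose on the master is bounded independently of the other frameworks.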

5.3. Docker Swarm

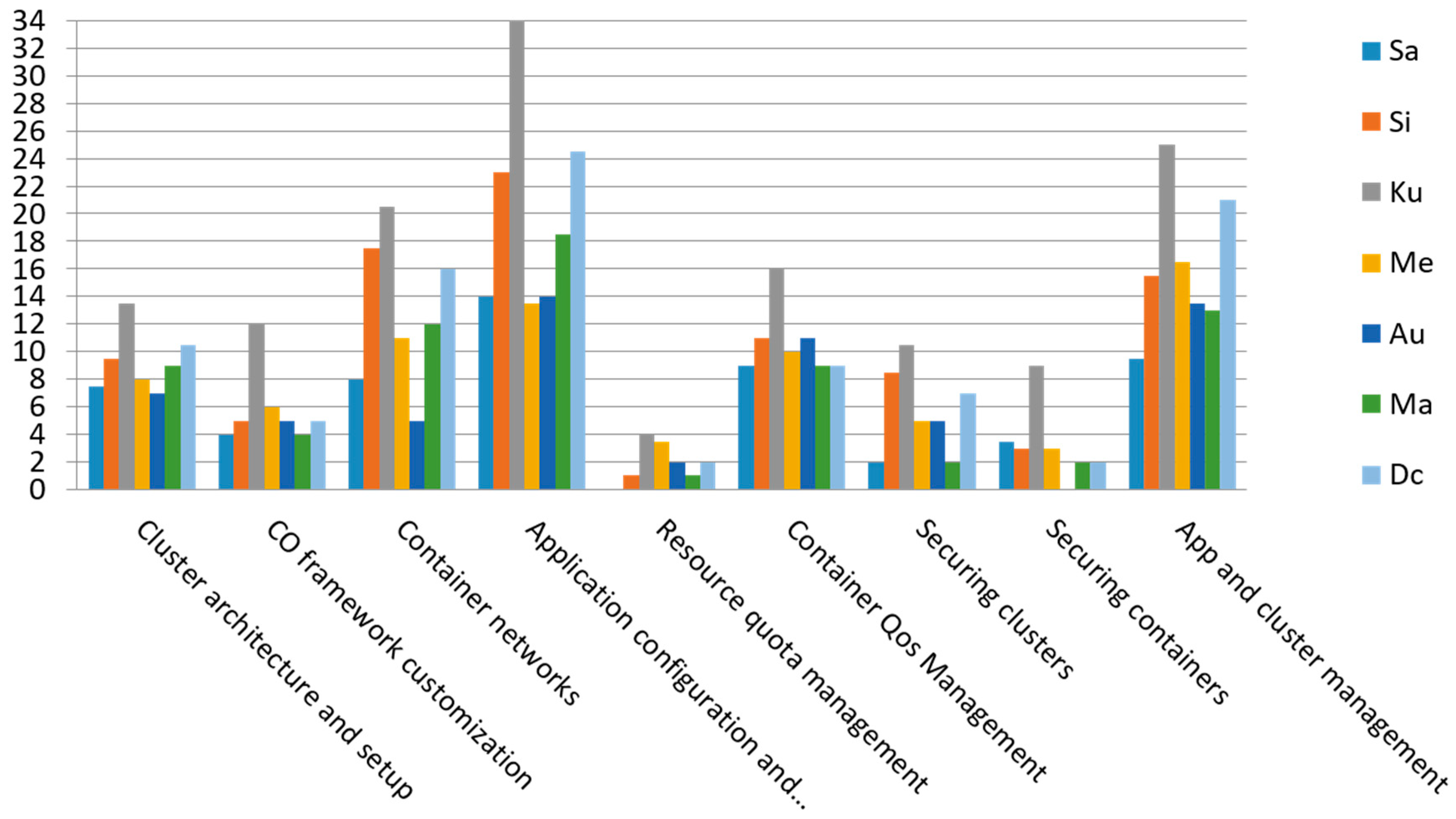

6. Genericity: Quantitative Analysis

- Besides the main functional requirement of persistent storage, various orthogonal orchestration features for management of persistent volumes can be distinguished. Moreover, most of these features are supported by almost all CO frameworks.

- The adoption of the Docker volume plugin architecture by Mesos-based systems as well as the CSI specification by Kubernetes and Mesos has also been recorded as an additional feature.

- At least three alternative approaches to services networking can be distinguished, each supported by multiple CO frameworks, and within each approach one can further distinguish, at a lower nested level, between different load-balancing strategies.

- There are again two standardization initiatives related to this sub-aspect: Docker’s libnetwork architecture and the CNI specification.

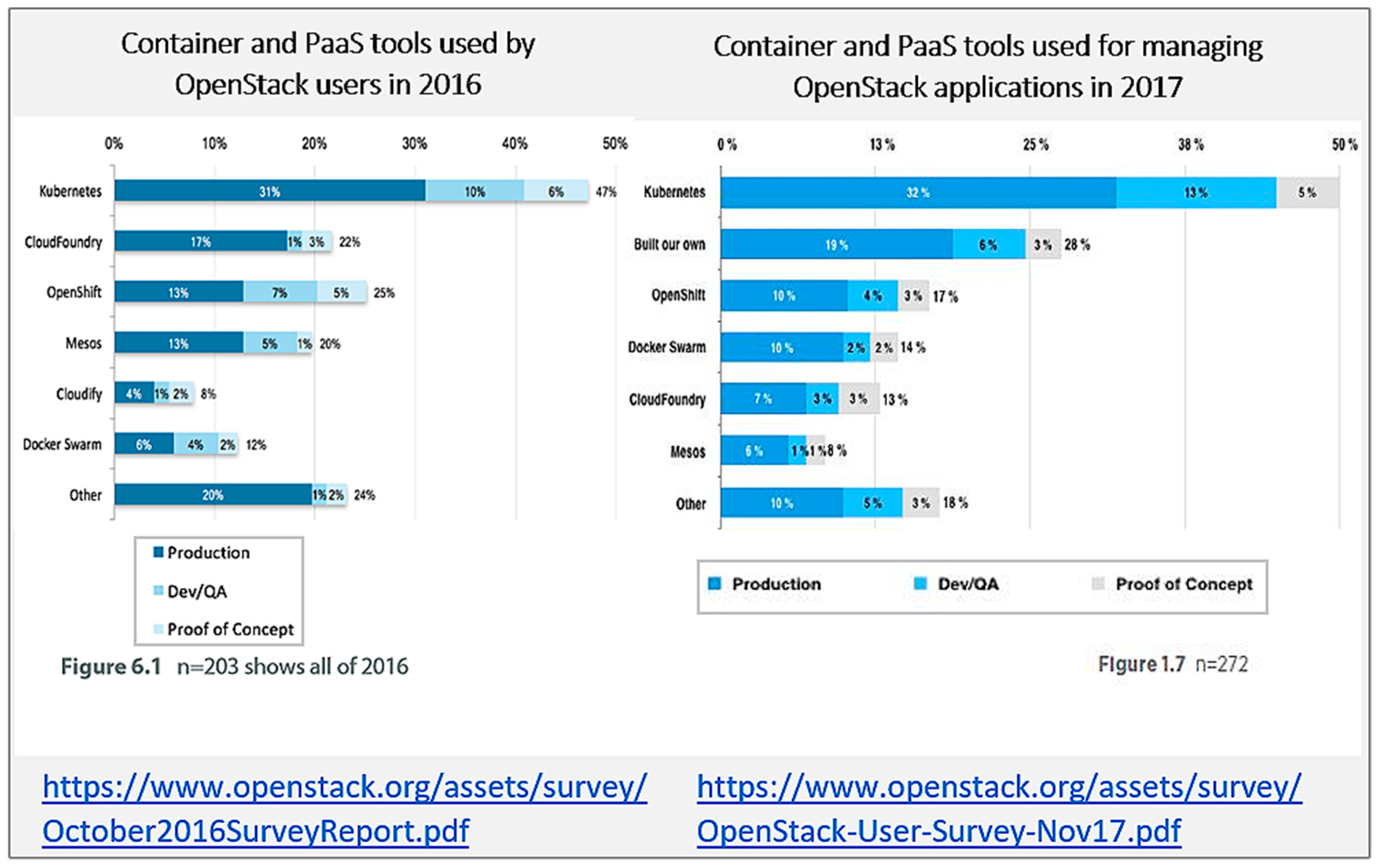

- Docker Swarm stand-alone and Aurora are indeed clearly less generic in terms of offered features than the other CO frameworks. After all, Aurora is specifically designed for running long-running jobs and cron jobs, while Docker Swarm stand-alone is a more simplified framework with substantially less automated management. We only recommend Docker Swarm stand-alone as a possible starting point for developing one's own CO framework. This is a relevant direction because 28% of the users surveyed in the most recent OpenStack survey [4] responded that they have built their own CO framework instead of using an existing one (see also Figure 4). We make this recommendation because the API of Docker Swarm stand-alone is the least restrictive in terms of the range of options offered for common commands such as creating, updating and stopping a container. For example, Docker Swarm stand-alone is the only framework that allows resource limits to be changed dynamically without restarting containers. Such a less restrictive API is a more flexible starting point for implementing a custom CO framework.

- The significant difference between Kubernetes and Mesos can be partially explained by the fact that Mesos by itself is not a complete CO framework; rather, Mesos enables fine-grained sharing of resources across different CO frameworks such as Marathon, Aurora and DC/OS.

- The significant difference between Kubernetes and Marathon can be explained by the fact that very few new features have been added to Marathon since the start of DC/OS. After all, DC/OS is the extended Mesos+Marathon distribution, which also has an enterprise edition.

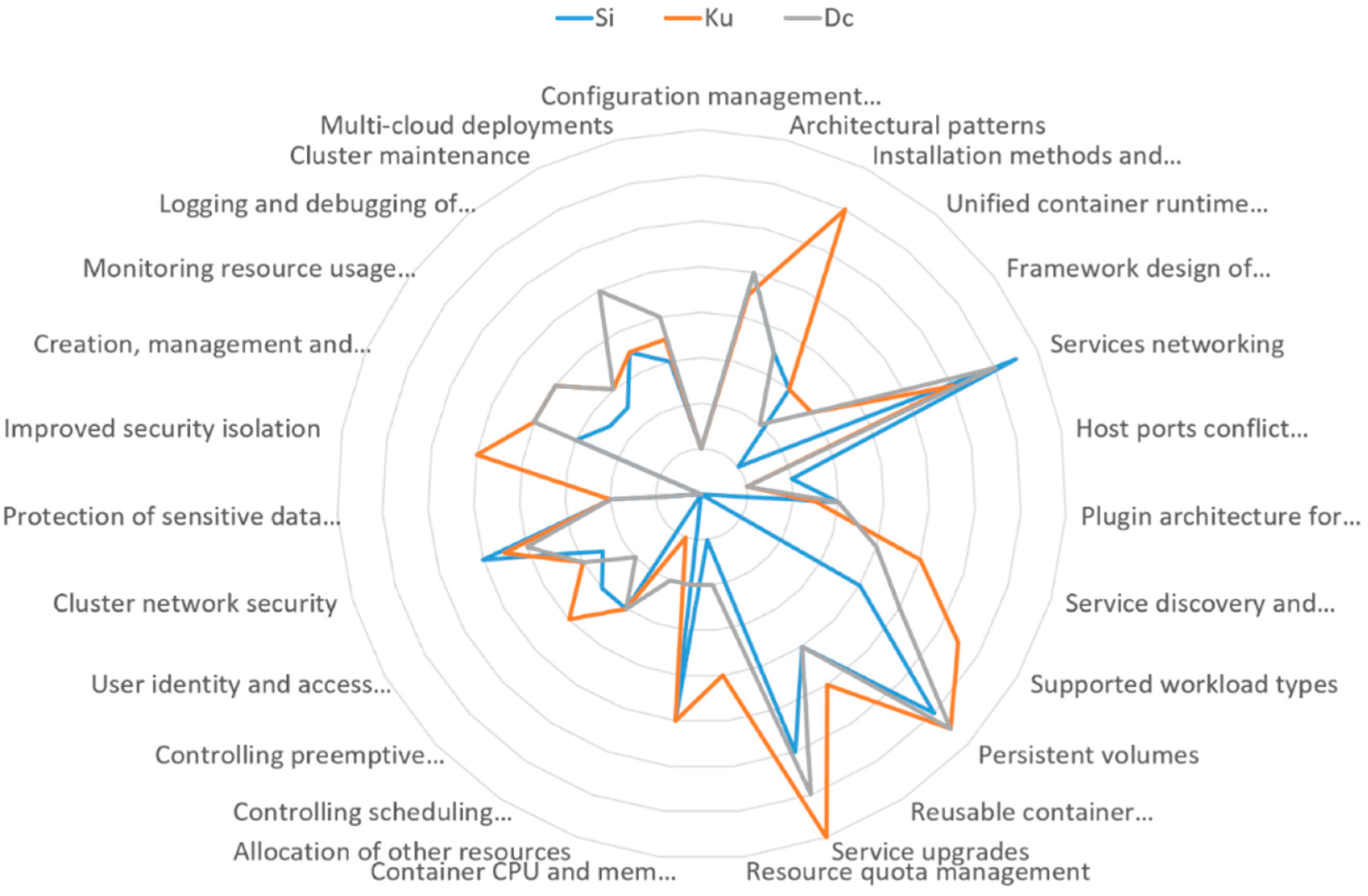

- Kubernetes has the absolute highest number of features for 7 sub-aspects:

- Installation methods and deployment tools

- Service discovery and external access

- Supported workloads

- Reusable container configuration

- Service upgrades

- Resource quota management

- Improved security isolation

For all 7 sub-aspects, the open-source distribution of Kubernetes supports all common features of these sub-aspects. As such, Kubernetes is very generic with respect to these sub-aspects.

- Docker Swarm integrated mode has the most features for 3 sub-aspects:

- Services networking

- Host ports conflict management

- Cluster network security

For the first two sub-aspects, Docker Swarm integrated mode offers support for all common features, while for the last sub-aspect, the open-source distribution of Docker Swarm integrated mode offers support for all common features except authorization of CO agents on worker nodes.

- DC/OS has the most features for 2 sub-aspects:

- Cluster maintenance

- Multi-cloud deployments

For the first sub-aspect, DC/OS offers support for all common features of this sub-aspect by building upon Mesos and Marathon and providing detailed manual instructions for upgrading DC/OS. For the second sub-aspect, DC/OS offers support for all common features except recovery from network partitions.

- Mesos has the most features for 1 sub-aspect:

- Persistent volumes

7. Assessment of Maturity and Stability

- The sub-aspect “monitoring resource usage and health” is still in flux as Kubernetes’ monitoring service (Heapster) has recently been completely replaced by two new monitoring services.

- Host port conflict management is expected to evolve due to the growing importance of supporting service networking in true host mode.

- Improved security isolation support by Kubernetes has not been substantially adopted by other orchestration frameworks; instead, security isolation is becoming a customizable property of container runtimes themselves.

- Logging support has remained very basic in all frameworks. Instead, many third-party companies offer commercial solutions for centralized log management.

- The network plugin architecture of Kubernetes has remained in alpha-stage for a very long time and is therefore expected to evolve. Docker’s network plugin architecture is also expected to evolve because Docker EE supports Kubernetes as an alternative orchestrator.

- Inspection of cluster applications is expected to evolve towards a fully reflective interface so that it becomes possible to support application-specific instrumentation of different types of container orchestration functionality (see Section 8.3).

- Cluster maintenance, especially cluster upgrades, remains poorly automated.

- If the performance overhead of StatefulSets for running database clusters cannot be resolved, DC/OS' approach of offering a user-friendly software development kit for generating custom scheduler frameworks for specific databases may be the better option.

- Horizontal and vertical Pod autoscalers are not suited to meeting SLOs for complex stateful applications such as databases. The generic design of these autoscalers will need to be sacrificed so that application managers can develop custom auto-scalers for particular workloads. As such, there is a substantial chance that the generic autoscaler will be replaced by different types of auto-scalers (see also Section 8.3).

- The development of the federation API for managing multiple Kubernetes clusters across cloud availability zones has been halted; instead, a new API is being planned [384]. Most likely the federation API will be replaced by a simplified API where some existing federated instantiations of Kubernetes API objects such as federated namespaces will be deprecated.
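The generic design of the Horizontal Pod Autoscaler criticized above rests on a single proportional rule that the Kubernetes documentation describes as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that core rule; the function name is hypothetical, and minimum/maximum replica bounds, stabilization windows and multi-metric handling are deliberately omitted.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core proportional scaling rule of the Kubernetes Horizontal Pod Autoscaler."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)
```

For instance, 3 replicas at 90% average CPU utilization with a 60% target yield ceil(4.5) = 5 replicas. The rule assumes that load spreads evenly over interchangeable replicas, which is precisely what does not hold for many stateful workloads such as databases.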

8. A Look-Ahead, Missing Functionality and Research Challenges

8.1. Further Evolutions in the Short Term

- As stated above, Kubernetes is the only framework that offers rich support for container security isolation. Mesos and DC/OS offer very limited support, and Docker EE uses a different approach, so security isolation policies in Kubernetes are not easy to migrate to Docker. It is expected, however, that existing research [385] on how container security guarantees can be enforced using trusted computing architectures such as Intel SGX will influence the overall container security approach of CO frameworks.

- A weakness of Kubernetes is its limited support for performance isolation of GPU and disk resources and its lack of support for network isolation. Improved support for persistent volumes as part of the Container Storage Interface (CSI) specification effort has been the main focus of the most recent releases of Kubernetes. Network isolation features for Kubernetes have also been the subject of recent research [386,387]. These features are therefore expected to improve considerably in the near future.

- Finally, network plugin architectures themselves will change considerably due to recent research in the area of network function virtualization (NFV). Better support for high-performance networking without sacrificing automated management is also a main focus of current systems research [388]. It is expected that these innovations will trigger improvements in virtual networking architectures for containers as well.

8.2. Missing Sub-Aspects

8.2.1. Monitoring Dynamic Cloud Federations

8.2.2. Support for Application-Level Multi-Tenancy

8.3. Research Challenges

8.3.1. Accurate Estimation of Compute Resources

8.3.2. Performance-Driven Instrumentations of CO Framework Functionality

8.3.3. Elastic SLO Management

9. Threats to Validity and Limitations of the Study

- Selection bias, i.e., the choice of which CO frameworks to study, and the selection of features and the overarching (sub)-aspects, may be subjective. Thus, we may have missed features or interpreted feature implementation strategies inappropriately.

- Experimenter bias, i.e., unconscious preferences for certain CO frameworks that influence interpretation of documentation; e.g., whether a feature is partially or fully supported by a framework.

9.1. Selection Bias

9.2. Experimenter Bias

9.3. Limitations of the Study

- We have only studied the documentation of CO frameworks, not the actual code. We have not used any automated methods for mining features or aspects from code. As such, features that can only be extracted from code are not covered in this study.

- Any claims about performance or scalability of a certain CO framework’s feature implementation strategy are based on actual performance evaluation of Kubernetes and Docker Swarm integrated mode in the context of the aforementioned publications [49,50]. However, projections of these claims towards performance and scalability of similar feature implementation strategies in Mesos-based frameworks are speculative.

- The study does not provide findings about the robustness of the CO frameworks, such as the ratio of bugs per line of code or the number of bug reports per user.

10. Lessons Learned

10.1. Genericity

- The ratio of common features over unique features is relatively large and most common features are supported by at least 50% of the CO frameworks. Such a high ratio of common features allows for direct comparison of the CO frameworks with respect to non-functional requirements such as scalability and performance of feature implementation strategies.

- Features in the sub-aspects “improved security isolation” and “allocation of other resources” are only supported by two or three CO frameworks:

  - Although Kubernetes consolidated a full feature set for container isolation policies almost 36 months ago, there is little uptake of these features by the other CO frameworks.

  - The allocation of GPU and disk resources to co-located containers, as supported by Mesos-based frameworks, is only marginally supported by Kubernetes and not at all by Docker Swarm.

- Kubernetes offers the highest number of common features and the highest number of unique features. When adding up both common and unique features, Kubernetes even offers the highest number of features for all 9 aspects and it offers the highest number of features for 15 sub-aspects.

- However, no significant differences in genericity with Docker EE and DC/OS have been found. After all, when taking into account only common features, Kubernetes offers the absolute highest number of common features for 7 sub-aspects, whereas Docker Swarm integrated mode offers the highest number of common features for the sub-aspects “services networking”, “host port conflict management” and “cluster network security”. Mesos offers the most common features for the sub-aspect “persistent volumes” and DC/OS offers the most common features for the sub-aspects “cluster maintenance” and “multi-cloud deployments”.

- In the sub-aspects “services networking” and “host port conflict management”, Docker Swarm integrated mode and DC/OS offer support for the features host mode services networking, stable DNS name for services and dynamic allocation of host ports. We have found that the other approaches to services networking such as routing meshes and virtual IP networks introduce a substantial performance overhead in comparison to running Docker containers in host mode. As such, a host mode service networking approach with appropriate host port conflict management is a viable alternative for high-performance applications.

- For some of these sub-aspects, there remain differences in whether a particular set of features is offered by the open-source distribution or by the commercial version of these frameworks:

  - Architectural patterns. The open-source distributions of Docker Swarm and DC/OS both support automated setup of highly available clusters, whereas Kubernetes only provides support for this feature in particular commercial versions.

  - User identity and access management. Kubernetes offers the most extensive support for authentication and authorization of cluster administrators and application managers, because its open-source distribution supports tenant-aware access control lists. In contrast, only the commercial versions of Docker Swarm and DC/OS support this feature.

  - Creation, management and inspection of cluster and applications. The open-source distributions of Kubernetes and DC/OS offer the most extensive command-line interfaces and web-based user interfaces, with support for common features such as labels for organizing API objects and visual inspection of resource usage graphs. The commercial version of Docker Swarm, though, also includes a web-based UI with the same set of features.

  - Logging and debugging of containers and CO frameworks. The open-source distributions of Kubernetes and DC/OS support integrating existing log aggregation systems. In contrast, only the commercial version of Docker Swarm supports this feature.

  - Multi-cloud support. Docker Swarm and all Mesos-based systems have invested most of their effort in building extensive support for running a single container cluster in high-availability mode, where multiple masters are spread across different cloud availability zones. Such an automated HA cluster across multiple availability zones is not supported by the open-source distribution of Kubernetes; it is only supported by the commercial Kubernetes-as-a-Service offerings on top of AWS and Google Cloud.

10.2. Maturity

- The 15 sub-aspects identified by the green rectangle in Figure 11 form a mature foundation for the overall technology domain, as these sub-aspects are well understood by now and few feature deprecations have been found in them.

- Figure 11 further indicates that Kubernetes is the most creative project in terms of pioneering common features despite being a younger project than Mesos, Aurora and Marathon.

10.3. Stability

- Mesos is the most interesting platform for prototyping novel techniques for (i) container networking and (ii) persistent volumes, because Mesos' adherence to all relevant standardization initiatives in these two areas maximizes the potential to deploy these techniques in Docker Swarm and Kubernetes as well. Docker and Kubernetes are the best fit for prototyping innovative techniques for container runtimes.

- The overall rate of feature deprecations among common features in the past is about 2% of the total number of feature updates (i.e., feature additions, feature replacements, and feature deprecations).

- Only one unique feature of Kubernetes, federated instantiations of the Kubernetes API objects, has been halted and will probably be deprecated without a replacing feature update.

10.4. Main Insights with Respect to Docker Swarm

10.5. Main Insights with Respect to Kubernetes

10.6. Main Insights with Respect to Mesos and DC/OS

10.7. Main Insights with Respect to Docker Swarm Alone and Apache Aurora

11. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Aspects and Sub-aspects | Container Orchestration Frameworks | ||||||
|---|---|---|---|---|---|---|---|
| Cluster architecture and setup | Sa | Si | Ku | Me | Au | Ma | Dc |
| Configuration management approach | |||||||
| Architectural patterns | |||||||
| Installation methods and tools for setting up a cluster | Kubernetes-as-a-Service | GUI-based installer | |||||
| CO framework customization | Sa | Si | Ku | Me | Au | Ma | Dc |
| Unified container runtime architecture | |||||||
| Framework design of orchestration engine | install plugins as global Swarm services | cloud-provider plugin; custom API objects; aggregation of additional APIs; annotations to API objects; discovery of a node’s hardware features; dynamic kubelet reconfig | resource provider abstraction to customize how Mesos agent synchronizes with the Mesos master about available resources and operations on those resources | custom worker agent software | |||
| Container networking | Sa | Si | Ku | Me | Au | Ma | Dc |
| Services networking | SCTP protocol support | load balancing of non-containerized services | |||||
| Host ports conflict management | |||||||
| Plugin architecture for network services | |||||||
| Service discovery and external access | exposing service via LB of cloud provider; synchronize services with external DNS providers; hide Pod’s virtual IP behind Node IP; override DNS lookup with custom /etc/hosts entries in Pod; override name server with custom /etc/resolv in Pod; install another DNS server in cluster | ||||||
| App configuration and deployment | Sa | Si | Ku | Me | Au | Ma | Dc |
| Supported workload types | Initial. of containers; vertical pod auto-scaler | ||||||
| Persistent volumes | deploying and managing stateful services; raw block volumes; dynamically grow volume size; dynamic maximum volume count | local volume can be shared between tasks from different frameworks | tools and libraries for integration with and deployment of stateful services | ||||
| Reusable container configuration | system init inside a container | injection of configs at Pod creation time | |||||
| Service upgrades | customizing the rollback of a service | ||||||
| Resource quota management | Sa | Si | Ku | Me | Au | Ma | Dc |
| request rate limiting of frameworks | |||||||
| Container QoS management | Sa | Si | Ku | Me | Au | Ma | Dc |
| Container CPU and memory allocation with support for oversubscription | updating resource policies without restarting the container | ||||||
| Allocation of other resources | define custom node resources of random kind; scheduling of huge pages | network isolation for routing mesh networks; network isolation between virtual networks | |||||
| Controlling scheduling behavior | |||||||
| Controlling preemptive scheduling and re-scheduling | cpu-cache affinity policies | ||||||
| Securing clusters | Sa | Si | Ku | Me | Au | Ma | Dc |
| User identity and access management | audit of master API requests | ||||||
| Cluster network security | encryption of master/manager logs | access control for the kubelet; network policies for Pods | |||||
| Securing containers | Sa | Si | Ku | Me | Au | Ma | Dc |
| Protection of sensitive data and proprietary software | |||||||
| Improved security isolation | customize service isolation mode in Windows | run-time verification of system-wide Pod security policies; configuring kernel parameters at run-time | |||||
| App and cluster management | Sa | Si | Ku | Me | Au | Ma | Dc |
| Creation, management and inspection of cluster and applications | command-line auto-complete | ||||||
| Monitoring resource usage and health | auto-scaling of cluster | SLA metrics | custom health checks | ||||
| Logging and debugging of CO framework and containers | debug running Pod from local work station | ||||||
| Cluster maintenance | control the number of Pod disruptions; automated upgrade of Google Kubernetes Engine | ||||||
| Multi-cloud support | API for using externally managed services; federated API objects; discovery of the closest healthy service shard | ||||||

References

- Xavier, M.G.; de Oliveira, I.C.; Rossi, F.D.; Passos, R.D.D.; Matteussi, K.J.; de Rose, C.A.F. A Performance Isolation Analysis of Disk-Intensive Workloads on Container-Based Clouds. In Proceedings of the 2015 23rd Euromicro International Conference Parallel, Distributed, Network-Based Processing, Turku, Finland, 4–6 March 2015; pp. 253–260. [Google Scholar]

- Truyen, E.; van Landuyt, D.; Reniers, V.; Rafique, A.; Lagaisse, B.; Joosen, W. Towards a container-based architecture for multi-tenant SaaS applications. In Proceedings of the ARM 2016 Proceedings of the 15th International Workshop on Adaptive and Reflective Middleware, Trento, Italy, 12–16 December 2016. [Google Scholar]

- Kratzke, N. A Lightweight Virtualization Cluster Reference Architecture Derived from Open Source PaaS Platforms. Open J. Mob. Comput. Cloud Comput. 2014, 1, 17–30.

- OpenStack. User Survey. April 2016. Available online: https://www.openstack.org/assets/survey/April-2016-User-Survey-Report.pdf (accessed on 1 March 2019).

- OpenStack. User Survey. October 2016. Available online: https://www.openstack.org/assets/survey/October2016SurveyReport.pdf (accessed on 27 October 2016).

- OpenStack. User Survey—A Snapshot of the OpenStack Users’ Attitudes and Deployments. 2017. Available online: https://www.openstack.org/assets/survey/OpenStack-User-Survey-Nov17.pdf (accessed on 1 March 2019).

- Mesosphere. mesos/docker-containerizer.md at 0.20.0·apache/mesos. Available online: https://github.com/apache/mesos/blob/0.20.0/docs/docker-containerizer.md (accessed on 9 November 2018).

- Noordhuis, P. Kubernetes. Available online: https://github.com/kubernetes/kubernetes/blob/release-0.4/README.md (accessed on 9 November 2018).

- kubernetes/networking.md at v0.6.0 · kubernetes/kubernetes. Available online: https://github.com/kubernetes/kubernetes/blob/v0.6.0/docs/networking.md (accessed on 9 November 2018).

- kubernetes/pods.md at release-0.4 · kubernetes/kubernetes. Available online: https://github.com/kubernetes/kubernetes/blob/release-0.4/docs/pods.md (accessed on 9 November 2018).

- kubernetes/volumes.md at v0.6.0 · kubernetes/kubernetes. Available online: https://github.com/kubernetes/kubernetes/blob/v0.6.0/docs/volumes.md (accessed on 9 November 2018).

- Docker Inc. Manage Data in Containers. Available online: https://docs.docker.com/v1.10/engine/userguide/containers/dockervolumes/ (accessed on 9 November 2018).

- Mesosphere. mesos/docker-volume.md at 1.0.0 · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.0.0/docs/docker-volume.md (accessed on 9 November 2018).

- Mesosphere. mesos/networking-for-mesos-managed-containers.md at 0.25.0 · apache/mesos. Available online: https://github.com/apache/mesos/blob/0.25.0/docs/networking-for-mesos-managed-containers.md (accessed on 9 November 2018).

- Docker Inc. swarm/networking.md at v1.0.0 · docker/swarm. Available online: https://github.com/docker/swarm/blob/v1.0.0/docs/networking.md (accessed on 9 November 2018).

- ClusterHQ. ClusterHQ/Flocker: Container Data Volume Manager for Your Dockerized Application. Available online: https://github.com/ClusterHQ/flocker/ (accessed on 9 November 2018).

- Lardinois, F. ClusterHQ Raises $12M Series A Round to Expand Its Container Data Management Service. techcrunch.com. 2015. Available online: https://techcrunch.com/2015/02/05/clusterhq-raises-12m-series-a-round-to-help-developers-run-databases-in-docker-containers/ (accessed on 27 March 2018).

- ClusterHQ. Flocker Integrations. Available online: https://flocker.readthedocs.io/en/latest/ (accessed on 9 November 2018).

- Lardinois, F. ClusterHQ, an Early Player in the Container Ecosystem, Calls It Quits. techcrunch.com. 2016. Available online: https://techcrunch.com/2016/12/22/clusterhq-hits-the-deadpool/ (accessed on 27 March 2018).

- Containerd—An Industry-Standard Container Runtime with an Emphasis on Simplicity, Robustness and Portability. Available online: https://containerd.io/ (accessed on 9 November 2018).

- Open Container Initiative. Available online: https://github.com/opencontainers/ (accessed on 9 November 2018).

- General Availability of Containerd 1.0 is Here! The Cloud Native Computing Foundation. 2017. Available online: https://www.cncf.io/blog/2017/12/05/general-availability-containerd-1-0/ (accessed on 27 March 2018).

- Cloud Native Computing Foundation Launches Certified Kubernetes Program with 32 Conformant Distributions and Platforms. The Cloud Native Computing Foundation. 2017. Available online: https://www.cncf.io/announcement/2017/11/13/cloud-native-computing-foundation-launches-certified-kubernetes-program-32-conformant-distributions-platforms/ (accessed on 27 March 2018).

- OpenStack. OpenStack User Survey: A Snapshot of OpenStack Users’ Attitudes and Deployments; Openstack.org. 2015. Available online: https://www.openstack.org/assets/survey/Public-User-Survey-Report.pdf (accessed on 1 March 2019).

- GitHub. The State of the Octoverse 2017—Ten Most-Discussed Repositories. 2018. Available online: https://octoverse.github.com/2017/ (accessed on 1 March 2019).

- Mesosphere. Kubernetes—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/services/kubernetes/ (accessed on 9 November 2018).

- Docker Inc. Run Swarm and Kubernetes Interchangeably|Docker. Available online: https://www.docker.com/products/orchestration (accessed on 9 November 2018).

- Amazon Web Services (AWS). Amazon EKS—Managed Kubernetes Service. Available online: https://aws.amazon.com/eks/ (accessed on 9 November 2018).

- Kratzke, N. A Brief History of Cloud Application Architectures. Appl. Sci. 2018, 8, 1368.

- Truyen, E.; van Landuyt, D. Structured Feature Comparison between Container Orchestration Frameworks. 2018. Available online: https://zenodo.org/record/1494190#.XDh2ls17lPY (accessed on 11 January 2019).

- Truyen, E.; van Landuyt, D.; Preuveneers, D.; Lagaisse, B.; Joosen, W. A Comprehensive Feature Comparison Study of Open-Source Container Orchestration Frameworks. 2019. Available online: https://doi.org/10.5281/zenodo.2547979 (accessed on 11 January 2019).

- Soltesz, S.; Pötzl, H.; Fiuczynski, M.E.; Bavier, A.; Peterson, L. Container-based operating system virtualization: A scalable, high-performance alternative to hypervisors. SIGOPS Oper. Syst. Rev. 2007, 41, 275–287.

- Xavier, M.G.; Neves, M.V.; Rossi, F.D.; Ferreto, T.C.; Lange, T.; de Rose, C.F. Performance Evaluation of Container-based Virtualization for High Performance Computing Environments. In Proceedings of the 2013 21st Euromicro International Conference Parallel, Distributed, Network-Based Processing, Belfast, UK, 27 February–1 March 2013; pp. 233–240.

- Dua, R.; Raja, A.R.; Kakadia, D. Virtualization vs Containerization to Support PaaS. In Proceedings of the 2014 IEEE International Conference on Cloud Engineering, Boston, MA, USA, 11–14 March 2014; pp. 610–614.

- Felter, W.; Ferreira, A.; Rajamony, R.; Rubio, J. An updated performance comparison of virtual machines and Linux containers. In Proceedings of the 2015 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Philadelphia, PA, USA, 29–31 March 2015; pp. 171–172.

- Tosatto, A.; Ruiu, P.; Attanasio, A. Container-Based Orchestration in Cloud: State of the Art and Challenges. In Proceedings of the 2015 Ninth International Conference on Complex, Intelligent, and Software Intensive Systems, Blumenau, Brazil, 8–10 July 2015; pp. 70–75.

- Casalicchio, E. Autonomic Orchestration of Containers: Problem Definition and Research Challenges. In Proceedings of the 10th EAI International Conference on Performance Evaluation Methodologies and Tools, Taormina, Italy, 25–28 October 2017.

- Heidari, P.; Lemieux, Y.; Shami, A. QoS Assurance with Light Virtualization—A Survey. In Proceedings of the 2016 IEEE International Conference on Cloud Computing Technology and Science (CloudCom), Luxembourg, 12–15 December 2016; pp. 558–563.

- Jennings, B.; Stadler, R. Resource Management in Clouds: Survey and Research Challenges. J. Netw. Syst. Manag. 2014, 23, 567–619.

- Costache, S.; Dib, D.; Parlavantzas, N.; Morin, C. Resource management in cloud platform as a service systems: Analysis and opportunities. J. Syst. Softw. 2017, 132, 98–118.

- Hindman, B.; Konwinski, A.; Zaharia, M.; Ghodsi, A.; Joseph, A.D.; Katz, R.; Shenker, S.; Stoica, I. Mesos: A platform for fine-grained resource sharing in the data center. In Proceedings of the 8th USENIX Conference on Networked Systems Design and Implementation (NSDI 2011), Boston, MA, USA, 30 March–1 April 2011.

- Verma, A.; Pedrosa, L.; Korupolu, M.; Oppenheimer, D.; Tune, E.; Wilkes, J. Large-scale cluster management at Google with Borg. In Proceedings of the Tenth European Conference on Computer Systems, Bordeaux, France, 21–24 April 2015.

- Pahl, C. Containerisation and the PaaS Cloud. IEEE Cloud Comput. 2015, 2, 24–31.

- Kratzke, N.; Peinl, R. ClouNS - a Cloud-Native Application Reference Model for Enterprise Architects. In Proceedings of the 2016 IEEE 20th International Enterprise Distributed Object Computing Workshop (EDOCW), Vienna, Austria, 5–9 September 2016; pp. 198–207.

- Quint, P.-C.; Kratzke, N. Towards a Lightweight Multi-Cloud DSL for Elastic and Transferable Cloud-native Applications. In Proceedings of the 8th International Conference on Cloud Computing and Services Science (CLOSER 2018), Madeira, Portugal, 19–21 March 2018.

- Kratzke, N. Smuggling Multi-cloud Support into Cloud-native Applications using Elastic Container Platforms. Proc. 7th Int. Conf. Cloud Comput. Serv. Sci. 2017, 2017, 57–70.

- Kratzke, N.; Quint, P. Project CloudTRANSIT—Transfer Cloud-Native Applications at Runtime; Technische Hochschule Lübeck: Lübeck, Germany, 2018.

- DeCoMAdS: Deployment and Configuration Middleware for Adaptive Software-As-A-Service. 2015. Available online: https://distrinet.cs.kuleuven.be/research/projects/DeCoMAdS (accessed on 1 March 2019).

- Truyen, E.; Bruzek, M.; van Landuyt, D.; Lagaisse, B.; Joosen, W. Evaluation of container orchestration systems for deploying and managing NoSQL database clusters. In Proceedings of the 2018 IEEE 11th International Conference on Cloud Computing (CLOUD), Zurich, Switzerland, 17–20 December 2018.

- Delnat, W.; Truyen, E.; Rafique, A.; van Landuyt, D.; Joosen, W. K8-Scalar: A workbench to compare autoscalers for container-orchestrated database clusters. In Proceedings of the 2018 IEEE/ACM 13th International Symposium on Software Engineering for Adaptive and Self-Managing Systems (SEAMS), Gothenburg, Sweden, 27 May–3 June 2018; pp. 33–39.

- Campbell, J.C.; Zhang, C.; Xu, Z.; Hindle, A.; Miller, J. Deficient documentation detection: A methodology to locate deficient project documentation using topic analysis. In Proceedings of the 10th Working Conference on Mining Software Repositories, San Francisco, CA, USA, 18–19 May 2013.

- Al-Subaihin, A.A.; Sarro, F.; Black, S.; Capra, L.; Harman, M.; Jia, Y.; Zhang, Y. Clustering Mobile Apps Based on Mined Textual Features. In Proceedings of the 10th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement—ESEM ’16, Ciudad Real, Spain, 8–9 September 2016; pp. 1–10.

- Mei, H.; Zhang, W.; Gu, F. A feature oriented approach to modeling and reusing requirements of software product lines. In Proceedings of the 27th Annual International Computer Software and Applications Conference (COMPSAC 2003), Dallas, TX, USA, 3–6 November 2003; pp. 250–256.

- Kang, K.C.; Cohen, S.G.; Hess, J.A.; Novak, W.E.; Peterson, A.S. Feature-Oriented Domain Analysis (FODA) Feasibility Study; Software Engineering Inst.: Pittsburgh, PA, USA, 1990.

- Spencer, D.; Garrett, J.J. Card Sorting: Designing Usable Categories; Rosenfeld Media: Brooklyn, NY, USA, 2009.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Khomh, F.; Dhaliwal, T.; Zou, Y.; Adams, B. Do Faster Releases Improve Software Quality? An Empirical Case Study of Mozilla Firefox; IEEE Press: Piscataway, NJ, USA, 2012; pp. 179–188.

- Truyen, E.; van Landuyt, D.; Lagaisse, B.; Joosen, W. A Comparison between Popular Open-Source container Orchestration Frameworks. 2017. Available online: https://docs.google.com/document/d/19ozfDwmbeeBmwuAemCxNtKO1OFm7FsXyMioYjUJwZVo/ (accessed on 1 March 2019).

- Red Hat. Overview—Core Concepts|Architecture|OpenShift Container Platform 3.11. Available online: https://docs.openshift.com/container-platform/3.11/architecture/core_concepts/index.html (accessed on 9 November 2018).

- Cloud Foundry. Powered by Kubernetes—Container Runtime|Cloud Foundry. Available online: https://www.cloudfoundry.org/container-runtime/ (accessed on 9 November 2018).

- Kubernetes Home Page. Available online: https://kubernetes.io/ (accessed on 1 March 2018).

- Docker Inc. Docker Swarm|Docker Documentation. Available online: https://docs.docker.com/swarm/ (accessed on 9 November 2018).

- Docker Inc. Swarm Mode Overview|Docker Documentation. Available online: https://docs.docker.com/engine/swarm/ (accessed on 9 November 2018).

- Mesosphere. Apache Mesos. Available online: http://mesos.apache.org/ (accessed on 9 November 2018).

- Apache. Apache Aurora. Available online: http://aurora.apache.org/ (accessed on 9 November 2018).

- Mesosphere. Marathon: A Container Orchestration Platform for Mesos and DC/OS. Available online: https://mesosphere.github.io/marathon/ (accessed on 9 November 2018).

- Mesosphere. The Definitive Platform for Modern Apps|DC/OS. Available online: https://dcos.io/ (accessed on 9 November 2018).

- Breitenbücher, U.; Binz, T.; Kopp, O.; Képes, K.; Leymann, F.; Wettinger, J. Hybrid TOSCA Provisioning Plans: Integrating Declarative and Imperative Cloud Application Provisioning Technologies; Springer International Publishing: Cham, Switzerland, 2016; pp. 239–262.

- Docker Inc. docker.github.io/deploy-app.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/swarm/swarm_at_scale/deploy-app.md#extra-credit-deployment-with-docker-compose (accessed on 9 November 2018).

- docker.github.io/index.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/compose/compose-file/index.md (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/overview.md at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/concepts/configuration/overview.md (accessed on 9 November 2018).

- Apache. Apache Aurora Configuration Reference. Available online: http://aurora.apache.org/documentation/latest/reference/configuration/ (accessed on 9 November 2018).

- Mesosphere. Marathon REST API. Available online: https://mesosphere.github.io/marathon/api-console/index.html (accessed on 9 November 2018).

- Mesosphere. Creating Services—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/deploying-services/creating-services/ (accessed on 9 November 2018).

- Mesosphere. mesos/architecture.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/architecture.md (accessed on 9 November 2018).

- Ghodsi, A.; Zaharia, M.; Hindman, B.; Konwinski, A.; Shenker, S.; Stoica, I. Dominant Resource Fairness: Fair Allocation of Multiple Resource Types. In Proceedings of NSDI 2011, Boston, MA, USA, 30 March–1 April 2011.

- Mesosphere. mesos/architecture.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/architecture.md#example-of-resource-offer (accessed on 9 November 2018).

- Mesosphere. mesos/reservation.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/reservation.md#offeroperationunreserve (accessed on 9 November 2018).

- Mesosphere. marathon/high-availability.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/high-availability.md (accessed on 9 November 2018).

- Mesosphere. mesos/reconciliation.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/reconciliation.md (accessed on 9 November 2018).

- Docker Inc. docker.github.io/admin_guide.md at v17.06 · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06/engine/swarm/admin_guide.md (accessed on 9 November 2018).

- Apache. aurora/configuration.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/operations/configuration.md#replicated-log-configuration (accessed on 9 November 2018).

- Mesosphere. High Availability—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/overview/high-availability/ (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/highly-available-master.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/administer-cluster/highly-available-master.md (accessed on 9 November 2018).

- Google LLC. Google Kubernetes Engine|Kubernetes Engine|Google Cloud. Available online: https://cloud.google.com/kubernetes-engine/ (accessed on 9 November 2018).

- Canonical. Kubernetes|Ubuntu. Available online: https://www.ubuntu.com/kubernetes (accessed on 9 November 2018).

- CoreOS. Coreos/Tectonic-Installer: Install a Kubernetes Cluster the CoreOS Tectonic Way: HA, Self-Hosted, RBAC, Etcd Operator, and More. Available online: https://github.com/coreos/tectonic-installer (accessed on 9 November 2018).

- Apache. aurora/constraints.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/constraints.md (accessed on 9 November 2018).

- Mesosphere. marathon/constraints.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/constraints.md#operators (accessed on 9 November 2018).

- Gog, I.; Schwarzkopf, M.; Gleave, A.; Watson, R.N.M.; Hand, S. Firmament: Fast, Centralized Cluster Scheduling at Scale. In Proceedings of OSDI 2016, Savannah, GA, USA, 2–4 November 2016; pp. 99–115.

- Delimitrou, C.; Kozyrakis, C. Quasar: Resource-Efficient and QoS-Aware Cluster Management. In Proceedings of the 19th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Salt Lake City, UT, USA, 1–5 March 2014.

- Delimitrou, C.; Kozyrakis, C. QoS-Aware scheduling in heterogeneous datacenters with paragon. ACM Trans. Comput. Syst. 2013, 31, 1–34.

- Jyothi, S.A.; Curino, C.; Menache, I.; Narayanamurthy, S.M.; Tumanov, A.; Yaniv, J.; Mavlyutov, R.; Goiri, Í.; Krishnan, S.; Kulkarni, J.; et al. Morpheus: Towards Automated SLAs for Enterprise Clusters. In Proceedings of the OSDI 2016, Savannah, GA, USA, 2–4 November 2016.

- Grillet, A. Comparison of Container Schedulers. 2016. Available online: https://medium.com/@ArmandGrillet/comparison-of-container-schedulers-c427f4f7421 (accessed on 31 October 2017).

- Cloud Native Computing Foundation. Home—Open Containers Initiative. Available online: https://www.opencontainers.org/ (accessed on 9 November 2018).

- Cloud Native Computing Foundation. kubernetes-sigs/cri-o: Open Container Initiative-Based Implementation of Kubernetes Container Runtime Interface. Available online: https://github.com/kubernetes-sigs/cri-o/ (accessed on 9 November 2018).

- Mesosphere. [MESOS-5011] Support OCI Image Spec.—ASF JIRA. Available online: https://issues.apache.org/jira/browse/MESOS-5011 (accessed on 9 November 2018).

- Cloud Native Computing Foundation. opencontainers/runc: CLI Tool for Spawning and Running Containers According to the OCI Specification. Available online: https://github.com/opencontainers/runc (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/components.md at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/concepts/overview/components.md#container-runtime (accessed on 9 November 2018).

- Mesosphere. mesos/containerizers.md at 1.5.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.5.x/docs/containerizers.md (accessed on 9 November 2018).

- Cloud Native Computing Foundation. community/scheduler_extender.md at master · kubernetes/community. Available online: https://github.com/kubernetes/community/blob/master/contributors/design-proposals/scheduling/scheduler_extender.md (accessed on 9 November 2018).

- Mesosphere. mesos/allocation-module.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/allocation-module.md#writing-a-custom-allocator (accessed on 9 November 2018).

- Apache. aurora/RELEASE-NOTES.md at master · apache/aurora. Available online: https://github.com/apache/aurora/blob/master/RELEASE-NOTES.md#0200 (accessed on 9 November 2018).

- Apache. aurora/scheduler-configuration.md at rel/0.20.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.20.0/docs/reference/scheduler-configuration.md (accessed on 9 November 2018).

- Mesosphere. marathon/plugin.md at v1.6.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.6.0/docs/docs/plugin.md#scheduler (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/configure-multiple-schedulers.md at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/tasks/administer-cluster/configure-multiple-schedulers.md (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/admission-controllers.md at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/reference/access-authn-authz/admission-controllers.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/extensible-admission-controllers.md#initializers at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/reference/access-authn-authz/extensible-admission-controllers.md#initializers (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/extensible-admission-controllers.md#admission webhooks at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/reference/access-authn-authz/extensible-admission-controllers.md#admission-webhooks (accessed on 12 November 2018).

- Mesosphere. mesos/modules.md#hook at 1.6.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.6.x/docs/modules.md#hook (accessed on 12 November 2018).

- Apache. aurora/client-hooks.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/reference/client-hooks.md (accessed on 12 November 2018).

- Apache. aurora/RELEASE-NOTES.md at master · apache/aurora. Available online: https://github.com/apache/aurora/blob/master/RELEASE-NOTES.md#0190 (accessed on 12 November 2018).

- Linux Virtual Server Project. IPVS Software—Advanced Layer-4 Switching. Available online: http://www.linuxvirtualserver.org/software/ipvs.html (accessed on 12 November 2018).

- Docker Inc. docker.github.io/networking.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/swarm/networking.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/service.md at release-1.9 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.9/docs/concepts/services-networking/service.md (accessed on 12 November 2018).

- Mesosphere. marathon/networking.md at v1.6.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.6.0/docs/docs/networking.md#specifying-service-ports (accessed on 12 November 2018).

- Mesosphere. Networking—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/ (accessed on 12 November 2018).

- Mesosphere. Networking—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/#layer-7 (accessed on 12 November 2018).

- Docker Inc. docker.github.io/overlay.md at v17.12 · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.12/network/overlay.md#bypass-the-routing-mesh-for-a-swarm-service (accessed on 12 November 2018).

- Apache. aurora/service-discovery.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/service-discovery.md#using-mesos-dns (accessed on 12 November 2018).

- Mesosphere. marathon/service-discovery-load-balancing.md at v1.6.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.6.0/docs/docs/service-discovery-load-balancing.md#mesos-dns (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/overview.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/configuration/overview.md#services (accessed on 12 November 2018).

- Docker Inc. docker.github.io/services.md at master · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/master/engine/swarm/services.md#publish-a-services-ports-directly-on-the-swarm-node (accessed on 12 November 2018).

- Apache. aurora/services.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/services.md#ports (accessed on 12 November 2018).

- Mesosphere. marathon/ports.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/ports.md#random-port-assignment (accessed on 12 November 2018).

- Docker Inc. docker.github.io/services.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/engine/swarm/services.md#publish-a-services-ports-directly-on-the-swarm-node (accessed on 12 November 2018).

- RedHat. Default Scheduling—Scheduling|Cluster Administration|OKD Latest. Available online: https://docs.okd.io/latest/admin_guide/scheduling/scheduler.html#scheduler-sample-policies (accessed on 12 November 2018).

- Cloud Native Computing Foundation. containernetworking/cni: Container Network Interface—Networking for Linux Containers. Available online: https://github.com/containernetworking/cni (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/network-plugins.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/cluster-administration/network-plugins.md#cni (accessed on 12 November 2018).

- Mesosphere. mesos/cni.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/cni.md (accessed on 12 November 2018).

- Mesosphere. CNI Plugin Support—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/networking/virtual-networks/cni-plugins/ (accessed on 12 November 2018).

- Cloud Native Computing Foundation. CNI Plugins Should Allow Hairpin Traffic · Issue #476 · containernetworking/cni. Available online: https://github.com/containernetworking/cni/issues/476 (accessed on 12 November 2018).

- Docker Inc. docker/libnetwork: Docker Networking. Available online: https://github.com/docker/libnetwork (accessed on 12 November 2018).

- Mesosphere. mesos/networking.md at 1.5.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.5.x/docs/networking.md (accessed on 12 November 2018).

- Mesosphere. Virtual Networks—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/networking/virtual-networks/ (accessed on 12 November 2018).

- Mesosphere. DC/OS Overlay—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/SDN/dcos-overlay/#replacing-or-adding-new-virtual-networks (accessed on 12 November 2018).

- Mesosphere. mesos/networking.md at 1.5.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.5.x/docs/networking.md#limitations-of-docker-containerizer (accessed on 12 November 2018).

- Docker Inc. docker.github.io/overlay.md at v17.12 · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.12/network/overlay.md#separate-control-and-data-traffic (accessed on 12 November 2018).

- Intel. intel/multus-cni: Multi-Homed pod cni. Available online: https://github.com/intel/multus-cni (accessed on 12 November 2018).

- Daboo, C. Use of SRV Records for Locating Email Submission/Access Services; RFC 6186; Internet Engineering Task Force (IETF), 2011. Available online: https://tools.ietf.org/html/rfc6186 (accessed on 1 March 2019).

- Cloud Native Computing Foundation. website/dns-pod-service.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/services-networking/dns-pod-service.md#srv-records (accessed on 12 November 2018).

- Mesosphere. Service Naming—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/DNS/mesos-dns/service-naming/#srv-records (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/service.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/services-networking/service.md#headless-services (accessed on 12 November 2018).

- Mesosphere. Edge-LB—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/services/edge-lb/ (accessed on 13 November 2018).

- Mesosphere. Service Docs—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/services/ (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/create-external-load-balancer.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/access-application-cluster/create-external-load-balancer.md (accessed on 12 November 2018).

- Docker Inc. docker.github.io/services.md at v17.09-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.09-release/engine/swarm/services.md (accessed on 13 November 2018).

- Mesosphere. Load Balancing and Virtual IPs (VIPs)—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/load-balancing-vips/ (accessed on 12 November 2018).

- Mesosphere. DC/OS Domain Name Service—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/networking/DNS/ (accessed on 12 November 2018).

- Docker Inc. swarmkit/task_model.md at master · docker/swarmkit. Available online: https://github.com/docker/swarmkit/blob/master/design/task_model.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/pod.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/workloads/pods/pod.md (accessed on 12 November 2018).

- Mesosphere. mesos/nested-container-and-task-group.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/nested-container-and-task-group.md (accessed on 12 November 2018).

- Mesosphere. marathon/pods.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/pods.md (accessed on 12 November 2018).

- Mesosphere. Pods—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.11/deploying-services/pods/ (accessed on 13 November 2018).

- Mesosphere. mesos/mesos-containerizer.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/mesos-containerizer.md#posix-disk-isolator (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/jobs-run-to-completion.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/workloads/controllers/jobs-run-to-completion.md (accessed on 12 November 2018).

- Apache. aurora/configuration-tutorial.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/reference/configuration-tutorial.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/cron-jobs.md at release-1.11 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.11/content/en/docs/concepts/workloads/controllers/cron-jobs.md (accessed on 12 November 2018).

- Apache. aurora/cron-jobs.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/cron-jobs.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/replicaset.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/workloads/controllers/replicaset.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/labels.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/overview/working-with-objects/labels.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/labels.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/overview/working-with-objects/labels.md#label-selectors (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/horizontal-pod-autoscale.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/run-application/horizontal-pod-autoscale.md (accessed on 12 November 2018).

- Mesosphere. Autoscaling with Marathon—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/tutorials/autoscaling/ (accessed on 12 November 2018).

- Docker Inc. docker.github.io/services.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/engine/swarm/services.md#control-service-scale-and-placement (accessed on 9 November 2018).

- Cloud Native Computing Foundation. website/daemonset.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/workloads/controllers/daemonset.md (accessed on 12 November 2018).

- Docker Inc. docker.github.io/stack-deploy.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/engine/swarm/stack-deploy.md (accessed on 12 November 2018).

- Mesosphere. marathon/application-groups.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/application-groups.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. helm/helm: The Kubernetes Package Manager. Available online: https://github.com/helm/helm (accessed on 12 November 2018).

- Cloud Native Computing Foundation. kubernetes/kompose: Go from Docker Compose to Kubernetes. Available online: https://github.com/kubernetes/kompose (accessed on 12 November 2018).

- Docker Inc. docker.github.io/index.md at v18.03-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v18.03/engine/extend/index.md (accessed on 12 November 2018).

- Mesosphere. mesos/docker-volume.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/docker-volume.md#motivation (accessed on 12 November 2018).

- Mesosphere. marathon/external-volumes.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/external-volumes.md (accessed on 12 November 2018).

- Portworx. Using Portworx Volumes with DCOS. Available online: https://docs.portworx.com/scheduler/mesosphere-dcos/portworx-volumes.html (accessed on 12 November 2018).

- Dell. thecodeteam/mesos-module-dvdi: Mesos Docker Volume Driver Isolator Module. Available online: https://github.com/thecodeteam/mesos-module-dvdi (accessed on 12 November 2018).

- K. and M. Cloud Foundry. container-storage-interface/spec: Container Storage Interface (CSI) Specification. Available online: https://github.com/container-storage-interface/spec (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/volumes.md at release-1.10 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.10/content/en/docs/concepts/storage/volumes.md#csi (accessed on 12 November 2018).

- Mesosphere. mesos/csi.md at 1.7.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.7.x/docs/csi.md (accessed on 12 November 2018).

- Mesosphere. Volume Plugins—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/services/beta-storage/0.3.0-beta/volume-plugins/ (accessed on 12 November 2018).

- Docker Inc. docker.github.io/plugins_volume.md at v18.03-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v18.03/engine/extend/plugins_volume.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/downward-api-volume-expose-pod-information.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/inject-data-application/downward-api-volume-expose-pod-information.md (accessed on 12 November 2018).

- Mesosphere. marathon/networking.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/networking.md#downward-api (accessed on 12 November 2018).

- Docker Inc. docker.github.io/configs.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/engine/swarm/configs.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/configmap.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/configure-pod-container/configmap.md (accessed on 12 November 2018).

- Apache. aurora/job-updates.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/job-updates.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/configure-liveness-readiness-probes.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/configure-pod-container/configure-liveness-readiness-probes.md#define-readiness-probes (accessed on 12 November 2018).

- Mesosphere. marathon/readiness-checks.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/readiness-checks.md (accessed on 12 November 2018).

- Docker Inc. docker.github.io/index.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06/compose/compose-file/index.md#update_config (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/deployment.md at release-1.10 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.10/content/en/docs/concepts/workloads/controllers/deployment.md#proportional-scaling (accessed on 12 November 2018).

- Mesosphere. marathon/deployments.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/deployments.md#rolling-restarts (accessed on 12 November 2018).

- Docker Inc. docker service update | Docker Documentation. Available online: https://docs.docker.com/engine/reference/commandline/service_update/#roll-back-to-the-previous-version-of-a-service (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/deployment.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/workloads/controllers/deployment.md#rolling-back-to-a-previous-revision (accessed on 12 November 2018).

- Apache. aurora/configuration.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/reference/configuration.md#updateconfig-objects (accessed on 12 November 2018).

- Mesosphere. Dcos Marathon Deployment Rollback—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/cli/command-reference/dcos-marathon/dcos-marathon-deployment-rollback/ (accessed on 12 November 2018).

- Limoncelli, T.A.; Chalup, S.R.; Hogan, C.J. The Practice of Cloud System Administration: Designing and Operating Large Distributed Systems. 2014, Volume 2. Available online: http://the-cloud-book.com/ (accessed on 14 January 2016).

- Schermann, G.; Leitner, P.; Gall, H.C. Bifrost—Supporting Continuous Deployment with Automated Enactment of Multi-Phase Live Testing Strategies. In Proceedings of the 17th International Middleware Conference (Middleware ’16), Trento, Italy, 12–16 December 2016.

- Cloud Native Computing Foundation. website/manage-deployment.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/cluster-administration/manage-deployment.md#canary-deployments (accessed on 12 November 2018).

- Mesosphere. marathon/blue-green-deploy.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/blue-green-deploy.md (accessed on 12 November 2018).

- Mesosphere. mesos/roles.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/roles.md (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/resource-quotas.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/policy/resource-quotas.md#compute-resource-quota (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/resource-quotas.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/policy/resource-quotas.md#storage-resource-quota (accessed on 12 November 2018).

- Mesosphere. mesos/quota.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/quota.md (accessed on 12 November 2018).

- Mesosphere. mesos/operator-http-api.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/operator-http-api.md#get_roles (accessed on 12 November 2018).

- Mesosphere. mesos/weights.md at 1.4.x · apache/mesos. Available online: https://github.com/apache/mesos/blob/1.4.x/docs/weights.md (accessed on 12 November 2018).

- Apache. aurora/multitenancy.md at rel/0.18.0 · apache/aurora. Available online: https://github.com/apache/aurora/blob/rel/0.18.0/docs/features/multitenancy.md#configuration-tiers (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/resource-quotas.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/policy/resource-quotas.md#object-count-quota (accessed on 12 November 2018).

- Docker Inc. docker.github.io/index.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/datacenter/ucp/2.2/guides/access-control/index.md (accessed on 12 November 2018).

- Docker Inc. docker.github.io/isolate-nodes-between-teams.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/datacenter/ucp/2.2/guides/access-control/isolate-nodes-between-teams.md (accessed on 12 November 2018).

- Docker Inc. docker.github.io/isolate-volumes-between-teams.md at v17.06-release · docker/docker.github.io. Available online: https://github.com/docker/docker.github.io/blob/v17.06-release/datacenter/ucp/2.2/guides/access-control/isolate-volumes-between-teams.md (accessed on 12 November 2018).

- Mesosphere. Tutorial—Restricting Access to DC/OS Service Groups—Mesosphere DC/OS Documentation. Available online: https://docs.mesosphere.com/1.10/security/ent/restrict-service-access/#create-users-and-groups (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/reserve-compute-resources.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/tasks/administer-cluster/reserve-compute-resources.md (accessed on 12 November 2018).

- Mesosphere. marathon/pods.md at v1.5.0 · mesosphere/marathon. Available online: https://github.com/mesosphere/marathon/blob/v1.5.0/docs/docs/pods.md#executor-resources (accessed on 12 November 2018).

- Cloud Native Computing Foundation. website/manage-compute-resources-container.md at release-1.8 · kubernetes/website. Available online: https://github.com/kubernetes/website/blob/release-1.8/docs/concepts/configuration/manage-compute-resources-container.md (accessed on 12 November 2018).