A New Method of Simultaneous Localization and Mapping for Mobile Robots Using Acoustic Landmarks

Abstract

1. Introduction

2. Localization of Microphones Based on Sound Source Array

2.1. Time Delay of Arrival (TDOA) Estimation

2.2. Localization of Microphones

3. Acoustic SLAM Based on Microphone Landmarks

3.1. EKF-SLAM Algorithm

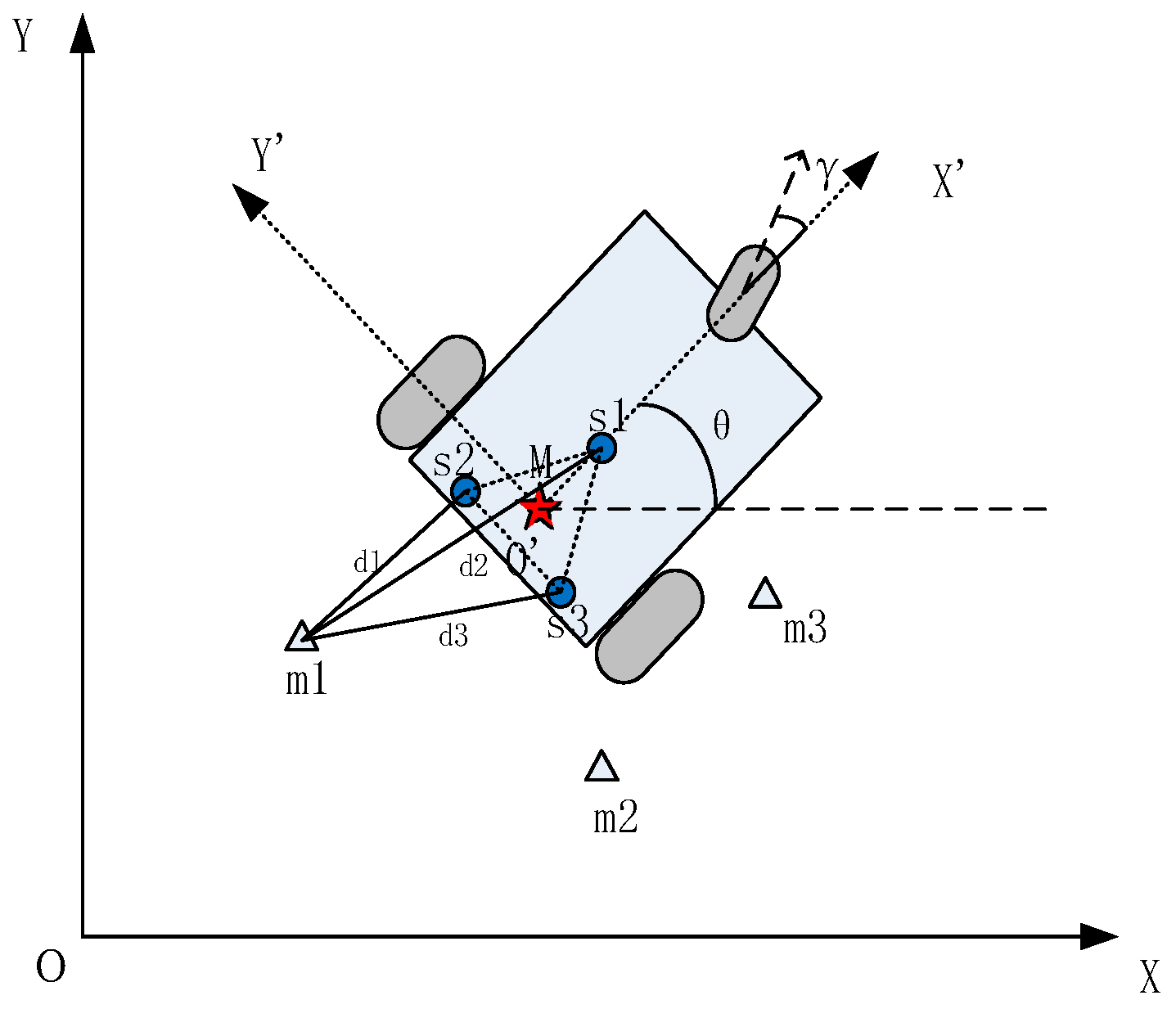

3.2. System Model

3.3. EKF-SLAM Based on Microphone Landmark Observation

4. Simulation and Experiment Results

4.1. Room Simulations

4.2. Experimental Results in a Realistic Environment

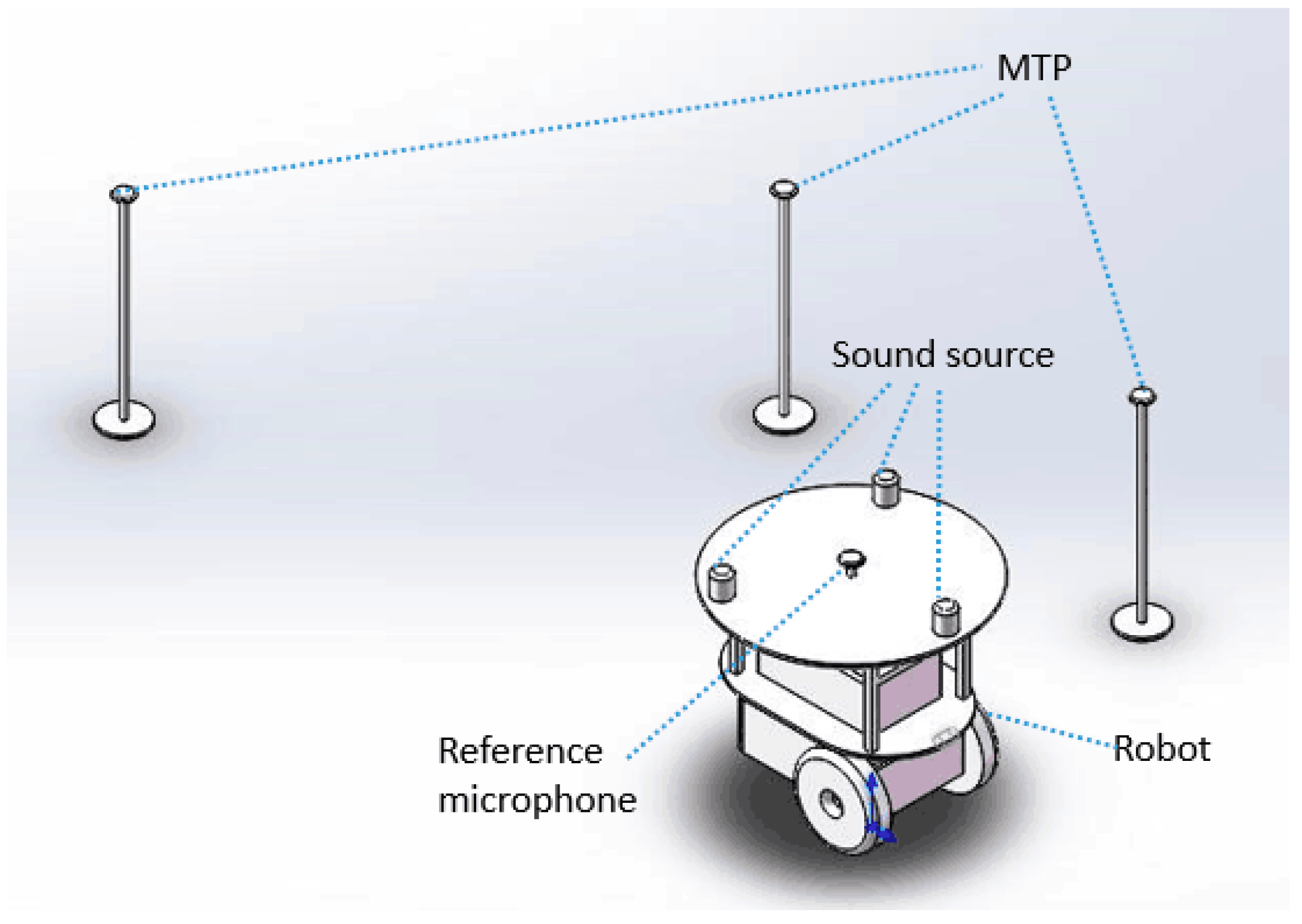

4.2.1. Experiment Setup

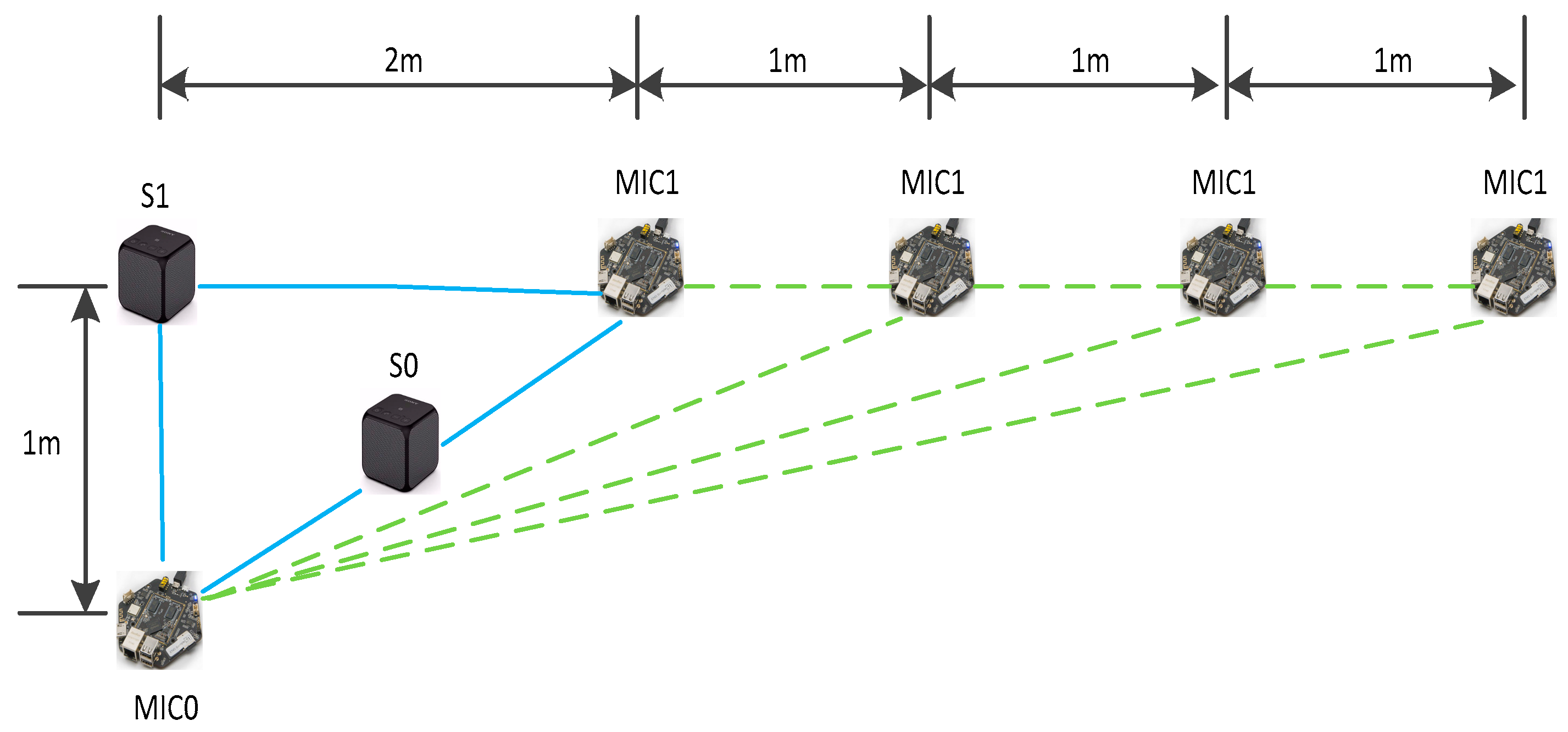

4.2.2. The Calibration Method

4.2.3. Experiment Results

- Step 1: For the first microphone to be positioned (m1), use the calibration method described in Section 4.2.2 to obtain a precise value of the time delay of arrival between m0 and m1 (TDOA1).

- Step 2: As in Step 1, s0 sounds first and then s2 sounds, which gives TDOA2 between m0 and m1. Repeating this with s3 gives TDOA3.

- Step 3: The distances between m1 and the three sound sources (d1, d2, d3) are calculated using Equations (6) and (7).

- Step 4: Evaluate whether m1 is a valid characteristic landmark. If it is, add m1 to the dataset; otherwise, discard it. The evaluation method is described as follows, and the evaluation threshold is obtained from analysis of the actual experimental data in this paper.

- Step 5: Repeat Steps 1–4 to detect the other valid microphones in sequence.

- Step 6: Apply the EKF-SLAM method based on microphone landmark observations described in Section 3.3.

- Step 7: The robot moves to the next position and repeats the above operations until the end of the experiment.
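The distance-based positioning in the steps above can be illustrated with a small sketch. It assumes that the three distances d1, d2, d3 are already available (Equations (6) and (7) are not reproduced here), that the sound source coordinates are known in the robot frame, and that a simple range-residual threshold stands in for the paper's evaluation criterion. The function names, the source layout, and the threshold value are hypothetical.

```python
import numpy as np


def trilaterate_2d(sources, distances):
    """Least-squares 2D position from known source positions and ranges.

    Subtracting the first range equation |p - s0|^2 = d0^2 from the others
    cancels the quadratic term in p and leaves a linear system A @ p = b.
    The sources must not be collinear.
    """
    sources = np.asarray(sources, dtype=float)
    d = np.asarray(distances, dtype=float)
    A = 2.0 * (sources[1:] - sources[0])
    b = (d[0] ** 2 - d[1:] ** 2
         + np.sum(sources[1:] ** 2, axis=1)
         - np.sum(sources[0] ** 2))
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position


def max_range_residual(sources, distances, position):
    """Largest gap between measured ranges and ranges implied by `position`."""
    sources = np.asarray(sources, dtype=float)
    implied = np.linalg.norm(sources - np.asarray(position, dtype=float), axis=1)
    return float(np.max(np.abs(implied - np.asarray(distances, dtype=float))))


def is_valid_landmark(sources, distances, position, threshold=0.05):
    """Hypothetical validity test: accept only geometrically consistent ranges."""
    return max_range_residual(sources, distances, position) <= threshold


# Assumed source layout (metres, robot frame) and a synthetic microphone.
SOURCES = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.3)]
true_mic = np.array([-1.775, 1.157])
d = np.linalg.norm(np.asarray(SOURCES) - true_mic, axis=1)

estimate = trilaterate_2d(SOURCES, d)
print(estimate, is_valid_landmark(SOURCES, d, estimate))
```

With a short baseline between the sources, a small range error moves the solution a long way, which is one reason a consistency check before accepting a landmark is useful.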

Algorithm 1: Algorithm of Acoustic EKF-SLAM Based on Microphone Landmark Observation.
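The body of Algorithm 1 is not reproduced in this text. As a rough illustration only, the correction step of a generic EKF-SLAM filter for one already-initialized landmark might look like the sketch below. It assumes a range-bearing observation of the microphone relative to the robot and a state vector ordered as [x, y, theta, l1x, l1y, ...]; the observation model of Section 3.3 may differ, and all function and variable names are hypothetical.

```python
import numpy as np


def wrap_angle(a):
    """Map an angle to the interval [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi


def ekf_slam_update(mu, P, z, landmark_index, R):
    """One EKF correction using z = [range, bearing] of a known landmark.

    mu : state mean [x, y, theta, l1x, l1y, ...]
    P  : state covariance
    R  : 2x2 measurement noise covariance (assumed, not from the paper)
    """
    mu = np.asarray(mu, dtype=float).copy()
    j = 3 + 2 * landmark_index          # start of this landmark in the state
    dx = mu[j] - mu[0]
    dy = mu[j + 1] - mu[1]
    q = dx * dx + dy * dy
    r = np.sqrt(q)

    # Predicted measurement from the current estimate.
    z_hat = np.array([r, wrap_angle(np.arctan2(dy, dx) - mu[2])])

    # Jacobian of the measurement with respect to the full state.
    H = np.zeros((2, mu.size))
    H[:, 0:3] = [[-dx / r, -dy / r, 0.0],
                 [dy / q, -dx / q, -1.0]]
    H[:, j:j + 2] = [[dx / r, dy / r],
                     [-dy / q, dx / q]]

    innovation = np.asarray(z, dtype=float) - z_hat
    innovation[1] = wrap_angle(innovation[1])

    S = H @ P @ H.T + R                 # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)      # Kalman gain
    mu = mu + K @ innovation
    mu[2] = wrap_angle(mu[2])
    P = (np.eye(mu.size) - K @ H) @ P
    return mu, P


# Synthetic example: well-known robot pose, poorly known landmark.
mu0 = np.array([0.0, 0.0, 0.0, 1.0, 1.0])
P0 = np.diag([1e-6, 1e-6, 1e-6, 1.0, 1.0])
R = np.diag([1e-4, 1e-4])
true_landmark = np.array([1.2, 0.9])
z = np.array([np.hypot(*true_landmark),
              np.arctan2(true_landmark[1], true_landmark[0])])

mu1, P1 = ekf_slam_update(mu0, P0, z, 0, R)
print(mu1[3:5])
```

A full loop would also need a motion-model prediction step and a rule for adding newly detected microphones to the state, neither of which is shown here.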

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| SNR | x-Direction | y-Direction |
|---|---|---|
| No noise | 0.214 m | 0.106 m |
| 65 dB | 0.365 m | 0.206 m |
| 50 dB | 0.651 m | 0.378 m |

| SNR | x-Direction | y-Direction |
|---|---|---|
| No noise | 0.098 m | 0.152 m |
| 65 dB | 0.105 m | 0.165 m |
| 50 dB | 0.452 m | 0.219 m |

| | Actual Position | Estimated Position | x-Error | y-Error |
|---|---|---|---|---|
| Robot’s Position | (0, 0) | (0, 0) | 0 | 0 |
| | (0, 0.586) | (0, 0.586) | 0 | 0 |
| | (0, 1.172) | (0.021, 1.177) | 0.021 | 0.005 |
| | (0, 1.758) | (0.116, 1.806) | 0.116 | 0.048 |
| | (0, 2.344) | (0.185, 2.400) | 0.185 | 0.056 |
| | (0, 2.930) | (0.187, 3.045) | 0.187 | 0.115 |
| | (0, 3.516) | (0.201, 3.643) | 0.201 | 0.127 |
| | (0.586, 3.516) | (0.785, 3.646) | 0.199 | 0.130 |
| | (1.172, 3.516) | (1.371, 3.646) | 0.199 | 0.130 |
| | (1.758, 3.516) | (1.840, 3.646) | 0.082 | 0.130 |
| Standard Deviation | | | 0.132 | 0.082 |

| | Actual Position | Estimated Position | x-Error | y-Error |
|---|---|---|---|---|
| Landmarks | (−1.775, 0.135) | (−1.788, 0.114) | 0.013 | 0.249 |
| | (−1.775, 1.157) | (−1.646, 1.347) | 0.129 | 0.190 |
| | (−1.775, 1.833) | (−1.606, 1.993) | 0.169 | 0.160 |
| | (−1.775, 2.735) | (−1.579, 2.873) | 0.196 | 0.138 |
| | (−1.775, 4.092) | (−1.568, 4.234) | 0.207 | 0.142 |
| | (−0.915, 5.479) | (−0.712, 5.617) | 0.203 | 0.138 |
| | (−0.125, 5.479) | (0.087, 5.607) | 0.212 | 0.128 |
| | (0.695, 5.479) | (0.898, 5.598) | 0.203 | 0.119 |
| | (−1.775, 5.187) | (−1.554, 5.332) | 0.221 | 0.145 |
| | (1.685, 5.479) | (1.887, 5.589) | 0.202 | 0.110 |
| Standard Deviation | | | 0.195 | 0.169 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, X.; Sun, H.; Zhang, H. A New Method of Simultaneous Localization and Mapping for Mobile Robots Using Acoustic Landmarks. Appl. Sci. 2019, 9, 1352. https://doi.org/10.3390/app9071352
