1. Introduction

A virtual environment is defined as the awareness of an environment's contents through synthetic sensory information that is perceived as if it were not synthetic [1]. Feeling immersed in this type of environment requires the user to be in a psychological state in which he or she feels included in the environment.

Many researchers have aimed to enhance the immersive experience for users of virtual environments by attempting to mimic the real environment. Since we perceive our surroundings through our senses in the real environment, the absence of any sense affects our engagement with the environment. The same holds in the virtual environment: a player's presence increases with the number of senses that are active during the experiment [2]. Thus tactile, olfactory, audio and visual sensory cues stimulate the senses as the real environment does and give the user a strong sense of presence in the virtual environment [3].

However, among the sensory cues, olfactory cues are the least used to enrich the user's experience of the virtual environment [4]. This is mainly due to slow progress, especially in emitting scents when needed, and the resulting failure to integrate smells with many software applications [5]. Moreover, the absence of a simple and robust device that can emit scents hinders the use of smell compared with the visual and auditory sensory modalities [6]. The device responsible for delivering scents to the user during such experiments is called an olfactory display. Olfactory displays are controlled by a computer and deliver odorants to the human olfactory organ [7]. Very few olfactory displays are commercial, and those are mainly used in cinemas, such as Olorama [8].

In the literature, several olfactory displays release scents at preset times. This method works well if we know which scents the user will come across, as in movies. One major issue with this approach is that in virtual environments such as games or virtual reality applications, it is hard to predict where the player will go or what the player will do next, and hence which scent should be released. Nonetheless, it is possible to improve the timing of scent release by associating it with the contents of the virtual environment. To the best of our knowledge, no previous study has fully implemented a content-aware solution that can be generalized. With this goal, this work seeks to develop an olfactory display that uses the Inception-V3 model, a deep learning approach to image recognition, to recognize the displayed content and release the corresponding scents. The data used in the study come from the Minecraft computer game [9].

The main objective of the study is to propose a new approach for associating scents with the visual content of the virtual environment. The findings should make an important contribution to the field of virtual reality by potentially offering a more immersive experience. Additionally, this work contributes to artificial intelligence by showing that a pre-trained model such as the Inception model can be used to solve such multilabel classification problems without the need to develop and train a new CNN from scratch.

This paper is divided into four parts. The first part is a literature review covering the different types of olfactory displays. The second part covers the methods and materials and describes the system design. The third part presents the results and discussion, while the last part gives the conclusions and future work.

2. Literature Review

According to the literature, there are two main types of olfactory displays: wearable devices and devices placed in the environment. Wearable devices are attached to the user's body or head [10]. This type of display ensures the delivery of scents to the user, but it has the drawback that the user is aware of the device, which can cause discomfort, and therefore the experience may not be as immersive as it should be. On the other hand, olfactory displays placed in the environment do not disturb the user, since the user does not have to wear any hardware. However, a key limitation of this type is that the scent may not reach the user, especially if the device is placed far from the user or if air movement weakens the scent.

Each type is subdivided into different categories. The external devices, also known as “placed in environment”, are divided according to the scent generation technique used: air cannon, natural vaporization and air flow. The wearable devices are divided according to their placement on the user into “body” and “head mounted”.

The paper in [11] introduces an olfactory display called inScent. A wearable device worn as a necklace, it emits different scents upon receiving a mobile notification, a concept known as scentification, in which scents are used for delivering information. The scents are emitted automatically in different scenarios: based on a predefined name, on the contents of a message, or on timing, as in calendar events. Scents are chosen according to the user's preference, so the cartridge can be exchanged. To generate and control the amount of scent produced, the device uses heating, followed by fan-driven air that delivers the scent to the user. Heating in a wearable device can be risky, and the fan produces a loud sound that can cause discomfort for the user.

Recently, several researchers focused on designing a fashionable wearable device known as Essence [12]. They are developing a lightweight necklace-shaped olfactory display that is comfortable enough to be worn on a daily basis and is controlled wirelessly via an Android application. The device can release scent manually, when the user pulls down a string that sends data triggering the release, or in response to data received from the smartphone, such as location and time. It can also release scent based on heart rate, brain activity or electrodermal activity, and it can be controlled by another person to release the scent. However, the device can only release one scent, so it is not practical for releasing scents that match the user's circumstances.

The Smelling Screen device in [13] generates scent based on the image shown on a Liquid Crystal Display (LCD). Four fans placed at the corners of the screen produce airflow that indicates the position of the image on the screen. The device was not operated with games, applications or movies, but rather with random images shown at different corners of the screen.

An inexpensive olfactory display has been developed in [14]. The device uses the Arduino Uno microcontroller, as it is economical and capable of controlling the olfactory display, and fans generate the airflow for scent delivery. The device was tested in different experiments involving games, advertising and procedural memories. For gaming, the authors used the Unity-based Tuscany environment, which they had to modify by adding a bowl of oranges that released the scent once the player came near it. However, this approach is not generic, as most games are not designed to support olfactory displays. The researchers also developed their own application, presented in [15].

Researchers in [16] present an olfactory display that is simple, economical and capable of releasing 8 scents based on timed events. The scent is generated by a heating process that vaporizes the essential oils, as well as water, which is used to clear the air of previous scents. Along with the olfactory display, they also created software for selecting the list of scents and controlling the speed of the fans and the intensity of the aromas. However, based on their experiments, the heating process takes 6 s before the scent is released, so the release is not instant.

Olfactory displays have been used in many studies on synchronizing movies with scents. The commercial olfactory display known as “The Vortex Active” was used in research [16] that aims to synchronize a movie clip with specific scents. This synchronization-based olfactory display releases scents at set times. The device is installed in the environment and uses fans to deliver the scents to the users. It can release up to four scents at a time and is connected to the computer via USB in order to set the timing of the scent release.

The study in [17] uses an olfactory display called Exhalia SBi4. The researchers used six scents selected according to the chosen movie clips and synchronized the scents with them. The device used fans to deliver the scents to the users and could release up to four scents at a given time.

Another work [5] alters the contents of the film by adding a subtitle, in the form of a logo with different colors, that is used to trigger the release of the scents. The researchers built an olfactory display called Sub Smell. The system uses a different color in every scene; when the movie is played, the machine identifies the logo, analyzes its color and releases the corresponding scent.

In [

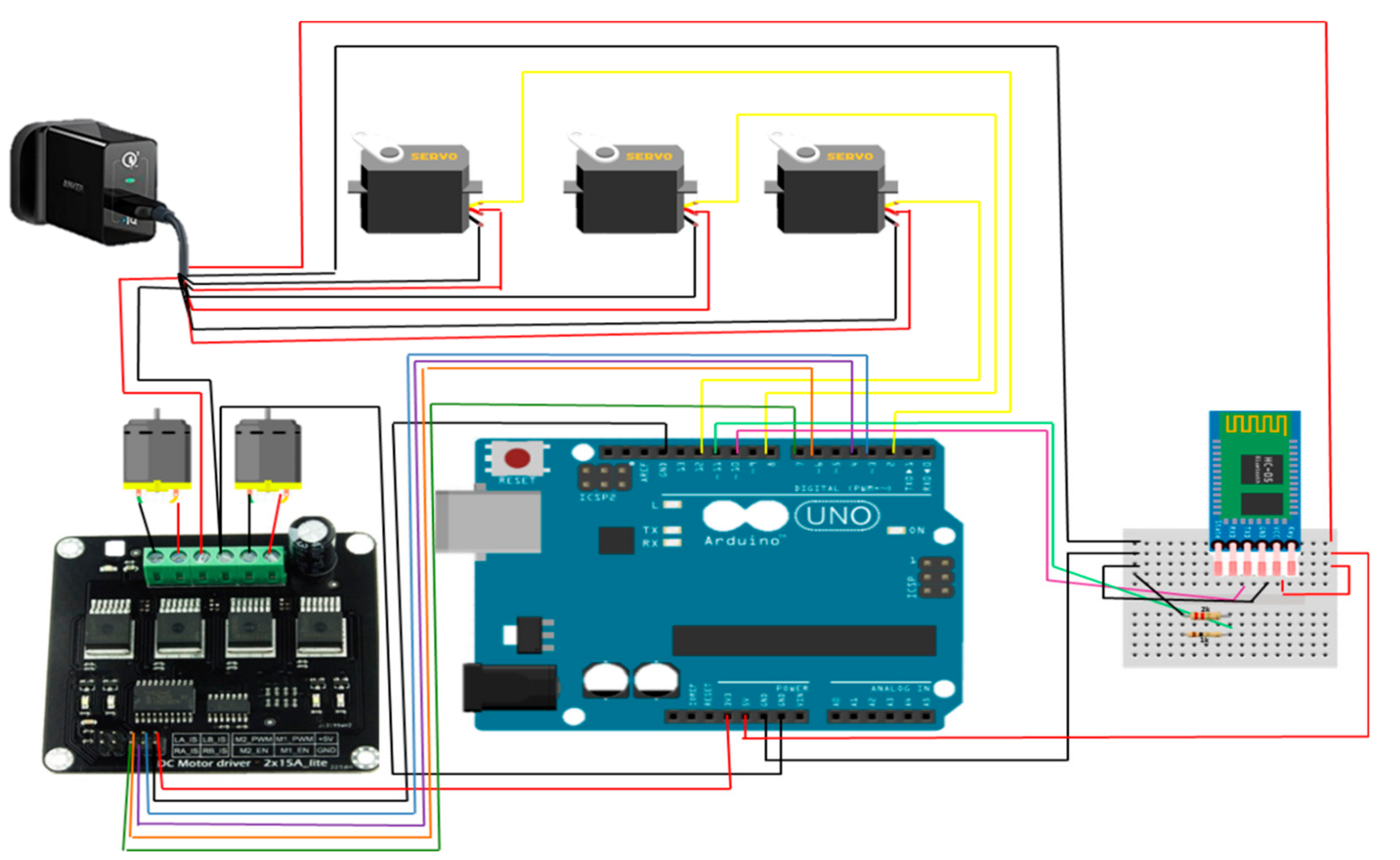

18], the authors developed a device for research purposes. The device is controlled by Arduino, and has three servo motors to press the jars of scents after sending the command through a wire connection from the Arduino. The advantage of this device is that due to its innovative design, each servo can press release two scents. Additionally, the device can be easily expanded. The device utilized fans to spread the scent but also to absorb when it should not be present. They developed two applications that use their device. One C# based application that utilizes timers to release scents and a unity Asset that allows games that use it to release scents when the player was in a certain area. Even though this device has the advantage of being low cost, like the other olfactory devices, the releasing of scents depends on the specific time or game, which is a problem we overcame with the proposed approach.

The literature shows that existing olfactory displays are not used in games or VR applications because of the difficulty of identifying the order in which scents should be released. The few olfactory displays that are used in games depend on creating a game for the device and defining the objects a player might collide with, which are then used to release the scents.

Table 1 below shows a comparison of the different olfactory displays covered in the literature.

4. System Evaluation and Results

The model was retrained on a Windows 10 PC with 8 GB of RAM and an Intel Core i7 processor. Training was set to 20,000 steps, based on the default value, and took an hour. To evaluate the retrained model, we calculated its accuracy on new images from the Minecraft game that had not been used for training. There were 90 images, 10 for each class. We also calculated the recall, precision and F-score.

4.1. Accuracy of Retrained Model

To evaluate the accuracy before integrating the model into our application, we provided the model with ten testing images for each class. The learning rate was set to 0.01 and the batch size to 100.

Table 2 shows the results obtained for each testing image. For the dirt images, the accuracy varied; nonetheless, the model predicted the correct label. The grass and ocean classes gave the highest accuracy compared with the rest of the classes. However, dark ocean images (taken after setting the in-game time to night) gave lower scores. For the fire images, even though we reached an accuracy score of 0.99 in a few testing images, most scores ranged between 0.6 and 0.7, owing to the difficulty of collecting images from the game that contained only fire; fire was usually found in images that also contained grass. For images containing snow, the color of the snow was similar to that of the ocean and sky in some of the weather conditions provided by the game, which caused the accuracy to drop in many of the testing images. If the model were fed more training images covering all conditions, the accuracy would be much higher. In fact, increasing the number of training images in all classes would increase the overall accuracy of the whole model. The images that contained zombies were associated with the scent of mildew to represent an unpleasant scent. Compared with the other classes, the zombie class had the lowest scores when tested in the application, mainly because of the difficulty of training the system on high-resolution images that show zombies alone.

In some cases, we wanted two scents to be released; therefore, we trained the model to recognize three combined cases. We selected these three cases based on how frequently they appear in the game.

Table 3 shows the results for these images. In the first case, the image contains both grass and ocean, and we wanted both classes to have high accuracy; as in the previous cases, we provided the model with 10 testing images. The model had high accuracy for both the ocean and grass classes. However, for the images that contained both grass and ocean, the grass class gave higher scores than the ocean class. This is consistent with the images that contain only grass or only ocean, where grass was classified with higher scores than ocean. In the second case, where both scents should be released when the image contains both grass and fire, only one image gave an accuracy of 0.99 for both fire and grass. As with the grass and ocean images, the accuracy score for grass was higher than that for fire. This matches the accuracy of the fire and grass classes alone: in many cases grass had a much higher score than fire. The last case was an image containing both a zombie (mildew) and grass; the results showed higher accuracy for grass than for the mildew (zombie) class in all ten images. However, the mildew class got higher scores when combined with grass than when classified alone. In all three cases, both scents were released during gameplay. Thus, it is important to have high accuracy for both classes in order to achieve this.

Figure 6 shows samples of the images used to test the accuracy of the model before integrating it with the Windows application.

4.2. Accuracy of the Model within the Application

After integrating the CNN model into the Windows application, we ran the application while playing the game and measured the time taken to recognize each captured image and its accuracy, as illustrated in Table 4.

As the results show, recognizing an image takes a few seconds, which lets the application run in almost real time. Scents were released only when the accuracy was at least 90%.
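The release rule can be sketched as a simple threshold on the per-class scores. This is a minimal illustration under stated assumptions, not the application's actual code: the function name `scents_to_release`, the label-to-scent mapping and the example scores are hypothetical. Only the 0.90 threshold and the zombie-to-mildew association come from the text.

```python
# Minimal sketch of threshold-based scent release (all names are hypothetical).
RELEASE_THRESHOLD = 0.90  # from the text: release only at 90% or higher

# Assumed mapping from recognized class label to scent name.
# Only zombie -> mildew is stated in the text; the other entries are placeholders.
LABEL_TO_SCENT = {
    "grass": "grass",
    "ocean": "ocean",
    "fire": "smoke",
    "zombie": "mildew",
}


def scents_to_release(scores, threshold=RELEASE_THRESHOLD):
    """Return the sorted scents whose class score meets the threshold.

    `scores` maps class labels to classifier scores in [0, 1]. Several labels
    may pass at once, which corresponds to the two-scent cases.
    """
    return sorted(
        LABEL_TO_SCENT[label]
        for label, score in scores.items()
        if label in LABEL_TO_SCENT and score >= threshold
    )


if __name__ == "__main__":
    # Hypothetical scores for one captured frame showing grass next to the ocean.
    frame_scores = {"grass": 0.97, "ocean": 0.93, "fire": 0.12, "zombie": 0.40}
    print(scents_to_release(frame_scores))
```

Because each label is checked independently, a frame in which two classes both pass the threshold triggers both scents, while a frame with no confident class triggers none.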

4.3. Precision, Recall and F-Score

To compute the precision and recall, we built a confusion matrix for each label, given in Table 5, Table 6, Table 7, Table 8, Table 9 and Table 10, accepting a prediction according to its score with a threshold value of 0.5. We then applied the micro-average and macro-average methods to obtain the model's precision, recall and F-score performance evaluation metrics. Each label had 10 testing images.
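As an illustration of how per-label confusion matrices can be derived from thresholded scores, the sketch below counts true positives, false positives, false negatives and true negatives for each label. The function name, the data layout and the example values are hypothetical, and treating a score equal to 0.5 as a positive prediction is an assumption.

```python
from collections import namedtuple

# Confusion counts for one label: true/false positives and negatives.
Counts = namedtuple("Counts", "tp fp fn tn")


def confusion_counts(y_true, y_score, labels, threshold=0.5):
    """Build one binary confusion matrix per label.

    y_true  : list of sets, the true labels of each test image
    y_score : list of dicts, label -> classifier score for each test image
    A label counts as predicted when its score is >= threshold (assumed rule).
    """
    result = {}
    for label in labels:
        tp = fp = fn = tn = 0
        for truth, scores in zip(y_true, y_score):
            predicted = scores.get(label, 0.0) >= threshold
            actual = label in truth
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
            else:
                tn += 1
        result[label] = Counts(tp, fp, fn, tn)
    return result


if __name__ == "__main__":
    # Three made-up test images; the second contains two labels.
    y_true = [{"grass"}, {"grass", "ocean"}, {"fire"}]
    y_score = [
        {"grass": 0.9, "ocean": 0.2},
        {"grass": 0.8, "ocean": 0.4},
        {"fire": 0.6, "grass": 0.7},
    ]
    for label, counts in confusion_counts(y_true, y_score, ["grass", "ocean", "fire"]).items():
        print(label, counts)
```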

For every class we computed the precision and recall. The results in Table 11 have been produced by the following formulas:
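Assuming the standard per-class definitions, with TP, FP and FN denoting the numbers of true positives, false positives and false negatives taken from a label's confusion matrix, and assuming the F-score is the balanced F1 measure, these can be written as:

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F-score}   &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```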

Then we applied the micro-average and macro-average methods, which produced the results in Table 12.
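The difference between the two averaging methods can be sketched as follows. This is an illustration under assumptions rather than the evaluation script used in the study: the function names and the example counts are hypothetical, and the macro F-score is taken here as the mean of the per-label F-scores, which is one common convention.

```python
def precision_recall_f(tp, fp, fn):
    """Precision, recall and F1 from raw counts (0.0 when a ratio is undefined)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score


def macro_average(counts):
    """Average the per-label metrics, giving every label equal weight."""
    per_label = [precision_recall_f(tp, fp, fn) for tp, fp, fn in counts.values()]
    n = len(per_label)
    return tuple(sum(metric[i] for metric in per_label) / n for i in range(3))


def micro_average(counts):
    """Pool the counts over all labels first, then compute the metrics once."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return precision_recall_f(tp, fp, fn)


if __name__ == "__main__":
    # Hypothetical (tp, fp, fn) counts for two labels; not the paper's data.
    example = {"grass": (9, 1, 1), "fire": (6, 2, 4)}
    print("macro:", macro_average(example))
    print("micro:", micro_average(example))
```

Macro-averaging weights every label equally, so a weak class lowers the score noticeably. Micro-averaging pools the counts and weights every prediction equally instead.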

The overall accuracy was 97%, with individual accuracies reaching as high as 99%. This overall accuracy is satisfactory for the needs of the olfactory display. Furthermore, the precision and recall are close to 0.9 in most cases, which supports the validity of this approach.

5. Conclusions and Future Work

The study presented an economical placed-in-environment olfactory display capable of releasing 6 scents, a number that can easily be extended. The olfactory display can be used with movies but, most importantly, it can be used with games and interactive content, where using time to decide when to release the scents is not possible. The device associates scents with live images of the virtual environment by using a Convolutional Neural Network for image recognition. The study has shown that automatic association of scents with content is possible and has solved the problem of releasing scents in games when the player teleports from one place to another. The device is controlled by a Windows application, which connects to the device via Bluetooth and lets the user set how frequently scents are released after an image is recognized.

The current version is not generic and requires the system to be trained for each target virtual artefact and for each title separately. Training the system for each game title is possible with a small team of experts and is certainly not a show stopper.

Further work is needed to add sound recognition alongside image recognition, which could further improve the user's experience. A natural progression of this work is to enhance the accuracy of the model by feeding it more training data. Finally, improving the design of the olfactory display is possible future work.