Abstract

The question of the possible impact of deafness on temporal processing remains unanswered, and findings based on behavioral measures are contradictory. The goal of the present study is to analyze the brain activity underlying time estimation using functional near-infrared spectroscopy (fNIRS), which allows examination of the frontal, central and occipital cortical areas. A total of 37 participants (19 deaf) were recruited. The experimental task involved processing a road scene to determine whether the driver had time to safely execute a driving maneuver, such as overtaking. The road scenes were presented either as animations or as sequences of three static images showing the beginning, mid-point and end of a situation. The latter presentation required a clocking mechanism to estimate the time between samples in order to evaluate vehicle speed. The results show greater frontal activity in deaf participants, which suggests that more cognitive effort is needed to process these scenes. The central region, which several studies have linked to clocking, was particularly activated by the static presentation in deaf participants during the estimation of time lapses. Exploration of the occipital region yielded no conclusive results. Our results on the frontal and central regions encourage further study of the neural basis of time processing and its links with auditory capacity.

1. Introduction

Current findings from research on deafness and temporal skills are often contradictory. The temporal dimension is of particular interest because hearing is the sense with the highest resolution for estimating durations. Hearing therefore appears to be essential for time processing, even when stimuli are presented via other modalities [1,2]. In addition, some experiments show that stimulus sequences are easier to recall when they are presented in the auditory modality [3,4].

These contradictory conclusions can be at least partially explained by the fact that “temporal skills” can encompass different notions, depending on the type of protocol used to examine time-related issues, as suggested by [5]. While some studies have evaluated the ability to reproduce temporal sequences [6,7,8], others have focused on the ability to estimate the duration of time intervals [5,9,10]. Results from the first category are extremely heterogeneous. There is more of a consensus among studies in the second category, which have shown, for instance, that deaf adults have difficulty carrying out time estimation tasks based on visual stimuli [10]. Similar results were found in other studies using visual stimuli [9] and the tactile modality [5]. Therefore, to elucidate the origins of potential difficulties in time processing, it is essential to focus on specific mechanisms, such as clocking. Experimental tasks studying the ability to estimate time interval duration are usually based either directly on the estimation of a time period, or on time-to-contact judgments. Either way, these tasks are usually carried out in laboratory settings, with strict constraints on stimulus characteristics. In addition, the variables used to draw conclusions are often task performance and behavioral or subjective self-reported measures [11,12,13]. This could bias the results if confounding variables intervene in task execution. In some cases, therefore, the generalization of the results to daily-life activities remains uncertain, which makes it difficult to use them to help deaf people.

To overcome these limitations, in the present work we recorded brain activity during a decision-making task involving road scenes. There is growing interest in studying brain activity in daily situations, such as driving, that require time estimation and motion prediction. Earlier studies have, for instance, examined electrical brain activity during overtaking, a situation in which accurate motion prediction is crucial for safety [14]. According to [12], motion prediction results from two cognitive operations: cognitive motion extrapolation (CME) and a clocking mechanism. In driving, the first operation relies on a mental model of vehicle movement, which is then extrapolated to predict how the situation will evolve. The second operation, which we manipulated in the present study, consists of estimating time to contact in advance. Both processes have temporal components, since speed estimation is essential to build a mental representation of dynamism for the CME, and a time judgment is needed for the clocking mechanism.

In the present study, we used the same protocol as in [15] to design two conditions, each involving a qualitatively different clocking mechanism. The conditions were defined by the presentation format of the road situations: either an animated video or a sequence of static images. According to the Attentional Theory of Cinematic Continuity (AToCC), the continuous flow of information in an animation makes it easier to construct a CME than a motion-sampled presentation does, thanks to the attentional guidance of perceptual and cognitive processing provided by the dynamic presentation [7,16]. Several empirical studies have reported behavioral and eye-movement results consistent with the AToCC, for example in multimedia learning [17,18], segmentation and comprehension of movies and films [19,20], visual narrative processing [21], and event prediction [22]. In contrast, motion sampled by means of a sequence of static images forces the viewer to make more elaborate spatiotemporal inferences. In road situations, a time judgment, and therefore a clocking mechanism, is needed to infer vehicle speed when dynamic information is not provided continuously. Although numerous behavioral studies have been carried out to infer these processes, physiological and brain measures can provide objective evidence of these difficulties, or redirect research towards other factors that may influence, for example, decision times or performance.

In the present work, we used functional near-infrared spectroscopy (fNIRS) to study brain activity linked to the clocking mechanism and to cognitive effort [23,24]. fNIRS is a noninvasive technique that infers cortical activity by measuring changes in the absorption of near-infrared light by hemoglobin in the blood of the superficial cortex. It provides better temporal resolution than fMRI [25,26] (~250 ms) and is less sensitive to motion artifacts than EEG [27]. Furthermore, fNIRS measurements are limited to the cortex, so, unlike EEG, no contribution of subcortical regions is expected in the signals. The cortex is the most relevant brain region for studying the cognitive processes mentioned above. fNIRS has the advantage of being portable and easy to set up, and is therefore a promising technique that is likely to be used in real-life settings to monitor users’ cognitive state in the near future [28,29]. In addition, fNIRS is completely innocuous and has proven suitable for brain-computer interfaces [30,31], through neurofeedback, and for the study of sensitive populations, such as newborns [32], deaf children [33], and patients with Alzheimer’s disease [34] or Parkinson’s disease [35]. Although this technique is usually employed in block-based designs, an event-related approach, such as the one presented here, is also suitable. Our framework allows us to determine whether cortical activity in different brain regions differs significantly between deaf people and people with normal hearing when the clocking mechanism is engaged [12] during the evaluation of road scenes.

To sum up, based on previous research on the neural substrates of the clocking mechanism [36], and on recent literature on attentional difficulties [37] and brain plasticity due to hearing loss or deafness [38], our hypotheses concerning the different brain regions studied (see Section 2.4 for details) are as follows: (1) evoked hemodynamic responses in the frontal region will be higher in deaf participants, owing to greater cognitive effort when making spatiotemporal inferences [5,9]; (2) hemodynamic responses in the central region will be higher for sequences of static images than for animations, because the clocking mechanism is required and its underlying neural substrate lies in the pre-supplementary motor [36,39,40] and sensorimotor areas [41], both of which are located in the central brain region; (3) because deaf people are attracted to spatial elements in tasks involving the estimation of time intervals [10] and have more highly developed visual skills [42], we explored the occipital region to find out whether hemodynamic responses differed between deaf and hearing participants, reflecting, for example, deeper visual processing by deaf participants to compensate for difficulties in time estimation.

2. Materials and Methods

2.1. Participants

A total of 19 bilaterally deaf or severely hearing-impaired individuals (age: M = 28.1 years, SD = 8.8, 9 male) and 18 hearing individuals (age: M = 26.1 years, SD = 6.6, 10 male) participated in the study. Information about deafness onset, hearing aids and language of the deaf participants is presented in Table 1. All participants had normal or corrected-to-normal vision. None of them reported a history of psychological or neurological disorders, and none had a cochlear implant. They were all taking traffic-rule lessons at an accredited driving school in France and preparing for the Highway Code examination in order to obtain their driving license. All participants gave their informed consent for inclusion. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the French Biomedical research ethics committee.

Table 1.

Demographic information about deaf participants.

2.2. Task Involving Clocking

A decision-making task requiring spatiotemporal inferences was used. Participants were positioned in the driving seat and had to decide whether or not they had enough time to accomplish a driving action involving other vehicles: overtaking, entering a roundabout, joining a highway or crossing an intersection. Participants had to respond “Yes, I have time” or “No, I don’t have time” as quickly as possible, either during the presentation or at the end of the stimulus, using the two answer keys “S” and “L” on a keyboard, which were marked with colored stickers (blue and yellow).

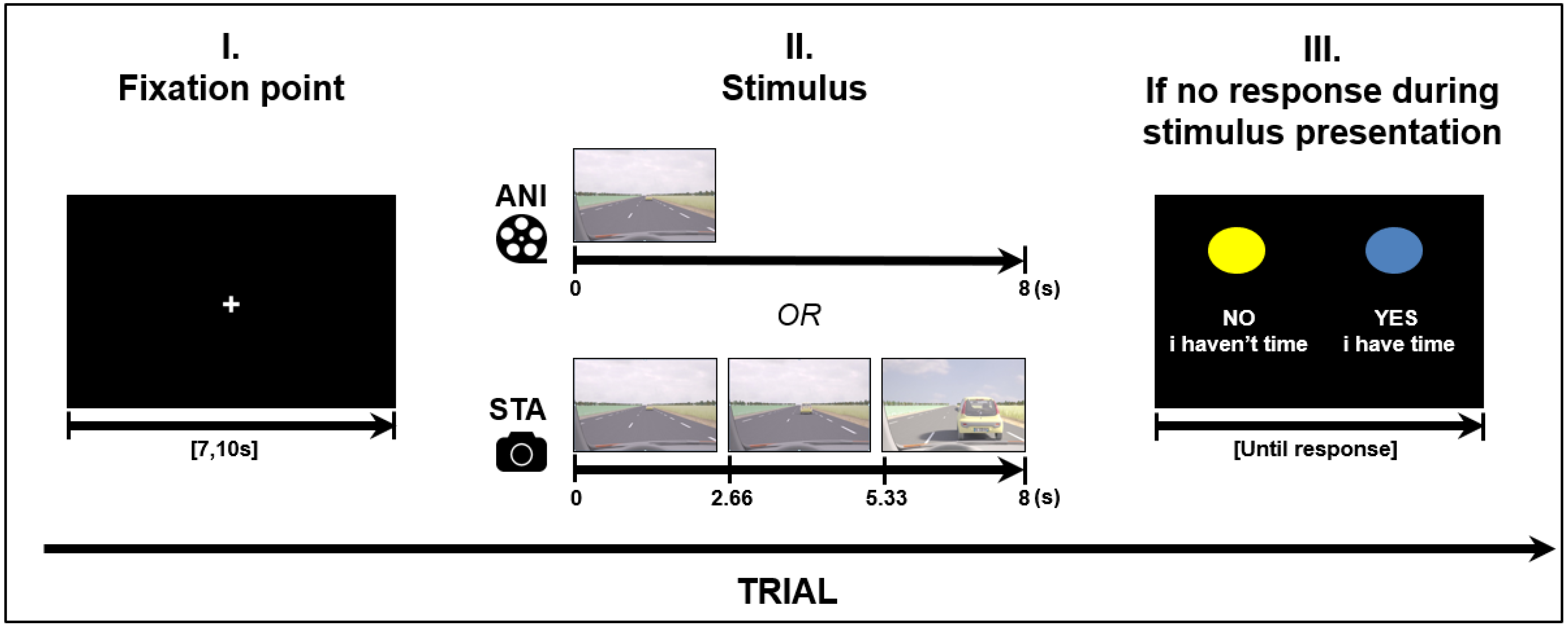

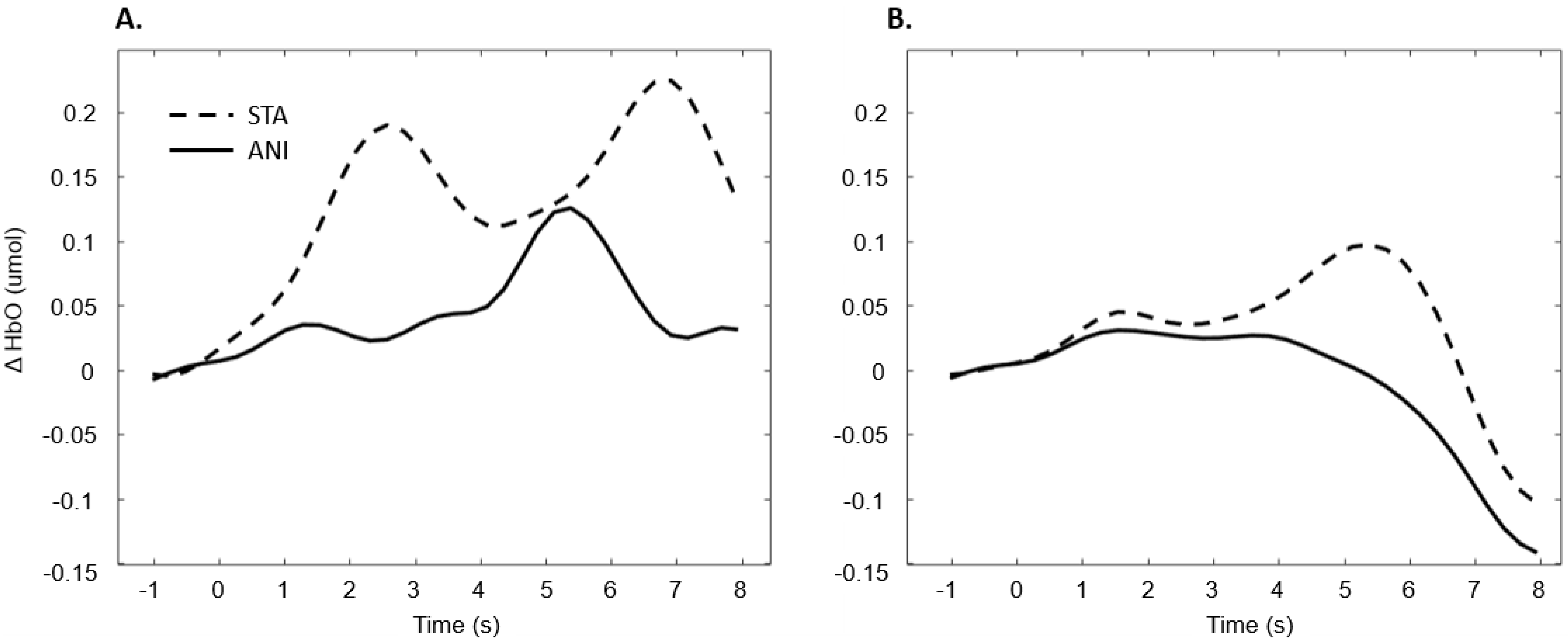

Road situations were presented for 8 s in two different formats: animated (ANI), which consisted of an animated movie; and static image sequences (STA), which consisted of three static pictures (each presented for 2.66 s) extracted from other animations (the first, middle and last pictures). To make a decision in the static format, participants needed to judge the time elapsed between images and estimate vehicle speed from the changes in the spatial cues. A schema of the stimulus presentation is depicted in Figure 1.
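The speed inference demanded by the static format can be illustrated with elementary kinematics. The Python sketch below is only an illustration under stated assumptions: the other vehicle moves at constant speed towards a conflict point, the three frames are 2.66 s apart, and the distances are hypothetical values, not measurements from the stimuli. It is not the authors’ stimulus-generation code, nor a model of what participants actually computed.

```python
"""Sketch: inferring vehicle speed and time to contact from three static frames."""

FRAME_INTERVAL_S = 2.66  # onset-to-onset interval between the three static images


def estimate_speed(distances_m, interval_s=FRAME_INTERVAL_S):
    """Average speed (m/s) from distances to a conflict point sampled at a fixed interval.

    distances_m: hypothetical distance of the other vehicle to the conflict point
    in each frame (the study does not report such values).
    """
    if len(distances_m) < 2:
        raise ValueError("need at least two frames")
    covered = distances_m[0] - distances_m[-1]          # distance travelled
    elapsed = interval_s * (len(distances_m) - 1)       # time between first and last frame
    return covered / elapsed


def time_to_contact(distances_m, interval_s=FRAME_INTERVAL_S):
    """Time (s) from the last frame until the vehicle reaches the conflict point.

    Assumes constant speed; returns infinity if the vehicle is not approaching.
    """
    speed = estimate_speed(distances_m, interval_s)
    if speed <= 0:
        return float("inf")
    return distances_m[-1] / speed


if __name__ == "__main__":
    frames = [100.0, 80.0, 60.0]  # hypothetical distances (m) in the three images
    print(f"total presentation: {3 * FRAME_INTERVAL_S:.2f} s")
    print(f"estimated speed: {estimate_speed(frames):.2f} m/s")
    print(f"time to contact after last frame: {time_to_contact(frames):.2f} s")
```

In the animated format, by contrast, speed is conveyed continuously, so no explicit interval judgment of this kind is needed.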

Figure 1.

Structure of a trial (road scene presentation), ANI: Animated format; STA: sequence of static images.

Four road situations requiring motion prediction were created: overtaking, entering a roundabout, joining a highway and crossing an intersection. These driving situations were modelled with 3D Studio software in accordance with French road norms. A judgment task, performed beforehand by expert drivers, was used to validate the “yes” and “no” responses in complex situations. The experiment was programmed in E-Prime 2.0 and presented on a 23-inch screen (1920 × 768), in the same pseudo-randomized order for every participant. A total of 32 different stimuli were created for each format. The order was constructed so that the same road situation, or the same format, was never presented consecutively. Each trial started with a white fixation point in the middle of a black screen, with a variable duration of 7, 7.5, 8 or 10 s. This variable duration acts as a jitter to avoid periodic physiological artefacts, such as Mayer waves, in the fNIRS signal (see Section 2.4 for fNIRS data processing).
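One way to build an order satisfying the two sequencing constraints above is a randomized greedy draw with restarts. The sketch below assumes, beyond what the text states, that the 32 stimuli of each format are split evenly over the four road situations and that fixation durations are drawn at random from the four listed values; the names `make_order`, `PER_CELL` and `JITTER_S` are hypothetical. Note that, with only two formats, forbidding consecutive repeats of format forces ANI and STA to alternate strictly.

```python
"""Sketch: a pseudo-randomized trial order under the two sequencing constraints."""
import random

SITUATIONS = ["overtaking", "roundabout", "highway", "intersection"]
FORMATS = ["ANI", "STA"]
PER_CELL = 8                      # assumption: 32 stimuli per format / 4 situations
JITTER_S = [7.0, 7.5, 8.0, 10.0]  # fixation durations listed in the text


def make_order(seed=0, max_restarts=1000):
    """Return a list of (format, situation, fixation_s) tuples.

    Constraint: no two consecutive trials share a format or a road situation.
    Cells with more remaining stimuli are drawn more often, which reduces dead
    ends; when a dead end occurs, the whole draw restarts.
    """
    rng = random.Random(seed)
    for _ in range(max_restarts):
        pool = {(f, s): PER_CELL for f in FORMATS for s in SITUATIONS}
        n_trials = sum(pool.values())
        order = []
        prev_f, prev_s = None, None
        while len(order) < n_trials:
            options = [
                cell for cell, left in pool.items()
                if left > 0 and cell[0] != prev_f and cell[1] != prev_s
            ]
            if not options:
                break  # dead end: start a fresh draw
            f, s = rng.choices(options, weights=[pool[c] for c in options])[0]
            pool[(f, s)] -= 1
            order.append((f, s, rng.choice(JITTER_S)))
            prev_f, prev_s = f, s
        else:
            return order  # loop finished without a dead end
    raise RuntimeError("no valid order found")


if __name__ == "__main__":
    for trial in make_order(seed=1)[:6]:
        print(trial)
```

Fixing the seed reproduces the same order for every participant, as in the study design.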

2.3. Experimental Setting

Participants carried out the experiment individually in a single session. Once the participant was comfortably seated, the fNIRS cap was fitted on their head and the lights were dimmed. Calibration was performed with NIRStar software version 15.3 to ensure good signal quality in terms of signal-to-noise ratio (SNR) and gain. Conductive gel was applied to the participant’s hair when necessary. Eye movements were recorded simultaneously with an eye-tracker (Tobii TX300) for further analysis. An overcap was used to prevent possible interference from the eye-tracker on the fNIRS signal. Instructions were then presented on a 23-inch screen and explained orally to hearing participants, and in French Sign Language to deaf participants by a qualified sign language interpreter. The fNIRS recording lasted approximately 34 min on average, including response times and baseline.

2.4. fNIRS Data Processing

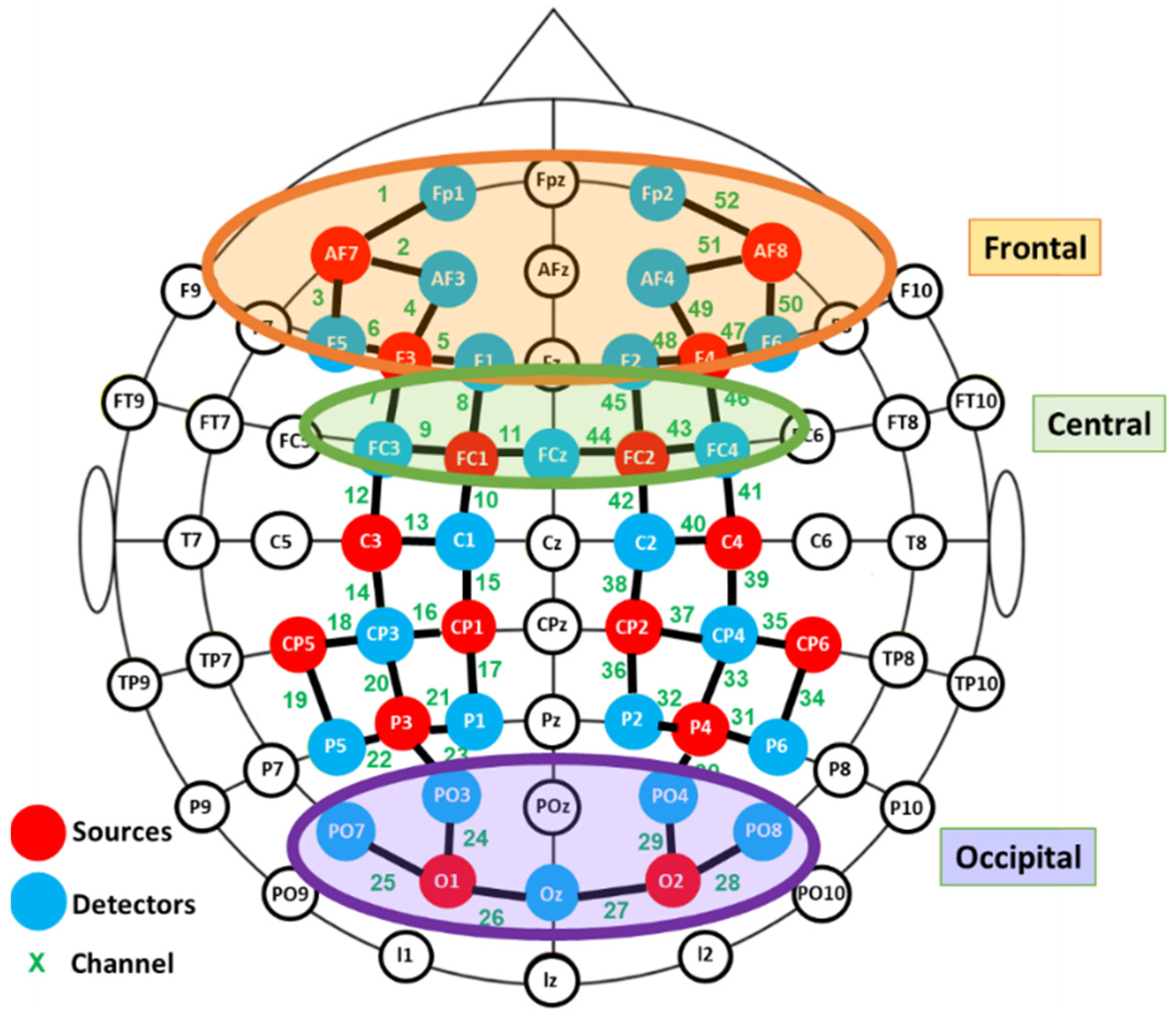

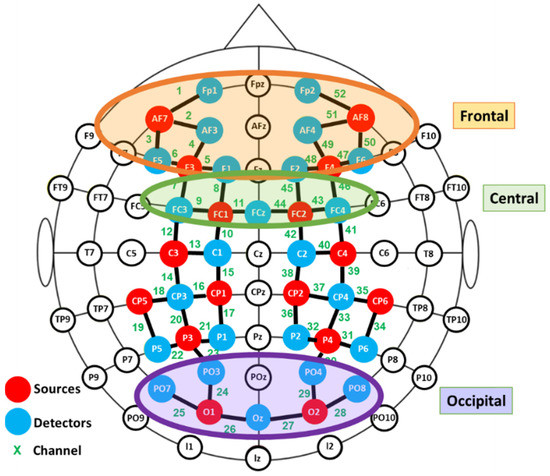

fNIRS data were recorded with a NIRScout device (NIRx Medical Technologies) at a sampling rate of 3.9 Hz. Two cap sizes, 54 and 58 cm, were used according to head size. The system has 40 optodes: 16 sources emitting light at 760 and 850 nm, and 24 detectors. Combining sources and detectors yielded 52 channels (Figure 2).

Figure 2.

Functional near infrared spectroscopy (fNIRS) montage. Signal acquisition was made by the colored optodes.

Signal processing was carried out in MATLAB R2019a (The MathWorks Inc., Natick, MA, USA) using the Homer2 software package. After visual inspection, channels without visible heartbeat oscillations (~1 Hz) or with large motion artefacts were considered noisy and discarded from the analyses [43]. Channels with a low signal-to-noise ratio (SNR) were then removed by pruning [44]. Intensity values were converted into optical density. A 0.01–0.5 Hz band-pass filter was applied to attenuate noise from physiological changes (e.g., heart rate and breathing) occurring at high and low frequencies. Motion artefacts were corrected with a combination of spline interpolation [45] and wavelet filtering [46], as proposed by [47]. Spline interpolation was applied with p = 0.99 [45,48,49], and wavelet filtering with an IQR threshold of 0.1. Optical density values were then converted into relative concentration changes of oxyhemoglobin, Δ(HbO), and deoxyhemoglobin, Δ(HbR), using the modified Beer–Lambert law with a differential path length factor of 6 [50].
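The pruning, conversion and Beer–Lambert steps can be sketched in plain Python. This is a simplified stand-in, not Homer2 (which provides its own functions for these steps, e.g. `hmrIntensity2OD` and `hmrOD2Conc`): the SNR threshold is a placeholder because the study does not report it, band-pass filtering and motion correction are omitted, and the extinction coefficients used in the check below are made-up numbers. Real analyses must take the coefficients for 760 and 850 nm from a published table.

```python
"""Sketch: SNR pruning, intensity-to-OD conversion and modified Beer-Lambert law."""
import math
import statistics


def channel_snr(intensity):
    """Mean divided by standard deviation of raw intensity (one common SNR proxy)."""
    return statistics.fmean(intensity) / statistics.stdev(intensity)


def keep_channel(intensity, snr_threshold=2.0):
    """Hypothetical pruning rule: keep the channel if its SNR exceeds the threshold.

    The threshold used in the study is not reported; 2.0 is a placeholder.
    """
    return channel_snr(intensity) > snr_threshold


def intensity_to_od(intensity):
    """Optical density change relative to the mean intensity of the recording."""
    ref = statistics.fmean(intensity)
    return [-math.log(abs(i) / ref) for i in intensity]


def mbll(d_od_760, d_od_850, ext, distance_cm, dpf=6.0):
    """Solve the 2x2 modified Beer-Lambert system for (dHbO, dHbR).

    ext[wavelength] = (eps_HbO, eps_HbR). The effective path length is
    distance_cm * dpf; the same DPF (6, as in the text) is used at both wavelengths.
    """
    (a, b), (c, d) = ext[760], ext[850]
    det = a * d - b * c
    if det == 0:
        raise ValueError("extinction matrix is singular")
    path = distance_cm * dpf
    y1, y2 = d_od_760 / path, d_od_850 / path
    # Inverse of [[a, b], [c, d]] applied to [y1, y2].
    hbo = (d * y1 - b * y2) / det
    hbr = (-c * y1 + a * y2) / det
    return hbo, hbr


if __name__ == "__main__":
    fake_ext = {760: (1.0, 3.0), 850: (2.5, 1.5)}  # placeholder coefficients, not real values
    print(mbll(-1.8, 6.3, fake_ext, distance_cm=3.0))
```

The check below runs the forward model with the placeholder coefficients and verifies that the inversion recovers the concentration changes.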

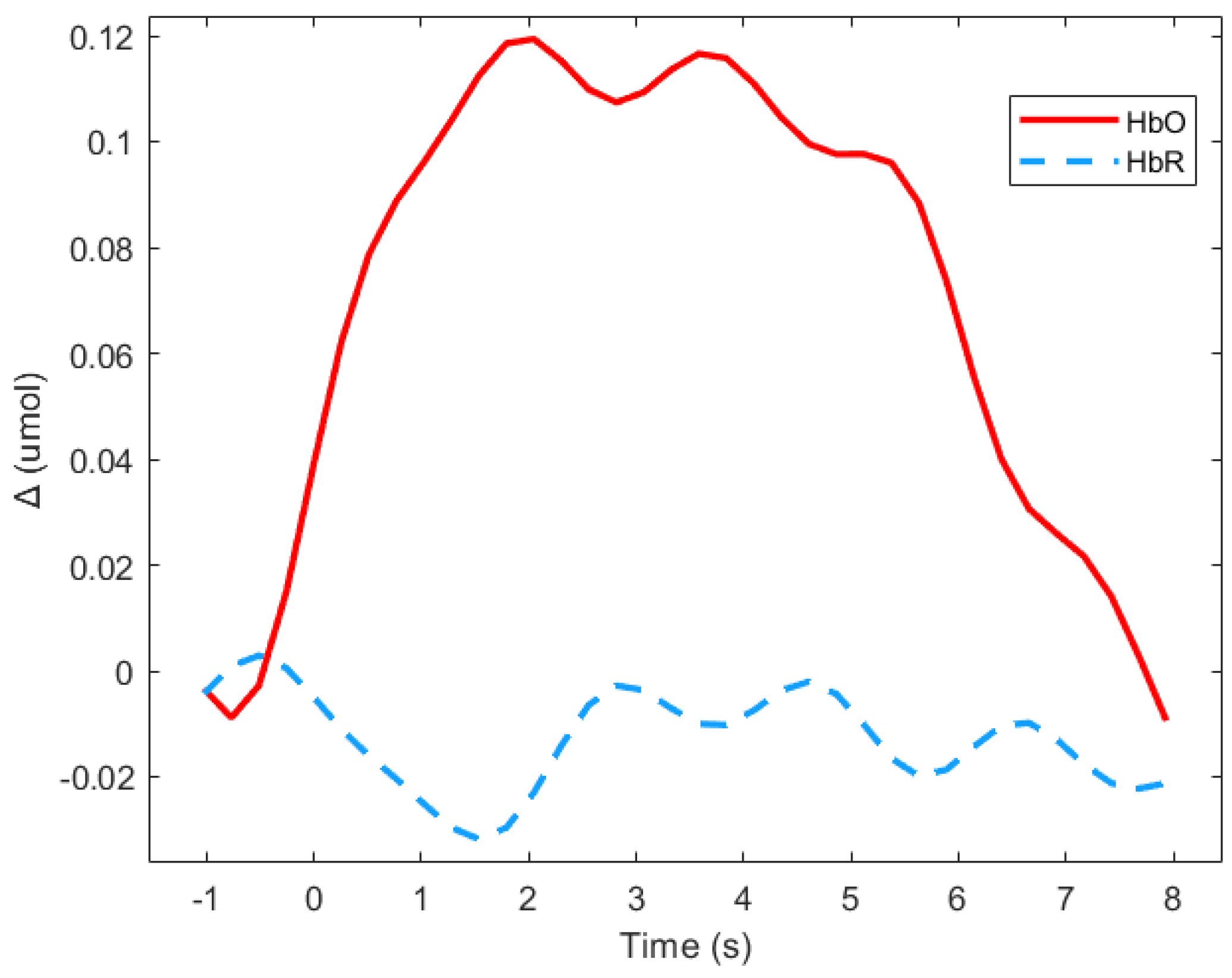

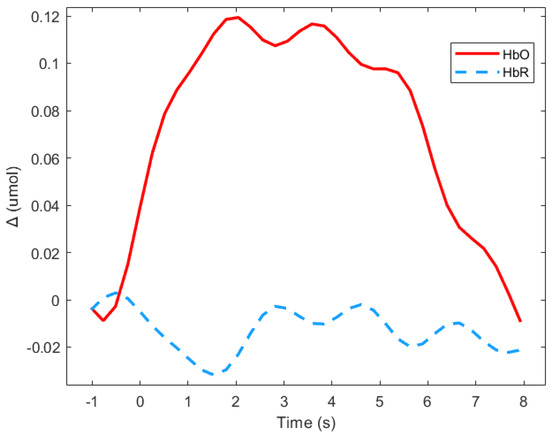

Event-related responses were computed over 8 s segments, with a 1 s pre-stimulus baseline (see Figure 3 for an example). This approach can therefore be considered a rapid event-related design, in which inter-stimulus intervals may be shorter than the elicited response [51]. Because the segments were short, the mean value of Δ(HbO) was considered the most representative parameter [52].
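The epoching step can be illustrated as follows, assuming that sample counts are obtained by rounding duration × sampling rate (1 s gives 4 samples and 8 s gives 31 samples at 3.9 Hz). The exact rounding, and any handling of overlapping responses in the original pipeline, are not reported; the function names are hypothetical.

```python
"""Sketch: baseline-corrected 8 s epochs and their mean Delta-HbO."""

FS_HZ = 3.9       # NIRScout sampling rate reported in the text
BASELINE_S = 1.0  # pre-stimulus baseline
WINDOW_S = 8.0    # post-onset analysis window


def epoch_mean(signal, onset_idx, fs=FS_HZ, baseline_s=BASELINE_S, window_s=WINDOW_S):
    """Mean of the baseline-corrected signal over the post-onset window.

    signal: one channel's Delta-HbO time series (list of floats).
    onset_idx: sample index of stimulus onset.
    """
    n_base = round(baseline_s * fs)  # 4 samples at 3.9 Hz
    n_win = round(window_s * fs)     # 31 samples at 3.9 Hz
    if onset_idx - n_base < 0 or onset_idx + n_win > len(signal):
        raise ValueError("epoch exceeds recording bounds")
    base = sum(signal[onset_idx - n_base:onset_idx]) / n_base
    window = signal[onset_idx:onset_idx + n_win]
    return sum(x - base for x in window) / n_win


def condition_mean(signal, onsets, **kwargs):
    """Average the per-trial means over all trials of one condition.

    Because averaging is linear, this equals the mean of the averaged response.
    """
    values = [epoch_mean(signal, t, **kwargs) for t in onsets]
    return sum(values) / len(values)
```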

Figure 3.

Average of the hemodynamic evoked response in the deaf group during animated road scene processing in the frontal region.

Exploratory paired Student’s t-tests were performed to detect aberrant results and outliers. To reduce the influence of individual differences in optode placement, channels were grouped into clusters, and the averaged signals were computed for further statistical analysis. Three regions of interest (ROIs) were defined: frontal, central and occipital, as depicted in Figure 2. Owing to technical limitations, it was not possible to cover the temporal region.

Figure 3 shows mean Δ(HbO) and Δ(HbR) for the deaf group in the frontal region during the animated presentation. For subsequent analyses, only Δ(HbO) was considered, since it is thought to be more sensitive than Δ(HbR) to hemodynamic variations induced by cognitive changes, and is more frequently used [53]. Figure 3 shows that the Δ(HbR) value is indeed close to zero, which suggests the absence of global blood flow artefacts due to head movements. The mean value of Δ(HbO) over each 8 s window was computed as the dependent variable.

2.5. Statistical Analysis

Table 2 lists the channels discarded by region and participant because of poor SNR, using the prune channel function in Homer2. A region was excluded from subsequent analyses when at least 50% of its channels had been discarded. The distributions of mean Δ(HbO) values satisfied the normality assumption.
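The channel-to-region aggregation and the exclusion rule described above might look like the following sketch. It assumes the rule is applied per participant and per region, follows the “at least 50%” wording of this paragraph, and uses hypothetical channel identifiers, values and function names.

```python
"""Sketch: region-of-interest averaging with the 50% exclusion rule."""


def region_value(channel_values, discarded, max_discarded_fraction=0.5):
    """Average the kept channels of one region for one participant.

    channel_values: dict channel_id -> mean Delta-HbO for that channel.
    discarded: set of channel ids pruned for this participant.
    Returns None when at least half of the region's channels were discarded.
    """
    n_total = len(channel_values)
    n_discarded = sum(1 for ch in channel_values if ch in discarded)
    if n_total == 0 or n_discarded >= max_discarded_fraction * n_total:
        return None
    kept = [v for ch, v in channel_values.items() if ch not in discarded]
    return sum(kept) / len(kept)


def region_dataset(participants, region_channels):
    """participants: dict pid -> (channel_values dict, discarded set).

    Returns pid -> regional mean, omitting participants whose region was excluded.
    """
    out = {}
    for pid, (values, discarded) in participants.items():
        region = {ch: values[ch] for ch in region_channels}
        value = region_value(region, discarded)
        if value is not None:
            out[pid] = value
    return out
```

Averaging over channels before the statistics is what makes the analysis less sensitive to small differences in optode placement between participants.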

Table 2.

Channels discarded by participant and region (see Figure 2 for channel identification). Channels in bold belong to discarded regions (more than 50% of channels withdrawn due to artefacts).

For whole-brain analyses, one-sample Student’s t-tests were computed channel by channel for every condition and for both groups to verify whether mean values differed significantly from zero. Three repeated-measures analyses of variance (ANOVAs), one for each brain region depicted in Figure 2, were performed with one within-subject factor, Format (two levels: animation (ANI) and sequence of static images (STA)), and one between-subject factor, Group (deaf vs. hearing). The statistical analysis was complemented with planned contrasts, according to our hypotheses, between the hearing and deaf groups for the STA condition. Statistical analyses were carried out using JASP v0.14.
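For reference, the channel-wise test statistic is t = x̄ / (s/√n) with n - 1 degrees of freedom, where x̄ and s are the mean and standard deviation of the per-participant channel means. The sketch below computes only t and df with the Python standard library; p-values would require the Student t distribution from a statistics package, and the study itself used JASP. The function names are hypothetical.

```python
"""Sketch: channel-wise one-sample t statistic against zero."""
import math
import statistics


def one_sample_t(values, mu0=0.0):
    """Return (t, df) for H0: mean(values) == mu0."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    se = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    return (statistics.fmean(values) - mu0) / se, n - 1


def channelwise_t(data):
    """data: dict channel_id -> per-participant means for one group and format."""
    return {ch: one_sample_t(vals) for ch, vals in data.items()}
```

With 52 channels, two groups and two formats, 208 such tests are run, so the channel maps are best read as descriptive, which is how the whole-brain analysis is used here before the regional ANOVAs.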

3. Results

3.1. Whole Brain Analysis

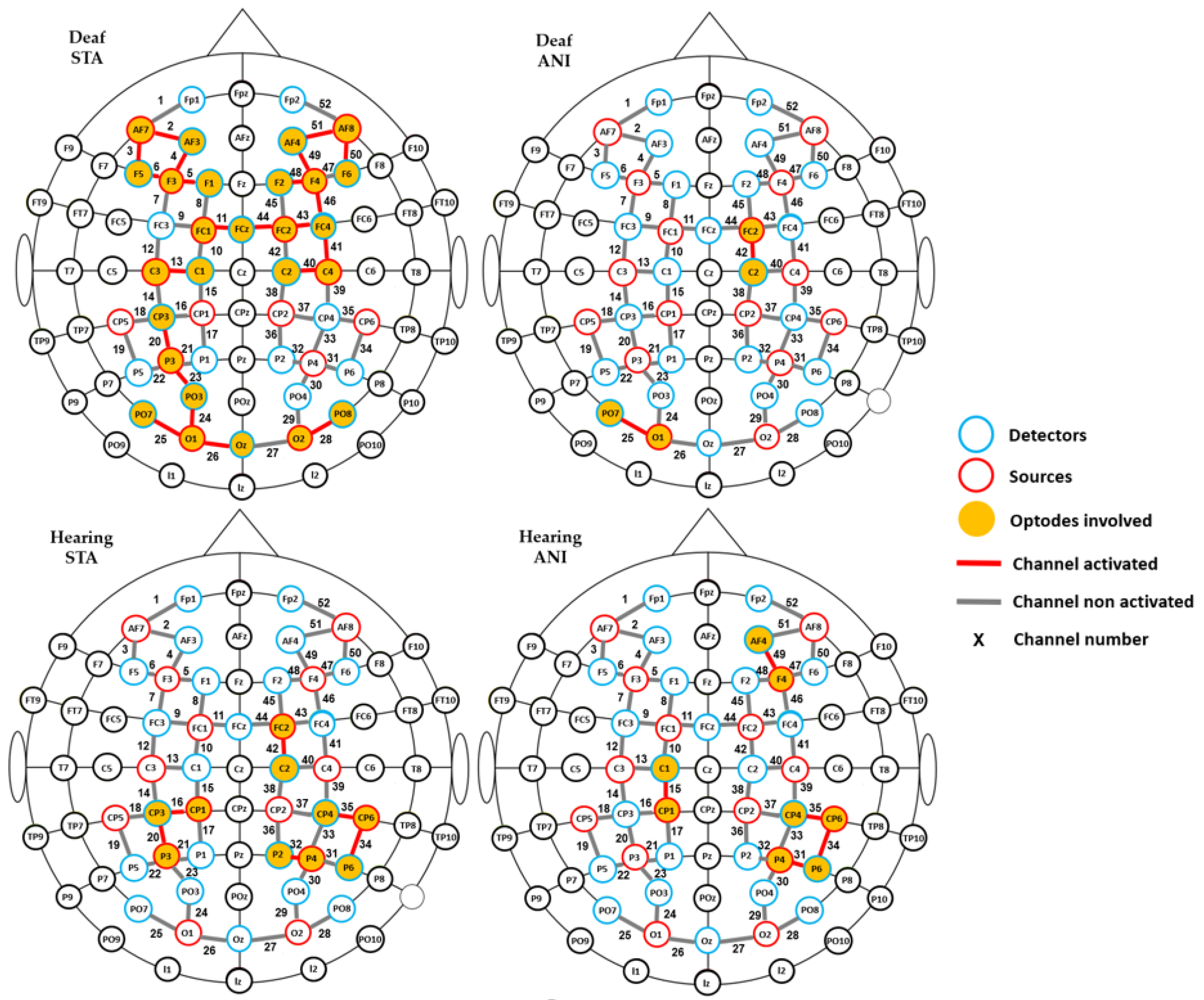

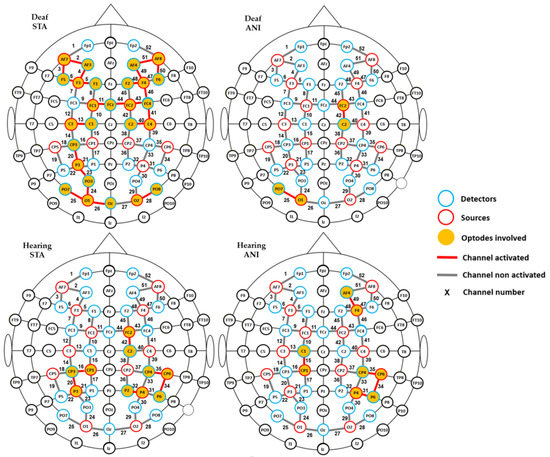

Firstly, to determine which scalp regions were most involved in the task, one-sample t-tests against 0 were carried out for every recording point (52 channels), for each group (hearing and deaf participants) and for each format condition (ANI and STA). Channels that differed significantly from zero are shown in Figure 4. More channels were activated in the STA condition for deaf participants than in any other condition. Activation was seen mainly in frontal and central channels, but also appeared in the occipital area. In contrast, few channels differed from zero in the hearing group, in which isolated channels were activated in the parietal and frontal regions.

Figure 4.

Channels with significantly positive activation for each group (deaf and hearing) and format (STA: sequence of static images; ANI: Animated format).

To complement these results, a channel-wise analysis was performed to identify the channels in which significant differences existed between groups. As expected, numerous channels (numbers 5, 23–26, 28, 29, 34–36, 40–44 and 48–50; see Figure 2) showed significant results for STA (p < 0.05). These preliminary results justify grouping channels into the proposed regions of interest, as suggested in the literature [54].

3.2. Analysis Per Region

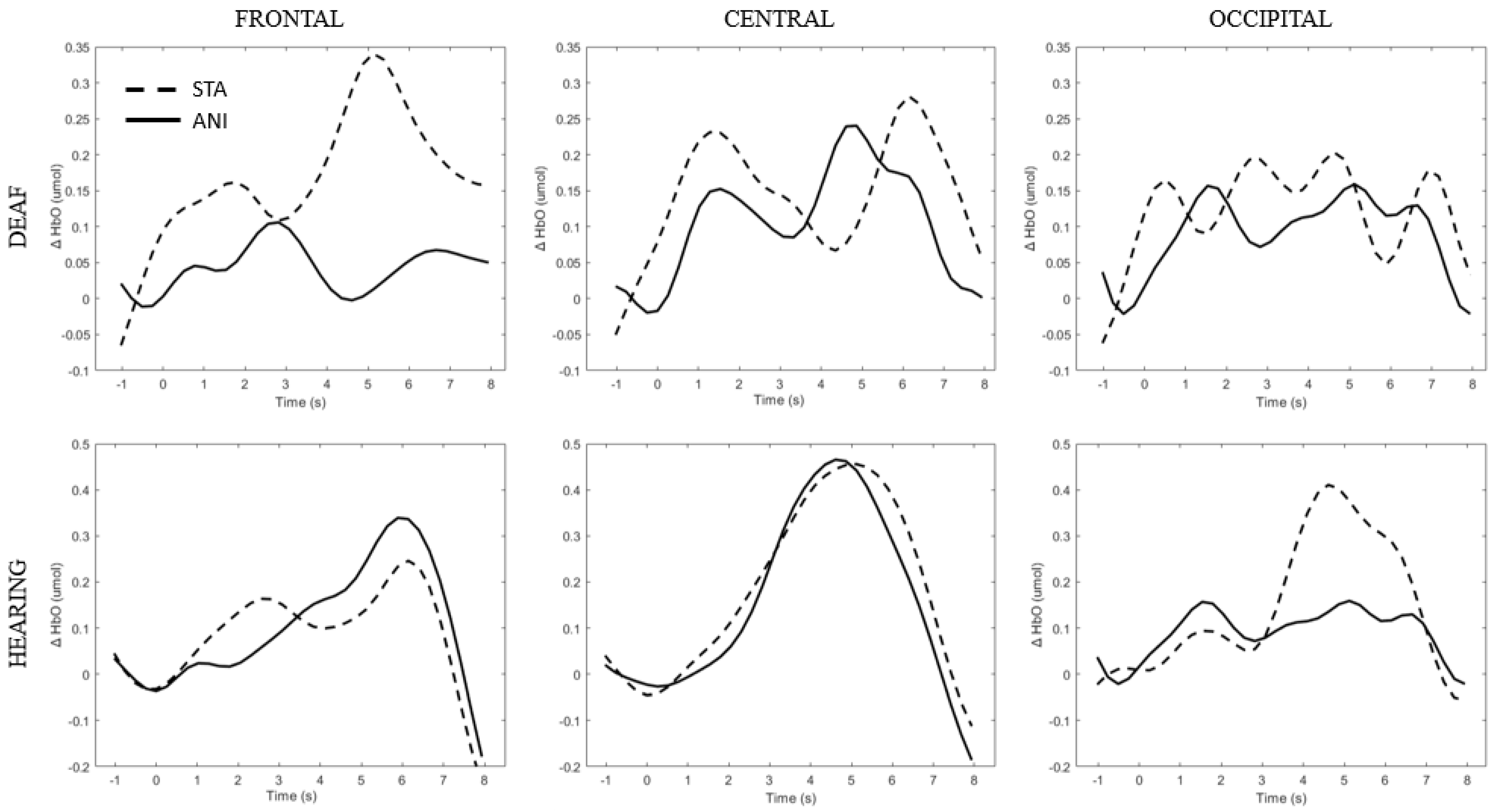

Figure 5 depicts the individual signals of the participants with the highest-quality signals and the fewest discarded channels according to Table 2 (participants D8 and H6), used as references. Although signal quality was high, the classical hemodynamic shape was not always found. However, a clear typical HbO shape can be observed in the central region for the hearing participant. Full details of the main findings for each region are provided below.

Figure 5.

Average of the hemodynamic evoked response for one deaf (D8) and one hearing individual (H6) for each region (frontal, central and occipital) and each format (STA: sequence of static images; ANI: Animated format).

Table 3 reports all p-values and related statistical parameters for each ANOVA; the significant results are also described in the text.

Table 3.

p-values and related statistical parameters for each ANOVA.

3.2.1. Frontal Region

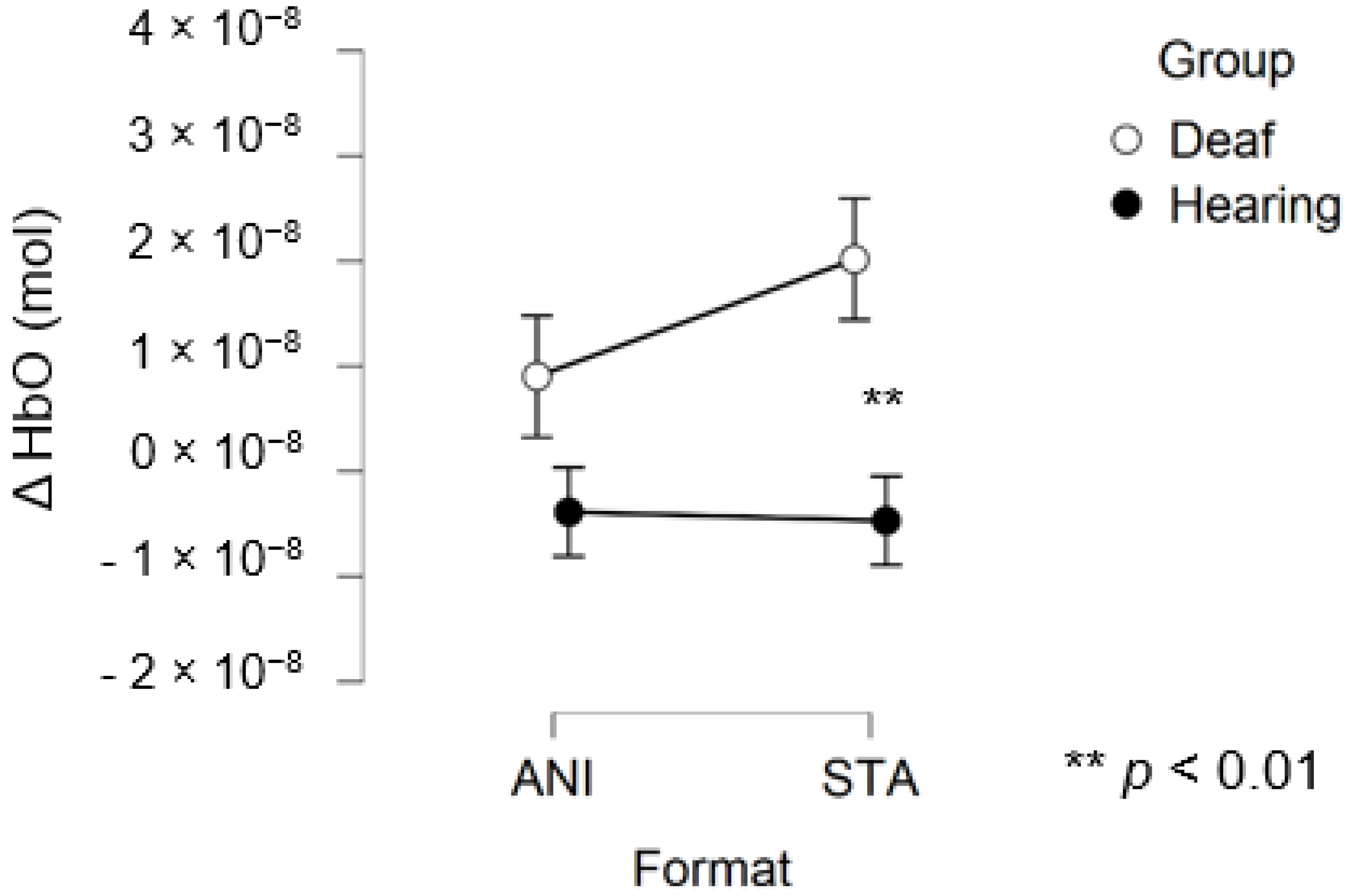

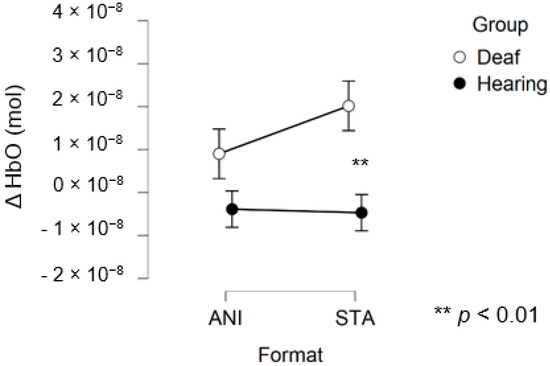

After data processing, estimation of artefact contribution and channel pruning, 16 deaf and 12 hearing participants remained for the frontal region analysis; the signals from the other participants were discarded due to poor SNR. The main effect of GROUP was significant (F(1, 26) = 8.439; p = 0.007; η² = 0.157). The planned contrast between hearing and deaf groups for the STA condition revealed that responses to the STA format were significantly higher in the deaf group than in the hearing group (t(50, 27) = 2.945; p = 0.005). No significant group difference was found for the ANI condition (t(50, 27) = 1.529; p = 0.133).
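The degrees of freedom of the GROUP effect follow from the design: with one two-level between-subject factor and 16 + 12 = 28 participants, df = (1, 28 - 2) = (1, 26). Because the design has a single between-subject factor, this F test is equivalent to a one-way ANOVA on each participant’s average over the two formats. The sketch below illustrates this with synthetic data only; it does not reproduce the effect size reported by JASP, and the function name is hypothetical.

```python
"""Sketch: the GROUP main effect of a mixed ANOVA with one within-subject factor."""


def group_main_effect(groups):
    """F test for the between-subject factor.

    groups: list of groups; each group is a list of per-participant tuples
    (ANI value, STA value). Returns (F, df_effect, df_error).
    """
    # Each participant's average over the within-subject levels.
    means = [[sum(p) / len(p) for p in g] for g in groups]
    all_means = [m for g in means for m in g]
    grand = sum(all_means) / len(all_means)
    group_avg = [sum(g) / len(g) for g in means]
    ss_between = sum(len(g) * (ga - grand) ** 2 for g, ga in zip(means, group_avg))
    ss_within = sum((m - ga) ** 2 for g, ga in zip(means, group_avg) for m in g)
    df_effect = len(groups) - 1
    df_error = len(all_means) - len(groups)
    return (ss_between / df_effect) / (ss_within / df_error), df_effect, df_error


if __name__ == "__main__":
    deaf = [(float(i), float(i) + 1.0) for i in range(16)]   # synthetic, not study data
    hearing = [(float(i), float(i)) for i in range(12)]      # synthetic, not study data
    f_value, df1, df2 = group_main_effect([deaf, hearing])
    print(f"F({df1}, {df2}) = {f_value:.3f}")
```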

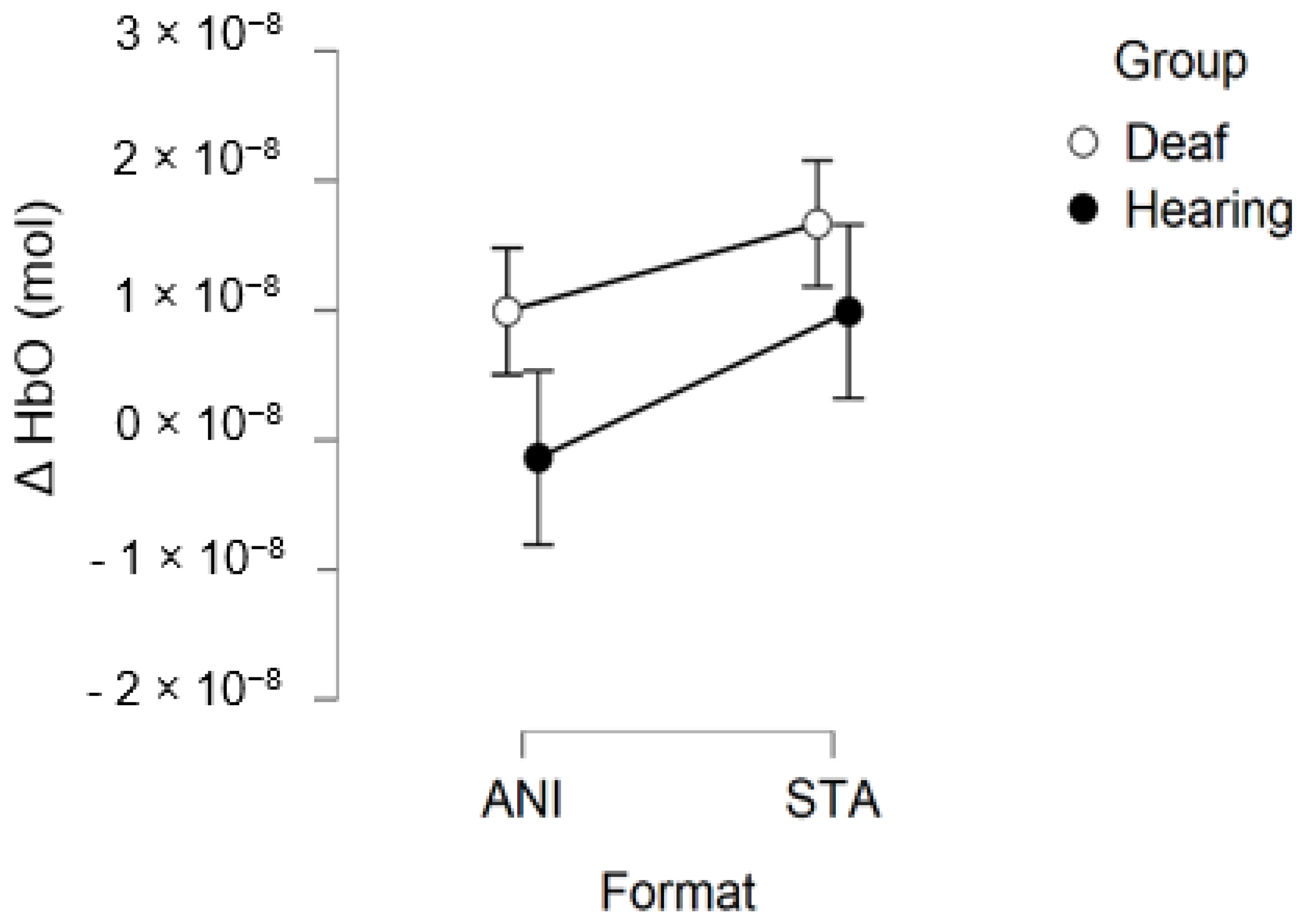

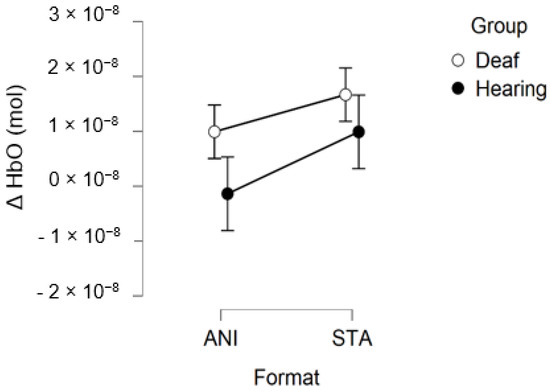

Figure 6 depicts the mean values of Δ(HbO) in the frontal region for each condition. Although the mean value for the hearing group was below zero, the one-sample t-test indicated that it did not differ significantly from 0, whereas in the STA condition the mean for the deaf group was significantly positive (p = 0.005).

Figure 6.

Mean amplitude values of the hemodynamic evoked responses in the frontal region for animated (ANI) and static image sequence (STA) formats for both groups.

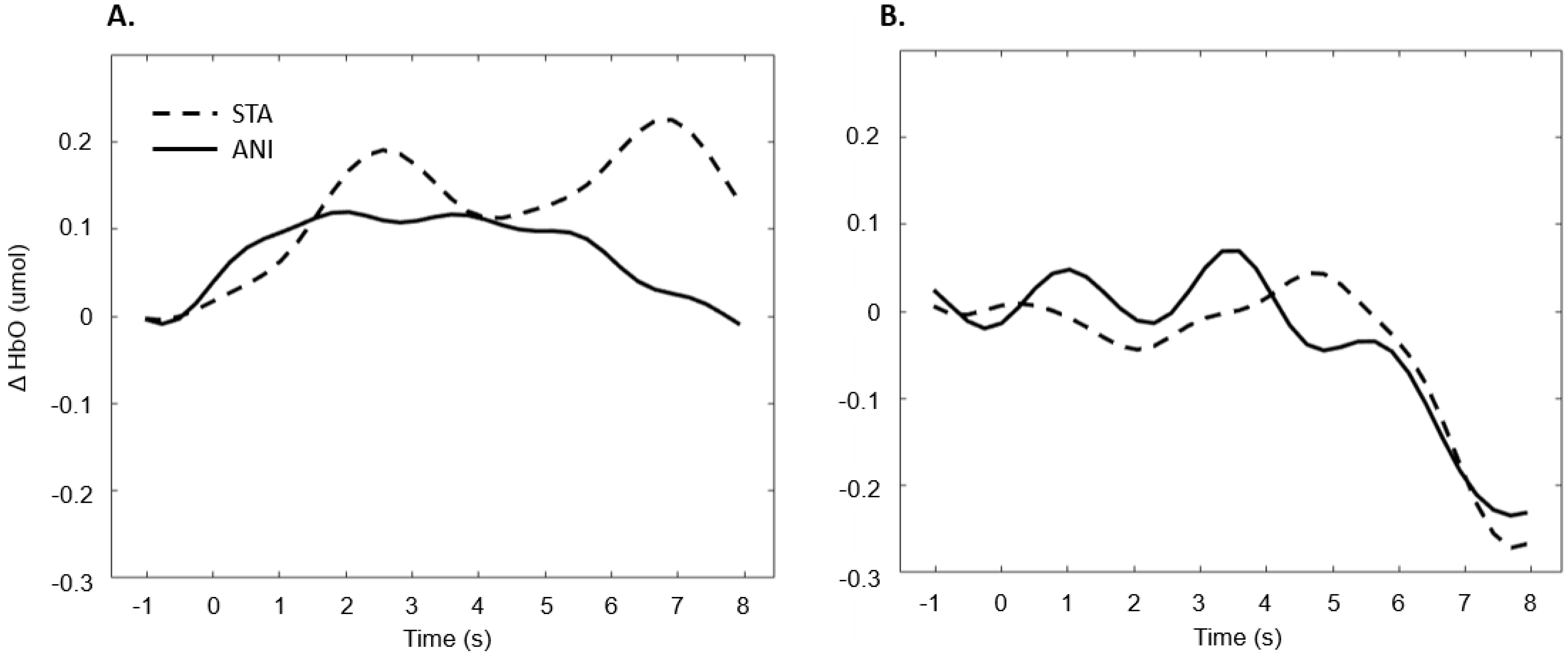

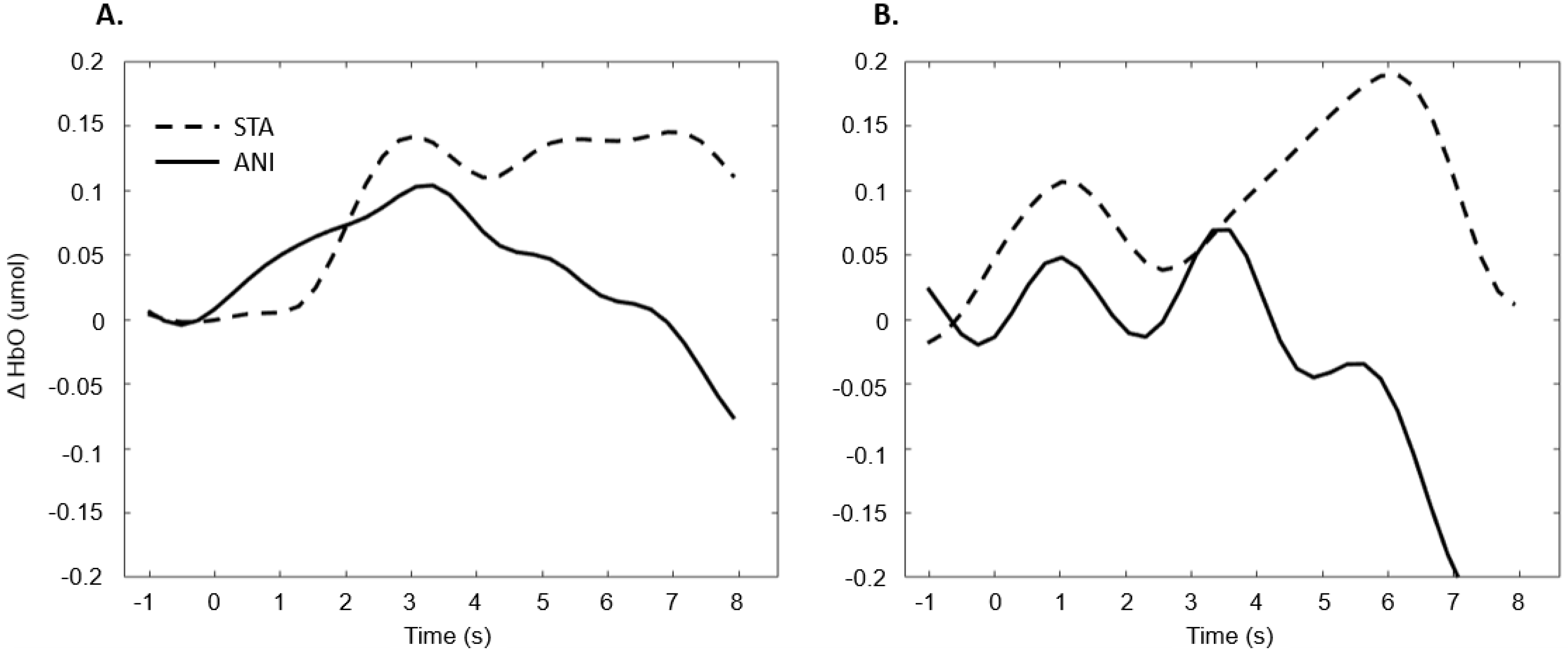

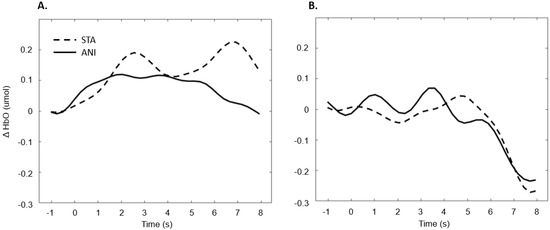

In addition, Figure 7 illustrates the averaged signals obtained for the static (STA) and animated (ANI) conditions in both groups in the frontal region.

Figure 7.

Average of the hemodynamic evoked response in the deaf group (A) and hearing group (B) for the two conditions in the frontal region.

3.2.2. Central Region

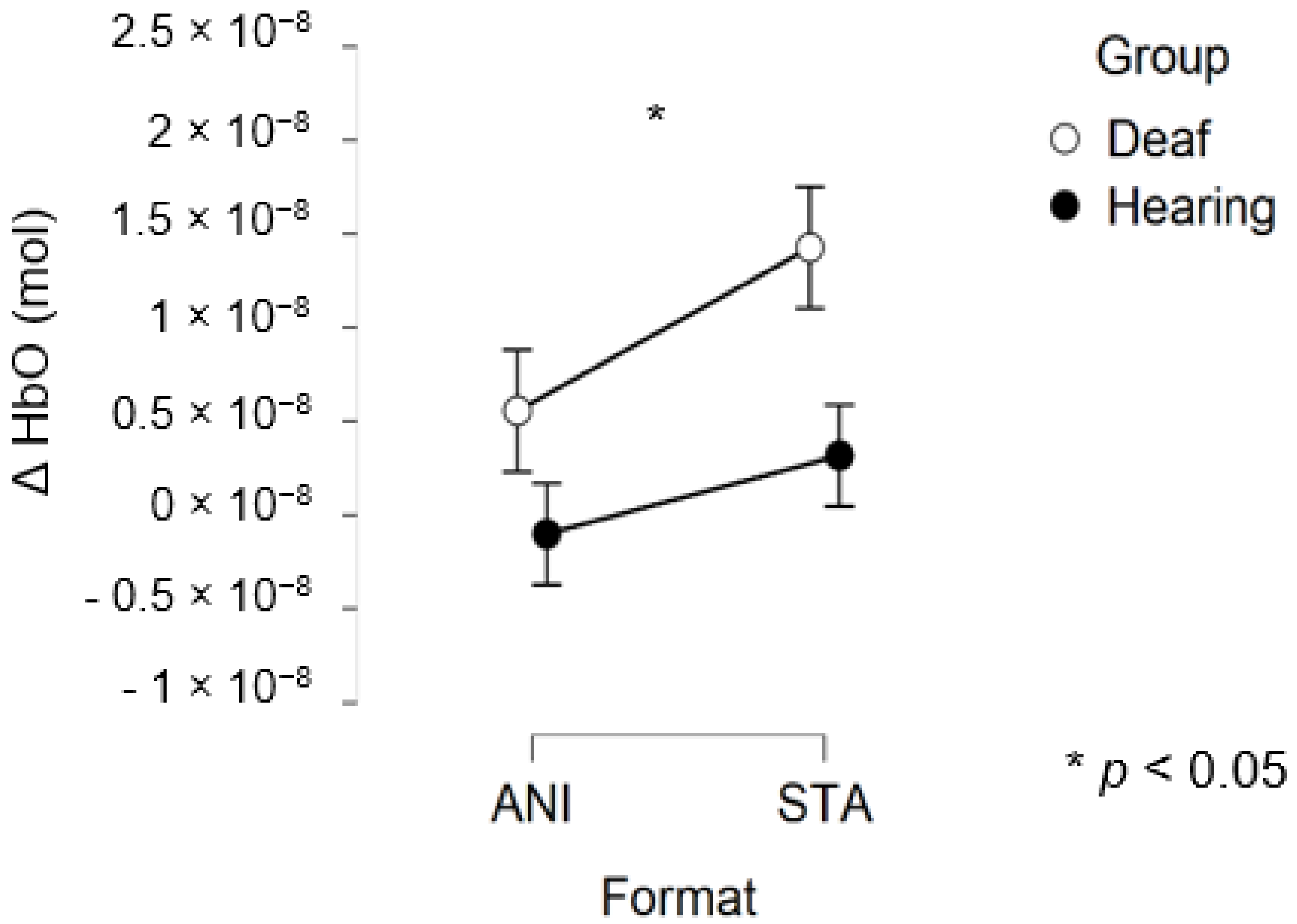

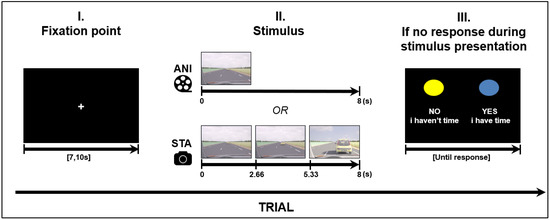

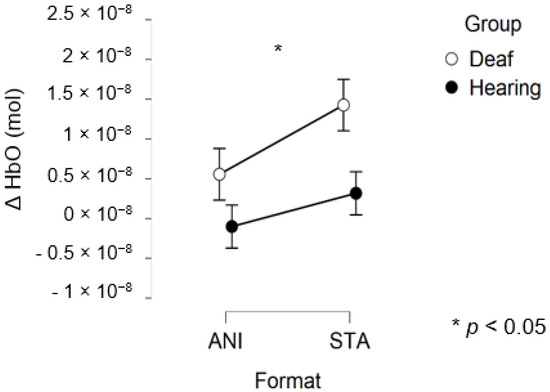

After data processing, 17 deaf and 13 hearing participants remained for the central region analysis. Only the main effect of FORMAT was significant (F(1, 28) = 4.281, p = 0.048, η² = 0.029).

Figure 8 depicts the mean values of Δ(HbO) in the central region.

Figure 8.

Mean amplitude values of the hemodynamic evoked responses in the central region for animated (ANI) and static image sequence (STA) formats for both groups.

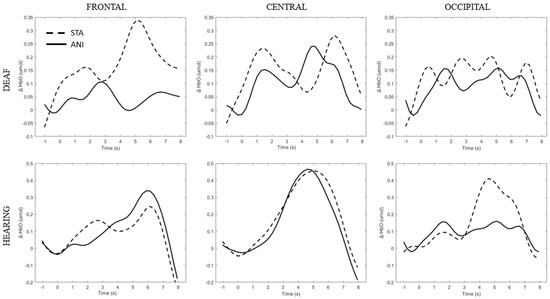

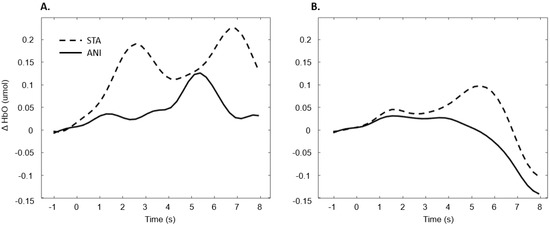

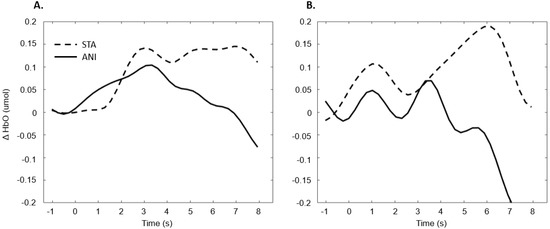

In addition, Figure 9 illustrates the averaged signals obtained for static (STA) and animated (ANI) conditions in both groups in the central region involved in the clocking mechanism.

Figure 9.

Average of the hemodynamic evoked response in the deaf group (A) and hearing group (B) for the two conditions in the central region, which is involved in the clocking mechanism.

3.2.3. Occipital Region

After data processing and estimation of artefact contribution, 9 deaf and 11 hearing participants remained for the occipital region analysis. Because of its anatomical characteristics, the occipital region is the most difficult area of the scalp from which to obtain good fNIRS signals. Neither the ANOVA nor the planned contrast for the STA condition (t(32.19) = 0.635; p = 0.530) revealed significant effects in the occipital region. The smaller sample size may have prevented us from detecting significant effects.

Figure 10 depicts the mean values of Δ(HbO) in the occipital region.

Figure 10.

Mean amplitude values of the hemodynamic evoked responses in the occipital region for animated (ANI) and static image sequence (STA) formats for both groups.

Figure 11 illustrates the averaged signals obtained for static (STA) and animated (ANI) conditions in both groups in the occipital region.

Figure 11.

Average of the hemodynamic evoked response in the deaf group (A) and hearing group (B) for the two conditions in the occipital region.

4. Discussion

The overall objective of the present study was twofold. Firstly, we aimed to verify whether fNIRS could provide insight into the clocking mechanism involved in motion prediction. To this end, we compared animations with a format consisting of sequential images, which required an additional clocking mechanism. Our second aim was to analyze differences in the hemodynamic responses evoked by these stimuli between deaf people and people with normal hearing. The results allow relevant conclusions to be drawn regarding our hypotheses about the brain regions studied.

Our first hypothesis concerned the frontal region. Based on previous research showing that deaf people have difficulty estimating time intervals, we expected greater activation when they were asked to make spatiotemporal inferences [5,9]. The evoked response in the frontal region was higher in deaf than in hearing participants only in the condition in which a sequence of images was presented and an additional estimation of the time between images was required; no difference was found for the animations. As Figure 7 shows, the classical fNIRS hemodynamic signal was obtained in the deaf group, while a more complex response was obtained in the static condition. This hemodynamic response is usually associated with the engagement of specific cognitive processes in specific regions. In the frontal and prefrontal regions, it is linked to a wide range of cognitive mechanisms, including executive functions. It is therefore a reliable measure of cognitive effort, as suggested in other studies [23,24]. However, it is more difficult to draw conclusions from the grand average of the hearing group, shown in Figure 7, since the signal appears unstable. The mean values for this group were not statistically different from zero. This suggests that the task probably required less cognitive effort and was performed quickly, which in turn implies that participants disengaged rapidly after making their decision.

The reasons for this difference in effort among deaf candidates may be varied, and deeper brain analyses are required to identify the specific processes involved. One possible explanation relates to the difficulties in time processing, and in the resulting spatiotemporal inference and motion prediction, reported in a number of studies [5,9]. Nevertheless, caution is required, since many cognitive processes take place in the frontal region, and in deaf people, recalibration processes and specific plasticity characteristics are also involved. This motivates the study of more specific regions thought to be more closely linked to the processing of time cues, such as the pre-supplementary motor area (preSMA), discussed below.

Our hypothesis on the central region, which has been particularly linked to the clocking mechanism [11], is consistent with the present results, which showed activation during time estimation. Two main observations can be drawn from the grand average shown in Figure 9. Firstly, in the static format, two consecutive peaks were elicited. This pattern was also found in the individual signal of the deaf participant shown in Figure 5. Although the response delays seem very short compared to usual hemodynamic response latencies, we cannot rule out a contribution of two clocking mechanisms in the static condition. Unlike the animated condition, this format does not convey dynamism directly. In its absence, participants tried to infer the speed of the vehicles mentally from the spatial elements by means of a first clocking mechanism. Alternative interpretations could also be considered. Processes that disrupt attention, as described in the AToCC model, could be linked to the reactivation of attentional resources when the second picture is presented. However, if these peaks were linked exclusively to automatic processes, no difference in signal shape would be expected compared to the hearing group. Indeed, the average of all participants showed a similar shape, with a lower amplitude in the hearing group. This could reflect lower cognitive effort or less attentional disengagement. To qualify these statements and ensure that they are not merely speculative, further research including other, similar tasks is crucial.

If we focus on our hypotheses and on the rationale of our work, the peak found in the central region in the animated condition could be linked to the clocking mechanism required to predict vehicle movement. The continuity of information provided by animation would therefore require the intervention of only one clocking mechanism. This explanation is also consistent with the AToCC, as the introduction of intervals can trigger ruptures that disrupt continuity [16,17]. No significant evoked responses were found in people with normal hearing. Their clocking mechanism is probably more developed, and the efficiency of the process may prevent measurable evoked responses. Nevertheless, it is worth noting the surprisingly typical hemodynamic response of the hearing participant with the highest-quality signals (in terms of gain, SNR and artifact contribution), depicted in Figure 5. This signal, which was reproduced in the other channels of this region for this participant, hints at processing common to both conditions, which could arguably be linked to spatiotemporal inferences. Activation of this region may also have been affected by motor preparation prior to the response. However, this influence would be comparable in both presentation formats and would therefore not bias the comparison between them. Further analyses with higher spatial and temporal resolution are needed to confirm this hypothesis.

The exploration of the occipital region did not provide clear results. Although several studies have shown more highly developed visual capacities in deaf individuals [55] and an attraction to spatial elements in tasks involving time interval estimation [10], fNIRS did not reveal such effects with the present protocol. It could be argued that a global measure of activity in the occipital region cannot capture the preferential processing of spatial features in visual scenes by deaf participants. A compensation mechanism may act primarily within the temporal region, as suggested by numerous studies [56,57,58]. Moreover, as mentioned above, the occipital region is the scalp area from which it is most difficult to obtain good signals, and another functional technique would probably be more suitable than fNIRS for this region.

Our work presents a number of limitations. The inherent characteristics of each road situation could lead to qualitative differences in building the CME, mainly for sequences of images. This is particularly the case for joining a highway, which involves viewing a rear-view mirror and using lateral vision. However, this issue is not expected to interfere with the clocking mechanism, and it makes the study more ecological and representative of a wider range of driving situations. It would have been interesting to study activity in the temporal region, especially in relation to brain plasticity, since the auditory cortex is located there. However, this area is characterized by high levels of blood flow artefacts, and there are technical limits on the number of sources and detectors that can be used (16 sources and 24 detectors). There is also no evidence in the literature of its involvement in the clocking mechanism. For these reasons, we chose not to include it in the present study. A larger sample might have provided more generalizable results and allowed the study of specific characteristics of deaf participants. In future work, subgroups of the deaf population could be studied with a view to designing specific aids. Conducting fNIRS studies in ecological settings could also enable these processes to be monitored in different contexts.

5. Conclusions

To sum up, these preliminary results encourage further analysis of the neural basis of the clocking mechanism in the deaf population. They show higher hemodynamic responses in both frontal and central regions when time estimation is required to determine vehicle speed. The experimental protocol, based on animations and static image sequences, proved suitable for this goal. These promising results suggest that fNIRS measures of the central and frontal regions could be used to analyze time estimation, complementing performance measures obtained with other approaches. In addition, the example used in this protocol, based on pictures from the Highway Code test, highlights potential difficulties that should be considered when designing learning and skills-assessment material for deaf people.

Author Contributions

Conceptualization, S.L., L.P.-F., J.-M.B. and A.R.H.-M.; methodology, S.L., S.A. and A.R.H.-M.; validation, S.L., L.P.-F., J.-M.B. and A.R.H.-M.; formal analysis, S.L. and A.R.H.-M.; investigation, S.L., L.P.-F., J.-M.B. and A.R.H.-M.; resources, L.P.-F. and J.-M.B.; data curation, S.L. and L.P.-F.; writing—original draft preparation, S.L. and A.R.H.-M.; writing—review and editing, S.L., L.P.-F., J.-M.B. and A.R.H.-M.; supervision, L.P.-F. and J.-M.B.; funding acquisition, L.P.-F. and J.-M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Direction de la Sécurité Routière (DSR).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the French Biomedical research ethics committee on 9 April 2018 (04/09/2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

We are particularly grateful to the driving schools “Ecart de conduite” and “ARIS”, and to their respective managers, Dolorès Robert and Jacky Rabot, for their invaluable help in recruiting participants. The authors would also like to thank Cate Dalmolin for English proofreading and Adolphe J. Bequet for his help with data visualisation. Finally, we warmly thank all the participants.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gori, M.; Chilosi, A.; Forli, F.; Burr, D. Audio-visual temporal perception in children with restored hearing. Neuropsychologia 2017, 99, 350–359.

- Guttman, S.E.; Gilroy, L.A.; Blake, R. Hearing what the eyes see: Auditory encoding of visual temporal sequences. Psychol. Sci. 2005, 16, 228–235.

- Collier, G.L.; Logan, G. Modality differences in short-term memory for rhythms. Mem. Cogn. 2000, 28, 529–538.

- Repp, B.H.; Penel, A. Rhythmic movement is attracted more strongly to auditory than to visual rhythms. Psychol. Res. 2003, 68, 252–270.

- Bolognini, N.; Cecchetto, C.; Geraci, C.; Maravita, A.; Pascual-Leone, A.; Papagno, C. Hearing shapes our perception of time: Temporal discrimination of tactile stimuli in deaf people. J. Cogn. Neurosci. 2012, 24, 276–286.

- Conway, C.M.; Pisoni, D.B.; Kronenberger, W.G. The importance of sound for cognitive sequencing abilities. Curr. Dir. Psychol. Sci. 2009, 18, 275–279.

- Poizner, H. Temporal processing in deaf signers. Brain Lang. 1987, 30, 52–62.

- Bross, M.; Sauerwein, H. Signal detection analysis of visual flicker in deaf and hearing individuals. Percept. Mot. Ski. 1980, 51, 839–843.

- Kowalska, J.; Szelag, E. The effect of congenital deafness on duration judgment. J. Child. Psychol. Psychiatry 2006, 47, 946–953.

- Amadeo, M.B.; Campus, C.; Pavani, F.; Gori, M. Spatial cues influence time estimations in deaf individuals. iScience 2019, 19, 369–377.

- DeLucia, P.R.; Liddell, G.W. Cognitive motion extrapolation and cognitive clocking in prediction motion tasks. J. Exp. Psychol. Hum. Percept. Perform. 1998, 24, 901–914.

- Tresilian, J.R. Perceptual and cognitive processes in time-to-contact estimation: Analysis of prediction-motion and relative judgment tasks. Percept. Psychophys. 1995, 57, 231–245.

- Li, Y.; Mo, L.; Chen, Q. Differential contribution of velocity and distance to time estimation during self-initiated time-to-collision judgment. Neuropsychologia 2015, 73, 35–47.

- Daneshi, A.; Towhidkhah, F.; Faubert, J. Assessing changes in brain electrical activity and functional connectivity while overtaking a vehicle. J. Cogn. Psychol. 2020, 1–15.

- Laurent, S.; Boucheix, J.-M.; Argon, S.; Hidalgo-Muñoz, A.R.; Paire-Ficout, L. Can animation compensate for temporal processing difficulties in deaf people? Appl. Cogn. Psychol. 2019, 34, 308–317.

- Smith, T.J. The attentional theory of cinematic continuity. Projections 2012, 6, 1–27.

- Smith, T.J.; Levin, D.; Cutting, J.E. A window on reality: Perceiving edited moving images. Curr. Dir. Psychol. Sci. 2012, 21, 107–113.

- Boucheix, J.-M.; Forestier, C. Reducing the transience effect of animations does not (always) lead to better performance in children learning a complex hand procedure. Comput. Hum. Behav. 2017, 69, 358–370.

- Schwan, S.; Ildirar, S. Watching film for the first time. Psychol. Sci. 2010, 21, 970–976.

- Zacks, J.M.; Speer, N.K.; Swallow, K.M.; Maley, C.J. The brain’s cutting-room floor: Segmentation of narrative cinema. Front. Hum. Neurosci. 2010, 4.

- Loschky, L.C.; Larson, A.M.; Smith, T.J.; Magliano, J.P. The scene perception & event comprehension theory (SPECT) applied to visual narratives. Top. Cogn. Sci. 2020, 12, 311–351.

- Mital, P.K.; Smith, T.J.; Hill, R.L.; Henderson, J.M. Clustering of gaze during dynamic scene viewing is predicted by motion. Cogn. Comput. 2011, 3, 5–24.

- Causse, M.; Chua, Z.; Peysakhovich, V.; Del Campo, N.; Matton, N. Mental workload and neural efficiency quantified in the prefrontal cortex using fNIRS. Sci. Rep. 2017, 7, 1–15.

- Herff, C.; Heger, D.; Fortmann, O.; Hennrich, J.; Putze, F.; Schultz, T. Mental workload during n-back task—quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 2014, 7, 935.

- Wilcox, T.; Biondi, M. fNIRS in the developmental sciences. Wiley Interdiscip. Rev. Cogn. Sci. 2015, 6, 263–283.

- Lloyd-Fox, S.; Blasi, A.; Elwell, C. Illuminating the developing brain: The past, present and future of functional near infrared spectroscopy. Neurosci. Biobehav. Rev. 2010, 34, 269–284.

- Huppert, T.J.; Diamond, S.G.; Franceschini, M.A.; Boas, D.A. HomER: A review of time-series analysis methods for near-infrared spectroscopy of the brain. Appl. Opt. 2009, 48, D280–D298.

- Ferrari, M.; Mottola, L.; Quaresima, V. Principles, techniques, and limitations of near infrared spectroscopy. Can. J. Appl. Physiol. 2004, 29, 463–487.

- Ferrari, M.; Quaresima, V. A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. NeuroImage 2012, 63, 921–935.

- Kober, S.; Wood, G.; Kurzmann, J.; Friedrich, E.; Stangl, M.; Wippel, T.; Väljamäe, A.; Neuper, C. Near-infrared spectroscopy based neurofeedback training increases specific motor imagery related cortical activation compared to sham feedback. Biol. Psychol. 2014, 95, 21–30.

- Lapborisuth, P.; Zhang, X.; Noah, A.; Hirsch, J. Neurofeedback-based functional near-infrared spectroscopy upregulates motor cortex activity in imagined motor tasks. Neurophotonics 2017, 4, 021107.

- Karen, T.; Morren, G.; Haensse, D.; Bauschatz, A.S.; Bucher, H.U.; Wolf, M. Hemodynamic response to visual stimulation in newborn infants using functional near-infrared spectroscopy. Hum. Brain Mapp. 2008, 29, 453–460.

- Sevy, A.B.; Bortfeld, H.; Huppert, T.J.; Beauchamp, M.S.; Tonini, R.E.; Oghalai, J.S. Neuroimaging with near-infrared spectroscopy demonstrates speech-evoked activity in the auditory cortex of deaf children following cochlear implantation. Hear. Res. 2010, 270, 39–47.

- Chou, P.-H.; Lan, T.-H. The role of near-infrared spectroscopy in Alzheimer’s disease. J. Clin. Gerontol. Geriatr. 2013, 4, 33–36.

- Ranchet, M.; Hoang, I.; Cheminon, M.; Derollepot, R.; Devos, H.; Perrey, S.; Luauté, J.; Danaila, T.; Paire-Ficout, L. Changes in prefrontal cortical activity during walking and cognitive functions among patients with Parkinson’s disease. Front. Neurol. 2020, 11, 601686.

- Pouthas, V.; George, N.; Poline, J.-B.; Pfeuty, M.; VandeMoorteele, P.-F.; Hugueville, L.; Ferrandez, A.-M.; Lehericy, S.; LeBihan, D.; Renault, B. Neural network involved in time perception: An fMRI study comparing long and short interval estimation. Hum. Brain Mapp. 2005, 25, 433–441.

- Dye, M.W.G.; Bavelier, D. Attentional enhancements and deficits in deaf populations: An integrative review. Restor. Neurol. Neurosci. 2010, 28, 181–192.

- Simon, M.; Campbell, E.; Genest, F.; MacLean, M.W.; Champoux, F.; Lepore, F. The impact of early deafness on brain plasticity: A systematic review of the white and gray matter changes. Front. Neurosci. 2020, 14, 206.

- Coull, J.T.; Vidal, F.; Nazarian, B.; Macar, F. Functional anatomy of the attentional modulation of time estimation. Science 2004, 303, 1506–1508.

- Forstmann, B.U.; Dutilh, G.; Brown, S.; Neumann, J.; von Cramon, D.Y.; Ridderinkhof, K.R.; Wagenmakers, E.-J. Striatum and pre-SMA facilitate decision-making under time pressure. Proc. Natl. Acad. Sci. USA 2008, 105, 17538–17542.

- Field, D.T.; Wann, J.P. Perceiving time to collision activates the sensorimotor cortex. Curr. Biol. 2005, 15, 453–458.

- Loke, W.H.; Song, S. Central and peripheral visual processing in hearing and nonhearing individuals. Bull. Psychon. Soc. 1991, 29, 437–440.

- Pinti, P.; Tachtsidis, I.; Hamilton, A.; Hirsch, J.; Aichelburg, C.; Gilbert, S.; Burgess, P.W. The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Ann. New York Acad. Sci. 2020, 1464, 5–29.

- Von Lühmann, A.; Ortega-Martinez, A.; Boas, D.A.; Yücel, M.A. Using the general linear model to improve performance in fNIRS single trial analysis and classification: A perspective. Front. Hum. Neurosci. 2020, 14, 30.

- Scholkmann, F.; Spichtig, S.; Muehlemann, T.; Wolf, M. How to detect and reduce movement artifacts in near-infrared imaging using moving standard deviation and spline interpolation. Physiol. Meas. 2010, 31, 649–662.

- Molavi, B.A.; Dumont, G. Wavelet-based motion artifact removal for functional near-infrared spectroscopy. Physiol. Meas. 2012, 33, 259–270.

- Di Lorenzo, R.; Pirazzoli, L.; Blasi, A.; Bulgarelli, C.; Hakuno, Y.; Minagawa, Y.; Brigadoi, S. Recommendations for motion correction of infant fNIRS data applicable to multiple data sets and acquisition systems. NeuroImage 2019, 200, 511–527.

- Brigadoi, S.; Ceccherini, L.; Cutini, S.; Scarpa, F.; Scatturin, P.; Selb, J.; Gagnon, L.; Boas, D.A.; Cooper, R.J. Motion artifacts in functional near-infrared spectroscopy: A comparison of motion correction techniques applied to real cognitive data. NeuroImage 2014, 85, 181–191.

- Cooper, R.J.; Selb, J.; Gagnon, L.; Phillip, D.; Schytz, H.W.; Iversen, H.K.; Ashina, M.; Boas, D.A. A systematic comparison of motion artifact correction techniques for functional near-infrared spectroscopy. Front. Behav. Neurosci. 2012, 6, 147.

- Yücel, M.A.; Selb, J.; Aasted, C.M.; Lin, P.-Y.; Borsook, D.; Becerra, L.; Boas, D.A. Mayer waves reduce the accuracy of estimated hemodynamic response functions in functional near-infrared spectroscopy. Biomed. Opt. Express 2016, 7, 3078–3088.

- Amaro, E.; Barker, G.J. Study design in fMRI: Basic principles. Brain Cogn. 2006, 60, 220–232.

- Hidalgo-Muñoz, A.R.; Jallais, C.; Evennou, M.; Ndiaye, D.; Moreau, F.; Ranchet, M.; Derollepot, R.; Fort, A. Hemodynamic responses to visual cues during attentive listening in autonomous versus manual simulated driving: A pilot study. Brain Cogn. 2019, 135, 103583.

- Quaresima, V.; Ferrari, M. A mini-review on functional near-infrared spectroscopy (fNIRS): Where do we stand, and where should we go? Photon 2019, 6, 87.

- Yücel, M.A.; Lühmann, A.V.; Scholkmann, F.; Gervain, J.; Dan, I.; Ayaz, H.; Boas, D.; Cooper, R.J.; Culver, J.; Elwell, C.E.; et al. Best practices for fNIRS publications. Neurophotonics 2021, 8, 012101.

- Alencar, C.D.C.; Butler, B.E.; Lomber, S.G. What and how the deaf brain sees. J. Cogn. Neurosci. 2019, 31, 1091–1109.

- Scott, G.D.; Karns, C.M.; Dow, M.W.; Estevens, C.; Neville, H.J. Enhanced peripheral visual processing in congenitally deaf humans is supported by multiple brain regions, including primary auditory cortex. Front. Hum. Neurosci. 2014, 8, 177.

- Benetti, S.; Van Ackeren, M.J.; Rabini, G.; Zonca, J.; Foa, V.; Baruffaldi, F.; Rezk, M.; Pavani, F.; Rossion, B.; Collignon, O. Functional selectivity for face processing in the temporal voice area of early deaf individuals. Proc. Natl. Acad. Sci. USA 2017, 114, E6437–E6446.

- Bola, Ł.; Zimmermann, M.; Mostowski, P.; Jednoróg, K.; Marchewka, A.; Rutkowski, P.; Szwed, M. Task-specific reorganization of the auditory cortex in deaf humans. Proc. Natl. Acad. Sci. USA 2017, 114, E600–E609.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).