Familiarity of Background Music Modulates the Cortical Tracking of Target Speech at the “Cocktail Party”

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Stimuli and Task

2.3. EEG Recording Procedures

2.4. Behavioral Data Analysis

2.5. Electrophysiological Data Analysis: Temporal Response Functions (TRFs)
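Section 2.5 names temporal response functions (TRFs) as the electrophysiological analysis. The sketch below is a generic illustration only and is not the authors' pipeline, whose parameters are not given here. A forward TRF can be estimated as ridge-regularized linear regression that maps time-lagged copies of a stimulus feature (for example, the speech envelope) onto the EEG. This is the same general approach implemented in the MATLAB mTRF toolbox. The sampling rate, lag window, regularization value `lam`, the helper names `lag_matrix`, `fit_trf`, `predict_eeg`, and `prediction_accuracy`, and the synthetic data are all hypothetical. No intercept is fitted, and preprocessing (filtering, resampling, normalization) is assumed to have been done beforehand.

```python
import numpy as np


def lag_matrix(stim: np.ndarray, lags: np.ndarray) -> np.ndarray:
    """Time-lagged design matrix: column j holds `stim` delayed by lags[j] samples.

    For a positive lag k, row t contains stim[t - k] (zero-padded at the edges),
    so the model can express an EEG response that follows the stimulus.
    """
    n = len(stim)
    X = np.zeros((n, len(lags)))
    for j, k in enumerate(lags):
        if k >= 0:
            X[k:, j] = stim[: n - k]
        else:
            X[: n + k, j] = stim[-k:]
    return X


def fit_trf(stim: np.ndarray, eeg: np.ndarray, lags: np.ndarray, lam: float) -> np.ndarray:
    """Forward TRF by ridge regression: w = (X^T X + lam * I)^(-1) X^T y.

    `eeg` may be 1-D (one channel) or 2-D (samples x channels).
    Hypothetical helper: no intercept term is fitted.
    """
    X = lag_matrix(stim, lags)
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ eeg)


def predict_eeg(stim: np.ndarray, w: np.ndarray, lags: np.ndarray) -> np.ndarray:
    """Convolve the stimulus with the TRF weights to predict the EEG."""
    return lag_matrix(stim, lags) @ w


def prediction_accuracy(stim: np.ndarray, eeg: np.ndarray, w: np.ndarray, lags: np.ndarray) -> float:
    """Pearson r between predicted and recorded EEG for a single channel."""
    return float(np.corrcoef(predict_eeg(stim, w, lags), eeg)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs = 64                                   # hypothetical sampling rate (Hz)
    lags = np.arange(0, 26)                   # 0 to ~390 ms at 64 Hz (hypothetical window)
    n = fs * 60                               # one minute of synthetic data
    stim = np.abs(rng.standard_normal(n))     # stand-in for a speech envelope
    true_w = np.exp(-lags / 5.0) * np.sin(lags / 3.0)  # made-up "true" response
    eeg = predict_eeg(stim, true_w, lags) + 0.1 * rng.standard_normal(n)
    w = fit_trf(stim, eeg, lags, lam=1.0)
    # In-sample check on synthetic data only; not a cross-validated estimate.
    print("max |w - true_w|:", np.max(np.abs(w - true_w)))
    print("prediction r:", prediction_accuracy(stim, eeg, w, lags))
```

A real analysis would typically choose `lam` by cross-validation and report prediction accuracy on held-out data. The in-sample correlation printed by the demo is only a sanity check that the regression recovers a known kernel.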

2.6. Statistical Analyses

3. Results

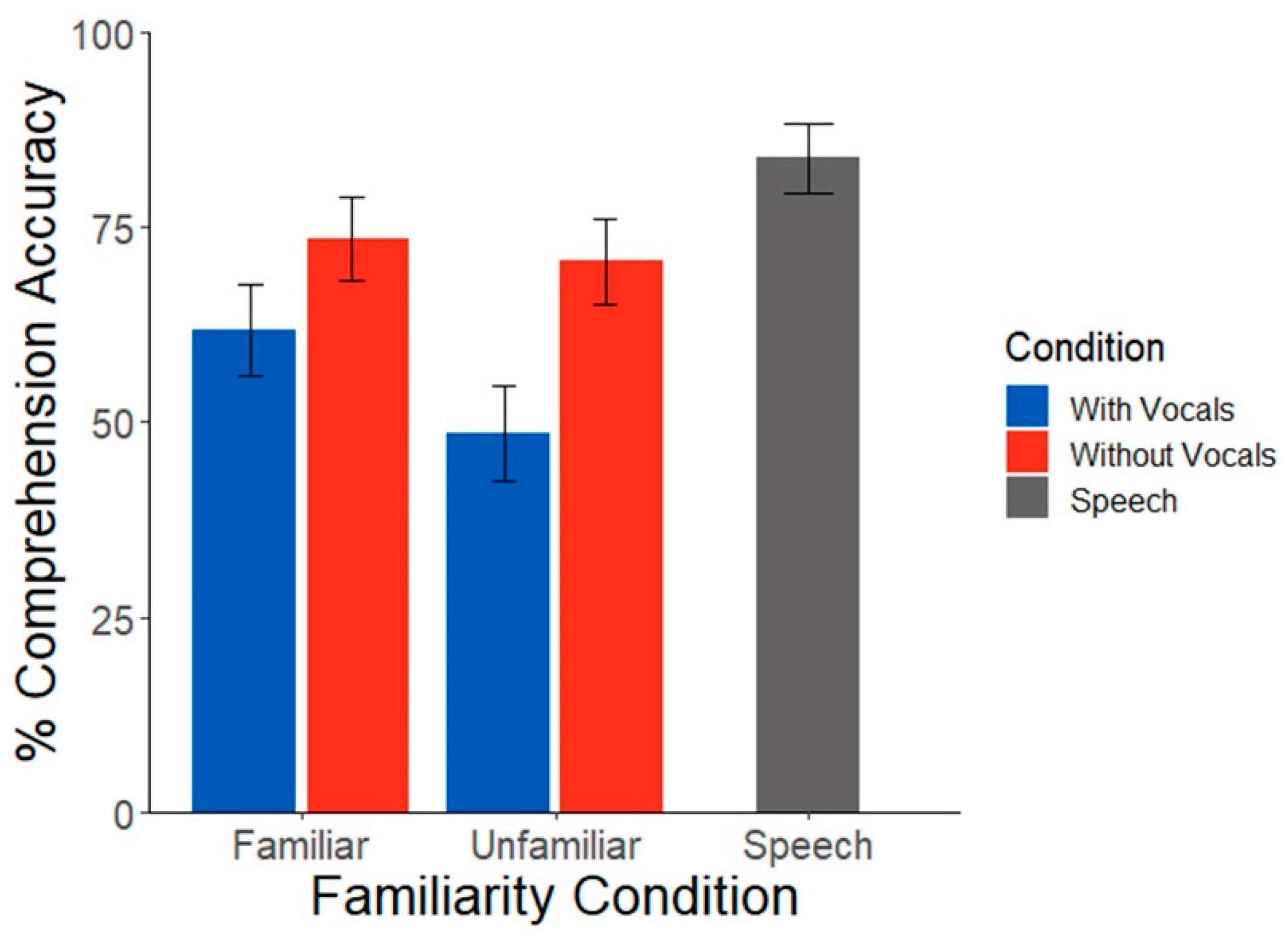

3.1. Behavioral Data

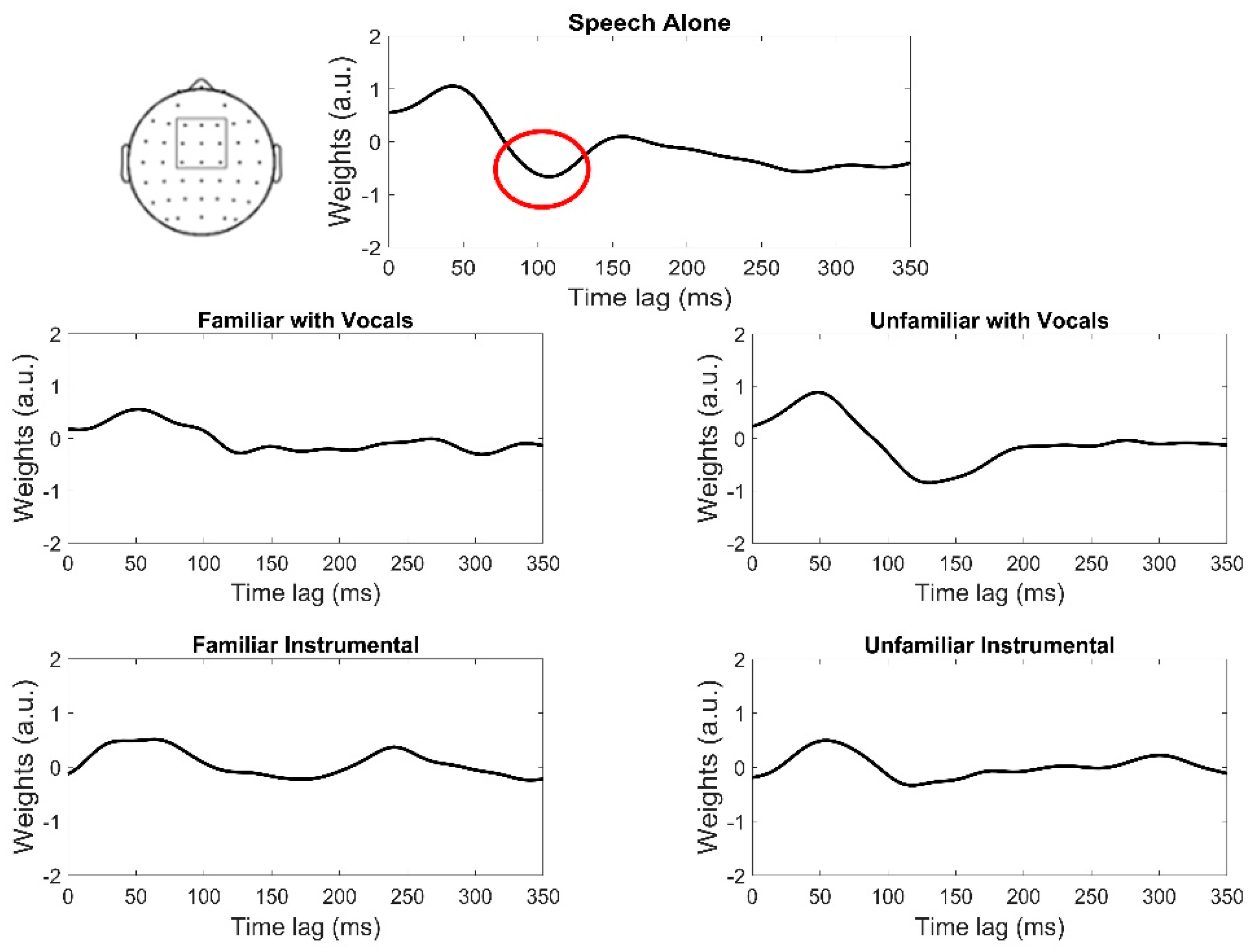

3.2. Electrophysiological Data

3.3. Neural Speech Tracking as a Function of Listeners’ Musicality

3.3.1. Behavioral Data

3.3.2. Electrophysiological Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Brown, J.A.; Bidelman, G.M. Familiarity of Background Music Modulates the Cortical Tracking of Target Speech at the “Cocktail Party”. Brain Sci. 2022, 12, 1320. https://doi.org/10.3390/brainsci12101320
