Examining Individual Differences in Singing, Musical and Tone Language Ability in Adolescents and Young Adults with Dyslexia

Abstract

:1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Musical Background

2.3. Measuring Mandarin Ability

2.3.1. Tone Discrimination Task (Mandarin D)

2.3.2. Syllable Tone Recognition Task (Mandarin S)

2.3.3. Mandarin Pronunciation Task (Mandarin P)

2.4. Gordon’s Musical Ability Test

2.5. Tone Frequency and Duration Test

2.6. Singing Ability and Singing Behavior during Childhood

2.7. Neurophysiological Measurement: Magnetencephalography (MEG)

2.8. Statistical Analysis

3. Results

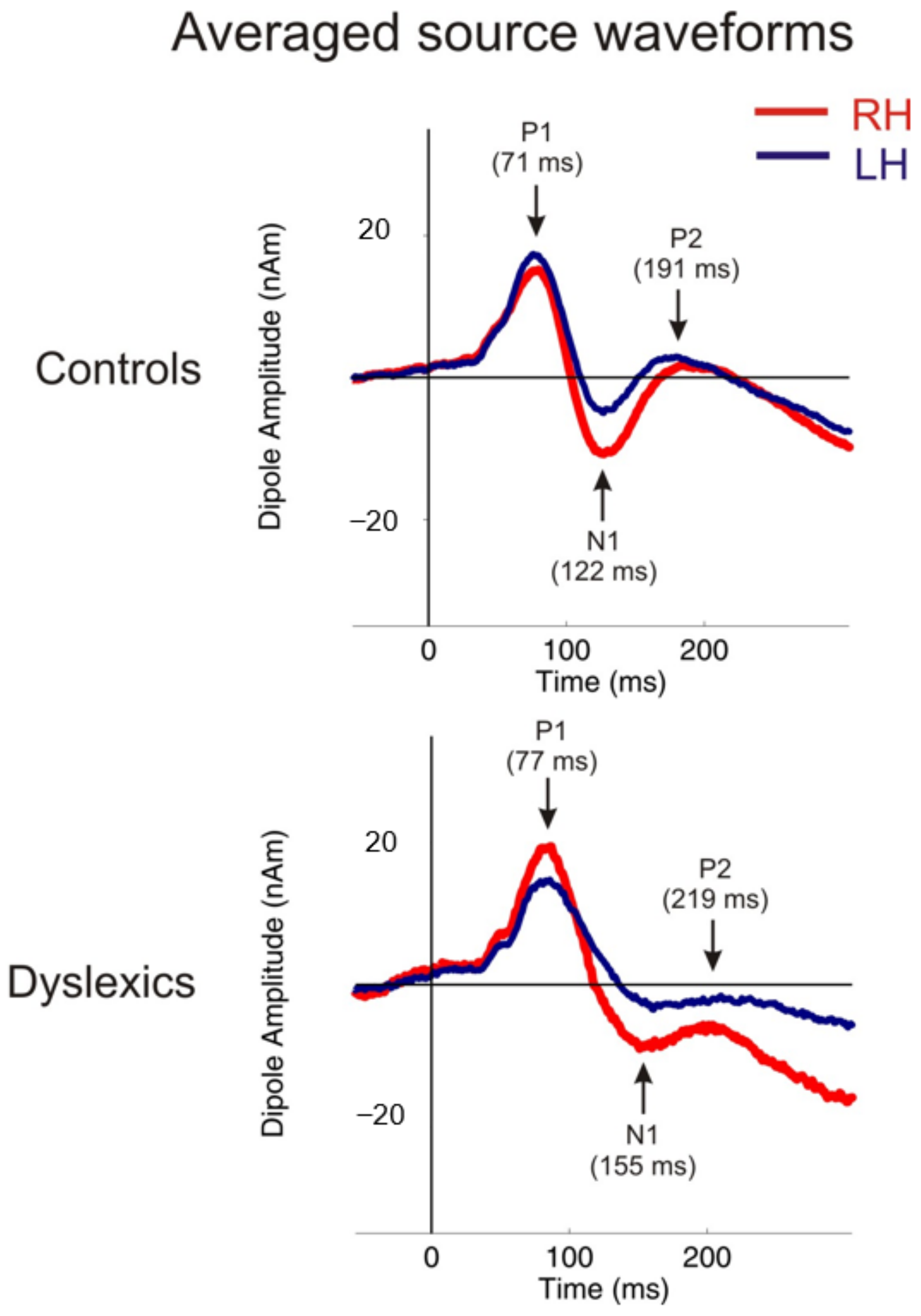

3.1. MEG Variables

3.1.1. Independent t-Tests: MEG

| Variables | Controls: Mean ± SE | Controls: Min.|Max. | Dyslexia: Mean ± SE | Dyslexia: Min.|Max. | p | r |

|---|---|---|---|---|---|---|

| P1 latency right and left (mean) + | 70.6 4 ± 1.94 | 56.50|97.50 | 77.71 ± 2.75 | 59.50|101.00 | p < 0.041 | r = 0.29 |

| absolute P1 latency asynchrony |R-L| | 3.89 ± 0.75 | 0.00|13.00 | 8.65 ± 2.66 | 1.00|70.00 | p < 0.091 | r = 0.24 |

| N1 latency right and left (mean) + | 121.52 ± 2.38 | 104.00|163.00 | 154.58 ± 9.18 | 106.50|236.50 | p < 0.001 | r = 0.44 |

| absolute N1 latency asynchrony |R-L| | 9.62 ± 2.53 | 0.00|52.00 | 16.85 ± 6.16 | 4.00|141.00 | p < 0.283 | r = 0.15 |

| P2 latency right and left (mean) + | 191.15 ± 6.29 | 144.00|251.50 | 219.50 ± 12.31 | 144.50|357.00 | p < 0.046 | r = 0.28 |

| absolute P2 latency asynchrony |R-L| | 11.81 ± 2.37 | 0.20|28.80 | 21.27 ± 4.56 | 0.10|31.20 | p < 0.071 | r = 0.25 |

3.1.2. Discriminant Analysis: MEG

| MEG Predictors | |

|---|---|

| N1 latency right and left (mean) | 0.736 |

| P1 latency right and left (mean) | 0.444 |

| P2 latency right and left (mean) | 0.433 |

| absolute P2 latency asynchrony |R-L| | 0.389 |

| absolute P1 latency asynchrony |R-L| | 0.364 |

| absolute P2 latency asynchrony |R-L| | 0.229 |

3.2. Behavioral Variables

3.2.1. Independent t-Tests: Behavioral

3.2.2. Discriminant Analysis: Behavioral

3.3. Correlational Analysis

4. Discussion

5. Implications and Future Research Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Polanczyk, G.; de Lima, M.S.; Horta, B.L.; Biederman, J.; Rohde, L.A. The worldwide prevalence of ADHD: A systematic review and metaregression analysis. Am. J. Psychiatry 2007, 164, 942–948. [Google Scholar] [CrossRef]

- Shaywitz, S.E.; Shaywitz, B.A. Dyslexia (specific reading disability). Pediatrics Rev. 2003, 24, 147–153. [Google Scholar] [CrossRef]

- Seither-Preisler, A.; Parncutt, R.; Schneider, P. Size and synchronization of auditory cortex promotes musical, literacy, and attentional skills in children. J. Neurosci. 2014, 34, 10937–10949. [Google Scholar] [CrossRef] [Green Version]

- Hämäläinen, J.A.; Lohvansuu, K.; Ervast, L.; Leppänen, P.H.T. Event-related potentials to tones show differences between children with multiple risk factors for dyslexia and control children before the onset of formal reading instruction. Int. J. Psychophysiol. 2015, 95, 101–112. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tallal, P.; Miller, S.; Fitch, R.H. Neurobiological basis of speech: A case for the preeminence of temporal processing. Ann. N. Y. Acad. Sci. 1993, 682, 27–47. [Google Scholar] [CrossRef]

- Huss, M.; Verney, J.P.; Fosker, T.; Mead, N.; Goswami, U. Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex 2011, 47, 674–689. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Groß, C.; Serrallach, B.L.; Möhler, E.; Pousson, J.E.; Schneider, P.; Christiner, M.; Bernhofs, V. Musical Performance in Adolescents with ADHD, ADD and Dyslexia—Behavioral and Neurophysiological Aspects. Brain Sci. 2022, 12, 127. [Google Scholar] [CrossRef] [PubMed]

- Serrallach, B.; Groß, C.; Bernhofs, V.; Engelmann, D.; Benner, J.; Gündert, N.; Blatow, M.; Wengenroth, M.; Seitz, A.; Brunner, M.; et al. Neural biomarkers for dyslexia, ADHD, and ADD in the auditory cortex of children. Front. Neurosci. 2016, 10, 324. [Google Scholar] [CrossRef] [Green Version]

- Turker, S.; Reiterer, S.M.; Seither-Preisler, A.; Schneider, P. “When Music Speaks”: Auditory Cortex Morphology as a Neuroanatomical Marker of Language Aptitude and Musicality. Front. Psychol. 2017, 8, 2096. [Google Scholar] [CrossRef] [Green Version]

- Schneider, P.; Scherg, M.; Dosch, H.G.; Specht, H.J.; Gutschalk, A.; Rupp, A. Morphology of Heschl’s gyrus reflects enhanced activation in the auditory cortex of musicians. Nat. Neurosci. 2002, 5, 688–694. [Google Scholar] [CrossRef]

- Sharma, A.; Kraus, N.; McGee, T.J.; Nicol, T.G. Developmental changes in P1 and N1 central auditory responses elicited by consonant-vowel syllables. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials Sect. 1997, 104, 540–545. [Google Scholar] [CrossRef]

- Wengenroth, M.; Blatow, M.; Heinecke, A.; Reinhardt, J.; Stippich, C.; Hofmann, E.; Schneider, P. Increased volume and function of right auditory cortex as a marker for absolute pitch. Cereb. Cortex 2014, 24, 1127–1137. [Google Scholar] [CrossRef] [Green Version]

- Benner, J.; Wengenroth, M.; Reinhardt, J.; Stippich, C.; Schneider, P.; Blatow, M. Prevalence and function of Heschl’s gyrus morphotypes in musicians. Brain Struct. Funct. 2017, 222, 3587–3603. [Google Scholar] [CrossRef]

- Tremblay, K.L.; Ross, B.; Inoue, K.; McClannahan, K.; Collet, G. Is the auditory evoked P2 response a biomarker of learning? Front. Syst. Neurosci. 2014, 8, 28. [Google Scholar] [CrossRef] [Green Version]

- Seppänen, M.; Hämäläinen, J.; Pesonen, A.-K.; Tervaniemi, M. Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds. Front. Hum. Neurosci. 2012, 6, 43. [Google Scholar] [CrossRef] [Green Version]

- Shahin, A.; Roberts, L.E.; Trainor, L.J. Enhancement of auditory cortical development by musical experience in children. NeuroReport 2004, 15, 1917. [Google Scholar] [CrossRef]

- Shahin, A.; Roberts, L.E.; Pantev, C.; Trainor, L.J.; Ross, B. Modulation of P2 auditory-evoked responses by the spectral complexity of musical sounds. NeuroReport 2005, 16, 1781–1785. [Google Scholar] [CrossRef] [PubMed]

- Kraus, N.; Chandrasekaran, B. Music training for the development of auditory skills. Nat. Rev. Neurosci. 2010, 11, 599–605. [Google Scholar] [CrossRef]

- Kraus, N.; White-Schwoch, T. Neurobiology of Everyday Communication: What Have We Learned from Music? Neuroscientist 2017, 23, 287–298. [Google Scholar] [CrossRef]

- Magne, C.; Schön, D.; Besson, M. Musician Children Detect Pitch Violations in Both Music and Language Better than Nonmusician Children: Behavioral and Electrophysiological Approaches. J. Cogn. Neurosci. 2006, 18, 199–211. [Google Scholar] [CrossRef]

- Musso, M.; Fürniss, H.; Glauche, V.; Urbach, H.; Weiller, C.; Rijntjes, M. Musicians use speech-specific areas when processing tones: The key to their superior linguistic competence? Behav. Brain Res. 2020, 390, 112662. [Google Scholar] [CrossRef] [PubMed]

- Wong, P.C.M.; Skoe, E.; Russo, N.M.; Dees, T.; Kraus, N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci. 2007, 10, 420–422. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Franklin, M.S.; Sledge Moore, K.; Yip, C.-Y.; Jonides, J.; Rattray, K.; Moher, J. The effects of musical training on verbal memory. Psychol. Music 2008, 36, 353–365. [Google Scholar] [CrossRef] [Green Version]

- Jentschke, S.; Koelsch, S. Musical training modulates the development of syntax processing in children. NeuroImage 2009, 47, 735–744. [Google Scholar] [CrossRef]

- Milovanov, R. Musical aptitude and foreign language learning skills: Neural and behavioural evidence about their connections, 2009. In Proceedings of the 7th 94 Triennial Conference of European Society for the Cognitive Sciences of Music (ESCOM 2009), Jyväskylä, Finland, 12–16 August 2009; pp. 338–342. Available online: https://jyx.jyu.fi/dspace/bitstream/handle/123456789/20935/urn_nbn_fi_jyu-2009411285.pdf (accessed on 9 October 2012).

- Milovanov, R.; Tervaniemi, M. The Interplay between musical and linguistic aptitudes: A review. Front. Psychol. 2011, 2, 321. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Christiner, M.; Reiterer, S.M. Song and speech: Examining the link between singing talent and speech imitation ability. Front. Psychol. 2013, 4, 874. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Christiner, M.; Reiterer, S.M. A Mozart is not a Pavarotti: Singers outperform instrumentalists on foreign accent imitation. Front. Hum. Neurosci. 2015, 9, 482. [Google Scholar] [CrossRef] [Green Version]

- Christiner, M.; Reiterer, S.M. Early influence of musical abilities and working memory on speech imitation abilities: Study with pre-school children. Brain Sci. 2018, 8, 169. [Google Scholar] [CrossRef] [Green Version]

- Christiner, M.; Rüdegger, S.; Reiterer, S.M. Sing Chinese and tap Tagalog? Predicting individual differences in musical and phonetic aptitude using language families differing by sound-typology. Int. J. Multiling. 2018, 15, 455–471. [Google Scholar] [CrossRef] [Green Version]

- Coumel, M.; Christiner, M.; Reiterer, S.M. Second language accent faking ability depends on musical abilities, not on working memory. Front. Psychol. 2019, 10, 257. [Google Scholar] [CrossRef]

- Fonseca-Mora, C.; Jara-Jiménez, P.; Gómez-Domínguez, M. Musical plus phonological input for young foreign language readers. Front. Psychol. 2015, 6, 286. [Google Scholar] [CrossRef] [Green Version]

- Golestani, N.; Pallier, C. Anatomical correlates of foreign speech sound production. Cereb. Cortex 2007, 17, 929–934. [Google Scholar] [CrossRef] [PubMed]

- Berkowska, M.; Dalla Bella, S. Acquired and congenital disorders of sung performance: A review. Adv. Cogn. Psychol. 2009, 5, 69–83. [Google Scholar] [CrossRef]

- Christiner, M.; Reiterer, S. Music, song and speech. In The Internal Context of Bilingual Processing; Truscott, J., Sharwood Smith, M., Eds.; John Benjamins: Amsterdam, The Netherlands, 2019; pp. 131–156. [Google Scholar]

- Christiner, M. Musicality and Second Language Acquisition: Singing and Phonetic Language Aptitude Phonetic Language Aptitude. Ph.D. Thesis, University of Vienna, Vienna, Austria, 2020. [Google Scholar]

- Patel, A.D. Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Front. Psychol. 2011, 2, 142. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Moreno, S. Can music influence language and cognition? Contemp. Music Rev. 2009, 28, 329–345. [Google Scholar] [CrossRef]

- Chao, Y.R. A Grammar of Spoken Chinese; University of California Press: Berkeley, CA, USA, 1965. [Google Scholar]

- Bidelman, G.M.; Hutka, S.; Moreno, S. Tone language speakers and musicians share enhanced perceptual and cognitive abilities for musical pitch: Evidence for bidirectionality between the domains of language and music. PLoS ONE 2013, 8, e60676. [Google Scholar] [CrossRef] [Green Version]

- Lee, C.-Y.; Hung, T.-H. Identification of Mandarin tones by English-speaking musicians and nonmusicians. J. Acoust. Soc. Am. 2008, 124, 3235–3248. [Google Scholar] [CrossRef]

- Han, Y.; Goudbeek, M.; Mos, M.; Swerts, M. Mandarin Tone Identification by Tone-Naïve Musicians and Non-musicians in Auditory-Visual and Auditory-Only Conditions. Front. Commun. 2019, 4, 70. [Google Scholar] [CrossRef] [Green Version]

- Apfelstadt, H. Effects of melodic perception instruction on pitch discrimination and vocal accuracy of kindergarten children. J. Res. Music. Educ. 1984, 32, 15–24. [Google Scholar] [CrossRef]

- Ludke, K.M.; Ferreira, F.; Overy, K. Singing can facilitate foreign language learning. Mem. Cogn. 2014, 42, 41–52. [Google Scholar] [CrossRef]

- Christiner, M.; Bernhofs, V.; Groß, C. Individual Differences in Singing Behavior during Childhood Predicts Language Performance during Adulthood. Languages 2022, 7, 72. [Google Scholar] [CrossRef]

- Christiner, M.; Gross, C.; Seither-Preisler, A.; Schneider, P. The Melody of Speech: What the Melodic Perception of Speech Reveals about Language Performance and Musical Abilities. Languages 2021, 6, 132. [Google Scholar] [CrossRef]

- Flege, J.E.; Hammond, R.M. Mimicry of Non-distinctive Phonetic Differences Between Language Varieties. Stud. Sec. Lang. Acquis. 1982, 5, 1–17. [Google Scholar] [CrossRef] [Green Version]

- Christiner, M. Singing Performance and Language Aptitude: Behavioural Study on Singing Performance and Its Relation to the Pronunciation of a Second Language. Master’s Thesis, University of Vienna, Vienna, Austria, 2013. [Google Scholar]

- Christiner, M. Let the music speak: Examining the relationship between music and language aptitude in pre-school children. In Exploring Language Aptitude: Views from Psychology, the Language Sciences, and Cognitive Neuroscience; Reiterer, S.M., Ed.; Springer Nature: Cham, Switzerland, 2018; pp. 149–166. [Google Scholar]

- Hebert, M.; Kearns, D.M.; Hayes, J.B.; Bazis, P.; Cooper, S. Why Children with Dyslexia Struggle with Writing and How to Help Them. Lang. Speech Hear. Serv. Sch. 2018, 49, 843–863. [Google Scholar] [CrossRef]

- Tierney, A.; Kraus, N. Auditory-motor entrainment and phonological skills: Precise auditory timing hypothesis (PATH). Front. Hum. Neurosci. 2014, 8, 949. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gromko, J.E. The Effect of Music Instruction on Phonemic Awareness in Beginning Readers. J. Res. Music. Educ. 2005, 53, 199–209. [Google Scholar] [CrossRef]

- Christiner, M.; Renner, J.; Christine, G.; Seither-Preisler, A.; Jan, B.; Schneider, P. Singing Mandarin? What elementary short-term memory capacity, basic auditory skills, musical and singing abilities reveal about learning Mandarin. Front. Psychol. 2022. [Google Scholar] [CrossRef]

- Lenhard, W. ELFE 1–6. Ein Leseverständnistest für Erst-bis Sechstklässler; Hogrefe: Göttingen, Germany, 2006. [Google Scholar]

- Brunner, M.; Baeumer, C.; Dockter, S.; Feldhusen, F.; Plinkert, P.; Proeschel, U. Heidelberg Phoneme Discrimination Test (HLAD): Normative data for children of the third grade and correlation with spelling ability. Folia Phoniatr. Logop. 2008, 60, 157–161. [Google Scholar] [CrossRef]

- Serrallach, B.L.; Groß, C.; Christiner, M.; Wildermuth, S.; Schneider, P. Neuromorphological and Neurofunctional Correlates of ADHD and ADD in the Auditory Cortex of Adults. Front. Neurosci. 2022, 16, 631. [Google Scholar] [CrossRef] [PubMed]

- Carroll, J.B.; Sapon, S. Modern Language Aptitude Test (MLAT); The Psychological Corporation: New York, NY, USA, 1959. [Google Scholar]

- Meara, P. LLAMA Language Aptitude Tests; Lognostics: Swansea, UK, 2005. [Google Scholar]

- Gordon, E. Advanced Measures of Music Audiation; GIA Publications Inc.: Chicago, IL, USA, 1989. [Google Scholar]

- Jepsen, M.L.; Ewert, S.D.; Dau, T. A computational model of human auditory signal processing and perception. J. Acoust. Soc. Am. 2008, 124, 422–438. [Google Scholar] [CrossRef] [Green Version]

- Dalla Bella, S.; Giguère, J.-F.; Peretz, I. Singing proficiency in the general population. J. Acoust. Soc. Am. 2007, 121, 1182–1189. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dalla Bella, S.; Berkowska, M. Singing proficiency in the majority: Normality and “phenotypes” of poor singing. Ann. N. Y. Acad. Sci. 2009, 1169, 99–107. [Google Scholar] [CrossRef] [PubMed]

- Welch, G.F.; Himonides, E.; Saunders, J.; Papageorgi, I.; Rinta, T.; Preti, C.; Stewart, C.; Lani, J.; Hill, J. Researching the first year of the national singing programme sing up in England: An initial impact evaluation. Psychomusicology 2011, 21, 83–97. [Google Scholar] [CrossRef]

- Schneider, P.; Sluming, V.; Roberts, N.; Bleeck, S.; Rupp, A. Structural, functional, and perceptual differences in Heschl’s gyrus and musical instrument preference. Ann. N. Y. Acad. Sci. 2005, 1060, 387–394. [Google Scholar] [CrossRef]

- Hämäläinen, M.S.; Sarvas, J. Feasibility of the homogeneous head model in the interpretation of neuromagnetic fields. Phys. Med. Biol. 1987, 32, 91–97. [Google Scholar] [CrossRef]

- Sarvas, J. Basic mathematical and electromagnetic concepts of the biomagnetic inverse problem. Phys. Med. Biol. 1987, 32, 11–22. [Google Scholar] [CrossRef]

- Ponton, C.W.; Don, M.; Eggermont, J.J.; Waring, M.D.; Masuda, A. Maturation of Human Cortical Auditory Function: Differences between Normal-Hearing Children and Children with Cochlear Implants. Ear Hear. 1996, 17, 430. [Google Scholar] [CrossRef]

- Warner, R.M. Applied Statistics: From Bivariate through Multivariate Techniques, 2nd ed.; Sage: Los Angeles, CA, USA, 2013. [Google Scholar]

- Butler, B.E.; Trainor, L.J. Sequencing the Cortical Processing of Pitch-Evoking Stimuli Using EEG Analysis and Source Estimation. Front. Psychol. 2012, 3, 180. [Google Scholar] [CrossRef] [Green Version]

- Näätänen, R.; Picton, T. The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology 1987, 24, 375–425. [Google Scholar] [CrossRef] [PubMed]

- Sharma, A.; Campbell, J.; Cardon, G. Developmental and cross-modal plasticity in deafness: Evidence from the P1 and N1 event related potentials in cochlear implanted children. Int. J. Psychophysiol. 2015, 95, 135–144. [Google Scholar] [CrossRef] [Green Version]

- Näätänen, R.; Winkler, I. The concept of auditory stimulus representation in cognitive neuroscience. Psychol. Bull. 1999, 125, 826–859. [Google Scholar] [CrossRef]

- Giard, M.H.; Perrin, F.; Echallier, J.F.; Thévenet, M.; Froment, J.C.; Pernier, J. Dissociation of temporal and frontal components in the human auditory N1 wave: A scalp current density and dipole model analysis. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials Sect. 1994, 92, 238–252. [Google Scholar] [CrossRef]

- Sharma, A.; Martin, K.; Roland, P.; Bauer, P.; Sweeney, M.H.; Gilley, P.; Dorman, M. P1 latency as a biomarker for central auditory development in children with hearing impairment. J. Am. Acad. Audiol. 2005, 16, 564–573. [Google Scholar] [CrossRef]

- Tremblay, K.; Kraus, N.; McGee, T.; Ponton, C.; Otis, B. Central auditory plasticity: Changes in the N1-P2 complex after speech-sound training. Ear Hear. 2001, 22, 79–90. [Google Scholar] [CrossRef]

- Seither-Preisler, A.; Schneider, P. Positive Effekte des Musizierens auf Wahrnehmung und Kognition aus Neurowissenschaftlicher Perspektive. In Musik und Medizin: Chancen für Therapie, Prävention und Bildung; Bernatzky, G., Kreutz, G., Eds.; Springer Vienna: Vienna, Austria, 2015; pp. 375–393. [Google Scholar]

- Goswami, U. A temporal sampling framework for developmental dyslexia. Trends Cogn. Sci. 2011, 15, 3–10. [Google Scholar] [CrossRef] [PubMed]

- Fiveash, A.; Bedoin, N.; Gordon, R.L.; Tillmann, B. Processing rhythm in speech and music: Shared mechanisms and implications for developmental speech and language disorders. Neuropsychology 2021, 35, 771–791. [Google Scholar] [CrossRef]

- Trainor, L.J.; Shahin, A.; Roberts, L.E. Effects of musical training on the auditory cortex in children. Ann. N. Y. Acad. Sci. 2003, 999, 506–513. [Google Scholar] [CrossRef]

- Baumann, S.; Meyer, M.; Jäncke, L. Enhancement of auditory-evoked potentials in musicians reflects an influence of expertise but not selective attention. J. Cogn. Neurosci. 2008, 20, 2238–2249. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Halwani, G.F.; Loui, P.; Rüber, T.; Schlaug, G. Effects of practice and experience on the arcuate fasciculus: Comparing singers, instrumentalists, and non-musicians. Front. Psychol. 2011, 2, 156. [Google Scholar] [CrossRef] [Green Version]

- Kleber, B.; Veit, R.; Birbaumer, N.; Gruzelier, J.; Lotze, M. The brain of opera singers: Experience-dependent changes in functional activation. Cereb. Cortex 2010, 20, 1144–1152. [Google Scholar] [CrossRef] [Green Version]

- Nan, Y.; Liu, L.; Geiser, E.; Shu, H.; Gong, C.C.; Dong, Q.; Gabrieli, J.D.E.; Desimone, R. Piano training enhances the neural processing of pitch and improves speech perception in Mandarin-speaking children. Proc. Natl. Acad. Sci. USA 2018, 115, E6630–E6639. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Landerl, K.; Ramus, F.; Moll, K.; Lyytinen, H.; Leppänen, P.H.T.; Lohvansuu, K.; O’Donovan, M.; Williams, J.; Bartling, J.; Bruder, J. Predictors of developmental dyslexia in European orthographies with varying complexity. J. Child Psychol. Psychiatry 2013, 54, 686–694. [Google Scholar] [CrossRef]

- Schlaug, G.; Norton, A.; Marchina, S.; Zipse, L.; Wan, C.Y. From singing to speaking: Facilitating recovery from nonfluent aphasia. Future Neurol. 2010, 5, 657–665. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Falk, S.; Schreier, R.; Russo, F.A. Singing and stuttering. In The Routledge Companion to Interdisciplinary Studies in Singing: Volume III: Wellbeing; Heydon, R., Fancourt, D., Cohen, A.J., Eds.; Routledge: New York, NY, USA, 2020; pp. 50–60. [Google Scholar]

- Stager, S.V.; Jeffries, K.J.; Braun, A.R. Common features of fluency-evoking conditions studied in stuttering subjects and controls: An H215O PET study. J. Fluen. Disord. 2003, 28, 319–336. [Google Scholar] [CrossRef] [PubMed]

| Variables | Controls: Mean ± SE | Controls: Min.|Max. | Dyslexia: Mean ± SE | Dyslexia: Min.|Max. | p | r |

|---|---|---|---|---|---|---|

| Mandarin D: Tone discrimination + | 6.27 ± 1.71 | 2.00|8.00 | 5.23 ± 1.42 | 3.00|8.00 | p < 0.021 | r = 0.32 |

| Mandarin S: Syllable tone recognition + | 7.19 ± 0.32 | 4.00|10.00 | 5.46 ± 0.39 | 1.00|9.00 | p < 0.001 | r = 0.43 |

| Mandarin P + | 4.50 ± 0.22 | 2.34|6.03 | 3.26 ± 0.20 | 1.71|5.64 | p < 0.000 | r = 0.51 |

| Singing total + | 6.47 ± 0.22 | 4.19|8.81 | 5.38 ± 0.17 | 3.81|6.81 | p < 0.000 | r = 0.49 |

| AMMA total + | 52.77 ± 1.53 | 41.00|76.00 | 44.69 ± 1.48 | 30.00|58.00 | p < 0.000 | r = 0.47 |

| Duration | 39.78 ± 3.78 | 9.50|107.70 | 50.04 ± 4.67 | 15.70|93.80 | p < 0.094 | r = 0.26 |

| Tone Frequency + | 14.87 ± 2.68 | 1.30|70.80 | 45.29 ± 5.30 | 5.10|104.60 | p < 0.000 | r = 0.55 |

| Behavioral Predictors | |

|---|---|

| Tone frequency | −0.621 |

| Mandarin P | 0.537 |

| Singing total | 0.506 |

| AMMA total | 0.482 |

| Mandarin S: Syllable tone recognition | 0.432 |

| Mandarin D: Tone discrimination | 0.302 |

| Duration | −0.217 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Christiner, M.; Serrallach, B.L.; Benner, J.; Bernhofs, V.; Schneider, P.; Renner, J.; Sommer-Lolei, S.; Groß, C. Examining Individual Differences in Singing, Musical and Tone Language Ability in Adolescents and Young Adults with Dyslexia. Brain Sci. 2022, 12, 744. https://doi.org/10.3390/brainsci12060744

Christiner M, Serrallach BL, Benner J, Bernhofs V, Schneider P, Renner J, Sommer-Lolei S, Groß C. Examining Individual Differences in Singing, Musical and Tone Language Ability in Adolescents and Young Adults with Dyslexia. Brain Sciences. 2022; 12(6):744. https://doi.org/10.3390/brainsci12060744

Chicago/Turabian StyleChristiner, Markus, Bettina L. Serrallach, Jan Benner, Valdis Bernhofs, Peter Schneider, Julia Renner, Sabine Sommer-Lolei, and Christine Groß. 2022. "Examining Individual Differences in Singing, Musical and Tone Language Ability in Adolescents and Young Adults with Dyslexia" Brain Sciences 12, no. 6: 744. https://doi.org/10.3390/brainsci12060744

APA StyleChristiner, M., Serrallach, B. L., Benner, J., Bernhofs, V., Schneider, P., Renner, J., Sommer-Lolei, S., & Groß, C. (2022). Examining Individual Differences in Singing, Musical and Tone Language Ability in Adolescents and Young Adults with Dyslexia. Brain Sciences, 12(6), 744. https://doi.org/10.3390/brainsci12060744