Effects of Audiovisual Interactions on Working Memory Task Performance—Interference or Facilitation

Abstract

1. Introduction

1.1. Study of Working Memory

1.2. Study of Audiovisual Interactions

2. Materials and Methods

2.1. Participants

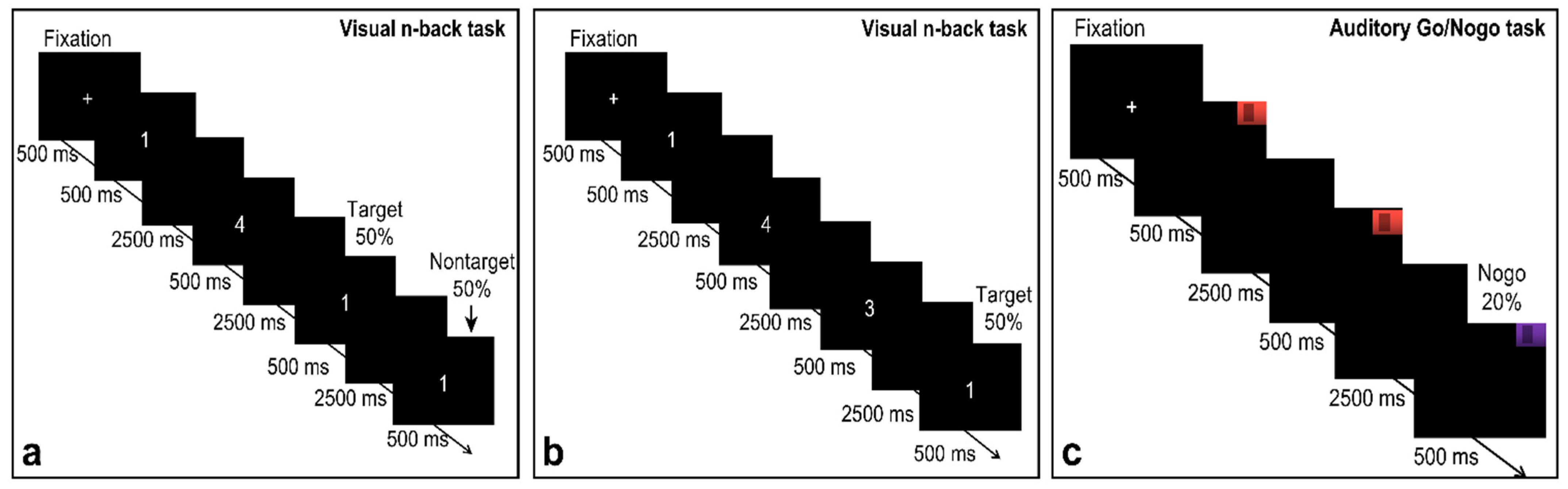

2.2. Tasks and Procedure

2.2.1. Single Visual Working Memory Task

2.2.2. Single Auditory Working Memory Task

2.2.3. Working Memory Tasks Involving Audiovisual Interactions

2.3. Statistical Analysis

3. Results

3.1. Comparisons between the Single Visual n-Back Task and Dual Visual n-Back + Auditory Go/NoGo Task

3.2. Comparisons between the Single Auditory Go/NoGo Task and Dual Auditory Go/NoGo + Visual n-Back Task

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | Single-Task Group | Dual-Task Group | t | p | rpb² |
|---|---|---|---|---|---|
| n | 44 | 44 | - | - | - |
| Age (years) | 20.05 ± 1.08 | 19.75 ± 1.04 | 1.311 | 0.193 | 0.01 |
| IQ | 598.66 ± 30.86 | 606.25 ± 24.889 | −1.270 | 0.207 | 0.02 |
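The table above reports t, p and rpb² for the group comparisons but does not state how they were obtained. The Python sketch below assumes Student's independent-samples t-test computed from the reported group means and standard deviations (with two equal groups of 44, this coincides with Welch's t) and uses the standard identity rpb² = t² / (t² + df). The function names are hypothetical and illustrative, not taken from the authors' analysis.

```python
import math


def independent_t(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t from summary statistics.

    Assumption: pooled-variance (equal-variance) t-test; returns (t, df).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


def r_pb_squared(t, df):
    """Squared point-biserial correlation: t^2 / (t^2 + df)."""
    return t**2 / (t**2 + df)


# IQ row: single-task group vs. dual-task group, n = 44 per group
t_iq, df_iq = independent_t(598.66, 30.86, 44, 606.25, 24.889, 44)
print(f"t({df_iq}) = {t_iq:.3f}, rpb^2 = {r_pb_squared(t_iq, df_iq):.2f}")
```

For the IQ row this reproduces the reported t = −1.270 and rpb² ≈ 0.02. Because the tabulated means and SDs are rounded, values recomputed for other rows may differ slightly from the reported ones.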

| Task Type | Memory Condition | Accuracy | Reaction Time (ms) | Visual Performance |
|---|---|---|---|---|
| Single-task group (visual) | 2-back | 0.94 ± 0.04 | 916.79 ± 254.45 | 0.89 ± 0.07 |
| Dual-task group (audiovisual interaction) | 2-back | 0.94 ± 0.06 | 1099.445 ± 242.55 | 0.86 ± 0.13 |

| Task Type | Memory Condition | Accuracy | Reaction Time (ms) | Visual Performance |
|---|---|---|---|---|
| Single-task group (visual) | 3-back | 0.90 ± 0.08 | 1004.19 ± 290.54 | 0.79 ± 0.18 |
| Dual-task group (audiovisual interaction) | 3-back | 0.83 ± 0.06 | 1167.97 ± 282.59 | 0.66 ± 0.19 |
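As a descriptive complement to the 2-back and 3-back tables, the sketch below computes dual-minus-single differences in mean accuracy and mean reaction time at each memory load, plus the RT difference relative to the single-task mean. It is an illustrative calculation on the reported means only, not the statistical analysis described in Section 2.3: it ignores the standard deviations and tests nothing. The `MEANS` dictionary and the `dual_task_cost` function are hypothetical names.

```python
# Group means transcribed from the 2-back and 3-back tables:
# (accuracy, mean reaction time) for each group at each memory load.
MEANS = {
    "2-back": {"single": (0.94, 916.79), "dual": (0.94, 1099.445)},
    "3-back": {"single": (0.90, 1004.19), "dual": (0.83, 1167.97)},
}


def dual_task_cost(load):
    """Descriptive dual-minus-single differences for one memory load.

    Returns (accuracy difference, RT difference, RT difference relative
    to the single-task mean). Uses means only; no significance test.
    """
    acc_single, rt_single = MEANS[load]["single"]
    acc_dual, rt_dual = MEANS[load]["dual"]
    rt_diff = rt_dual - rt_single
    return acc_dual - acc_single, rt_diff, rt_diff / rt_single


for load in MEANS:
    d_acc, d_rt, rel = dual_task_cost(load)
    print(f"{load}: accuracy {d_acc:+.2f}, RT {d_rt:+.1f} ({rel:+.1%})")
```

Positive RT differences indicate slower mean responses in the dual-task group; the relative difference gives a unit-free way to compare the two loads.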

| Visual Interference Condition | Task Type | Accuracy | Auditory Performance |
|---|---|---|---|
| - | Single-task group (auditory) | 0.98 ± 0.04 | 1.02 ± 0.04 |
| 2-back | Dual-task group (audiovisual interaction) | 0.96 ± 0.05 | 1.05 ± 0.08 |
| 3-back | Dual-task group (audiovisual interaction) | 0.95 ± 0.06 | 1.06 ± 0.08 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Y.; Guo, Z.; Wang, X.; Sun, K.; Lin, X.; Wang, X.; Li, F.; Guo, Y.; Feng, T.; Zhang, J.; et al. Effects of Audiovisual Interactions on Working Memory Task Performance—Interference or Facilitation. Brain Sci. 2022, 12, 886. https://doi.org/10.3390/brainsci12070886
