The Role of the Left Inferior Frontal Gyrus in Introspection during Verbal Communication

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Hyperscanning fMRI System

2.3. Stimulus Presentation

2.4. MRI Data Acquisition

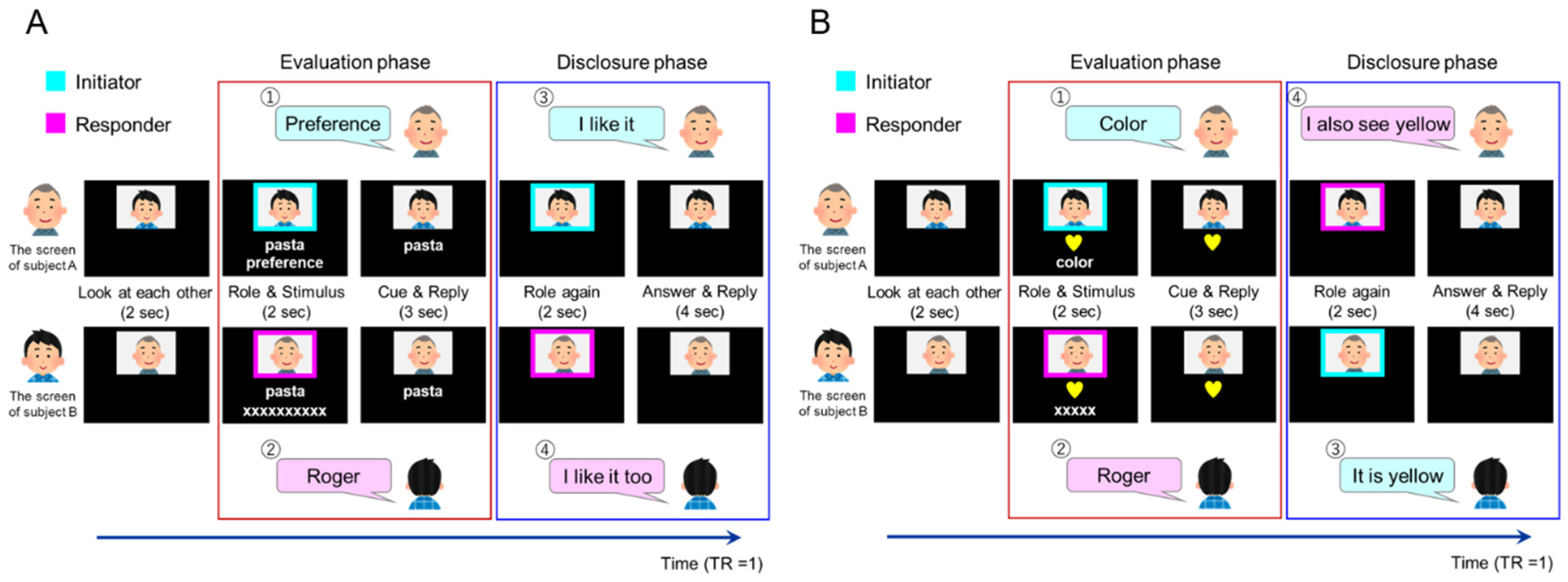

2.5. Task Design

2.5.1. Task Conditions

2.5.2. Introspection (Preference/Familiarity) Tasks

2.5.3. Object Feature Identification Task

2.5.4. Adjustment of the Preference/Familiarity of Target Words

2.6. fMRI Imaging Protocol

2.7. Data Analysis

2.7.1. Image Preprocessing

2.7.2. Statistical Analysis

2.7.3. Generalized PPI Analysis

3. Results

3.1. Behavioral Results

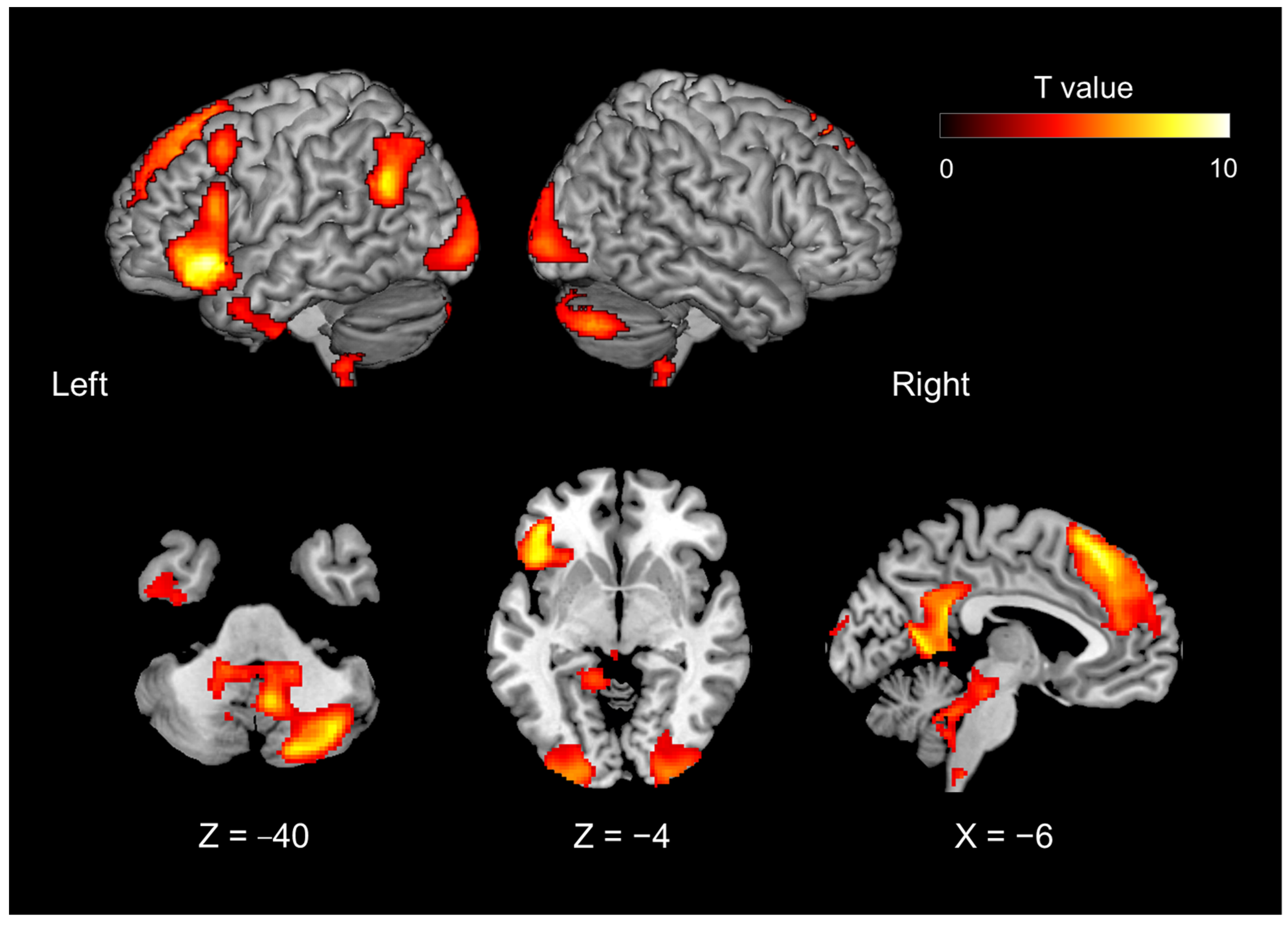

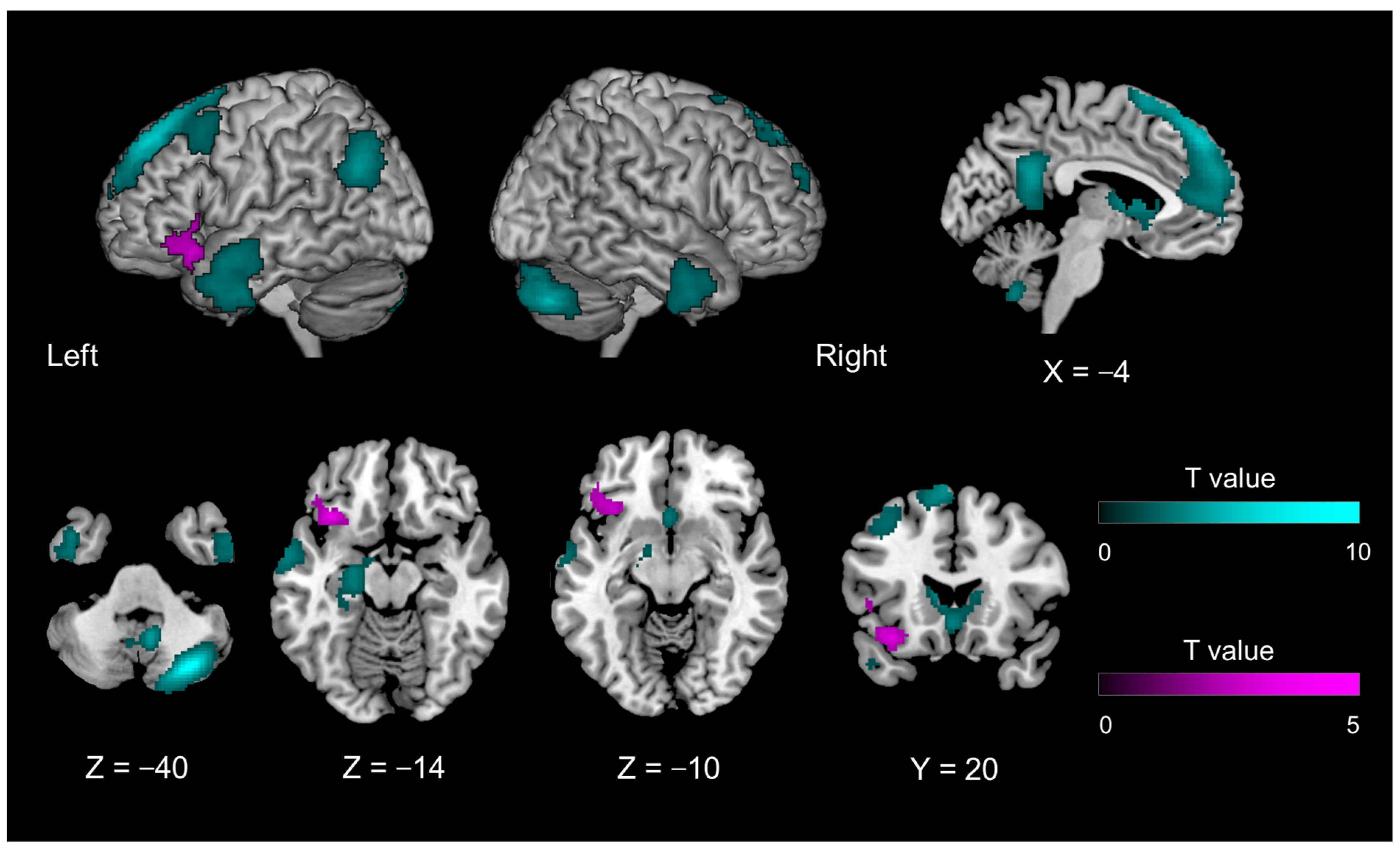

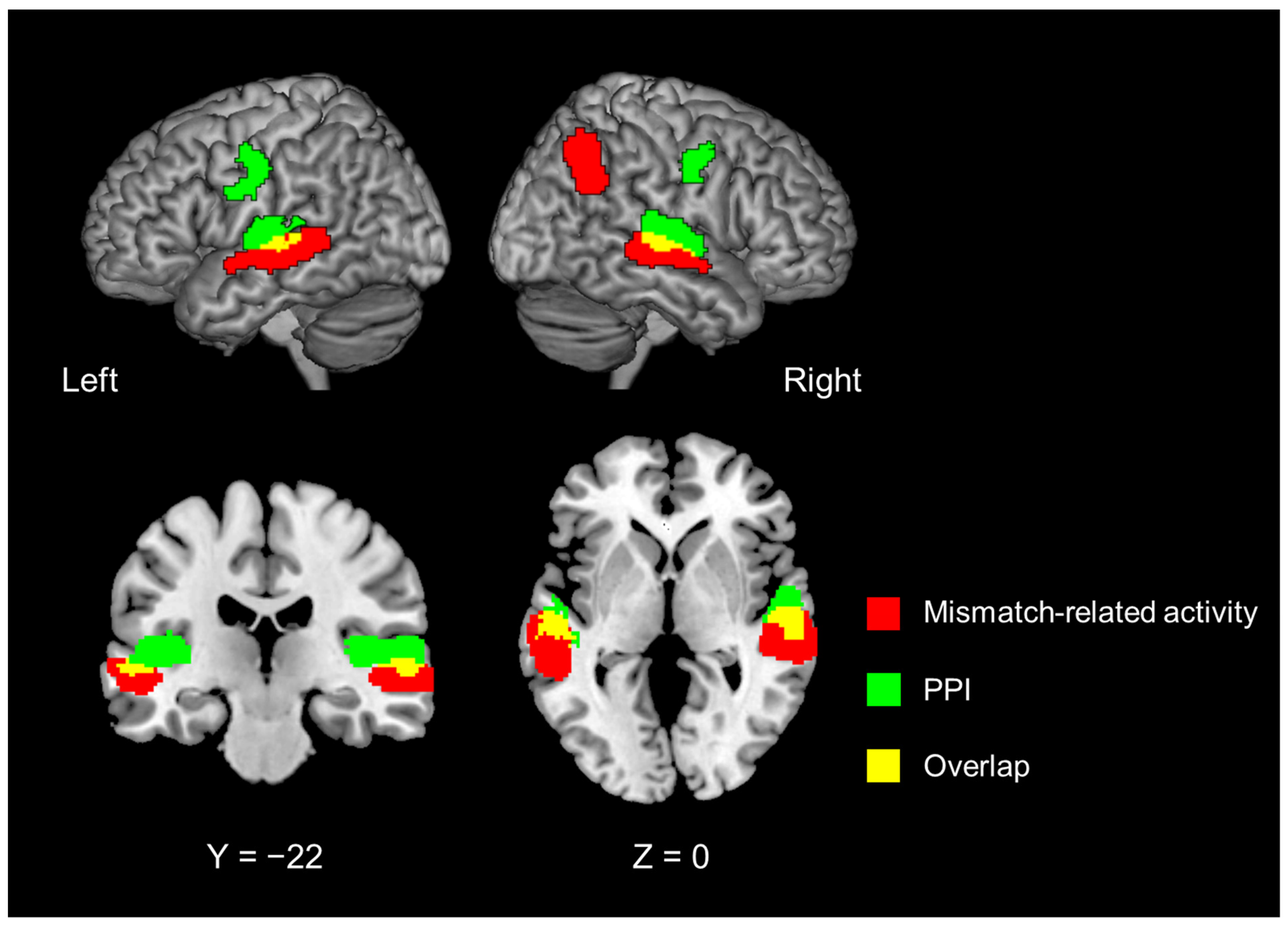

3.2. Functional Imaging Results

4. Discussion

4.1. Introspection-Related Activation of the Evaluation Phase

4.2. Introspection-Specific Mismatch-Specific Activity in the Left IFG during the Disclosure Phase

4.3. Comparison with a Previous Study by Yoshioka et al. [2]

4.4. Limitations and Future Perspective

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tomasello, M.; Carpenter, M.; Call, J.; Behne, T.; Moll, H. Understanding and Sharing Intentions: The Origins of Cultural Cognition. Behav. Brain Sci. 2005, 28, 675–691; discussion 691.
2. Yoshioka, A.; Tanabe, H.C.; Sumiya, M.; Nakagawa, E.; Okazaki, S.; Koike, T.; Sadato, N. Neural Substrates of Shared Visual Experiences: A Hyperscanning fMRI Study. Soc. Cogn. Affect. Neurosci. 2021, 16, 1264–1275.
3. Garrod, S.; Pickering, M.J. Why Is Conversation so Easy? Trends Cogn. Sci. 2004, 8, 8–11.
4. Menenti, L.; Pickering, M.J.; Garrod, S.C. Toward a Neural Basis of Interactive Alignment in Conversation. Front. Hum. Neurosci. 2012, 6, 185.
5. Zwaan, R.A.; Radvansky, G.A. Situation Models in Language Comprehension and Memory. Psychol. Bull. 1998, 123, 162–185.
6. Sasaki, A.T.; Kochiyama, T.; Sugiura, M.; Tanabe, H.C.; Sadato, N. Neural Networks for Action Representation: A Functional Magnetic-Resonance Imaging and Dynamic Causal Modeling Study. Front. Hum. Neurosci. 2012, 6, 236.
7. Wolpert, D.M.; Kawato, M. Multiple Paired Forward and Inverse Models for Motor Control. Neural Netw. 1998, 11, 1317–1329.
8. Wolpert, D.M.; Miall, R.C.; Kawato, M. Internal Models in the Cerebellum. Trends Cogn. Sci. 1998, 2, 338–347.
9. Penhune, V.B.; Steele, C.J. Parallel Contributions of Cerebellar, Striatal and M1 Mechanisms to Motor Sequence Learning. Behav. Brain Res. 2012, 226, 579–591.
10. Kilner, J.M.; Friston, K.J.; Frith, C.D. The Mirror-Neuron System: A Bayesian Perspective. NeuroReport 2007, 18, 619–623.
11. Friston, K. A Theory of Cortical Responses. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2005, 360, 815–836.
12. Friston, K.; Frith, C. A Duet for One. Conscious. Cogn. 2015, 36, 390–405.
13. Miyata, K.; Koike, T.; Nakagawa, E.; Harada, T.; Sumiya, M.; Yamamoto, T.; Sadato, N. Neural Substrates for Sharing Intention in Action During Face-to-Face Imitation. NeuroImage 2021, 233, 117916.
14. Friston, K. Hierarchical Models in the Brain. PLoS Comput. Biol. 2008, 4, e1000211.
15. Mumford, D. On the Computational Architecture of the Neocortex. II. The Role of Cortico-Cortical Loops. Biol. Cybern. 1992, 66, 241–251.
16. Rao, R.P.N.; Ballard, D.H. Predictive Coding in the Visual Cortex: A Functional Interpretation of Some Extra-Classical Receptive-Field Effects. Nat. Neurosci. 1999, 2, 79–87.
17. Stefanics, G.; Heinzle, J.; Horváth, A.A.; Stephan, K.E. Visual Mismatch and Predictive Coding: A Computational Single-Trial ERP Study. J. Neurosci. 2018, 38, 4020–4030.
18. Shain, C.; Blank, I.A.; Van Schijndel, M.; Schuler, W.; Fedorenko, E. fMRI Reveals Language-Specific Predictive Coding During Naturalistic Sentence Comprehension. Neuropsychologia 2020, 138, 107307.
19. Schilbach, L.; Bzdok, D.; Timmermans, B.; Fox, P.T.; Laird, A.R.; Vogeley, K.; Eickhoff, S.B. Introspective Minds: Using ALE Meta-analyses to Study Commonalities in the Neural Correlates of Emotional Processing, Social & Unconstrained Cognition. PLoS ONE 2012, 7, e30920.
20. Andrews-Hanna, J.R. The Brain’s Default Network and Its Adaptive Role in Internal Mentation. Neuroscientist 2012, 18, 251–270.
21. Sperber, D.; Wilson, D. Relevance: Communication and Cognition; Blackwell: Cambridge, MA, USA, 1986.
22. Utsumi, A. Verbal Irony as Implicit Display of Ironic Environment: Distinguishing Ironic Utterances from Nonirony. J. Pragmatics 2000, 32, 1777–1806.
23. Uchiyama, H.; Seki, A.; Kageyama, H.; Saito, D.N.; Koeda, T.; Ohno, K.; Sadato, N. Neural Substrates of Sarcasm: A Functional Magnetic-Resonance Imaging Study. Brain Res. 2006, 1124, 100–110.
24. Matsui, T.; Nakamura, T.; Utsumi, A.; Sasaki, A.T.; Koike, T.; Yoshida, Y.; Harada, T.; Tanabe, H.C.; Sadato, N. The Role of Prosody and Context in Sarcasm Comprehension: Behavioral and fMRI Evidence. Neuropsychologia 2016, 87, 74–84.
25. Nakamura, T.; Matsui, T.; Utsumi, A.; Sumiya, M.; Nakagawa, E.; Sadato, N. Context-Prosody Interaction in Sarcasm Comprehension: A Functional Magnetic Resonance Imaging Study. Neuropsychologia 2022, 170, 108213.
26. Pineda, J.A. Sensorimotor Cortex as a Critical Component of an “Extended” Mirror Neuron System: Does It Solve the Development, Correspondence, and Control Problems in Mirroring? Behav. Brain Funct. 2008, 4, 47.
27. Hickok, G.; Poeppel, D. The Cortical Organization of Speech Processing. Nat. Rev. Neurosci. 2007, 8, 393–402.
28. Skeide, M.A.; Friederici, A.D. The Ontogeny of the Cortical Language Network. Nat. Rev. Neurosci. 2016, 17, 323–332.
29. Rauschecker, J.P.; Scott, S.K. Maps and Streams in the Auditory Cortex: Nonhuman Primates Illuminate Human Speech Processing. Nat. Neurosci. 2009, 12, 718–724.
30. Rauschecker, J.P. Where Did Language Come From? Precursor Mechanisms in Nonhuman Primates. Curr. Opin. Behav. Sci. 2018, 21, 195–204.
31. Oldfield, R.C. The Assessment and Analysis of Handedness: The Edinburgh Inventory. Neuropsychologia 1971, 9, 97–113.
32. Koike, T.; Tanabe, H.C.; Okazaki, S.; Nakagawa, E.; Sasaki, A.T.; Shimada, K.; Sugawara, S.K.; Takahashi, H.K.; Yoshihara, K.; Bosch-Bayard, J.; et al. Neural Substrates of Shared Attention as Social Memory: A Hyperscanning Functional Magnetic Resonance Imaging Study. NeuroImage 2016, 125, 401–412.
33. Feinberg, D.A.; Moeller, S.; Smith, S.M.; Auerbach, E.; Ramanna, S.; Gunther, M.; Glasser, M.F.; Miller, K.L.; Ugurbil, K.; Yacoub, E. Multiplexed Echo Planar Imaging for Sub-second Whole Brain fMRI and Fast Diffusion Imaging. PLoS ONE 2010, 5, e15710.
34. Moeller, S.; Yacoub, E.; Olman, C.A.; Auerbach, E.; Strupp, J.; Harel, N.; Uǧurbil, K. Multiband Multislice GE-EPI at 7 Tesla, with 16-Fold Acceleration Using Partial Parallel Imaging with Application to High Spatial and Temporal Whole-Brain fMRI. Magn. Reson. Med. 2010, 63, 1144–1153.
35. Xu, J.; Moeller, S.; Auerbach, E.J.; Strupp, J.; Smith, S.M.; Feinberg, D.A.; Yacoub, E.; Uǧurbil, K. Evaluation of Slice Accelerations Using Multiband Echo Planar Imaging at 3T. NeuroImage 2013, 83, 991–1001.
36. Wager, T.D.; Nichols, T.E. Optimization of Experimental Design in fMRI: A General Framework Using a Genetic Algorithm. NeuroImage 2003, 18, 293–309.
37. Ashburner, J. A Fast Diffeomorphic Image Registration Algorithm. NeuroImage 2007, 38, 95–113.
38. Ashburner, J.; Friston, K.J. Unified Segmentation. NeuroImage 2005, 26, 839–851.
39. Friston, K.J.; Penny, W.; Phillips, C.; Kiebel, S.; Hinton, G.; Ashburner, J. Classical and Bayesian Inference in Neuroimaging: Theory. NeuroImage 2002, 16, 465–483.
40. Friston, K.J.; Holmes, A.; Poline, J.B.; Price, C.J.; Frith, C.D. Detecting Activations in PET and fMRI: Levels of Inference and Power. NeuroImage 1996, 4, 223–235.
41. Flandin, G.; Friston, K.J. Analysis of Family-Wise Error Rates in Statistical Parametric Mapping Using Random Field Theory. Hum. Brain Mapp. 2019, 40, 2052–2054.
42. Mai, J.K.; Majtanik, M.; Paxinos, G. Atlas of the Human Brain, 4th ed.; Academic Press: Cambridge, MA, USA, 2015.
43. Friston, K.J.; Buechel, C.; Fink, G.R.; Morris, J.; Rolls, E.; Dolan, R.J. Psychophysiological and Modulatory Interactions in Neuroimaging. NeuroImage 1997, 6, 218–229.
44. McLaren, D.G.; Ries, M.L.; Xu, G.; Johnson, S.C. A Generalized Form of Context-Dependent Psychophysiological Interactions (GPPI): A Comparison to Standard Approaches. NeuroImage 2012, 61, 1277–1286.
45. Whitfield-Gabrieli, S.; Nieto-Castanon, A. Conn: A Functional Connectivity Toolbox for Correlated and Anticorrelated Brain Networks. Brain Connect. 2012, 2, 125–141.
46. Eklund, A.; Nichols, T.E.; Knutsson, H. Cluster Failure: Why fMRI Inferences for Spatial Extent Have Inflated False-Positive Rates. Proc. Natl. Acad. Sci. USA 2016, 113, 7900–7905.
47. Denny, B.T.; Kober, H.; Wager, T.D.; Ochsner, K.N. A Meta-analysis of Functional Neuroimaging Studies of Self and Other Judgments Reveals a Spatial Gradient for Mentalizing in Medial Prefrontal Cortex. J. Cogn. Neurosci. 2012, 24, 1742–1752.
48. Davey, C.G.; Pujol, J.; Harrison, B.J. Mapping the Self in the Brain’s Default Mode Network. NeuroImage 2016, 132, 390–397.
49. Van Overwalle, F. Social Cognition and the Brain: A Meta-analysis. Hum. Brain Mapp. 2009, 30, 829–858.
50. Van Overwalle, F.; Manto, M.; Cattaneo, Z.; Clausi, S.; Ferrari, C.; Gabrieli, J.D.E.; Guell, X.; Heleven, E.; Lupo, M.; Ma, Q.; et al. Consensus Paper: Cerebellum and Social Cognition. Cerebellum 2020, 19, 833–868.
51. Van Overwalle, F.; van de Steen, F.; van Dun, K.; Heleven, E. Connectivity Between the Cerebrum and Cerebellum During Social and Non-social Sequencing Using Dynamic Causal Modelling. NeuroImage 2020, 206, 116326.
52. Badre, D.; Wagner, A.D. Left Ventrolateral Prefrontal Cortex and the Cognitive Control of Memory. Neuropsychologia 2007, 45, 2883–2901.
53. Thompson-Schill, S.L.; Bedny, M.; Goldberg, R.F. The Frontal Lobes and the Regulation of Mental Activity. Curr. Opin. Neurobiol. 2005, 15, 219–224.
54. Wood, J.N.; Romero, S.G.; Makale, M.; Grafman, J. Category-Specific Representations of Social and Nonsocial Knowledge in the Human Prefrontal Cortex. J. Cogn. Neurosci. 2003, 15, 236–248.
55. Heberlein, A.S.; Saxe, R.R. Dissociation Between Emotion and Personality Judgments: Convergent Evidence from Functional Neuroimaging. NeuroImage 2005, 28, 770–777.
56. Morin, A.; Michaud, J. Self-Awareness and the Left Inferior Frontal Gyrus: Inner Speech Use during Self-Related Processing. Brain Res. Bull. 2007, 74, 387–396.
57. Kana, R.K.; Sartin, E.B.; Stevens, C.; Deshpande, H.D.; Klein, C.; Klinger, M.R.; Klinger, L.G. Neural Networks Underlying Language and Social Cognition During Self–Other Processing in Autism Spectrum Disorders. Neuropsychologia 2017, 102, 116–123.
58. Uchiyama, Y.; Toyoda, H.; Honda, M.; Yoshida, H.; Kochiyama, T.; Ebe, K.; Sadato, N. Functional Segregation of the Inferior Frontal Gyrus for Syntactic Processes: A Functional Magnetic-Resonance Imaging Study. Neurosci. Res. 2008, 61, 309–318.
59. Dapretto, M.; Bookheimer, S.Y. Form and Content: Dissociating Syntax and Semantics in Sentence Comprehension. Neuron 1999, 24, 427–432.
60. Kelley, W.M.; Macrae, C.N.; Wyland, C.L.; Caglar, S.; Inati, S.; Heatherton, T.F. Finding the Self? An Event-Related fMRI Study. J. Cogn. Neurosci. 2002, 14, 785–794.
61. Lombardo, M.V.; Chakrabarti, B.; Bullmore, E.T.; Sadek, S.A.; Pasco, G.; Wheelwright, S.J.; Suckling, J.; MRC AIMS Consortium; Baron-Cohen, S. Atypical Neural Self-Representation in Autism. Brain 2010, 133, 611–624.
62. Toichi, M.; Kamio, Y.; Okada, T.; Sakihama, M.; Youngstrom, E.A.; Findling, R.L.; Yamamoto, K. A Lack of Self-Consciousness in Autism. Am. J. Psychiatry 2002, 159, 1422–1424.
63. Belin, P.; Zatorre, R.J.; Lafaille, P.; Ahad, P.; Pike, B. Voice-Selective Areas in Human Auditory Cortex. Nature 2000, 403, 309–312.
64. Zhang, Y.; Ding, Y.; Huang, J.; Zhou, W.; Ling, Z.; Hong, B.; Wang, X. Hierarchical Cortical Networks of “Voice Patches” for Processing Voices in Human Brain. Proc. Natl. Acad. Sci. USA 2021, 118, e2113887118.
65. Rizzolatti, G.; Craighero, L. The Mirror-Neuron System. Annu. Rev. Neurosci. 2004, 27, 169–192.
66. Sugiura, M.; Sassa, Y.; Watanabe, J.; Akitsuki, Y.; Maeda, Y.; Matsue, Y.; Fukuda, H.; Kawashima, R. Cortical Mechanisms of Person Representation: Recognition of Famous and Personally Familiar Names. NeuroImage 2006, 31, 853–860.
67. Harrison, B.J.; Davey, C.G.; Savage, H.S.; Jamieson, A.J.; Leonards, C.A.; Moffat, B.A.; Glarin, R.K.; Steward, T. Dynamic Subcortical Modulators of Human Default Mode Network Function. Cereb. Cortex 2022, 32, 4345–4355.
68. Fleming, S.M.; Weil, R.S.; Nagy, Z.; Dolan, R.J.; Rees, G. Relating Introspective Accuracy to Individual Differences in Brain Structure. Science 2010, 329, 1541–1543.
69. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Washington, DC, USA, 2013.

Evaluation phase

| Contrast # | Contrast | Preference I | Preference R | Familiarity I | Familiarity R | Object feature I | Object feature R |
|---|---|---|---|---|---|---|---|
| 1 | Introspection > Object feature identification | 1 | 1 | 1 | 1 | −2 | −2 |
| 2 | Introspection > 0 | 1 | 1 | 1 | 1 | 0 | 0 |

Evaluation phase, conjunction analysis

| Contrast # | Conjunction | Description |
|---|---|---|
| 3 | #1 & #2 | Introspection-specific activity compared with object feature identification |

Disclosure phase

| Contrast # | Contrast | Match: Preference I | Match: Preference R | Match: Familiarity I | Match: Familiarity R | Match: Object feature I | Match: Object feature R | Mismatch: Preference I | Mismatch: Preference R | Mismatch: Familiarity I | Mismatch: Familiarity R | Mismatch: Object feature I | Mismatch: Object feature R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | Mismatch introspection > 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 |
| 5 | Mismatch object feature identification > 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |
| 6 | Mismatch introspection > Match introspection | −1 | −1 | −1 | −1 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 |
| 7 | Match (introspection > object feature identification) | 1 | 1 | 1 | 1 | −2 | −2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 8 | Mismatch (introspection > object feature identification) | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | −2 | −2 |
| 9 | (Mismatch − Match) introspection > (Mismatch − Match) object feature identification | −1 | −1 | −1 | −1 | 2 | 2 | 1 | 1 | 1 | 1 | −2 | −2 |
| 10 | Mismatch object feature identification > Match object feature identification | 0 | 0 | 0 | 0 | −1 | −1 | 0 | 0 | 0 | 0 | 1 | 1 |

Disclosure phase, conjunction analysis

| Contrast # | Conjunction | Description |
|---|---|---|
| 11 | #7 & #8 | Introspection relative to object feature identification, irrespective of mismatch and match |
| 12 | #4 & #9 | Introspection-specific mismatch compared with object feature identification-related mismatch |
| 13 | #4 & #5 & #6 & #10 | Mismatch-related activity, irrespective of introspection or object feature identification |
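The disclosure-phase contrast weights tabulated above can be checked for internal consistency with a few lines of arithmetic. The following is a minimal sketch, not the authors' analysis code: it assumes the twelve weights of each contrast are ordered as in the table (Match before Mismatch; within each, Preference, Familiarity, Object feature; within each task, I before R). The names `CONTRASTS`, `subtract`, and `is_differential` are hypothetical helpers introduced only for illustration. It confirms that the interaction contrast #9 equals contrast #8 minus contrast #7, and lists which contrasts have weights summing to zero (differential contrasts) as opposed to contrasts against baseline.

```python
# Weights copied from the disclosure-phase contrast table.
# Assumed column order: Match (Pref I, Pref R, Fam I, Fam R, Obj I, Obj R),
# then Mismatch (same six columns).
CONTRASTS = {
    4: [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0],
    5: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1],
    6: [-1, -1, -1, -1, 0, 0, 1, 1, 1, 1, 0, 0],
    7: [1, 1, 1, 1, -2, -2, 0, 0, 0, 0, 0, 0],
    8: [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, -2, -2],
    9: [-1, -1, -1, -1, 2, 2, 1, 1, 1, 1, -2, -2],
    10: [0, 0, 0, 0, -1, -1, 0, 0, 0, 0, 1, 1],
}


def subtract(a, b):
    """Element-wise difference of two equally long weight vectors."""
    return [x - y for x, y in zip(a, b)]


def is_differential(weights):
    """True when the weights sum to zero, i.e. the contrast compares conditions."""
    return sum(weights) == 0


# Interaction: (Mismatch - Match) for introspection minus the same for object feature.
interaction = subtract(CONTRASTS[8], CONTRASTS[7])
print(interaction == CONTRASTS[9])  # True

# Contrasts #4 and #5 test against baseline, so their weights do not sum to zero.
differential = sorted(k for k, w in CONTRASTS.items() if is_differential(w))
print(differential)  # [6, 7, 8, 9, 10]
```

Plain lists are used instead of an array library so that the check runs without dependencies; the same subtraction could be applied to any other pair of rows in the table.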

| Cluster size (mm³) | MNI x | MNI y | MNI z | p-value (FWE-corr) | t-value | Hemisphere | Location |
|---|---|---|---|---|---|---|---|
| 16,256 | −44 | 26 | −8 | <0.0001 | 10.691 | left | inferior frontal gyrus, orbital part |
| | −30 | 22 | −6 | | 4.878 | left | basal operculum |
| 8488 | −46 | −58 | 30 | 0.0001 | 9.034 | left | superior temporal gyrus |
| | −40 | −70 | 44 | | 5.459 | left | angular gyrus |
| 21,576 | −8 | 32 | 50 | <0.0001 | 8.788 | left | superior frontal gyrus, medial part |
| | −16 | 42 | 42 | | 6.303 | left | superior frontal gyrus, lateral part |
| | −6 | 42 | 22 | | 5.387 | left | cingulate sulcus, anterior part |
| 47,944 | 16 | −80 | −30 | <0.0001 | 8.125 | right | cerebellum Crus I |
| | 6 | −58 | −42 | | 8.077 | right | cerebellum IX |
| | −14 | −48 | 2 | | 8.007 | left | occipital gyrus |
| | −10 | −56 | 6 | | 7.675 | left | calcarine sulcus, anterior part |
| | 28 | −76 | −42 | | 7.673 | right | cerebellum Crus II |
| | −4 | −60 | 10 | | 7.509 | left | precuneus |
| | −6 | −46 | 22 | | 7.342 | left | splenium of corpus callosum |
| | 16 | −44 | 4 | | 6.505 | right | hippocampal fissure |
| | −2 | −24 | −18 | | 6.008 | left | pons |
| | −8 | −46 | −34 | | 5.930 | left | cerebellum IX |
| | −6 | −46 | 8 | | 5.898 | left | isthmus of cingulate gyrus |
| | −18 | −40 | −8 | | 5.536 | left | perisplenial region |
| | 16 | −42 | −36 | | 5.477 | right | cerebellum X |
| | −26 | −32 | −22 | | 5.251 | left | parahippocampal gyrus |
| | −16 | −42 | −40 | | 5.243 | left | cerebellum X |
| | −6 | −40 | −28 | | 5.099 | left | cerebellum I–IV |
| | −22 | −18 | −26 | | 5.030 | left | cingulum (hippocampal part) |
| | 14 | −42 | −60 | | 4.801 | right | cerebellum VIIIb |
| | −44 | −8 | −44 | | 4.762 | left | fusiform gyrus |
| | −22 | −48 | −52 | | 4.590 | left | cerebellum VIIIb |
| | −16 | −22 | −14 | | 4.491 | left | cerebral peduncle |
| | −20 | −52 | −40 | | 4.449 | left | cerebellum, dentate |
| | −44 | 8 | −34 | | 4.432 | left | inferior temporal gyrus |
| | 2 | −34 | −18 | | 4.304 | right | decussation of the superior cerebellar peduncle |
| | 26 | −48 | 8 | | 3.909 | right | CA3 field of hippocampus |
| | −4 | −30 | −6 | | 3.575 | left | central tegmental tract |
| 2720 | −38 | 18 | 44 | 0.0370 | 6.649 | left | middle frontal gyrus |
| 7288 | −26 | −100 | −2 | 0.0003 | 6.007 | left | inferior occipital gyrus |
| | −22 | −102 | 10 | | 5.912 | left | superior occipital gyrus, lateral part |
| | −24 | −82 | −6 | | 4.473 | left | inferior lingual gyrus, lateral part |
| | −14 | −98 | 16 | | 3.964 | left | gyrus descendens |
| 6168 | 26 | −98 | 0 | 0.0009 | 5.933 | right | inferior occipital gyrus |
| | 26 | −98 | 18 | | 5.124 | right | superior occipital gyrus, lateral part |

Introspection relative to object feature identification, irrespective of mismatch and match

| Cluster size (mm³) | MNI x | MNI y | MNI z | p-value (FWE-corr) | t-value | Hemisphere | Location |
|---|---|---|---|---|---|---|---|
| 13,656 | 30 | −74 | −36 | <0.0001 | 11.953 | right | cerebellum Crus I |
| 33,168 | −14 | 46 | 44 | <0.0001 | 8.776 | left | superior frontal gyrus, lateral part |
| | −2 | 56 | 26 | | 6.649 | left | superior frontal gyrus, medial part |
| | −2 | 58 | −6 | | 5.275 | left | inferior frontopolar gyrus |
| | 4 | 46 | 48 | | 4.776 | right | superior frontal gyrus, medial part |
| | −40 | 16 | 52 | | 4.511 | left | middle frontal gyrus |
| | 6 | 40 | 56 | | 3.872 | right | superior frontal gyrus, lateral part |
| | −4 | 68 | 6 | | 3.260 | left | middle frontopolar gyrus |
| 3712 | 4 | −60 | −48 | 0.0115 | 8.303 | right | cerebellum IX |
| 6048 | −52 | −68 | 38 | 0.0010 | 6.936 | left | angular gyrus |
| 6648 | −4 | −52 | 26 | 0.0006 | 6.086 | left | isthmus of cingulate gyrus |
| 9632 | −44 | 0 | −36 | <0.0001 | 5.444 | left | inferior temporal gyrus |
| | −58 | 6 | −18 | | 5.235 | left | middle temporal gyrus |
| 6432 | 0 | 16 | −6 | 0.0007 | 5.433 | | cingulate gyrus |
| | −4 | 0 | 6 | | 5.090 | left | bed nucleus of the stria terminalis, medial division |
| | −6 | 14 | 6 | | 5.088 | left | fundus region of caudate nucleus |
| | 6 | 10 | 2 | | 4.940 | right | accumbens nucleus, lateral part |
| | 12 | 20 | 8 | | 3.981 | right | medial caudate nucleus |
| 2488 | −22 | −14 | −16 | 0.0496 | 5.143 | left | CA3 field of hippocampus |
| | −12 | −4 | −10 | | 3.745 | left | lateral hypothalamic area |
| | −28 | −30 | −14 | | 3.556 | left | subiculum |
| 6480 | 54 | 0 | −36 | 0.0007 | 5.116 | right | inferior temporal sulcus |
| | 50 | −2 | −28 | | 4.597 | right | middle temporal gyrus |

Introspection-specific mismatch compared with object feature identification-related mismatch

| Cluster size (mm³) | MNI x | MNI y | MNI z | p-value (FWE-corr) | t-value | Hemisphere | Location |
|---|---|---|---|---|---|---|---|
| 2576 | −34 | 22 | −16 | 0.0444 | 4.664 | left | transverse insular gyrus |
| | −46 | 32 | −10 | | 3.546 | left | inferior frontal gyrus, orbital part |
| | −48 | 20 | 4 | | 3.347 | left | inferior frontal gyrus, triangular part |

Mismatch-related activity, irrespective of introspection or object feature identification

| Cluster size (mm³) | MNI x | MNI y | MNI z | p-value (FWE-corr) | t-value | Hemisphere | Location |
|---|---|---|---|---|---|---|---|
| 8696 | 58 | −18 | −2 | <0.0001 | 5.419 | right | superior temporal gyrus |
| 8552 | −54 | −24 | −4 | 0.0001 | 5.015 | left | superior temporal gyrus |
| 4320 | 50 | −52 | 32 | 0.0059 | 4.925 | right | superior temporal gyrus |
| | 44 | −62 | 48 | | 4.066 | right | angular gyrus |

Psychophysiological interaction analysis with the left IFG as the seed region

| Cluster size (mm³) | MNI x | MNI y | MNI z | p-value (FWE-corr) | t-value | Hemisphere | Location |
|---|---|---|---|---|---|---|---|
| 7912 | −40 | −30 | 10 | <0.0001 | 5.494 | left | anterior transverse temporal gyrus |
| | −64 | −30 | 4 | | 3.368 | left | superior temporal gyrus |
| 8400 | 52 | −14 | 4 | <0.0001 | 5.367 | right | anterior transverse temporal gyrus |
| | 60 | −24 | 10 | | 5.190 | right | superior temporal gyrus |
| | 56 | −6 | −2 | | 4.561 | right | planum polare, lateral part |
| 2688 | −50 | −8 | 44 | 0.0002 | 4.108 | left | precentral gyrus |
| | −38 | −16 | 34 | | 3.553 | left | superior longitudinal fascicle III, ventral component |
| 1520 | 50 | −4 | 42 | 0.0069 | 4.041 | right | precentral gyrus |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoshioka, A.; Tanabe, H.C.; Nakagawa, E.; Sumiya, M.; Koike, T.; Sadato, N. The Role of the Left Inferior Frontal Gyrus in Introspection during Verbal Communication. Brain Sci. 2023, 13, 111. https://doi.org/10.3390/brainsci13010111
