Abstract

Spatial visualization ability (SVA) has been identified as a potential key factor for academic achievement and student retention in Science, Technology, Engineering, and Mathematics (STEM) in higher education, especially for engineering and related disciplines. Prior studies have shown that training using virtual reality (VR) has the potential to enhance learning through the use of more realistic and/or immersive experiences. The aim of this study was to investigate the effect of VR-based training using spatial visualization tasks on participant performance and mental workload using behavioral measures (i.e., time spent) and measures derived from functional near infrared spectroscopy (fNIRS) brain imaging. Data were collected from 10 first-year biomedical engineering students, who engaged with a custom-designed spatial visualization gaming application over a six-week training protocol consisting of tasks and procedures that varied in task load and spatial characteristics. Findings revealed significant small (Cohen’s d: 0.10) to large (Cohen’s d: 2.40) effects of task load and changes in the spatial characteristics of the task, such as orientation or position changes, on time spent and oxygenated hemoglobin (HbO) measures from all the prefrontal cortex (PFC) areas. Transfer had a large (d = 1.37) significant effect on time spent and HbO measures from right anterior medial PFC (AMPFC), while training had a moderate (d = 0.48) significant effect on time spent and deoxygenated hemoglobin (HbR) measures from left AMPFC. The findings from this study have important implications for VR training, research, and instructional design focusing on enhancing the learning, retention, and transfer of spatial skills within and across various VR-based training scenarios.

1. Introduction

Engineering is expected to play a pivotal role in the global economy and societal growth over the next decade. Employment opportunities in engineering and engineering-related occupations are expected to increase from 2021 to 2031, resulting in the creation of 91,300 new jobs in the United States over the next decade with approximately 200,900 openings each year coming from growth and replacement needs [1]. It is critical that institutions of higher education be able to prepare adequately educated graduates to fill these positions. Additionally, in The Future of Jobs Report published in October 2020 by the World Economic Forum, several skills were identified as emerging for the coming decade [2]. These skills included problem solving, critical thinking/analysis, and technology use and development, which are also part of the fundamental education and training of engineers [2]. It is imperative that higher education be able to anticipate society’s needs and provide well-educated and skilled graduates in all professions, including engineering.

Spatial visualization abilities (SVA) have been shown to be a predictive factor in Science, Technology, Engineering, and Mathematics (STEM) academic achievement [3,4,5] and retention [5,6]. Spatial visualization is defined by Voyer et al. as “the ability to manipulate complex spatial information when several stages are needed to produce the correct solution” [7] (p. 250). Spatial visualization skills are closely linked to spatial ability—the capability to transform, generate, and recall symbolic information [8]—as well as spatial thinking, which involves the three essential concepts of space, representation tools, and reasoning [9]. In engineering disciplines, spatial abilities contributed to achievement in first-year engineering design beyond other abilities, such as numerical, verbal, and general reasoning [4]. According to Fontaine and De Rosa, students with low spatial visualization skills (SVS) are more likely to drop out of an engineering program [5], highlighting a need to enhance such skills to improve academic performance and retention.

With changing demographics and residual effects from the COVID-19 pandemic, there has been increasing research on developing “new teaching strategies that enhance students’ motivation and commitment and maximize their knowledge acquisition” [10] (p. 2), including virtual reality (VR) training. Digital technologies, such as VR systems, are at the forefront of innovative teaching strategies for supporting SVA skill acquisition in STEM disciplines. According to Oberero-Gaitán et al., the utilization of VR devices in teaching and learning is supported through scientific evidence and enhances educational possibilities in the classroom [11] (p. 3). In support of this assertion, a recent study reported that spatial visualization and mental rotation could be improved through desktop VR training [12]. Thus, a potential use of VR would be to provide training in spatial visualization skills in lieu of the manual manipulation of actual objects in order to promote retention in engineering and other STEM disciplines through enhanced SVS. Recognizing that STEM occupations are projected to grow two times faster than the total of all other occupations between 2021 and 2031, further research is needed on the use of VR to support knowledge acquisition and enhance motivation as well as increase student retention and graduation rates across STEM programs.

The aim of this exploratory study was to examine the effect of VR-based training using spatial visualization tasks on participant performance and mental workload using behavioral (i.e., time spent) and functional near infrared spectroscopy (fNIRS) brain-imaging-technology-derived measures. This study was guided by the following broad research questions:

- (1) What are the effects of VR training with spatial visualization tasks on participant behavioral performance and learning (i.e., acquisition and transfer)?

- (2) What are the effects of VR training with spatial visualization tasks on participant mental workload using selected cortical hemodynamic measures (e.g., HbO and HbR) within the prefrontal cortex (PFC) area?

This exploratory study was conducted to advance the understanding of how biomedical engineering students learn SVA and to develop technology-rich learning environments with a pedagogy that fosters learning transfer and student retention.

2. Literature Review

2.1. Spatial Visualization

Various studies have reported that engaging students in authentic spatial visualization projects can enhance deeper learning [13,14,15]. Deeper learning has been defined in terms of key competencies, including the ability to think critically and solve complex problems [16,17,18]. Such learning is crucial to transferring skills both within and beyond academic settings. It is therefore essential to design and implement innovative curricula that will effectively foster transferable spatial visualization skills, particularly during the first year of college. Spatial visualization tutorials and workshops have been shown to generate overall improvements in spatial ability for first-year engineering students [13,14,19]. Furthermore, studies have reported that those students who can increase their spatial visualization skills demonstrate greater self-efficacy, which, in turn, appears to positively affect retention in engineering [20,21,22]. Retention is a metric that reflects a student’s ability to continue from one term to the next through to graduation. According to Gómez-Tone et al. [14], “Strong spatial skills have been shown to be associated with overall success and achievement, but also retention in engineering programs” (p. 4).

2.2. Purdue Spatial Visualization Test: Rotations (PSVT:R)

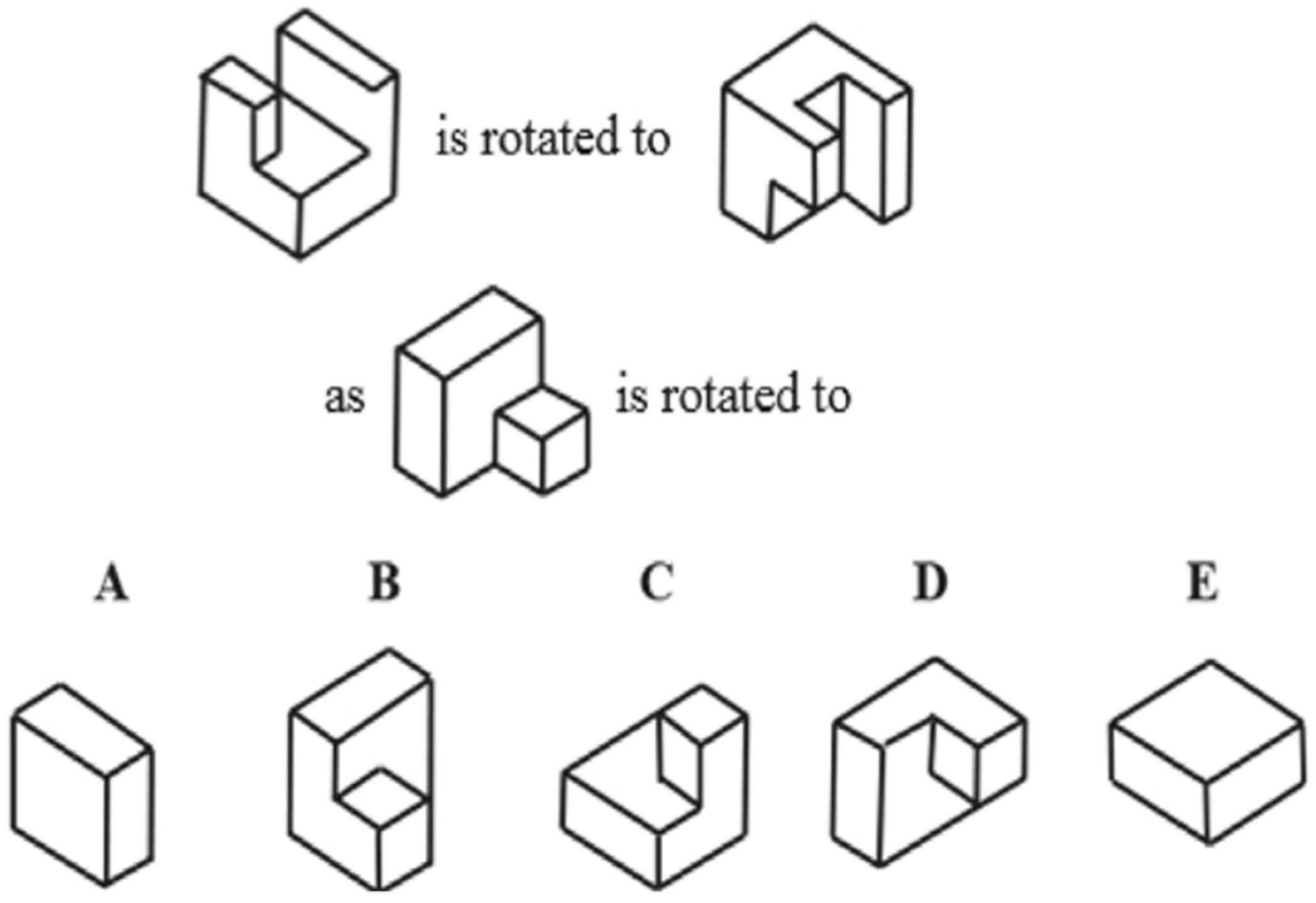

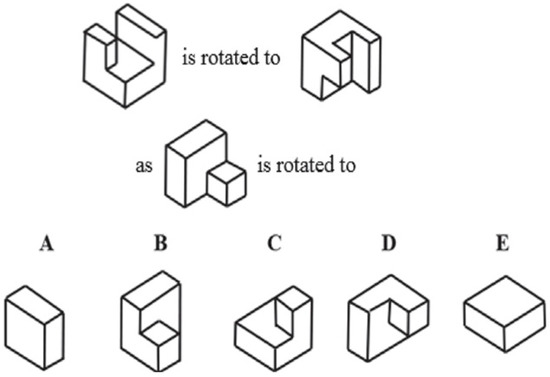

The Purdue Spatial Visualization Test: Rotations (PSVT:R), which involves rotating objects, was selected for this study since it is one of the most widely used spatial visualization tests in the engineering education research community [20,23]. The PSVT:R was designed by Guay in 1976 to determine a “student’s ability to visualize and recognize orthographic drawings” [24] (p. 161). The PSVT:R assessment includes 30 multiple-choice questions in which students mentally rotate 3D geometric shapes through specified rotations (see Figure 1 for an example).

Figure 1.

Example of a PSVT:R question.

2.3. Virtual Reality

Providing learners with alternatives to traditional lectures is becoming increasingly important in 21st century classrooms, which are characterized as more individual-oriented, task-based, and technology-enhanced environments [25]. Two of the Grand Challenges proposed by the National Academy of Engineering in 2017 were Enhance Virtual Reality and Advance Personalized Learning (NAE Grand Challenges for Engineering, 2017). Advances in virtual reality (VR) technologies can address both challenges, allowing the creation of innovative and interactive personal learning environments that can simulate real environments in terms of sight, hearing, and touch [26]. VR environments provide novel approaches to educational content delivery and learner engagement. Studies have shown that interactive media tools can have a positive effect on the understanding of the learning content, learner motivation, engagement, and creative self-expression [27,28,29]. In engineering education, the application of head-mounted displays for immersive VR experiences has led to learning enhancements due to the increased interactivity of simulation tasks [25,30]. Studies have also indicated that teaching via interactive 3D models in VR environments has advantages in terms of efficient learning, transfer, higher motivation, and greater engagement when compared to traditional approaches [26,28,31]. A recent study by Kuznetcova and colleagues [32] showed that a VR intervention improved middle school students’ visuospatial self-efficacy. Another related study involving three comparison groups (i.e., VR, Desktop VR, and 2D) showed increased usability and learning outcomes for the immersive VR group as compared to the other groups [33]. Virtual reality training has also been used to promote spatial ability in older adults with mild cognitive impairment [34], allocentric space representations in young males [35], and spatial visualization and mental rotation in a controlled experiment with college-age students (27 females and 22 males, ages 19–28) [12]. Taken together, this evidence supports the use of VR training to enhance spatial visualization ability in first-year college students.

The efficacy of VR in enhancing learning can be evaluated by assessing the effects of task and environmental characteristics on performance and mental workload [28]. Performance is commonly evaluated using behavioral measures, such as time, speed, and accuracy. A comparison of VR training versus traditional training has demonstrated a faster improvement in performance during skill acquisition and greater skill transfer when using VR [36]. Several methods currently exist to measure mental workload. The oldest and most widely used approaches involve subjective ratings. These methods are effective but are usually administered after the completion of a task and require participant introspection. Alternatively, physiological measures (e.g., heart rate, eye tracking) and neurophysiological measures (e.g., electroencephalography, functional near infrared spectroscopy) enable the assessment of mental workload during task execution.

2.4. Functional Near Infrared Spectroscopy (fNIRS)

Functional near infrared spectroscopy (fNIRS) is a non-invasive portable brain imaging technology that measures hemodynamic changes within the cerebral cortex in response to sensory, motor, and cognitive stimuli [37,38,39]. Previous studies have associated fNIRS measures with varying workload levels during cognitive tasks [39,40,41,42,43,44]. Furthermore, studies with fNIRS have shown a significant reduction in the activation within the PFC with increasing familiarity with the task at hand and improved performance, thus providing a quantitative measurement of trainees’ expertise development [45]. Research has shown that mental workload is sensitive to task-related features such as difficulty level, the order of the tasks (e.g., blocked vs. random practice), and the type of task (e.g., learning vs. transfer task) [39,43,44].

Based on the studies reviewed above, we decided to implement the PSVT:R in VR as a hands-on application for spatial visualization practice and selected fNIRS as the neurophysiological measure to assess participants’ mental workload during the VR experience.

3. Materials and Methods

3.1. Participants

Ten participants between the ages of 18 and 20 consented to participate in a 6-week immersive virtual reality (VR) intervention study. The study was approved by the Institutional Review Board (IRB) of Drexel University. Of the 10 participants, 7 were male. Recruited individuals were right-handed; had either normal or corrected-to-normal vision; had no history of seizures, head injury, or neurological dysfunction; had no history of depression, schizophrenia, or social phobia; had not previously been admitted to an alcohol/drug treatment program or diagnosed with alcohol/drug abuse; were not taking medications that could affect or alter brain activity (e.g., sleeping pills, Valium, or Xanax); and were not pregnant.

3.2. VR Protocol

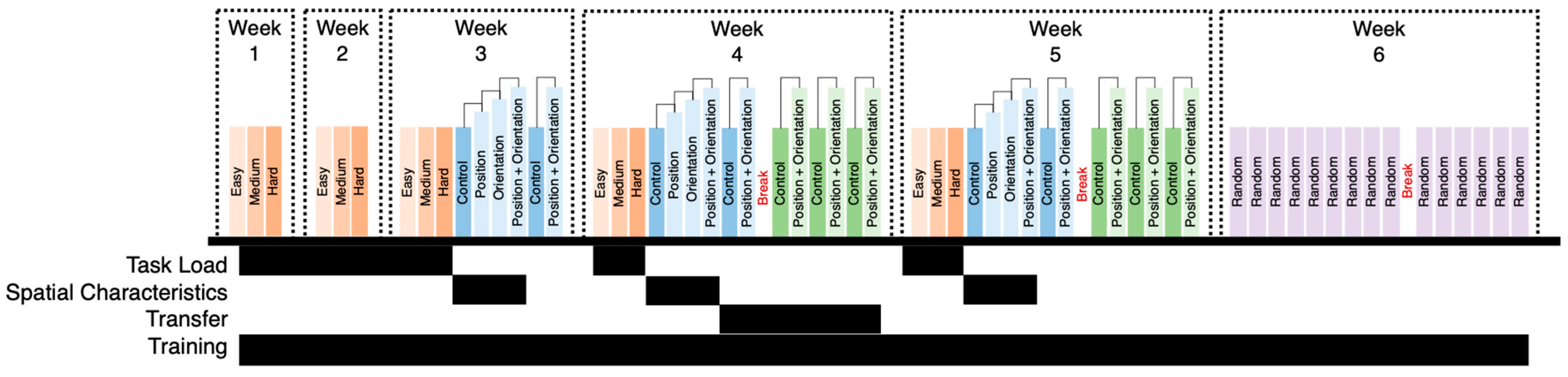

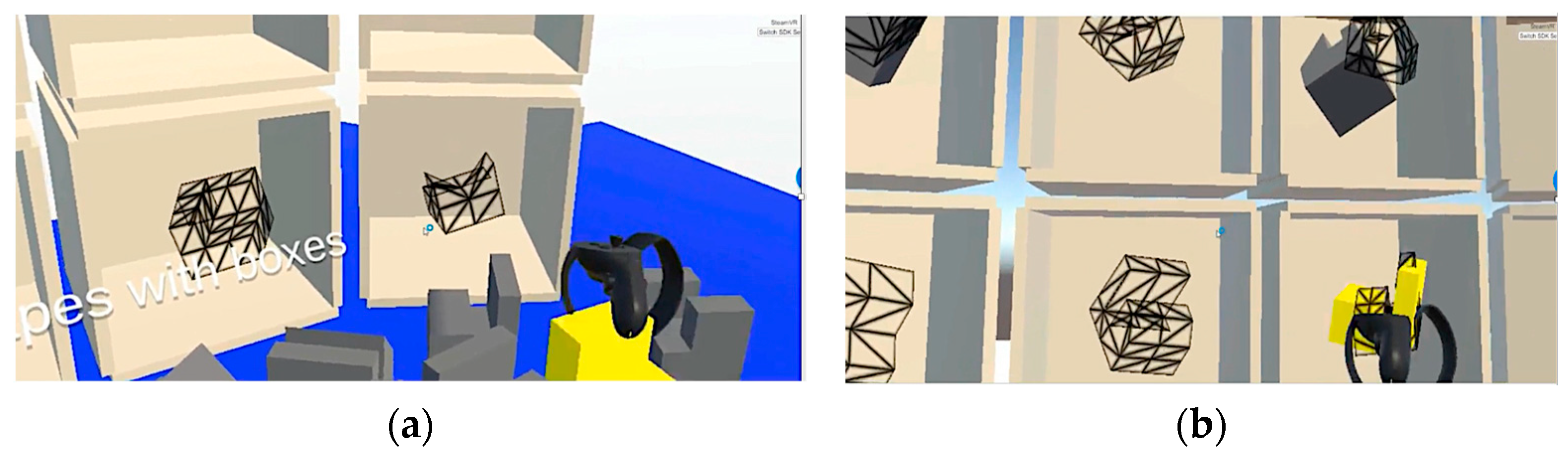

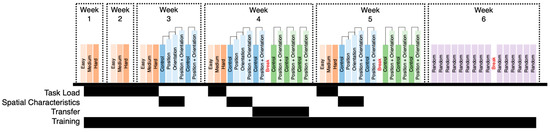

The 6-week VR protocol is shown in Figure 2, broken down by week. Three different protocols were implemented. Each block, regardless of color, consisted of 12 target shapes, 3 extra shapes, and 12 panel boxes, and the participant was required to match each shape to the correct box (see Figure 3). The first protocol, indicated by orange blocks, consisted of three tasks of increasing difficulty. The level of difficulty was manipulated by making the shapes and panel boxes more complex. Because the Purdue Spatial Visualization Test identifies levels of increasing difficulty (complexity), we based our protocol and levels of difficulty on the Purdue criteria [30]. The second protocol, indicated by blue blocks, consisted of three spatial manipulations of the target shapes, the panel boxes, or both. The first and fifth blue blocks, noted as control in Figure 2, consisted of a new set of target shapes and panel boxes. The second blue block, noted as position, consisted of the same setup of target shapes as the first block with changes in the position of the panel boxes. The third blue block, noted as orientation, consisted of the same setup of panel boxes as the second block with changes in the orientation of the target shapes. The fourth and sixth blue blocks, noted as position + orientation, consisted of changes in both the position of the panel boxes and the orientation of the target shapes. The third protocol, indicated by green blocks, consisted of three control blocks and three blocks in which the position of the panel boxes and the orientation of the target shapes from the preceding control blocks were manipulated. The primary difference between the second and third protocols is that a new set of target shapes and panel boxes was used. The final protocol, implemented during week 6, used a randomly selected block from any of the three protocols administered during weeks 1 through 5.

Figure 2.

Six-week experimental protocol consisting of three different task sets (orange, blue, and green blocks). The first set (orange blocks) consisted of changes in task load. The second and third sets (blue and green blocks) consisted of changes in spatial characteristics. Purple blocks (week 6) represent tasks sampled from any of the task sets. The black bars under the colored blocks indicate which data were used in the linear mixed-effects models to investigate the effects of task difficulty, spatial characteristics, transfer, and training.

Figure 3.

VR application: picking up Purdue cube (a) and placing cube in selection box (b).

3.3. VR and fNIRS Equipment

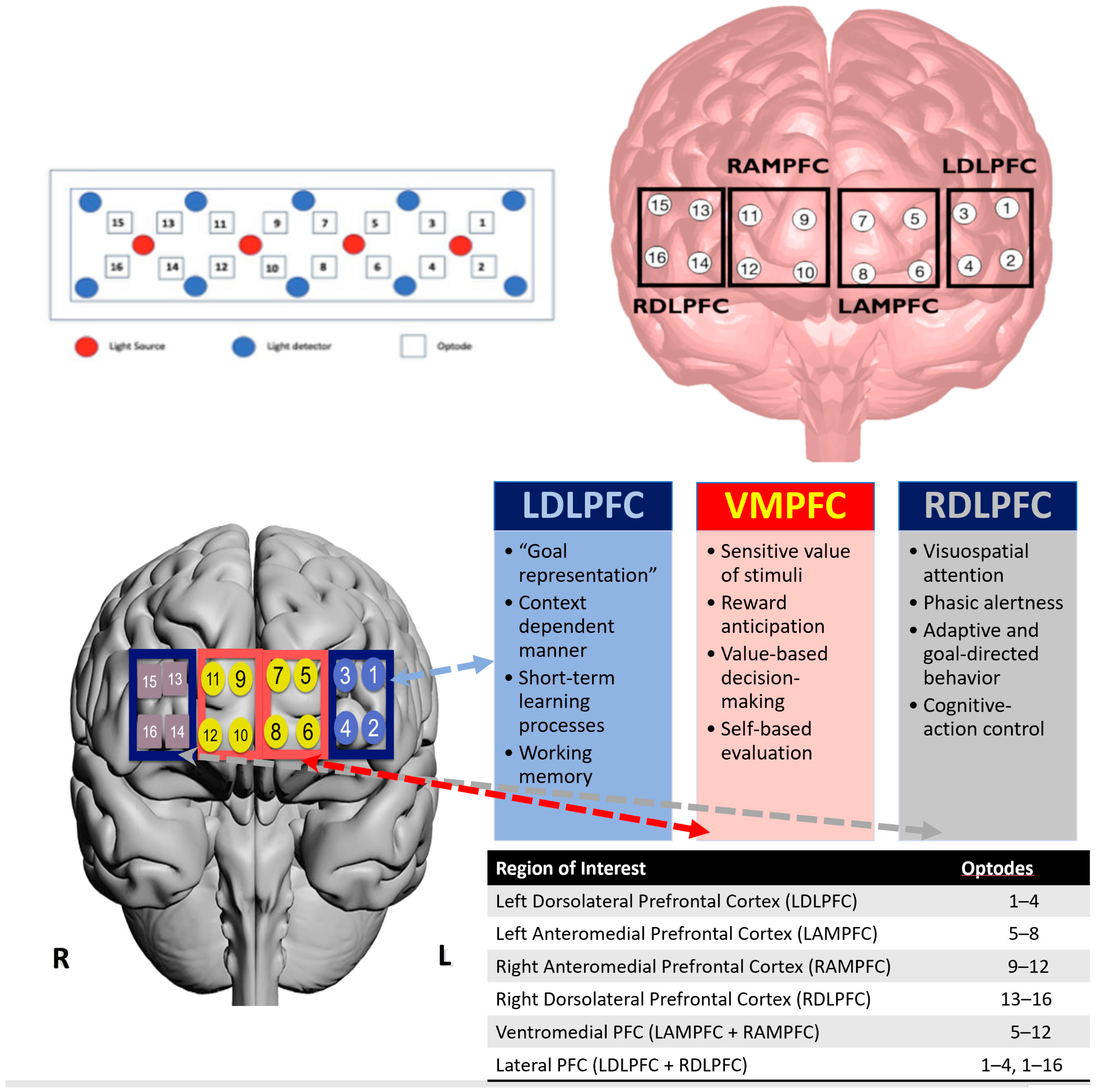

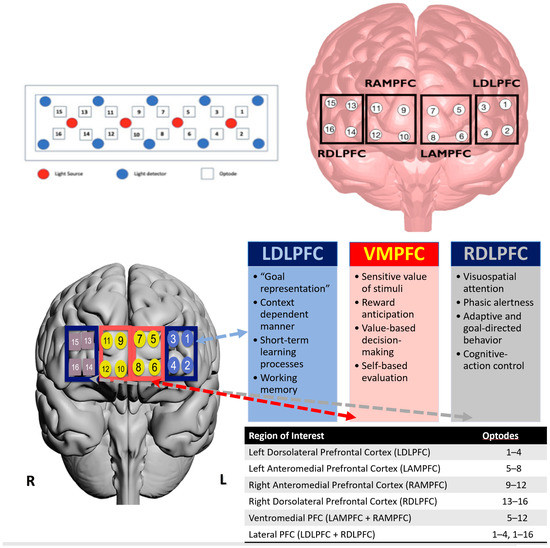

For hardware, this study used an Oculus Rift with the Touch Reality System and a Razer Blade gaming laptop. The continuous-wave fNIRS device (fNIRS Imager 1200; fNIR Devices LLC, Potomac, MD, USA) used in this study emitted light at peak wavelengths of 730 and 850 nm, sampled at 2 Hz, and consisted of 4 light-emitting diodes (LEDs) and 10 photodetectors. The detector and light source combinations resulted in a total of 16 cerebral measurement locations and enabled monitoring of hemodynamic changes in the PFC region (see Figure 4).

Figure 4.

fNIRS sensor layout and the grouping of fNIRS channels based on functional regions: Right Dorsolateral Prefrontal Cortex (RDLPFC), Right Anterior Medial Prefrontal Cortex (RAMPFC), Left Anterior Medial Prefrontal Cortex (LAMPFC), and Left Dorsolateral Prefrontal Cortex (LDLPFC) (top); topographical overlay across functional regions of the prefrontal cortex with associated functions and sensor groupings (bottom). Adapted graphic from Getchell and Shewokis [46].

3.4. fNIRS Signal Processing and Feature Extraction

fNIRS signals are affected by noise arising from motion, ambient light, saturation, and physiological factors, such as cardiac activity, respiratory function, and Mayer waves (low-frequency spontaneous oscillations in arterial blood pressure) [47]. To remove the effects due to motion, ambient light, and saturation, channels were rejected if differences between light intensity measurements from the two wavelengths were less than 70, average light intensity measurements were less than 700, and/or the standard deviations (SDs) of light intensity measurements were greater than 3 SD [48,49]. To remove effects due to physiological sources, a low-pass filter with a cut-off frequency of 0.09 Hz was employed [47]. A modified Beer–Lambert law and local baselining were then used to calculate relative concentration changes in HbO and HbR [50]. Average HbO and HbR measures per block (protocol × level) were extracted from Left Dorsolateral PFC (LDLPFC: channels 1 to 4), Left Anterior Medial PFC (LAMPFC: channels 5 to 8), Right Anterior Medial PFC (RAMPFC: channels 9 to 12), and Right Dorsolateral PFC (RDLPFC: channels 13 to 16). The location of these regions over the PFC is shown in Figure 4.
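As an illustration of the last step only, the regional averaging described above can be sketched as follows. This is a minimal sketch, not the processing pipeline used in the study: the function name `region_means`, the dictionary input format, and the `rejected` argument (the set of channels excluded by the quality checks) are assumptions introduced for the example. Only the channel-to-region grouping is taken from the text.

```python
from statistics import mean

# Channel-to-region grouping as stated in the text (channels are 1-indexed).
REGIONS = {
    "LDLPFC": range(1, 5),    # channels 1 to 4
    "LAMPFC": range(5, 9),    # channels 5 to 8
    "RAMPFC": range(9, 13),   # channels 9 to 12
    "RDLPFC": range(13, 17),  # channels 13 to 16
}


def region_means(block_values, rejected=frozenset()):
    """Average one block's per-channel values within each PFC region.

    block_values: dict mapping channel number -> block-average HbO or HbR
                  (micromolar); hypothetical input format.
    rejected:     channel numbers excluded by the quality checks.
    Returns a dict region -> mean, or None when no usable channel remains.
    """
    result = {}
    for region, channels in REGIONS.items():
        usable = [block_values[ch] for ch in channels
                  if ch in block_values and ch not in rejected]
        result[region] = mean(usable) if usable else None
    return result


# Toy data: channel n carries the value n, and channel 1 was rejected.
example = region_means({ch: float(ch) for ch in range(1, 17)}, rejected={1})
print(example)
# {'LDLPFC': 3.0, 'LAMPFC': 6.5, 'RAMPFC': 10.5, 'RDLPFC': 14.5}
```

Returning `None` for a region with no usable channels, rather than zero, keeps such blocks as missing values, which fits the mixed-effects analysis described in Section 3.5.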

3.5. Statistics

A longitudinal, repeated-measures research design with a hierarchical nesting structure was used. Missing data were characterized by type and percentage and were determined to be missing at random (see Table A1). Given that data were missing at random, linear mixed-effects models provide a viable statistical technique to analyze the data without imputation [51]. Therefore, linear mixed-effects regression (LMER) modelling was used [52], with the nlme and emmeans R packages used to perform the LMER analyses [51,53]. The dependent variables consisted of one behavioral measure (time spent, in s) and eight fNIRS measures (HbO and HbR, in µM, from LDLPFC, LAMPFC, RAMPFC, and RDLPFC). Each dependent variable was normalized (i.e., centered and scaled) prior to statistical evaluation. The data were examined in four separate analyses to assess task load, spatial characteristics, transfer, and training, respectively. For the first analysis, data associated with protocol 1 from weeks 1 to 5 (see blocks highlighted by a black bar associated with task load in Figure 2) were extracted to investigate the effect of changes in task load (easy, medium, hard) on dependent variables (see Model 1). Post hoc analysis for this investigation consisted of three task load comparisons (easy vs. medium, medium vs. hard, and easy vs. hard). For the second analysis, data associated with the first four blocks of protocol 2 from weeks 3 to 5 (see blocks highlighted by a black bar associated with spatial characteristics in Figure 2) were extracted to investigate the effect of changes in spatial characteristics (control, position change, orientation change, and position + orientation change) on dependent variables (see Model 2). Post hoc analysis consisted of six comparisons (control vs. position, control vs. orientation, control vs. position + orientation, position vs. orientation, position vs. position + orientation, and orientation vs. position + orientation).

For the third analysis, data associated with the last two blocks of protocol 2 and all blocks of protocol 3 from week 4 (see blocks highlighted by a black bar associated with transfer in Figure 2) were extracted to investigate the effect of introducing new scenarios with the same task objective (see Model 3). Post hoc analysis consisted of two within-protocol comparisons (Old: Control vs. Position + Orientation; New: Control vs. Position + Orientation) and two across-protocol comparisons (Control: Old vs. New; Position + Orientation: Old vs. New). Finally, for the fourth analysis, data from weeks 1 through 5 were labelled as acquisition, while the data from week 6 were labelled as retention (see blocks highlighted by a black bar associated with training in Figure 2) to investigate the training effect (see Model 4). Post hoc analysis consisted of three comparisons in total, with one comparison (Acquisition vs. Retention) per protocol.
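Two of the steps described above lend themselves to a short illustration: the normalization of each dependent variable, and the enumeration of post hoc comparisons for a factor. The sketch below is not the analysis code used in the study, which relied on R packages. The function names `zscore` and `pairwise` are hypothetical, and scaling by the sample standard deviation (n − 1 denominator) is an assumption, since the text states only that variables were centered and scaled.

```python
from itertools import combinations
from statistics import mean, stdev


def zscore(values):
    """Center to mean 0 and scale to unit sample SD (n - 1 denominator; assumed)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def pairwise(levels):
    """All unordered pairs of factor levels, i.e., the candidate post hoc contrasts."""
    return list(combinations(levels, 2))


task_load = ["easy", "medium", "hard"]
spatial = ["control", "position", "orientation", "position + orientation"]

print(zscore([10.0, 20.0, 30.0]))  # [-1.0, 0.0, 1.0]
print(len(pairwise(task_load)))    # 3
print(len(pairwise(spatial)))      # 6
```

A factor with k levels yields k(k − 1)/2 pairwise contrasts, which accounts for the three task load comparisons and the six spatial characteristics comparisons listed above.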

The LMER analyses were based on the following general statistical models and equations.

Model 1: Random intercept, fixed effect: task load (easy, medium, hard).

The random intercept model is the simplest model, in which the ith observation of the dependent variable (DV) in the jth task load level and the kth subject is depicted as:

$$\mathrm{DV}_{ijk} = 1 + \text{Task Load}_j + (1 \mid \text{Subject}_k) + \varepsilon_{ijk}$$

where “1” is the intercept representing the overall mean, “Task Load” is a fixed effect, “Subject” indicates a random effect due to the subject to which the ith observation belongs, and $\varepsilon_{ijk}$ is the residual error.

Model 2: Random intercept, fixed effect: spatial characteristics (control, position, orientation, position + orientation).

Model 2 has the same form as Model 1, with the fixed effect changed to “Spatial Characteristics”.

$$\mathrm{DV}_{ijk} = 1 + \text{Spatial Characteristics}_j + (1 \mid \text{Subject}_k) + \varepsilon_{ijk}$$

Model 3: Random intercept, fixed effects: protocol (old: week 4, protocol 2; new: week 4, protocol 3) and the protocol: dependency interaction (old: control, position + orientation; new: control, position + orientation).

$$\mathrm{DV}_{ijlk} = 1 + \text{Protocol}_j + \text{Protocol:Dependency}_{jl} + (1 \mid \text{Subject}_k) + \varepsilon_{ijlk}$$

where “1” is the intercept representing the overall mean; “Protocol” is a fixed effect; “Protocol: Dependency” is an interaction fixed effect; and “Subject” indicates a random effect due to the subject to which the ith observation belongs.

Model 4: Random intercept, fixed effects: session (acquisition: weeks 1 to 5; retention: week 6) and the session: protocol interaction (acquisition: protocol 1, protocol 2, protocol 3; retention: protocol 1, protocol 2, protocol 3).

Model 4 has the same form as Model 3, with the fixed effects changed to the “Session” main effect and the “Session: Protocol” interaction.

$$\mathrm{DV}_{ijlk} = 1 + \text{Session}_j + \text{Session:Protocol}_{jl} + (1 \mid \text{Subject}_k) + \varepsilon_{ijlk}$$

The heterogeneous AR1 covariance model is a first-order autoregressive structure with heterogeneous variances. Under this structure, the correlation between any two adjacent elements (time points) is r, the correlation between two elements separated by a third element is r², and the pattern continues with increasing separation. Within our study, we assumed that adjacent observations (time points) on the same subject (performer) have errors that are more highly correlated than observations that are further apart. Additionally, because the structure allows a different variance at each time point, it is labeled a “heterogeneous” covariance structure. In the nlme R package, we chose the corAR1 function because it uses a discrete-time first-order autocorrelation model [51].
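To make the structure concrete, the covariance between time points i and j under a heterogeneous AR1 model can be written as σ_i σ_j r^|i − j|. The sketch below builds such a matrix. It illustrates the general definition only, not the study's fitted model: the function name `har1_cov` and the standard deviations and correlation in the example are hypothetical values chosen for readability.

```python
def har1_cov(sds, r):
    """Heterogeneous AR(1) covariance: cov[i][j] = sd_i * sd_j * r ** |i - j|."""
    n = len(sds)
    return [[sds[i] * sds[j] * r ** abs(i - j) for j in range(n)]
            for i in range(n)]


# Hypothetical values: three time points with SDs 1, 2, 3 and r = 0.5.
cov = har1_cov([1.0, 2.0, 3.0], 0.5)
for row in cov:
    print(row)
# [1.0, 1.0, 0.75]
# [1.0, 4.0, 3.0]
# [0.75, 3.0, 9.0]
```

The diagonal holds the per-time-point variances (the heterogeneous part), while the off-diagonal entries shrink by a factor of r for each additional step of separation (the autoregressive part).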

Due to strong correlations observed between measures that varied across weeks, all models used a heterogeneous AR1 covariance structure [51]. The assumptions of homogeneity of variance and normality of the residuals and random effects were checked by visual inspection. If model predictions showed heteroscedasticity or a non-normal distribution, log10 transformations were applied to the response variables. The significance of fixed-effect terms was evaluated using likelihood ratio tests, in which the full model was compared against a model without the effect in question. For example, to test the effect of task load, a reduced (null, random intercept only) model, 1 + (1|Subject), was compared against the random intercept, fixed effect model, 1 + Task Load + (1|Subject). Maximum likelihood estimation was used to conduct likelihood ratio tests, while restricted maximum likelihood was used to evaluate post hoc comparisons. For all statistical analyses, the level of significance was set at α = 0.05. Adjustments using the false discovery rate (FDR) were made to p-values to account for Type I error inflation per dependent variable. Cohen’s d was used to examine post hoc effects [54]. A d of 0.2 is considered a small effect, while 0.5 and 0.8 represent medium and large effects, respectively.
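The FDR adjustment and the effect size benchmarks above can be illustrated with a short sketch. Several points here are assumptions rather than details taken from the study: the text does not name the FDR procedure, so the Benjamini–Hochberg method is used; Cohen's d is computed with the classical pooled standard deviation, which may differ from a model-based estimate; and the function names and the "negligible" label for |d| below 0.2 are introduced for the example.

```python
from statistics import mean, variance


def fdr_bh(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, pvalues[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


def cohens_d(a, b):
    """Cohen's d for two samples using the pooled standard deviation (assumed variant)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / pooled_var ** 0.5


def effect_label(d):
    """Label |d| with the 0.2 / 0.5 / 0.8 benchmarks quoted in the text."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"  # label below 0.2 is an assumption, not from the text


# Hypothetical inputs for illustration only.
print(fdr_bh([0.01, 0.04, 0.03]))
print(effect_label(cohens_d([2.0, 4.0, 6.0], [1.0, 3.0, 5.0])))  # medium
```

Because the sign of d depends only on the order of the two conditions, `effect_label` works on the absolute value, so a contrast reported as d = −1.37 is labeled large.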

4. Results

4.1. Effect of Task Load on Behavioral and fNIRS Measures

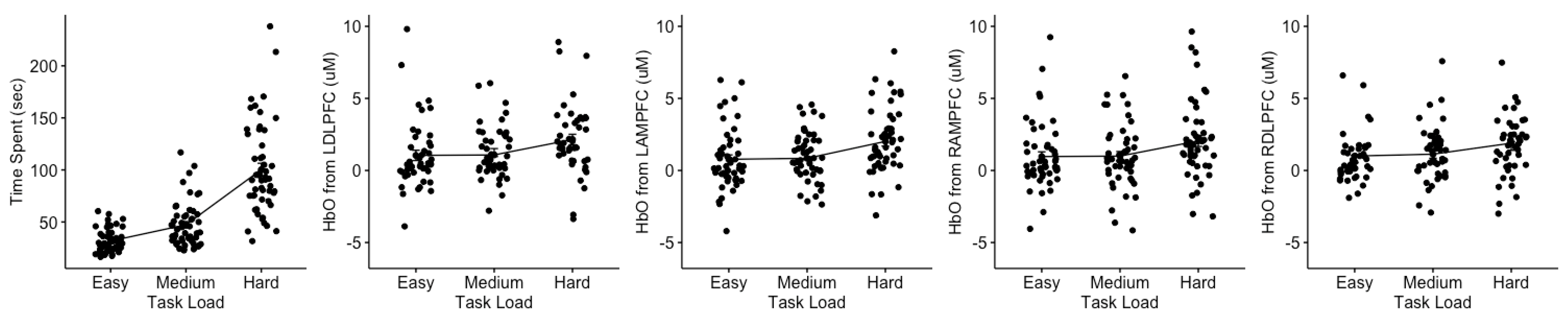

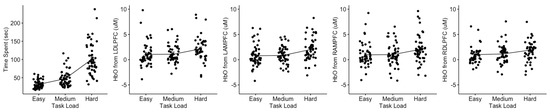

Task load had a significant effect on time spent (χ² = 191.50, p < 0.001), with increases observed from easy to medium, medium to hard, and easy to hard (see leftmost plot in Figure 5 and Table 1). Task load had a significant effect on HbO measures from all PFC areas (LDLPFC: χ² = 33.56, p < 0.001; LAMPFC: χ² = 28.55, p < 0.001; RAMPFC: χ² = 35.01, p < 0.001; RDLPFC: χ² = 40.76, p < 0.001), but on HbR measures from only the LDLPFC (χ² = 6.67, p = 0.036), RAMPFC (χ² = 6.07, p = 0.048), and RDLPFC (χ² = 10.24, p = 0.006) areas. Post hoc comparisons, as shown in Table 1, Figure 5, and Figure 6 (topographical maps), revealed significant increases from (i) easy to medium conditions in only RAMPFC and RDLPFC; (ii) medium to hard in all regions; and (iii) easy to hard in all regions. Although significant, most detected effects ranged from small (d ~ 0.1) to small–moderate (d = 0.31 to 0.51).
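As a consistency check on the test statistics reported above, the p-value of a likelihood ratio test can be recovered from the statistic and its degrees of freedom. The sketch below assumes that the reported statistics are likelihood ratio χ² values and that the task load test has 2 degrees of freedom (three levels add two parameters to the null model); neither detail is stated explicitly in the text. The function names `chi2_sf` and `lrt_pvalue` are hypothetical, and the upper-tail probability uses the closed-form expressions for integer degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum over i < df/2 of (x/2)^i / i!
        total = sum(half ** i / math.factorial(i) for i in range(df // 2))
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus exp(-x/2) * sum of (x/2)^(i - 1/2) / Gamma(i + 1/2)
    total = sum(half ** (i - 0.5) / math.gamma(i + 0.5)
                for i in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(half)) + math.exp(-half) * total


def lrt_pvalue(loglik_full, loglik_reduced, df):
    """Likelihood ratio test: 2 * (loglik_full - loglik_reduced) against chi-square(df)."""
    return chi2_sf(2.0 * (loglik_full - loglik_reduced), df)


# HbR statistics for task load, assuming df = 2.
for stat in (6.67, 6.07, 10.24):
    print(stat, round(chi2_sf(stat, 2), 3))
# 6.67 0.036
# 6.07 0.048
# 10.24 0.006
```

Under these assumptions, the recomputed values agree with the p-values reported for the HbR measures from LDLPFC, RAMPFC, and RDLPFC.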

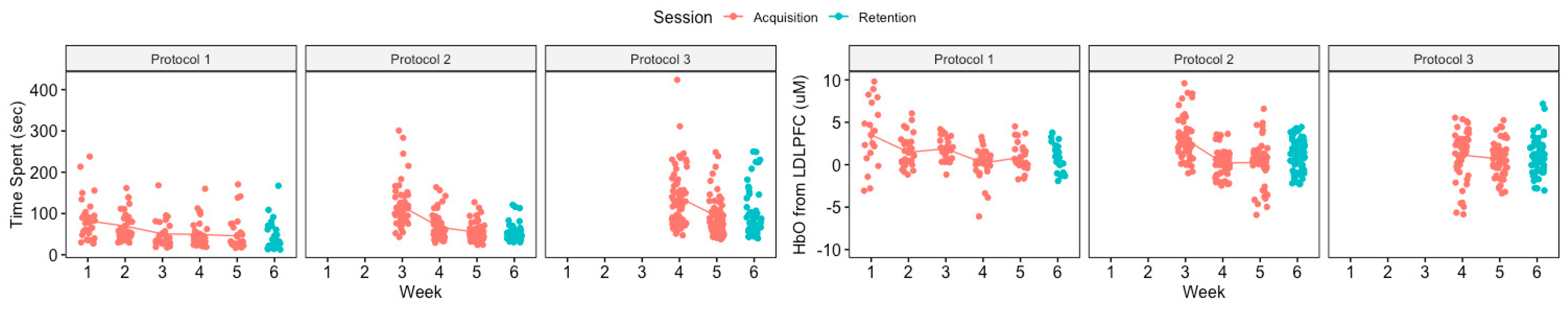

Figure 5.

Effect of changes in task load on time spent and HbO measures from Left Dorsolateral Prefrontal Cortex (LDLPFC), Left Anterior Medial Prefrontal Cortex (LAMPFC), Right Anterior Medial Prefrontal Cortex (RAMPFC), and Right Dorsolateral Prefrontal Cortex (RDLPFC). Data represented using mean and standard error of mean. Data were pooled across weeks 1 through 5 from protocol 1.

Table 1.

Descriptive statistics associated with each task load level and differences between them.

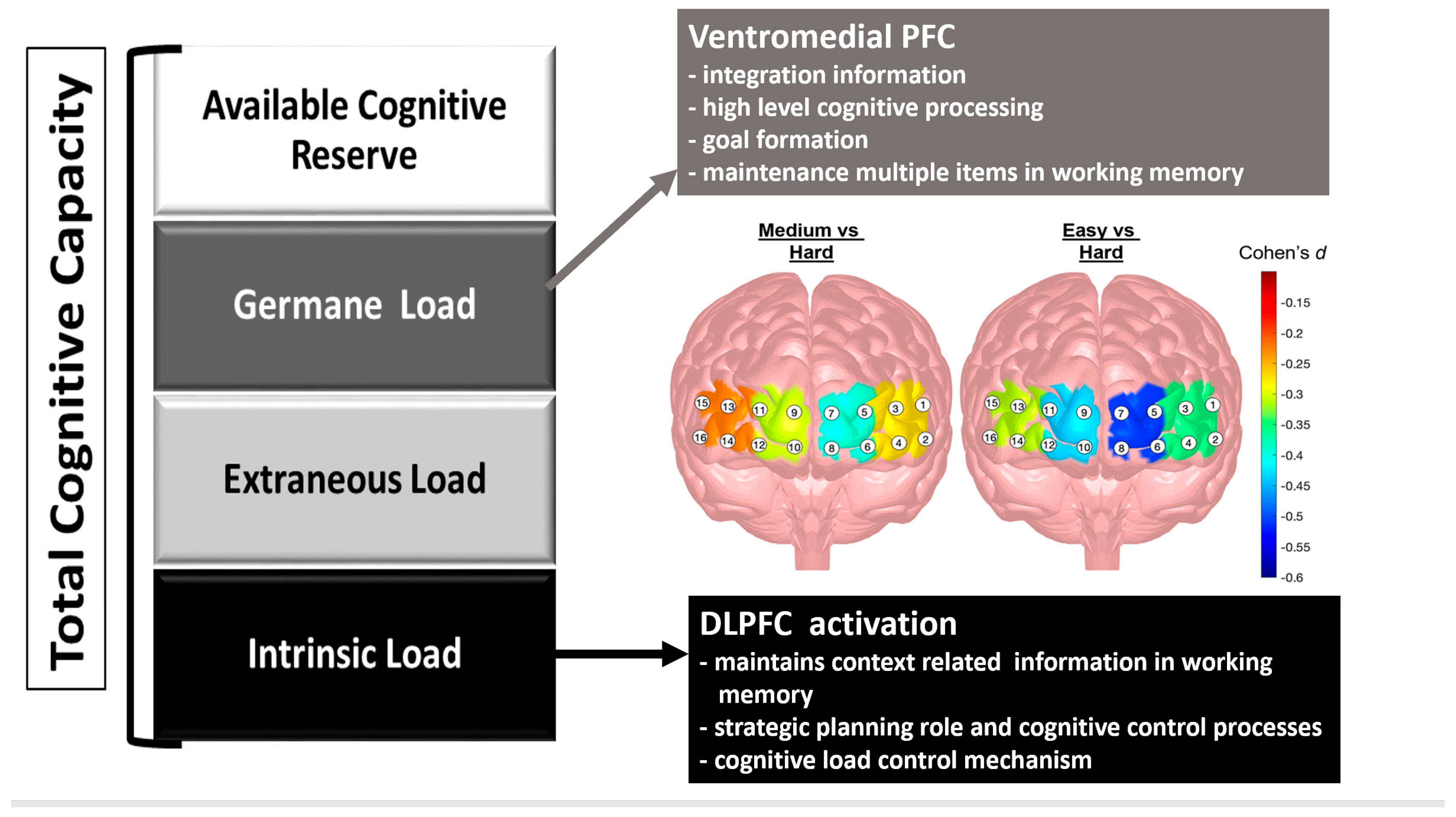

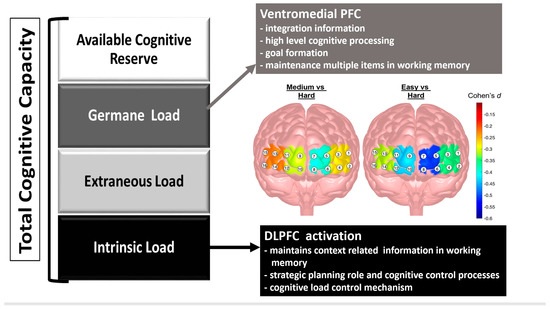

Figure 6.

Schematic of Total Cognitive Capacity with Suggested Activation Functions of the Dorsolateral Prefrontal Cortex (DLPFC), Ventromedial Prefrontal Cortex, Associated Types of Cognitive Load based on Cognitive Load Theory, and Contrast Topographical Maps of the Cohen’s d Effect Sizes Comparing Significant Task Load Comparisons [43,55,56]. Adapted graphic [46].

4.2. Effect of Spatial Characteristics on Behavioral and fNIRS Measures

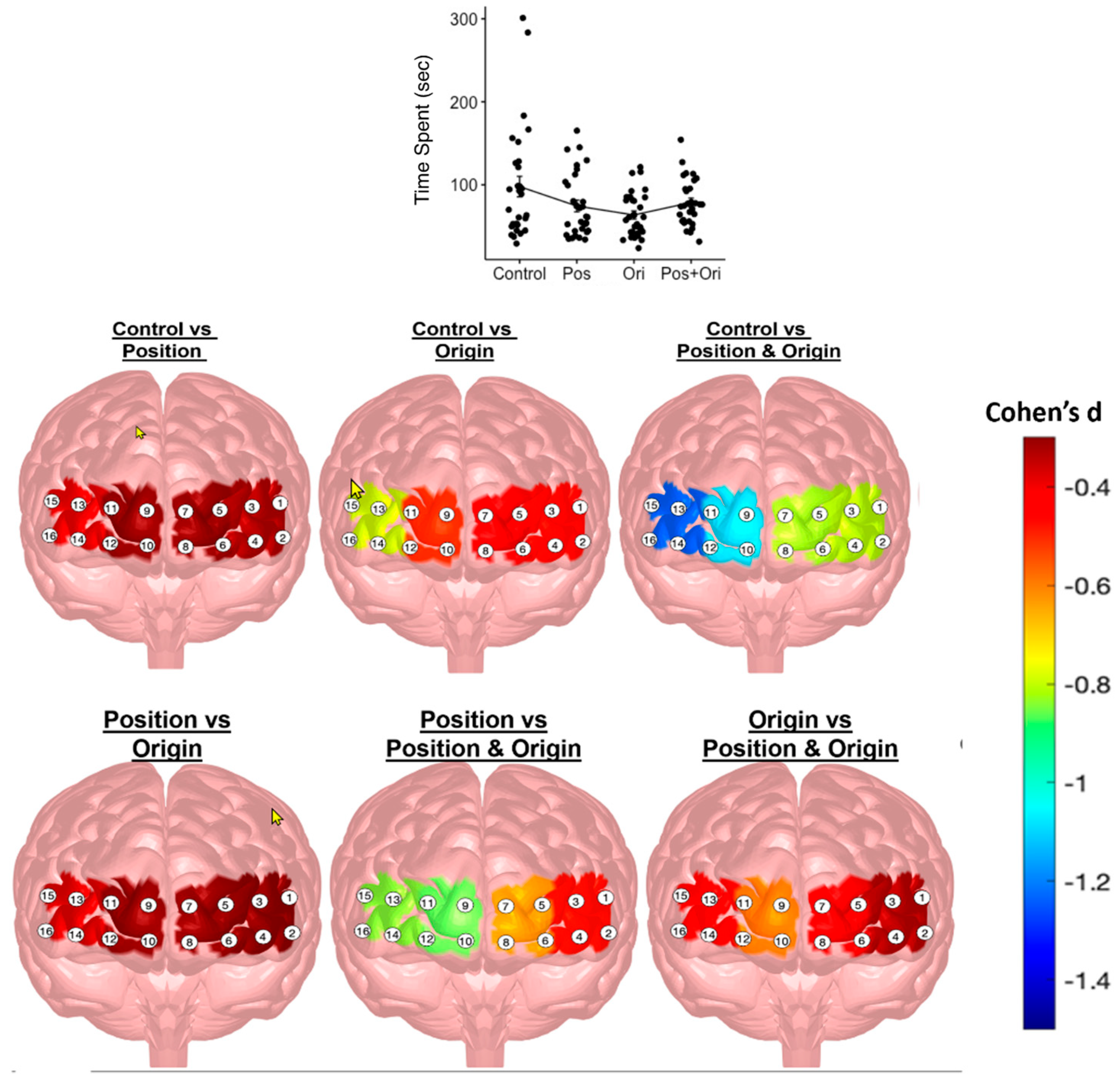

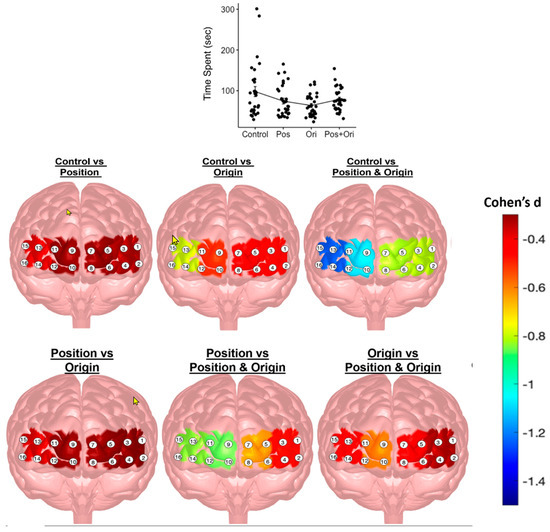

Changes in spatial characteristics had a significant effect on time spent (χ² = 35.68, p < 0.001) (see first plot of top panel in Figure 7). Post hoc comparisons, as shown in Table A2, revealed that time spent decreased significantly from the control condition to the position change, orientation change, and position + orientation change conditions. Significant increases in time spent were observed from position change or orientation change to position + orientation change. No differences were observed between position and orientation changes. Effect sizes for the significant differences ranged from small (d = 0.24) to large (d = −0.81).

Figure 7.

Effect of changes in spatial characteristics on time spent and HbO measures from LDLPFC, LAMPFC, RAMPFC, and RDLPFC. Data were pooled across weeks 3 through 5 from first four blocks of protocol 2 and represented using mean and standard error of mean (top panel). Topographical maps of contrast effect sizes (Cohen’s d) for spatial characteristics of HbO for all regions of interest in the PFC (bottom panel).

Spatial characteristics had a significant effect on HbO measures from all PFC areas (LDLPFC: χ² = 21.24, p < 0.001; LAMPFC: χ² = 26.04, p < 0.001; RAMPFC: χ² = 39.88, p < 0.001; RDLPFC: χ² = 35.87, p < 0.001), but on HbR measures from only the RDLPFC area (χ² = 8.15, p = 0.043). Post hoc comparisons, as shown in Table A2 and the bottom panel of Figure 7, revealed significant increases from (i) control to position change in LDLPFC and RDLPFC; (ii) control to orientation change in all areas; (iii) control to position + orientation change in all areas; (iv) position to position + orientation change in all areas; (v) orientation to position + orientation change in all areas; and (vi) position to orientation change in only RDLPFC. Effect sizes ranged from small–moderate (d = −0.34) to large (d = −1.09). Table A2 provides descriptive statistics associated with each change in spatial characteristics and the differences between them.

4.3. Effect of Transfer on Behavioral and fNIRS Measures

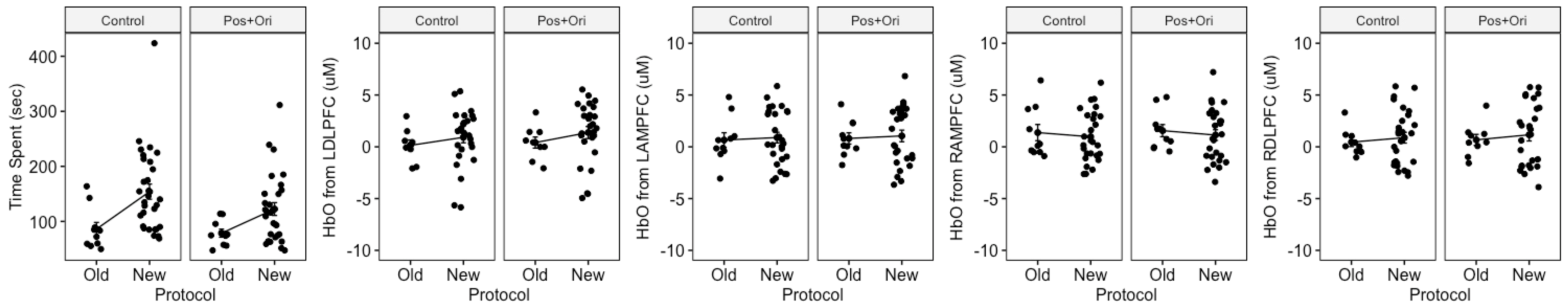

The introduction of a new scenario with the same task objective as previously assessed scenarios resulted in a significant interaction effect between protocol and dependency on time spent (χ² = 18.52, p < 0.001) (see first plot of Figure 8). Post hoc comparisons revealed significant increases from control to position + orientation change in the new scenario (adj. p < 0.001, d = 0.83), and increases from the old to the new scenario for the control dependency (adj. p < 0.001, d = −1.37). Although the interaction effect between protocol and dependency was not significant for either HbO or HbR in any PFC area, the main effect of protocol was significant for HbO measures from RAMPFC (χ² = 4.55, p = 0.033), with decreases observed during performance of the new scenario (see fourth pair of plots of Figure 8).

Figure 8.

Effect of introduction to new scenarios on time spent and HbO measures from LDLPFC, LAMPFC, RAMPFC, and RDLPFC. Data plotted for old are from last two blocks of protocol 2, and new are from blocks of protocol 3 during week 4, and represented using mean and standard error of mean.

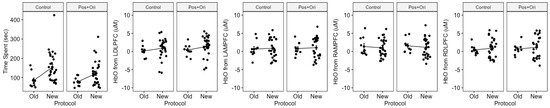

4.4. Effect of Training on Behavioral and fNIRS Measures

As shown in Figure 9, changes in performance and brain activity were observed across weeks as per the protocol. However, statistical evaluation revealed significant interactions between session and protocol only for time spent (χ² = 78.99, p < 0.001) and HbR measures from LAMPFC (χ² = 10.87, p = 0.028). Post hoc comparisons between sessions per protocol revealed significant decreases in time spent during the retention session for protocols 1 (adj. p = 0.009, d = 0.58), 2 (adj. p = 0.025, d = 0.48), and 3 (adj. p = 0.009, d = 0.64), yielding approximately moderate effect sizes for each protocol. Unlike the trends observed in behavioral measures, no post hoc comparisons were significant for HbR measures from LAMPFC.

Figure 9.

Effect of training on time spent and HbO measures from LDLPFC. Data are represented using mean and standard error of mean.

5. Discussion

The purpose of this exploratory study was to examine the effect of customized immersive VR spatial visualization training on participant acquisition, transfer, and mental workload using selected performance and cortical hemodynamic measures. Standard behavioral performance metrics (total time to task completion (s)) were obtained from a customized VR task based on the Purdue Spatial Visualization Test: Rotations (PSVT:R), while cortical hemodynamic responses were measured by a wearable optical brain imaging device that was worn on the participants’ foreheads for all trials. The PSVT:R test assesses spatial visualization ability (SVA), an important skill for students enrolled in engineering and related disciplines that impacts those students’ success and retention in STEM-related disciplines. The effects of the customized VR on task load, spatial characteristics, transfer, and training with a focus on the influence of cortical hemodynamics on SVA are discussed below.

A primary feature of skill acquisition is the impact of task load or task difficulty on goal attainment and prefrontal cortex activation [42,43,47,57]. Behaviorally, we found that time spent increased monotonically with increased task load (difficulty) across the easy, medium, and hard tasks, as noted in Table 1 and Figure 5. The effect sizes (see Table 1) ranged from large to very large (Cohen’s d: −0.78 to −2.61) for the easy to hard tasks, respectively. Our task load findings on time were similar to those detected by Haji et al. with simple and complex simulated lumbar puncture surgical tasks [47], insofar as increases in task difficulty resulted in additional time to complete the task. The effects of task load on the fNIRS biomarkers HbO and HbR are depicted in Table 1 and Figure 5. As reported, all four regions of the prefrontal cortex (LDLPFC, LAMPFC, RAMPFC, and RDLPFC) were significantly impacted by the task load manipulation (p < 0.001), with small to moderate effects (d = −0.23 to −0.51) for the contrasts of the medium to hard and hard to easy tasks. The right prefrontal cortex (RDLPFC and RAMPFC) showed increases in HbO activation for the easy to medium task load conditions. In terms of HbR, task load resulted in differences for the LDLPFC, RDLPFC, and RAMPFC, albeit with a smaller impact.

To understand the association between task load and brain activity, it is critical to be familiar with the fundamental neurovascular coupling physiological principles. These principles describe the relationship between neuronal activity and cerebral hemodynamics for fNIRS measurements, allowing insight into the role of brain activation for the interpretation of activities and tasks with varying difficulty levels or task loads [57,58,59]. The physiological foundation of neurovascular coupling links oxygen requirements and the delivery of oxygen used for glucose metabolism through the neuronal activation process. This increased neuronal activation process results in an increase in cerebral blood flow that carries oxygen to the needed region through the biomarker oxygenated hemoglobin (HbO). The conversion process of HbO to deoxygenated hemoglobin (HbR) then yields the release of oxygen. Since HbO and HbR are the primary absorbers of the near-infrared light bands in fNIRS cognitive functioning implementation studies, they are used to assess the relative concentrations of biomarkers, allowing a calculation of brain activity to be correlated with task performances.
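One common way to convert measured light attenuation at two wavelengths into relative HbO and HbR concentration changes is the modified Beer-Lambert law. The following is a minimal sketch under stated assumptions, not the processing pipeline used in this study: the extinction coefficients, source-detector distance, and differential pathlength factor (DPF) are hypothetical placeholder values, and the function names are illustrative.

```python
# Hypothetical extinction coefficients (placeholders, NOT tabulated values).
# Each entry maps an assumed wavelength to (eps_HbO, eps_HbR).
EXTINCTION = {
    "shorter_wavelength": (0.4, 1.1),  # HbR assumed to absorb more strongly
    "longer_wavelength": (1.0, 0.7),   # HbO assumed to absorb more strongly
}


def simulate_delta_od(d_hbo, d_hbr, distance_cm=2.5, dpf=6.0):
    """Forward model: optical density changes at the two wavelengths."""
    path = distance_cm * dpf
    return tuple(
        (eps_hbo * d_hbo + eps_hbr * d_hbr) * path
        for eps_hbo, eps_hbr in (
            EXTINCTION["shorter_wavelength"],
            EXTINCTION["longer_wavelength"],
        )
    )


def concentration_changes(delta_od_short, delta_od_long,
                          distance_cm=2.5, dpf=6.0):
    """Invert the two-wavelength system with Cramer's rule.

    Returns (dHbO, dHbR) in units set by the extinction coefficients.
    """
    path = distance_cm * dpf
    a, b = (e * path for e in EXTINCTION["shorter_wavelength"])
    c, d = (e * path for e in EXTINCTION["longer_wavelength"])
    det = a * d - b * c
    if det == 0:
        raise ValueError("wavelengths do not separate HbO from HbR")
    d_hbo = (delta_od_short * d - b * delta_od_long) / det
    d_hbr = (a * delta_od_long - c * delta_od_short) / det
    return d_hbo, d_hbr


# Round trip with hypothetical concentration changes.
od_short, od_long = simulate_delta_od(0.5, -0.2)
recovered_hbo, recovered_hbr = concentration_changes(od_short, od_long)
```

Two wavelengths are needed because there are two unknowns; the inversion is only well posed when the two chromophores absorb differently at the chosen wavelengths.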

In his review of the role of neuroimaging for cognitive load theory, Whelan summarized various fMRI papers indicating that, for intrinsic cognitive load, the dorsolateral prefrontal cortices (right and left) were the primary regions of activation associated with attentional control and working memory [59] and were critically modulated by cognitive task load and/or task difficulty [56]. Figure 6 denotes the functions of the relevant regions of the prefrontal cortex that have been associated with the different types of cognitive load. Specifically, the dorsolateral prefrontal cortex (DLPFC) and ventromedial PFC are the regions associated with the task load manipulations in this VR study (specific functions of the regions are given in the bottom panel of Figure 4). Importantly, the DLPFC is thought of as the cognitive load control mechanism, managing the buffering, retrieval, and computation of task-related information [49]. The intrinsic load is associated with the performer and the task as well as the interaction between them. The functions of the right DLPFC and the left DLPFC (identified in the bottom panel of Figure 4) facilitate the processing of task-relevant information and integrate the processes of attention, short-term memory, retrieval, and decision making [42,55,56,60]. When Zhu and colleagues assessed the combined impact of galvanic skin response, eye-movement tracking, and EEG with varying arithmetic tasks designed using the NIH cognitive toolbox [60,61,62], they found that frontal brain activation increased monotonically, although with varying slopes, as the task difficulty or load increased [60]. Our task load findings showed a steeper slope of change from the medium to the hard task, while the easy to medium transition showed either no change or a slight reduction in slope (see Figure 5). Our findings support Zhu’s suggestion that it is important to discern differences in brain activity patterns when decoding cognitive load, given that brain activity patterns manifest in various ways in response to different types of cognitive tasks and levels of task difficulty or load [60,61,62].

Four manipulations of the spatial characteristics of the immersive virtual reality tasks were assessed in our experiment: (1) control; (2) position change; (3) orientation change; and (4) position + orientation change. Spatial characteristics yielded important differences in time spent for task completion as depicted in Table A2 and Figure 7. As expected, behavioral data showed that time spent in the control condition decreased during the position and during the orientation change. In addition, when the transition was from control to position and orientation change, there was a decrease in time spent on the task. The spatial characteristics and task manipulations were particularly important in our study given that the tasks were designed based on the PSVT:R for the skill assessment of students enrolled in engineering and STEM-related areas in terms of spatial visualization abilities. The task spatial characteristics significantly impacted all PFC regions for HbO while they reliably influenced only the RDLPFC for HbR.

Learning is considered to be robustly determined through memory and generalizability assessments [39,43]. In the current investigation, we introduced a new scenario with the same task objective as the previously assessed scenarios; namely, we assessed the data from the last two blocks of the week 2 protocol and all blocks of the week 3 protocol that were tested in week 4, as depicted in Figure 2. The transfer assessment yielded a significant protocol × condition interaction on the behavioral measure of time spent on the task. Large reductions in time spent on the task were reliably detected from control to position and orientation changes in the new scenario, which may be considered an interpolation of the spatial characteristics that were acquired during the week 2 protocol. An interpolation transfer of this type is comparable to the transfer of behavioral scores in a VR surgical coordination task in Shewokis et al. [42,43], as well as to the findings of Galoyan et al. [39] of reduced total time in week 2 relative to week 1 in a virtual spatial navigation transfer game.

In terms of brain imaging and decoding of cognitive load [43,56,62], we posit that transfer is associated with germane cognitive load [55], which is realized by an activation of the ventromedial prefrontal cortex (see Figure 4, bottom panel, and Figure 6). The ventromedial prefrontal cortex supports the integration of information and allows multiple items to be held in working memory, which are functions necessary for the facilitation of generalizability or transfer. Although we did not detect any significant hemodynamic differences across the VR spatial characterization tasks (see the third (HbO-LAMPFC) and fourth (HbO-RAMPFC) panels of Figure 8), there was a trend toward reduced HbO activation in the RAMPFC from the old to the new protocols. The lack of differences may be attributed to the high variability in hemodynamic responses. Future work with increased training on the tasks, perhaps to a criterion level of behavioral performance, may elicit stronger and more stable hemodynamic responses.

Consistent with findings from traditional training, studies have demonstrated a positive association between task load and mental workload in VR environments [63]. On the other hand, studies have reported contradictory findings when comparing mental workload in VR environments versus 2D environments, with some reporting greater mental workload in VR environments [63,64] and others reporting no differences [65,66]. Similarly, studies have reported contradictory findings when comparing mental workload during VR training with that during traditional training. Some studies reported decreases in mental workload and better learning because of VR training [67], while others reported increases in mental workload and poorer learning [63].

From the inception of our study through its culmination, we were interested in determining whether the training protocols allowed for improved performance and learning with this applied task performed in a virtual environment. We found a significant interaction between session and protocol for the behavioral measure of time spent on the task, with post hoc comparisons showing approximately moderate decreases in time spent during the retention session for each protocol. In addition, there was a significant interaction effect of session and protocol for HbR in the LAMPFC, indicating the activation of the neurovascular coupling process and the release of oxygen. However, as depicted in the last series of panels (HbO) in Figure 9, the high variability in responses to the spatial characteristics of the virtual tasks may be due to insufficient practice trials (6 weeks including testing and orientation to the tasks) along with the small sample size (N = 10).

There were two primary limitations to this study. First, the small sample size and sampling method imply that our findings cannot be generalized to broader populations. Instead, our results will be used to inform an expanded next phase of this research inquiry. Second, the immersive virtual reality intervention was produced on a limited budget. With additional resources, a more graphically robust intervention could further enhance the nature of how learners interact with spatial visualization objects.

6. Conclusions

In engineering and related STEM disciplines, spatial visualization skills have been shown to be a critical factor in enhancing learner academic achievement and retention [3,4,5,6]. Findings from this study revealed small (Cohen’s d: 0.10) to large (Cohen’s d: 2.40) effects of task load and changes in the spatial characteristics of the task, such as orientation or position changes, on time spent and HbO measures from all the prefrontal cortex (PFC) areas when students underwent varying levels of spatial visualization training using VR simulations of object manipulation based upon the Purdue Spatial Visualization Test. Transfer had a large (d = 1.37) effect on time spent and HbO measures from right anterior medial PFC (AMPFC), while training had a moderate (d = 0.48) significant effect on time spent and HbR measures from left AMPFC. High variability in some measurements could be due to limitations in the number of practice trials combined with the small sample size. To reach more definitive conclusions, further research will be needed to examine the specific effects of different task characteristics on participant mental workload and behavioral performance during VR training. Future research will also be necessary to explore the extent to which learners generalize the skills acquired in VR to other non-VR learning scenarios.

Author Contributions

Conceptualization, K.B., T.G., P.A.S., K.I., P.R., B.D. and D.L.M.; Methodology, P.A.S., K.I., P.R., K.B., T.G., D.L.M. and B.D.; Software, K.I., P.A.S., P.R. and T.G.; Validation, P.R., P.A.S., K.I. and T.G.; Formal Analysis, P.R., P.A.S. and K.I.; Investigation, T.G., P.A.S., K.I., B.D. and K.B.; Resources, T.G., K.I. and P.A.S.; Data Curation, T.G. and P.R.; Writing – Original Draft Preparation, K.B., P.R., P.A.S., K.I., T.G., B.D. and D.L.M.; Writing – Review and Editing, P.A.S., K.B., P.R., K.I., D.L.M., T.G. and B.D.; Visualization, P.R., P.A.S. and K.I.; Supervision, P.A.S. and K.I.; Project Administration, K.B., P.A.S. and K.I.; Funding Acquisition, K.B., T.G., P.A.S., K.I., B.D. and D.L.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from Drexel University’s Office of the Provost through an internal grant.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Drexel University on 05/04/2017 under the designation IRB#1703005233.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy concerns regarding student information on enrollment and retention.

Acknowledgments

The authors would like to acknowledge the assistance of Nick Jushchyshyn, Program Director for Virtual Reality & Immersive Media at Drexel University, for his work on the project in the development of the VR application used in the study. We would also like to acknowledge Andrew Marku for assistance in working with the participants and data collection.

Conflicts of Interest

fNIR Devices, LLC, manufactures the optical brain imaging instrument, fNIRS, and licensed IP and know-how from Drexel University. Kurtulus Izzetoglu was involved in technology development and thus was offered a minor share in the startup firm, fNIR Devices, LLC. The remaining authors declare no conflict of interest.

Appendix A

Table A1.

Percentage of missing data per each behavioral and fNIRS measure.

| Dependent Variable | Missing (%) |
|---|---|
| Time Spent | 0.55 |
| HbO or HbR from LDLPFC | 7.06 |
| HbO or HbR from LAMPFC | 2.87 |
| HbO or HbR from RAMPFC | 3.75 |
| HbO or HbR from RDLPFC | 11.26 |

Table A2.

Descriptive statistics associated with each change in spatial characteristic and supplemental differences between them. C = control; P = position change; O = orientation change; P + O = position and orientation change. A positive Cohen’s d value indicates that the effect is greater in the first term of the comparison. For example, time spent was greater during control when compared against change in position (d = 0.24), while time spent was lower during change in position when compared against changes in position and orientation (d = −0.67).

| Measure | Variable (unit) | Spatial Characteristic | Mean | SD | C − P adj. p | C − P d | C − O adj. p | C − O d | C − (P + O) adj. p | C − (P + O) d | P − O adj. p | P − O d | P − (P + O) adj. p | P − (P + O) d | O − (P + O) adj. p | O − (P + O) d |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Behavioral | Time Spent (s) | C | 97.83 | 67.56 | 0.048 | 0.24 | 0.010 | 0.38 | 0.004 | 0.43 | 0.230 | 0.14 | <0.001 | −0.67 | <0.001 | −0.81 |
| | | O | 74.47 | 38.53 | | | | | | | | | | | | |
| | | P | 63.64 | 26.96 | | | | | | | | | | | | |
| | | P + O | 78.69 | 28.65 | | | | | | | | | | | | |
| HbO | LDLPFC (µM) | C | 0.33 | 1.78 | 0.027 | −0.34 | 0.017 | −0.46 | <0.001 | −0.81 | 0.391 | −0.13 | 0.017 | −0.47 | 0.027 | −0.34 |
| | | O | 0.80 | 2.08 | | | | | | | | | | | | |
| | | P | 1.04 | 2.40 | | | | | | | | | | | | |
| | | P + O | 1.66 | 2.73 | | | | | | | | | | | | |
| | LAMPFC (µM) | C | 0.27 | 2.54 | 0.136 | −0.19 | 0.012 | −0.42 | <0.001 | −0.82 | 0.109 | −0.22 | <0.001 | −0.63 | 0.005 | −0.41 |
| | | O | 0.52 | 2.73 | | | | | | | | | | | | |
| | | P | 0.87 | 3.07 | | | | | | | | | | | | |
| | | P + O | 1.36 | 3.43 | | | | | | | | | | | | |
| | RAMPFC (µM) | C | 0.38 | 2.31 | 0.105 | −0.22 | 0.004 | −0.50 | <0.001 | −1.09 | 0.056 | −0.28 | <0.001 | −0.86 | <0.001 | −0.59 |
| | | O | 0.73 | 2.41 | | | | | | | | | | | | |
| | | P | 1.23 | 2.84 | | | | | | | | | | | | |
| | | P + O | 1.98 | 3.31 | | | | | | | | | | | | |
| | RDLPFC (µM) | C | −0.16 | 1.51 | 0.014 | −0.40 | <0.001 | −0.78 | <0.001 | −1.24 | 0.015 | −0.39 | <0.001 | −0.84 | 0.008 | −0.45 |
| | | O | 0.38 | 1.69 | | | | | | | | | | | | |
| | | P | 0.94 | 1.88 | | | | | | | | | | | | |
| | | P + O | 1.69 | 2.14 | | | | | | | | | | | | |
| HbR | LDLPFC (µM) | C | 0.45 | 1.03 | 0.846 | 0.03 | 0.407 | 0.20 | 0.407 | 0.29 | 0.407 | 0.17 | 0.407 | 0.26 | 0.700 | 0.09 |
| | | O | 0.55 | 1.21 | | | | | | | | | | | | |
| | | P | 0.41 | 1.49 | | | | | | | | | | | | |
| | | P + O | 0.30 | 1.90 | | | | | | | | | | | | |
| | LAMPFC (µM) | C | 0.28 | 0.95 | 0.989 | 0.02 | 0.756 | 0.12 | 0.989 | 0.02 | 0.756 | 0.10 | 0.989 | 0.00 | 0.756 | −0.10 |
| | | O | 0.29 | 1.15 | | | | | | | | | | | | |
| | | P | 0.11 | 1.26 | | | | | | | | | | | | |
| | | P + O | 0.05 | 2.06 | | | | | | | | | | | | |
| | RAMPFC (µM) | C | 0.43 | 0.98 | 0.988 | 0.00 | 0.322 | 0.12 | 0.322 | 0.13 | 0.322 | 0.12 | 0.322 | 0.13 | 0.988 | 0.00 |
| | | O | 0.43 | 1.12 | | | | | | | | | | | | |
| | | P | 0.17 | 1.23 | | | | | | | | | | | | |
| | | P + O | 0.22 | 2.45 | | | | | | | | | | | | |
| | RDLPFC (µM) | C | 0.19 | 0.96 | 0.934 | −0.01 | 0.292 | 0.22 | 0.292 | −0.25 | 0.292 | 0.24 | 0.292 | −0.23 | 0.037 | −0.47 |
| | | O | 0.21 | 1.02 | | | | | | | | | | | | |
| | | P | −0.10 | 1.14 | | | | | | | | | | | | |
| | | P + O | 0.35 | 1.79 | | | | | | | | | | | | |

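The sign convention for Cohen’s d described in the caption of Table A2 (positive when the first term of the comparison is larger) can be illustrated with a simple standardized mean difference. This is a hedged sketch only: it assumes an equal-weight pooled standard deviation computed from the descriptive means and SDs, and the function name `cohens_d` is hypothetical. The d values reported in the table presumably derive from the fitted statistical models, so this simplified calculation is not expected to reproduce them.

```python
import math


def cohens_d(mean_first, sd_first, mean_second, sd_second):
    """Standardized mean difference using an equal-weight pooled SD.

    Assumes the two conditions contribute similar numbers of observations.
    The result is positive when the first mean exceeds the second.
    """
    pooled_sd = math.sqrt((sd_first ** 2 + sd_second ** 2) / 2.0)
    return (mean_first - mean_second) / pooled_sd


# Time spent means and SDs from Table A2: control (C) and position +
# orientation change (P + O). Only the sign is the point of interest here.
d_control_first = cohens_d(97.83, 67.56, 78.69, 28.65)
d_control_second = cohens_d(78.69, 28.65, 97.83, 67.56)
```

Swapping the order of the two terms flips the sign but not the magnitude, which is why the order of each comparison in the column headers matters when reading the table.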

References

- U.S. Bureau of Labor Statistics. Architecture and Engineering Occupations; U.S. Bureau of Labor Statistics: Washington, DC, USA, 2022. Available online: https://www.bls.gov/ooh/architecture-and-engineering/home.htm (accessed on 1 December 2022).

- World Economic Forum. The Future of Jobs Report; World Economic Forum: Geneva, Switzerland, 2020. Available online: https://www3.weforum.org/docs/WEF_Future_of_Jobs_2020.pdf (accessed on 2 December 2022).

- Kahl, T.; Grob, A.; Segerer, R.; Möhring, W. Executive functions and visual-spatial skills predict mathematical achievement: Asymmetrical associations across age. Psychol. Res. 2021, 85, 36–46.

- Berkowitz, M.; Stern, E. Which cognitive abilities make the difference? Predicting Academic Achievements in Advanced STEM Studies. J. Intell. 2018, 6, 48.

- Fontaine, M.; De Rosa, A.J. Longitudinal analysis of spatial ability over an undergraduate engineering degree program. In Proceedings of the 2020 ASEE Virtual Annual Conference Content Access, Virtual Online, 22 June 2020; ASEE Conferences: Washington, DC, USA, 2020. Available online: https://peer.asee.org/34931 (accessed on 22 July 2020).

- Branoff, T.; Mohammed, J.; Brown, J. Student retention in an engineering technology program: The role of spatial visualization ability. In Proceedings of the 2022 ASEE Annual Conference & Exposition, Minneapolis, MN, USA, 26–29 June 2022. Available online: https://peer.asee.org/40962 (accessed on 23 September 2022).

- Voyer, D.; Voyer, S.; Bryden, M.P. Magnitude of sex differences in spatial abilities: A meta-analysis and consideration of critical variables. Psychol. Bull. 1995, 117, 250–270.

- Linn, M.C.; Petersen, A.C. Emergence and characterization of sex differences in spatial ability: A meta-analysis. Child Dev. 1985, 56, 1479–1498.

- National Research Council. Learning to Think Spatially; The National Academies Press: Washington, DC, USA, 2006.

- Nieto-Escamez, F.A.; Roldán-Tapia, M.D. Gamification as online teaching strategy during COVID-19: A mini-review. Front. Psychol. 2021, 12, 648552.

- Obrero-Gaitán Nieto-Escamez, F.A.; Zagalaz-Anula, N.; Cortés-Pérez, I. An innovative approach for online neuroanatomy and neurorrehabilitation teaching based on 3d virtual anatomical models using leap motion controller during COVID-19 pandemic. Front. Psychol. 2021, 12, 590196.

- Zhou, Y.; Xu, T.; Yang, H.; Li, S. Improving spatial visualization and mental rotation using FORSpatial through shapes and letters in virtual environment. IEEE Trans. Learn. Technol. 2022, 15, 326–337.

- Adebayo, O.O.; Farrar, E.J.; Evans, R.; McCray, T.L.; Nathans-Kelly, T. Empowering early mastery of spatial visualization skills in underrepresented minority engineering students. In Proceedings of the 2014 IEEE Frontiers in Education Conference (FIE) Proceedings, Madrid, Spain, 22–25 October 2014; pp. 1–8.

- Gómez-Tone, H.C.; Martin-Gutierrez, J.; Valencia Anci, L.; Mora Luis, C.E. International comparative pilot study of spatial skill development in engineering students through autonomous augmented reality-based training. Symmetry 2020, 12, 1401.

- Uttal, D.H.; Cohen, C.A. Spatial thinking and STEM education: When, why, and how? In Psychology of Learning and Motivation; Ross, B.H., Ed.; Academic Press: Cambridge, MA, USA, 2012; Volume 57, pp. 147–181.

- Bitter, C.; Loney, E. Deeper Learning: Improving Student Outcomes for College, Career, and Civic Life; Education Policy Center, American Institutes for Research: Cambridge, MA, USA, 2015. Available online: https://files.eric.ed.gov/fulltext/ED571850.pdf (accessed on 1 August 2019).

- William and Flora Hewlett Foundation. Deeper Learning Defined; Hewlett Foundation: Menlo Park, CA, USA, 2013. Available online: http://www.hewlett.org/library/hewlett-foundation-publication/deeper-learning-defined (accessed on 15 November 2022).

- Deeper Learning Hub. What Is Deeper Learning. Available online: https://deeper-learning.org/ (accessed on 15 July 2022).

- Segil, J.L.; Myers, B.A.; Sullivan, J.F.; Reamon, D.T. Efficacy of various spatial visualization implementation approaches in a first-year engineering projects course. In Proceedings of the 2015 ASEE Annual Conference and Exposition, Seattle, WA, USA, 14–17 June 2015.

- Sorby, S. Educational research in developing 3-d spatial skills for engineering students. Int. J. Sci. Educ. 2009, 31, 459–480.

- Metz, S.S.; Donohue, S.; Moore, C. Spatial skills: A focus on gender and engineering. In Apply Research to Practice (ARP) Resources; Bogue, B., Cady, E., Eds.; AWE: New York, NY, USA, 2012. Available online: http://aweonline.org/arp_spatialskills_literature@20review.pdf (accessed on 20 October 2021).

- Hsi, S.; Linn, M.C.; Bell, J.E. The role of spatial reasoning in engineering and the design of spatial instruction. J. Eng. Educ. 1997, 86, 151–158.

- Lane, D.; Sorby, S. Bridging the gap: Blending spatial skills instruction into a technology teacher preparation programme. Int. J. Technol. Des. Educ. 2022, 32, 2195–2215.

- Nagy-Kondor, R.; Esmailnia, S. A comparison between the spatial intelligence of the freshman engineering students of Sharif University of Technology and Debrecen University. Period. Polytech. Soc. Manag. Sci. 2021, 29, 159–167.

- Coyne, L.; Merritt, T.A.; Parmentier, B.L.; Sharpton, R.A.; Takemoto, J.K. The past, present, and future of virtual reality in pharmacy education. Am. J. Pharm. Educ. 2019, 83, 281–290.

- Garcia, C.A.; Caiza, G.; Naranjo, J.E.; Ortiz, A.; Garcia, M.V. An approach of training virtual environment for teaching electro-pneumatic systems. IFAC-Pap. 2019, 52, 278–284.

- Kaimal, G.; Carroll-Haskins, K.; Topoglu, Y.; Ramakrishnan, A.; Arslanbek, A.; Ayaz, H. Exploratory fNIRS assessment of differences in activation in virtual reality visual self-expression including with a fragrance stimulus. Art Ther. 2022, 39, 128–137.

- Pellas, N.; Fotaris, P.; Kazanidis, I.; Wells, D. Augmenting the learning experience in primary and secondary school education: A systematic review of recent trends in augmented reality game-based learning. Virtual Real. Educ. 2018, 23, 329–346.

- Prensky, M. From Digital Natives to Digital Wisdom: Hopeful Essays for 21st Century Learning; Corwin Press: Thousand Oaks, CA, USA, 2012.

- Wang, C.; Tang, Y.; Kassem, M.A.; Li, H.; Hua, B. Application of VR technology in civil engineering education. Comput. Appl. Eng. Educ. 2022, 30, 335–348.

- Deterding, S. Gameful design for learning. TD Mag. 2013, 67, 60–63.

- Kuznetcova, I.; Glassman, M.; Tilak, S.; Wen, Z.; Evans, M.; Pelfrey, L.; Lin, T.J. Using a mobile virtual reality and computer game to improve visuospatial self-efficacy in middle school students. Comput. Educ. 2023, 192, 104660.

- Qorbani, H.S.; Arya, A.; Nowlan, N.; Abdinejad, M. ScienceVR: A virtual reality framework for STEM education, simulation and assessment. In Proceedings of the IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Taichung, Taiwan, 15–17 November 2021; pp. 267–275.

- Park, J.H. Effects of virtual reality-based spatial cognitive training on hippocampal function of older adults with mild cognitive impairment. Int. Psychogeriatr. 2022, 34, 157–163.

- Sacco, K.; Ronga, I.; Perna, P.; Cicerale, A.; Del Fante, E.; Sarasso, P.; Geminiani, G.C. A virtual navigation training promotes the remapping of space in allocentric coordinates: Evidence from behavioral and neuroimaging data. Front. Hum. Neurosci. 2022, 16, 693968.

- Cates, C.U.; Lönn, L.; Gallagher, A.G. Prospective, randomised and blinded comparison of proficiency-based progression full-physics virtual reality simulator training versus invasive vascular experience for learning carotid artery angiography by very experienced operators. BMJ Simul. Technol. Enhanc. Learn. 2016, 2, 1–5.

- Izzetoglu, K.; Ayaz, H.; Merzagora, A.; Izzetoglu, M.; Shewokis, P.A.; Bunce, S.C.; Pourrezaei, K.; Rosen, A.; Onaral, B. The evolution of field deployable fNIR spectroscopy from bench to clinical settings. J. Innov. Opt. Health Sci. 2011, 4, 239–250.

- Shewokis, P.A.; Ayaz, H.; Curtin, A.; Izzetoglu, K.; Onaral, B. Brain in the loop learning using functional near infrared spectroscopy. In Proceedings of the 5th International Conference on Augmented Cognition, Las Vegas, NV, USA, 21–26 July 2013; Volume 8027, pp. 381–389.

- Galoyan, T.; Betts, K.; Abramian, H.; Reddy, P.; Izzetoglu, K.; Shewokis, P.A. Examining mental workload in a spatial navigation transfer game via functional near infrared spectroscopy. Brain Sci. 2021, 11, 45.

- Liang, L.Y.; Getchell, N.; Shewokis, P.A. Brain activation in the prefrontal cortex during motor and cognitive tasks in adults. J. Behav. Brain Sci. 2016, 6, 463–474.

- Fishburn, F.A.; Norr, M.E.; Medvedev, A.V.; Vaidya, C.J. Sensitivity of fNIRS to cognitive state and load. Front. Hum. Neurosci. 2014, 8, 76.

- Shewokis, P.A.; Ayaz, H.; Panait, L.; Liu, Y.; Syed, M.; Greenawald, L.; Shariff, F.U.; Castellanos, A.; Lind, D.S. Brain-in-the-loop learning using fNIR and simulated virtual reality surgical tasks: Hemodynamic and behavioral effects. In Proceedings of the 9th International Conference on Augmented Cognition, Los Angeles, CA, USA, 2–7 August 2015; Volume 9183, pp. 324–355.

- Shewokis, P.A.; Shariff, F.U.; Liu, Y.; Ayaz, H.; Castellanos, A.; Lind, D.S. Acquisition, retention, and transfer of simulated laparoscopic tasks using fNIR and a contextual interference paradigm. Am. J. Surg. 2017, 213, 336–345.

- Aksoy, E.; Izzetoglu, K.; Baysoy, E.; Agrali, A.; Kitapcioglu, D.; Onaral, B. Performance monitoring via functional near infrared spectroscopy for virtual reality based basic life support training. Front. Neurosci. 2019, 13, 1336.

- Liu, Y.; Ayaz, H.; Shewokis, P.A. Mental workload classification with concurrent electroencephalography and functional near-infrared spectroscopy. Brain Comput. Interfaces 2017, 4, 175–185.

- Getchell, N.; Shewokis, P.A. Understanding the role of cognitive effort within contextual interference paradigms: Theory, measurement, and tutorial. Braz. J. Mot. Behav. 2023, 17, 59–69.

- Reddy, P.; Izzetoglu, M.; Shewokis, P.A.; Sangobowale, M.; Diaz-Arrastia, R.; Izzetoglu, K. Evaluation of fNIRS signal components elicited by cognitive and hypercapnic stimuli. Sci. Rep. 2021, 11, 23457.

- Ayaz, H.; Shewokis, P.A.; Curtin, A.; Izzetoglu, M.; Izzetoglu, K.; Onaral, B. Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation. J. Vis. Exp. 2011, 8, 3443.

- Izzetoglu, M.; Izzetoglu, K. Real Time Artifact Removal. Google Patent US20140372081A1, 18 December 2014. Available online: https://patents.google.com/patent/US20140372081 (accessed on 14 July 2022).

- Villringer, A.; Chance, B. Non invasive optical spectroscopy and imaging of human brain function. Trends Neurosci. 1997, 20, 435–442.

- Pinheiro, J.; Bates, D.; R Core Team. nlme: Linear and Nonlinear Mixed Effects Models. R package version 3.1-162. 2023. Available online: https://CRAN.R-project.org/package=nlme (accessed on 15 May 2023).

- R Core Team. A Language and Environment for Statistical Computing. R Foundation for Statistical Computing. 2020. Available online: https://www.eea.europa.eu/data-and-maps/indicators/oxygen-consuming-substances-in-rivers/r-development-core-team-2006 (accessed on 31 March 2023).

- Lenth, R. Estimated Marginal Means, aka Least Squares Mean. 2020. Available online: https://cran.r-project.org/web/packages/emmeans/index.html (accessed on 15 April 2023).

- Westfall, J.; Kenny, D.A.; Judd, C.M. Statistical power and optimal design in experiments in which samples of participants respond to samples of stimuli. J. Exp. Psychol. Gen. 2014, 143, 2020–2045.

- Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive architecture and instructional design: 20 years later. Educ. Psychol. Rev. 2019, 31, 261–292.

- Reddy, P.; Shewokis, P.A.; Izzetoglu, K. Can variability of brain activity serve as a metric for assessing human performance during UAS dual-task training. In Proceedings of the 2022 IEEE 3rd International Conference on Human-Machine Systems (ICHMS), Orlando, FL, USA, 17–19 November 2022; pp. 1–5.

- Kerr, J.; Reddy, P.; Shewokis, P.A.; Izzetoglu, K. Cognitive workload impacts of simulated visibility changes during search and surveillance tasks quantified by Functional Near Infrared Spectroscopy. IEEE Trans. Hum. Mach. Syst. 2022, 52, 658–667.

- Izzetoglu, K.; Bunce, S.; Onaral, B.; Pourrezaei, K.; Chance, B. Functional optical brain imaging using near-infrared during cognitive tasks. Int. J. Hum. Comput. Interact. 2004, 17, 211–227.

- Whelan, R.R. Neuroimaging of cognitive load in instructional multimedia. Educ. Res. Rev. 2007, 2, 1–12.

- Zhu, X.; Korivand, S.; Hamill, K.; Jalili, N.; Gong, J. A comprehensive decoding of cognitive load. Smart Health 2022, 26, 100336.

- NIH Cognitive Toolbox URL © 2022 Toolbox Assessments, Inc. Reserved. Available online: https://www.healthmeasures.net/explore-measurement-systems/nih-toolbox/intro-to-nih-toolbox/cognition (accessed on 21 April 2023).

- Makransky, G.; Terkildsen, T.S.; Mayer, R.E. Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learn. Instr. 2019, 60, 225–236.

- Kakkos, I.; Dimitrakopoulos, G.N.; Gao, L.; Zhang, Y.; Qi, P.; Matsopoulos, G.K.; Thakor, N.; Bezerianos, A.; Sun, Y. Mental workload drives different reorganizations of functional cortical connectivity between 2D and 3D simulated flight experiments. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1704–1713.

- Sun, J.; Cheng, S.; Ma, J.; Xiong, K.; Su, M.; Hu, W. Assessment of the static upright balance index and brain blood oxygen levels as parameters to evaluate pilot workload. PLoS ONE 2019, 14, e0214277.

- Oberhauser, M.; Dreyer, D.; Braunstingl, R.; Koglbauer, I. What’s real about virtual reality flight simulation? Comparing the fidelity of a virtual reality with a conventional flight simulation environment. Aviat. Psychol. Appl. Hum. Factors 2018, 8, 22–34.

- Chao, C.J.; Wu, S.Y.; Yau, Y.J.; Feng, W.Y.; Tseng, F.Y. Effects of three-dimensional virtual reality and traditional training methods on mental workload and training performance. Hum. Factors Ergon. Manuf. Serv. Ind. 2017, 27, 187–196.

- Jeffri, N.F.S.; Awang Rambli, D.R. A review of augmented reality systems and their effects on mental workload and task performance. Heliyon 2021, 7, e06277.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).