Neuroplasticity Meets Artificial Intelligence: A Hippocampus-Inspired Approach to the Stability–Plasticity Dilemma

Abstract

1. Introduction

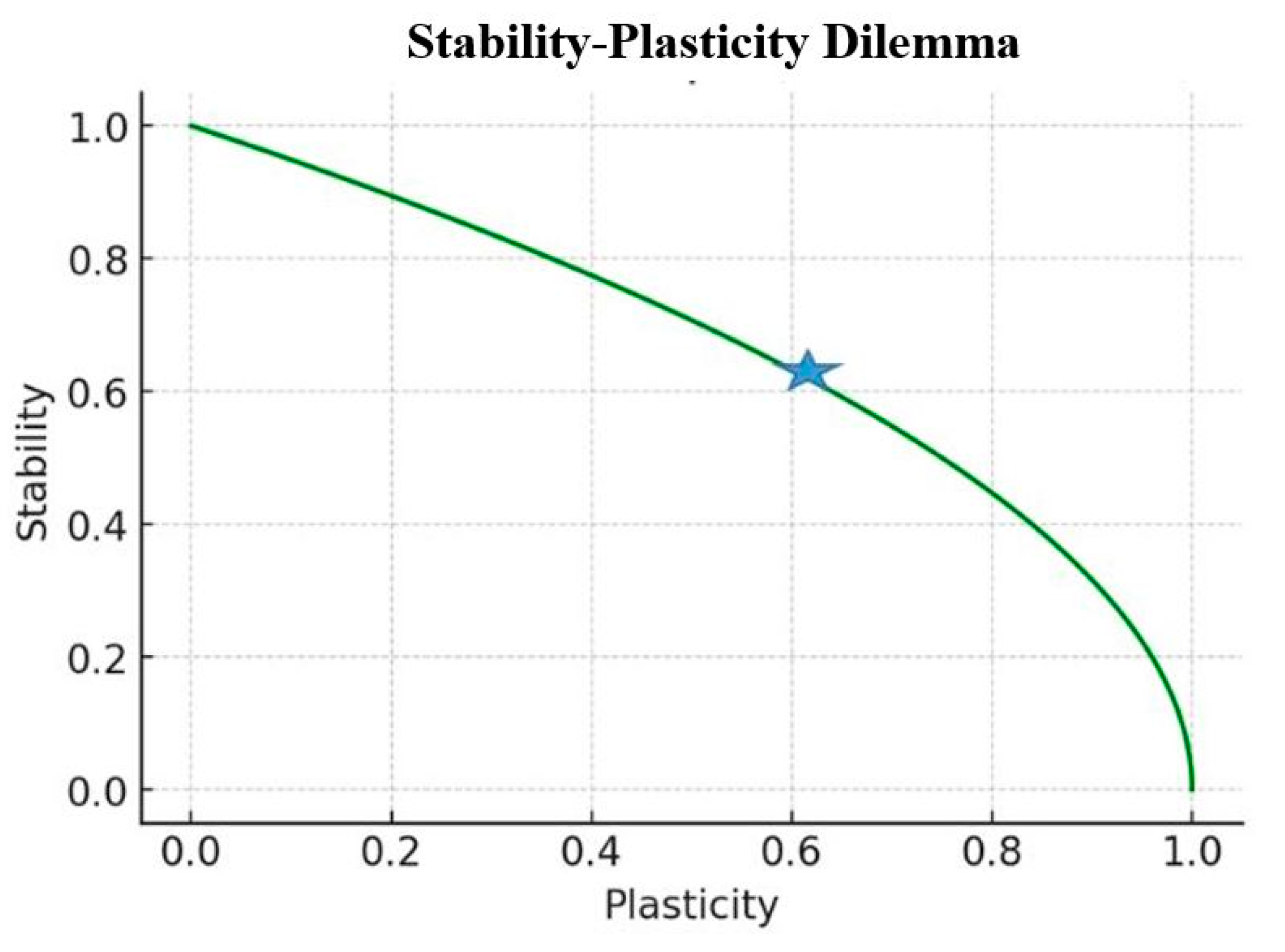

- The current state of the stability–plasticity dilemma in AI and its parallels in neuroscience.

- Proposed AI architectures inspired by the hippocampus–cortex system, emphasizing dual learning rates and offline consolidation mechanisms.

- Potential implications and challenges in implementing these biologically inspired approaches in AI systems.

- Future directions for research at the intersection of neuroscience and AI, with a focus on continual learning and knowledge integration.

2. The Hippocampus–Cortex System

2.1. Key Concepts and Terminology

2.2. Complementary Learning Systems

2.3. Biological Solutions to the Stability–Plasticity Dilemma

2.3.1. Complementary Network Events: SWRs and BARRs

- SWRs: Sharp wave-ripples (SWRs) have long been recognized as crucial for memory consolidation. During these events, neurons that were active during recent experiences are reactivated in a time-compressed manner [13]. This reactivation strengthens the neural connections associated with new memories, facilitating their integration into existing knowledge structures in the neocortex.

- BARRs: Karaba and colleagues [13] discovered that barrages of action potentials (BARRs) play a complementary role to SWRs. BARRs involve the synchronized firing of a subset of CA2 pyramidal cells and cholecystokinin-expressing (CCK+) basket cells. Crucially, neurons and assemblies that were active during learning and subsequently reactivated during SWRs are inhibited during BARRs.

2.3.2. Balancing Act: Selective Strengthening and Network Stability

- Plasticity through SWRs: SWRs promote plasticity by selectively reactivating and strengthening neural patterns associated with recent, important experiences. This process allows for the rapid acquisition and initial consolidation of new memories.

- Stability through BARRs: BARRs contribute to network stability by inhibiting the same neurons and assemblies that were previously activated. This inhibition may serve several crucial functions: (a) Preventing runaway excitation: By dampening the activity of recently strengthened neural patterns, BARRs may prevent the excessive amplification of new memories, which could otherwise lead to network instability. (b) Maintaining relative synaptic weights: The inhibition during BARRs might help preserve the relative importance of different memories by preventing the over-strengthening of the most recent experiences. (c) Facilitating integration: By temporarily suppressing recently acquired information, BARRs may create opportunities for older, related memories to be activated and integrated with new learning.

- Dynamic regulation: Karaba and colleagues [13] found that the initial increase in reactivation during SWRs gradually returned to baseline levels over the course of sleep. This trend was abolished when CCK+ basket cells were silenced during BARRs, resulting in higher synchrony of the CA1 assemblies and impaired memory consolidation. This suggests that the interplay between SWRs and BARRs is crucial for appropriately balancing synaptic strengthening and network stability over time.
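The balance described in the list above can be illustrated with a deliberately simple toy model. This is a sketch under stated assumptions, not a model from Karaba and colleagues [13]: the function name `simulate_reactivation`, the update rules, and all parameter values (`swr_gain`, `barr_inhibition`, `baseline`, `initial`) are hypothetical choices made only to show how alternating amplification and inhibition can return a reactivation measure to baseline, and how removing the inhibition step removes that return.

```python
def simulate_reactivation(n_cycles=40, swr_gain=0.05, barr_inhibition=0.15,
                          baseline=1.0, initial=2.0):
    """Toy trace of reactivation strength over alternating SWR/BARR-like events.

    All dynamics and parameters are illustrative assumptions.
    """
    strength = initial
    trace = [strength]
    for _ in range(n_cycles):
        # SWR-like step: reactivation amplifies whatever sits above baseline.
        strength += swr_gain * (strength - baseline)
        # BARR-like step: inhibition removes a fraction of that excess.
        strength -= barr_inhibition * (strength - baseline)
        trace.append(strength)
    return trace


with_barr = simulate_reactivation()
# Setting the inhibition to zero loosely mimics silencing the BARR-like step.
without_barr = simulate_reactivation(barr_inhibition=0.0)

print(f"final strength with BARR-like inhibition:    {with_barr[-1]:.3f}")
print(f"final strength without BARR-like inhibition: {without_barr[-1]:.3f}")
```

In this toy setting the excess above baseline shrinks on every cycle only because the inhibition fraction outweighs the amplification gain; with the inhibition set to zero the excess keeps growing, which is one way to picture the runaway excitation mentioned above.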

2.3.3. Implications for Artificial Intelligence

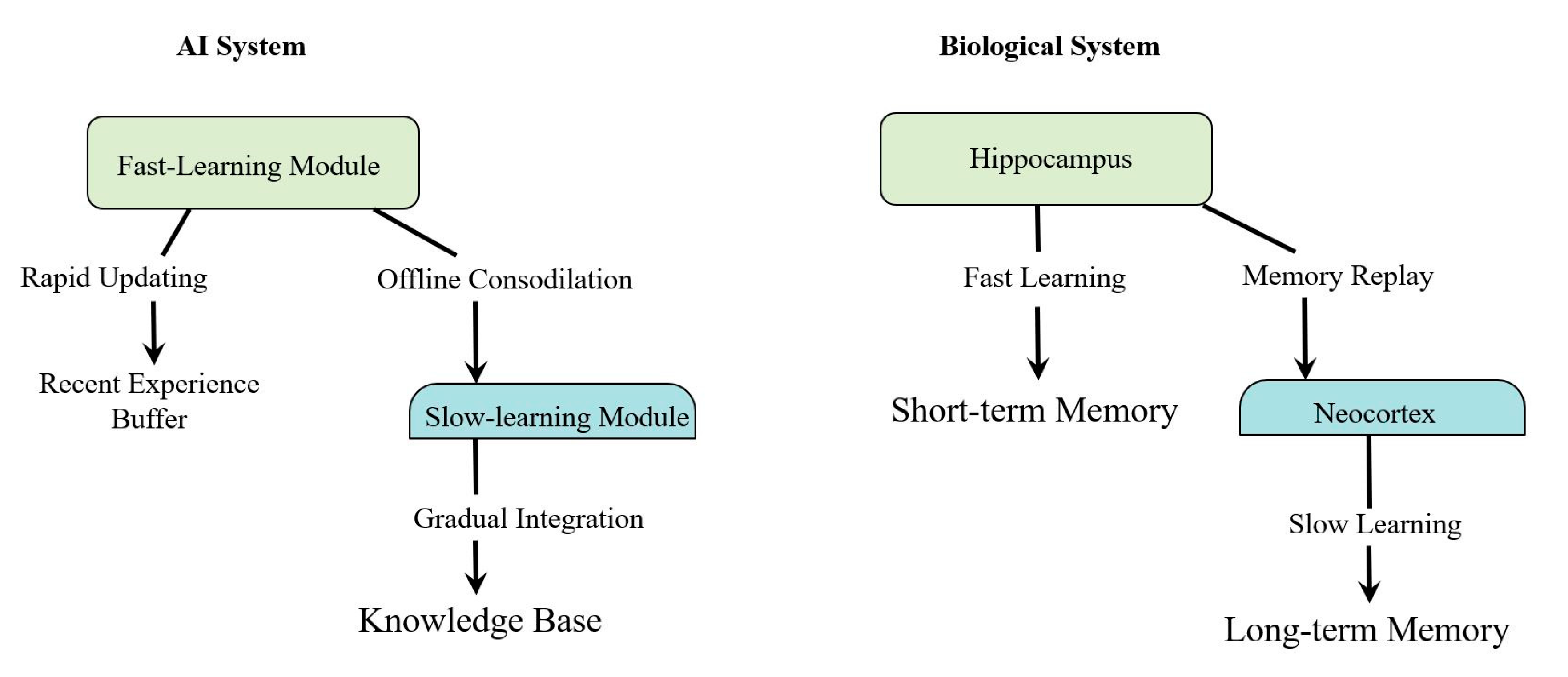

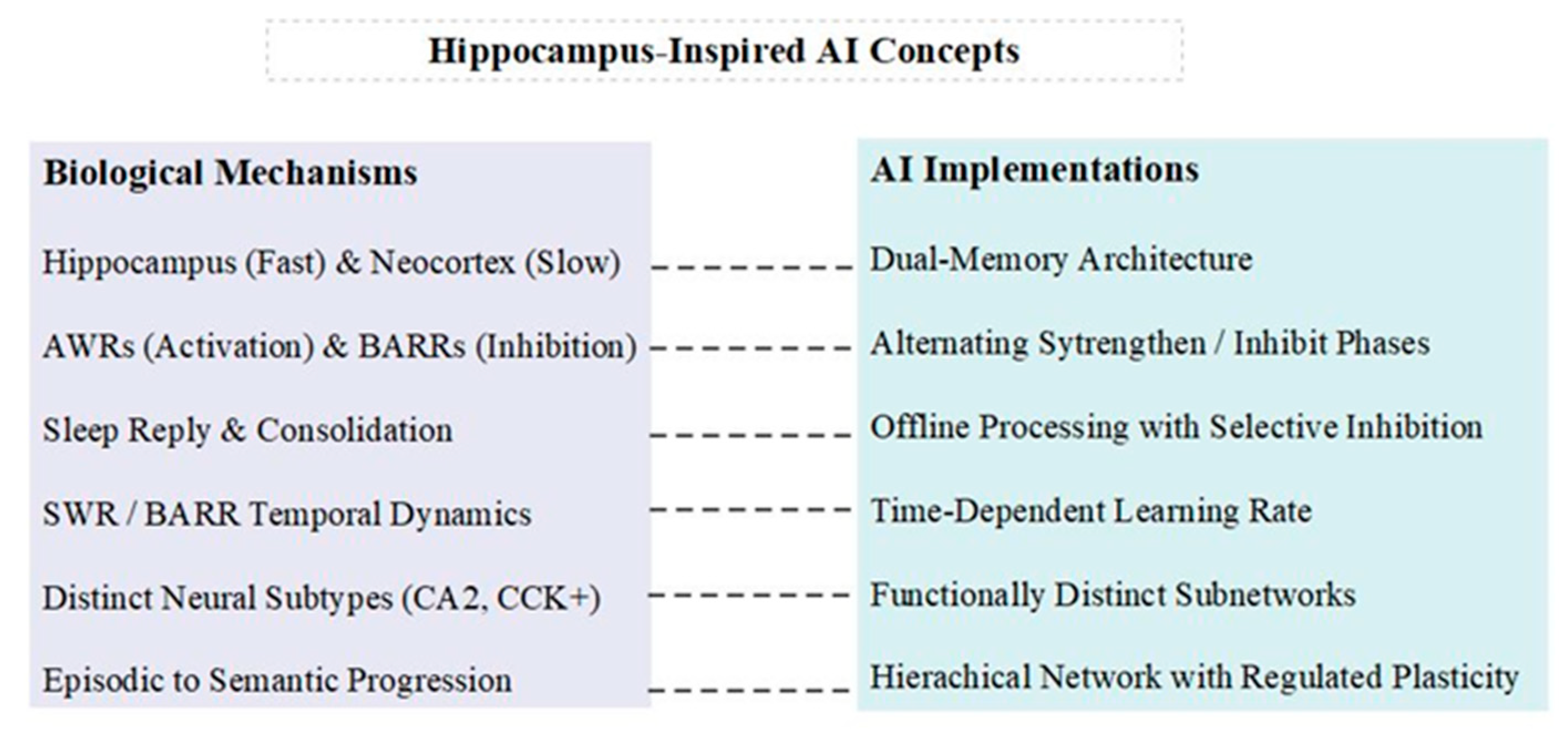

- Dual Learning Rates and Complementary Processes: The combination of fast hippocampal learning with slow cortical integration provides a model for balancing quick adaptation with long-term stability. AI systems could implement dual-memory architectures with different learning rates to mimic this biological strategy [17]. Furthermore, inspired by the complementary roles of SWRs and BARRs, AI systems could incorporate alternating phases of activation and targeted inhibition during the consolidation process. Implementation: Design a neural network with two interacting components: a fast-learning module (analogous to the hippocampus) and a slow-learning module (analogous to the neocortex). The training regime should alternate between strengthening recent patterns (inspired by SWRs) and selectively inhibiting these same patterns (inspired by BARRs) to prevent overfitting and maintain network stability.

- Offline Consolidation with Selective Inhibition: The use of replay for gradual knowledge integration during periods of rest or low activity could inspire similar mechanisms in AI [18,19]. This could involve periodic “sleep” phases where the AI system consolidates recent experiences into a more stable knowledge base. The discovery of BARRs suggests that this process should include not only the reactivation of recent experiences but also their selective inhibition. Implementation: Design an AI system with dedicated offline processing periods that implement both prioritized experience replay (inspired by SWRs) and a novel “selective inhibition” mechanism (inspired by BARRs) that temporarily downregulates the influence of recently strengthened connections.

- Dynamic Regulation of Plasticity: The temporal dynamics observed in the interplay between SWRs and BARRs, where reactivation strength gradually returns to baseline, suggest that AI systems could benefit from the dynamic regulation of plasticity over time. Implementation: Develop a time-dependent learning rate that starts high for new information and gradually decreases, mimicking the biological return to baseline activation. This could be coupled with a complementary inhibition strength that increases over time for recently learned patterns.

- Circuit-Specific Mechanisms: The distinct roles of different neural subtypes (e.g., CA2 pyramidal cells and CCK+ basket cells) in BARRs highlight the potential benefits of incorporating diverse, functionally specialized components in AI architectures. Implementation: Design AI architectures with functionally distinct subnetworks that play different roles in learning and consolidation. For example, one subnetwork could specialize in the rapid encoding of new information, while another focuses on selectively inhibiting recent patterns to maintain overall network stability.

- Contextual Representation with Balanced Plasticity: The hippocampus’s ability to form distinct representations of similar experiences (pattern separation) while also generalizing across contexts (pattern completion) could inform the development of more flexible AI learning algorithms [20,21]. The addition of BARR-inspired mechanisms could help maintain the stability of these representations over time. Implementation: Develop AI systems with enhanced abilities to create distinct contextual representations, coupled with a mechanism for selectively strengthening or inhibiting these representations based on their recency and importance.

- Hierarchical Organization with Regulated Plasticity: The progression from specific episodic memories in the hippocampus to more abstract, semantic knowledge in the cortex suggests a hierarchical organization of knowledge. This principle could be applied to develop AI architectures that build increasingly abstract representations over time, with BARR-inspired mechanisms helping to regulate plasticity at different levels of the hierarchy. Implementation: Create a hierarchical neural network where lower levels capture specific details, and higher levels represent more general concepts. Implement different plasticity rules at each level, with BARR-inspired inhibition more strongly regulating lower, more plastic levels to prevent overfitting to recent experiences.
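Several of the implementation ideas listed above (a fast store and a slow store, alternating replay and inhibition, and a time-dependent plasticity schedule) can be combined in one minimal sketch. Everything here is an illustrative assumption rather than a method taken from the cited work: the names `plasticity_schedule` and `DualRateMemory`, the exponential form of the schedule, and the parameter values `lr0`, `tau`, and `inhibition_max` are hypothetical.

```python
import math


def plasticity_schedule(age, lr0=0.2, tau=5.0, inhibition_max=0.3):
    """Return (replay learning rate, inhibition strength) for a pattern of a given age.

    Assumed form: the learning rate decays exponentially with age while the
    inhibition strength rises toward inhibition_max.
    """
    decay = math.exp(-age / tau)
    return lr0 * decay, inhibition_max * (1.0 - decay)


class DualRateMemory:
    """Fast episodic store plus slowly updated weights (hypothetical sketch)."""

    def __init__(self, dim):
        self.slow = [0.0] * dim   # slow, "neocortex-like" store
        self.episodes = []        # fast, "hippocampus-like" store

    def encode(self, pattern):
        # Fast module: one-shot storage of the new pattern at full replay gain.
        self.episodes.append({"pattern": list(pattern), "gain": 1.0, "age": 0})

    def sleep_cycle(self):
        for ep in self.episodes:
            replay_lr, inhibition = plasticity_schedule(ep["age"])
            # SWR-inspired phase: replay nudges the slow store toward the pattern.
            step = replay_lr * ep["gain"]
            self.slow = [s + step * (p - s)
                         for s, p in zip(self.slow, ep["pattern"])]
            # BARR-inspired phase: damp the replay gain of this same pattern.
            ep["gain"] *= 1.0 - inhibition
            ep["age"] += 1


memory = DualRateMemory(dim=2)
memory.encode([1.0, -1.0])
for _ in range(30):
    memory.sleep_cycle()

print("slow store:", memory.slow)
print("remaining replay gain:", memory.episodes[0]["gain"])
```

In this sketch the slow store moves only partway toward the new pattern before the pattern's replay gain fades, so a single recent experience cannot overwrite the slow weights. Whether such a scheme helps in a real network is an open empirical question.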

2.3.4. Neuronal Representations and Plasticity

- Cell-type specific roles: The study found that deep CA2 pyramidal cells were more active during BARRs, while superficial CA2 cells were more active during SWRs. This anatomical and functional segregation suggests highly specialized roles for different neuronal subpopulations in memory processing.

- Differential plasticity regulation: During BARRs, CA1 neurons that had increased their activity during learning were selectively inhibited. This mechanism appears to provide a counterbalance to the strengthening of synapses that occurs during SWR-associated replay.

- Dynamic plasticity modulation: The alternation between SWRs and BARRs creates a dynamic modulation of neuronal plasticity. Synapses associated with recent learning are strengthened during SWRs and then selectively suppressed during BARRs, potentially preventing runaway excitation and maintaining network stability.

- Time-dependent plasticity changes: Karaba and colleagues [13] observed that the reactivation of learning-related neural patterns during SWRs gradually decreased over the course of sleep, returning to baseline levels. This suggests a time-dependent regulation of plasticity, where initial strong reactivations give way to more stabilized representations.

- Interneuron-mediated plasticity control: The study highlighted the crucial role of CCK+ interneurons in regulating plasticity. These cells were highly active during BARRs but not during SWRs, suggesting they play a key role in the selective inhibition of recently potentiated synapses.

2.4. Synaptic Plasticity and Long-Term Potentiation

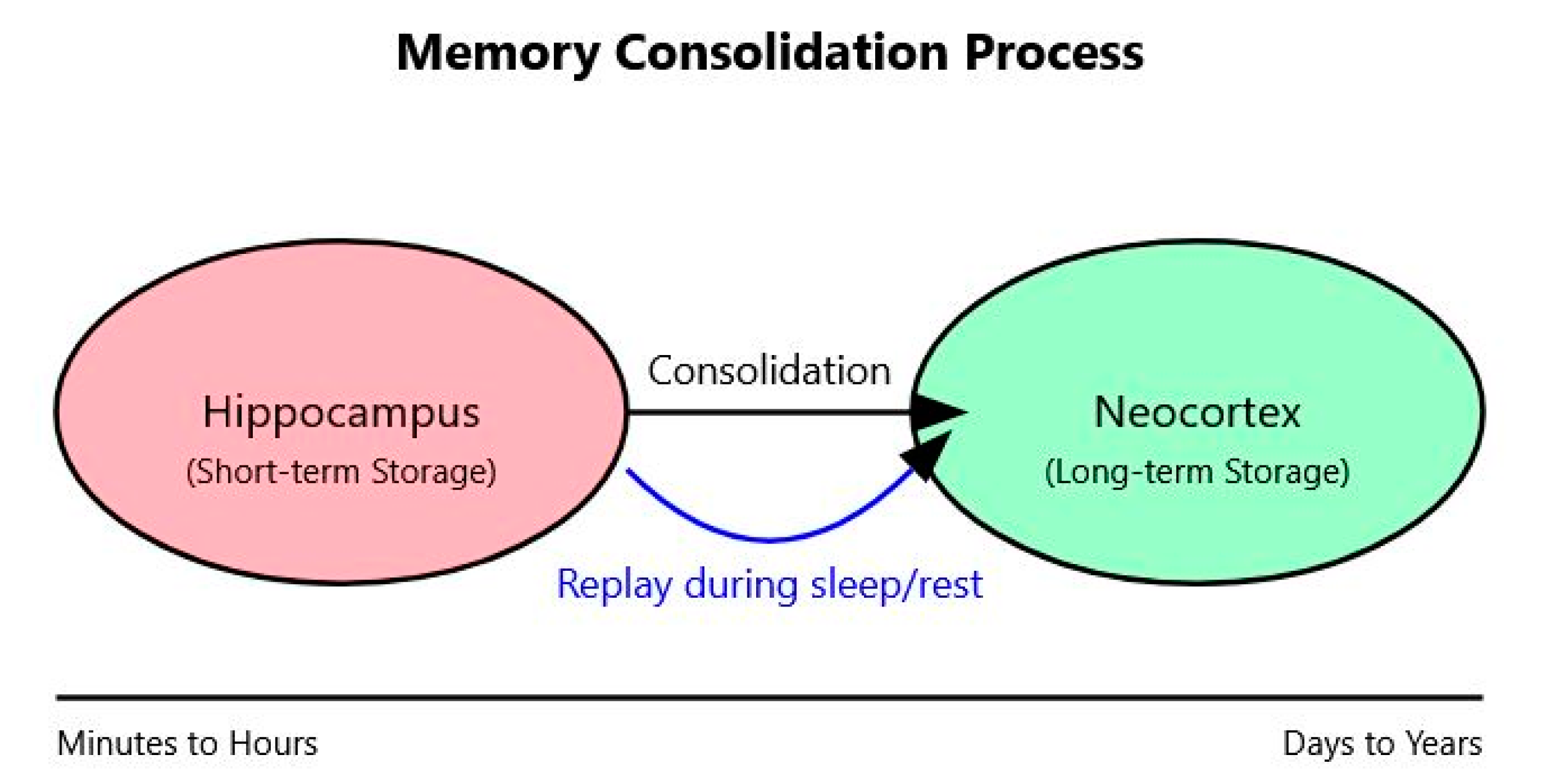

2.5. Memory Consolidation and Replay

- Offline Processing: AI systems engage in internal computations to consolidate and optimize learning, analogous to memory consolidation during sleep [9].

- Experience Replay: Inspired by hippocampal replay, AI systems reprocess previous experiences to reinforce learning and integrate new information with existing knowledge [31].

- Model Consolidation: In continual learning setups, “sleep” phases consolidate knowledge from recent learning into a more stable, general model, mirroring hippocampal–neocortical dialogue [15].

- Regularization and Pruning: AI systems maintain network efficiency through processes analogous to synaptic homeostasis in neuroscience [31].
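The experience replay item above can be made concrete with a small buffer that samples stored experiences in proportion to a priority value. This is a simplified sketch, assuming priority-proportional sampling without the importance-sampling corrections used in full prioritized replay; the class name `ReplayBuffer`, its methods, and the example experiences are hypothetical.

```python
import random


class ReplayBuffer:
    """Fixed-capacity buffer with priority-proportional sampling (illustrative)."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.priorities = []
        self.rng = random.Random(seed)  # seeded for reproducible sampling

    def add(self, experience, priority=1.0):
        if len(self.items) >= self.capacity:
            # Drop the oldest experience once the buffer is full.
            self.items.pop(0)
            self.priorities.pop(0)
        self.items.append(experience)
        self.priorities.append(float(priority))

    def sample(self, k):
        # Experiences with higher priority are replayed more often.
        return self.rng.choices(self.items, weights=self.priorities, k=k)


buffer = ReplayBuffer(capacity=3)
for name in ["a", "b", "c", "d"]:
    buffer.add(name)

salience = ReplayBuffer(capacity=10)
salience.add("routine", priority=0.0)
salience.add("salient", priority=5.0)
replayed = salience.sample(20)
print(buffer.items, set(replayed))
```

During an offline phase, a learner would draw batches from such a buffer and train on them alongside or instead of new data. How priorities are assigned (for example by prediction error or recency) is a design choice left open here.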

2.6. Contextual Representation and Pattern Separation

3. Current Challenges in AI: Catastrophic Forgetting

- Elastic Weight Consolidation (EWC): This method slows down learning on weights crucial to previously seen tasks, akin to how the brain consolidates important memories [33].

- Memory replay techniques: Inspired by hippocampal replay, these methods periodically revisit and retrain on past experiences, reinforcing previously learned knowledge [34].

- Model storage and revisiting: This approach involves saving snapshots of the model at various training stages and selectively fine-tuning them later, aligning with the concept of memory rehearsal in cognitive psychology [34].
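As a concrete illustration of the first item above, EWC is usually written as a quadratic penalty that anchors each weight to its value after the earlier task, scaled by an importance estimate (the diagonal of the Fisher information in Kirkpatrick et al., listed in the references). The sketch below assumes that form; the function names, the list-based parameters, and the numbers in the usage lines are hypothetical and chosen only for illustration.

```python
def ewc_penalty(params, anchor_params, fisher, lam=1.0):
    """Quadratic EWC-style penalty: (lam / 2) * sum_i F_i * (theta_i - theta*_i)^2."""
    return 0.5 * lam * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )


def ewc_gradient(params, anchor_params, fisher, lam=1.0):
    """Gradient of the penalty with respect to each parameter."""
    return [lam * f * (p - a) for p, a, f in zip(params, anchor_params, fisher)]


# Hypothetical values: the first weight mattered a lot for the old task,
# the second hardly at all.
theta_old = [1.0, -2.0]
fisher = [10.0, 0.1]
theta = [1.5, 0.0]

print("penalty:", ewc_penalty(theta, theta_old, fisher))
print("gradient:", ewc_gradient(theta, theta_old, fisher))
```

Adding this penalty to the new task's loss makes changes to important weights expensive while leaving unimportant weights free to adapt, which is the sense in which learning is slowed on weights crucial to previously seen tasks.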

4. The Grand Challenge: Integrating Diverse AI Solutions

- Architectural Integration: Developing AI architectures that incorporate both fast and slow learning components, inspired by the hippocampus and neocortex respectively. This involves creating systems that can rapidly encode new experiences while gradually integrating this information into a stable knowledge base [5].

- Balancing Plasticity and Stability: Implementing mechanisms that dynamically adjust the balance between rapid learning and long-term stability, analogous to the interplay between SWRs and BARRs observed in the hippocampus [13].

- Memory Consolidation and Replay: Incorporating offline processing periods that allow for the gradual integration of new information into existing knowledge structures, inspired by hippocampal replay during sleep [35].

- Hierarchical Knowledge Organization: Developing architectures that support the progression from specific episodic memories to more abstract, semantic knowledge, reflecting the hippocampus–neocortex dialogue [3].

- Dual-process learning algorithms that alternate between phases of rapid acquisition and selective inhibition.

- Hierarchical neural networks with regulated plasticity at different levels.

- Meta-learning approaches that continuously adapt learning strategies based on task demands and past experiences.

5. Lessons from Neuroscience: Synthesizing Hippocampus-Inspired AI Concepts

6. Future Directions: The Promise and Challenges of Brain-Inspired AI

- Learning continuously without forgetting, adapting quickly to new situations while maintaining a stable knowledge base [5].

- Handling noisy or incomplete data more effectively through pattern separation and completion mechanisms [9].

- Engaging in episodic memory and mental time travel, enabling more context-rich decision-making and creativity [27].

- Integrating emotions and value judgments for a more nuanced understanding of human preferences [55].

- Bridging the gap between biological and artificial neural networks [56].

- Scaling brain-inspired principles to handle vast amounts of data and complex tasks.

- Achieving the energy efficiency of the human brain in artificial systems [57].

- Translating the temporal dynamics of biological neural networks into artificial systems [58].

- Integrating various brain-inspired mechanisms into a cohesive system [59].

- Determining the appropriate level of biological mimicry [8].

- Addressing the ethical implications of advanced AI systems [60].

7. Testable Predictions

8. Conclusions: Charting the Course for Brain-Inspired AI

Author Contributions

Funding

Conflicts of Interest

References

1. Mermillod, M.; Bugaiska, A.; Bonin, P. The stability–plasticity dilemma: Investigating the continuum from catastrophic forgetting to age-limited learning effects. Front. Psychol. 2013, 4, 504.

2. Schrittwieser, J.; Antonoglou, I.; Hubert, T.; Simonyan, K.; Sifre, L.; Schmitt, S.; Guez, A.; Lockhart, E.; Hassabis, D.; Graepel, T.; et al. Mastering Atari, Go, chess and shogi by planning with a learned model. Nature 2020, 588, 604–609.

3. McClelland, J.L.; McNaughton, B.L.; O’Reilly, R.C. Why there are complementary learning systems in the hippocampus and neocortex: Insights from the successes and failures of connectionist models of learning and memory. Psychol. Rev. 1995, 102, 419–457.

4. Wirtshafter, H.S.; Wilson, M.A. Artificial intelligence insights into hippocampal processing. Front. Comput. Neurosci. 2022, 16, 1044659.

5. Kumaran, D.; Hassabis, D.; McClelland, J.L. What learning systems do intelligent agents need? Complementary learning systems theory updated. Trends Cogn. Sci. 2016, 20, 512–534.

6. Olafsdottir, H.F.; Bush, D.; Barry, C. The role of hippocampal replay in memory and planning. Curr. Biol. 2018, 28, R37–R50.

7. Gershman, S.J. The successor representation: Its computational logic and neural substrates. J. Neurosci. 2018, 38, 7193–7200.

8. Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

9. Rasch, B.; Born, J. About sleep’s role in memory. Physiol. Rev. 2013, 93, 681–766.

10. Diekelmann, S.; Born, J. The memory function of sleep. Nat. Rev. Neurosci. 2010, 11, 114–126.

11. Buzsáki, G. Hippocampal sharp wave-ripple: A cognitive biomarker for episodic memory and planning. Hippocampus 2015, 25, 1073–1188.

12. Girardeau, G.; Zugaro, M. Hippocampal ripples and memory consolidation. Curr. Opin. Neurobiol. 2011, 21, 452–459.

13. Karaba, L.A.; Robinson, H.L.; Harvey, R.E.; Chen, W.; Fernandez-Ruiz, A.; Oliva, A. A hippocampal circuit mechanism to balance memory reactivation during sleep. Science 2024, 385, 738–743.

14. Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual lifelong learning with neural networks: A review. Neural Netw. 2019, 113, 54–71.

15. O’Reilly, R.C.; Bhattacharyya, R.; Howard, M.D.; Ketz, N. Complementary learning systems. Cogn. Sci. 2014, 38, 1229–1248.

16. Kumaran, D.; McClelland, J.L. Generalization through the recurrent interaction of episodic memories: A model of the hippocampal system. Psychol. Rev. 2012, 119, 573–616.

17. Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2015, arXiv:1511.05952.

18. Kirkpatrick, J.; Pascanu, R.; Rabinowitz, N.; Veness, J.; Desjardins, G.; Rusu, A.A.; Milan, K.; Quan, J.; Ramalho, T.; Grabska-Barwinska, A.; et al. Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. USA 2017, 114, 3521–3526.

19. Rolnick, D.; Ahuja, A.; Schwarz, J.; Lillicrap, T.P.; Wayne, G. Experience replay for continual learning. Adv. Neural Inf. Process. Syst. 2019, 32, 350–360.

20. Yassa, M.A.; Stark, C.E. Pattern separation in the hippocampus. Trends Neurosci. 2011, 34, 515–525.

21. Rolls, E.T. The mechanisms for pattern completion and pattern separation in the hippocampus. Front. Syst. Neurosci. 2013, 7, 74.

22. Malenka, R.C.; Bear, M.F. LTP and LTD: An embarrassment of riches. Neuron 2004, 44, 5–21.

23. Kandel, E.R.; Dudai, Y.; Mayford, M.R. The molecular and systems biology of memory. Cell 2014, 157, 163–186.

24. Luscher, C.; Malenka, R.C. NMDA receptor-dependent long-term potentiation and long-term depression (LTP/LTD). Cold Spring Harb. Perspect. Biol. 2012, 4, a005710.

25. Huganir, R.L.; Nicoll, R.A. AMPARs and synaptic plasticity: The last 25 years. Neuron 2013, 80, 704–717.

26. Bliss, T.V.; Lømo, T. Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. J. Physiol. 1973, 232, 331–356.

27. Hassabis, D.; Kumaran, D.; Summerfield, C.; Botvinick, M. Neuroscience-inspired artificial intelligence. Neuron 2017, 95, 245–258.

28. Richards, B.A.; Lillicrap, T.P. Dendritic solutions to the credit assignment problem. Curr. Opin. Neurobiol. 2019, 54, 28–36.

29. Marblestone, A.H.; Wayne, G.; Kording, K.P. Toward an integration of deep learning and neuroscience. Front. Comput. Neurosci. 2016, 10, 94.

30. Moser, E.I.; Kropff, E.; Moser, M.B. Place cells, grid cells, and the brain’s spatial representation system. Annu. Rev. Neurosci. 2008, 31, 69–89.

31. Tononi, G.; Cirelli, C. Sleep and the price of plasticity: From synaptic and cellular homeostasis to memory consolidation and integration. Neuron 2014, 81, 12–34.

32. McCloskey, M.; Cohen, N.J. Catastrophic interference in connectionist networks: The sequential learning problem. Psychol. Learn. Motiv. 1989, 24, 109–165.

33. French, R.M. Catastrophic forgetting in connectionist networks. Trends Cogn. Sci. 1999, 3, 128–135.

34. Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental classifier and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2001–2010.

35. Wilson, M.A.; McNaughton, B.L. Reactivation of hippocampal ensemble memories during sleep. Science 1994, 265, 676–679.

36. O’Reilly, R.C.; Norman, K.A. Hippocampal and neocortical contributions to memory: Advances in the complementary learning systems framework. Trends Cogn. Sci. 2002, 6, 505–510.

37. Sprechmann, P.; Jayakumar, S.M.; Rae, J.W.; Pritzel, A.; Badia, A.P.; Uria, B.; Vinyals, O.; Hassabis, D.; Pascanu, R.; Blundell, C. Memory-based parameter adaptation. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

38. Cayco-Gajic, N.A.; Silver, R.A. Re-evaluating circuit mechanisms underlying pattern separation. Neuron 2019, 101, 584–602.

39. Tulving, E. Episodic memory: From mind to brain. Annu. Rev. Psychol. 2002, 53, 1–25.

40. Renoult, L.; Irish, M.; Moscovitch, M.; Rugg, M.D. From knowing to remembering: The semantic-episodic distinction. Trends Cogn. Sci. 2019, 23, 1041–1057.

41. Botvinick, M.M.; Niv, Y.; Barto, A.G. Hierarchically organized behavior and its neural foundations: A reinforcement learning perspective. Cognition 2009, 113, 262–280.

42. Rothschild, G.; Eban, E.; Frank, L.M. A cortical-hippocampal-cortical loop of information processing during memory consolidation. Nat. Neurosci. 2017, 20, 251–259.

43. Smith, D.M.; Bulkin, D.A. The form and function of hippocampal context representations. Neurosci. Biobehav. Rev. 2014, 40, 52–61.

44. Kinsky, N.R.; Sullivan, D.W.; Mau, W.; Hasselmo, M.E.; Eichenbaum, H.B. Hippocampal place fields maintain a coherent and flexible map across long timescales. Curr. Biol. 2018, 28, 3578–3588.

45. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

46. Friston, K. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 2010, 11, 127–138.

47. Keller, G.B.; Mrsic-Flogel, T.D. Predictive processing: A canonical cortical computation. Neuron 2018, 100, 424–435.

48. Whittington, J.C.; Bogacz, R. Theories of error back-propagation in the brain. Trends Cogn. Sci. 2019, 23, 235–250.

49. Aimone, J.B.; Li, Y.; Lee, S.W.; Clemenson, G.D.; Deng, W.; Gage, F.H. Regulation and function of adult neurogenesis: From genes to cognition. Physiol. Rev. 2014, 94, 991–1026.

50. Ge, S.; Yang, C.H.; Hsu, K.S.; Ming, G.L.; Song, H. A critical period for enhanced synaptic plasticity in newly generated neurons of the adult brain. Neuron 2007, 54, 559–566.

51. Dai, X.; Yin, H.; Jha, N.K. NeST: A neural network synthesis tool based on a grow-and-prune paradigm. IEEE Trans. Comput. 2019, 68, 1487–1497.

52. Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. Adv. Neural Inf. Process. Syst. 2015, 28, 1135–1143.

53. Colgin, L.L. Rhythms of the hippocampal network. Nat. Rev. Neurosci. 2016, 17, 239–249.

54. Yamins, D.L.; DiCarlo, J.J. Using goal-driven deep learning models to understand sensory cortex. Nat. Neurosci. 2016, 19, 356–365.

55. Phelps, E.A. Human emotion and memory: Interactions of the amygdala and hippocampal complex. Curr. Opin. Neurobiol. 2004, 14, 198–202.

56. Marcus, G. Deep learning: A critical appraisal. arXiv 2018, arXiv:1801.00631.

57. Raichle, M.E.; Gusnard, D.A. Appraising the brain’s energy budget. Proc. Natl. Acad. Sci. USA 2002, 99, 10237–10239.

58. Güçlü, U.; van Gerven, M.A. Modeling the dynamics of human brain activity with recurrent neural networks. Front. Comput. Neurosci. 2017, 11, 7.

59. Pessoa, L. Understanding brain networks and brain organization. Phys. Life Rev. 2014, 11, 400–435.

60. Bostrom, N.; Yudkowsky, E. The ethics of artificial intelligence. In The Cambridge Handbook of Artificial Intelligence; Frankish, K., Ramsey, W.M., Eds.; Cambridge University Press: Cambridge, UK, 2014; pp. 316–334.

61. Botvinick, M.; Ritter, S.; Wang, J.X.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement learning, fast and slow. Trends Cogn. Sci. 2019, 23, 408–422.

62. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

63. Ketz, N.; Morkonda, S.G.; O’Reilly, R.C. Theta coordinated error-driven learning in the hippocampus. PLoS Comput. Biol. 2013, 9, e1003067.

64. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

| Concept | Prediction | Testable Hypothesis |
|---|---|---|
| 1. Dual-Process Learning | Outperform single-process systems in rapid adaptation and retention | Higher performance in continued learning with novel tasks |
| 2. SWR–BARR Consolidation | More stable and generalizable learning | Decreased learning interference while improving skill adaptation |
| 3. Context-Dependent Representations | Improved fine discrimination | Higher accuracy in visual recognition of similar stimuli |
| 4. Time-Dependent Plasticity | More robust fine discrimination | Better retention in sequential learning tasks |
| 5. Hierarchical Knowledge | Improved generalization and reasoning | Faster learning and better skill transfer in multi-task environments |
| 6. Pattern Separation/Completion | Enhanced specific recall and generalization | Higher accuracy in few-shot transfer in multi-task environments |
| 7. Oscillation-Inspired Training | More efficient learning and consolidation | Comparable performance with fewer iterations and improved stability |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rudroff, T.; Rainio, O.; Klén, R. Neuroplasticity Meets Artificial Intelligence: A Hippocampus-Inspired Approach to the Stability–Plasticity Dilemma. Brain Sci. 2024, 14, 1111. https://doi.org/10.3390/brainsci14111111