The Development of Global-Level Categorization: Frequency Tagging EEG Responses

Abstract

1. Introduction

2. Experiment 1

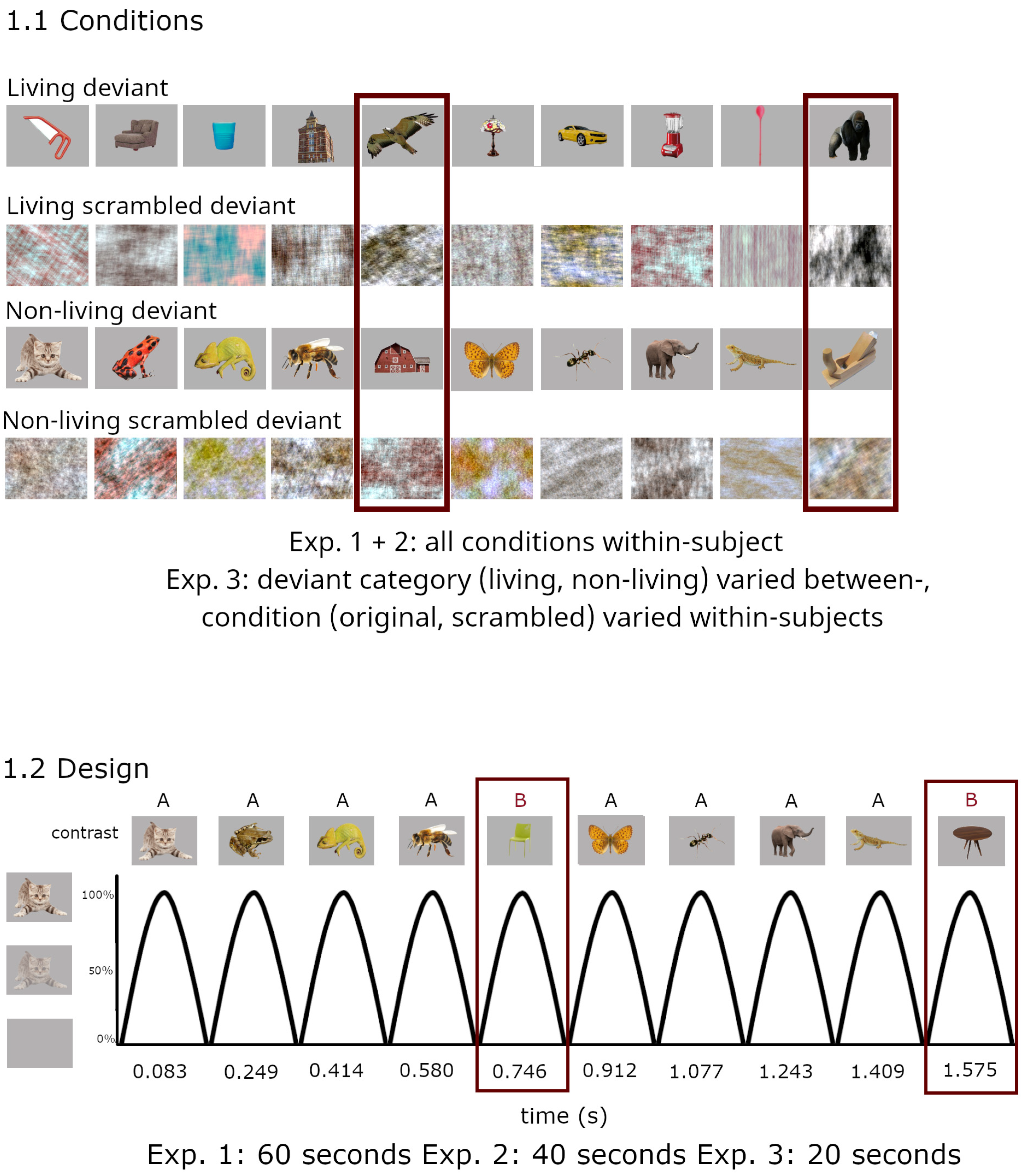

2.1. Materials and Methods

2.1.1. Participants

2.1.2. Stimuli/Presentation

2.1.3. EEG Recordings and Analyses

2.1.4. EEG Preprocessing and Frequency Domain Analyses

2.1.5. Statistical Analyses

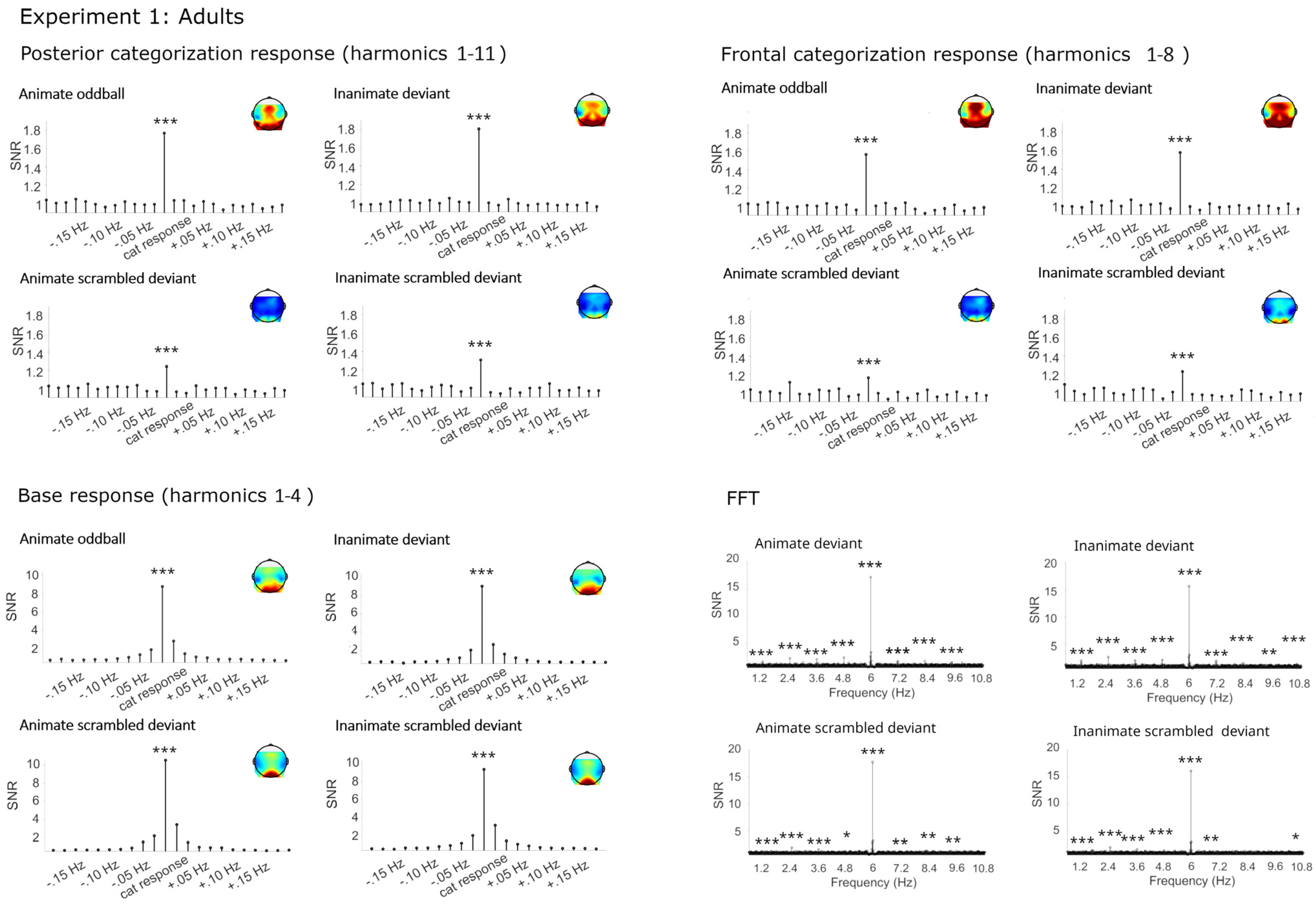

2.2. Results

2.2.1. Categorization Responses

Categorization Responses in Posterior-Occipital Cluster (Harmonics 1–11)

Categorization Response in Anterior Cluster (Harmonics 1–8)

2.2.2. Base Frequency (Harmonics 1–4)

2.3. Summary

3. Experiment 2

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Stimulation

3.1.3. EEG Analysis

3.2. Results

3.2.1. Categorization Responses

Categorization Responses in Posterior-Occipital Cluster (Harmonics 1–12)

Categorization Response in Anterior Cluster (Harmonics 1–7)

3.2.2. Base Frequency (Harmonics 1–5)

3.3. Summary

4. Experiment 3

4.1. Materials and Methods

4.1.1. Participants

4.1.2. Stimulation

4.1.3. EEG Analysis

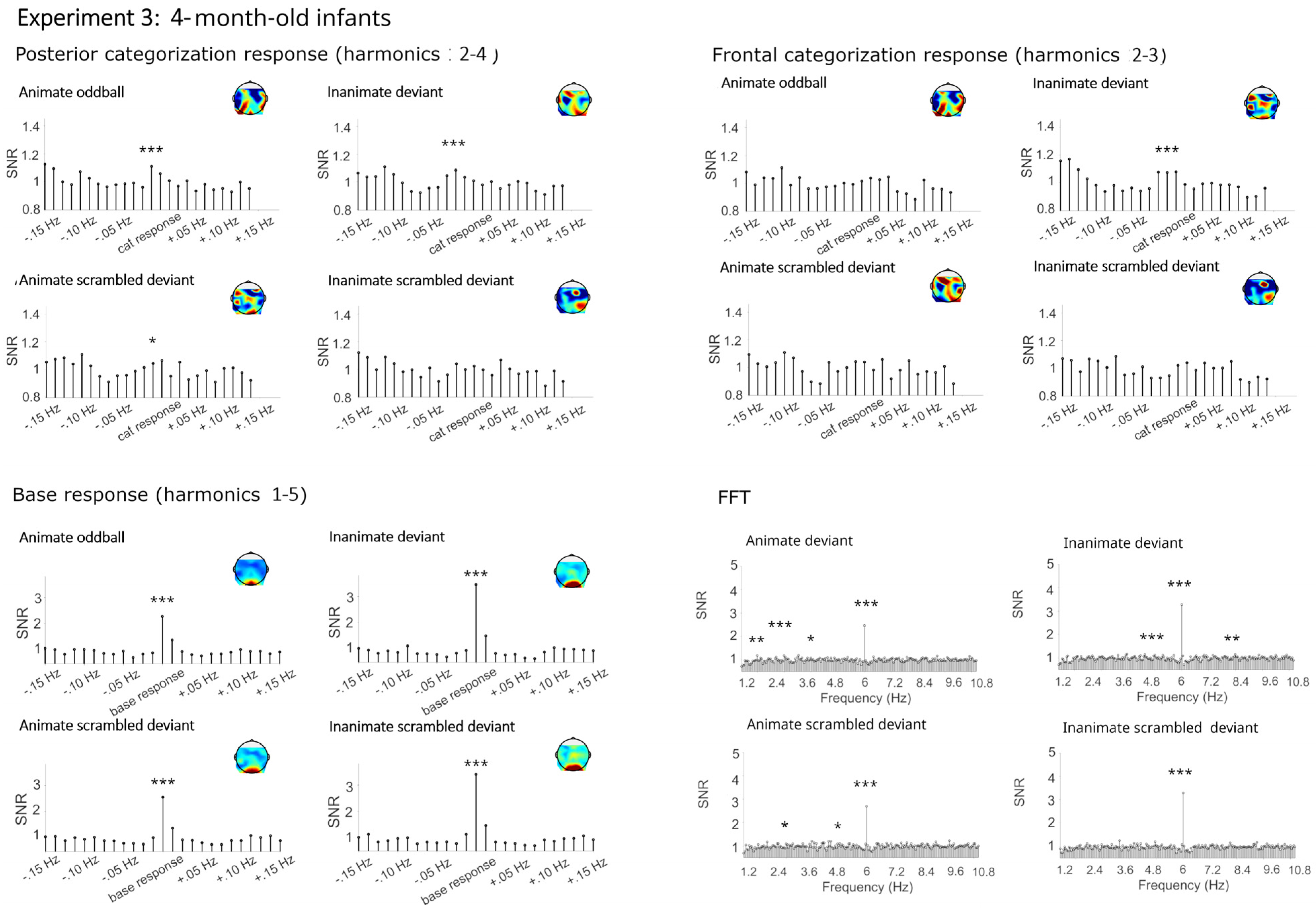

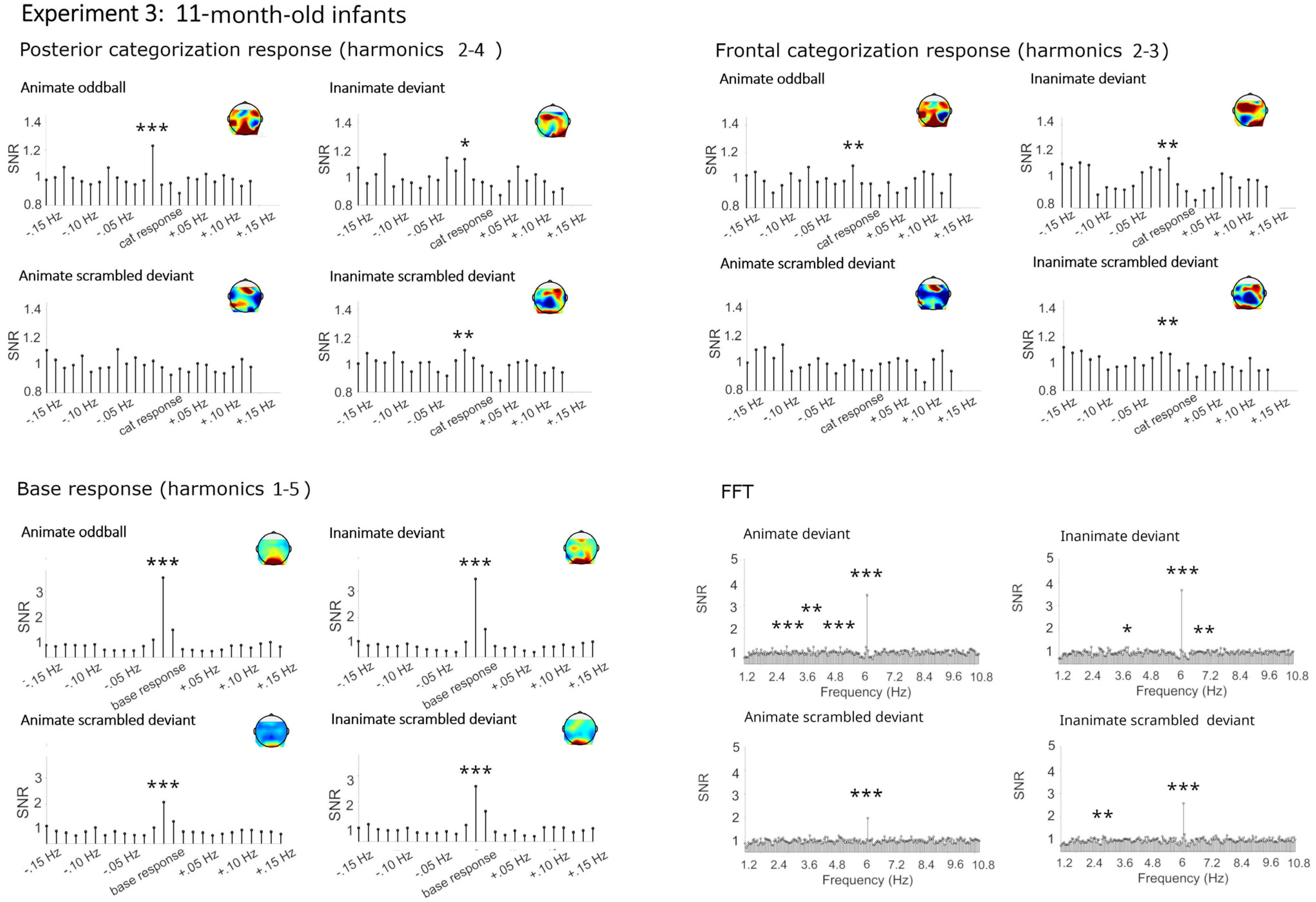

4.2. Results

4.2.1. Categorization Responses

Categorization Responses in Posterior-Occipital Cluster (Harmonics 2–4)

Categorization Response in Anterior Cluster (Harmonics 2–3)

4.2.2. Base Frequency (Harmonics 1–5)

4.3. Summary

4.4. Control Analyses

5. General Discussion

6. Conclusions

Contributions

- Neural correlates revealed the categorization of living and non-living visual objects in infants, children, and adults;

- At all ages, categorization is based on high-level visual cues;

- Categorization emerges in the posterior-occipital and frontal areas.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Response | Condition | Deviant Category | BCA Mean | BCA SD | Z-Score Range | SNR Range |
|---|---|---|---|---|---|---|
| Posterior categorization response (harmonics 1–11) | Original | Animal | 0.38 | 0.12 | 3.05–16.79 (100%) | 1.30–2.34 |
| Posterior categorization response (harmonics 1–11) | Original | Furniture | 0.41 | 0.14 | 3.17–17.59 (100%) | 1.25–2.23 |
| Posterior categorization response (harmonics 1–11) | Phase-scrambled | Animal | 0.12 | 0.08 | −0.91–9.51 (70%) | 0.94–1.48 |
| Posterior categorization response (harmonics 1–11) | Phase-scrambled | Furniture | 0.15 | 0.08 | 1.14–8.76 (85%) | 1.11–1.68 |
| Anterior categorization response (harmonics 1–8) | Original | Animal | 0.23 | 0.11 | 3.13–16.32 (100%) | 1.11–2.06 |
| Anterior categorization response (harmonics 1–8) | Original | Furniture | 0.24 | 0.13 | 2.97–17.67 (100%) | 1.05–2.10 |
| Anterior categorization response (harmonics 1–8) | Phase-scrambled | Animal | 0.07 | 0.07 | −0.28–9.46 (70%) | 0.87–1.38 |
| Anterior categorization response (harmonics 1–8) | Phase-scrambled | Furniture | 0.10 | 0.08 | 0.82–8.61 (85%) | 0.97–1.60 |
| Base response (harmonics 1–4) | Original | Animal | 1.21 | 0.43 | 17.42–51.00 (100%) | 3.55–15.92 |
| Base response (harmonics 1–4) | Original | Furniture | 1.26 | 0.53 | 19.24–48.20 (100%) | 3.78–17.81 |
| Base response (harmonics 1–4) | Phase-scrambled | Animal | 1.42 | 0.78 | 11.91–50.25 (100%) | 3.12–19.01 |
| Base response (harmonics 1–4) | Phase-scrambled | Furniture | 1.37 | 0.81 | 12.96–47.78 (100%) | 4.13–18.82 |
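
The table columns report a BCA (read here as baseline-corrected amplitude), a z-score, and a signal-to-noise ratio (SNR) per response, each aggregated over a range of harmonics. The formulas are not given in this part of the text, so the following is only a minimal sketch of one convention that is common in frequency-tagging analyses: each frequency bin of interest is compared against the amplitudes of its surrounding bins. The function names, the window of 10 bins per side, the skipped adjacent bin, and the toy spectrum are all assumptions made for illustration and are not taken from the study.

```python
from statistics import mean, stdev


def neighbor_bins(spectrum, idx, n_side=10, skip=1):
    """Return amplitudes around `idx`, leaving out `skip` bins on each side.

    n_side and skip are illustrative defaults, not values from the paper.
    """
    lo_start = max(0, idx - skip - n_side)
    lo_end = max(0, idx - skip)
    hi_start = idx + skip + 1
    hi_end = hi_start + n_side
    return spectrum[lo_start:lo_end] + spectrum[hi_start:hi_end]


def bin_metrics(spectrum, idx, n_side=10, skip=1):
    """Compare one frequency bin with its local noise estimate."""
    noise = neighbor_bins(spectrum, idx, n_side, skip)
    noise_mean = mean(noise)
    noise_sd = stdev(noise)
    amp = spectrum[idx]
    return {
        "bca": amp - noise_mean,           # amplitude above local noise
        "snr": amp / noise_mean,           # ratio to local noise
        "z": (amp - noise_mean) / noise_sd,  # distance from noise in SD units
    }


def summed_bca(spectrum, harmonic_bins, n_side=10, skip=1):
    """Sum baseline-corrected amplitudes over a list of harmonic bins."""
    return sum(
        bin_metrics(spectrum, i, n_side, skip)["bca"] for i in harmonic_bins
    )


# Toy amplitude spectrum (made up): alternating noise with two peaks.
spectrum = [1.0 if i % 2 == 0 else 1.2 for i in range(81)]
spectrum[20] = 3.1  # hypothetical first harmonic
spectrum[40] = 2.1  # hypothetical second harmonic

peak = bin_metrics(spectrum, 20)
total = summed_bca(spectrum, [20, 40])
print(peak, total)
```

Under this convention an SNR near 1 and a BCA near 0 indicate no response above the local noise level, which is one way to read the lower bounds of the ranges in the phase-scrambled rows.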

| Response | Condition | Deviant Category | BCA Mean | BCA SD | Z-Score Range | SNR Range |
|---|---|---|---|---|---|---|
| Posterior categorization response (harmonics 1–12) | Original | Animal | 1.63 | 0.70 | 2.04–20.52 (100%) | 1.19–2.48 |
| Posterior categorization response (harmonics 1–12) | Original | Furniture | 1.53 | 0.63 | 2.99–14.16 (100%) | 1.23–2.34 |
| Posterior categorization response (harmonics 1–12) | Phase-scrambled | Animal | 0.47 | 0.44 | −3.28–6.58 (59%) | 0.86–1.48 |
| Posterior categorization response (harmonics 1–12) | Phase-scrambled | Furniture | 0.36 | 0.44 | −2.97–5.47 (55%) | 0.78–1.39 |
| Anterior categorization response (harmonics 1–7) | Original | Animal | 0.66 | 0.51 | −0.74–18.94 (81%) | 0.95–2.26 |
| Anterior categorization response (harmonics 1–7) | Original | Furniture | 0.69 | 0.52 | −1.25–7.28 (77%) | 0.86–2.00 |
| Anterior categorization response (harmonics 1–7) | Phase-scrambled | Animal | 0.24 | 0.36 | −1.51–11.04 (41%) | 0.86–1.58 |
| Anterior categorization response (harmonics 1–7) | Phase-scrambled | Furniture | 0.17 | 0.44 | −3.01–6.95 (32%) | 0.80–1.59 |
| Base response (harmonics 1–5) | Original | Animal | 1.97 | 0.94 | 1.65–35.05 (100%) | 1.26–7.31 |
| Base response (harmonics 1–5) | Original | Furniture | 2.12 | 1.15 | 2.25–59.05 (100%) | 1.58–9.02 |
| Base response (harmonics 1–5) | Phase-scrambled | Animal | 1.87 | 1.11 | 2.96–38.30 (100%) | 1.64–7.52 |
| Base response (harmonics 1–5) | Phase-scrambled | Furniture | 1.85 | 0.96 | 1.56–40.62 (95%) | 1.24–6.85 |

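| Response | Condition | Deviant Category | Age Group | N | BCA Mean | BCA SD | Z-Score Range | SNR Range |
|---|---|---|---|---|---|---|---|---|
| Posterior-occipital categorization response (harmonics 2–4) | Original | Animal | 4 | 11 | 0.36 | 0.69 | −1.99–5.54 (18%) | 0.80–1.41 |
| Posterior-occipital categorization response (harmonics 2–4) | Original | Animal | 7 | 9 | 0.37 | 0.46 | −0.48–3.73 (33%) | 0.95–1.45 |
| Posterior-occipital categorization response (harmonics 2–4) | Original | Animal | 11 | 12 | 0.52 | 0.43 | −0.74–12.54 (67%) | 0.92–1.71 |
| Posterior-occipital categorization response (harmonics 2–4) | Original | Furniture | 4 | 13 | 0.09 | 0.54 | −1.33–2.45 (31%) | 0.87–1.33 |
| Posterior-occipital categorization response (harmonics 2–4) | Original | Furniture | 7 | 15 | 0.30 | 0.34 | −1.28–3.70 (40%) | 0.87–1.44 |
| Posterior-occipital categorization response (harmonics 2–4) | Original | Furniture | 11 | 9 | 0.31 | 0.58 | −1.53–3.95 (33%) | 0.86–1.71 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Animal | 4 | 11 | 0.15 | 0.49 | −0.93–2.94 (27%) | 0.90–1.30 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Animal | 7 | 9 | −0.05 | 0.46 | −3.58–1.78 (11%) | 0.73–1.18 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Animal | 11 | 12 | 0.08 | 0.37 | −0.91–2.15 (8%) | 0.81–1.28 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Furniture | 4 | 13 | 0.00 | 0.43 | −1.90–1.56 (0%) | 0.76–1.20 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Furniture | 7 | 15 | 0.08 | 0.44 | −2.01–3.59 (20%) | 0.75–1.41 |
| Posterior-occipital categorization response (harmonics 2–4) | Phase-scrambled | Furniture | 11 | 9 | 0.31 | 0.46 | −0.71–5.03 (33%) | 0.93–1.60 |
| Frontal categorization response (harmonics 2–3) | Original | Animal | 4 | 11 | −0.02 | 0.35 | −1.38–4.42 (36%) | 0.80–1.63 |
| Frontal categorization response (harmonics 2–3) | Original | Animal | 7 | 9 | 0.16 | 0.27 | −1.41–3.55 (11%) | 0.77–1.28 |
| Frontal categorization response (harmonics 2–3) | Original | Animal | 11 | 12 | 0.15 | 0.33 | −1.52–6.63 (25%) | 0.64–1.73 |
| Frontal categorization response (harmonics 2–3) | Original | Furniture | 4 | 13 | 0.10 | 0.40 | −2.47–9.91 (31%) | 0.73–1.60 |
| Frontal categorization response (harmonics 2–3) | Original | Furniture | 7 | 15 | 0.35 | 0.50 | −1.62–7.24 (33%) | 0.93–1.71 |
| Frontal categorization response (harmonics 2–3) | Original | Furniture | 11 | 9 | 0.21 | 0.22 | −0.01–3.25 (33%) | 1.00–1.60 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Animal | 4 | 11 | 0.08 | 0.28 | −1.31–2.82 (18%) | 0.87–1.39 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Animal | 7 | 9 | 0.01 | 0.20 | −1.50–1.10 (0%) | 0.81–1.19 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Animal | 11 | 12 | 0.03 | 0.32 | −2.25–3.43 (8%) | 0.72–1.32 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Furniture | 4 | 13 | −0.10 | 0.28 | −2.80−0.92 (0%) | 0.69–1.18 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Furniture | 7 | 15 | 0.07 | 0.28 | −1.45–2.78 (13%) | 0.80–1.45 |
| Frontal categorization response (harmonics 2–3) | Phase-scrambled | Furniture | 11 | 9 | 0.12 | 0.37 | −1.19–3.93 (11%) | 1.05–3.47 |
| Base response (harmonics 1–5) | Original | Animal | 4 | 11 | 1.56 | 0.86 | 3.42–13.21 (100%) | 1.62–3.54 |
| Base response (harmonics 1–5) | Original | Animal | 7 | 9 | 2.05 | 1.21 | −0.62–49.39 (91%) | 0.95–4.83 |
| Base response (harmonics 1–5) | Original | Animal | 11 | 12 | 2.91 | 1.74 | 3.33–35.61 (100%) | 1.80–7.30 |
| Base response (harmonics 1–5) | Original | Furniture | 4 | 13 | 2.54 | 1.18 | 4.35–23.25 (100%) | 1.72–7.47 |
| Base response (harmonics 1–5) | Original | Furniture | 7 | 15 | 2.14 | 1.03 | 3.08–25.25 (100%) | 1.55–6.18 |
| Base response (harmonics 1–5) | Original | Furniture | 11 | 9 | 2.90 | 1.09 | 3.83–30.24 (100%) | 1.88–8.09 |
| Base response (harmonics 1–5) | Phase-scrambled | Animal | 4 | 11 | 1.90 | 0.93 | 1.25–18.02 (91%) | 1.22–4.95 |
| Base response (harmonics 1–5) | Phase-scrambled | Animal | 7 | 9 | 1.67 | 1.53 | −0.16–25.16 (67%) | 0.93–5.98 |
| Base response (harmonics 1–5) | Phase-scrambled | Animal | 11 | 12 | 1.38 | 1.23 | −0.03–19.14 (75%) | 0.96–3.95 |
| Base response (harmonics 1–5) | Phase-scrambled | Furniture | 4 | 13 | 3.08 | 1.52 | 2.04–25.36 (100%) | 0.95–4.83 |
| Base response (harmonics 1–5) | Phase-scrambled | Furniture | 7 | 15 | 2.02 | 1.04 | 1.81–27.03 (100%) | 1.49–6.80 |
| Base response (harmonics 1–5) | Phase-scrambled | Furniture | 11 | 9 | 2.77 | 2.64 | 1.20–17.98 (89%) | 1.33–6.62 |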

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peykarjou, S.; Hoehl, S.; Pauen, S. The Development of Global-Level Categorization: Frequency Tagging EEG Responses. Brain Sci. 2024, 14, 541. https://doi.org/10.3390/brainsci14060541
