Abstract

Intracerebral hemorrhage (ICH) is a critical condition characterized by high prevalence, substantial mortality, and unpredictable clinical outcomes, and it poses a serious threat to human health. Improving the timeliness and accuracy of prognosis assessment is crucial to minimizing the mortality and long-term disability associated with ICH. Because of the complexity of ICH, its assessment in clinical practice relies heavily on the specialized knowledge, clinical experience, and subjective judgment of physicians. Meanwhile, existing artificial intelligence (AI) methods, which predominantly use features derived from computed tomography (CT) scans, fail to capture the multifaceted nature of ICH, and although some existing methods integrate clinical information with CT images for prognosis, the effectiveness of this fusion still needs improvement. To overcome these limitations, this study introduces a novel AI framework, the ICH Network (ICH-Net), which uses a joint-attention cross-modal network to combine clinical text data with CT imaging features. ICH-Net consists of three components: a Feature Extraction Module, which extracts salient features from the clinical and imaging data; a Feature Fusion Module, which merges the two data streams; and a Classification Module, which maps the fused features to a prognostic prediction. In five-fold cross-validation, ICH-Net achieved an accuracy of up to 87.77%, outperforming the other state-of-the-art methods compared in this study.
This evidence highlights the potential of ICH-Net as a tool for ICH prognosis that could support clinical decision-making and patient care.

1. Introduction

Intracerebral hemorrhage (ICH) poses a severe threat to human health, accounting for 20% to 30% of all stroke cases. As a critical cerebrovascular condition, ICH has complex etiologies and heterogeneous clinical presentations. Mortality within the first 30 days after onset remains alarmingly high, ranging from 35% to 52% []. Additionally, a prospective observational cohort study reported a cumulative recurrence rate of 6.1% within the first year after a lobar hemorrhage, rising to 7.9% by the fifth year []. Furthermore, survivors of ICH often face long-term disability, epilepsy, blood clots, visual impairment, or vascular problems []. Given the high incidence, disability, and mortality associated with ICH, timely and accurate diagnosis is essential [].

Historically, the diagnosis of ICH has relied upon the professional understanding and empirical knowledge of physicians, who interpret computed tomography (CT) scans by examining parameters such as the location, volume, and distinctive texture characteristics of the hemorrhagic site, in conjunction with the Glasgow Coma Scale (GCS) score []. This conventional method is inherently subjective, heavily dependent on the clinician’s expertise, and can be resource-intensive.

To mitigate these issues, earlier research adopted machine learning techniques with promising results, although there is still room for improvement. Advances in artificial intelligence (AI) have also driven progress in medical imaging technology, making richer imaging data available for clinical use, and AI now plays an increasingly important role in disease screening, treatment planning, and prognostic assessment. Biomedical images are particularly informative because they capture the underlying pathophysiological changes. CT, especially the widely available and simple non-contrast CT, is instrumental in the diagnosis and management of ICH and of its potential complication, hematoma expansion. Signs detectable on non-contrast CT, such as the black hole sign [], the mixed-density sign, low-density areas, and the island sign, are clinically useful for predicting hematoma growth. Nevertheless, interpreting these imaging features depends on well-trained clinicians and is limited by each reader's experience and subjective judgment, which often results in low sensitivity. AI models offer a way to overcome these challenges: by reducing the influence of subjective bias on the analysis, AI can provide more precise and reproducible assessments. In addition, integrating AI into medical image analysis can make the evaluation of signs, such as those indicating hematoma expansion in ICH, more objective, thereby improving the quality of patient care and outcomes.

To improve diagnostic accuracy, contemporary AI methods have adopted more sophisticated algorithms. In medicine, AI has been applied successfully to the diagnosis of conditions such as breast cancer [], prostate cancer [], intracranial hematoma [], and pleural effusion []. In the specific context of ICH, cross-modal methods that draw on comprehensive datasets have become increasingly relevant, and deep learning models have been adopted to improve ICH diagnosis. For instance, Wang et al. [] proposed a data fusion framework based on convolutional neural networks (CNNs) for the early prediction of hematoma expansion. Likewise, del Barrio et al. [] presented a CNN-based deep learning model for predicting prognosis after ICH. Other existing methods use variational autoencoders (VAEs) [,,,] and generative adversarial networks (GANs) []. Image-based methods [] and multi-task strategies [] have also been applied in this domain with good results. Specifically, the Res-Inc-LGBM model [], a cross-modal technique that extracts information from two distinct modalities within CT imagery, has shown promising results; however, it does not use clinical data to further improve its performance. The UniMiSS framework [] incorporates a large collection of 2D medical images into a 3D self-supervised learning paradigm, thereby addressing the scarcity of 3D datasets such as CT scans. Additionally, GCS-ICH-Net [] improved performance by using a self-attention mechanism to integrate imaging data with domain knowledge. However, existing methods still lack effective mechanisms for fusing clinical and imaging data.

To address these deficiencies, this article proposes a novel approach with the following advantages:

- (1) We introduce a cross-modal loss function that accounts for the intrinsic correlation between the disparate data modalities.

- (2) We incorporate clinical data to enrich the model's comprehension and enhance ICH prognosis accuracy.

- (3) Our fusion model incorporates a joint-attention mechanism, effectively facilitating the extraction of more salient and comprehensive fusion features.

This strategy aims to improve the precision of ICH prognosis, thereby supporting more effective patient management and better clinical outcomes. Our dataset includes only spontaneous ICH; cases caused by arteriovenous malformations, cerebral aneurysms, traumatic brain injury, brain tumors, or cerebral infarctions were explicitly excluded.

2. Materials and Methods

2.1. Problem Formalization

In the dataset of patients diagnosed with ICH, each patient's record contains clinical data together with one or more CT slices. The objective of this study is to train, on the designated training set, a predictive model that maps these inputs to outputs that closely match the target labels (good or poor prognosis; see Section 2.3). The trained model is then evaluated on the test set.
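In symbols, using notation introduced here only for illustration (it does not come from the original formulation): let patient $i$ have clinical text $c_i$, a set $S_i$ of $m_i \ge 1$ CT slices, and a binary prognosis label $y_i$, where $y_i = 1$ is assumed to denote a good prognosis. The task is to learn the parameters $\theta$ of a model $f_\theta$ on the training patients $\mathcal{I}_{\text{train}}$, with $\ell$ standing for a generic training loss:

$$
\mathcal{D} = \{(c_i, S_i, y_i)\}_{i=1}^{N}, \qquad S_i = \{s_{i,1}, \dots, s_{i,m_i}\}, \qquad y_i \in \{0, 1\},
$$

$$
\hat{y}_i = f_\theta(c_i, S_i), \qquad \theta^{*} = \arg\min_{\theta} \sum_{i \in \mathcal{I}_{\text{train}}} \ell\big(f_\theta(c_i, S_i),\, y_i\big),
$$

with $N = 294$ in this study and performance reported on the held-out patients.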

2.2. Patient Population

A retrospective study was conducted on a cohort of 294 patients who were admitted to our hospital with spontaneous ICH from August 2013 to May 2021 and completed the prescribed treatment regimen. The study was approved by the hospital's ethics committee, and informed verbal consent was obtained from all participants. The inclusion criteria were as follows: (1) a confirmed diagnosis of spontaneous ICH; (2) completion of plain CT scans within 24 h after the cessation of bleeding; (3) availability of complete GCS scores at admission; (4) availability of prognostic data based on the Glasgow Outcome Scale (GOS) at discharge; and (5) comprehensive clinical information, including age, gender, and location of hemorrhage, among other variables. Patients with secondary ICH resulting from arteriovenous malformation, cerebral aneurysm, traumatic brain injury, brain tumor, or cerebral infarction were excluded. Imaging was performed on a Philips Brilliance 16-slice CT scanner and a Toshiba Aquilion ONE 320-slice CT scanner, with a slice thickness of 6 mm and a matrix size of 512 × 512, giving a voxel size of 0.488 × 0.488 × 6 mm.

2.3. Data Acquisition

Our proprietary dataset comprised 294 clinical cases from our partner hospital and is approximately balanced, with 149 cases having a good prognosis and 145 cases a poor prognosis. The prognostic labels were determined by three neurosurgeons: one with a senior professional title, one with an intermediate professional title, and one with a junior professional title. Using a double-blind procedure, they assessed the prognosis of the enrolled ICH cases in two ways: from image features alone and from image features combined with GCS information. Patient demographics and clinical characteristics were extracted from the electronic medical record system, including gender, age, CT scan acquisition time, length of hospital stay, GCS score, treatment method, and the location and volume of the hemorrhage. Patients with a good prognosis are unlikely to experience other concurrent symptoms after treatment, whereas patients with a poor prognosis may develop sequelae in the later stages of treatment, such as hemiplegia, language disorders, and decreased muscle strength. Prognosis was stratified by GOS score: scores of 1 to 5 correspond to death, vegetative state, severe disability, moderate disability, and return to normal life, respectively, and a GOS score of ≥4 indicates a good prognosis, whereas a score of ≤3 indicates a poor prognosis.
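The labeling rule above can be written as a small function. This is an illustrative sketch; the function name `gos_to_prognosis` is hypothetical and not taken from the authors' code.

```python
def gos_to_prognosis(gos: int) -> str:
    """Map a discharge Glasgow Outcome Scale score (1-5) to the study's binary label."""
    if gos not in (1, 2, 3, 4, 5):
        raise ValueError(f"GOS must be an integer from 1 to 5, got {gos!r}")
    return "good" if gos >= 4 else "poor"  # GOS >= 4 -> good, GOS <= 3 -> poor


if __name__ == "__main__":
    print([gos_to_prognosis(s) for s in range(1, 6)])
    # ['poor', 'poor', 'poor', 'good', 'good']
```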

In our dataset, the good prognosis group included 149 patients (109 males and 40 females) aged 29 to 88 years (mean 53.85 years), with hospital stays ranging from 3 to 104 days (mean 19.40 days). Regarding treatment, 119 patients received conservative management in the internal medicine department and 25 underwent surgery. Hemorrhage locations were distributed as follows: 48 cases in the basal ganglia, 29 in the thalamus, 5 in the external capsule, 19 in the cerebral lobes, 16 in the brainstem, 14 in the cerebellum, 6 in the ventricles, 5 in multiple regions, and 32 with secondary ventricular involvement. The poor prognosis group included 145 patients (108 males and 37 females) aged 29 to 90 years (mean 54.16 years), with hospital stays ranging from 1 to 388 days (mean 39.63 days). In this group, 67 patients received conservative care in internal medicine and 75 underwent surgery. Hemorrhage locations included 76 cases in the basal ganglia, 18 in the thalamus, 6 in the external capsule, 12 in the cerebral lobes, 13 in the brainstem, 2 in the cerebellum, 1 in the ventricles, 10 spanning multiple regions, and 49 with secondary ventricular bleeding. Although no universally accepted GCS threshold exists for predicting prognosis in ICH patients, clinicians generally regard a GCS score of 9 or above as predictive of a more favorable outcome.

2.4. ICH-Net Architecture

Improving the accuracy of prognosis classification for ICH can help reduce mortality and disability risks. However, current methods do not integrate clinical and imaging data efficiently for prognosis classification, which limits their usefulness in supporting accurate diagnosis by medical professionals. To address this challenge and improve prognosis classification accuracy, we propose ICH-Net, a novel network framework.

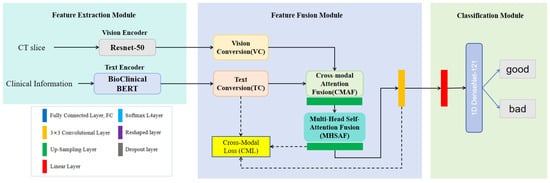

The architecture of our ICH-Net is depicted in Figure 1 and is composed of three sequential components: the Feature Extraction Module, the Feature Fusion Module, and the Classification Module. Notably, the Feature Fusion Module employs a cross-modal attention mechanism to fully account for the intrinsic relationships between the different modalities, thereby enabling targeted and pertinent feature integration within the network.

Figure 1.

The diagram illustrates the architecture of ICH-Net, which comprises a Feature Extraction Module, a Feature Fusion Module, and a Classification Module, in sequential order, and outputs the final prognostic prediction.

Clinicians use both imaging data and clinical text to assess prognosis comprehensively. Clinical text information, such as the GCS score, provides domain-specific knowledge that aids prognostic evaluation, and factors such as age, gender, and other demographic information also affect ICH outcomes. We therefore designed separate modules to extract image and text features. In the Feature Extraction Module, two distinct encoders, a text encoder and a visual encoder, produce the textual representation and the visual representation, respectively. For text encoding, we used the pre-trained BioClinicalBERT model [], and for visual encoding, the pre-trained 2D ResNet50 architecture [].

Within the Feature Fusion Module, the textual and visual representations are processed by a Text Conversion (TC) sub-module and a Vision Conversion (VC) sub-module, respectively. Their outputs are then fed into the Cross-Modal Attention Fusion (CMAF) and Multi-Head Self-Attention Fusion (MHSAF) blocks, which integrate the textual and visual representations. Finally, in the Classification Module, the fused representation is passed through a neural network classifier that predicts the prognostic outcome.

2.5. Block Details

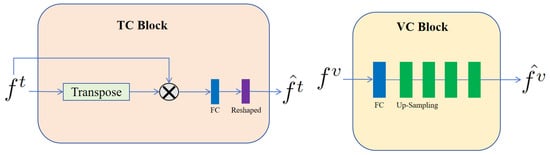

TC block and VC block. In our architecture, the TC and VC blocks are central to fusing textual and visual data effectively. As depicted in Figure 2, the TC block begins by multiplying the text representation by its own transpose. The resulting matrix scores the association between each token and every other token in the text representation, capturing the semantic relationships and contextual nuances within the text sequence. This attention-like operation strengthens the model's understanding of the textual input and yields a richer representation of the text data. The resulting matrix is then transformed by a fully connected (FC) layer, which maps it to output representations tailored to our task. Finally, this output is reshaped to produce the TC block's final text representation.

Figure 2.

Architecture of the TC block and VC block, which process text and visual information, respectively. The multiplication symbol in the figure denotes matrix multiplication.
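The TC computation described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the text representation is taken as an L × d matrix of token embeddings, the FC layer is assumed to be a single linear map applied to each row of the L × L affinity matrix, and the output shape passed to the hypothetical `tc_block` function is arbitrary, since the actual dimensions used in ICH-Net are not given.

```python
import random


def matmul(a, b):
    """Matrix product of a (n x k) and b (k x m), both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def linear(x, weight, bias):
    """Fully connected layer applied to every row of x: y = x W + b."""
    return [[v + bias[j] for j, v in enumerate(row)] for row in matmul(x, weight)]


def reshape(x, rows, cols):
    flat = [v for row in x for v in row]
    if len(flat) != rows * cols:
        raise ValueError("cannot reshape: element count mismatch")
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


def tc_block(text_repr, weight, bias, out_shape):
    """Hypothetical TC block: token-token affinity -> FC layer -> reshape."""
    affinity = matmul(text_repr, transpose(text_repr))  # (L x L) pairwise token scores
    projected = linear(affinity, weight, bias)          # (L x d_out)
    return reshape(projected, *out_shape)


if __name__ == "__main__":
    rng = random.Random(0)
    L, d, d_out = 4, 3, 6                               # assumed toy sizes
    tokens = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(L)]
    w = [[rng.gauss(0, 0.1) for _ in range(d_out)] for _ in range(L)]
    out = tc_block(tokens, w, [0.0] * d_out, (d_out, L))
    print(len(out), len(out[0]))                        # 6 4
```

In a framework such as PyTorch, the same three steps would correspond to a batched matrix product, a linear layer, and a reshape.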

In the VC block, we process the visual representation derived from the input image. First, the visual features are passed through an FC layer, which applies a linear transformation that remaps them into a representation space better suited to the subsequent steps. The transformed representation then undergoes four successive up-sampling operations, which increase the resolution of the feature map step by step, with the aim of capturing finer structural detail from the input image. Together, these operations produce the VC block's output visual representation, which is passed on to the fusion blocks.
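A minimal sketch of the VC data flow follows, under assumptions that the text does not state: the visual representation is treated as a feature vector, the FC output is reshaped into a single-channel h × w map, and each of the four up-sampling steps is a 2× nearest-neighbour enlargement, so the map grows 16× per side. `vc_block` and its parameters are hypothetical names; the real layer sizes and interpolation mode of ICH-Net are not given.

```python
def fc(vec, weight, bias):
    """Fully connected layer: y_j = sum_i vec_i * W[i][j] + b_j."""
    return [sum(vec[i] * weight[i][j] for i in range(len(vec))) + bias[j]
            for j in range(len(bias))]


def upsample2x_nearest(fmap):
    """2x nearest-neighbour up-sampling of a 2D map given as a list of rows."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the row vertically
    return out


def vc_block(vec, weight, bias, h, w, n_upsample=4):
    """Hypothetical VC block: FC layer -> reshape to h x w -> n_upsample x (2x up-sampling)."""
    flat = fc(vec, weight, bias)
    if len(flat) != h * w:
        raise ValueError("FC output size must equal h * w")
    fmap = [flat[r * w:(r + 1) * w] for r in range(h)]
    for _ in range(n_upsample):
        fmap = upsample2x_nearest(fmap)
    return fmap


if __name__ == "__main__":
    out = vc_block([1.0, 2.0], [[1, 0, 0, 0], [0, 1, 0, 0]], [0, 0, 0, 0], h=2, w=2)
    print(len(out), len(out[0]))  # 32 32
```

Nearest-neighbour repetition keeps the example short; learned (transposed-convolution) or bilinear up-sampling would be equally plausible choices.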

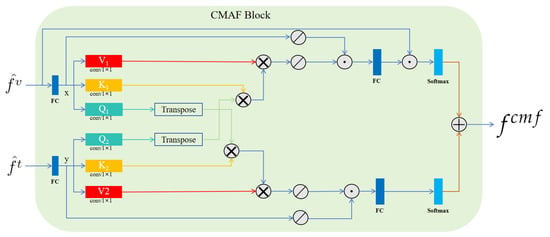

CMAF block. Following the methodological framework of CMAFGAN [], we denote the outputs of the TC and VC blocks as x and y, respectively, for simplicity. As shown in Figure 3, the CMAF block begins by applying six 1 × 1 convolution layers to x and y. These layers transform each input into three matrices, V1, K1, and Q1 for x and V2, K2, and Q2 for y, each produced with its own learned weight matrix. These matrices are then combined to compute the cross-modal attention matrices.

Each of these projections is a linear transformation of the input by its weight matrix followed by transposition. As depicted in Figure 3, the block's final output representation is obtained through these operations, one of which uses an up-sampled version of an intermediate representation.

Figure 3.

Architecture of the proposed CMAF block. In the figure, distinct symbols denote matrix multiplication, SoftPool, matrix addition, and concatenation. The block takes x and y as inputs and produces the fused representation as its output.
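The full CMAF computation, including SoftPool, the up-sampling path, and concatenation, is defined in Figure 3 and is not reproduced here. As an illustration of the cross-modal attention idea only, the sketch below implements standard scaled dot-product cross-attention, in which queries come from one modality and keys and values come from the other, and runs it in both directions (x attending to y and y attending to x). A 1 × 1 convolution acts as a per-position linear projection, so the projections are written as matrix products. The function names, the weight shapes, and stacking the two outputs along the position axis are assumptions, not the authors' formulation.

```python
import math


def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def softmax_rows(m):
    """Numerically stable softmax applied to each row."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cross_attention(x, y, wq, wk, wv):
    """Queries from x attend over keys/values from y (1x1 conv == per-position linear map)."""
    q, k, v = matmul(x, wq), matmul(y, wk), matmul(y, wv)
    scale = math.sqrt(len(k[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)  # one output row per position of x


def bidirectional_cross_attention(x, y, params_xy, params_yx):
    """x attends to y and y attends to x; the two results are stacked along positions."""
    return cross_attention(x, y, *params_xy) + cross_attention(y, x, *params_yx)
```

Each output row of `cross_attention` is a convex combination of the other modality's value vectors, which is what lets one modality select the parts of the other that are relevant to it.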

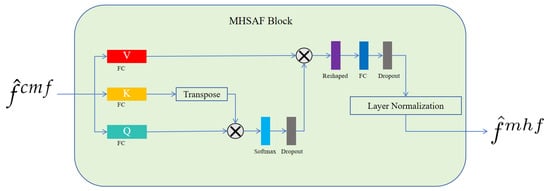

MHSAF block. As depicted in Figure 4, to enrich the feature representation, our approach projects features onto three distinct subspaces using three independent linear transformations, each with its own weight matrix. Self-attention is then computed within each subspace to produce a set of output vectors, which are concatenated into a final output that captures the diverse interactions within the data. This allows the data to be analyzed from several representational perspectives.

Figure 4.

Detailed architecture of the proposed MHSAF block. The multiplication symbol in the figure denotes matrix multiplication.

The advantage of this design is that features are processed separately in several subspaces, with self-attention computed within each one. This lets the model capture inter-feature relationships from different perspectives and learn the dependencies among features in more detail, which helps it extract richer and more precise information. Concatenating the output vectors from all subspaces then integrates the information across subspaces into a single, more holistic representation, increasing the model's expressive capacity and, in turn, its predictive accuracy.
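The MHSAF idea of projecting features into three subspaces, applying self-attention in each, and concatenating the results can be sketched as follows. To keep the example short, each head uses its projected features directly as queries, keys, and values; this is a simplification, since standard multi-head attention learns separate query, key, and value projections per head. The number of heads, the weight shapes, and the function names are illustrative assumptions.

```python
import math


def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def softmax_rows(m):
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(z):
    """Scaled dot-product self-attention with z used as queries, keys, and values."""
    scale = math.sqrt(len(z[0]))
    scores = [[s / scale for s in row] for row in matmul(z, transpose(z))]
    return matmul(softmax_rows(scores), z)


def mhsa_fusion(x, head_weights):
    """Project x into one subspace per head, attend within each, concatenate per position."""
    head_outputs = [self_attention(matmul(x, w)) for w in head_weights]
    return [sum((out[i] for out in head_outputs), []) for i in range(len(x))]


if __name__ == "__main__":
    x = [[0.1 * i, 0.2 * i] for i in range(4)]         # 4 positions, 2 features
    heads = [[[1.0, 0.0], [0.0, 1.0]]] * 3              # three assumed 2 x 2 projections
    out = mhsa_fusion(x, heads)
    print(len(out), len(out[0]))                        # 4 6
```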

2.6. Loss Function

We utilized a composite loss function termed Cross-Modal Fusion (CMF) Loss, which comprises three components: Intra-Modality and Inter-Modality Alignment (IMIMA) loss, Similarity Distribution Matching (SDM) loss, and Masked Language Modeling (MLM) loss.

IMIMA Loss. We use four principal loss terms: Text-to-Text (t2t), Vision-to-Vision (v2v), Vision-to-Text (v2t), and Text-to-Vision (t2v). For each sample, we define a set of negative samples, and each term is defined using a pairwise similarity measure together with this negative set.

The IMIMA loss aggregates these four terms into a single alignment loss.
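The exact IMIMA formulas are not reproduced in this text. Purely to illustrate how such alignment terms are commonly built, the sketch below uses an InfoNCE-style contrastive term with cosine similarity and a temperature, and sums the four directional terms without weights. The choice of InfoNCE, the temperature value, what serves as the positive for the intra-modal t2t and v2v terms (here, a second embedding of the same sample, such as an augmented view), and the unweighted sum are all assumptions.

```python
import math


def cosine(a, b):
    dot = sum(p * q for p, q in zip(a, b))
    return dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))


def contrastive_term(anchor, positive, negatives, tau=0.07):
    """-log softmax probability of the positive among {positive} and the negative set."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_norm - logits[0]


def imima_loss(t, t_view, v, v_view, text_negatives, vision_negatives, tau=0.07):
    """Hypothetical IMIMA aggregation: unweighted sum of the four directional terms."""
    l_t2t = contrastive_term(t, t_view, text_negatives, tau)    # intra-modal, text
    l_v2v = contrastive_term(v, v_view, vision_negatives, tau)  # intra-modal, vision
    l_t2v = contrastive_term(t, v, vision_negatives, tau)       # inter-modal, text -> vision
    l_v2t = contrastive_term(v, t, text_negatives, tau)         # inter-modal, vision -> text
    return l_t2t + l_v2v + l_t2v + l_v2t
```

The loss of each term is small when the anchor is more similar to its positive than to every negative, and grows as a negative becomes the closest match.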

SDM Loss. We incorporate the SDM loss proposed by Jiang et al. [] to quantify the discrepancy between the similarity distribution predicted by the model and the ground-truth matching distribution. The loss is based on the Kullback–Leibler (KL) divergence and has two directional components: Visual-to-Text (v2t) and Text-to-Visual (t2v). It encourages the model to align the similarity distributions of the visual and textual representations of the input data, which improves performance on cross-modal tasks. For a batch of $N$ samples, the v2t loss is defined as follows:

$$
\mathcal{L}_{v2t} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{KL}(\mathbf{p}_i \,\|\, \mathbf{q}_i) = \frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{N} p_{i,j}\log\frac{p_{i,j}}{q_{i,j}+\epsilon},
$$

where $\mathbf{q}_i$ represents the true probability distribution of matches for the $i$-th sample and $\mathbf{p}_i$ denotes the SoftMax-normalized cosine similarity scores. Here, $p_{i,j}$ is the predicted probability that the $i$-th sample corresponds to the $j$-th category, $q_{i,j}$ is the ground-truth probability, and $\epsilon$ is a small constant that avoids numerical problems.

Consequently, the total SDM loss is the sum of the v2t and t2v losses:

$$
\mathcal{L}_{SDM} = \mathcal{L}_{v2t} + \mathcal{L}_{t2v}.
$$
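The SDM loss can be illustrated with a minimal plain-Python sketch that follows the description above: for each query, a softmax over temperature-scaled cosine similarities gives the predicted distribution p_i, the ground-truth distribution q_i spreads probability uniformly over all samples that match the query, and the loss is KL(p_i || q_i) averaged over queries and summed over the v2t and t2v directions. Treating samples with equal labels as matches, as well as the temperature and ε values, are assumptions for illustration.

```python
import math


def cosine(a, b):
    dot = sum(p * q for p, q in zip(a, b))
    return dot / (math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b)))


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sdm_direction(queries, keys, labels, tau=0.02, eps=1e-8):
    """Mean over queries of KL(p_i || q_i); keys[j] matches queries[i] if labels agree."""
    total = 0.0
    for i, query in enumerate(queries):
        p = softmax([cosine(query, key) / tau for key in keys])
        match = [1.0 if labels[j] == labels[i] else 0.0 for j in range(len(keys))]
        q = [m / sum(match) for m in match]  # true match distribution
        total += sum(pj * math.log(pj / (qj + eps)) for pj, qj in zip(p, q) if pj > 0)
    return total / len(queries)


def sdm_loss(vision, text, labels, tau=0.02):
    """L_sdm = L_v2t + L_t2v for a batch of paired vision/text embeddings."""
    return sdm_direction(vision, text, labels, tau) + sdm_direction(text, vision, labels, tau)
```

Because of ε, the loss for perfectly aligned pairs can be very slightly negative rather than exactly zero.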

MLM Loss. Following the masked language modeling objective of BERT, we first replace selected words in the input sequence with a special mask token. The model's predicted probability distribution at each masked position is then compared with the actual token, and the resulting prediction error (in standard masked language modeling, the cross-entropy) is added to the training loss. This objective encourages the model to learn a deeper understanding of the context surrounding each token; minimizing the discrepancy between the predicted and true tokens is intended to improve the model's overall performance.
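As a rough illustration of the masking step and of how the prediction error can be measured, the following sketch replaces randomly selected token ids with a mask id and computes the mean cross-entropy over the masked positions only. It is the standard masked-language-modeling objective in simplified form (BERT's 80/10/10 replacement rule is omitted); the masking probability, the mask id, and the function names are assumptions.

```python
import math
import random


def mask_tokens(token_ids, mask_id, prob=0.15, rng=None):
    """Replace each token with mask_id with probability prob; return (masked ids, positions)."""
    rng = rng or random.Random(0)
    masked, positions = list(token_ids), []
    for pos in range(len(token_ids)):
        if rng.random() < prob:
            masked[pos] = mask_id
            positions.append(pos)
    return masked, positions


def log_softmax(logits):
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
    return [z - log_norm for z in logits]


def mlm_loss(logits_per_position, target_ids, masked_positions):
    """Mean cross-entropy between predictions and original tokens at masked positions."""
    if not masked_positions:
        raise ValueError("at least one position must be masked")
    total = 0.0
    for pos in masked_positions:
        total -= log_softmax(logits_per_position[pos])[target_ids[pos]]
    return total / len(masked_positions)
```

With uniform predictions over a vocabulary of size V, the loss equals log V; confident correct predictions drive it toward zero.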

Finally, our CMF loss is a weighted sum of the individual loss components:

$$
\mathcal{L}_{CMF} = \mathcal{L}_{IMIMA} + \lambda_1 \mathcal{L}_{SDM} + \lambda_2 \mathcal{L}_{MLM},
$$

where $\lambda_1$ and $\lambda_2$ are hyperparameters that balance the contributions of the SDM and MLM losses, respectively, relative to the IMIMA loss.

3. Results

3.1. Data Preprocessing

To preprocess the imaging data, we used multi-threshold segmentation and connected component analysis to delineate the regions of interest and suppress interference from non-brain-tissue areas. Threshold segmentation separated bone from other tissues, isolating the hemorrhagic regions while preserving normal brain parenchyma. To further improve precision and remove extraneous noise, connected component analysis was then applied in batch. The goal was for the final image to contain only relevant brain tissue, namely the hemorrhagic sites, gray matter, and intact brain tissue. This preprocessing is important for the accuracy of the subsequent analyses.
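The threshold-plus-connected-component idea can be sketched on a single 2D slice as follows. The intensity window (0 to 100 HU here, meant to exclude air and bone) is a hypothetical choice, and 4-connectivity and keeping only the largest component are assumptions; the actual multi-threshold pipeline used in this study may differ.

```python
from collections import deque


def threshold_mask(image, low, high):
    """True where low <= value < high (e.g., drop air below `low` and bone at/above `high`)."""
    return [[low <= v < high for v in row] for row in image]


def largest_component(mask):
    """Keep only the largest 4-connected region of True pixels (breadth-first search)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) > len(best):
                best = component
    keep = [[False] * w for _ in range(h)]
    for y, x in best:
        keep[y][x] = True
    return keep


def extract_brain(image, low=0, high=100, fill=0):
    """Hypothetical slice-level preprocessing: threshold window, then largest component."""
    keep = largest_component(threshold_mask(image, low, high))
    return [[v if k else fill for v, k in zip(row, krow)] for row, krow in zip(image, keep)]
```

Small isolated regions that survive thresholding (for example, soft tissue outside the skull) are discarded because they are not part of the largest connected region.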

For the text data, we extracted key variables from the clinical dataset, such as age, gender, time from onset to CT, hospital stay, GCS score, treatment method, and the physician's diagnosis. Each variable was then processed individually by the tokenizer of the pre-trained BioClinicalBERT model, which converts the text into a tensor representation suitable for subsequent computation.

3.2. Experiments

To evaluate the effectiveness and robustness of our method, we conducted comparative and ablation experiments. Hyperparameters were tuned during training: we set the learning rate to 0.0001, the number of training epochs to 300, and the batch size to 128, balancing computational efficiency and model performance. The libraries used in our experiments included, among others, torch 1.12.1+cu116 and torchvision 0.13.1+cu116. The code is available at https://github.com/YU-deep/ICH-2D (accessed on 18 June 2024).
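A minimal sketch of a five-fold cross-validation split over the 294 patients, together with the reported training hyperparameters, is shown below. Splitting at the patient level (so that all CT slices of one patient fall in the same fold), stratifying by prognosis label, and the random seed are assumptions, since the text does not describe how the folds were formed; `TrainConfig` and `stratified_kfold` are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training hyperparameters reported in the text."""
    learning_rate: float = 1e-4
    epochs: int = 300
    batch_size: int = 128
    n_folds: int = 5


def stratified_kfold(labels, n_folds=5, seed=0):
    """Patient-level stratified k-fold split: returns [(train_idx, test_idx), ...]."""
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for cls in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(members)
        for k, idx in enumerate(members):
            folds[k % n_folds].append(idx)  # deal each class round-robin over folds
    splits = []
    for k in range(n_folds):
        test = sorted(folds[k])
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        splits.append((train, test))
    return splits


if __name__ == "__main__":
    labels = [1] * 149 + [0] * 145  # 1 = good prognosis (assumed encoding)
    cfg = TrainConfig()
    for fold, (train, test) in enumerate(stratified_kfold(labels, cfg.n_folds)):
        print(fold, len(train), len(test))
```

Each patient appears in exactly one test fold, and every fold keeps roughly the 149:145 class ratio of the full dataset.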

3.2.1. Comparative Experiments

As detailed in Table 1, we benchmarked our method against leading contemporary methods, including purely 3D, purely 2D, and hybrid 2D + 3D approaches. Our method achieved the best results on all evaluated metrics. Compared with the best value obtained by any alternative method for each metric, our approach improved accuracy (ACC) by 2.35%, recall by 0.13%, and the area under the receiver operating characteristic curve (AUC) by 0.0027. Thus, despite using a 2D framework, our method outperformed both the existing 3D and 2D techniques overall.

Table 1.

Comparison of our method with other methods.
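For reference, the three reported metrics can be computed as follows. AUC is computed here through its rank interpretation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counting one half. Which prognosis class was treated as positive is not stated in the text, so it is a parameter of these illustrative functions.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of true positive cases that the model labels as positive."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp / (tp + fn) if tp + fn else 0.0


def roc_auc(y_true, scores, positive=1):
    """AUC as P(score of a random positive > score of a random negative), ties count 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Accuracy and recall depend on the decision threshold applied to the scores, whereas AUC summarizes ranking quality across all thresholds.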

Based on our analysis, we attribute the superior performance of our method to the following factors:

- (1) Multimodal Information Fusion: Our model incorporated a CMF loss function, which effectively harnessed the intrinsic correlations between various modalities. By synergistically integrating CT images with clinical data, our model achieved a more holistic understanding of the tasks at hand, consequently enhancing its overall performance.

- (2) Feature Fusion Mechanism: Our CMAF module employed a cross-modal attention mechanism designed to extract salient and comprehensive fusion features. This method facilitated a more discerning aggregation of information from multiple sources, enhancing the representational power of the fused features.

- (3) Utilization of Advanced Pre-trained Models: Our framework incorporated two distinct modules for feature extraction: a visual feature extraction module utilizing the ResNet50 model and a text feature extraction module employing the BioClinicalBERT model. These pre-trained models were instrumental in enhancing the capability of our system to extract more robust and nuanced features. By leveraging the extensive knowledge encoded within these pre-trained models, our approach achieved superior feature extraction performance.

3.2.2. Ablation Experiment

As presented in Table 2, the Vision-Only variant processes only the visual data, whereas the Text-Only variant uses only the textual information. The results show that the cross-modal input strategy of ICH-Net substantially improves performance, increasing accuracy by 11.18% and AUC by 0.0934 compared with the Vision-Only variant. These findings confirm the value of integrating clinical information to improve diagnostic accuracy.

Table 2.

Ablation experiment on loss function.

We posit that patient information is not confined to isolated modalities, such as CT images or clinical data; rather, the two forms of information are intrinsically interrelated. Understanding both modalities jointly can substantially improve the model's predictive performance, and our model was designed to exploit the combined insights from the integrated analysis of multimodal data to make more accurate predictions.

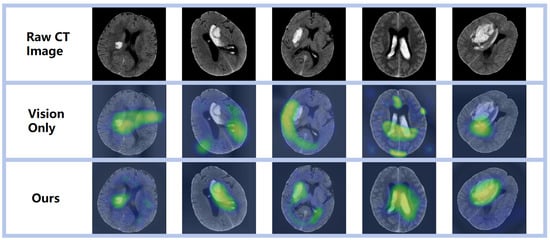

3.3. Visualization Analysis

By examining the images in the test set and the highlighted regions of interest, we assessed how accurately the network identifies areas affected by ICH. As depicted in Figure 5, the visualizations show that the model localizes hemorrhagic sites precisely, which is central to assessing the network's predictive capability. These visualizations also offer insight into how the network identifies hemorrhagic areas within images. Understanding which features and patterns the network relies on for its decisions enables targeted adjustments that could further improve its ability to recognize ICH. Overall, this inspection and visualization provided useful insights for model refinement and the optimization of predictive performance, as well as a deeper understanding of the complexity of the task.

Figure 5.

Visualization of different prediction outcomes; the regions most strongly associated with the predicted class are highlighted in red.
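The text does not name the visualization technique behind Figure 5. Class-activation heatmaps of this kind are often produced with Grad-CAM, whose weighting step is sketched below as one possible approach: each feature map of a chosen convolutional layer is weighted by the spatial mean of the class score's gradient with respect to that map, the weighted maps are summed, negative values are clipped, and the result is scaled to [0, 1]. The feature maps and gradients are assumed to have been obtained from the training framework beforehand; in practice, the map is then resized to the CT slice and overlaid in color.

```python
def grad_cam(feature_maps, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients (each K x H x W nested lists).

    alpha_k = spatial mean of d(class score)/dA^k
    cam     = ReLU(sum_k alpha_k * A^k), then divided by its maximum (if positive)
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    alphas = [sum(sum(row) for row in grad) / (h * w) for grad in gradients]
    cam = [[max(0.0, sum(a * fmap[i][j] for a, fmap in zip(alphas, feature_maps)))
            for j in range(w)] for i in range(h)]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```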

4. Discussion

We surveyed numerous datasets but found none that provides public access to both imaging and clinical information. Our private dataset is comprehensive and reflects real-world clinical conditions; however, the model's performance may differ when it is applied to external datasets. Improving the model's generalization capability therefore remains a primary objective.

Our framework does not include an explicit hemorrhage segmentation step. We posit that approaches that do not rely on segmentation may allow a more holistic consideration of a patient's condition, including clinical presentation, imaging findings, medical history, and other pertinent factors. Such an approach could help physicians perform a more thorough prognostic assessment and formulate optimal treatment strategies. Nevertheless, both segmentation-based and segmentation-free methods have their own merits and limitations, depending on the clinical scenario.

Our study also has limitations that point to areas for future research. Firstly, the dataset was sourced from collaborating hospitals, which may limit its size and diversity and could reduce the model's effectiveness on data from other institutions or on different data types. Secondly, feature extraction relies on pre-trained text and visual encoders; if these encoders perform sub-optimally on certain tasks, the effectiveness of our method could be compromised. Lastly, our model integrates CT imaging data with clinical text containing variables known to influence prognosis, such as gender [,,], early cognitive status [], and the location and volume of the hemorrhage [,]; any information lost between modalities could therefore impair the model's performance. These limitations outline directions for future improvement, including expanding the dataset's scope and diversity, improving feature extraction, and reducing information loss across modalities.

5. Conclusions

Current methods lack effective mechanisms for fusing clinical data with CT imaging, which leads to suboptimal cross-modal integration for the prognostic classification of ICH. To address this shortfall, we proposed ICH-Net, a novel framework that uses a joint-attention cross-modal network. ICH-Net comprises a Feature Extraction Module, a Feature Fusion Module, and a Classification Module. It additionally employs a CMF loss function, composed of the IMAMA, SDM, and MLM losses, to strengthen alignment between modalities and improve the model's interpretability with respect to the prognostic task. Moreover, the CMAF block uses a cross-modal attention mechanism to focus on the most informative regions of the data.
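The general idea of cross-modal attention, as used conceptually in the CMAF block, can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes that clinical-text token features and CT patch features have already been projected into a shared d-dimensional space, it omits the learned query/key/value projections and multi-head structure of a real attention layer, and the function names (`cross_attention`, `joint_cross_modal_fusion`) are hypothetical. Text tokens attend to image patches and image patches attend to text tokens; the two attended sequences are mean-pooled and concatenated into one fused vector that a classification module could consume.

```python
import math
from typing import List, Tuple

# A matrix is a list of row vectors (one feature vector per token or patch).
Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention with queries from one modality and
    keys/values from the other. Learned projections are omitted (identity)."""
    d = len(keys[0])
    scale = 1.0 / math.sqrt(d)
    outputs: Matrix = []
    weights: Matrix = []
    for q in queries:
        # Similarity of this query to every element of the other modality
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Attended vector: attention-weighted sum of the other modality's values
        dim = len(values[0])
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(dim)])
    return outputs, weights


def mean_pool(x: Matrix) -> List[float]:
    """Average a sequence of vectors into a single vector."""
    return [sum(col) / len(x) for col in zip(*x)]


def joint_cross_modal_fusion(text_tokens: Matrix, image_patches: Matrix) -> List[float]:
    """Hypothetical joint attention: text attends to image and image attends
    to text; the pooled results are concatenated into one fused vector."""
    text_ctx, _ = cross_attention(text_tokens, image_patches, image_patches)
    image_ctx, _ = cross_attention(image_patches, text_tokens, text_tokens)
    return mean_pool(text_ctx) + mean_pool(image_ctx)


if __name__ == "__main__":
    text = [[1.0, 0.0], [0.0, 1.0]]               # two clinical-text token features
    image = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three CT patch features
    fused = joint_cross_modal_fusion(text, image)
    print(len(fused))  # 4
```

With two 2-dimensional text tokens and three 2-dimensional CT patch features, the sketch returns a 4-dimensional fused vector. Plain Python lists are used only to keep the example dependency-free; a practical model would use a tensor library with learned projections and multiple attention heads.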

Our comparative and ablation studies have substantiated the efficacy of the proposed approach. In future work, we plan to collect a larger and more comprehensive clinical dataset to strengthen the model's generalizability. Furthermore, we aim to extend ICH-Net to a broader range of tasks by adopting multi-modal and multi-task learning paradigms.

Author Contributions

Conceptualization, G.X., Z.M., C.P. and B.L.; software, M.X. and X.F.; validation, X.F. and B.L.; investigation, M.X., G.X., Z.M. and C.P.; data curation, H.J., X.Y., G.X. and B.L.; writing—original draft preparation, H.J. and X.Y.; writing—review and editing, Z.M. and C.P.; supervision, M.X., X.F., H.J., X.Y. and G.X.; project administration, G.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Wenzhou Science and Technology Bureau Project (Y2023149).

Institutional Review Board Statement

This study was conducted in accordance with the principles of the Declaration of Helsinki. Approval was granted by the Ethics Committee of Partner Hospitals (Approval Date: 26 October 2023, Reference No. 2023ECPJ077).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Our data were obtained from collaborating hospitals and are not publicly available.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jakubovic, R.; Aviv, R.I. Intracerebral Hemorrhage; towards physiological imaging of hemorrhage risk in acute and chronic bleeding. Front. Neurol. 2012, 3, 24754.

- Toffali, M.; Carbone, F.; Fainardi, E.; Morotti, A.; Montecucco, F.; Liberale, L.; Padovani, A. Secondary prevention after intracerebral haemorrhage. Eur. J. Clin. Investig. 2023, 53, e13962.

- Øie, L.R.; Madsbu, M.A.; Solheim, O.; Jakola, A.S.; Giannadakis, C.; Vorhaug, A.; Padayachy, L.; Jensberg, H.; Dodick, D.; Salvesen, Ø.; et al. Functional outcome and survival following spontaneous intracerebral hemorrhage: A retrospective population-based study. Brain Behav. 2018, 8, e01113.

- Hemphill III, J.C.; Greenberg, S.M.; Anderson, C.S.; Becker, K.; Bendok, B.R.; Cushman, M.; Fung, G.L.; Goldstein, J.N.; Macdonald, R.L.; Mitchell, P.H.; et al. Guidelines for the management of spontaneous intracerebral hemorrhage: A guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 2015, 46, 2032–2060.

- Teasdale, G.; Maas, A.; Lecky, F.; Manley, G.; Stocchetti, N.; Murray, G. The Glasgow Coma Scale at 40 years: Standing the test of time. Lancet Neurol. 2014, 13, 844–854.

- Chen, Y.; Tian, L.; Wang, L.; Qin, Y.; Cai, J. Black hole sign on noncontrast computed tomography in predicting hematoma expansion in patients with intracerebral hemorrhage: A meta-analysis. Curr. Med. Imaging 2020, 16, 878–886.

- Cireşan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Mitosis detection in breast cancer histology images with deep neural networks. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2013: 16th International Conference, Nagoya, Japan, 22–26 September 2013, Proceedings, Part II 16; Springer: Berlin/Heidelberg, Germany, 2013; pp. 411–418.

- Litjens, G.; Sánchez, C.I.; Timofeeva, N.; Hermsen, M.; Nagtegaal, I.; Kovacs, I.; Hulsbergen-Van De Kaa, C.; Bult, P.; Van Ginneken, B.; Van Der Laak, J. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Sci. Rep. 2016, 6, 26286.

- El Refaee, E.; Ali, T.M.; Al Menabbawy, A.; Elfiky, M.; El Fiki, A.; Mashhour, S.; Harouni, A. Machine learning in action: Revolutionizing intracranial hematoma detection and patient transport decision-making. J. Neurosci. Rural Pract. 2024, 15, 62.

- Huang, L.; Lin, Y.; Cao, P.; Zou, X.; Qin, Q.; Lin, Z.; Liang, F.; Li, Z. Automated detection and segmentation of pleural effusion on ultrasound images using an Attention U-net. J. Appl. Clin. Med. Phys. 2024, 25, e14231.

- Wang, C.; Deng, X.; Yu, L.; Kuang, Z.; Ma, H.; Hua, Y.; Liang, B. Data fusion framework for the prediction of early hematoma expansion based on CNN. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13–16 April 2021; pp. 169–173.

- Pérez del Barrio, A.; Esteve Domínguez, A.S.; Menéndez Fernández-Miranda, P.; Sanz Bellón, P.; Rodríguez González, D.; Lloret Iglesias, L.; Marqués Fraguela, E.; González Mandly, A.A.; Vega, J.A. A deep learning model for prognosis prediction after intracranial hemorrhage. J. Neuroimaging 2023, 33, 218–226.

- Louizos, C.; Shalit, U.; Mooij, J.M.; Sontag, D.; Zemel, R.; Welling, M. Causal effect inference with deep latent-variable models. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Wu, P.A.; Fukumizu, K. Identifying and Estimating Causal Effects under Weak Overlap by Generative Prognostic Model. In Proceedings of the Advances in Neural Information Processing Systems 34 (NeurIPS 2021), Virtual, 6–14 December 2021.

- Lee, C.; Van der Schaar, M. A variational information bottleneck approach to multiomics data integration. In Proceedings of the International Conference on Artificial Intelligence and Statistics; PMLR: London, UK, 2021; pp. 1513–1521.

- Shi, Y.; Paige, B.; Torr, P. Variational mixture-of-experts autoencoders for multi-modal deep generative models. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Volume 32.

- Yoon, J.; Jordon, J.; Van Der Schaar, M. GANITE: Estimation of individualized treatment effects using generative adversarial nets. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Nawabi, J.; Kniep, H.; Elsayed, S.; Friedrich, C.; Sporns, P.; Rusche, T.; Böhmer, M.; Morotti, A.; Schlunk, F.; Dührsen, L.; et al. Imaging-based outcome prediction of acute intracerebral hemorrhage. Transl. Stroke Res. 2021, 12, 958–967.

- Gong, K.; Dai, Q.; Wang, J.; Shi, T.; Huang, S.; Wang, Z. Unified ICH quantification and prognosis prediction in NCCT images using a multi-task interpretable network. Front. Neurosci. 2023, 17, 1118340.

- Asif, M.; Shah, M.A.; Khattak, H.A.; Mussadiq, S.; Ahmed, E.; Nasr, E.A.; Rauf, H.T. Intracranial hemorrhage detection using parallel deep convolutional models and boosting mechanism. Diagnostics 2023, 13, 652.

- Xie, Y.; Zhang, J.; Xia, Y.; Wu, Q. UniMiSS: Universal medical self-supervised learning via breaking dimensionality barrier. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 558–575.

- Shan, X.; Li, X.; Ge, R.; Wu, S.; Elazab, A.; Zhu, J.; Zhang, L.; Jia, G.; Xiao, Q.; Wan, X.; et al. GCS-ICHNet: Assessment of Intracerebral Hemorrhage Prognosis using Self-Attention with Domain Knowledge Integration. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkiye, 5–8 December 2023; pp. 2217–2222.

- Alsentzer, E.; Murphy, J.R.; Boag, W.; Weng, W.H.; Jin, D.; Naumann, T.; McDermott, M. Publicly available clinical BERT embeddings. arXiv 2019, arXiv:1904.03323.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Luo, X.; Chen, X.; He, X.; Qing, L.; Tan, X. CMAFGAN: A Cross-Modal Attention Fusion based Generative Adversarial Network for attribute word-to-face synthesis. Knowl. Based Syst. 2022, 255, 109750.

- Jiang, D.; Ye, M. Cross-Modal Implicit Relation Reasoning and Aligning for Text-to-Image Person Retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 2787–2797.

- Ganti, L.; Shameem, M.; Houck, J.; Stead, T.S.; Stead, T.G.; Cesarz, T.; Mirajkar, A. Gender disparity in stroke: Women have higher ICH scores than men at initial ED presentation for intracerebral hemorrhage. J. Natl. Med. Assoc. 2023, 115, 186–190.

- Broberg, E.; Hjalmarsson, C.; Setalani, M.; Milenkoski, R.; Andersson, B. Sex Differences in Treatment and Prognosis of Acute Intracerebral Hemorrhage. J. Women's Health 2023, 32, 102–108.

- Foschi, M.; D'Anna, L.; Gabriele, C.; Conversi, F.; Gabriele, F.; De Santis, F.; Orlandi, B.; De Santis, F.; Ornello, R.; Sacco, S. Sex Differences in the Epidemiology of Intracerebral Hemorrhage over 10 Years in a Population-Based Stroke Registry. J. Am. Heart Assoc. 2024, 13, e032595.

- Li, Z.Q.; Bu, X.Q.; Cheng, J.; Deng, L.; Lv, X.N.; Wang, Z.J.; Hu, X.; Yang, T.N.; Yin, H.; Liu, X.Y.; et al. Impact of early cognitive impairment on outcome trajectory in patients with intracerebral hemorrhage. Ann. Clin. Transl. Neurol. 2024, 11, 368–376.

- An, S.J.; Kim, T.J.; Yoon, B.W. Epidemiology, risk factors, and clinical features of intracerebral hemorrhage: An update. J. Stroke 2017, 19, 3.

- Teo, K.C.; Fong, S.M.; Leung, W.C.; Leung, I.Y.; Wong, Y.K.; Choi, O.M.; Yam, K.K.; Lo, R.C.; Cheung, R.T.; Ho, S.L.; et al. Location-specific hematoma volume cutoff and clinical outcomes in intracerebral hemorrhage. Stroke 2023, 54, 1548–1557.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).