You’re Beautiful When You Smile: Event-Related Brain Potential (ERP) Evidence of Early Opposite-Gender Bias in Happy Faces

Abstract

1. Introduction

2. Method

2.1. Participants

2.2. Stimuli and Apparatus

2.3. Procedure

2.4. EEG Recording and Pre-Processing

2.5. Statistical Analyses

3. Results

3.1. Sociodemographic Differences

3.2. Behavioral Results

3.3. EEG Results

3.3.1. P1 (105–135 ms)

3.3.2. N170 (145–175 ms)

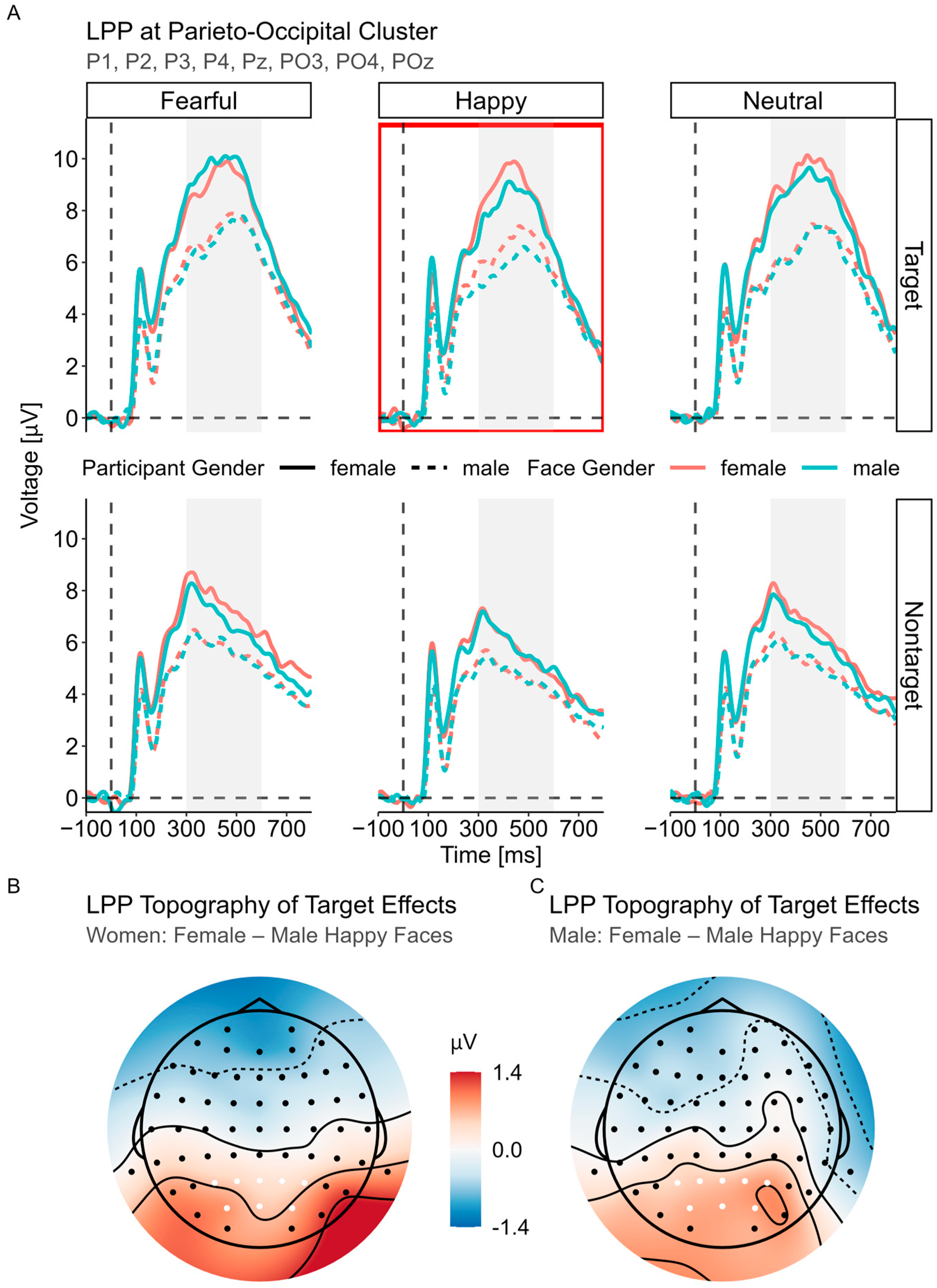

3.3.3. LPP (300–600 ms)

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Schmuck, J.; Voltz, E.; Gibbons, H. You’re Beautiful When You Smile: Event-Related Brain Potential (ERP) Evidence of Early Opposite-Gender Bias in Happy Faces. Brain Sci. 2024, 14, 739. https://doi.org/10.3390/brainsci14080739