A Machine Learning Approach to Classifying EEG Data Collected with or without Haptic Feedback during a Simulated Drilling Task

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethical Approval

2.2. Paradigm and Data Collection

2.3. Pre-Processing

2.4. Feature Selection

2.5. Classification

2.6. EEG Characterization

3. Results

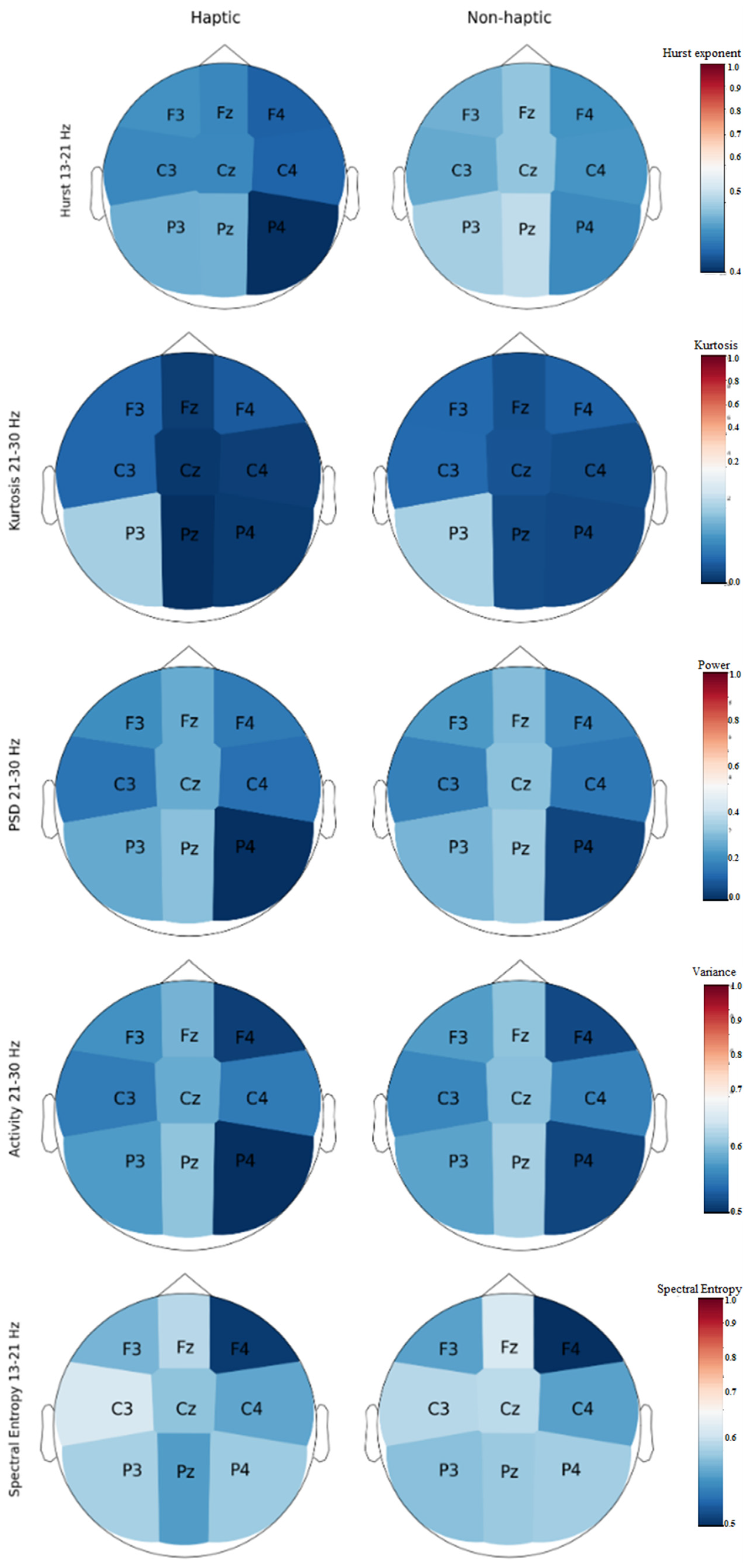

3.1. EEG Characterization

3.2. Feature Selection

3.3. Classification

3.4. EEG Characterization

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Classifier | 5 Best Features | 10 Best Features | 15 Best Features | 20 Best Features | All Features |
|---|---|---|---|---|---|
| SGD | max_iter = 10, tol = 0.0001 | alpha = 0.01, max_iter = 100, tol = 0.0001 | alpha = 0.01, max_iter = 100, tol = 0.0001 | alpha = 0.01, max_iter = 100, penalty = ‘l1’, tol = 0.0001 | max_iter = 100, penalty = ‘l1’, tol = 0.0001 |
| SVC | C = 100, tol = 0.01 | C = 100, tol = 0.01 | C = 100, tol = 0.01 | C = 10, kernel = ‘poly’, tol = 0.01 | C = 100, kernel = ‘poly’, tol = 0.01 |
| DT | alpha = 0.001 | alpha = 0.001, criterion = ‘entropy’, splitter = ‘random’ | alpha = 0.01, criterion = ‘entropy’ | alpha = 0.01, splitter = ‘random’ | alpha = 0.01, splitter = ‘random’ |
| GNB | var_smoothing = 0.1 | var_smoothing = 100 | var_smoothing = 0.0001 | var_smoothing = 10 | var_smoothing = 100 |
| KNN | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 3 | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 7, weights = ‘distance’ | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 7 | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 1 | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 19 |
| RF | max_depth = 10, n_estimators = 50 | max_depth = 5, n_estimators = 50 | max_depth = 10, n_estimators = 50 | criterion = ‘entropy’, max_depth = 10, n_estimators = 50 | max_depth = 5, n_estimators = 10 |
| MLP | alpha = 0.001, hidden_layer_sizes = 100, max_iter = 5000, solver = ‘lbfgs’ | activation = ‘tanh’, alpha = 0.001, hidden_layer_sizes = 50, max_iter = 5000 | activation = ‘logistic’, alpha = 0.001, hidden_layer_sizes = 100, max_iter = 5000 | activation = ‘logistic’, alpha = 0.001, hidden_layer_sizes = 50, max_iter = 5000 | alpha = 0.001, hidden_layer_sizes = 50, max_iter = 5000 |
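The hyperparameter names in the table above (for example `var_smoothing`, `leaf_size`, `hidden_layer_sizes`) match estimator parameters in scikit-learn, so the "5 Best Features" column could be instantiated roughly as follows. This is a minimal sketch under stated assumptions, not the authors' code: the use of scikit-learn is inferred from the parameter names, the DT entry `alpha` is read as `ccp_alpha`, the function name and the `random_state` argument are hypothetical, and every parameter not listed in the table is left at the library default.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import SGDClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def build_five_feature_classifiers(random_state=0):
    """Build the seven classifiers with the '5 Best Features' settings.

    random_state is a hypothetical addition for reproducibility; it does
    not appear in the table.
    """
    return {
        "SGD": SGDClassifier(max_iter=10, tol=1e-4, random_state=random_state),
        "SVC": SVC(C=100, tol=0.01),
        # Assumption: the table's "alpha" for DT is interpreted as ccp_alpha.
        "DT": DecisionTreeClassifier(ccp_alpha=0.001, random_state=random_state),
        "GNB": GaussianNB(var_smoothing=0.1),
        "KNN": KNeighborsClassifier(
            leaf_size=10, metric="cityblock", n_neighbors=3
        ),
        "RF": RandomForestClassifier(
            max_depth=10, n_estimators=50, random_state=random_state
        ),
        # The table lists a single hidden layer size; written here as a tuple.
        "MLP": MLPClassifier(
            alpha=0.001,
            hidden_layer_sizes=(100,),
            max_iter=5000,
            solver="lbfgs",
            random_state=random_state,
        ),
    }
```

Each returned estimator exposes the usual `fit` and `predict` methods, so the same dictionary can be looped over to compare classifiers on one feature matrix.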

| Classifier | 5 Best Features | 10 Best Features | 14 Best Features |
|---|---|---|---|
| SGD | loss = ‘perceptron’, max_iter = 100 | loss = ‘huber’, max_iter = 100, tol = 0.0001 | alpha = 0.01, loss = ‘log’, max_iter = 10, tol = 0.01 |
| SVC | C = 1, tol = 0.01 | C = 1, tol = 0.01 | C = 100, tol = 0.01 |
| DT | ccp_alpha = 0.001, max_features = ‘auto’, splitter = ‘random’ | ccp_alpha = 0.001, max_features = ‘auto’ | ccp_alpha = 0.001, max_features = ‘auto’ |
| GNB | var_smoothing = 10 | var_smoothing = 1 | var_smoothing = 1 |
| KNN | leaf_size = 10, metric = ‘euclidean’, n_neighbors = 11, weights = ‘distance’ | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 1 | leaf_size = 10, metric = ‘cityblock’, n_neighbors = 1 |
| RF | max_depth = 10, max_features = ‘auto’, n_estimators = 50 | max_depth = 10, max_features = ‘auto’, n_estimators = 200 | criterion = ‘entropy’, max_depth = 10, max_features = ‘auto’, n_estimators = 500 |
| MLP | activation = ‘tanh’, alpha = 0.001, hidden_layer_sizes = 20, max_iter = 5000, solver = ‘lbfgs’ | activation = ‘logistic’, alpha = 0.001, hidden_layer_sizes = 50, max_iter = 5000, solver = ‘lbfgs’ | alpha = 0.001, hidden_layer_sizes = 50, max_iter = 5000 |

| Types of Features | Features |
|---|---|
| Time Domain | Activity (Variance) |
| Time Domain | Mobility |
| Time Domain | Complexity |
| Frequency Domain | Power Spectral Density (PSD) |
| Entropy | Permutation |
| Entropy | Spectral |
| Non-Linear | Higuchi’s Fractal Dimension |
| Non-Linear | Hurst’s Exponent |
| Statistical | Skewness |
| Statistical | Kurtosis |
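The three time-domain entries in the table above (Activity, Mobility, Complexity) share their names with the Hjorth parameters. Assuming that reading, a minimal pure-Python sketch of how they can be computed from a single-channel signal is shown below. The function names are hypothetical, first differences stand in for the time derivative, and the signal is assumed to be non-constant so that no variance is zero.

```python
import math


def _variance(values):
    """Population variance of a sequence of numbers."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def _first_difference(values):
    """Discrete approximation of the time derivative."""
    return [b - a for a, b in zip(values, values[1:])]


def hjorth_parameters(signal):
    """Return (activity, mobility, complexity) for a 1-D signal.

    activity   = var(x)
    mobility   = sqrt(var(x') / var(x))
    complexity = mobility(x') / mobility(x)
    Assumes the signal is not constant (otherwise a variance is zero).
    """
    d1 = _first_difference(signal)
    d2 = _first_difference(d1)
    activity = _variance(signal)
    mobility = math.sqrt(_variance(d1) / activity)
    mobility_d1 = math.sqrt(_variance(d2) / _variance(d1))
    complexity = mobility_d1 / mobility
    return activity, mobility, complexity
```

For a pure sine wave the complexity is close to 1, and it grows as the signal departs from a sinusoidal shape, which makes a synthetic sine a convenient sanity check before applying the function to band-filtered EEG epochs.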

| Feature Name | p-Value |
|---|---|
| Pz 13–21 Hz Hurst | 1.20 × 10⁻⁸ |
| Fz 13–21 Hz Hurst | 1.72 × 10⁻⁸ |
| Cz 13–21 Hz Hurst | 4.65 × 10⁻⁸ |
| Cz 21–30 Hz Kurtosis | 8.98 × 10⁻⁶ |
| Cz 21–30 Hz PSD | 7.69 × 10⁻⁵ |
| Cz 21–30 Hz Activity | 8.20 × 10⁻⁵ |
| P4 13–21 Hz Hurst | 2.34 × 10⁻⁴ |
| C3 13–21 Hz Spectral_Ent | 7.78 × 10⁻⁴ |
| Cz 13–21 Hz Spectral_Ent | 1.83 × 10⁻³ |
| Pz 13–21 Hz Spectral_Ent | 1.07 × 10⁻² |
| Pz 10–13 Hz Skewness | 1.29 × 10⁻² |
| F4 13–21 Hz Kurtosis | 2.82 × 10⁻² |
| Fz 10–13 Hz Activity | 2.89 × 10⁻² |

| Classifier | 5 Best Features | 10 Best Features | 15 Best Features | 20 Best Features | All Features |
|---|---|---|---|---|---|
| SGD | 62 | 65 | 66 | 73 | 93 |
| SVC | 88 | 91 | 92 | 99 | 100 |
| DT | 85 | 86 | 92 | 96 | 100 |
| GNB | 54 | 59 | 52 | 66 | 58 |
| KNN | 90 | 92 | 94 | 99.4 | 100 |
| RF | 88 | 90 | 95 | 99.7 | 100 |
| MLP | 90 | 91 | 94 | 99.2 | 100 |

| Classifier | 5 Best Features | 10 Best Features | 14 Best Features |
|---|---|---|---|
| SGD | 66 | 71 | 71 |
| SVC | 84 | 98 | 99 |
| DT | 88 | 98 | 96 |
| GNB | 64 | 65 | 67 |
| KNN | 90 | 99 | 99 |
| RF | 90 | 99 | 100 |
| MLP | 91 | 99 | 99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ramirez Campos, M.S.; McCracken, H.S.; Uribe-Quevedo, A.; Grant, B.L.; Yielder, P.C.; Murphy, B.A. A Machine Learning Approach to Classifying EEG Data Collected with or without Haptic Feedback during a Simulated Drilling Task. Brain Sci. 2024, 14, 894. https://doi.org/10.3390/brainsci14090894
