The Problem with Time: Application of Partial Least Squares Analysis on Time-Frequency Plots to Account for Varying Time Intervals with Applied EEG Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Multiple Choice and Script Concordance Questions

2.3. Experimental Procedure

2.4. EEG Acquisition and Preprocessing

2.5. Time/Frequency Analysis

2.6. Univariate Analysis

2.7. Partial Least Squares Analysis

3. Results

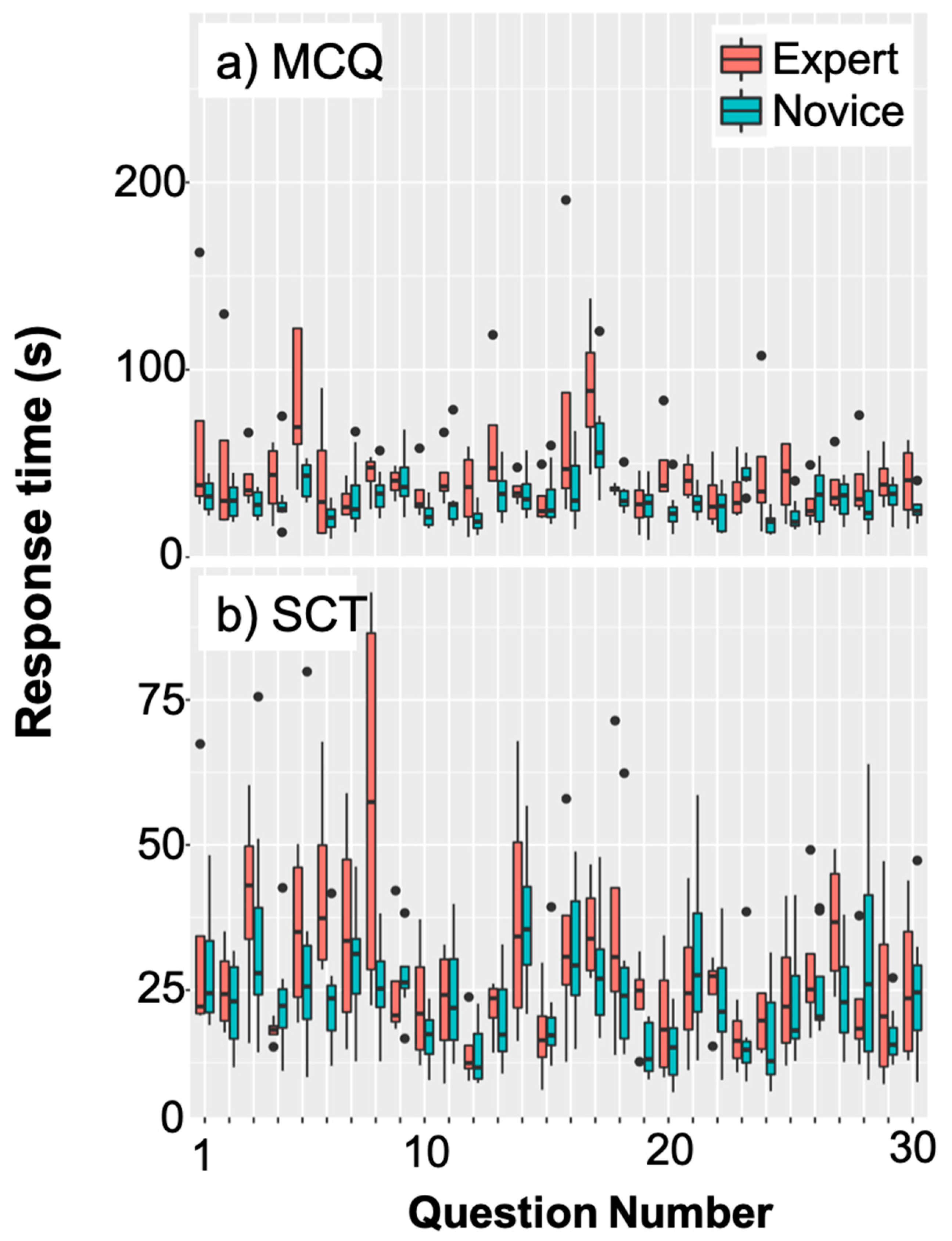

3.1. Varying Lengths of Trials

3.2. Univariate Statistics

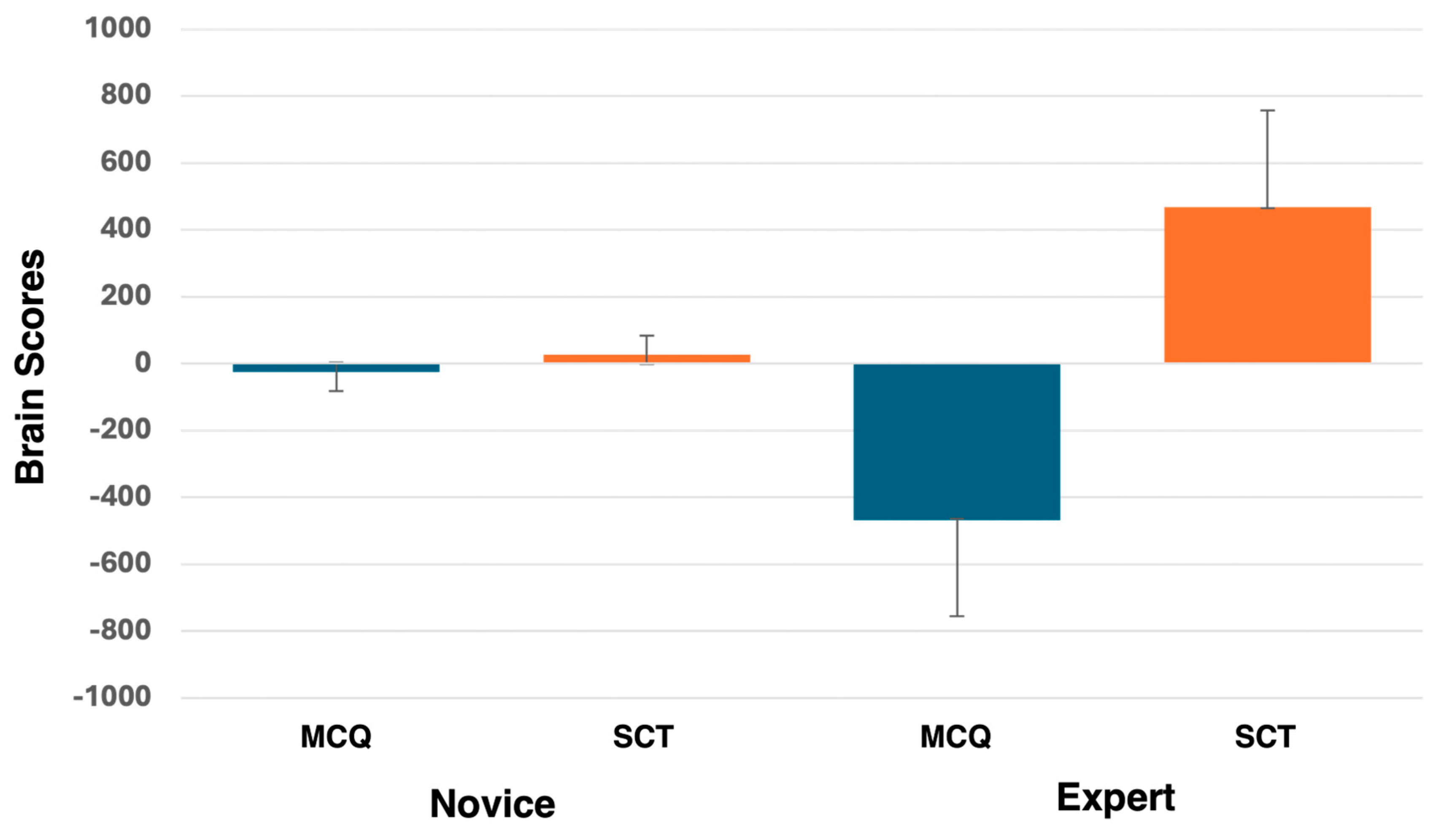

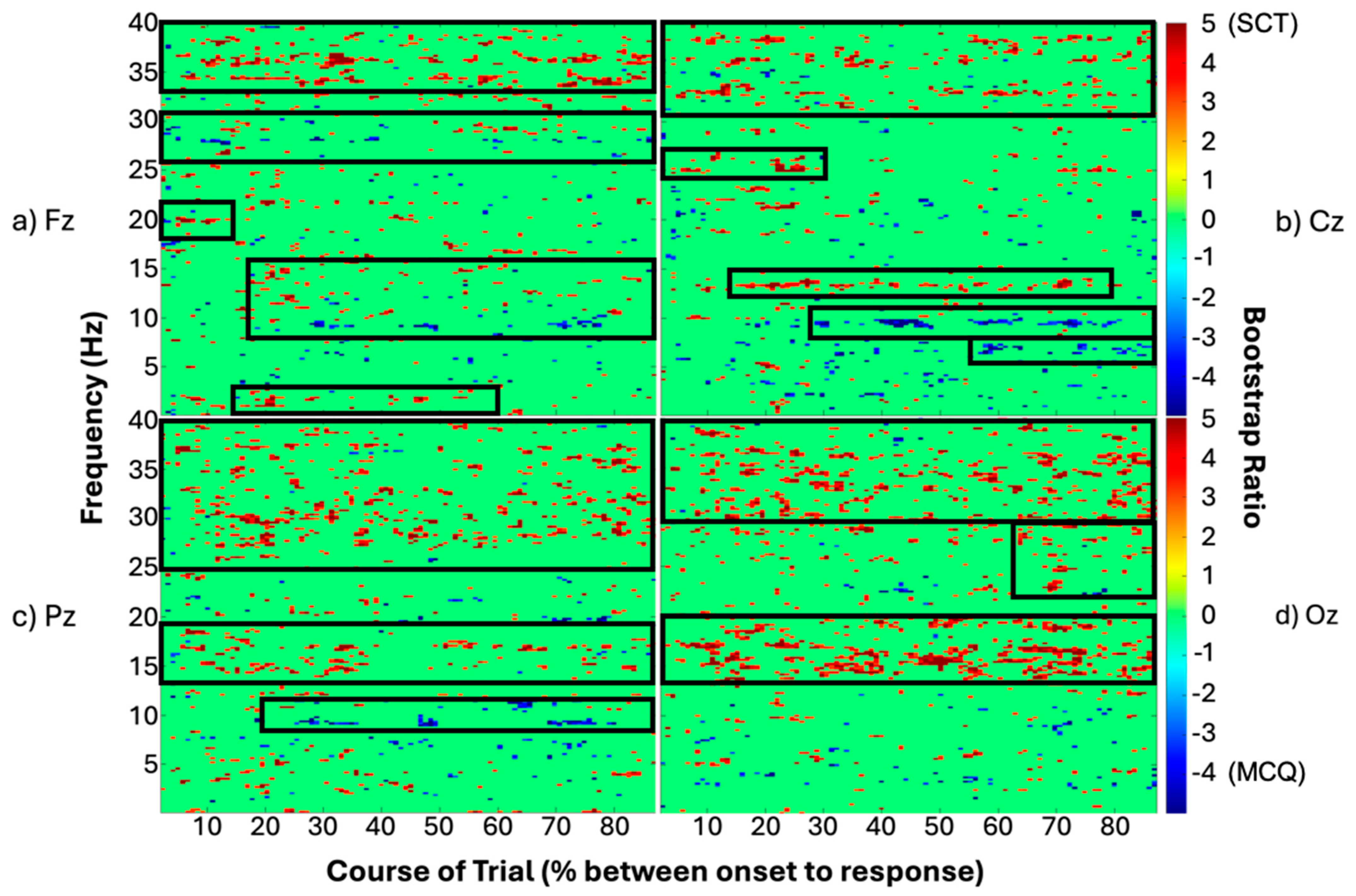

3.3. PLS

4. Discussion

5. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Szostakiwskyj, J.M.H.; Cortese, F.; Abdul-Rhaman, R.; Anderson, S.J.; Warren, A.L.; Archer, R.; Read, E.; Hecker, K.G. The Problem with Time: Application of Partial Least Squares Analysis on Time-Frequency Plots to Account for Varying Time Intervals with Applied EEG Data. Brain Sci. 2025, 15, 135. https://doi.org/10.3390/brainsci15020135
